Leaderboard

Popular Content

Showing content with the highest reputation on 11/06/2017 in all areas

-

Magic Lantern for 80D coming soon?

Drew Allegre and one other reacted to Dave Maze for a topic

Just browsing the ML forums and stumbled across the progress on the ML 80D hack. Looks promising! Quote from the forum: "...It seems that 80D has a writing speed close to 80 MB/s (70D only 40 MB/s). It is quite possible that we can get more than 1080p with compressed raw. The 10 bit should work as well..." https://www.magiclantern.fm/forum/index.php?topic=17360.150

2 points
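To put the quoted write speeds in context, here is a rough calculation of uncompressed raw data rates at 1080p. This is a sketch under stated assumptions, not Magic Lantern's actual pipeline: one sample per photosite (Bayer raw), exactly 1920x1080 at 24 fps, no container or metadata overhead, and decimal megabytes. The function name `raw_data_rate_mb_s` is hypothetical.

```python
# Back-of-envelope data rate for uncompressed Bayer raw video.
# Assumptions (not from the forum post): one sample per photosite, no
# container or metadata overhead, decimal megabytes (1 MB = 1,000,000 bytes).


def raw_data_rate_mb_s(width: int, height: int, bit_depth: int, fps: float) -> float:
    """Return the uncompressed raw data rate in MB/s."""
    bits_per_frame = width * height * bit_depth
    bytes_per_second = bits_per_frame * fps / 8
    return bytes_per_second / 1_000_000


for bits in (14, 12, 10):
    rate = raw_data_rate_mb_s(1920, 1080, bits, 24)
    print(f"1920x1080 @ 24 fps, {bits}-bit: {rate:.1f} MB/s")
```

Under these assumptions, 14-bit 1080p needs roughly 87 MB/s, 12-bit about 75 MB/s and 10-bit about 62 MB/s. All three exceed the 70D's quoted 40 MB/s, while 12-bit and 10-bit fit inside the 80D's quoted 80 MB/s even before any compression.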

Yes, if your video contains 400% enlargements of stopped frames of complex motion. Editing should be easier, but managing the large files can eat up that benefit.

2 points

-

Lately all I'm using is the Voigtländer 17.5mm... I wish the WiFi Remote had better resolution so it would be easier to judge sharpness wide open. I wish there were a lens like that with autofocus. I played around with it on one of the last warm days we had. It's only a super short clip because it's for the girlfriend's IG. Password: smucho

GH5 with the Voigtländer 17.5mm 0.95 (pretty much all at f/0.95-1.4) and a Tiffen Warm Black Pro Mist 1/8 filter, all handheld. Totally sloppy hack job. I just liked the combination of the rendering and the setting sun's light.

2 points

-

Hehe, the ultimate pixel peepers' thread. You guys are crazy! So to see a difference you have to stop the moving image, take one frame out, zoom that frame to 400% and, on top of that, draw an arrow pointing at the difference because it's hard to see otherwise. It's a great exercise and I appreciate your findings, but do you think this difference is going to make you more money or make you stand out from others? In my opinion, your test taught me a valuable lesson: for my clients, I'm happy to use IPB and save space.

2 points

-

Could be useful on the GH5 then...

1 point

-

You just quoted a forum member who has 4 posts on the whole forum and clearly doesn't understand how the hacking process works. Yes, there is slow progress on hacking DIGIC 6 cameras like the 5D Mark IV and 80D, but the developers keep hitting walls while trying to hack the newer (DIGIC 6) Canon cameras. They will probably do it, but not in the near future... and in about a year (I hope) we will have lots of 10-bit cameras with good codecs, which means RAW recording will no longer be as shiny as it was years ago.

1 point

-

That'd be dope!!! I'd get one. EDIT: Wait, would the touchscreen DPAF work alongside Magic Lantern for video? Speaking of cameras with flippy screens, did you guys see this patent?

1 point

-

Keep in mind that shooting HD with a 1.5x anamorphic adapter will require you to shave a little off the edges to get the right aspect ratio, which then means you'd have to upscale a little to get to proper HD 2.39:1 resolution. A 1.33x anamorphic lens wouldn't require an upscale.

Seems pretty average to me. Still a good one-third to one-half smaller than an Alexa or Amira with a medium-sized zoom. Don't know what you plan to shoot, but the F3 will be easier to balance on the shoulder.

1 point
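The 1.5x vs 1.33x point can be checked with a little arithmetic. This sketch assumes a 1920x1080 (16:9) capture, a desqueeze done by scaling the height down by the squeeze factor (so nothing is stretched horizontally), and a 2.39:1 delivery 1920 pixels wide. The helper `scope_from_hd` is hypothetical and only illustrates the geometry.

```python
# Rough check of the 1.5x vs 1.33x anamorphic claim.
# Assumptions: 1920x1080 (16:9) capture, desqueeze by scaling the height
# down by the squeeze factor, 2.39:1 delivery at 1920 pixels wide.


def scope_from_hd(squeeze: float, target_aspect: float = 2.39,
                  width: int = 1920, height: int = 1080):
    """Return (crop_w, crop_h, upscale) needed to reach a 1920-wide 2.39:1 frame."""
    desq_h = height / squeeze          # desqueezed frame is width x desq_h
    desq_aspect = width / desq_h
    if desq_aspect > target_aspect:
        # Too wide: shave the sides, then scale back up to full width.
        crop_w = desq_h * target_aspect
        crop_h = desq_h
    else:
        # Too tall (or exact): trim top/bottom only, no upscale needed.
        crop_w = width
        crop_h = width / target_aspect
    upscale = width / crop_w
    return round(crop_w), round(crop_h), upscale


for squeeze in (1.5, 4 / 3):
    w, h, up = scope_from_hd(squeeze)
    print(f"{squeeze:.2f}x: crop to {w}x{h}, upscale by {up:.3f}")
```

With 1.5x the desqueezed frame is 1920x720 (about 2.67:1), so the sides get cropped to roughly 1721x720 and then scaled up by about 12%. With 1.33x the frame is 1920x810 (about 2.37:1), so only a few lines are trimmed to reach 1920x803 and no upscale is needed.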

-

EVA1 will allow external raw with a future firmware update. Its low-light sensitivity beats Blackmagic and the GH5 hands down, and it will probably match or beat the C200 in low light. As for everything else, paper specs mean little. The Alexa doesn't even have a 4K sensor and shoots ProRes for the most part. On paper, RED should beat it in every quantifiable spec. But it doesn't.

1 point

-

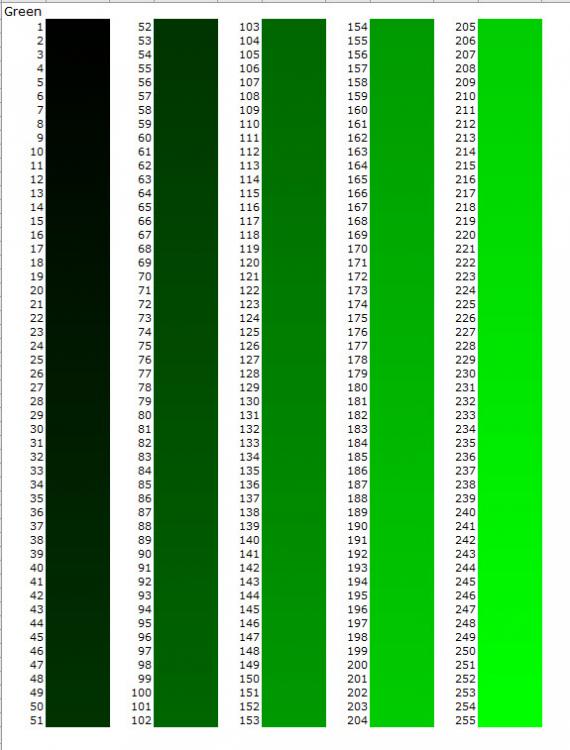

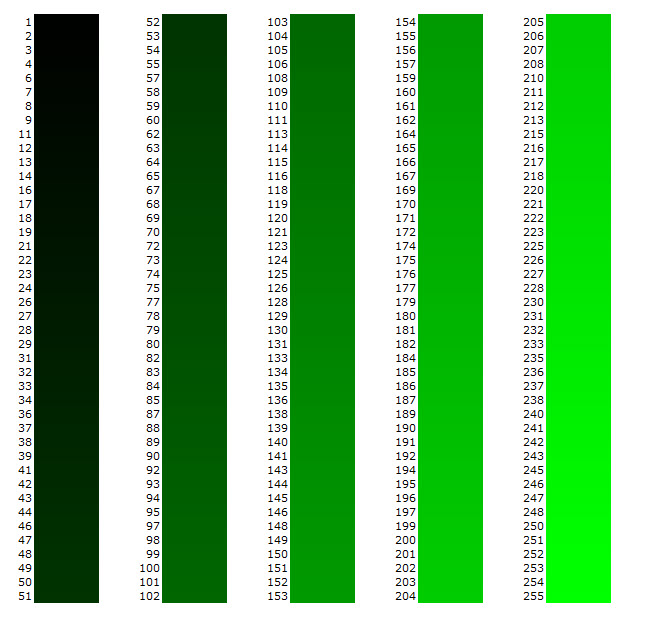

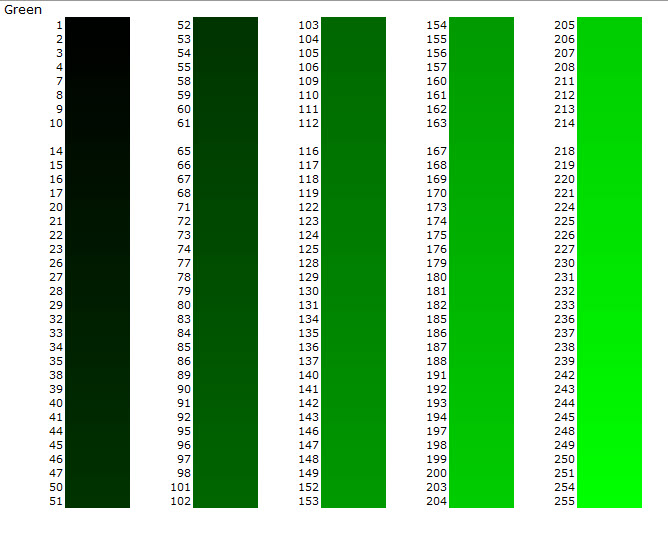

It's a taste thing, right, trading color saturation for greater dynamic range. We certainly wouldn't want HDR if it did that because people who favor saturation over DR would then be left with inferior images. We need both. When I say "saturation" (and maybe someone can give me a better term) I mean the amount of color information we need to discern all colors within the display gamut. Banding is the clearest example of what I mean. As I mentioned elsewhere, if you display, say 20 colors (saturation) of yellow on an 8-bit, 6DR gamut display, you will see banding, because your eye can tell the difference. Here are some examples I created. The first is all 255 shades of green an 8bit image, which should render "bandless" on a 6DR screen I can already see some banding, which tells me that the website might re-compresses images at a lower bit-depth. Here's a version where 18% of the colors are removed, let's call it 7-bit And now for 32% removed, call it 6-bit The less colors (saturation information) there is, the more our eye/brains detect a difference in the scene. HOWEVER, what the above examples show is that we don't really need even 8bits to get good images out of our current display gamuts. Most people probably wouldn't notice the difference if we were standardized on 6bit video. But that's a whole other story How does this relate to HDR? The more you shrink the gamut (more contrast-y) the less difference you see between the colors, right? In a very high contrast scene, a sky will just appear solid blue of one color. It's as we increase the gamut that we can see the gradations of blue. That is, there must always be enough bit-depth to fill the maximum gamut. For HDR to work for me, and you it sounds like (I believe we have the same tastes), it needs the bit-depth to keep up with the expansion in gamut. So doing some quick stupid math (someone can fix I hope), let's say that for every stop of DR we need 42 shades of any given color (255/6 DR). 
That's what we have in 8bit currently, I believe. Therefore, every extra stop of DR will require 297 (255+42) shades in each color channel, or 297*297*297 = 26,198,073. In 10bits, we can represent 1,024 shades, so roughly, 10-bit should give us another 24 stops of DR; that is, with 10bit, we should be able to show "bandless" color on a screen with 14 (even 20+) stops of DR. What I think it comes down to is better video is not a matter of improved bit-depth (10bit), or CODECs, etc., it's a matter of display technology. I suspect that when one sees good HDR it's not the video tech that's giving a better image, it's just the display's ability to show deeper blacks, or more subtle DR. That's why I believe someone's comment about the GH5 being plenty good enough to make HDR makes sense (though I'd extend it to most cameras). Anyway, I hope this articulates what I mean about color saturation. The other thing I must point out, that though I've argued that 10bit is suitable for HDR theoretically, I still believe one needs RAW source material to get a good image in non-studio environments. And finally, to answer the OP. I don't believe you need any special equipment for future HDR content. You, don't even need a full 8bits to render watchable video today. My guess is that any 8bit video graded to an HDR gamut will look just fine to 95% of the public. They may be able to notice the improvement in DR even though they're losing color information because again, in video, we seldom look at gradient skies. For my tastes, however, I will probably complain because LOG will still look like crap to me, even in HDR, in many situations 10bit? Well, we'll just have to see!1 point
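The post's back-of-envelope numbers can be re-run in a few lines. The model itself (a fixed number of shades per stop, 6 stops on an SDR display) is the author's working assumption rather than an established colour-science result, and the constant names below are illustrative. For reference, a true 7-bit image has 128 levels and a true 6-bit image 64, i.e. half and a quarter of 8-bit's 256, so the 18% and 32% reductions in the examples are gentler than those labels suggest.

```python
# Re-running the post's back-of-envelope arithmetic. The model (a fixed
# number of shades per stop, 6 stops on an SDR display) is the author's
# assumption, not an established colour-science result.

SDR_STOPS = 6
SHADES_8BIT = 255  # the post's figure; 8 bits actually give 256 codes (0-255)

shades_per_stop = SHADES_8BIT / SDR_STOPS          # 42.5, rounded to 42 in the post
shades_one_more_stop = SHADES_8BIT + 42            # 297 per channel
colours_one_more_stop = shades_one_more_stop ** 3  # RGB combinations


def codes(bits: int) -> int:
    """Number of code values per channel at a given bit depth."""
    return 2 ** bits


stops_10bit = codes(10) / 42  # total stops 10-bit could cover under this model

print(f"shades per stop: {shades_per_stop:.1f}")
print(f"colours with one extra stop: {colours_one_more_stop:,}")
print(f"8/7/6-bit codes: {codes(8)}, {codes(7)}, {codes(6)}")
print(f"10-bit stops under this model: {stops_10bit:.1f}")
```

Under the post's own model, 1,024 levels at 42 per stop come to roughly 24 stops in total, comfortably above the 14 to 20+ stops mentioned for HDR displays.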

-

Seeking Info About the B&H Projection Lens

Bold reacted to reaction105 for a topic

@Bold, tito, nickgorey, nahua, richg101, quickhitrecord and everyone else in this thread: great work! Thanks for sharing so much info from all your work on this lens. I recently decided to dive into anamorphic shooting and this thread was invaluable.

My setup is typical: GH5; taking lens (Helios 44 58mm / Minolta 55mm / Fujinon 55mm); B&H with Redstan clamp.

Here's the setup, showing the lenses mounted on some rails. Taking a cue from Bold (in more ways than one), I've used some telescope guide rails attached to a SmallRig clamp, but I think I prefer the standard SmallRig V-shaped lens support you can see in the image. This is because I'm actually finding this setup to be dual focus; that is, I can't get anything in focus with the taking lens set to infinity, so I have to keep it around 1.5-2 m and work the B&H focus from there. I know it's supposed to be single focus, so I'm not sure why this is the case or whether there is something wrong with my B&H (it's pretty beat up, as you can see). As a result, the B&H moves forward and back as I adjust the focus on the taking lens.

Anyway, here are the first couple of shots I've taken with this setup. All are with the Helios 44, except the Kodak camera shot, which was taken with the Minolta. I plan to do some more comparative tests with different taking lenses soon.

1 point

-

The correct way to expose for SLOG3 when using 8bit cameras

hyalinejim reacted to cpc for a topic

One distinguishing characteristic of log curves compared to negative film is that most of them have no shoulder. (Well, the shoulder on Vision3 series films is very high, so it's not much of a practical consideration unless you overexpose severely.) One effect of this lack of a shoulder is that you can generally re-rate slower without messing up color relations through the range, as long as clipping is accounted for. Arri's Log-C has so much latitude over nominal mid gray that rating it at 400 still leaves tons for highlights. I don't think any other camera has similar (or more) latitude above the nominal mid point? Pretty much all the other camera manufacturers inflate their reported numbers by counting the noise fest at the bottom toward overall DR. No wonder a camera with "more" DR than an Alexa looks like trash in a side-by-side latitude comparison.

1 point
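A minimal sketch of what re-rating "slower" does to latitude, assuming the curve has no shoulder so a lower exposure index simply means more exposure. Halving the EI adds one stop, which moves one stop of headroom from above mid gray to below it; total latitude is unchanged. The 7/7 split and the `rerate` helper are hypothetical placeholders, not Arri's published figures.

```python
import math

# Sketch of what re-rating a log camera "slower" does to latitude.
# Assumption: no shoulder, so rating at a lower EI just means exposing more.
# The 7/7 stop split below is a placeholder, not any manufacturer's figure.


def rerate(stops_above: float, stops_below: float,
           native_ei: float, rated_ei: float):
    """Return (stops above mid gray, stops below mid gray) after re-rating."""
    shift = math.log2(native_ei / rated_ei)  # extra exposure in stops
    # Total latitude is unchanged; it is redistributed from highlights to shadows.
    return stops_above - shift, stops_below + shift


# Hypothetical camera with 14 stops split 7/7 around mid gray at EI 800.
above, below = rerate(7.0, 7.0, native_ei=800, rated_ei=400)
print(f"EI 400: {above:.1f} stops above mid gray, {below:.1f} below")
```

The practical question the post raises is therefore how much headroom a curve has above mid gray to begin with: the more it has, the more stops you can give up to cleaner shadows before clipping becomes a problem.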

Banding is a combination of bit-depth precision, chroma subsampling, compression and stretching the image in post. S-Log3 is so flat (and doesn't even use the full 8-bit precision) that pretty much any grade qualifies as an aggressive tonal change. S-Log2 is a bit better, but still needs more exposure than nominal in most cases. Actually, I can't think of any non-Arri camera that doesn't need some amount of overexposure in log, even at higher bit depths. These curves are ISO-rated to maximize a technical notion of SNR, which doesn't always (if ever) coincide with what we consider a clean image after tone mapping for display.

That said, ETTR isn't usually the best way to expose log (or any curve): it means too much normalizing work in post on a shot-by-shot basis. Better to re-rate the camera slower and expose consistently. In the case of the Sony A series, it is probably best to just shoot one of the Cine curves. They have decent latitude without entirely butchering mid-tone density. Perhaps the only practical exception is shooting 4K and delivering 1080p, which restores a bit of density after the downscale.

1 point
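To see how flat S-Log3 is in 8 bits, the sketch below uses the S-Log3 encoding formula as given (to the best of my knowledge) in Sony's S-Log3 technical summary, mapping scene reflectance to a 10-bit code value, and then rescales to 8 bits. The straight 10-bit to 8-bit rescale is an assumption; a given camera's 8-bit quantization may differ.

```python
import math

# S-Log3 encoding (scene reflectance -> 10-bit code value), per Sony's
# S-Log3 technical summary. Used to estimate how many 8-bit code values
# each stop gets.


def slog3_code10(x: float) -> float:
    """Map linear reflectance x (0.18 = 18% gray) to an S-Log3 10-bit code value."""
    if x >= 0.01125:
        return 420.0 + math.log10((x + 0.01) / (0.18 + 0.01)) * 261.5
    return x * (171.2102946929 - 95.0) / 0.01125 + 95.0


def to_8bit(code10: float) -> float:
    # Assumption: a straight 10-bit -> 8-bit rescale, ignoring how a given
    # camera actually quantizes its 8-bit output.
    return code10 * 255 / 1023


for lo, hi in [(0.09, 0.18), (0.18, 0.36), (0.36, 0.72)]:
    step = to_8bit(slog3_code10(hi)) - to_8bit(slog3_code10(lo))
    print(f"{lo:.2f} -> {hi:.2f}: about {step:.1f} 8-bit codes per stop")

print(f"black {to_8bit(slog3_code10(0.0)):.1f}, "
      f"gray {to_8bit(slog3_code10(0.18)):.1f}, "
      f"90% white {to_8bit(slog3_code10(0.90)):.1f}")
```

Under these assumptions each stop around mid gray gets only about 18 to 19 8-bit code values, and everything from black to 90% white sits between roughly code 24 and code 149. Any grade that expands that range back to a display contrast stretches those few steps, which is where banding comes from.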

Whoa, I just worked on some older FS7 Cine EI / S-Log3 footage I shot, to see how it matches GH5 footage in the ACES color space. Quite good, but... videoish as hell. Totally overprocessed. No fine detail. Unsharp-mask artifacts galore and lots of colorful noise. And unlike the GH5, no fine grain; it's more like Canon's splotchy noise. Ugh. Why did I ever complain about the GH5? lol WTH

1 point

-

When the GH3 came out, I bought it on release day. I used it for weddings until the GH5 came out, and then replaced the GH3 with that. For years I was one of the few people who shot weddings professionally with GHx cameras. I was also one of the first people to use a Speed Booster and the Sigma 18-35; I bought both in the month after their release. For years my films had a bit of a 'unique' look. Now it seems like 90% of the people who bought a GH5 are using it for weddings, and also use the Speed Booster and the Sigma 18-35. I guess the moment has arrived when the playing field is leveled. It's like photography, where 99% of photographers use a full-frame DSLR (Canon 5Dx or Nikon Dx). The moment has come when my gear is no longer 'added value' and we're all playing the same game. I knew this moment would come. I just thought it would be a bit later, when / if Canon ever released a 5D with a genuinely good video mode.

1 point