Leaderboard

Popular Content

Showing content with the highest reputation on 04/05/2018 in all areas

-

Panasonic Profile Stepper App

billdoubleu and 11 others reacted to BTM_Pix for a topic

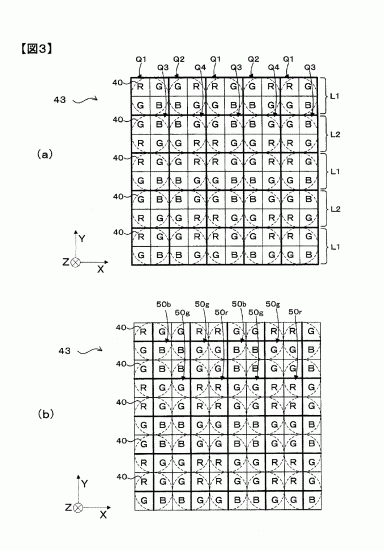

So, as you may recall, I have an interest in tweaking the combinations of the basic profiles in the camera along with the contrast/sharpness/saturation/NR parameters. The purpose of that is basically a mining operation to see if there are some combinations that perhaps may not be intuitive but whose interaction may yield pleasing results. Outside of the usual "select Cinelike D, reduce everything to -5" etc. stuff that we all do, who really knows if Natural with +4 contrast/-5 sharpness/+3 saturation/+2 noise reduction might actually be the golden ticket?

I did a hardware controller last year based on a MIDI control surface that let you tweak the parameters in real time which, whilst offering a way of 'discovery through play', wasn't exactly ideal from a structured testing point of view. It also wasn't particularly practical (or cheap) for you to go out and replicate yourselves if you fancied a go!

Anyway, in pursuit of something a bit more structured, I created a very simple automated testing app which I thought I might as well hand out in case anyone else is interested in this sort of thing. It's Android only, so you elitists with that other phone type are out of luck for now. As it's also a bit of a utilitarian thing that was only really designed for my own meddling, it's not exactly pretty either but it works. Mainly.

OK, so here is how it looks, how to use it and what it does. First things first, obviously, is to enable the Wifi on your Panasonic camera as you would if you were using their app, and then go into your phone/tablet and connect to it. In the ProfileStepper app, you then press the "Connect" button and it will take control of the camera. It might complain the first time about approving the connection but if it does just repeat and it will be fine.
Once connected, you press the individual parameter buttons and select the range that you are interested in. In this example we have set it to use the base Natural profile and show how it looks with all permutations of Contrast from -5 to +3, Sharpness from -2 to 0, Saturation from -5 to +5 and NR from -5 to +1. Next up is to set whether you want to use Stills or Video, which you toggle between by pressing the Stills button. Then, to create a delay (of between 1 and 10 seconds) between shots, you press the delay button (currently set to 2 seconds) and select it.

When you are happy with the settings range, press the Start button and the app will begin compiling the list of commands it needs to send to the camera. As this takes a variable amount of time (in our example there are 2079 commands) you may see a progress pop-up before it proceeds to the playout screen. Be patient. The progress screen will then appear and display the value of each parameter in the set it is sending to the camera and the current progress (in this case we are on set 4 of 2079).

After it sends each set to the camera, if you have the mode set to Stills it will then fire the shutter on the camera. Obviously, make sure you have a decent memory card capacity if you are testing a lot of permutations!

If you are set to Video mode, a recording will begin on the camera as soon as you press the Start button and the changes will all be recorded into this one recording. This is where the Delay function becomes useful as it determines how long each setting is held on the video as it progresses. As with the Stills mode, be careful regarding capacity as if you have 1000 permutations with a Delay time of 5 seconds then, well.... In either mode, pressing the Stop button will abort and return you to the main screen and, if you are in Video mode, it will stop the recording.
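For anyone wondering where the 2079 in the example comes from, it is just the product of the four range sizes (9 x 3 x 11 x 7). Here is a minimal Python sketch of that arithmetic, plus the rough video length for a given Delay. It is not the app's actual code: the names `RANGES`, `build_sets` and `video_seconds` are made up for illustration, the ordering of the sets is an assumption, and the estimate ignores the time taken to send each set.

```python
from itertools import product

# Hypothetical names; the ranges are the ones from the example above.
# range() excludes its end value, hence the "+ 1".
RANGES = {
    "contrast": range(-5, 3 + 1),         # 9 values
    "sharpness": range(-2, 0 + 1),        # 3 values
    "saturation": range(-5, 5 + 1),       # 11 values
    "noise_reduction": range(-5, 1 + 1),  # 7 values
}


def build_sets(ranges):
    """Return every combination of the parameter values as a list of dicts."""
    names = list(ranges)
    return [dict(zip(names, values)) for values in product(*ranges.values())]


def video_seconds(num_sets, delay_s):
    """Rough recording length if each set is held for delay_s seconds."""
    return num_sets * delay_s


sets = build_sets(RANGES)
print(len(sets))                      # 2079
print(video_seconds(1000, 5) / 60)    # roughly 83 minutes
```

So the "1000 permutations with a Delay time of 5 seconds" case works out to well over an hour of footage in a single recording, which is why narrowing the ranges first is worth the effort.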
The purpose of the progress screen is not just to provide you with a countdown of what's going on; it is also meant to act as a slate, so that you can put your phone/tablet in your test scene and the settings will then be embedded in the images/video when you review them. This is an example frame from a GX80 to illustrate. There is a 3 second delay built in to the beginning of the command playout process to allow you to do this, but obviously as the camera is being controlled over Wifi you can place the phone/tablet in the scene and press Start from there anyway. This just gives you a chance to get your hand out of the way!

So there you go. It's a bit of a niche app to say the least but might be useful for some. Bear in mind, it's not really meant to be about analysing every single permutation in one go (though it can do that if you want). It's more about helping you to look at how more targeted ranges of settings interact with each other (such as Saturation and Contrast in a specific Profile, for example), and what exactly the Noise Reduction is bringing to the party in terms of colour shifts and sharpening etc.

Download link is here: https://mega.nz/#!Vux3UDIK!TPJNYc8gJ6YrQY5T8xkZRAQ_IpBac16xIIGe3ET6R0Y

You'll need to set your device to install apps from non-Play store sources etc. It's completely free, no ads or any bollocks like that. As I say, it's my own test tool and wasn't designed for general release but I thought it might be useful to share. If nothing else, it's a quick way to get the Cinelike D hack on to your camera!

Because it's just a quick and dirty test tool, there is no particularly elegant error handling going on (other than telling you that you haven't connected the camera), so if it throws an error just quit and restart it. If you set the To range to be lower than the From range (e.g. the From is -3 and the To is -4) then it won't execute the commands, so don't do that.
NB - The eagle eyed amongst you may have spotted the Bracket function at the bottom of the screen. This does exactly what you might expect. It's unrelated to the main function, but I needed it to test something so you get it as a bonus! You set the shutter speed and then the number of 1/3rd steps above and below, press Bracket, and it takes the required frames with suitably altered shutter speeds. It's a bit flaky on some Panasonic cameras because they don't all allow the 1/3rd shutter changes, so your mileage may vary and, as I say, you can largely ignore it.

12 points -
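As a rough illustration of what 1/3rd steps mean for the shutter, each full stop doubles or halves the exposure time, so one 1/3rd step multiplies it by 2^(1/3). The Python sketch below is only that arithmetic under stated assumptions: `bracket_shutter_times` is a hypothetical helper, not something from the app, and real cameras round to nominal values (1/80, 1/100 and so on) rather than using the exact figures.

```python
def bracket_shutter_times(base_seconds, steps):
    """Exposure times from -steps to +steps in 1/3-stop increments.

    Each 1/3 stop scales the exposure time by 2 ** (1 / 3).
    Hypothetical helper for illustration only.
    """
    return [base_seconds * 2 ** (k / 3) for k in range(-steps, steps + 1)]


# Base of 1/60 s with three 1/3rd steps either side: seven frames in total,
# running from a full stop faster to a full stop slower than the base.
times = bracket_shutter_times(1 / 60, 3)
for t in times:
    print(f"1/{1 / t:.0f} s")
```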

Apple leaving professional market?

EthanAlexander and 3 others reacted to IronFilm for a topic

Someone could have asked a while ago: why would anyone ever buy a phone from a computer company?!

4 points -

Canon Full Frame Mirrorless is just around the corner?

TwoScoops and 2 others reacted to webrunner5 for a topic

3 points -


How Many Stops of Dynamic Range Needed for Cinematic Look?

maxmizer and 2 others reacted to Mark Romero 2 for a topic

Firstly, the short video clip you posted looks good, @jonpais. The sticking point for me is what is meant by "cinematic," since it is a word bandied about so often.

I don't think it is specifically the dynamic range of a camera per se that is the main concern. (As someone pointed out, some of the more popular film stocks had limited dynamic range.) I would say that, in terms of brightness and darkness, cinematic in part to me means "controlled lighting" or maybe something more like "well managed lighting." But that is just one part of the recipe for cinematic for me. And "well managed lighting" could be everything from using a cheap foam board reflector to using fill lights to shooting in open shade to shooting at the right time of day to using a camera with more stops of DR. So in essence, I think we might be barking up the wrong tree if we look at it as just "how many stops of DR are needed."

Getting back to the nice sample footage you posted. As someone pointed out above, not a whole lot of DR in those shots. What would have made it look more cinematic? Maybe some of these MIGHT make it look more cinematic (maybe or maybe not - I am not implying at all that you SHOULD do these things, just saying that some people might feel your footage is more cinematic if you were to do these things, although others might not):

- gelling your key light
- shallower depth of field
- epic sounding background music
- using a diffusion filter
- more base makeup on the talent
- stronger grading of the footage
- adding film grain
- adding audio from the environment

~~~~~~~

Man, I ramble on a lot. I guess it boils down to managing your lighting, in terms of dynamic range, which you did well in the clips you posted.

3 points -

Not much love for Nikon D850

dahlfors and 2 others reacted to Rodolfo Fernandes for a topic

So I heard about the D850 when it came out, and was willing to pull the trigger on it and sell my a6500 and D800 to cover it, and as soon as I read what Andrew wrote about it, it confirmed what I was expecting. Fast forward 3 months and I did my first little job with it and really liked what came out of it. I am still trying to figure out how to get the footage from the Mavic Pro to look cleaner and softer without becoming too mushy, so excuse that part, but the colors straight from the camera are awesome and the detail on the 120fps is amazing. Coming from an a6500, this is night and day.

3 points -

Looks like Apple is spending a lot of time to create a great pro system for the real pros. A whole new Mac Pro is coming in 2019: https://techcrunch.com/2018/04/05/apples-2019-imac-pro-will-be-shaped-by-workflows/

2 points

-

You guys should just go out and buy an entry level Canon then. Why spend thousands upon thousands of dollars on cameras and native lenses? I mean, it’s all up to the operator, right? Obviously, I was being sarcastic there and obviously all of the points that talent and skill will make any camera look good are valid and probably the most important ingredient to a cinematic image. Now if only Hollywood Line Producers were reading this thread, then every film from this point on could be shot on a (insert consumer camera here).

2 points

-

How Many Stops of Dynamic Range Needed for Cinematic Look?

Mark Romero 2 and one other reacted to DBounce for a topic

Agreed, but at least you now know the worst case scenario. And I used to say everything looks cinematic in slowmo. This video has proved me wrong.

2 points -

Yes, I think this is really becoming the case. Framing, Lighting, Lenses and Filters. These are all important for getting the look. The first two have no quick and easy fix... You have to learn them and experiment... or bring in someone that knows them already. Of all of this, something unmentioned is the actual on-camera talent. It’s them that sell the story. A compelling enough performance and story will soon make the viewer forget what lens or camera was used. They will be too busy watching. I think this is preferable. I know I don’t want viewers being absorbed with technical questions about lenses and cameras when I am trying to portray a story.

2 points

-

After watching that Steve Yedlin video again, if I had £10K to spend and wanted a pain free way to get a cinematic look, I'd buy an Alexa for £8K off eBay and give the rest to him to set it up and teach me his workflow.

2 points

-

I thought they did plenty well enough without my ham fisted blundering about! It's portable to the NX though from what I can see of their protocol. If I had one. But prices seemed to take a big jump in the last few weeks of March for some reason.

2 points

-

@BTM_Pix Great analytical mind, just wish you were in the NX camp when the mod/hack scene was a thing! Amazing stuff, nevertheless!

2 points

-


I think where we may end up with this is concluding that the only way to truly differentiate the motion cadence between two cameras is by shooting unicorns in full gallop. So it doesn't matter whether you go there for fuel or chicken, you're going to end up with gas either way. Smart marketing.

2 points

-


Fuji X-H1. IBIS, Phase Detect 4K beast?

Emanuel reacted to Brian Williams for a topic

Well I liked the E-M1ii quite a bit, but knowing that the GH5 had 10bit, 400Mbps ALL-I kept gnawing at me (even though I had no issue with the video I was getting from the E-M1ii), so I ended up switching. And the GH5 I quite liked as well, though its AF was definitely a step down from the phase-detection on the Olympus, so again I'm never happy. But everything was fine, until the A7iii was announced, for basically the same price I paid for the GH5, and it just seemed to be by far the smart choice all around. And I've owned quite a few Sonys, and besides the rolling shutter and boring ergonomics, the picture and AF were always solid. The problem is that while waiting for the A7iii to come out, I started seeing all these rave reviews of the Fuji, a company I also love quite a bit, so here I am. I think it comes down to the fact that I can't afford more than one camera, so I want to make sure I'm getting the best deal for the price (which is completely relative and unobtainable). I really had been doing quite well with sticking to one camera for the past year or so until a few months ago...

1 point -


How Many Stops of Dynamic Range Needed for Cinematic Look?

IronFilm reacted to Mark Romero 2 for a topic

@jonpais Getting back to the original question you asked about DR, and to sort of add to the point of being "cinematic" in general... I think it really boils down to "not looking like video." Think of all the BAD qualities of broadcast video and of early video cameras. Surely limited DR was part of that, but there were other technical (and artistic) things that scream "VIDEO." I think as the video format became the option for lower budget content creators, there is just a sort of mental connection that low-budget-looking productions are video, and more polished productions are cinematic.

One way to look at this is to ask yourself "What ISN'T cinematic?" To me, cinematic ISN'T:

- blown highlights / horrible rolloff
- oversharpened
- compressed skin tones

Add on to that artistic features that were amateurish.

1 point -

Working OK with no issues at the moment. Really helpful for matching the color style of different Lumix cameras and fine tuning in the field. Now that you have all my phone contacts, don't forget to call my mom and tell her I will be at her house for lunch next Saturday... Thanks a lot, you are great!!!

1 point

-

Apple leaving professional market?

salim reacted to Trek of Joy for a topic

The Pro Workflow Team cited in that article is interesting - Apple has hired a bunch of creatives to put its hardware/software to work in real world production scenarios in order to see how various workflows are structured, and how they can design to make them more efficient. The eGPU with a MacBook Pro working on an 8K stream in real time was also really interesting. 8K editing, grading and effects on a MBP, nice.

Chris

1 point -

Fuji X-H1. IBIS, Phase Detect 4K beast?

Emanuel reacted to Brian Williams for a topic

All true, I guess I'm just coming off my few months of GH5/E-M1ii ownership where I was truly spoiled in that regard.

1 point -

Oh man, that's a real quick app!! Thanks!!!

1 point

-


few random questions

elgabogomez reacted to HockeyFan12 for a topic

I wouldn't know since I must be in the other 5%. It's a huge problem for me.

1 point -

It makes no sense.

1 point

-

Well there was recently a major motion picture shot on an iPhone... this is only going to become more common. There are definite advantages to small size and low weight. Red is working on their new Hydrogen smartphone, so who knows. I think this work was pretty good, not because of any technical reason, it was just shot and edited with some thought. Granted, if you want to nitpick you will have many technical things to pick at. It was after all shot on an iPhone... but I think it’s pretty good nonetheless. And for the record, the better, smaller and cheaper cameras become, the cheaper and faster we will all be able to produce material. Who’s not for that? I’m packing for NAB, and trying to decide what has to stay behind. Will take the GH5/S for testing with some new lenses. But wouldn’t it be nice if your smartphone could do it all? DOF, Anamorphic, low-light, DR, built-in NDs... We can dream.

1 point

-

I agree regarding a real time tweaker (as with my hardware one) but, yes, this is for a different purpose: to build a complete reference file of all the permutations in a very structured way. Let me get back to you about a tweaker app as it's not a huge effort to knock one together if you want it.

1 point

-

The Canon C200 is here and its a bomb!

mercer reacted to Don Kotlos for a topic

1 point -

NX1 Extended Dynamic Range? New Settings.

Pavel Mašek reacted to Happy Daze for a topic

You are correct Pavel, as far as negative RGB is concerned highlight control is a problem. The histogram shows that the highlights are well within range, but they are in reality clipping at a lower level than 255, more like 235/245. That's why everything above these levels is a flat grey.

1 point -

Interesting, I really think All-I frames probably make a difference as well.

1 point

-

Can someone help me to match Yi 4k footage with Panasonic cameras?

Amazeballs reacted to kye for a topic

No idea on LUTs but this tutorial might help if you're just starting out and are unfamiliar with matching shots? If you don't use Resolve then it might be worth a watch anyway as the controls he uses are fairly standard ones.

1 point -

Apple leaving professional market?

Castorp reacted to Andrew Reid for a topic

Why wouldn't it be an x86 instruction set compatible chip? Just because they are currently making ARM based processors doesn't mean to say Apple can't do an AMD type CPU in future. Laptops and desktops don't really need RISC architecture... Whether pro or not. Apple will stay in the pro market and everything else is just speculation for clicks.

1 point -

How Many Stops of Dynamic Range Needed for Cinematic Look?

kidzrevil reacted to AaronChicago for a topic

There's definitely a camera element involved. I'm just saying we've hit it. Back in 2009 one could argue the tech still needed to come a way to be affordable + cinematic. That has plateaued in my opinion though.

1 point -

Fuji X-H1. IBIS, Phase Detect 4K beast?

Brian Williams reacted to KitaCam for a topic

Does Andrew have some clairvoyant type knowledge of some future 'big' video related firmware update, I wonder? Would make a lot of sense. My anticipation is that zebras and/or false colour could come, as well as general minor tweaks. Could there be limited-record 4K 60p as well? Panning (in video) stabili(s)ation (hehe) tweaks perhaps, if this is even a confirmed issue or room for improvement. The stabilisation is great, but really it's not at present walking-about great in my view, especially coupled with let's say average rolling shutter performance. My experience is that it's great for what it is, careful and considered hand held shooting, though don't expect wonders if moving about much unless perhaps coupled with your gimbal of choice....

1 point -

BMPC 4k vs. GH5

webrunner5 reacted to IronFilm for a topic

I got my monitor mount on my Sony PMW-F3 that way from Zacuto.

1 point -

I had the same question a few months ago, and ended up going with the GH5. I was specifically attracted to the anamorphic mode to use with a 2x lens (Sankor 16c + SLR Magic Rangefinder). The GH5 is the first camera I've used the Sankor with that got an acceptably sharp image. (And I don't have to cut off the sides, I can see the image de-squeezed in the camera, etc.) Yes, I would much rather have ProRes (or raw) files than the compressed codec the GH5 gives me, but the overall usability of the camera for anamorphic shooting is absolutely fantastic.

1 point

-

Motion Cadence

jonpais reacted to Mark Romero 2 for a topic

Yeah, back when I lived in Thailand in the 90s for 4 years, I would have killed someone for tacos.

1 point -

Canon Full Frame Mirrorless is just around the corner?

webrunner5 reacted to IronFilm for a topic

Meanwhile Nikon still has their Nikon D850 out of stock, EIGHT MONTHS after release! https://nikonrumors.com/2018/04/02/nikon-d850-filmmakers-kit-now-in-stock-in-the-us.aspx/ And this is why Canikon can be pretty complacent about Panasonic/Sony for now.

1 point -

Your Nikon mirrorless wishes

Trek of Joy reacted to IronFilm for a topic

1 point -

I largely think we've managed to get things arse backwards when it comes to emulating a film look. Because everyone now has the ability to change everything about an image in post there is barely any emphasis on getting it right - or at least consistent - in camera first.

If a camera manufacturer said: "OK, we are putting all our eggs in one basket here but we have absolutely perfected an in camera look that is identical to Kodak Vision 250D (for example). Our entire sensor and processing design is based on purely just replicating that but we guarantee that if you shoot with this it will be indistinguishable from if you'd used that stock. Oh and you'll only be able to use it to shoot up to ISO800 though". Would you buy it? I think I would.

Personally speaking, taking away all those variables that offer endless rabbit holes to explore (but often just disappear down) and making something work within a constant framework would be challenging but ultimately more creative and productive.

This is a really interesting piece about preparation in camera to match film and the follow up piece is here: http://yedlin.net/OnColorScience/

It's quite timely that this has come up because I've been interested in tweaking combinations of base colour profiles and contrast/saturation/sharpness etc. parameters in Panasonic cameras for a while with a view to mining some in camera combinations that may not be obvious ones but actually work well visually. The timely part is I've just finished an app today that does automated stepping through all combinations (from selectable ranges) whilst either capturing a still per combo or as one continuous video with the changes embedded. I'll give it its own thread tomorrow if anyone is interested.

1 point

-

How much bit depth? (10-bit / 12-bit / 14-bit)

kye reacted to HockeyFan12 for a topic

Codec is WAY more important, but the whole bit thing is kind of a mess. The human eye is estimated to see about 10 million colors and most people can't flawlessly pass color tests online, even though most decent 8 bit or 6 bit FRC monitors can display well over ten million colors: 8 bit color is 16.7 million colors, more than 10 million. And remember sRGB/rec709 is a tiny colorspace compared with what the human eye can see anyway, meaning 16.7 million fit into a smaller space should be plenty overkill. But also remember that digital gamuts are triangle shaped and the human eye's gamut is a blob, so covering the whole blob with a triangle requires overshooting tremendously on the chromaticities, resulting in many of those colors in digital gamuts being imaginary colors.... so the whole "8 bit is bad" thing needs a lot of caveats in the first place...

I haven't tried 10 bit raw from the 5D, but I suspect in certain circumstances (100 ISO just above the noise floor) 10 bit will have visibly higher contrast noise than 14 bit after grading, though the difference will only be apparent if it's the exact same frame and you A/B it. That's my guess. Something VERY subtle but not truly invisible, though possibly effectively invisible. It's possible there could be banding, too, but the 5D III sensor is quite noisy.

The science behind it is so complicated I gave up trying to understand. The more I learned the more I realized I didn't understand anything at all. First you're dealing with the thickness of the bayer filter array and how that dictates how wide the gamut is, then you're dealing with noise floor and quantization error and how that works as dithering but there's also read noise that can have patterns, which don't dither properly, then you're dealing with linear raw data being transformed with a certain algorithm to a given display or grading gamma, as well as translating to a given gamut (rec709, rec2020, etc.) and how wide that gamut is relative to the human eye and how much of the color there is imaginary color, and then what bit depth you need to fit that transformed data (less than you started with, but it depends on a lot of variables how much less), and then you introduce more dithering from noise or more banding from noise reduction, then compression artifacts working as noise reduction and to increase banding via macro blocking, then there's sharpening and other processing, then... then it goes on and on to the display and to the eye and of course that's only for a still image. Macroblocking and banding aren't always visible in motion, even if they are in a still, depending on the temporal banding and if the codec is intraframe or inter-frame.

It's possible everyone who's proselytizing about this understands it far better than I do (I don't understand it well at all, I admit). But I frequently read gross misunderstandings of bit depth and color space online, so I sort of doubt that every armchair engineer is also a real one. (That said, there are some real engineers online, I just don't understand everything they write since I'm not among them.) I know just enough about this to know I don't know anything about this.

From the armchair engineers, we do have some useful heuristics (overexposed flat log gamma at 8 bits heavily compressed will probably look bad; raw will probably look good), but even those aren't hard and fast rules, not even close to it. All you can do beyond that is your own tests. Even inexpensive monitors these days can display close to 100% NTSC. They should be good enough for most of us until HDR catches on, and when it does bit depth will matter a lot more.

1 point -
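The "8 bit color is 16.7 million colors" figure above is just 2^8 levels per channel, cubed for the three RGB channels. A minimal Python sketch of that arithmetic follows; the function names are made up for illustration, and it says nothing about how many of those code values are actually distinguishable, which is the messy part described above. Note too that raw bit depth (10 vs 14 bit) counts levels per photosite rather than RGB triples, so only the first function applies there.

```python
def levels_per_channel(bits):
    """Number of code values a single channel (or photosite) can take."""
    return 2 ** bits


def rgb_colors(bits_per_channel):
    """Number of distinct RGB triples at a given per-channel bit depth."""
    return levels_per_channel(bits_per_channel) ** 3


for bits in (8, 10):
    print(bits, levels_per_channel(bits), f"{rgb_colors(bits):,}")
# 8  -> 256 levels,  16,777,216 colors
# 10 -> 1024 levels, 1,073,741,824 colors

# Raw files: levels per photosite at 10 bit and 14 bit
print(levels_per_channel(10), levels_per_channel(14))  # 1024 16384
```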

I'm going to go out on a limb and suggest the reason footage from professional level cameras looks more cinematic than most footage from amateur cameras is more to do with the professionals behind the "professional" cameras. There's a reason lights come before camera. Capturing what the eye sees is not what cinematography is about. It's about creating art.

1 point

-

How Many Stops of Dynamic Range Needed for Cinematic Look?

maxmizer reacted to AaronChicago for a topic

We've hit the point where there is NO excuse for not shooting cinematic footage with even a $1000 camera. Everything from now on will just be nitpicking features to make things easier/quicker/efficient.

1 point -

How Many Stops of Dynamic Range Needed for Cinematic Look?

maxmizer reacted to Robert Collins for a topic

My feeling is that there is an element to which the 'filmic look' gets confused with dynamic range. For instance, we tend to associate 'log' footage with both 'high dynamic range' and the 'filmic look'. Log footage is 'low contrast' and 'low saturation' irrespective of how much dynamic range is actually in the scene. And we associate low contrast and low saturation with a filmic look. If you take a look at Jon's footage, you can see it doesn't contain a lot of stops of dynamic range (see histogram) and even the orange shirt is pretty desaturated (see color wheel).

1 point -

Storm is coming... SD Card writing speed hack

If you are familiar with the ML possibilities, you already know the best RAW capable cameras are those with a CF card. The CF card interface is capable of 70MB/s writing speed with the 5D2, 75MB/s with the 7D, and 95MB/s with the 5D3. The 6D / 700D, which have an SD interface, are only capable of 40MB/s, limited by the camera and not the SD card. Now the ML team are pumping up the SD card interface writing speed. It seems like the 40MB/s can be raised up to 70MB/s or even further. That means the 700D will be able to record in 2520x1072 continuously. I hope they can make it stable.

1 point

-

Canon Full Frame Mirrorless is just around the corner?

webrunner5 reacted to Robin Billingham for a topic

I have a mixed audio background. I toured with a couple of metal bands (playing guitar) for a few years. I had my own small recording studio in the UK and then ran this one for a few years: https://www.lodgerecording.co.uk I have also toured doing live sound on and off. Most of my income is currently from song royalties: my own, my old bands', tracks I wrote or contributed to, and I have a growing collection of library music with a couple of publishers. Other than that I still do some producing and mixing freelance. I have never worked on sound for film like you. With film I'm happier on the camera and/or lighting mostly, but I have also started making shorts and am slowly working on a feature idea. Checked out your site.. Great stuff, got some nice gear too!

1 point -

My first wish is that they (and Canon) just get on with it.

1 point

-

Would You Perhaps Be Interested In A Different GX80/85 Colour Profile???

seppling reacted to Andrew Reid for a topic

I have upgraded your forum profile to a Super Member so you can edit your original post and generally have mod-like access to the forum. Thanks for all your efforts so far on the project.

1 point