Leaderboard

Popular Content

Showing content with the highest reputation on 07/04/2018 in all areas

-

Unless you've got a cat http://www.reduser.net/forum/showthread.php?168589-The-Affect-of-Cat-Pee-On-RED-Accessories-(You-ll-want-to-read-this) 4 points

-

Video Compression Kills Grain :(

mercer and 3 others reacted to webrunner5 for a topic

What is also interesting is that each eye is split in half. One side of our brain sees one half, the other side the other half. So one side sees the left of each eye, the other side sees the right of each eye. I know that because my daughter's last baby had a stroke in the womb. So when she was about 2 years old they had a doctor fly in, from Germany I think, and literally cut out the half of her brain that had died from the stroke. So now she only sees on the left side of each eye. She is 5 years old now and doing surprisingly well. The brain that is left is rewiring itself to do some of what the other side did. She will never be normal, but it is pretty crazy that they can do what they did. But they can only do it up to around 6 years old. Any older and you can't rewire like you can when you are really young. She had something like 200 seizures or more a day for a long time, and now she takes liquid medical marijuana and is down to only about 15 a day. It helps with her pain also. She has a drop foot too, and they have done some crazy open spine operations on her for that. The Ronald McDonald House has paid every dime of her costs since she was born, along with my daughter's costs to be with her in LA for operations. Amazing stuff happens behind the scenes that few know about. 4 points -

Nikon full frame mirrorless camera specs

ND64 and 2 others reacted to Robert Collins for a topic

This is possibly worth its own thread. According to Nikonrumors (no idea how reliable they are), Nikon mirrorless is coming, and coming soon (possibly later this month). https://nikonrumors.com/2018/07/03/first-set-of-rumored-specifications-for-the-nikon-mirrorless-cameras.aspx/ The specs sound very similar to the Sony A7iii and A7riii. (Which sounds good to me.) The big surprise (at least to me) is that it will supposedly have IBIS. It appears to have a new mount which will take an adaptor for F-mount lenses. 3 points -

Here's my first quick test with mv1080 full sensor recording on the EOS M (1736x1120), no crop and continuous recording in 14-bit lossless raw. I'm happy with the image quality and moire isn't too bad. I think YouTube softens the image slightly, so I have uploaded at 1440p, which hopefully looks a bit better. @webrunner5 In crop mode at 2520x1080 or 2520x1386 the EF-M 15-45mm lens is 50-150mm full frame equivalent (3.33x crop). With a 0.71x speed booster that will be a 2.36x crop, so using an EF-S 10-18mm (modified to fit the speed booster) would result in 24-43mm full frame equivalent. The EF-M 15-45mm won't work with the speed booster. The River Thames video I shot at 2224x1200, which has a crop factor of 3.75x, so the 15-45mm lens was 56-170mm equivalent there. The crop factor changes depending on the resolution selected; values can be calculated here: http://rawcalculator.bitballoon.com/calculator_desktop 3 points
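The equivalent focal lengths quoted in this post are simple multiplications, which can be sketched as below. This is only a minimal illustration using the crop factors and the 0.71x speed booster figure given in the post; the function name `equivalent_focal_length` is made up for this example and has nothing to do with the linked calculator.

```python
def equivalent_focal_length(focal_mm, crop_factor, reducer=1.0):
    """Return the full frame equivalent focal length in mm.

    focal_mm    -- focal length marked on the lens
    crop_factor -- crop factor of the recording mode (e.g. 3.33 from the post)
    reducer     -- speed booster magnification, 1.0 when no booster is fitted
    """
    return focal_mm * crop_factor * reducer


if __name__ == "__main__":
    # EF-M 15-45mm in the 3.33x crop mode, no speed booster
    wide = equivalent_focal_length(15, 3.33)
    tele = equivalent_focal_length(45, 3.33)
    print(f"15-45mm at 3.33x: {wide:.0f}-{tele:.0f}mm equivalent")

    # EF-S 10-18mm behind a 0.71x speed booster in the same crop mode
    wide = equivalent_focal_length(10, 3.33, reducer=0.71)
    tele = equivalent_focal_length(18, 3.33, reducer=0.71)
    print(f"10-18mm at 3.33x with 0.71x booster: {wide:.0f}-{tele:.0f}mm equivalent")
```

The results land on the rounded figures in the post (50-150mm and 24-43mm); the 3.75x case gives roughly 56-169mm, which the post rounds to 56-170mm.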

-

Where do you store your gear? Post pics!

JordanWright and one other reacted to Fábio Pinto for a topic

I have 3 cats. The worst that happened was also while I was out on vacation. I had the lenses on the shelf and when I got back the Canon 24-105mm f/4 was on the floor. That's one of the reasons I wanna have the equipment accessible but secure. 2 points -

So, after a 129-page thread, we have started to be permanently educated about all kinds of professional life experiences, thoughts and achievements of respected member @John Brawley: his personal views on the art of directing, blocking, etc., on (the congruence and suitability of) cameras and mounts, on TV shows, his ideas and comments about the future production prospects of different camera makers, a little about the eventual fate of different kinds of directors, as well as some important memories, friendships and personally relevant encounters... It seems that, without doubt, it is extremely important for all EOSHD members to read and learn from these precious insights, but wouldn't it be better to open a new thread just for Mr Brawley's advice, tips, suggestions and opinions, where it would be possible to pose similar questions and follow the free branching of topics and comments? 2 points

-

Artistic / aesthetic use of Bokeh?

Raafi Rivero and one other reacted to Ehetyz for a topic

Eh, Bokeh is alright and good bokeh and separation are important "tools" for a cinematographer, much like any other visual styling. Being for or against distinctive bokeh is kind of moot. It's like going "yeah three-point-lighting is bad, you shouldn't use it because it's artificial". Cinematography doesn't always aim to reproduce reality in an accurate manner. I'd say it's more often the opposite. With pronounced bokeh, like with any tool, you just have to have some taste and brains. Sometimes it works for what you want to achieve, sometimes it doesn't. Edit: I guess I should mention that the times I HAVE messed around with the bokeh in my work, be it with vintage lenses or with self-made bokeh modifiers, it has always garnered praise and astonishment from peers. You can do a lot by reshaping the out of focus areas of your image. 2 points -

I knew it! The A7SIII will shoot MEDIUM FORMAT 8K RAW!!! ....the delays in announcing are just them working out how to stop it catching fire. 2 points

-

2 points

-

I'm not so sure about that. The Hasselblad Lunar had a fully capable E-mount. Also, the physical E-mount has already appeared with at least two other camera systems, and that physical mount has been offered separately online for some time. No doubt, it has occurred to Sony's camera division that they could sell more lenses if the E-mount were widely adopted. In light of the Sony CEO's recent declaration that the company is moving away from manufacturing "gadgets" (apparently including digital cameras), it certainly is conceivable that their camera division might consider selling more lenses, in deference to their scrutinized bottom line. I did not know that there are that many EF lenses. That's incredible. The EF scourge is even more prevalent than I realized! So, if I want to get serious, I should ditch my set of M-mount Summicrons and get a set of PL Tokinas? Does that include the PL rehousings of FF (and MF still) glass, especially those that are being used with the recent large format cinema cameras? Or is it just using an adapter with a stock still lens that is amateurish? Well, I suppose some folks are more "adaptable" than others. I have done okay changing between different mounts and adapters in fairly rapid shoots. With a couple of ACs, usually one of them knows how to mount a speed booster, so it makes things much easier. It seems to me that "futzing" is sometimes a part of filmmaking, especially if one is trying something completely new. Furthermore, if a little futzing adds some distinctiveness that sets my work apart from the run-of-the-mill, I will gladly futz. Huh? If you are referring to my earlier mention of the JVC LS300, I brought it up because it merely proves that an M4/3 mount works fine with a S35 sensor. I would not know a show shot with that camera nor with most any other camera. 
On the other hand, I have seen some good footage from the LS300, including clips shot by our own @Mattias_Burling. I would guess that we differ slightly in regards to the notion of what constitutes "amazing creative work" (not that one notion is better than the other). I am not familiar enough with most of the existing footage from the LS300, but I think that its special capabilities shine if one shoots with a set of lenses made for different formats or if one uses focal reducers or tilt adapters with a S35 sensor. I'm sorry, but I have to disparage some camera manufacturers for their arrogance and short-sightedness (who are possibly unlike the two manufacturers that you disparage). Outfits like BMD, Red and Canon, etc. are not interested in the fact that what I advocate does not preclude the use of EF lenses to their full capability, nor are they interested in the fact that what I propose requires ABSOLUTELY NO FUTZING for EF users. There are several inexpensive ways to make such a versatile front end, with EF users remaining completely clueless to the fact that the EF front is removable for those who need a shallower mount. The simplest example that I can give is to merely imagine a Red camera, but with its lens mount plate set further back to accommodate a shallow mount (such as the E-mount, M4/3, EF-M, Fuji X,... whatever). If such a camera is shipped with a smart EF lens plate already bolted on, the clueless EF users won't notice any difference, and such hidden versatility won't affect sales figures at all. In regards to your mention of Kinefinity, a typical shooter might consider them marginal. However, Kinefinity has already beaten the larger "non-marginal" BMD (and several others) to a few important milestones, including offering a raw, M4/3 4k camera and offering a raw, FF camera. Well, the market has also said that it prefers Miley Cyrus and Justin Bieber over the Beatles. 
Those two scenarios are not exactly what I am advocating, but I would certainly be fine with either. Again, with the right front end design, most would never know that a camera has (or can have) a shallower mount, and the camera manufacturer would not even need to supply an E-mount -- it would not be "commercial suicide." Furthermore, the notion that a S35 sensor is "LARGER" than an M4/3 mount is completely arbitrary -- especially since the LS300 (and other camera/adapter combos) proves that such a configuration works. Actually, it doesn't (not that I find anything wrong with using adapters). I have heard that excuse before, but if the front end is properly designed, there is no problem. Also, even if such a camera only has an M4/3 mount, a prominent qualifier in all literature and on all pertinent web pages should prevent most such problems. 2 points

-

Artistic / aesthetic use of Bokeh?

Kisaha and one other reacted to webrunner5 for a topic

I am not too big of a Bokeh fan at all. Now some isolation, sure, that works, but total out of focus blurs, nah, I'll pass. We don't see that kind of stuff in real life unless our eyes are watering or something is so far away we can't focus on it, like headlights in the far distance. And even that is more distracting than pleasing. To me it is overused to hell and back, especially at night in city scenes. Sure you are going to see it in scenes naturally because you are never going to be at infinity all the time, but to do that silly slow zoom out stuff over and over to get in focus, Jesus I hate that. It's been used a million times. Now something like what I took today, yeah that works. That is not too radical to me. Pentax M42 55mm f/2.0 @ f/5.6 on my Sony A7s. One of those 4 lenses I bought for a total of 16 dollars, along with the camera and camera bag, at a thrift store. 2 of the 4 are super sharp and have a nice coloring to the lens output. Sort of Leica-like looking. Both of them were the Pentax ones. The 135mm 2.8 Pentax M42 is crazy good. The car shot is the 135mm. The Canon FD is pretty good, but sterile. There is one called a Toyo and it is hard to tell it is so wide, a 24mm at f/2.8. I like it though. I have not taken anything super close with it yet. 2 points -

Blackmagic Pocket Cinema Camera 4K

IronFilm and one other reacted to John Brawley for a topic

A setup is anytime the script supervisor changes the ID on the slate. That's a lens change or a substantial change in storytelling shot construction. On take 1 of a setup, if there's something blocking and screwing up the shot and you have to move the A camera six feet to get around it, then the shot is considered the same shot, you go to take 2 and it's the same setup because the intention is the same. Kind of the same if the lens change is from a 27mm to a 24mm because you're not quite fitting everything in. Sometimes though on take 3, the B camera has gotten what they need and you give them a different shot to do, so that would generate a new setup and a new ID on the slate. (Don't get me started on the differences between US and AU slate IDs.) The lighting is always being tweaked to each CAMERA (not shot) so that's constantly updating in small ways. I tend to have some go-to ways to light for cross shooting and it's often established on the first setup and then tweaked for each subsequent setup. Kubrick seems to have the reputation for the most number of takes. "For The Shining I spent two weeks on the set in Elstree. My scene with Jack Nicholson lasted about eight minutes. We shot it 50 or 60 times, I should think - always in one take. Then Jack Nicholson, Stanley and I would sit down and look at each take on a video. Jack would say, 'That was pretty good, wasn't it, Stanley?' And Stanley would say, 'Yes it was. Now let's do it again'." I always wanted to be in the camera department. My first job was working for a camera rental house but the owner was a working DP. He shot a lot of documentary work and TV promos. I was his full time assistant for nearly 5 years. It was really like going to DP school for 5 years. But being his assistant meant you had to record sound. So I learnt to record sound. 
He was by far the biggest single influence on my working style. He taught me the importance of a bedside manner on set, he taught me to pull my own focus when shooting (when necessary), he taught me how to test, how to be hungry, to learn. He was a technology innovator and a true pioneer. One of the first to embrace HDTV in Australia. One of the first to buy RED cameras and advocate them. I wrote about his passing here. https://johnbrawley.wordpress.com/2011/04/17/the-passing-of-john-bowring-acs/ It's never too late to change. I'm not sure what you mean when you ask "finish an episode". It NEVER goes longer than the number of days. It's scheduled within an inch of its life and if the schedule isn't make-able then it's re-written. TV drama and docos are what I've trained in. The pace is not new. What's gotten better is the production standards generally. We've gone from using 2/3" video cameras to S35 sized sensors and cinema style framing and editorial style. Streaming services and VOD have led to a new era of "elevated" TV drama. It's shot like a movie, it's got MOVIE actors and directors working on it and it's visually told in "movie" style choices. Except we still have to shoot it as fast. Yeah I know time is always the enemy. Typically a movie, even a low budget one, aims for 2-3 mins of screen time a day. TV drama is typically 6-8 mins on location and 7-12 mins in a studio. I like shooting with more cameras (three full time) because it gets me more shots from the same number of setups. More shots = more coverage. Simple maths. Some shows are different. A show like Stranger Things shoots an episode every 14 days. They shoot it "one camera" style and this takes longer. I know some crew on that and they tell me that they never make their days and that the directors on that can pretty much do whatever they want and Netflix don't care much about the show's budget. 
But that kind of "auteur" perspective in a TV show is pretty rare and unusual and is only permitted because of the show's great success. Arguably this could be why it's successful too. Most directors would be fired or never re-hired again if they didn't deliver an ep in their allowed days. When I look up directors I'm about to work with, I look at how many episodes they've done on a show. If they've only done one and never gone back, it's a pretty good sign they're going to be....difficult. I know a great director who started doing TV drama. He loved big architectural wide shots. We shot a few scenes without ANY close coverage. I begged him to shoot close up passes "just in case" and he was adamant. Nope. If we shoot those shots they'll use them! He got reamed in the edit when the producers' screening happened. They asked for closeups on his edit and he said he didn't have any. He's never been employed again by one of Australia's most prolific producers. If any other producers call him to ask for a reference, guess what he's going to tell them. TV truly is a "producers" medium and these days, most of the producers are really writers. JB 2 points -

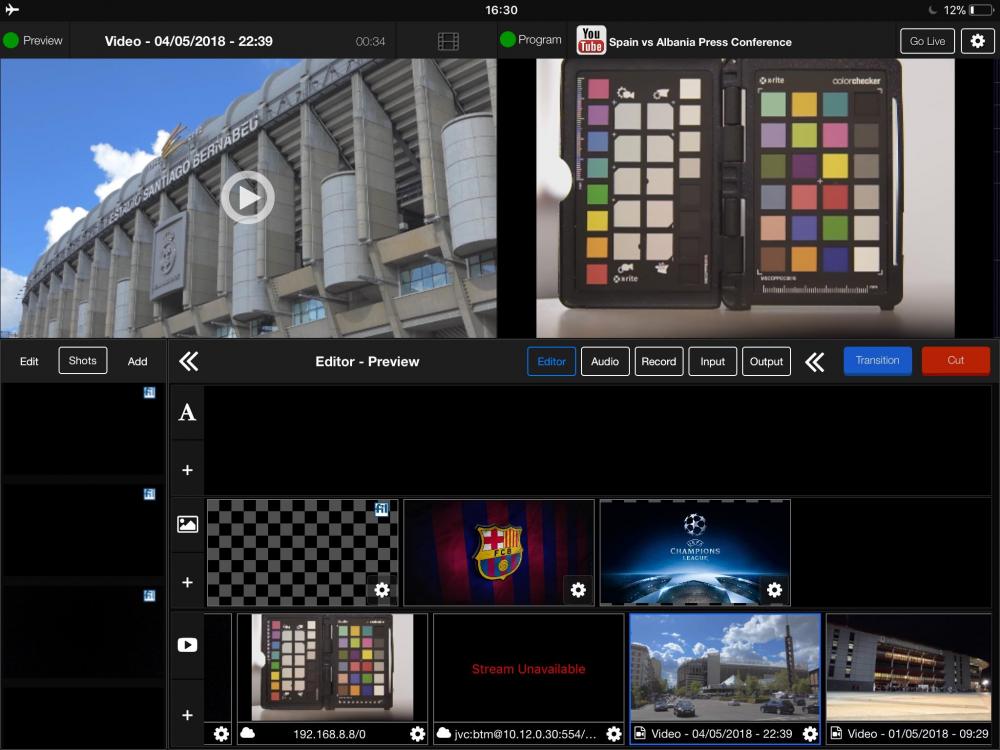

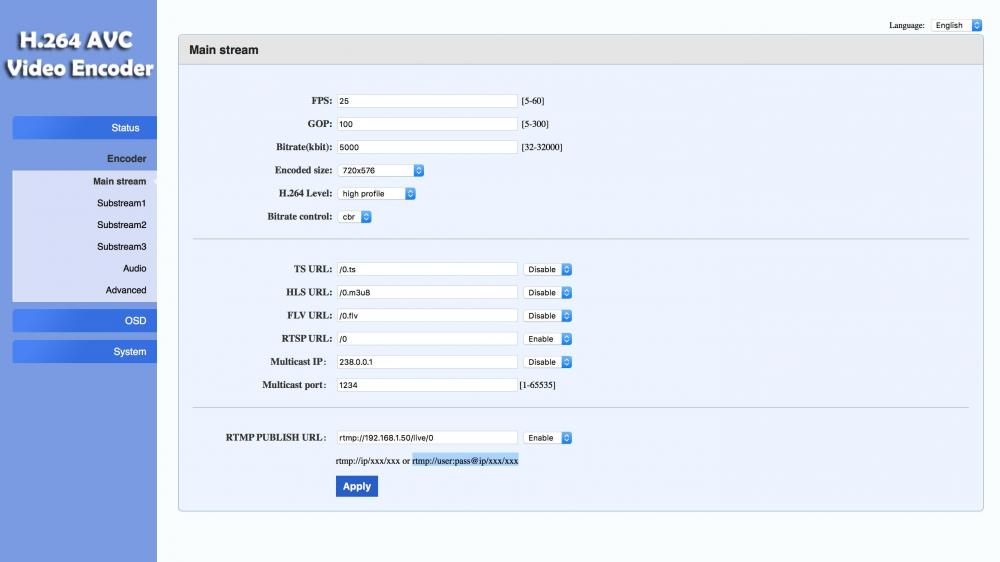

This will probably only have an audience of one - hello @IronFilm - but might be of use for anyone else who has a need for what it does. So what is it? It’s a small(ish) box that takes an HDMI input and encodes it into a live H.264 IP data stream that can then be used for monitoring or as an input source by devices on a local network, or pushed to streaming services such as YouTube, Facebook etc. The version I have has an integrated Sony NP battery mount and hotshoe mount so is a completely standalone solution for use in the field, but it can also be powered via a mains adapter. If you want to save some money and will only be using it in a studio environment then the version without the battery mount and hotshoe mount is also available. There is also a version with an integrated 4G modem so you can live broadcast from your camera on to YouTube or whatever service you use without any additional equipment, and an H.265 version is available as well. Physically, the unit isn’t massive but isn’t what you would call compact either. Seen here on my LS300 camera (which ironically doesn’t need one because it has this stuff built in) it looks in proportion, but to be fair it does dwarf a small mirrorless camera a little bit. Seen here alongside its far more expensive alternative - the Teradek Vidiu - you can see that the base unit is not that much bigger, but obviously it gets much bigger when you slap the battery on, as opposed to the Vidiu which has a built in rechargeable one. What has to be borne in mind though is the functionality you are getting here, and you should consider how much more unwieldy things would be if you had to mount a laptop to your A6500, as that would be the only way to get the same functionality. 
At this point, I’m going to have to say that if you’re not comfortable with some basic network configuration stuff then this product is not going to make you any more comfortable and you might want to walk away now. OK, after plugging our camera into the HDMI port, we have to log in to the device and configure it. This is done by attaching an ethernet cable to the LAN port and typing an address into a browser, which brings up a control panel of the sort that will be familiar to you if you’ve ever set up a router. In here you set up all the options for the type of stream that you will be generating from the incoming camera signal, and you can alter everything from re-scaling it to frame rate and a wide range of quality settings, from data rate to encoding profile. Most of it is self explanatory but you’d better get used to this interface because you’ll be seeing a lot of it as you tweak the settings for the optimum results. OK, so once it’s encoded your video and is merrily streaming it, you can access it directly through its own LAN or WiFi interfaces or via a wider network if you connect it to another router (which you will need to do if you want to get your stream out to the internet). This router can be anything from your home router to an internet access point in Starbucks or your mobile phone’s hotspot function for onward broadcast to the internet. So let’s get down to some actual use cases. The first use - and the one that @IronFilm is waiting on - is to use it as a way of remote wireless monitoring on set from a camera. In this mode, we set it up as a WiFi access point, connect whatever device or devices we want to monitor on, and use a media player that can play network streams to watch it. Basically, any device that can run VLC will do, so you can use your phone, tablet, computer or even smart TV to monitor the signal. All you need to do is to enter the address that the stream is playing on into VLC and away you go. 
And if there are a few of you, then as you can see here with my MacBook, my iPad and my Android phone, you can all watch it together. Although, as I said, it’s more likely that you would use the encoder in access point mode for this, you could actually use a larger network infrastructure if you wanted to, for extending the range and extent of the monitoring. These devices are used in this capacity to deliver digital TV service to hotel rooms so it’s very much a scalable thing! The other use case that I have for it is to encode camera signals so that they can be accessed inside Live:Air, which is Teradek’s live video production software that runs on the iPad. In this scenario, cameras can be connected to multiple encoder units which can then be brought into Live:Air, switched, have overlays added etc. and streamed out to the internet for a pretty comprehensive live broadcast. When used in this way, the encoders and iPad are connected wirelessly through a portable 4G router to move the data around and get the final output from Live:Air out on to the internet. The encoder can operate with 2.4GHz and 5GHz routers and clients. If you just want to do direct from the camera live broadcasts then the encoder can also be configured to overlay some lines of text and graphic logos onto the outgoing stream. Price wise, you can pick up the encoders for about £110 for the non battery version and about £180 for the version I’ve got. To be honest, you can easily knock up your own powering solutions for these for far less than the £70 difference so I’d be inclined to go for the lower priced one unless you need the really, really long run times you can get out of the NP ones. For comparison, even the basic version of the Teradek Vidiu (which doesn’t support the RTSP protocol for monitoring on VLC) to encode live video for Live:Air or for YouTube broadcast is around £700, so it represents a significant saving - particularly for a multi-camera setup. So, is it any good? 
Yes for me but maybe not for you. It does what it needs to do well enough but the nature of the beast with these devices is that there will be latency, and how much of that is acceptable is down to you and what you are using it for. The bigger problem is that this latency is also very dependent on how you configure it and on your network infrastructure, and even on location if you are in a highly saturated place (which is where the 5GHz capability actually does help). All of this variance makes it difficult to recommend with any confidence purely as a MONITOR solution. You can tweak away and noodle about with scaling down, bit rate, GOP, profiles etc. and mitigate the latency, but it’s never going to go away completely and I’d say that it’s difficult to find an acceptable balance that gets below 1/2 a second. That doesn’t mean to say it is without utility when used as a monitoring solution, but it would be in a secondary capacity as a distribution method where absolute real time instant monitoring isn’t necessary. So in this way you would loop it through from the primary operator’s monitor and allow everyone else on set to wirelessly monitor after that. There is also the question of how much real time you may need if you are using it primarily as a framing monitor. If you have a Fuji XT/XH for example, this offers a solution to not being able to monitor and trigger the camera over WiFi without it dropping to 720p resolution like it does with Fuji’s app. For vlogging, for example, where you just want a remote view of the framing, this would give you that, and allied with a cheap wireless shutter trigger you can get round the abysmal state of affairs with the Fuji app. Bear in mind that there is latency but not dropped frames. 
For my purposes of using it as a (much, much) cheaper way of getting camera signals into Live:Air than using Teradek’s own encoders, the latency is not an issue at all as each channel has an individual time align control to compensate for the latency. Similarly when broadcasting direct out of the camera to the internet, the latency at that level is not relevant. In summary then, if you have a need for the things that it does then it works well and is a huge saving on the alternatives. It’s a bit clunky getting it going as the documentation wasn’t exactly readily available, so I wasted a lot of time being unable to connect to it as an access point because the router address it uses when wireless is entirely different (and undocumented) to what it is when you are using the LAN port or it is in bridge mode. (It’s 192.168.8.8 by the way.) Being able to live stream to YouTube directly out of your DSLR might not be something you want to do every day, but if you ever have the need for it (especially away from the studio) then it doesn't come much cheaper and simpler (once you've unearthed the manual) than this. If you want to use it as your primary real time wireless monitor (other than just for framing or as per the Fuji example where it’s the only game in town) then I don’t think it’s the way to go because of the latency. If you are interested in purely doing that then I have another solution that is really low latency, but that’s for another day. Full product link here http://www.szuray.com/h-pd-84.html#_pp=0_304_9_-1 And here is the manual that you won’t find on their site either: User Manual Of Mini Video Encoder copy.pdf 1 point
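The VLC monitoring step described in the review can be sketched roughly as below. This is only an illustration under loud assumptions: the review gives 192.168.8.8 as the encoder's address in access point mode, but it never states the stream URL, so the RTSP scheme, port 554 (the RTSP default) and the path `stream` are placeholders to be replaced with whatever the encoder's control panel shows. The helper names `stream_url` and `vlc_command` are made up for this example; `--network-caching` is VLC's own option for the network buffer in milliseconds.

```python
def stream_url(host, port=554, path="stream"):
    """Build an RTSP URL for the encoder.

    ASSUMPTION: port 554 is just the RTSP default and "stream" is a
    placeholder path; use the values shown in the encoder's control panel.
    """
    return f"rtsp://{host}:{port}/{path.lstrip('/')}"


def vlc_command(url, caching_ms=300):
    """Return the argument list for opening the stream in VLC.

    A smaller network cache reduces added delay but makes playback
    more sensitive to a congested WiFi link.
    """
    return ["vlc", f"--network-caching={caching_ms}", url]


if __name__ == "__main__":
    # 192.168.8.8 is the access point address quoted in the review
    url = stream_url("192.168.8.8")
    print(" ".join(vlc_command(url)))
```

The printed command can be pasted into a terminal on a laptop, or the URL alone can be entered under "Open Network Stream" in VLC on a phone or tablet.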

-

Video Compression Kills Grain :(

kye reacted to webrunner5 for a topic

1 point -

Sony Rx100 VI with full sensor 4K & HLG

Don Kotlos reacted to BTM_Pix for a topic

1 point -

The JVC LS300 did a lot of things right, a few better than anyone else (including the variable sensor) and a few quite wrong. The fact is we still use this camera (together with the GH5), and there are a lot of people using it here and some buying it new. The fact that it is the only modern JVC camera that we know the name of proves that it was a successful one. Limited success, but success nevertheless. 1 point

-

Blackmagic Pocket Cinema Camera 4K

zerocool22 reacted to mercer for a topic

Well... since it is fairly likely that JB will be providing some of the long awaited footage for the P4K, I think it probably makes sense that people get to know his background and thoughts on filmmaking and gear. Would you rather we discuss the pointless desire for AF in the P4K? Or if only the P4K would add IBIS to the specs? Or maybe we can keep wondering if we’ll need IR filtration (or ignoring that we probably will). Or I guess we can add some ad hominem questions regarding what cards we can use with the P4K or which is the best M4/3 lens to use with it... In life, conversations evolve... why can’t discussions on forums do the same? 1 point -

Where do you store your gear? Post pics!

JordanWright reacted to webrunner5 for a topic

That is a great way to go. If you don't have a whole kit in bags, sort of on the ready to go, it never fails that when you get there you have forgotten something. It might be a stupid step down ring, but it will stop you cold from getting the shot or shoot. Sure you can't take everything, but unless it is some specialized shoot you rarely need some extra thing you have stuffed away in a drawer that you can't find to save your ass when you need it. Wires are the worst. They all sort of look the same when they are grouped together and you are in a hurry. But there's no way I have ever found to avoid it. If a wire can get tangled up with another it sure as hell will. Plus stuff in bags is pretty well protected compared to even in drawers, or on a shelf. 1 point -

Where do you store your gear? Post pics!

EthanAlexander reacted to JordanWright for a topic

I just keep all my stuff in a peli case ready to go. 1 point -

Where do you store your gear? Post pics!

webrunner5 reacted to BTM_Pix for a topic

Ikea Malm chests of drawers. They're very solid and the drawers are deep, wide and tall enough to accommodate everything. I have a dedicated drawer for Nikon for work and then a drawer for mirrorless, a drawer for audio and a drawer for live video. I use a combination of their dividers for making custom shapes for the longer lenses and the padded divider cases like these from Amazon. I store these in logical sets for the different types of jobs I'm going out on, and that way I can just pull them out and put them into real camera backpacks or Peli cases depending on what it is and where I'm going. On top of the Malm unit I also have a set of 18 plastic drawers for filters, lens adapters, straps, rig components, batteries, media, IT etc. It's surprisingly well ordered but still doesn't stop me tipping the place upside down looking for stuff every time I'm doing something. Of course, we mustn't forget my DIY battery charging station. 1 point -

There is a famous book in software engineering: https://en.wikipedia.org/wiki/The_Mythical_Man-Month https://www.amazon.com/Mythical-Man-Month-Software-Engineering-Anniversary/dp/0201835959 Keep in mind also that productions often have target deadlines to meet. But was that the fault of sensor/mount matching? Or the fault of the brand name it was released under (JVC)? None of us here would disagree that the JVC LS300 saw only limited success. But the question is, did it see more or less success because of its mount/sensor combo? I'd say clearly it saw more success due to its mount. Often the only reason we talk about it is because of its MFT mount!! The LS300 would never ever have such a prominence in our minds if it had been just yet another EF mount camera wannabe. Not at all. You could override it manually if you wanted to capture more (say, if you plan to do a wider crop in post than 16:9, or if you want the extra vignette as part of the look). Plus it is very very very common that cameras can crop in. So there is nothing "backwards" about offering the extra functionality of using a variable amount of the sensor. Often slow motion, for instance, is a crop of the sensor. Or look at the a7Rmk2, which offers a S35 4K crop as a way to get better quality sampled 4K video. Or the fact that all Nikon FX DSLRs offer a DX mode as well. Plenty of examples of other cameras offering a cropped mode to give the user extra functionality! Sony FZ mount. The JVC LS300 wasn't only made to be used with adapted lenses. In fact, I think in all the promotional material I saw for it from JVC it was always pictured with native mount lenses? 1 point

-

Nikon Rumors would be the most reliable site on the internet for Nikon rumors; 99% of the news you see on English-speaking photo websites probably originally sourced their Nikon rumors from NR beforehand. NR has been around for many years, and I follow the website fairly closely for news when I have spare time, as I'm a Nikon user. Color Science. Ability to extract the max out of a sensor (look how Sony and Nikon would use the same sensor, but Nikon would do it that little bit better). History of a deep understanding of photographers' needs, thus good ergonomics / design. Excellent design of lenses together with a massively massive back catalogue of lenses (although this will be less of a factor once they go with a new mount, unless they pull off a fabulously awesome implementation of their Nikon F adapter for their new mirrorless cameras. Which I'm sure they'll be trying their darn hardest to do!). NR says: "(some numbers could be slightly off or rounded)" Also stated: "Two mirrorless cameras: one with 24-25MP and one with 45MP (48MP is also a possibility)." So yeah, they could very well be carrying on with using existing sensors. Which makes sense! One less crazy variable to try and control for as they embark on the most important roll out of new cameras they've done in decades and decades. Probably the most important launch of new cameras for Nikon ever in the entire digital age. This is do or die for Nikon. If Nikon gets it right they will cement their place in history as the #2 brand, maybe even grab the #1 spot if Canon screws up their big pro mirrorless launch and Sony's growth stagnates. But if Nikon fails at a mirrorless launch then this is doors shut on their camera division, and even Nikon's entire business. Who knows, maybe all this would be a reason why they'd go for a new sensor? So they can make the biggest splash possible with their new mirrorless line up of cameras. 
And it wouldn't be odd to me if their brand new sensors stuck with the roughly 20 ish and 40 ish megapixels size. As for people who don't obsess with megapixel count, then 20 something megapixels is a nice sweet spot of giving you very nice images that is plenty overkill resolution but still small ish file sizes. (as we saw with the D500 and D7500 even going "backwards" with their sensor resolution, as resolution doesn't really matter so much now for stills as we've got plenty of it!) And for the number geeks who do obsess about megapickles then even for them 40 something megapixels is a pretty good sweet spot. Do we really want a 60 megapixel full frame camera?! Certainly not for a mainstream launch, no.1 point

-

Where do you store your gear? Post pics!

newfoundmass reacted to Fábio Pinto for a topic

I'm not asking where you hide your gear or if it's under your bed. I just want to know what kind of shelf or cabinet you use, if it's always in the bags, or if you have built something DIY. I always had my cameras and lenses in an IKEA Kallax 4x2 shelf, but I can't store my bags and tripods there, so I'm looking for another solution. Maybe a small wardrobe. 1 point -

Mini Review - URay On Camera HDMI IP Encoder

webrunner5 reacted to IronFilm for a topic

It has arrived! :-o A bit of a rubbish video by myself, but meh! And my girlfriend, bless her heart, but I think a tripod would have done a better job as a camera person than she did! :-/ 1 point -

Advice on eBay anamorphic lens listing (No advertising)

valery akos reacted to leslie for a topic

Thank you for the heads up :) 1 point -

I have entertained myself with the niceties of visual perception ever since. It all comes down to what the German poet Goethe concluded: (clumsy translation) He had written on the perception of colors early on, and we are talking about the 18th century! As William Gladstone proved later on, we may all *see* the same things, but we take completely different things *for real*. His finding: if you don't have a word for blue, you can't (yes: canNOT) distinguish blue from green. The sky turned blue the instant the color could be artificially reproduced, and the sky and lapis lazuli were no longer the only blue things in the world ("blue" flowers are always light or dark purple, and they are described as such in earlier times). So the least we can say is that our perception is way more flexible than we are aware of. But what is still questionable is whether or not ISO grain convincingly looks like scotopic vision. The answer is, it can. Everything that signals a purpose, an intention, a calculated effect, even if drastically distorted and stylized, will trigger the suspension of disbelief. On the contrary, if an image surrounded us 360°, had 20k resolution, 200fps and 30 stops of DR, we would be smart enough to find it reality-like, but ultimately unreal. 1 point

-

Advice on eBay anamorphic lens listing (No advertising)

valery akos reacted to funkyou86 for a topic

Be very careful with that seller, they scammed me twice, but eBay deleted my comment from their wall, not sure why... 1 point -

Blackmagic Pocket Cinema Camera 4K

webrunner5 reacted to kye for a topic

I read somewhere that apparently they're making a comeback, or at least subtitles are, because people watch on their phones in public and their headphones are probably a small Gordian knot in their bag! 1 point -

Blackmagic Pocket Cinema Camera 4K

Emanuel reacted to webrunner5 for a topic

You're lucky that silent movies were a passing fad! 1 point -

Where do you store your gear? Post pics!

jonpais reacted to webrunner5 for a topic

In Vietnam I can believe you Need that for all the damn humidity! 1 point -

Artistic / aesthetic use of Bokeh?

kaylee reacted to Robert Collins for a topic

Bokeh, or out of focus areas, aesthetically add depth to a photo or video. Depicting depth is important because essentially you are trying to depict a 3D scene in a 2D format. Bokeh to a certain extent mimics 'aerial perspective'. Aerial perspective is the concept that we view things as being further away if they have less contrast, detail and saturation. Here is a random example off the internet... Essentially we can 'see' a range of hills further and further in the distance. If you consider that bokeh is essentially 'blurring' the background, it is creating the illusion of aerial perspective and depth. The bokeh/blurring clearly reduces detail, reduces contrast (by merging the highlights, mid tones and shadows), and reduces saturation (by merging highly saturated areas with less saturated ones). (Of course you could achieve a similar effect by adding smoke to your background.) 1 point -

Yeah, that looks pretty good. You should make a Rear Window inspired short film. Are you still processing your files in Resolve using Juan Melara’s linear method? 1 point

-

Video Compression Kills Grain :(

kye reacted to webrunner5 for a topic

According to Ken Rockwell we Do see grain in our eyes, if he is to be believed. We probably do. https://kenrockwell.com/tech/how-we-see.htm 1 point -

Canon C100 Mk1 external recorder 4:22 compared with internal c300 Mk1

webrunner5 reacted to aestartia for a topic

Thank you very very much webrunner5, I really appreciate it - Heather 1 point -

Video Compression Kills Grain :(

zerocool22 reacted to andrgl for a topic

And cinema ain't real life. FPN, chroma noise and rolling shutter make film grain look far superior. 1 point -

Have tested it and it's working fully. The preview is stretched vertically 1.6x for now, as if there were an anamorphic lens attached, but other than that it's fine. I never thought they would manage to do this! Also, separately, here's the most extensive test I've done so far with the new crop rec 2.5k mode (max 2520x1386) on the EOS M. I recorded at 2224x1200 to get usable record times of around 10 - 20 seconds per clip. It was a very warm day so the camera got pretty hot! 1 point

-

Finally!!! I already have the cheap Chinese speedbooster; it's pretty bad but it will do the job for what I want. 1 point

-

A lot of progress has been made on the EOS M in the last two days and it looks like they've been able to make the camera shoot in mv1080 mode using the full APS-C sensor (no crop) for the first time ever (as opposed to the mv720 mode that the camera was always stuck in). This means that the EOS M will now be as capable as the other DIGIC 5 cameras... so what I said before about anamorphic shooting changes completely. This will now be a great camera for anamorphic as it can record max 1736x1120 (3:2 aspect ratio), which I think uses the whole sensor height and width. Will do my first test soon, though the liveview preview isn't working fully yet. Can't wait for the Viltrox speed booster to be released soon and shoot full frame raw video with this £100 camera! Here's the forum post and first footage: https://www.magiclantern.fm/forum/index.php?topic=9741.msg203530#msg203530 1 point

-

1 point

-

Video Compression Kills Grain :(

canonlyme reacted to BenEricson for a topic

I always hear comments like this, but I’ve never heard an actual story of anyone having an “it was all blank, sorry” moment. This seems like the modern equivalent of forgetting to load a card into a digital camera. You’d have to either have a very serious mechanical error, severe underexposure, or be uneducated in the process, all of which are preventable... especially since the room for error with negative film is extremely high. I recently shot a short piece on a Bolex Rex 4. The scanning prices have dropped recently and the 4k scans available now are really nice and affordable. ProRes LT to Vimeo has worked pretty well for me in the past. Film grain holds up really nicely in my experience. 1 point -

Blackmagic Pocket Cinema Camera 4K

tupp reacted to John Brawley for a topic

This is known as “the pattern”. Resident eps are shot in 8 working days plus one day of second unit. The second unit happens concurrently with the first day of the second episode shoot. 6 days are shot in studio and 2 days are out on location. We average about 35 setups or “slates” per day. Have done as high as 60 on Resident. Many setups are three cameras. So that’s about 70-90 “shots” per day. Each camera shoots about 60-90 mins of footage per day but it’s not unusual to have 2 hours per camera (for action or slow motion heavy days). I say we have three cameras but I really mean we have three camera operators. We have many more cameras that are pre-built for different roles. A, B and C are Alexa Mini. D camera is a full time Steadicam camera (Alexa XT for weight and mass). It goes on down to letter Q or something silly. There are three sets of Primo Primes and each camera has its own 11-1 as well. We also have a couple of other specialty lenses like a CP 50 macro, and I also use a lot of SLR Magic primes on the Micro and APO Hyper primes on the Ursa Mini. We shoot about 6-10 script pages per day. I always say you can’t really go much faster than a setup every 15 mins. That’s 4 per hour. On a 12 hour workday it means we theoretically can do 48 setups. It’s very hard to maintain that kind of pace in a day (and have it look good). Sometimes you can get the shot turnover down by leapfrogging cameras. Have the A camera do the first shot, prep the B camera to come right in after that setup is finished and the A camera pulls out. There are 12 in the camera department not including me. Ops, firsts and seconds x 3 plus two utilities and a loader. Time is the thing we all struggle most with. Time to light, time to shoot, time to tweak. The pace of TV has a way of “honing” your choices and teaching you to react and trust your instincts. JB 1 point -
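
The pacing arithmetic in the post above (one setup every 15 minutes over a 12-hour workday) can be checked with a short Python sketch. The function name and parameters are hypothetical, chosen only for illustration; the figures are the ones quoted in the post, and this is a theoretical ceiling, not a claim about how any real shooting day runs.

```python
def max_setups(workday_hours: float, minutes_per_setup: float) -> int:
    """Theoretical ceiling on setups per day (hypothetical helper).

    Assumes every minute of the workday is spent on setups, which the
    post itself says is very hard to sustain in practice.
    """
    total_minutes = workday_hours * 60
    return int(total_minutes // minutes_per_setup)


# Figures quoted in the post: 12-hour day, one setup every 15 minutes.
print(max_setups(12, 15))  # 48
```

Compared with the roughly 35 setups per day the post reports as an average, the 48 figure shows how much of the day goes to things other than rolling on a new setup.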

Not just Kinefinity and JVC; even the behemoth that is Sony used the FZ Mount, which made it a breeze to swap over your main mount during a shoot (for instance you might use mostly PL lenses, then just for that one shot or two switch over to a DSLR mount so you can get that super macro shot, or that super long 400mm tele shot). Although sadly it seems Sony has killed off FZ Mount (as I own a fair few FZ adapters :-/ ah well), they are still holding true to the same general principle of including a shallow mount option on their cinema cameras by having a locking E mount in their new VENICE camera. JVC had smart scaling with their LS300 so you could use native lenses which only covered 4/3". Plus there are numerous MFT mount lenses which do cover an entire S35 sensor, such as the Sigma MFT primes, Veydra primes, Fujinon MK zooms, etc. 1 point

-

All Kinefinity S35 cameras use a KineMount (and thus can have an E mount). And I expect the new Kinefinity MAVO LF will also use KineMount. While so many cinema cameras lack a shallow sub mount (like KineMount or FZ mount), hopefully we'll see more cinema lens manufacturers offer lenses with swappable mounts. Although often it will be options like PL or EF, still leaving Nikon out in the cold. Nikon needs to make an "N100" or "N300" cinema camera (their take on the C100/C300, that is) to keep themselves relevant. 1 point

-

Thanks, imagine if a few companies banded together on a new shallow cinema mount standard such as KineMount. Perhaps if Z Cam, AJA, and BMD had got on board. It would be like MFT is with its wide-ranging support from manufacturers, which helps make MFT one of the most appealing mirrorless systems to invest in. 1 point

-

Liliput A5, or any other cheap 5" or smaller competitors?

Timotheus reacted to KnightsFan for a topic

@Timotheus No, it does not have anamorphic mode. 1 point -

Blackmagic Pocket Cinema Camera 4K

webrunner5 reacted to John Brawley for a topic

It's never going to happen. Sony won't allow it. They will never allow it. Kinefinity can say "future adaptors" all they like. Sony run a closed eco-system. This is their MO. Sony own the mount. No other camera other than one made by Sony will have a native E mount. Did you know that this year they went past 130 million lenses made? 130 million EF mount lenses have been made! Think of it like this... that's 130 million potential customers. Canon make the world's most popular and numerously made lens mount. I personally hate them. They should NEVER be on a cinema camera and they suck the WORST for any kind of motion work. EF is an idiotic lens mount for motion work and shouldn't ever be used in my view. But there's a zillion of them out there and for the vast majority of those that are moving into a cinema camera eco-system from a DSLR setup, chances are it's EF mount lenses they have. So they make a camera that uses that mount. Once you get serious about cinema glass, then you go to PL. Adapted 135 format glass... is amateurish. I hate to sound like a snob but it's really really hard to make them fly on real jobs. In the end it's often very difficult to make it work on set. Yeah I know you CAN do it, yeah go post your vanity projects and your music clip that looks great, but I'm saying generally, it's a pain in the arse and no one aside from hobbyists and indie shooters can be bothered futzing around with these jigs. Look at how successful that JVC was. Name a show shot with them. Show me someone who did some amazing creative work on that camera because it existed and did something no other camera could do. They're like anamorphic adaptors. Almost no-one uses them on paid shoots. They're just too punk and couture for anyone to put up with. You go get real anamorphic lenses.
(and hey I've done it, I used a LA7200 on an Si2K and Zeiss supers before anyone knew what an Si2K even was) I'm a lover of obscure lenses, but the obsession with adapting and speed boosting lenses... I say this with love of anything that isn't conventional, but to disparage camera manufacturers for making a mount that services BY FAR the vast majority of the existing stills DSLR market for doing just that, while holding up very marginal cameras like the JVC and Kinefinity as a beacon of success, doesn't fly for me. Because the market has already spoken. We lens nerdists here on mostly THIS forum are the market. It's tiny. Making a camera that has a sensor that is LARGER than its native lens mount (MFT), which FORCES you to always use an adaptor, or advocating a mount that is proprietary (E mount), is commercial suicide. It forces the user to have an adaptor. Imagine all the idiots who go buy MFT native lenses and post about the lens not covering their sensor. I'm a fan of MFT. But I'm a fan of NATIVE MFT lenses on an MFT sized sensor. JB 1 point -

The angry fella does, to be fair, have good insights regarding Nikon lenses. And magnets. I always make a point of tuning in to his fuming reactions after the married couple make one of their educational statement videos. In my imagination, they are the yuppie couple who've moved in next door to him, and he's got a backyard full of washing machines and Triumph motorbikes in various states of disassembly and in theirs they've got a hot tub and a mid-life crisis Harley Davidson. They think he plays AC/DC as an act of aggression and he thinks they have their teeth whitened in an act of passive aggression. 1 point
