Leaderboard

Popular Content

Showing content with the highest reputation on 03/28/2020 in all areas

-

I've seen lots of live streams pop up in my YT feed, which normally has a much higher percentage of edited content. I must admit I'm not a fan. Watching someone read the chat in real time, mixed in with "is this on", and then deliver unrehearsed, unfocused content just makes me angry that the person chose to waste my time instead of spending theirs editing. Professional live streams are like TV talk shows or the news and require many hours of prep and a crew of multiple people. Most streaming online is like watching the dailies from a set that never hit stop between takes. The minimum number of people required for a professional live stream is three:
- someone to control the tech
- someone to present the content
- someone to manage the chat and feed good questions to the presenter so we don't spend minutes at a time watching them read the chat

They also require a huge amount of preparation, to the extent that the presenter can deliver the content with crystal clarity and almost zero mistakes. 4 points

-

Help grading HLG (GH5)

Mark Romero 2 and one other reacted to kye for a topic

I think so. This post (https://www.liftgammagain.com/forum/index.php?threads/lab-processes-before-the-digital-intermediate.12923/#post-129058) from Marc Wielage describes a workflow that doesn't seem to include a conversion: Assuming I am interpreting correctly, Marc may well use a conversion to get the files into a log format and then go manual from there. HLG is a log format but has a pretty extreme curve, so that might not be the best curve to start with. Having said that though, here are some tests.
GH5 HLG image with no processing:
GH5 HLG image with CST (rec2020/rec2100 HLG to rec709):
GH5 HLG image with contrast, saturation, and a slight hue rotation of all colours to get skin tones in range:
Are they the same? No. Is the manual one nicer? Not really. But you can't deny that it's pretty similar to the CST. They're just different. As a more general comment, this test took me about 10 minutes, and that included finding some music to put on and finding the project with these test shots in it. Before you make a statement or ask a question, ask yourself if it's about something you can actually test yourself, and if you can, try it out and see what actually happens. Grading is so easy like this because you don't need to actually go shoot anything, you just fire up Resolve / FCPX / PP, click a few buttons and see what happens. 2 points -
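For anyone wondering what "a pretty extreme curve" looks like in numbers, here is a minimal sketch of the HLG OETF using the constants published in ITU-R BT.2100. It only shows the shape of the curve (a square-root segment in the lower part, a log segment above it); it is not how Resolve's CST is implemented, and the function name `hlg_oetf` is just an illustrative choice.

```python
import math

# Constants from the ITU-R BT.2100 HLG OETF definition.
A = 0.17883277
B = 1 - 4 * A                  # approx. 0.28466892
C = 0.5 - A * math.log(4 * A)  # approx. 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalised scene-linear light e in [0, 1] to an HLG signal in [0, 1]."""
    if e <= 1 / 12:
        return math.sqrt(3 * e)          # square-root (gamma-like) segment
    return A * math.log(12 * e - B) + C  # logarithmic segment


# Print the signal level for each stop below peak to see how the curve
# spends its code values.
for stops_below_peak in range(0, 9):
    e = 2.0 ** -stops_below_peak
    print(f"{stops_below_peak} stops below peak -> signal {hlg_oetf(e):.3f}")
```

The two segments meet at a signal level of 0.5, so the whole log part of the curve lives in the top half of the signal range.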

Sigma Fp review and interview / Cinema DNG RAW

Scott_Warren and one other reacted to Chris Whitten for a topic

You start on the import page. Browse your hard drive for the camera footage folder. Open it, then drag all the individual sequence folders onto the Resolve media window. Resolve automatically creates single continuous clips for you, not hundreds of DNGs. There are great free tutorials on YouTube: Ripple Training, Avery Peck, Casey Farris, Color Grading Central, The Modern Filmmaker and BMD themselves. Lots of "get going in Resolve fast" type content. It's actually pretty easy to create a graded image without using a LUT. 2 points -

Live Streaming Explosion

tupp and one other reacted to fuzzynormal for a topic

Try being an older video pro who's had a long career and now has to deal with the devaluation and ad-hoc nature of this new video production reality. It's hard to keep one's head from exploding. 2 points -

Help grading HLG (GH5)

heart0less and one other reacted to kye for a topic

My logic of HLG -> rec709 -> HLG = HLG will work if you use the CST, which is what I was doing. You're right that the HLG has colours or gammas outside the range of rec709, but Resolve retains those out of range values non-destructively, which is why Juan uses CSTs instead of LUTs. In terms of the logic about gamma vs colour space, I was interested in finding a technically correct way of converting, as I am interested in doing some exotic things rather than just getting an image that looks good. Those exotic things require an exact match and that's what I was looking for. One of the things that people don't realise is that with gamma curves, the LOG curves are all very similar to each other, with the main difference being in DR. If you haven't done it, try taking a shot that's been shot in LOG (of any variety), make a bunch of copies of it on the timeline and grade each of them like this:
- Curves adjustment where you adjust the highest point and lowest point for setting white and black points in the final image
- CST from <gamma> to rec 709
- Add saturation

Make each clip have a different <log gamma> in the CST and adjust all of them to have the same white and black points. Then go through them and see how each of them looks. You may be surprised at how similar they are. LOG curves are based on a mathematical function called "log" and while each manufacturer does push and pull their own versions for various reasons, they're very similar. It's the same thing with RAW: all RAW is Linear, so you can use just about any Linear space on any RAW image. They won't match exactly but the differences will only be very slight. If you're just interested in getting something 'close enough' then Rec2020 or Rec2020 / Rec2100 HLG is fine, and is what was recommended to me over at LGG by the pros: https://www.liftgammagain.com/forum/index.php?threads/grading-gh5-hlg-for-rec709-output.11858/ It's great you're watching Juan's videos, I've watched each of them dozens of times.
One thing worth noting is that there are two schools of thought about grading. One is Juan's approach, which is very technical, and the other is completely non-technical and just involves taking any input and using the normal grading controls to make it look good without converting or anything. I see advantages in both approaches, and it just depends on what you're trying to achieve. 2 points -
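A rough way to see why picking a different log gamma in the CST gives similar-looking results is a toy model where every log curve is a pure log2 encoding that differs only in how many stops it covers. This is an assumption for illustration only: real curves (V-Log, S-Log3 and so on) add toe segments and offsets, and `log_encode` / `log_decode` are hypothetical helper names, not anything from Resolve.

```python
import math


def log_encode(linear: float, stops: float) -> float:
    """Toy pure-log curve: 2**-stops maps to 0.0 and 1.0 (white) maps to 1.0."""
    return (math.log2(linear) + stops) / stops


def log_decode(signal: float, stops: float) -> float:
    """Inverse of log_encode for a curve covering the given number of stops."""
    return 2.0 ** (stops * (signal - 1.0))


SHOT_STOPS = 12.0  # dynamic range of the toy curve the clip was "shot" in

# Decode the same mid-grey with curves that assume a different DR.
for cst_stops in (10.0, 12.0, 14.0):
    mid_grey = log_decode(log_encode(0.18, SHOT_STOPS), cst_stops)
    print(f"CST set to a {cst_stops:.0f}-stop curve: 0.18 decodes to {mid_grey:.4f}")
```

In this toy model, decoding with the "wrong" curve is the same as raising the linear image to the power `cst_stops / SHOT_STOPS`, which is a smooth contrast change rather than anything broken. That is consistent with the clips looking alike once the white and black points have been matched.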

Stuck at home like many, so I made my daughter the actor and my wife the grip and script supervisor. What have you all been up to during these strange times? 1 point

-

Happy new year all! I’ve finally (finally!) made my first short narrative film. I’ve been a full time videographer for 8 years now, writing on and off in my spare time, but never actually got out and turned my writing into a film. So I decided enough was enough and asked my brother if he was up for the challenge of making something in our home village over Christmas. 8 hours writing and pre-production, a single 8 hour day of filming, and 8 hours of editing. Loved the process. Lots of lessons learned. Here’s the result, and would love your feedback. Cheers, James1 point

-

If you decide later on that you want to go a bit further and use something like vMix, the ATEM Mini will still not be a wasted investment, as at the very least it can act as a 4 into 1 camera switch input into the computer as one channel while you can also then bring in other sources on the others. Also, because it is idiot proof, you can dry hire it to people who are doing conferences and what not. This channel is excellent not only for product reviews of affordable products for live work but also in detailing how he implements and scales his kit for differing jobs. https://www.youtube.com/channel/UC-6rohWvTkl4mzvoDbxDGxQ/videos 1 point

-

My first thought was that the Sony sounded bad enough to the point of being faulty. I've got a feeling there will be a lot of people who own that particular model running their own tests today !1 point

-

Help grading HLG (GH5)

Mark Romero 2 reacted to stephen for a topic

Hmmm... I'm not convinced this is true, and I'm not sure what non-destructive means. Everything I watch and read from professional colorists, including Juan Melara's video, points in the opposite direction (HLG -> rec709 -> HLG = rec709). Let's check Melara's video. He concentrates mainly on dynamic range (gamma). The whole point of the video can be summarized like this: the URSA Mini is capable of 15 stops of dynamic range, but it looks like you don't get all those 15 stops because the BMD gamma is able to hold only 12 stops, effectively cutting some of the dynamic range of the camera. Later he proposes two methods / solutions:
1. Play with curves. (But you still play with 12 stops of dynamic range at input.)
2. Choose Gamma=Linear. It looks like Linear as a container (value for Gamma) can hold more stops of dynamic range, so you get the full range of 15 stops, which yields a better result at the end.

At 1:25: "My theory is that BMD 4.6K film curve as a container is actually not able to hold the entire 15 stops of URSA Mini..." About color space, at 2:20: "Normally I'd recommend to output to the largest color space available and you can use P3 60... To keep things simple I'll choose REC709..." In my understanding he says: get the widest color space you can, but here for simplicity I choose REC709 (narrower) because my main point in this video is about gamma (dynamic range). Now if we assume what you say is true, then BMD gamma should hold all values of the URSA Mini's dynamic range (15 stops). Why then go to Linear? It would have been sufficient to choose it in the color space transform and it would reveal the whole 15 stops. But that's not what Melara is saying. He's saying exactly the opposite. He is saying that BMD gamma as a container (gamma value) is limiting the dynamic range of the URSA Mini sensor. And REC709 gamma has even fewer stops of dynamic range than BMD gamma! Now let's go to HLG color space and gamma. As you said, there are several approaches / methods to color correct and grade.
Let's compare the one that plays with curves, saturation etc. with Color Space Transform. When you place a GH5 or Sony A7 III HLG or LOG clip on the timeline, Davinci assumes by default that your clip is REC709 color space and gamma. If you don't tell Resolve the color space and gamma your clips were shot in, it doesn't know and assumes REC709 (the same as your timeline by default). But REC709 color space and gamma are much more limited than the REC2020 color space and REC2100 gamma that HLG clips use. By doing so you effectively destroy the quality of your video. No matter what you do later (curves, saturation, LUTs), your starting point is much lower. Yes, at the end it's always REC709, but everything so far suggests that you lose quality when the correct conversion was not done, because you don't use the full range of colors and dynamic range your camera is capable of. That's why they say that the color space transform does this transformation non-destructively. At least that's my understanding. But I may be wrong and am curious to hear others' opinions. If your scene has limited dynamic range you may not see a difference between the two methods. But in extreme scenes with wide dynamic range it does make a difference. Same for colors. Some color spaces approximate nicely to REC709, others (Sony S-Gamut) don't. It is no surprise people are complaining about banding, weird colors and so on. Most of those problems could be resolved with Color Space Transform, even for 8bit codec footage. The second problem with using curves, saturation etc. is that this method is not consistent from clip to clip, between different lighting, etc. And it involves a lot more work to get good results. At least that's my experience. I have to tune the white and black points for each clip individually, then saturation etc. Change one color, another one goes off. I can't apply all settings from one clip to all, especially when shooting outdoors in available light and different lighting conditions. It's a lot of work.
Just read how much work was put into creating the Leeming LUTs, how many shots had to be analyzed, etc. With Color Space Transform it takes me 3 to 5 min to have a good starting point for all my clips on the timeline. Apply CST on one clip, grab a still, then apply the still (and CST settings) to all clips. Almost all of them have white and black points more or less correct, skin tones are OK, etc. It's a much faster method. It was a game changer for me. With BMPCC 4K clips I can get away with the first method and not spend tons of time, because Davinci knows quite a lot about their own video clips when you place them on the timeline. It's much better than GH5 or Sony HLG. It is for those 8bit compressed codecs where the CST method shines the most. Again, at least that's my experience. Color matching BMPCC 4K BRAW and Sony A7 III 8bit HLG is now easier for me than ever. 1 point -

I don't think you can really go wrong with the new BM ATEM Mini for that sort of thing. It takes 4 HDMI inputs, has an easy to use control surface and has a webcam compliant output. That last part is really crucial, as it means you don't need an encoder or any messing about to get it out to the internet: the Surface Go would just see it as a webcam input and can then stream it to YouTube, Facebook etc, or even Skype if you are using it for business type streaming. It's tiny as well, in terms of both physical size and price, as it's under €350. This is a good end to end demo of it from inputs to streaming output. The big weakness of the ATEM Mini versus its bigger brothers in the ATEM range is that it doesn't have a multi view preview output, so you can't see all four inputs on an external screen. This can be got around with some cheap HDMI splitters for the cameras and a cheap 4:1 HDMI multiviewer should you need it. If you were to use your gaming laptop with a software switcher such as OBS or vMix, that issue wouldn't be there, but obviously with a software solution you would need four converters to take the camera HDMI inputs into it in the first place, and good ones can easily run to not far off the cost of the ATEM Mini for just one. The big advantage of the laptop route is that something like vMix can do more (virtual sets, bringing in Skype calls etc) and is more expandable, particularly if you embrace the NDI standard for bringing in cameras, as you can then have unlimited sources as long as they are on your network, but you are into significant extra cost and complexity. To make any solution fully mobile, you can use the Teradek Vidiu Pro, a standalone HDMI encoder/streamer which, as well as ethernet and wifi connectivity, also has a bonding function to gang together the data bandwidth of several cellular devices (including phones) to provide high quality streams when no 'real' internet connection is available.
https://teradek.com/collections/vidiu-family 1 point

-

For the simple setup I'm going for, I'm going to have OBS Studio (free) with a USB camera by the laptop as a wide angle (safety) and my F3 through an SDI converter box as a movable camera. For one event I will set up the live stream as a picture in picture, and the producer will be switching the camera. The other event is fine with just a one camera setup. That plus lighting should be professional enough for their needs. I have a friend that I've been tossing bigger jobs to because he was lucky enough to be working on a live event setup before this all went down. He has a Teradek wireless rig to a Blackmagic switcher with three mobile Blackmagic 4K cameras. He has his monitor and switching in a van that he can just drive to a location and set up quickly. 1 point

-


Help grading HLG (GH5)

Mark Romero 2 reacted to kye for a topic

The video was on YT, but appears to have gone now. I remember it well. What I was talking about was that you can grade using technical transforms, or just with the tools. Juan is obviously a fan of the technical conversions, but there are other more old-school pro colourists who have straight-out told me they just grade LOG footage by using contrast/pivot/saturation and then the LGG wheels. What Juan was talking about in the Linny rebuild video was about being as accurate as possible in emulating something, but the guys who grade freehand are just looking for a nice image using the motto "if it looks good then it is good". Colour grading can be as simple or complex as you like, and I've heard that most footage shot well just needs a WB, contrast adjustment, and primaries, and then everything (including skin-tones) will just drop right in place.1 point -

What @kye said. Photographers are doing: What’s in my camera bag? What’s in my bottom draw? What’s in my pocket? What’s out of my window?1 point

-

I have been watching Jeff Dunham, the guy that does Achmed the Dead Terrorist. He's done a 3 part live stream of a new puppet, which has been interesting, I thought. I think perhaps some people do it better than others. I don't mind a few inconsistencies, as I figure I'd be at the same level or worse, but if it's consistently annoying then I'll give it the heave ho. If you have done it for a living, I can see how it would grow annoying pretty quickly. I bet the only companies making money at the moment would be internet providers, Netflix and YouTube. I'd prepare for an influx of "new content" with everyone at home; it's either that or go stir crazy in some respects. Presuming total lockdown is a few days away or maybe a week, I went for a drive to a larger town today and bought a meat smoker. I figure there's not much I've got control over at the moment, but at least I can control the BBQ. I didn't get any wood chips as I live in an orchard growing area and I have already sourced all the plum, apricot and pear limbs I can use. You're all welcome, just bring a mask and your own potato salad. 😁 1 point

-

I read the section on color management in the Resolve manual last night, and it seemed to say that CSTs are the preferred method over LUTs for this kind of thing. The reasoning is that CSTs are non-destructive, so you can still get at any data that's out of bounds (provided it wasn't clipped in the original footage). At least that was my understanding. Color is incredibly complicated, and I can't say I understand it well. I'll see how I go with the approach I outlined above, and report back. 1 point
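To make the "non-destructive" idea concrete, here is a minimal sketch under stated assumptions: a plain 2.4 power function stands in for the real transfer curves, and the "clamped" path imitates a conversion that is only defined for signals between 0 and 1 (as many LUTs are). None of this is Resolve's actual code; the function names are hypothetical.

```python
def encode_gamma(linear: float, gamma: float = 2.4) -> float:
    """Stand-in transfer function: linear light to display-referred signal."""
    return linear ** (1.0 / gamma)


def decode_gamma(signal: float, gamma: float = 2.4) -> float:
    """Inverse of encode_gamma."""
    return signal ** gamma


def clamp01(value: float) -> float:
    """Imitates a conversion that cannot represent values outside 0..1."""
    return min(1.0, max(0.0, value))


highlight = 1.5  # a linear value brighter than the target's nominal white

# Floating-point path: the over-range value survives the round trip.
float_path = decode_gamma(encode_gamma(highlight))

# Clamped path: the over-range value is cut to white and cannot be recovered.
clamped_path = decode_gamma(clamp01(encode_gamma(highlight)))

print(f"float round trip:   {float_path:.3f}")
print(f"clamped round trip: {clamped_path:.3f}")
```

The float path gets the highlight back, while the clamped path returns plain white. That is the sense in which out-of-bounds data can still be pulled back later in the node tree, as long as it wasn't clipped in camera.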

-

@Jimbo Great stuff! My criticisms are more general in nature, and to a certain extent everything about it could have been "better", but I think there are a couple of huge caveats that need to be talked about. The first is limited time. 8/8/8 hours is a huge restriction on something like this, and everything about the final result was of a good standard. Not great, but solid. The editing could have been tighter, the shots could have been more varied, camera angles refined, sound more natural, VFX improved, etc, but for such an extremely short process these are all things that I'm sure you could improve given a lot more time. With the time limits involved I think you did very well. The second is that this is not an easy piece to shoot. What I mean is that the acting skill required was significant. I thought your brother's performance was quite good, but not great, but this is a world away from an easy role. It called for the main character to be distraught, drunk, partly incoherent, (literally) suicidal, and completely overwhelmed with grief. These are the emotions that separate the great actors from the spectacular award-winning actors, so given that there wasn't a month of rehearsals followed by a month of shooting, I think you did very well. I've been on shoots where the main actor absolutely nailed the monologue on take 27 and that's the one that ended up as the crown clip in their showreel. Of course, this occurred at 3am when we'd been shooting for 6 hours and they'd also worked that day in their day job, and part of the emotional delivery was sheer exhaustion on their part. We can always do better, but one of the main things you achieved was actually finishing it and publishing it. That's harder than it sounds. 1 point

-

Sigma Fp review and interview / Cinema DNG RAW

imagesfromobjects reacted to Scott_Warren for a topic

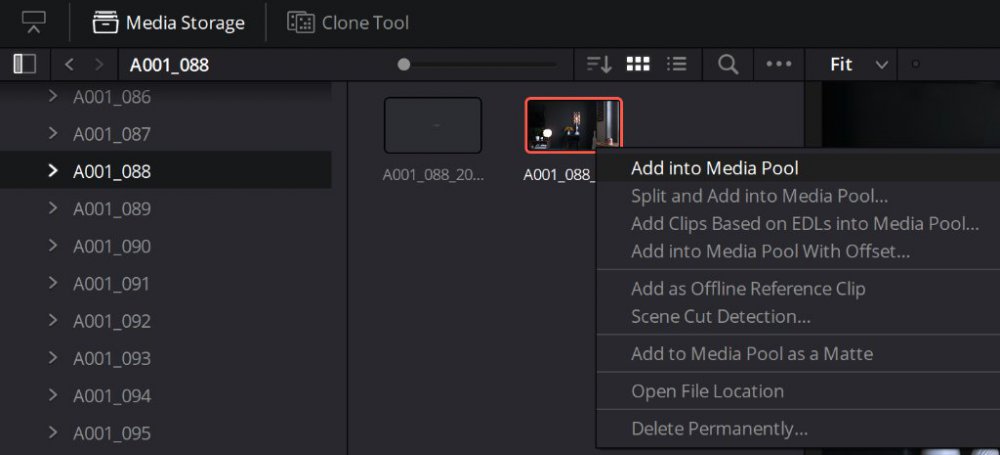

@imagesfromobjects You'd want to browse to the root of where the DNG sequence lives on your drive in the media browser, then either drag it into the media pool or right-click it and Add to the media pool. From the media pool, you would create a new timeline with it and off you go! It's easier to add DNG sequences through the media pool browser instead of manually adding a folder filled with frames where you'd need to select all the frames, then add... it gets tedious after several clips.1 point -

Sigma Fp review and interview / Cinema DNG RAW

imagesfromobjects reacted to Scott_Warren for a topic

@imagesfromobjects One exercise that helped me get started with Resolve was opening an image in Lightroom and tweaking it to taste, and then opening that same image in Resolve & using the same controls (exposure, contrast, saturation, Hue vs Hue, Hue vs Lum curves, etc.) to try to recreate the same result that I saw in Lightroom. I know that video adjustments tend to exist in their own universe when you dig in to try to make things more technically sound for video compared to a still, but it might be worthwhile to do something similar. With that approach, I could still think in terms of editing a still but developed more of a video mindset to how the controls translate differently between stills and video. Curves still largely function the same, though they tend to be more sensitive in Resolve than Lightroom, and instead of using one master stack for operations, you apply them to nodes which can then be re-wired to create different results depending on the flow of the math. I also had experience with the Unreal Engine material editor for years, so node-based editing and order of operations wasn't a huge leap. I think @paulinventome 's approach with injecting a DNG into the RED IPP2 workflow is one of the faster ways to get to a solid image that I've seen recently.1 point -

Yep, churches, sports club programs, universities, and fitness clubs. Looking at possibly a blackmagic setup.1 point

-

Autocue for beginners...

Mark Romero 2 reacted to kye for a topic

I've seen a few setups that I thought (?) ran off iPads and used speech recognition to scroll automatically. It's not that difficult a technical challenge these days. I didn't look at them in full detail as I'm not in the market for one, but I do remember thinking that it was a solved challenge and wasn't that difficult to get a good setup. 1 point -

A few video experiments to try at home

TrueIndigo reacted to fuzzynormal for a topic

Hours and hours of diffraction.1 point -

You Trump hating fake news motherfu...... Sorry, wrong thread. Here is a guide to iOS versions, some of which can be used with games controllers, which look like OK options if you have a PS4 controller around etc. There are a few whole kits on Amazon with the shoot through glass, but who knows when they will be able to deliver. https://telepromptermirror.com/ipad-teleprompter-apps/ 1 point

-

Creation of a chimaera

User reacted to Simon Young for a topic

Wow @sanveer maybe take it easy with the name calling, finger pointing and fascism? Your last post is truly vile and I don’t think you want to go down this route in this forum.1 point -

EOSHD in lockdown in Barcelona - photos from Coronavirus ghost town

Andrew Reid reacted to ade towell for a topic

Wonder if he'll self isolate in the fridge again1 point -

Canon EOS 1D MKIII specs revealed

Trankilstef reacted to gt3rs for a topic

To summarize the findings so far around Resolve Studio 16.2 and 1Dx III files:
- HEVC ALL-I and IPB 10bit 4:2:2 25fps: neither Quick Sync nor Nvidia 20xx can HW decode them. From our tests it seems to be all done in the CPU.
- A top class desktop CPU (9900K) can software decode both HEVC ALL-I and IPB 10bit 4:2:2 25fps in real time.
- A top class notebook CPU (8750H) can software decode HEVC in real time, but really at the limit (not really usable on a timeline without setting half resolution), and only IPB 10bit 4:2:2 25fps, not ALL-I (this is a strange one as IPB is more computationally intensive; probably the software decoder is more optimized for IPB).
- A top class desktop CPU (9900K) with an Nvidia 2080ti can play back RAW 24fps in real time. Probably even at 60fps; I would need to upload and send a file to @Trankilstef.
- A top class notebook CPU (8750H) with a medium GPU (1050ti) cannot play back RAW 24fps in real time. CPU is at 70% and GPU at 100%, so maybe a gaming notebook with an 8750H or 9750H and a 2070 or 2080 is able to do real-time RAW playback. Hope to find out soon.
- A top class notebook 9750H with a 2070 cannot play back HEVC ALL-I 10bit 4:2:2 25fps in real time. We would need to test IPB.

If the R5 at 8K is also HEVC 10bit 4:2:2, I just cannot imagine that there is a machine that can play back those files. Hopefully there will be HW decoding in the future for this file type. Thanks a lot to @KnightsFan @Trankilstef and @Matthew19 for the tests and contribution. 1 point -

(Rumor) Panasonic New Camera S1V

MurtlandPhoto reacted to newfoundmass for a topic

The GH5 is one of the best cameras released in the last 20 years? Its autofocus is leaps ahead of the GH4's and has improved even more in firmware updates. 1 point