Leaderboard

Popular Content

Showing content with the highest reputation on 04/03/2021 in all areas

-

There are a number of blind camera tests around the place, and I find them useful for comparing your image preferences (instead of the prejudices we all have!), so I thought collecting them in a single thread might be useful.

To get philosophical for a second, I think that educating your eye is of paramount importance. It's easy to "train" your eye through the endless cycle of 1) hear a new camera is released, 2) read the specs and hear about the price, 3) build up a bunch of preconceived notions about how good the image will be, 4) see test footage, 5) mentally assume that the images you saw must fit with the positive impression that you created based solely on the specs and price, and 6) repeat for every new camera that is released. That's a great way to train yourself to think that over-sharpened rubbish looks "best".

The alternative to this is evaluating images based solely on the image, and going by feel, rather than pixel-peeping based on spec/price. To this end, it's useful to view blind tests of cameras you can't afford, lenses you can't afford, and old cameras you turn your nose up at because they're not the latest specs. News flash, the Alexa Classic doesn't have the latest specs either, so how many cameras have you dismissed based on specs while making an exception for the Alexa all this time?

Also, although you may find that you like a particular cine lens but can't afford it (or basically any cine lens for that matter), often the cine lenses have the same glass as lenses that cost a tenth or less of the cine version. Furthermore, you may be able to triangulate that you like lenses or a camera / codec combo that gives a particular image look, and perhaps that look can be created by lighting differently, or using filters, or changing the focal lengths you use. Educating your eye can literally lead to you getting better images from what you have without purchasing anything.
To kick things off, here's Tom Antos' most recent test, with the Sony FX3, Sony FX6, BM Pocket Cinema Camera 6K Pro, RED Komodo, and Z-Cam E2 F6. Test footage: and the results and discussion:

Here are his tests from 2019: BM Pocket 6K, Arri Alexa, RED Raven, Ursa Mini Pro. Test footage: and results and discussion:

Another blind test from TECH Rehab, comparing Sony F65, Sony F55, Arri Alexa, Kinefinity Mavo 6K, BM Ursa 4K, BMPCC 6K, BMPCC 4K. Test footage: and results:

Another test from Carls Cinema, comparing OG BMPCC 2K and BMPCC 4K:

Another test from Carls Cinema comparing OG BMPCC 2K to GH5:

A big shootout from @Mattias Burling comparing a bunch of cameras, but interestingly, also comparing different modes / resolutions of the cameras, and also paired with different lenses because (hold the front page!) the camera isn't the only thing that creates the image. Shocking, I know.... I won't name the cameras here, as not even knowing which cameras are in there is part of the test. The test footage: And the results and discussion:

More camera tests:

Another great camera/lens combo test, this time from @John Brawley:

And a blind lens test:

If anyone can find the large blind test from 2014 (IIRC?) that included the GH4 as well as a bunch of cine cameras, it would be great to link to it here. I searched for it but all I could find was a few articles that included private Vimeo videos, so maybe it's been taken down? It was a very interesting test and definitely worth including.

If you know of more, please share! 🙂

4 points

-

Teaser shot on Zcam and S1

Trek of Joy and 3 others reacted to TomTheDP for a topic

Shot mostly on the Zcam S6 with some Panasonic S1 in there too. I feel like the trailer was harder to make than the actual film. 😅

4 points -

Xiaomi Mi 11 Ultra - Why a technical marvel cannot come close to flattering the subject

PannySVHS and one other reacted to Andrew - EOSHD for a topic

I remember when the first 1" sensor smartphone (the Panasonic CM1) came out; it was around the same time the Samsung NX1 arrived. It's still worth keeping an eye out for on eBay if you want a smart compact camera... https://www.eoshd.com/review/panasonic-cm1-review-part-1-smartphone-first-impressions/ Takes some great shots in RAW mode. In many ways it's still the best sensor in any smartphone, for RAW at least. I recently re-bought one for 100 euros.

2 points -

Xiaomi Mi 11 Ultra - Why a technical marvel cannot come close to flattering the subject

PannySVHS and one other reacted to Brian Williams for a topic

2 points -

That looked quite nice, but still had the 'video' look to me. I wonder if everyone is so used to seeing stuff with the same look that people are now blind to it. Here are some things that don't share the same look, for contrast.

2 points

-

For anyone who is reading this far into a thread about resolution but for some reason doesn't have an hour of their day to hear from an industry expert on the subject, the section of the video I have linked to below is a very interesting comparison of multiple cameras (film and digital) with varying resolution sensors, and it's quite clear that the level of perceptual detail coming out of a camera is not that strongly related to the sensor resolution:

2 points

-

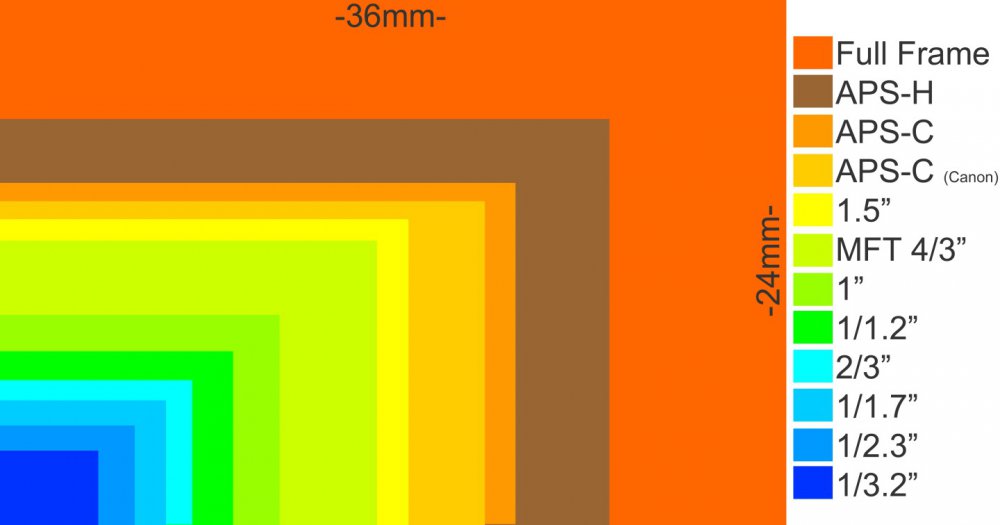

Somebody with a clue about photography needs to walk into the Chinese smartphone manufacturers and tell them how to do things.

In 2014 the Panasonic CM1 with its large 1" sensor came along, and there's been a 7 year wait for another 1" smartphone to add the latest computational photography image processing. The Samsung ISOCELL GN2 in the Xiaomi Mi 11 Ultra is finally it! There's no doubt about the technical capabilities of this smartphone and that it's capable of some wonderful results (after a bit of grading) as well as 8K.

Technically this sensor has every latest development: 50 megapixel, 8K, dual gain ISO readout (per pixel!), Quad Bayer, Dual Pixel Pro AF (split pixels horizontally as well as diagonally!) and more. The phone even has a Time of Flight sensor to assist AF in complete darkness.

So why does it look so shit?

DXOMark has the full run-down on the Mi 11 Ultra with sample photos. You'd expect with these specs, combining the enthusiast grade Sony RX100 size sensor with cutting edge AI and image processing, that smartphone manufacturers would have taken the time to learn something about presentation and optics too.

https://www.dxomark.com/xiaomi-mi-11-ultra-camera-review-large-sensor-power/

https://www.eoshd.com/news/xiaomi-mi-11-ultra-with-1-sensor-and-8k-video-gets-reviewed-why-a-technical-marvel-cannot-come-close-to-flattering-the-subject/

1 point

-

Getting started again.

Mark Romero 2 reacted to PannySVHS for a topic

You have a better battery than I do. :) Mine lasts more like 2h. Nothing close to a GH4 or even GH5.

1 point -

Are Sony sensors ruining video with the 'Sony look'?

PannySVHS reacted to MicahMahaffey for a topic

There's no definitive proof that the S series uses Sony sensors, but let's say they do, because that's obviously very possible. I think they still look good, and different from my Sony cams, not just because of how the image is processed, but because there's no phase-detect autofocus on the sensor. Looking at side-by-side comparisons of current sensors, the contrast-detect autofocus on the Lumix cams gives the footage a much more pleasing look. If you crop in on a Sony, you get something like halos on edges from the sensor's autofocus system. This gives Sony cams a subtle feeling of digital sharpness. Although cams like the RED Komodo share similar autofocus tech, it somehow doesn't have this problem. Or maybe it does? Idk, I've never used it. But as mirrorless cameras, the S series cams imo avoid this issue by having contrast-detect autofocus.

Of course this is just my point of view, but I think someone could plop an Arri beside the S1H, S1, S5 and grade them to match and nobody would notice which cam is which. Although the motion cadence of the Arri will likely stand out as better. But again, I really don't know for sure.

1 point -

@Sage any progress with the Z-cam?

1 point

-

To me it just looks hyper clinical, revealing, digital, although it also depends on the photographer and lighting, so we can't blame the phone for everything. She looks better in this one: But this one by all accounts is just a bad shot. Greasy, tired skin and a blue cast on her forehead. Granted, it is a backlit shot. In this one the camera is clutching onto every last drop of dynamic range and has that HDR puke look in the windows. I just think the PROCESSING dial needs turning down several notches, to be honest.

1 point

-

Are Sony sensors ruining video with the 'Sony look'?

MicahMahaffey reacted to omega1978 for a topic

Thank you. OK, when you compare an Arri Alexa Classic (with some vintage lens) with an S1 and a 24-70 Tamron (that adds a lot of contrast), I think as consumers we are in a good position for what we pay 😜

1 point -

Are Sony sensors ruining video with the 'Sony look'?

MicahMahaffey reacted to Stab for a topic

Wasn't the sensor in the S1 and S1H made by TowerJazz? That name was thrown around here a lot when the cams came out. Although it is a bit curious that the cams of that generation all have pretty much the same specs, i.e. Sony a7 III / Nikon Z6 / S1(H).

1 point -

1 point

-

Blackmagic Camera Update Feb 17

Emanuel reacted to A_Urquhart for a topic

I got mine yesterday. Didn't bother with the EVF so can't comment, but the NDs work well and there's no noticeable colour shift on mine. Definitely 'pro enough'. This is the upgrade from the 4K I was hoping for. I will be picky and say that I'd rather the camera was in more of a box form like the Komodo or Z-Cam, but the fact that it has a great usable screen now and internal NDs more than makes up for its form factor. Fan is much noisier than the 4K but still acceptable.

1 point -

1 point

-

They also offer an extra wide angle lens.

1 point

-

V5 - Any ETA?

kye reacted to Mark Romero 2 for a topic

I am guessing that the original author @GURN is hoping for an ETA on version 5 of the EOSHD Pro Color for Sony a7S III. Although I agree with others that it would be difficult to make the OP's post more vague than it already is.

1 point -

YouTube question

kye reacted to newfoundmass for a topic

Thanks for clarifying. My friend is going through a nightmare right now because Russian trolls have filed takedown notices on a documentary he self-funded. They'll falsely claim a clip is being used illegally, which pulls down his video for the 30 or whatever days they have to file legal actions after he refutes it. Then when the video is finally restored another troll will do it all over again. It has happened 3 times so far, all because it documents Russian atrocities in Syria. It has made it pretty much impossible for him to recoup the tens of thousands of dollars he spent making it, and he has no recourse other than going through YouTube's process since he's not an outlet like VICE that can reach out to YouTube easily and put an end to it.

1 point -

Ok.. Let's discuss your comments.

His first test, which you can see the results of at 6:40-8:00, compares two image pipelines: 1) a 6K image downsampled to 4K, which is then viewed 1:1 in his viewer by zooming in 2x, and 2) a 6K image downsampled to 2K, then upsampled to 4K, which is then viewed 1:1 in his viewer by zooming in 2x.

As this view is 2X digitally zoomed in, each pixel is twice as large as it would be if you were viewing the source video on your monitor, so the test is actually unfair, in that it makes any difference easier to see. There is obviously a difference in the detail that's actually there, and this can be seen when he zooms in radically at 7:24, but when viewed at 1:1 starting at 6:40 there is perceptually very little difference, if any.

Regardless of whether the image pipeline is "proper" (and I'll get to that comment in a bit), if downscaling an image to 2K then back up again isn't visible, the case that resolutions higher than 2K are perceptually differentiable is pretty weak even straight out of the gate.

Are you saying that the pixels in the viewer window in Part 2 don't match the pixels in Part 1? Even if this was the case, it still doesn't invalidate comparisons like the one at 6:40, where there is very little difference between two image pipelines where one has significantly less resolution than the other and yet they appear perceptually very similar / identical.

He is comparing scaling methods - that's what he is talking about in this section of the video. This use of scaling algorithms may seem strange if you think that your pipeline is something like 4K camera -> 4K timeline -> 4K distribution, or the same in 2K, as you have mentioned in your first point, but this is false. There are no such pipelines, and pipelines such as this are impossible. This is because the pixels in the camera aren't pixels at all; rather they are photosites that sense either Red or Green or Blue. Whereas the pixels in your NLE and on your monitor or projector are actually Red and Green and Blue.
The 4K -> 4K -> 4K pipeline you mentioned is actually ~8M colour values -> ~24M colour values -> ~24M colour values. The process of taking an array of photosites that are only one colour and creating an image where every pixel has values for Red, Green and Blue is called debayering, and it involves scaling. This is a good link to see what is going on: https://pixinsight.com/doc/tools/Debayer/Debayer.html

From that article: "The Superpixel method is very straightforward. It takes four CFA pixels (2x2 matrix) and uses them as RGB channel values for one pixel in the resulting image (averaging the two green values). The spatial resolution of the resulting RGB image is one quarter of the original CFA image, having half its width and half its height."

Also from the article: "The Bilinear interpolation method keeps the original resolution of the CFA image. As the CFA image contains only one color component per pixel, this method computes the two missing components using a simple bilinear interpolation from neighboring pixels."

As you can see, both of those methods talk about scaling. Let me emphasise this point - any time you ever see a digital image taken with a digital camera sensor, you are seeing a rescaled image. Therefore Yedlin's use of scaling in an image pipeline is applying scaling to an image that has already been scaled from the sensor data to an image that has three times as many colour values as the sensor captured.

A quick google revealed that there are ~1500 IMAX theatre screens worldwide, and ~200,000 movie theatres worldwide. Sources:

"We have more than 1,500 IMAX theatres in more than 80 countries and territories around the globe." https://www.imax.com/content/corporate-information

"In 2020, the number of digital cinema screens worldwide amounted to over 203 thousand – a figure which includes both digital 3D and digital non-3D formats." https://www.statista.com/statistics/271861/number-of-digital-cinema-screens-worldwide/

That's less than 1%.
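Going back to the debayering quotes above, here is a minimal sketch of the Superpixel method as the PixInsight article describes it, plus the colour-value arithmetic. This is only an illustration: the RGGB photosite layout, the 3840x2160 reading of "4K", and the function names are my assumptions, not something taken from the article or from Yedlin's video.

```python
def superpixel_debayer(mosaic):
    """Collapse each 2x2 cell of a Bayer mosaic into one RGB pixel.

    Assumes an RGGB layout (hypothetical choice for this sketch):
        R G
        G B
    The two green photosites are averaged, as in the quoted description.
    The result has half the width and half the height of the mosaic.
    """
    height, width = len(mosaic), len(mosaic[0])
    image = []
    for y in range(0, height - 1, 2):
        row = []
        for x in range(0, width - 1, 2):
            red = mosaic[y][x]
            green = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2
            blue = mosaic[y + 1][x + 1]
            row.append((red, green, blue))
        image.append(row)
    return image


def colour_value_counts(width, height):
    """Return (values captured by the sensor, values in a full RGB frame)."""
    photosites = width * height   # one colour value per photosite
    rgb_values = photosites * 3   # three colour values per output pixel
    return photosites, rgb_values


# A tiny 4x4 mosaic of made-up photosite readings becomes a 2x2 RGB image.
mosaic = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]
debayered = superpixel_debayer(mosaic)

# Assuming "4K" means 3840x2160:
print(colour_value_counts(3840, 2160))  # -> (8294400, 24883200)
```

So under those assumptions the "~8M -> ~24M" figures check out, and whichever method is used, the RGB frame you grade is already the output of a resampling step.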
You could make the case that there are other non-IMAX large screens around the world, and that's fine, but when you take into account that over 200 Million TVs are sold worldwide each year, even the number of standard movie theatres becomes a drop in the ocean when you're talking about the screens that are actually used for watching movies or TV worldwide. Source: https://www.statista.com/statistics/461316/global-tv-unit-sales/

If you can't tell the difference between 4K and 2K image pipelines at normal viewing distances, and you are someone that posts on a camera forum about resolution, then the vast majority of people watching a movie or a TV show definitely won't be able to tell the difference.

Let's recap:

1) Yedlin's use of rescaling is applicable to digital images because every image from every digital camera sensor that basically anyone has ever seen has already been rescaled by the debayering process by the time you can look at it

2) There is little to no perceptual difference when comparing a 4K image directly with a copy of that same image that has been downscaled to 2K and then upscaled to 4K again, even if you view it at 2X

3) The test involved swapping back and forth between the two scenarios, where in the real world you are unlikely to ever get to see the comparison like that, or even at all

4) The viewing angle of most movie theatres in the world isn't sufficient to reveal much difference between 2K and 4K, let alone the hundreds of millions of TVs sold every year, which are likely to have a smaller viewing angle than normal theatres

5) These tests you mentioned above all involved starting with a 6K image from an Alexa 65, one of the highest quality imaging devices ever made for cinema

6) The remainder of the video discusses a myriad of factors that are likely to be present in real-life scenarios that further degrade image resolution, both in the camera and in the post-production pipeline

7) You haven't shown any evidence that you have watched past the 10:00 mark in the video

Did I miss anything?

1 point
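For anyone who wants to check the screen arithmetic in this post, here is a rough sketch. The three constants are just the round figures quoted from the sources above ("more than 1,500", "over 203 thousand", "over 200 Million"), so treat them as lower bounds; the variable and function names are mine.

```python
# Round figures quoted in the post; each source says "more than" / "over".
IMAX_SCREENS = 1_500
DIGITAL_CINEMA_SCREENS = 203_000
TVS_SOLD_PER_YEAR = 200_000_000


def share_percent(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


imax_share = share_percent(IMAX_SCREENS, DIGITAL_CINEMA_SCREENS)
tvs_per_cinema_screen = TVS_SOLD_PER_YEAR / DIGITAL_CINEMA_SCREENS

print(f"IMAX share of digital cinema screens: {imax_share:.2f}%")
print(f"TVs sold per year for every cinema screen: {tvs_per_cinema_screen:.0f}")
```

On those figures IMAX comes out at roughly 0.74% of screens, and there are on the order of a thousand TVs sold every year for each cinema screen in existence, which is the "drop in the ocean" point.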

-

YouTube question

John Matthews reacted to rdouthit for a topic

For those of us that like to revise posts after the fact for clarity... the 5 minute lock on articles here is really frustrating. Now I have grammatical errors that will live on forever. Above should say "Even if my forum posts AREN'T as comprehensive as they should be."

1 point -

1 point

-

Great post and thanks for the images. That is exactly what I am seeing with my Micro footage - the images just look spectacular without having to do almost anything to them. I've done tests where I have been able to match the colours from the BM cameras, but only under "easy" conditions where the GH5 sensor was not stressed. The fact you are talking about the performance under extremely challenging situations only reinforces my impressions. I'll be very curious to see your results.

In my Sony sensor thread everyone was talking about how Sony sensors can be graded to look like anything, but I never see people actually trying, or if they do, it's under the easiest of controlled conditions. Here is a test I did a while ago trying to match the GH5 to the BMMCC: There are differences of course, due to using different lenses for starters, but the results would likely be passable. There's no way in hell you can get a good match under more challenging situations.

To put it another way, when the OG BMPCC and BMMCC came out people were talking about cutting them together with Alexa footage and that they matched really easily and nicely with little grading required. No-one really says that about affordable modern consumer cameras, and what I hear from the professional colourists is that when someone uses a GH5 or a Sony or an iPhone, it's about doing the best they can and putting the majority of effort into managing the client's expectations.

1 point

-

I don't remember what angle the S1H was set to for that particular shot. I have long since trashed the original files as this was my second and last music video, as the only thing that pisses me off more than a bridal diva is a creative diva who can't get 10,000 free SoundCloud streams 😁. It may be okay for some to make $1000 in a day, but I make that in a few hours for corporate work with dedicated creative directors and set creators setting up the look just the way they desire. All I have to do is create pristine video and sound. With indie music videos, you need to do everything.

I think I wouldn't have a problem using ISO 10000 with H.264 with my S1 or S1H. I once pushed to ISO 16000, but that needed post noise reduction, and I wish I had just jacked up internal noise reduction instead of having to clean up 20 min of reception speeches in post.

1 point

-

Getting started again.

MicahMahaffey reacted to DanielVranic for a topic

The S5 + 28-70 Sigma has really seemed like an awesome option, especially if there is a firmware update planned for RAW.

1 point -

1 point

-

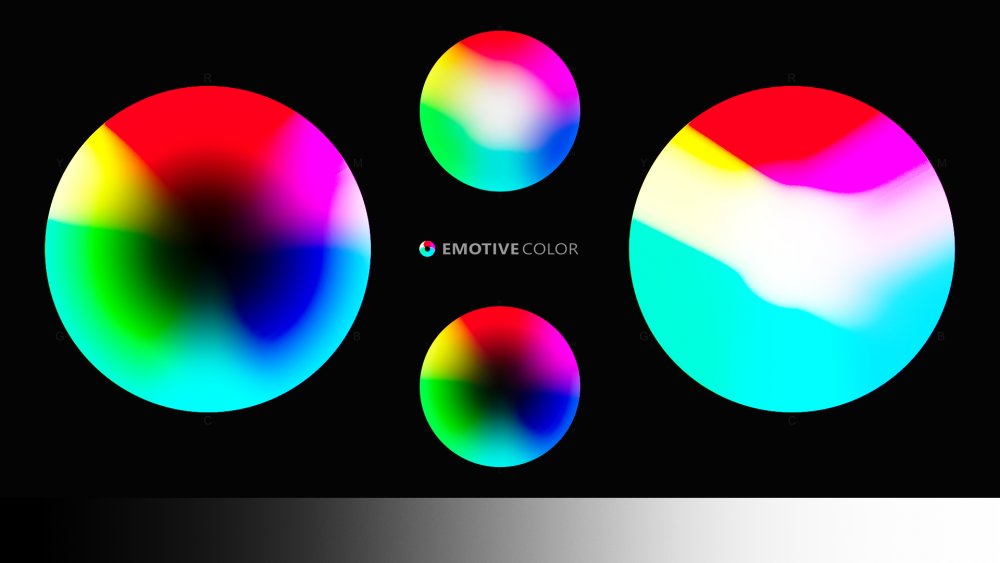

@Attila Bakos Very cool, I was on a 3770k; I switched to Ryzen last year for this very purpose. Now I'd like to swap out to one of the newest Ryzens, but they are sold out everywhere. I'm doing something atm wherein I throw enormous amounts of data in, and the renders can easily go upwards of an hour at 100% CPU. My data files are usually around 1Mb each; these are going upwards of 50Mb atm.

@zerocool22 CST is a versatile tool that allows whitepaper transforms between any two spaces. These are the manufacturer specified color spaces. Emotive is a measured conversion between two cameras (or profiles etc), such that it factors in sensor response to a light source and internal camera processing (of WB, profile, etc). Emotive has simulations of sensor LogC solely using Resolve color tools (sans CST) called Matrix. Both the interpolated conversions (with Tetrahedral interpolation enabled) and the Matrix LogC simulations are non-destructive, while Arri's Rec709 (that can be added to Matrix LogC) is partly destructive (of extreme LED gamut). CST can be either destructive or non-destructive, depending on the transform specified and relevant gamut.

CST + Arri 709:

Emotive Color

1 point

-

Binned the S1R and pushed the button on a used S1H! It doesn't specify, but with this particular dealer it's usually 'open box' kit when it's sold 'like new'.

It's not the best time for me to be buying kit, ideally, as my entire wedding season is still in question, but at the same time I have a number of commercial projects and styled venue shoots to do over the next few months. I also would like to spend time getting familiar with using my entire kit, as I intend to use it for at least the next 4 years.

I could, at a pinch, use just the S5, but after nearly 6 months of wrestling with my 'perfect' minimalist lineup of body and lens combos, I have settled on:

S1H with the Sigma 28-70mm f2.8 as my video-centric workhorse, principally because a lot of my long work days are in high temperatures and some ceremonies and some speeches can go on for a bit, so the unlimited recording is a bonus. I hate flippy screens, but the S1H is perfect in that it can just be used as a tilt. The Sig 28-70 keeps the overall size & weight down but is still a flexible focal range that is perfect for my needs; approx 42-105mm as I shoot 4K 50p.

The S5 is going to get a battery grip to add more continuous battery life, but mainly to balance out the 24-105mm f4. I'm not really a zoom kind of person, so I anticipate using the Sig as a 42 or 105mm and the Panny lens, FF, as a 24 or 105mm, to give me the same focal length for stills and video at the long end, which I work a lot with. F4 is not my ideal, but then it's equivalent to the Fuji f2.8 that has been my previous workhorse so...

The Panny 85mm f1.8 remains as an option lens for video, purely for back of church or low-light outdoor speeches, because in APSC mode it's like a 130mm.

The Panny 20-60mm kit lens remains also, purely as a 20mm wide angle for stills, and it's my hiking lens on the S5.

The Sigma 45mm f2.8 I'm not sure about. I love this lens but have next to zero use for it. I think I'll probably sell it and replace it with another option lens, which will be the Sigma 65mm f2: for church and low-light speeches, I have a 100mm equiv with still f2 light gathering instead of the F4 zoom.

2 bodies, 2 workhorse zooms, 1 option zoom, 2 option primes. Sony ZV1 as a backup ceremony & speeches video unit. That's as minimalist as I can go and still cover all my bases within a certain budget!

Ideally, Panny will pop out a 47mp version of the S5, i.e. an S5R, as there are times when the hi res mode doesn't work for me but I want more than 24mp. Otherwise, I'm sorted and look forward to shooting more than in the last miserable 6 months!

0 points
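The equivalent focal lengths in the kit list above follow from a simple crop-factor multiplication. A quick sketch, assuming a crop factor of 1.5 for the APS-C / 4K 50p mode (that value is my assumption; it just happens to reproduce the rounded numbers in the post), with function names of my own:

```python
APS_C_CROP = 1.5  # assumed crop factor for the cropped 4K 50p / APS-C mode


def equivalent_focal_length(focal_mm, crop=APS_C_CROP):
    """Full-frame focal length giving the same field of view."""
    return focal_mm * crop


def equivalent_aperture(f_number, crop=APS_C_CROP):
    """Full-frame f-number giving similar depth of field (not exposure)."""
    return f_number * crop


for label, focal_mm in [
    ("Sigma 28-70 wide end", 28),
    ("Sigma 28-70 long end", 70),
    ("Panny 85mm", 85),
    ("Sigma 65mm", 65),
]:
    print(f"{label}: {focal_mm}mm behaves like {equivalent_focal_length(focal_mm):g}mm")

print(f"f2.8 on APS-C is roughly f{equivalent_aperture(2.8):.1f} in full-frame terms")
```

That gives 42mm and 105mm for the zoom, 127.5mm for the 85 (the "like a 130mm") and 97.5mm for the 65 (the "100mm equiv"). The f2.8 to roughly f4.2 conversion is about depth of field and field of view only; an f2.8 lens still exposes as f2.8.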