Leaderboard

Popular Content

Showing content with the highest reputation on 04/15/2021 in all areas

-

Best affordable camera as of 2021?

Phil A and one other reacted to MicahMahaffey for a topic

I don't use autofocus so I forgot about that one. I mean, I use my Sony a6300 alongside my S1 as a gimbal cam and the difference is negligible once graded. The X-T4 being 10-bit pretty much means that it can look like any camera with enough adjustments. We're definitely in a time where most cameras can get the job done and nobody will ever notice what camera you used. 2 points -

Spider-Man maybe, but not Batman as there’s zero contrast and good luck with The Flash. 2 points

-

Sure. This exact image has heavy compression artifacts and I was unable to find the original chart, but I got the idea, recreated these pixel-wide colored "E"s and did the same upscale-view-grab pattern. And, well, it does preserve sharp pixel edges, with no subsampling. I don't have access to Nuke right now, I'm not going to mess with warez in the middle of a work week for the sake of an internet dispute, and I'm not 100% sure about the details, but the last time I was compositing something in Nuke it had no problems with 1:1 view, especially considering I was making titles and credits as well. And what Yedlin is doing - comparing at 100% 1:1 - looks right.
Yedlin is not questioning the capability of a given codec/format to store a given number of resolution lines. He is discussing _perceived_ resolution. That means the image should be a) projected and b) well, perceived. So he chooses common ground - 4K projection - crops out a 1:1 portion of it and cycles through different cameras. And his idea sounds valid: starting from a certain point, digital resolution is less important than other factors that come before (optical system resolving power, DoF/motion blur, AA filter) and after (rolling shutter, processing, sharpening/NR, compression) the resolution is created. He doesn't state that there is zero difference, and he doesn't touch special technical cases like VFX or intentional heavy reframing in post, where additional resolution may be beneficial.
The whole idea of his work: starting from a certain point of technical resolution, the perceived resolution of real-life images does not suffer much from upsampling and does not benefit much from downscaling. For example, on the second image I added a numerically subtle transform to the chart in AE before grabbing the screen: +5% scale, 1° rotation, slight skew - essentially what you will get with nearly any stabilization plugin - and it's a mess in terms of technical resolution.
But we do it here and there without any dramatic degradation to real footage. 2 points
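
To put a rough number on why a "subtle" +5% scale wrecks a pixel-wide test chart, here is a minimal sketch. It assumes a single row of a 1 px black/white grid and plain linear interpolation; the function names are made up for illustration, and real tools such as AE use their own (usually more elaborate) resampling filters.

```python
def resample_linear(row, scale):
    """Resample a 1-D row of pixel values by `scale` using linear interpolation."""
    n_out = int(len(row) * scale)
    out = []
    for i in range(n_out):
        # Map the centre of the output pixel back into source coordinates.
        x = (i + 0.5) / scale - 0.5
        x = min(max(x, 0.0), len(row) - 1.0)
        left = int(x)
        right = min(left + 1, len(row) - 1)
        t = x - left
        out.append((1 - t) * row[left] + t * row[right])
    return out


def min_local_contrast(row):
    """Smallest absolute difference between any two neighbouring pixels."""
    return min(abs(a - b) for a, b in zip(row, row[1:]))


grid = [100, 0] * 50                  # 1 px black/white grid, 100 pixels wide
scaled = resample_linear(grid, 1.05)  # the "+5% scale" from the post

print(min_local_contrast(grid))    # every neighbouring pair differs by 100
print(min_local_contrast(scaled))  # far lower where the two grids drift out of phase
```

On a chart like this the grid periodically collapses towards grey; real footage rarely contains pixel-wide detail at full contrast, which fits the point that the same transform does little visible harm there.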

-

Thank you for your answer. I do have a Shinobi and I was wondering whether the 4:2:2 recording of the Ninja V would give me something more until the X-H2 hopefully comes out :). You are of course correct on all counts, especially about having a proper backup, which is worrying sometimes. Quality-wise I do not seem to get a straight answer, and it is a testament to the integrity of your answer that you cannot possibly say a resounding yes or no to my question. Thank you for your reply. 1 point

-

They're ProReslicious. 1 point

-

Panasonic S5 User Experience

IronFilm reacted to herein2020 for a topic

It works perfectly on the GH5. I'm not an audio person, so to me the audio sounds fine. I've never had a problem with noise from the module and anything minor I've been able to clean up in Fairlight; but since I'm not an audio person it is possible what sounds good to me isn't actually that good. The XLR module was one of my favorite GH5 accessories, and the fact that it worked with the S5 was a major selling point for me. I do wonder if maybe the lav mics I am using are causing the issues. But I remember I had a problem with the module and my Sennheiser wireless receiver as well. Very annoying problem to say the least. I am going to do a lot more testing before my next job to see if it is something that is my fault. Personally, I've always found audio the most difficult part of videography, between placing the mics, dealing with room treatment or lack thereof, trying to keep the audio from peaking, trying to keep the mic from rubbing against clothing, trying to block ambient noises like the wind, etc. It's very obvious why it is a whole specialty in and of itself. 1 point -

Development Update - AFX Focus Module For PBC

majoraxis reacted to alanpoiuyt for a topic

1 point -

Panasonic S5 User Experience

Thpriest reacted to Mark Romero 2 for a topic

Back button focus works great on the S5 and S1. I set the AF switch to MF, and leave the AF switch ON for my Canon EF 16-35 f/4 L. Hitting the AF ON button then focuses the lens. I have it set up so that rotating the focus ring on the lens then brings up picture-in-picture for fine-tuning focus. No idea about Sigma lenses and linear manual focus. 1 point -

I use Ninjas with my T3 and T4 all the time for paid work for small nonprofits and businesses. Besides the ease of editing, which you've mentioned, it seems like my ProRes files tend to have a bit more latitude for color correction. I haven't done a side-by-side test, so it could just be a perception based on my expectations of a 422 file's color depth over 420. Using a Ninja also means I can record two high-quality files for each take, so I have a backup in case something goes wrong. Hard drives for the Ninja are way cheaper per GB of fast storage than cards. I run a 1TB drive with each Ninja and can edit straight off the drive if I don't have space on my main editing drives on the workstation. Western Digital 1TB WD Blue drives are dirt cheap at about $100 a pop. There are also tangible benefits to using a bright, high-quality 5-inch monitor with all the exposure tools, the ability to de-squeeze anamorphic, different framing guides, etc. You can use something like a SmallHD or an Atomos Shinobi, but I figure if you're already buying an on-camera monitor, it makes sense to go with one that records too. 1 point

-

Those cookies look mighty tasty! 1 point

-

Camera resolutions by cinematographer Steve Yeldin

MaverickTRD reacted to kye for a topic

Yes and no - if the edge is at an angle then you need infinite resolution to avoid having a grey-scale pixel in between the two flat areas of colour. VFX requires softening (blurring) in order to not appear aliased, or must be rendered so that a pixel takes the value of the light within an arc (which might partially hit an object but also partially miss it) rather than along a single line (which is either a hit or a miss because it's infinitely thin).
Tupp disagrees with us on this point, but yes.
I haven't read much about debayering, but it makes sense if the interpolation is a higher-order function than linear from the immediate pixels.
There is a cultural element, but Yedlin's test was strictly about perceptibility, not preference.
When he upscales 2K->4K he can't reproduce the high-frequency details because they're gone. It's like if I described the beach as being low near the water and higher up further away from the water: you couldn't take my information and recreate the curve of the beach from that, let alone the ripples in the sand from the wind or the texture of the footprints in it - all that information is gone and all I have given you is a straight line.
In digital systems there's a thing called the Nyquist frequency, which in digital audio terms says that the highest frequency that can be reproduced is half the sampling rate, i.e. the highest frequency is when the data goes "100, 0, 100, 0, 100, 0". In the image the effect is that if I say that the 2K pixels are "100, 0, 100" then that translates to a 4K image with "100, ?, 0, ?, 100, ?", so the best we can do is simply guess what those pixel values were, based on the surrounding pixels, but we can't know if one of those edges was sharp or not. The right 4K image might be "100, 50, 0, 0, 100, 100" but how would we know? The information that one of those edges was soft and one was sharp is lost forever. 1 point -
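
The "100, ?, 0, ?, 100, ?" point can be sketched in a few lines. This assumes the 2K row is made by simply keeping every second 4K pixel, as in the example above; real downscalers filter first, but they still discard detail. The second candidate row is invented purely for illustration.

```python
def decimate_2x(row):
    """Model a 4K -> 2K downscale by keeping every second pixel."""
    return row[::2]


# Two different 4K rows: in each, one edge is soft and one is sharp,
# but the soft and sharp edges are in swapped positions.
soft_then_sharp = [100, 50, 0, 0, 100, 100]   # the "right" 4K row from the post
sharp_then_soft = [100, 100, 0, 50, 100, 100]  # hypothetical alternative

print(decimate_2x(soft_then_sharp))  # [100, 0, 100]
print(decimate_2x(sharp_then_soft))  # [100, 0, 100]
```

Both rows collapse to the same 2K data, so no upscaler can tell from the 2K row alone which of the two it started from.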

Camera resolutions by cinematographer Steve Yeldin

MaverickTRD reacted to kye for a topic

I think perhaps the largest difference between video and video games is that video games (and computer-generated imagery in general) can have a 100% white pixel right next to a 100% black pixel, whereas cameras don't seem to do that. In Yedlin's demo he zooms into the edge of the blind and shows the 6K straight from the Alexa with no scaling, and the "edge" is actually a gradient that takes maybe 4-6 pixels to go from dark to light. I don't know if this is to do with lens limitations, sensor diffraction, OLPFs, or debayering algorithms, but it seems to match everything I've ever shot.
It's not a difficult test to do: take any camera that can shoot RAW and put it on a tripod, set it to base ISO and aperture priority, take it outside, open the aperture right up, focus it on a hard edge that has some contrast, stop down by 4 stops, take the shot, then look at it in an image editor and zoom way in to see what the edge looks like.
In terms of Yedlin's demo, I think the question is whether having resolution over 2K is perceptible under normal viewing conditions. When he zooms in a lot it's quite obvious that there is more resolution there, but the question isn't whether more resolution has more resolution, because we know that of course it does, and VFX people want as much of it as possible. The question is whether audiences can see the difference. I'm happy from the demo to say that it's not perceptually different.
Of course, it's also easy to run Yedlin's test yourself at home. Simply take a 4K video clip and export it at native resolution and at 2K (you can export it lossless if you like). Then put both versions onto a 4K timeline and just watch it on a 4K display. You can even cut them up and put them side-by-side or do whatever you want.
If you don't have a camera that can shoot RAW then take a timelapse with RAW still images and use that as the source video, or download some sample footage from RED, which has footage up to 8K RAW available to download free from their website. 1 point -
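
If you want a number rather than eyeballing the zoomed-in edge, one rough approach is to read a single row of pixel values across the edge and count how many sit in the transition. This is only a sketch: the 10%/90% thresholds are a common convention, not something from Yedlin's demo, and both sample rows below are made-up values rather than measurements.

```python
def edge_width(profile, lo=0.1, hi=0.9):
    """Count pixels whose value lies strictly between lo and hi of the edge's range."""
    dark, bright = min(profile), max(profile)
    span = bright - dark
    low_level = dark + lo * span
    high_level = dark + hi * span
    return sum(1 for v in profile if low_level < v < high_level)


# Hypothetical rows of pixel values read across a dark-to-light edge.
cg_edge = [0, 0, 0, 100, 100, 100]                # rendered: hard step
camera_edge = [2, 4, 12, 28, 50, 72, 88, 96, 98]  # camera-like: gradual ramp

print(edge_width(cg_edge))      # 0 transition pixels
print(edge_width(camera_edge))  # 5 transition pixels
```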

Camera resolutions by cinematographer Steve Yeldin

MaverickTRD reacted to KnightsFan for a topic

I'm going to regret getting involved here, but @tupp I think you are technically correct about resolution in the abstract. But I think that Yedlin is doing his experiments in the context of real cameras and workflows, not in the abstract. I mean, it's completely obvious to anyone who has ever played a video game that there is a huge, noticeable difference between 4k and 2k, once we take out optical softness, noise, debayering artifacts, and compression. If we're debating differences between Resolutions with a capital R, let's answer with a resounding "Yes it makes a difference" and move on. The debate only makes sense in the context of a particular starting point and workflow, because in actual resolution on perfect images the difference is very clear. And yeah, maybe Yedlin isn't 100% scientific about it, maybe he uses incorrect terms, and I think we all agree he failed to tighten his argument into a concise presentation. I don't really know if discussing his semantics and presentation is as interesting as trying to pinpoint what does and doesn't matter for our own projects... but if you enjoy it, carry on 🙂 I will say that for my film projects, I fail to see any benefit past 2k. I've watched my work on a 4k screen, and it doesn't really look any better in motion. Same goes for other movies I watch. 720p to 1080p, I appreciate the improvement. But 4k really never makes me enjoy it any more. 1 point -

Camera resolutions by cinematographer Steve Yeldin

MaverickTRD reacted to kye for a topic

A 4K camera has one third as many sensor elements as a 4K monitor has emitters. This means that debayering involves interpolation, which means your proposal involves significant interpolation and therefore fails your own criteria. 1 point -
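
The one-third figure is just arithmetic, sketched here under two assumptions that are not spelled out in the post: a single-sensor Bayer camera with exactly 3840x2160 photosites (one colour sample each), and a 3840x2160 display with separate R, G and B emitters per pixel.

```python
width, height = 3840, 2160     # assumed "4K" (UHD) dimensions for both devices

photosites = width * height    # Bayer sensor: one colour sample per photosite
emitters = width * height * 3  # display: R, G and B emitter per pixel

print(photosites)              # 8294400
print(emitters)                # 24883200
print(emitters // photosites)  # 3
```

Two of the three colour values at every output pixel therefore have to be interpolated from neighbouring photosites.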

Camera resolutions by cinematographer Steve Yeldin

John Matthews reacted to slonick81 for a topic

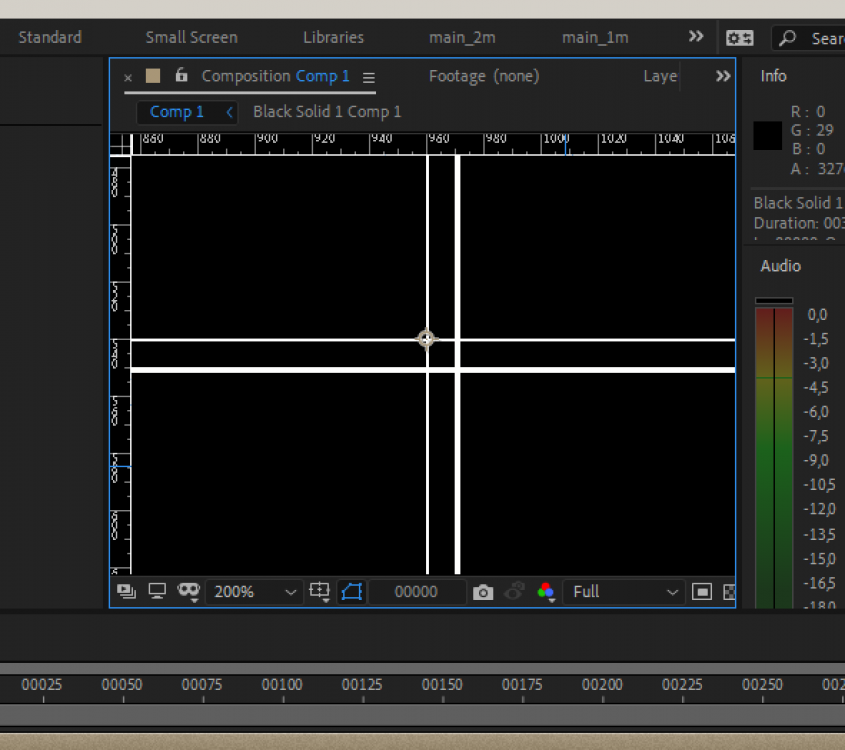

The attached image shows a 1px b/w grid, generated in AE in an FHD sequence, exported in ProRes, upscaled to UHD with ffmpeg ("-vf scale=3840:2160:flags=neighbor" options), imported back to AE, overlaid on the original in the same composition, magnified to 200% in the viewer, screengrabbed and enlarged another 2x in PS with proper scaling settings. And there is no subsampling present, as you can see. So it's totally possible to upscale an image or show it in 1:1 view without modifying the original pixels - just don't use fractional enlargement ratios and complex scaling. Not sure about Natron though - never used it. Just don't forget to "open image in new tab" and to view it at original scale.
But that's real life - most productions have footage of different resolutions on input (A/B/drone/action cams), and multiple resolutions on output - UHD/FHD for streaming/TV and DCI-something for DCP at least. So it's all about scaling and matching the look, and that's the subject of Yedlin's research. What's more, even in the rare "resolution-preserving" cases where filming resolution perfectly matches projection resolution, there are such things as lens aberration/distortion correction, image stabilization, rolling shutter jello removal and reframing in post. And it usually works well, for the reasons covered by Yedlin.
And sometimes resolution, processing and scaling play funny tricks out of nothing. On my last project I was doing some simple clean-ups: Red Helium 8K shots, exported to me as DPX sequences. 80% of the processed shots were rejected by the colourist and DoP as "blurry, unfitting the rest of footage". Long story short, the DPX files were rendered by a technician with the full-res/premium quality debayer, while the colourist and DoP were grading 8K at half res scaled down to 2K big-screen projection - and that was giving more punch and microcontrast on the large screen than the higher quality and resolution DPXes with the same grading and projection scaling. 1 point -
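
The claim that an integer-ratio nearest-neighbour upscale leaves the original pixels untouched is easy to check on a toy grid. This is a pure-Python sketch with invented function names and a checkerboard standing in for the 1px grid; it models what "flags=neighbor" does at an exact 2x ratio and says nothing about what ProRes encoding or the AE viewer add on top.

```python
def upscale_nearest(image, factor):
    """Integer nearest-neighbour upscale: each pixel becomes a factor x factor block."""
    out = []
    for row in image:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def downscale_point(image, factor):
    """Pick one pixel out of every factor x factor block."""
    return [row[::factor] for row in image[::factor]]


# Small stand-in for the 1 px b/w grid (checkerboard assumed for illustration).
grid = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)] for y in range(4)]
big = upscale_nearest(grid, 2)

print({v for row in big for v in row} == {0, 255})  # True: no in-between greys appear
print(downscale_point(big, 2) == grid)              # True: original pixels recoverable
```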

Kind of reminds me of this advertisement, directed by Frank Budgen: 1 point