Leaderboard

Popular Content

Showing content with the highest reputation on 03/04/2022 in all areas

-

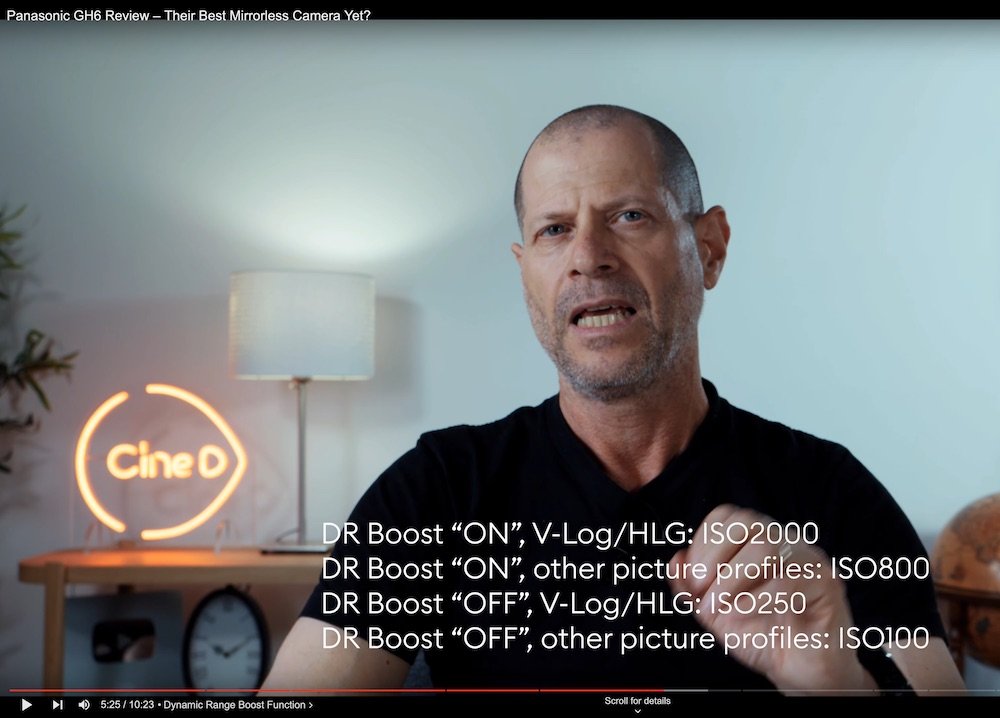

I think the GH6 is a triumph of incremental upgrades that provide a real difference when shooting run-n-gun video in the real world. With the headline features so prominent, it's easy to miss the many smaller improvements that can actually make a big difference to shooting. We talk about cameras like they're museum pieces or engineering spectacles, but often these things don't translate to the real world. The following are my views of the GH6 from the perspective of a travel videographer upgrading from the GH5. I've tried to include as many example images as I can - please note that these aren't fully graded and are often compromised due to me pushing the limits of what is possible from the GH5, what was possible under the circumstances, and what my limited skills could create... But let's get some obvious things out of the way first. Things I don't care about..... Autofocus The AF is improved. People who used the GH5 AF will like the GH6 AF, and people who didn't like the GH5 AF probably won't be moved by the GH6 AF. I don't use it, basically ever, so I don't care. If you do care, then good for you.. move along, nothing to see here. Extra resolution There's more resolution, but the GH5 had enough resolution for downsampled DCI4K and lower resolutions, and the 2x digital zoom and ETC modes provided useful cropping modes as well. The extra resolution doesn't really matter to me. Size and weight I care about this a lot actually, but it's not substantially larger than the GH5 from the front, which is the dimension that matters when filming in public. The extra depth is immaterial when you have a lens on the camera. The extra weight is unfortunate, but isn't that much and is well worth it for the fan and screen improvements. Internal Prores Prores is a professional codec that offers considerable improvements throughout the whole workflow. 
I'm not going to say much else on this as I created another thread to talk about this but it's great to have the option, and I definitely appreciate it. Many people were happy with the GH5s codecs, especially the 10-bit 422 ALL-I codecs, which were a shining beacon in a sea of poor-quality IPB cameras, but even those have been improved.... Huge improvements on h264/h265 codecs The GH5 had 200Mbps 1080p and 400Mbps DCI4K, but the slow-motion modes are limited to 150Mbps (4K60) 200Mbps (1080p60) or 100Mbps (1080pVFR up to 180fps) and the effective bitrate dropped significantly when you conform your 60p or 180p footage to 24p - as low as 13Mbps!!. The GH6 has 4K60 at up to 600Mbps (SD card) or 800Mbps (CFexpress type B) - an effective bitrate of 320Mbps, and in 1080p240 800Mbps which is an effective bitrate of 80Mbps. Oh, and these are all 10-bit 422 ALL-I, as opposed to the GH5 8-bit 420 IPB. Oh, and also also, the 120p is now in 4K too. If you're shooting sports this is a big deal as often players are in direct sunlight, depending on the time of day there might not be much ambient fill light, and any reflections from the field may be strange colours such as green grass, or various coloured artificial turf, which will drastically benefit from the 422 10-bit when colour correcting footage. The extra bitrate is also great for having clean images when players may be bright against a very dark background really highlighting any compression nasties on their edges. The ALL-I will be great in post too. I'm curious to see what the 300fps looks like in real-world situations, but I've shot a lot of sports with 120p and it seems slow enough to me. Dynamic Range Boost This is a big deal for anyone recording in uncontrolled conditions, and especially for travel. This isn't a vanity exercise or BS tech nerdery, this is a real creative consideration. 
When you're shooting travel, the film is about the location and the experience of the people in that location, and the weather is a key consideration of this. With the GH5 I often had to choose between clipping the highlights (ie, sky) and exposing for the people. I also chose to expose for the sky on my XC10 and in combination with 8-bit C-Log recorded a large amount of spectacularly-awful looking footage of my honeymoon. Here's a frame of C-Log SOOC from that debacle: The shot was also mixed lighting with the subject in the shade, being lit by a coloured Italian shop-front immediately behind me that was in full sun.... Being forced to decide between the subject and the sky is problematic if the shot you want is "person at location X at sunset" and you can't feature that person and also the sunset. It's also relevant for anywhere with tall buildings that create deep shade - the below is a nice dynamic image but it's a tad overdone and not how we actually see the world: My experience with shooting the OG BMPCC / BMMCC is that their ~2 stop advantage in DR over the GH5 almost completely covers this gap. Images with someone standing in-front of a sunset no longer feature either an anonymous shadow person swimming in technicolour-grain or a digitally clipped sky. The GH6 DR Boost feature will help in these situations, allowing subjects to be filmed in-context rather than only in select locations where the light is just right but the scene is irrelevant. Would more be better? Sure. I can hear lots of people thinking "go full frame then - moron" but the GH5 was (until now) a camera with a unique set of features not bettered by any other offering for this type of travel shooting, so while the current alternatives give with one hand they also take away with the other, normally taking away a lot more than they give. 
Full V-Log Having a colour profile that is supported natively by Resolve is a huge thing for me, as it allows WB and exposure adjustments to be done in post without screwing up the colours. This is important because in shooting travel there are times when you can't even stop walking to get a shot, let alone have enough time to pause, get exposure dialled in, and heaven-forbid to pull out a grey-card and do a custom WB! A great man once said "tell him he's dreamin"! This lighting all looked neutral to the naked eye - GH5 HLG SOOC: The advice I got from the colourists on that was to lean into it and just pick one or the other and balance to those, but sometimes the mixed lighting isn't really something you want to lean into.... GH5 HLG SOOC - look at the colour temperatures on the grey sleeve from what appears to be the strip-light from hell hanging just above their heads: Better Low-light performance Filming in available light in uncontrolled conditions often means needing to use high ISO settings. This is a shot I've posted many times before, and the BTS of me shooting it, in a row-boat lit only by the floodlights on the river bank maybe 50m away. Notice that the smartphone taking the BTS photo couldn't even focus due to the low-lighting. It was using the GH5 in full-auto (likely a 360-degree shutter) and with the Voigtlander wide open at F0.95. I don't know what the ISO on the shot would have been, but there's quite a bit of noise, especially considering this is a UHD clip downsampled from 5K in-camera (200% zoom on a UHD timeline): I don't mind the quality of the noise actually, and it responds to sharpening really well giving quite an analogue image feel, but if the image is this noisy then the colours aren't going to be at their best, and that isn't desirable. There are a number of fast primes on MFT (ie, faster than F2) but getting sharp images wide-open is problematic, even from the very-expensive Voigtlander f0.95 primes. 
The GH6's improved low-light is great, and not only does it allow recording in ever-darker environments, but it also allows the use of modest lenses and faster lenses stopped down to be within a higher quality part of their aperture range. An extra $1000 cost on the body of a camera seems like a lot, but if it saves you $500 on every lens because you can buy slower lenses then it's an investment that has a reasonable return. ...and lest you think that the above is an extreme example, having good low-light performance is really just about filming people doing what they're doing, and in case somehow you haven't noticed, it's dark outside about half the time and people go out into it and do things. Another example, here's a shot taken right at the end of blue-hour: and minutes later, here's a shot showing what you can get when lit only by the light of a phone, and providing a reference for how dark this location was: Think of the benefits of better low-light performance here.... it's very low-light, mixed colour temperature lighting, and the main feature is skintones. The noise on the GH5, with the Voigtlander wide open is pretty brutal: New Screen My XC10 has amongst the nicest ergonomics of any camera and a big part of that for me was the tilt screen that didn't get in the way when using your right hand to hold the camera and the left to support the camera and manually focus the lens. The fact that the new GH6 screen allows for both tilting as well as for flipping is great. Contrary to what many believe, the 'flippy' screen is actually very useful for filming things other than yourself. Any time when you cannot stand directly behind the camera is when the flipping screen is useful. I have used it while filming out of windows (such as in moving vehicles, filming the view around the corner of a panorama framed by an arch, or taking a sneaky shot of the kids around a corner without them spotting me and pulling faces). 
Sometimes you need to hold the camera in funny ways to get a shot: They're also very useful for those who want to take vertical photos from a low or high angle. Fan The GH5 never overheated on me, even in desert conditions, unlike my iPhones have on many occasions, and the GH6 will never overheat either. It's not an "improvement" in the sense that it doesn't offer anything new, but it is a guarantee that all the extra other features won't come at an unacceptable cost. Having a camera that overheats is just stupid, and Panasonic doesn't insult us by providing tools that aren't reliable. Punch-in while recording Checking focus while recording is a pretty fundamental thing, and the GH5 didn't have it (and didn't have the best focus peaking either) but now the GH6 does. Boom. Custom Frame Guides The frame guides on the GH5 were worthless - pale dotted lines that were difficult to see under ideal conditions, let alone while holding the camera at arms length in a moving vehicle. The GH6 has custom frame guides that have a much more visible outline and also dim the out-of-area image, making it super easy and intuitive to use. Frame from @Tito Ferradans review on YouTube: I might be tempted to engage this for a 2.35:1 permanently and then choose what ratio to use in-post when I get to editing. I've tried to shoot for this ratio before with the GH5, forgot and couldn't see the frame guides and intuitively composed like normal, and then downloaded the footage and found that I'd framed every shot too close for the ratio. Fail. Buttons and controls The GH6 has more programmable buttons than the GH5 (which was already great), and the extra custom slot C4 on the mode dial is very welcome. I have my GH5 configured to be C1 1080p24, C2 1080p60, C3-1 1080p120, C3-2 4K24 (in case there's something I'd need 24p for), and C3-3 as 4K manual everything for shooting camera tests. 
The first four modes are ones I want to just be one dial away while I'm out shooting, so having the extra one is really useful. The GH5 also saved the preset focal lengths for the IBIS on a per-profile basis, so when you switch profiles you switch focal lengths. This is important because when the camera goes to sleep it wakes up but forgets the focal length and goes back to the default one for that profile. As such, you could duplicate profiles and use them to swap between focal lengths, like if you were recording sports on a manual zoom lens, just to name a purely-theoretical example that no-one would ever contemplate.... Better IBIS The GH5 IBIS is great, and when you shoot with fully manual primes and without a rig, is an important function. Having improved IBIS on the GH6 is very welcome and can really help salvage shots that wouldn't otherwise have made the cut. One of the killer features of action cameras is that you can put them anywhere and get a huge variety of images while out in the real world, and that works for MILCs too, but if you're balancing on a chair and shooting at full arms-reach to get a shot and the IBIS isn't able to take up the slack and stabilise it, then it won't make the edit. It doesn't matter how good the stabilisation of something is, there are instances where it will fail, and I seem to keep finding them. USB-C port The Prores and high bitrates will chew through storage and the ability to directly record to an SSD would be spectacular. Being able to charge the camera with USB-C would also be useful in some instances. In camera LUT support I'm yet to see how this is implemented but LUTs can be great references while shooting. You can use LUTs for things like false-colour, sure, but I'll be very interested in experimenting with ones that are very high contrast to allow for better visibility in bright conditions, and other more extreme applications that help you get the shot. 
I normally use the EVF on the GH5 as it eliminates ambient light and adds another point of contact for better stabilisation, but sometimes you have to use the screen and it's not always ideal, so these could be useful for that. Better colour science? The GH5 colour science wasn't winning any awards, and the GH6 combination of V-Log + Prores + higher bitrates + higher DR seems to be noticeably better, which is a welcome improvement for me, especially as I am struggling to shoot in difficult situations often involving mixed WB lighting and other nasties. The latitude tests from CineD look spectacular: This will allow huge flexibility for imperfect shooting conditions. Many times I pull up the shadows in an image (maybe I was trying to get the subject and the sky in the shot - shock horror) only to find the shadows a tinted awful mess. If they look like the above then it will be tremendously useful in practice. 4-channel audio recording with the XLR module This is slightly tempting for me. In post I want a stereo ambient sound, and I also want a directional audio from a shotgun mic, ideally with a safety-track. I can get either of those with the GH5 pretty easily by using the Rode Videomic Pro Plus with the safety-track feature enabled, and simply unplug it when I want a stereo ambience track using the cameras internal microphones (which are good enough if you're only using this in combination with music and other sound-design). But the problem is that I don't know when something will happen, so if I'm recording stereo ambient sound I don't know if my subject will say something at the same time that a car goes past behind me, and I also don't know if my subject will say nothing while I'm recording directional mono audio and then before I know it we'll be somewhere else and I'll have no ambient audio from that location. 
If this situation seems far-fetched, you've obviously never been on a tour bus in a third-world country before, where you might get dropped off near a small market, you then walk through the market with your group, where there is a stall every 5 steps with a different composition / lighting / and ambient sounds, and there is literally only room for people to walk single-file and you don't have time to stop at each interesting booth for a minute as you capture different compositions and audio options without losing your tour guide. Being able to stop for a few seconds, smile at the person there, and grab a few seconds of action with directional audio and ambient sounds would be really useful. I don't fancy the added size of the module though. Audio screen The GH6 has an audio button function that brings up an audio screen showing all the audio things you'd want. Perfect for quickly checking how the audio is going. With so much going on while shooting it's easy to forget to look at the meters OSD, and having everything in one place seems excellent. I've screwed up the audio on many occasions, so this will be quite useful. In summary.... The GH6 has tonnes of little improvements that I think will make it a much more useful camera when out in the field recording things in difficult conditions as they happen. It looks like Panasonic has done a great job in not just grabbing headlines, but in actually taking the things that niggle or limit real shooters and making them better. Now, if only the world would return to a state where I'd feel remotely comfortable wandering out into it with a camera in hand..... 4 points
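The "effective bitrate" figures in the codec comparison in the post above can be reproduced with a little arithmetic. This is only a sketch of how the numbers appear to be derived, assuming a 24p timeline and a constant recorded bitrate; the function name `effective_mbps` is made up for illustration.

```python
def effective_mbps(recorded_mbps: float, shot_fps: float, timeline_fps: float = 24.0) -> float:
    """Bitrate per second of playback once high-frame-rate footage is conformed.

    Conforming stretches each recorded second over shot_fps / timeline_fps
    seconds of playback, so the same bits are spread over more screen time.
    """
    return recorded_mbps * timeline_fps / shot_fps


# Figures quoted in the post (assumed constant bitrate, 24p timeline)
gh6_4k60 = effective_mbps(800, 60)        # GH6 4K60 on CFexpress
gh6_1080p240 = effective_mbps(800, 240)   # GH6 1080p240
gh5_vfr180 = effective_mbps(100, 180)     # GH5 1080p VFR at 180fps
```

Under those assumptions the GH5's 100Mbps VFR mode works out to roughly 13Mbps when conformed, which matches the "as low as 13Mbps" remark.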

-

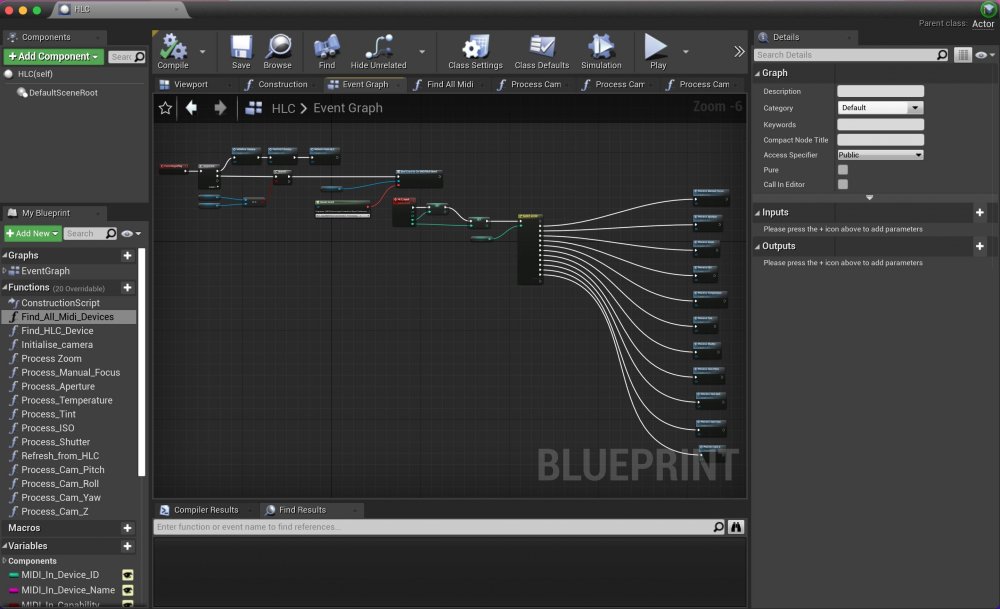

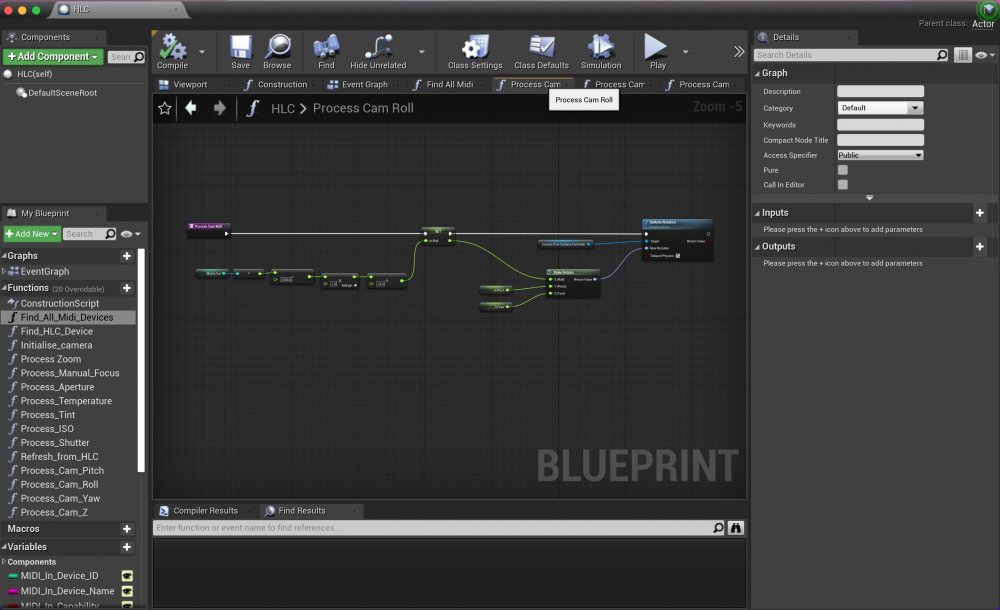

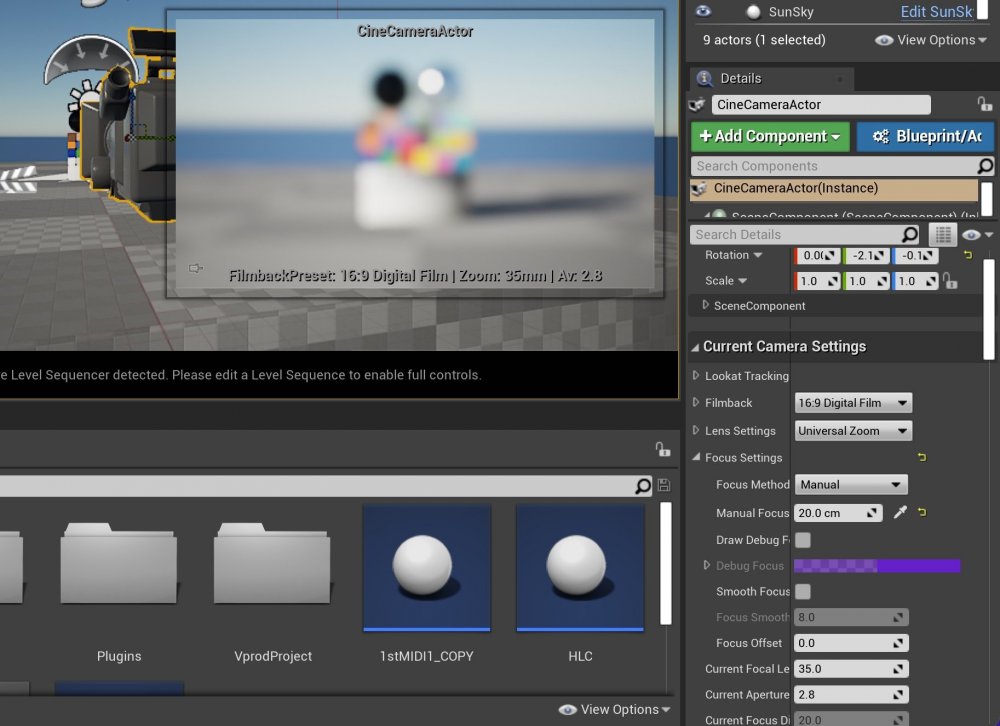

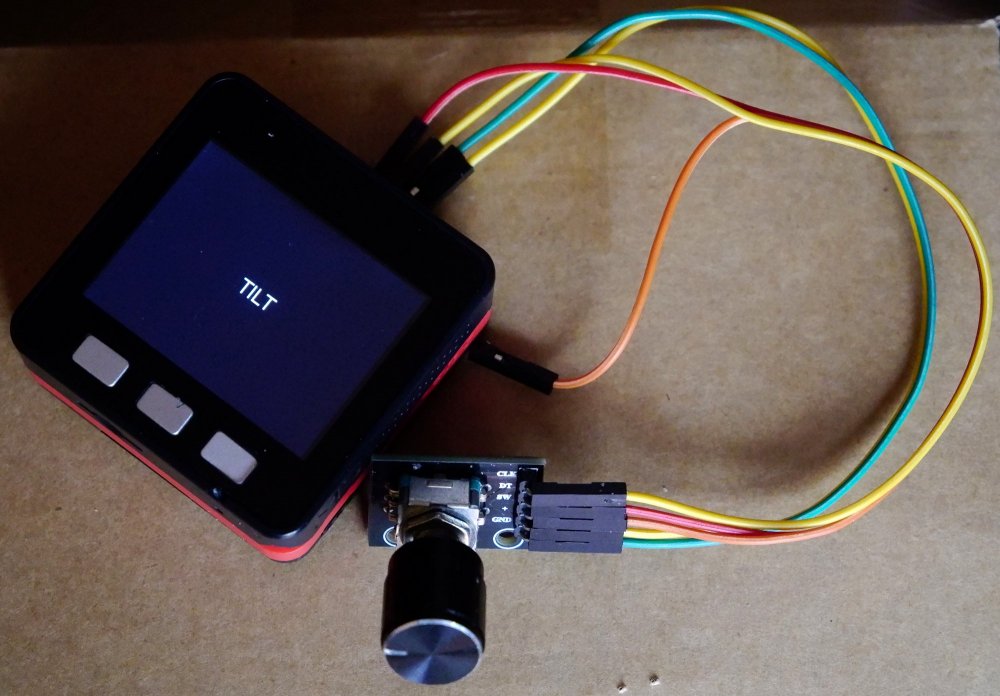

So....getting into the time machine and going back to where the journey was a few weeks ago. To recap, my interest in these initial steps is in exploring methods of externally controlling the virtual camera inside Unreal Engine rather than getting live video into it to place in the virtual world. To me, that is the bigger challenge as, although far from trivial, the live video and keying aspect is a known entity to me and the virtual camera control is the glue that will tie it together. For that, we need to get the data from the outside real world in terms of the camera position, orientation and image settings into Unreal Engine and then do the processing to act upon that and translate it to the virtual camera. To achieve this, Unreal Engine offers Plug Ins to get the data in and Blueprints to process it. The Plug Ins on offer for different types of data for Unreal Engine are numerous and range from those covering positional data from VR trackers to various protocols to handle lens position encoders, translators for PTZ camera systems and more generic protocols such as DMX and MIDI. Although it is obviously most closely associated with music, it is this final one that I decided to use. The first commercially available MIDI synth was the Sequential Prophet 600 which I bought (and still have!) a couple of years after it was released in 1982 and in the intervening four decades (yikes!) I have used MIDI for numerous projects outside of just music so it's not only a protocol that I'm very familiar with but it also offers a couple of advantages for this experimentation. The first is that, due to its age, it is a simple, well-documented protocol to work with and the second is that due to its ubiquity there are tons of cheaply available control surfaces that greatly help when you are prodding about. And I also happen to have quite a few lying around including these two, the Novation LaunchControl and the Behringer X-Touch Mini. 
The process here, then, is to connect either of these control surfaces to the computer running Unreal Engine and use the MIDI plug in to link their various rotary controls and/or switches to the operation of the virtual camera. Which brings us now to using Blueprints. In very simple terms, Blueprints allow the linking of elements within the virtual world to events both inside it and external. So, for example, I could create a Blueprint that when I pressed the letter 'L' on the keyboard could toggle the light on and off in a scene or if I pressed a key from 1 to 9 it could vary its intensity etc. For my purpose, I will be creating a Blueprint that effectively says "when you receive a value from pot number 6 of the LaunchControl then change the focal length on the virtual camera to match that value" and "when you receive a value from pot number 7 of the LaunchControl then change the focus distance on the virtual camera to match that value" and so on and so on. By defining those actions for each element of the virtual camera, we will then be able to operate all of its functions externally from the control surface in an intuitive manner. Blueprints are created using a visual scripting language made up of nodes that are connected together; it is accessible but contains enough options and full access to the internals to create deep functionality if required. This is the overview of the Blueprint that I created for our camera control. Essentially, what is happening here is that I have created a number of functions within the main Blueprint which are triggered by different events. The first function that is triggered on startup is this Find All MIDI Devices function that finds our MIDI controller and is shown in expanded form here. This function cycles through the attached MIDI controllers and lists them on the screen and then steps on to find our specific device that we have defined in another function. 
Once the MIDI device is found, the main Blueprint then processes incoming MIDI messages and conditionally distributes them to the various different functions that I have created for processing them and controlling the virtual camera such as this one for controlling the roll position of the camera. When the Blueprint is running, the values of the parameters of the virtual camera are then controlled by the MIDI controller in real time. This image shows the viewport of the virtual camera in the editor with its values changed by the MIDI controller. So far so good in theory but the MIDI protocol does present us with some challenges but also some opportunities. The first challenge is that the value for most "regular" parameters such as note on, control change and so on that you would use as a vehicle to transmit data only ranges from 0 to 127 and doesn't support fractional or negative numbers. If you look at the pots on the LaunchControl, you will see that they are regular pots with finite travel so if you rotate it all the way to the left it will output 0 and will progressively output a higher value until it reaches 127 when it is rotated all the way to the right. As many of the parameters that we will be controlling have much greater ranges (such as focus position) or have fractional values (such as f stop) then another solution is required. If you look at the pots on the X-Touch Mini, these are digital encoders so have infinite range as they only interpret relative movement rather than absolute values like the pots on the LaunchControl do. This enables them to take advantage of being mapped to MIDI events such as Pitch Bend which can have 16,384 values. If we use the X-Touch Mini, then, we can create the Blueprint in such a way that it either increments or decrements a value by a certain amount rather than absolute values, which takes care of parameters with much greater range than 0 to 127, those which have negative values and those which have fractional values. 
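As a rough illustration of the two approaches just described (absolute 0 to 127 pot values versus relative encoder steps), here is a minimal Python sketch. It is not the Blueprint itself; the names `scale_absolute` and `RelativeParam`, the parameter ranges and the step size are all assumptions made up for the example, and it assumes the encoder ticks have already been decoded into signed integers (how a given controller reports them varies).

```python
from dataclasses import dataclass

CC_MAX = 127  # largest value a MIDI control change data byte can carry


def scale_absolute(cc_value: int, lo: float, hi: float) -> float:
    """Map an absolute 0..127 pot value linearly onto the range [lo, hi]."""
    if not 0 <= cc_value <= CC_MAX:
        raise ValueError("MIDI data bytes must be in 0..127")
    return lo + (hi - lo) * cc_value / CC_MAX


@dataclass
class RelativeParam:
    """A camera parameter nudged up or down by encoder ticks."""
    value: float
    step: float   # change applied per tick (set per parameter)
    lo: float
    hi: float

    def nudge(self, ticks: int) -> float:
        """Apply signed ticks (+ clockwise, - anticlockwise), clamped to range."""
        self.value = min(self.hi, max(self.lo, self.value + ticks * self.step))
        return self.value


# Absolute pot: only 128 distinct focal lengths are reachable (hypothetical range)
focal_length = scale_absolute(64, 12.0, 120.0)

# Relative encoder: negative and fractional values are no problem
roll = RelativeParam(value=0.0, step=0.5, lo=-180.0, hi=180.0)
roll.nudge(-3)  # three ticks anticlockwise
```

The absolute version is simple but quantises the whole range into 128 positions, while the relative version trades that for a fixed step size per tick, which is exactly the compromise discussed next.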
Essentially we are using the encoders or switches on the X-Touch Mini as over-engineered plus and minus buttons with the extent of each increment/decrement set inside the Blueprint. Workable but not ideal and it also makes it trickier to determine a step size for making large stepped changes (think ISO100 to 200 to 400 to 640 is only four steps on your camera but a lot more if you have to go in increments of say 20) and of course every focus change would be a looooongg pull. There is also the aspect that, whilst perfectly fine for initial development, these two MIDI controllers are not only wired but also pretty big. As our longer term goal is for something to integrate with a camera, the requirement would quickly be for something far more compact, self powered and wireless. So, the solution I came up with was to lash together a development board and a rotary encoder and write some firmware that would make it a much smaller MIDI controller that would transmit to Unreal Engine over wireless Bluetooth MIDI. Not pretty but functional enough. To change through the different parameters to control, you just press the encoder in and it then changes to control that one. If that seems like less than real time in terms of camera control as you have to switch, then you are right it is but this form is not its end goal so the encoder is mainly for debugging and testing. The purpose of such a module is in how a camera will talk to it and how it then processes that data for onward transmission to Unreal Engine. So it's just an interface platform for other prototypes. In concert with the Blueprint, as we now control the format of the data being transmitted and how it is then interpreted, it gives us all the options we need to encode it and decode it so that we can fit what we need within its constraints and easily make the fractional numbers, negative numbers, large numbers that we will need. 
It also offers the capability of different degrees of incremental control as well as direct. But that's for another day. Meanwhile, here is a quick video of the output of it controlling the virtual camera in coarse incremental mode. 3 points
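One common way to fit fractional, negative or large values inside MIDI's 7-bit data bytes is to quantise the value to 14 bits and split it across two bytes, the same resolution Pitch Bend uses. The sketch below shows that general idea only; it is an assumption for illustration and not necessarily the encoding used in the firmware and Blueprint described here. The function names and the example ranges are hypothetical.

```python
MAX_14BIT = 16383  # 2**14 - 1, the largest value two 7-bit bytes can hold


def encode_14bit(value: float, lo: float, hi: float) -> tuple[int, int]:
    """Quantise value in [lo, hi] to 14 bits and split into (msb, lsb) 7-bit bytes."""
    if not lo <= value <= hi:
        raise ValueError("value outside the agreed range")
    raw = round((value - lo) / (hi - lo) * MAX_14BIT)
    return raw >> 7, raw & 0x7F


def decode_14bit(msb: int, lsb: int, lo: float, hi: float) -> float:
    """Rebuild the value on the receiving side from the two 7-bit bytes."""
    raw = (msb << 7) | lsb
    return lo + (hi - lo) * raw / MAX_14BIT


# Hypothetical ranges: roll in degrees and aperture in f-stops
roll_bytes = encode_14bit(-45.0, -180.0, 180.0)
fstop_bytes = encode_14bit(2.8, 0.7, 32.0)
fstop_back = decode_14bit(*fstop_bytes, 0.7, 32.0)
```

Both ends have to agree on the range for each parameter, which is workable here because the sender and the receiving logic are written by the same person.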

-

Also ProRes on GH6 seems to be much less image processing heavy so more organic image. No RAW tho. Overall a great looking camera, the main "issue" I have with it is price.. M43 was initially affordable (GH2 retailed at $900!). Obviously the features are night & day.. but this general camera trend of each new model costing more is kind of annoying. 2 points

-

The GH6 is a triumph of practical upgrades (especially for shooting travel)

Jimmy G and one other reacted to webrunner5 for a topic

Once you get spoiled with 12bit Raw files there is no going back LOL. 😬 Once you get the hang of editing them it is pretty easy to do, especially in Studio Resolve. 2 points -

In other news - I just purchased a C70, which I will be collecting on Monday 😍. 2 points

-

Somehow, or another, my YT feed ended up with some F3 videos on it and I was surprised to see how well the F3 uprezzes to 4K. This is the camera that just keeps on giving. 2 points

-

35mm photo film emulation - LUT design

TrueIndigo and one other reacted to hyalinejim for a topic

It's worth pointing out that there's no guarantee that the skintones and reds are accurate to what they are in reality, although they may be. I've long been a believer in colour that is better than, rather than faithful to, reality. However, the LUT gives colours that are faithful to a particular film stock, exposed and digitised in a specific way. And it may well be the case that skin and reds are quite close in hue to how they are in reality. But blues, to give another example, are considerably "off", such that skies will be rendered as very slightly but noticeably more towards green rather than purple. So perhaps the blue of the bottle or your father's clothing look a bit different to reality. In the LUT the hue, saturation and lightness of various colours will vary with respect to their colorimetric values in reality. And the relationship is not constant through the tonal range. Shadows, for example, are relatively saturated and highlights much less so. A lot of the skintones in this image are in the lower midtones and that gives the skin its robust colouration in this instance. I suppose part of the reason for this variance between realistic colour and filmic colour is simply because of the way negative film inherently works as a recording material. But another part of it is by design: conscious decisions that the engineers at Kodak made to design a film with specific responses. I think most people agree that, broadly speaking, the colour of film is beautiful. I certainly do, and that's why I'm going for it! It certainly is and, as @kye suggests, one way is to create a HLG to VLog transform. But as well as the curve, I'd assume the colour is different too. Another way is to create a LUT from scratch using a HLG chart shot. That would probably be more accurate. I don't have any plans to do that at the moment, though. I would have thought the vast majority shoot in VLog with only a handful of HLG crew? 
But I guess on the GH5 HLG gave many of the benefits of VLog without the added expense of the paid firmware update. 2 points -

Canon C70 User Experience

Django and one other reacted to herein2020 for a topic

Here is my first model promo video shot with the C70. @Django the DR really shows with the backlit direct sunlight shots, I did not use anything but daylight for this video. As far as stability, @kye about 80% of the video was shot handheld and I did not use any in camera digital stabilization. I still am not 100% convinced the C70 has more DR than the S5, if anything I would say they are even, but the AF is so good that even DR is good enough for me. The touch to track is a cool feature but too unreliable IMO, I don't have anything to compare it to (since I don't own the R5 or R6), but it definitely seems like it is easy to lose the tracking at which point the camera becomes unpredictable especially since I only get the center 60% of the coverage area with the speed booster attached. Overall, I am really glad I skipped the R5 and the R5C, those internal ND filters make all the difference here. Many times with the S5 I would just stop it down because there just wasn't time to pull out the variable ND. The C70 combined with the DJI Ronin RS2 is an awesome combination. The RS2 is so much lighter than the DJI Ronin S that the C70 on the RS2 feels like the same weight as the S5 on the Ronin S. I am able to keep my full Shape cage on the C70 and balance it without needing counter weights even with the Canon 24mm-105mm L lens, however I did need the Smallrig extending base because the C70 was too wide for the DJI base and the Smallrig Manfrotto base plate since the one that comes with the Ronin RS2 is pretty much useless. The 1TB V30 Sandisk Extreme PRO card is working out great as well, no problems so far, I just keep the V90 card in slot 2 for just in case. 2 points -

The GH6 is a triumph of practical upgrades (especially for shooting travel)

PannySVHS reacted to ade towell for a topic

I agree but guess it's all relative, the Canon R5 is £4300, GH6 way less than half that at £2000. Think this is a great camera and with the Olympus puts M43 back on the map and hopefully has a decent future. Many folks been writing M43 off but I feel it has a lot to offer. What would really be a statement of intent would be a proper cine/eng zoom with good range, take advantage of the M43 unique sensor size, has been crying out for one for years. 1 point -

Canon C70 User Experience

Mmmbeats reacted to herein2020 for a topic

Congrats, I am sure you will love it. Mine is great so far. That sounds about right....not enough difference to notice in real world shooting. I was aware of the 4K120p limitation, funny how I always thought 120FPS would be nice to have but now that I have it I doubt that I will use it much if at all. I still think the S5 might be better in low light due to the FF sensor and dual native ISO, that 4000ISO was incredibly useful; I haven't had a low light shoot yet so that's the last thing I need to test. I do think that the C70 and CLOG2 is easier to grade right out of camera and easier to expose than VLOG. I just set the exposure for the WFM so that the highlights touch 80 for outdoors shoots. If the sun is in the frame I let that peak hit 100. The video was shot at 60fps and output at 30fps. I don't like the motion blur from 24fps so I never use that frame rate. 1 point -

The GH6 is a triumph of practical upgrades (especially for shooting travel)

Jimmy G reacted to webrunner5 for a topic

I bought my Resolve Studio 17 for $145.00 on ebay. Lots of people sell them when they buy the Blackmagic controller. It's just a credit card looking thing you get now. 1 point -

1 point

-

A solid indie budget A-cam and a viable b-cam to other workhorse cameras. The improvements to the image alone get it in the territory of being taken seriously, especially the DR and color improvements. That's what folks have to pay attention to. I believe the veydra/meike lenses were absolutely made for this camera and its design/function ethos. No other mount has cinema glass that small. For the fluid small body run-n-gun style of shooting that benefits from phase detect af, pick up the OM-1 or earlier Olympus releases instead. 1 point

-

The video is purely a prototyping illustration of how parameter changes are transmitted to the virtual camera from an external source, rather than a demonstration of performance, hence the coarse parameter changes from the encoder. So it is purely a communication hub that sits within a far more comprehensive system, one that takes dynamic input from a positional system but also direct input from the physical camera itself in terms of focus position, focal length, aperture, white balance etc. As a tracking-only option, the Virtual Plugin is very good though, but, yes, my goal is not currently the actual end use of the virtual camera. A spoiler alert, though: what you see here is a description of where I was quite a few weeks ago 😉

-

My Journey To Virtual Production

majoraxis reacted to KnightsFan for a topic

I have a control surface I made for various software. I have a couple of rotary encoders just like the one you have, which I use for adjusting selections, but I got a higher-resolution one (LPD-3806) for finer controls, like rotating objects or controlling automation curves. Just like you said, having infinite scrolling is imperative for flexible control. I recommend still passing raw data from the dev board to the PC, and using desktop software to interpret the raw data. It's much faster to iterate, and you have much more CPU power and memory available. I wrote an app that receives the raw data from my control surface over USB, then transmits messages out to the controlled software using OSC. I like OSC better than MIDI because you aren't limited to low-resolution 7-bit values; you can send float or even string values. Plus OSC is much more explicit about port numbers, at least in the implementations I've used. But having desktop software interpreting everything was a game changer for me compared to sending MIDI directly from the Arduino.
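To make the OSC point concrete, here is a minimal sketch of the desktop-side step described above: take a raw encoder count, turn it into a float, and pack it as an OSC message using only the Python standard library. This is not the poster's app. The address `/knob/1`, the `counts_per_rev` value, and the host and port are all hypothetical placeholders. For comparison, a standard MIDI control change carries a 7-bit value (0 to 127), while an OSC float argument is a 32-bit big-endian float.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")


def osc_message(address: str, value) -> bytes:
    """Build an OSC message carrying a single float or string argument."""
    if isinstance(value, float):
        tag, payload = b",f", struct.pack(">f", value)  # 32-bit big-endian float
    elif isinstance(value, str):
        tag, payload = b",s", osc_pad(value.encode("ascii"))
    else:
        raise TypeError("this sketch only handles float and str arguments")
    return osc_pad(address.encode("ascii")) + osc_pad(tag) + payload


def counts_to_turns(count: int, counts_per_rev: int) -> float:
    """Interpret a raw encoder count as (possibly fractional) turns.

    counts_per_rev depends on the encoder and decoding mode; the value
    used in the example below is an assumption, not a datasheet figure.
    """
    return count / counts_per_rev


def send_osc(message: bytes, host: str, port: int) -> None:
    """Send one OSC message over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


if __name__ == "__main__":
    turns = counts_to_turns(1200, counts_per_rev=2400)  # hypothetical numbers
    send_osc(osc_message("/knob/1", turns), "127.0.0.1", 9000)  # hypothetical target
```

Keeping the interpretation on the PC means the dev board only has to stream counts; scaling, smoothing, and address mapping can all be changed without reflashing it.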

I'm excited and looking forward to it! I've been cutting my teeth on FCP, but I haven't ruled out having another NLE at my disposal along the way. Of particular interest to me are the video scopes in Resolve, as demonstrated by professional colorist Darren Mostyn here... How to use resolve SCOPES - In-depth with a Pro Colourist - YouTube ...I'd wet myself to have that CIE Chromaticity scope in Final Cut! Ha! It truly helps one see where their colors are in relation to the gamuts they shot in and the ones they are targeting, super useful. Too bad it's only in the paid version of Resolve! Hmm, maybe someone knows of a plugin?

-

I know very well that you are a very good programmer and surely you are trying to make your own system to control the Unreal camera, but after seeing the example in the video, I wonder if it would not be much more convenient to use a ready-made app, such as the Virtual Plugin that I used in my tests... You can control different parameters such as movement and focus, you can move around the world with virtual joysticks as well as being able to walk, you can lock the axes at will, etc. Try giving it a look; there is also a demo that can be requested via email... https://www.unrealengine.com/marketplace/en-US/product/virtual-plugin

-

The GH6 is a triumph of practical upgrades (especially for shooting travel)

kye reacted to webrunner5 for a topic

The color transformation tool in it is just super dope. You don't need LUTs anymore with it. Put that function in the last node, not the first.

Hi Kye, wow, what an in-depth look into this camera... methinks you're very interested, to the point of becoming a future owner?! 😉 I'll restrict my points to "output quality", as each of the internal mechanical aspects and features of the camera will appeal (or not) differently to individual users. To that end, you've covered quite nicely (and better than I have been able to) the need for wider dynamic range when shooting outdoor environments... the f-stop range on a typical sunny day is just too much for 8-bit Rec709 to contend with for those of us who need/desire/require that our frame include everything from the brightest clouds and specular highlights to the detail and vibrancy going on in the shaded regions. Our eyes capture all of that, and I have never been ashamed to ask for and seek out equipment that gets me closer to being able to capture that same natural view. I laugh every time I hear/read someone say that this is niche... no more niche than what I came to expect from my old love, Kodachrome 25! LOL Getting to understand how HDR and 10-, 12- (14-, and 16-) bit imaging can help "get me there" has been a challenge up to now... hard for any of us to realize the benefits of these things when viewed on SDR monitors! Ha! Enter my new M1 Max MacBook Pro with its HDR panel. I've devoted this year to finally educating myself on what is possible (and desirable) with the 10-bit footage I've been accumulating with my S1 for the past few years... in fact, I've decided to hunt down a used Ninja V just so I can become familiar with 12-bit ProRes RAW and learn what it might and might not be "most practical for" in my style of nature shooting.
Clearly, not every situation calls for such bit-depth or even DR, and learning those lessons through shooting will help educate and inform me as to when varying codec choices are called for... all the more reason for my wanting it all internal to the camera (GH6 and future releases): call up a Custom setting and off one goes, er, shoots, no fuss no muss (f*** external recorders, really, I mean that from the bottom of my heart, Panasonic). Anyhoo, I thought this article and the accompanying two videos would be of particular interest to you (and to all our fellow folks trying to figure out the whens and whys and needs for HDR and high bit-depth)... a two-part interview with Kevin Shaw, CSI and founder of the International Colorist Academy (ICA), where he demonstrates where and how the benefits of HDR acquisition come into play... Is 2022 the Year You Buy Into HDR? - Frame.io Insider https://blog.frame.io/2022/01/17/hdr-in-2022/ ...a college-level education from someone who knows. 😉

-

You are both correct, as this is just a different way of describing the apparent DoF. @herein2020 is technically correct (yes, yes everybody - 'the best kind of correct' 😉), and @M_Williams is correct for practical purposes. DoF is in fact the same whether using the speedbooster or not. However, in order to achieve the same framing on the 50mm lens example you would have to alter the distance between the camera and the subject, thus altering the DoF. I much prefer herein2020's way of stating it, but I have come to acknowledge that endlessly explaining this introduces just as much confusion as it clears up.

-

Feck me, Vimeo sucks now!

webrunner5 reacted to BenEricson for a topic

Vimeo is still the go-to pro portfolio site. YouTube can't cut it with the ads and related videos you get. I can't have someone watching an ad before a video. On top of that, the quality is just better. YouTube goes to mush, especially fine details in film, grain, noise, etc. Even you know that is an exaggerated statement. I guess we can all agree that YouTube is camera reviews and covid conspiracies. 🙂

Feck me, Vimeo sucks now!

webrunner5 reacted to jgharding for a topic

I cancelled my paid Vimeo a year back as I wasn't using it and YouTube provides a better service for free. I don't really see a future for Vimeo TBH. From the poor playback performance to their summary deletion of videos, there's nothing in their "offer" that keeps me there. I've downloaded all my old videos and will likely delete the account so it is one less to think about. At one point when I was working in Soho everyone wanted to be a "Vimeo Staff Pick", but it very quickly became a self-parody of fart-sniffing, self-congratulatory hipness. Now it's just irrelevant TBH.

Thanks for that panel discussion link, truly informative! The question that I most wanted answered was whether or not the USB-C port will be able to provide both power and data transfer simultaneously (e.g. power the unit while recording RAW to an SSD). At the 55:50 mark Panasonic's Matt Fraser goes to check it out with the camera he has, but the subject never gets revisited in the video. If it does turn out to be possible, then I would hope that either Panasonic or some 3rd-party folks might also be working on some sort of SSD/battery combo device to fill that need. Maybe something in a battery grip design with a short USB-C cable that will both record from and power the unit at the same time?! To muse further... had they added an HDR rear display (à la Atomos, Blackmagic, etc.), it would then have negated the need for a cumbersome Ninja or Video Assist for monitoring (?), and would make for a tidy and stealthy package for us folks who like to and need to travel as light as possible! Regardless of my hopeful ramblings... the camera is shaping up to be a 10-bit internal recording beast. It will be nice to stretch my creative impulses with natural-lighting possibilities on location, with the extra DR and bit-depth, and see how it helps out in those brutal outdoor imaging and lighting environments (i.e. shooting into the sun or against excessive specular highlights, etc.). Fingers still crossed on the whole internal RAW thing (à la Nikon, Canon, etc.) coming to be for this camera. 🙂

-


But they are factory-ready for shooting video. Just not with a 180-degree shutter. Cell phones, action cameras, dashcams etc. all lack NDs; do they not shoot video? Ugly video is still video. I'm not sure we'll ever see hybrid cameras come with built-in NDs outside of a few models that are very video-biased, in the vein of an S1H, GH6, etc. It just adds too much bulk to keep the traditional photo-camera form factor. You have to have room for an assembly a bit wider and taller than the sensor to completely slide/rotate/flip out of the way. Ideally you'd have a clear replacement to keep the backfocus to the sensor consistent. And this is competing for space with IBIS and mechanical shutter mechanisms. If global shutters ever happen without DR or sensitivity compromises, that would clear up some room I guess. You could use a fixed E-ND that doesn't need to move, but then you're always losing whatever its minimum setting is... a stop, two stops? Low-light sensor performance hasn't improved enough lately to absorb that difference and stay competitive. IBIS is too valuable to photographers (and many videographers including myself) to trade it for ND. Adding an ND to my lens or lens adapter is massively easier than adding and using a gimbal.
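For anyone wondering how much ND the 180-degree shutter actually costs, the arithmetic is short. The sketch below is only an illustration: it assumes aperture and ISO stay fixed, and the metered shutter speed in the example is a made-up figure, not a measurement from any camera.

```python
import math


def shutter_time(fps: float, shutter_angle: float = 180.0) -> float:
    """Exposure time in seconds for a given frame rate and shutter angle."""
    return (shutter_angle / 360.0) / fps


def nd_stops_needed(metered_time: float, target_time: float) -> float:
    """Stops of ND needed to lengthen the exposure from metered_time to
    target_time with aperture and ISO unchanged (0 if none are needed)."""
    return max(0.0, math.log2(target_time / metered_time))


if __name__ == "__main__":
    target = shutter_time(24)   # 180 degrees at 24fps is 1/48 s
    metered = 1 / 3200          # hypothetical bright-daylight reading
    print(f"target shutter: 1/{1 / target:.0f} s")
    print(f"ND required: {nd_stops_needed(metered, target):.1f} stops")
```

The same function shows the cost of a fixed E-ND: whatever its minimum density is gets subtracted from the light available in every shot, including the low-light ones.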

-

You probably want something like this...

-

@Gianluca - Fantastic! I am wondering if it would be possible to film a virtual background behind the actor, say a 10' x 5' short-throw projected image, and then extend the virtual background in sync with the computer-generated projected background being recorded behind the actor. Kind of like in the screen grab from your video that reveals grass, house, bush and trampoline. If the physical background in this shot was the projected image behind the actor, and the Minecraft extended background blended seamlessly with the small section of projected background being recorded along with the actor, that would be super interesting to me, as I am putting together a projected background. I am hoping to combine the best of both worlds and I am wondering where that line is. Maybe there are still some benefits to shooting an actor with a cine lens on a projected background for aesthetic/artistic reasons? Maybe not? Thanks! (I hope it's OK that I took a screen grab of your video to illustrate my point. If not, please ask Andrew to remove it. That's of course fine with me.)

-

My Journey To Virtual Production

KnightsFan reacted to Gianluca for a topic

While I am learning to import worlds into Unreal, learning to use the sequencer, etc., I am also doing some tests, which I would call extreme, to understand how far I can go... I would call this test a failure, but I have already seen that with fairly homogeneous backgrounds, with the subject close enough and in focus (here I used a manual focus lens), and if I do not have to frame the feet (in those cases I will use the tripod), it is possible to drop the subject into the virtual world quite easily and realistically. The secret is to shoot many small scenes, with the values fed to the script optimized for each particular scene. Next time I'll try to post something better made.

Feck me, Vimeo sucks now!

BenEricson reacted to mercer for a topic

Yeah, the site is annoying now, but the videos still look better than YouTube. FYI, you can refresh the screen instead of logging in and it will allow you to watch them. What annoys me the most is that when you look at a user's videos, they don't list them from newest to oldest and they don't tell you the upload date unless you click on it.

Holy crap. Vimeo is starting to become unpleasant to use. I dislike having to log in to watch certain so-called unrated videos. Staff picks don't fit my bill either, with their hipster-oriented nowness videos. Social struggles don't come in pretty corporate colours.

-

Thanks! Those streaks are from the star filter that we used (for the pool, concert and club scenes). We took the Ferrari for a little spin!

-

Prores is irrelevant, and also spectacular!

HockeyFan12 reacted to kye for a topic

True, but I'm not sure what you were referring to? I've heard that most professional productions shoot Prores rather than RAW, and that RAW is typically only used by the big-budget productions and by amateurs. I'm keen to hear more about that. It makes sense to me, but my experience of the internet camera ecosystem (YT, social media, forums like this) and also of most solo operators is that they're completely insulated from the industry proper, who interact in parallel discussions like liftgammagain.com, the CML mailing list, etc., and who have very different working methods and mindsets on the whole topic of making films.

My Journey To Virtual Production

KnightsFan reacted to Gianluca for a topic

Sorry if I post my tests so often, but honestly I've never seen anything like it, and for a month I also paid 15 euros for runwayml, which in comparison is unwatchable. We are probably not yet at professional levels, but by retouching the mask a little bit in Fusion, in my opinion we are almost there... I am really amazed by what you can do...

My Journey To Virtual Production

KnightsFan reacted to Gianluca for a topic

From the same developers as the Python software that gives you a green screen (and a matte) from a tripod shot plus a clean plate of the background without the subject, I found this other one. It's called RVM, and it lets you do the same thing even with moving shots, and starting directly with the subject in the frame. For static shots the former is still much superior, but this one has its advantages when used in the best conditions. With a well-lit subject and a solid background, it can replace a green background. Or, with a green or blue background, you can get a perfect subject even if it only just stands out from the background, or even if the clothes she is wearing contain green or blue. Now I am really ready to shoot small scenes in Unreal Engine, and I can also move slightly within the world itself thanks to the Virtual Plugin and these Python tools. The problem is that the actor has no intention of collaborating...
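For readers new to mattes, this is roughly what happens once a tool has produced one: each output pixel is a blend of the subject frame and the virtual background, weighted by the matte value. The sketch below is a generic illustration in plain Python and is not RVM's API or the poster's script. The function names are hypothetical, and images are assumed to be nested lists of RGB tuples with a matte of floats between 0.0 and 1.0.

```python
def composite_pixel(fg, bg, alpha):
    """Blend one RGB pixel: alpha=1.0 keeps the subject, 0.0 shows the background."""
    return tuple(round(alpha * f + (1.0 - alpha) * b) for f, b in zip(fg, bg))


def composite(fg_img, bg_img, matte):
    """Composite a foreground frame over a background frame using a matte.

    All three inputs are assumed to share the same height and width.
    """
    return [
        [composite_pixel(f, b, a) for f, b, a in zip(fg_row, bg_row, matte_row)]
        for fg_row, bg_row, matte_row in zip(fg_img, bg_img, matte)
    ]


if __name__ == "__main__":
    subject = [[(200, 100, 0), (200, 100, 0)]]   # 1x2 frame of the actor
    virtual = [[(0, 100, 200), (0, 100, 200)]]   # 1x2 frame from the virtual world
    matte = [[1.0, 0.5]]                         # solid subject, then a soft edge
    print(composite(subject, virtual, matte))
```

The soft-edge values between 0 and 1 are where hair and motion blur live, which is why retouching the matte tends to matter more than the keying step itself.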