Leaderboard

Popular Content

Showing content with the highest reputation on 11/22/2023 in all areas

-

Sorry to hear about your medical issues and great to hear you are on the road to recovery. I echo your thoughts about this place. My last few weeks have been spent at my mother’s bedside as she drifted away to her passing. In times like that, of huge change and trauma, we look for constants to keep us connected to “normal” life. In that respect, checking in here every now and again to find everyone having the same circular arguments was oddly comforting. And I honestly mean that with love.

4 points

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

John Matthews and one other reacted to newfoundmass for a topic

An f/2 lens will give you an extra stop of light over an f/2.8. That can be quite handy when you're a wedding or event shooter. I suspect that's the primary reason why he'd want it.

2 points

-
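As a rough check on the f/2 versus f/2.8 point above, the difference in stops can be worked out from the f-numbers, since the light passed by the aperture scales with the inverse square of the f-number. This is only a minimal sketch: the function name `stops_between` is made up for illustration, and it uses the nominal marked f-numbers (2.8 is a rounded label for roughly 2.83).

```python
import math


def stops_between(faster_f_number: float, slower_f_number: float) -> float:
    """Return how many stops more light the faster lens passes.

    Light through the aperture is proportional to 1 / N^2, so the
    difference in stops is log2((N_slow / N_fast)^2),
    which equals 2 * log2(N_slow / N_fast).
    """
    if faster_f_number <= 0 or slower_f_number <= 0:
        raise ValueError("f-numbers must be positive")
    return 2 * math.log2(slower_f_number / faster_f_number)


# Nominal f/2 vs f/2.8: very close to one full stop.
print(round(stops_between(2.0, 2.8), 2))
```

With the nominal values the result lands just under one stop, which is consistent with the "extra stop" rule of thumb.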

New Insta360 action camera on 21st Nov

eatstoomuchjam and one other reacted to kye for a topic

What do you mean? It looks flawless to me!!! 😂😂😂 Doing it in post in Resolve looks like it might be a mature enough solution now, but I can't imagine that devices will be good enough to do it real-time for quite a few years. I started a separate thread about this semi-recently: https://www.eoshd.com/comments/topic/78618-motion-blur-in-post-looks-like-it-might-be-feasible-now/

2 points

-

New Insta360 action camera on 21st Nov

FHDcrew and one other reacted to eatstoomuchjam for a topic

I didn't catch that the videos are only 8-bit (he mentioned it partway through). That's a real bummer. Also, the fake motion blur that was shown near the end looks bad.

2 points

-

2024 - A "No Gear" Year

John Matthews reacted to QuickHitRecord for a topic

I have been spending too much money on equipment in recent years, and WAY too much time researching it. I really don't need anything else in terms of kit. What I do need is to replace my wardrobe and make some repairs to my home and my vehicle. Also, I'd like to spend more time producing passion projects. So, I'm making a commitment that I will not buy any new gear between November 30th, 2023 and November 29th, 2024 (got to give myself the option to take advantage of Black Friday deals). If I need a specialty piece of gear, I will rent it. The exceptions are expendables and direct replacements, should anything I'm using break down. I've never done this before and I'm posting this to help keep myself accountable.

1 point

-

Help me decide: Canon C300 Mark III or Sony FX9

gt3rs reacted to Jedi Master for a topic

Several issues with photo lenses:

- Most have focus-by-wire, which I find extremely annoying.
- Most have very short focus throws.
- Most have no aperture rings and can only set aperture electronically.
- Most have lots of plastic and aren't as robust as cine lenses.
- None have 0.8 MOD focus and aperture gearing.
- Most don't have consistent front diameters across the line.
- Little correction for focus breathing.

1 point

-

2024 - A "No Gear" Year

kye reacted to Tim Sewell for a topic

My Sony Walkman from 1986 would like a word with you on that one.

1 point

-

Yes, lots of stuff is done in-camera and cannot be un-done, so it's just a case of trying all the tricks we have and seeing how far we get. If you do a custom WB on the G9ii and GH6 on the same scene, does it remove the differences in WB between them?

1 point

-

OK, I'm convinced: in-camera AI motion blur is not usable... In Resolve it's already a bit hit and miss, but this example is really not good at all.

1 point

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

John Matthews reacted to ac6000cw for a topic

I'd also love them to do 1), but I can imagine some of the YouTube influencer/reviewer comments:

- It's expensive
- It overheats recording 4k60p after 10 minutes
- If it's really small and light - "There's no IBIS"
- If it's got IBIS - "It's not small enough and it's too heavy"
- The battery life is too short
- It doesn't have a fully articulated/flip out screen
- There's no headphone jack

Most of the above list would not actually be issues for the vast majority of potential buyers if it was cheap enough, but it would be a hard sell if it's almost as expensive as a G9ii. Maybe the answer would be to market it as a Leica camera?

1 point

-

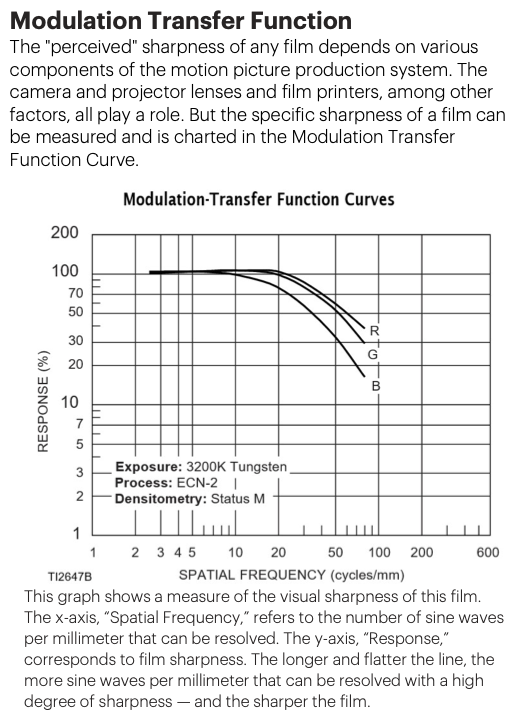

I was being a bit provocative, mostly just to challenge the blind pursuit of specifications over anything actually meaningful, which unfortunately is a bit like trying to hold back the ocean. I have seen a lot of footage from the UMP12K and the image truly is lovely, that's for sure. Especially looking at the videos Matteo Bertoli shoots with the UMP12K and the BMPCC6K, because it's the same person shooting and grading both so the comparison has far fewer external factors, the 12K has a certain look that the P6K doesn't quite reach. The P6K also produces a great image, so it's a high standard to beat. The idea of massively oversampling is a valid one, and I guess it depends on the aesthetic you're attempting to create. In a professional situation having a RAW 12K image is a very very neutral starting position in today's context. I say "in today's context" because since we went to digital, the fundamental nature of resolution has changed. In film, when you exposed it to a series of lines of decreasing size (and therefore higher frequencies), at a certain point the contrast starts to decrease as the frequency rises, to the point where the contrast becomes indistinguishable. The MTF curve of film shows a slope down as frequency goes up. In digital, the MTF curve is flat until aliasing kicks in, where it might dip up and down a bit and then it will fall off a cliff when the frequency reaches half the sampling rate (one cycle per two pixels). In audio this would be the Nyquist frequency, and OLPFs are designed to make this a nicer transition from full contrast to zero contrast. While there is no right and wrong, this type of response is decidedly unnatural - virtually nothing in the physical world operates like this, which I believe is one of the reasons that the digital look is so distinctive.
The resolution at which the contrast starts to decrease on Kodak 500T is somewhere around 500-1000 pixels across, so the difference in contrast on detail (otherwise called 'sharpness') is significant by the time you get to 4K and up. So to have a 12K RAW image is to have pixels that are significantly smaller than human perception (by a looong way) so in a sense it takes the OLPF and moire and associated effects, of "the grid" as you say, out of the equation, but it also creates an unnatural MTF / frequency response curve. In professional circles, this flat MTF curve would be softened by filters, the lens, and then by the colourist. If you look at how cinematographers choose lenses, their resolution-limiting characteristics are often a significantly desirable trait in guiding these decisions. Going in the opposite direction, away from very high resolutions with deliberately limited MTF properties that Hollywood normally chooses, we have the low resolutions which limit MTF in their own ways. For example, a native 1080p sensor won't appear as sharp as a 1080p image downsampled from a higher resolution source. 1080p is around the limits of human vision in normal viewing conditions (cinemas, TVs, phones). In a practical sense, when people these days are filming at resolutions around 1080p, MTF control from filters and un-sharpening in post is normally absent, and even most budget lenses are sharper than 1080p (2MP!) so this needs some active treatment to knock the digital edge off things. The other challenge is that these images are likely to be sharpened and compressed in-camera, so will have digital looking artefacts to deal with; these are often best un-sharpened too as they are often related to the pixel-size. 4K is perhaps the worst of all worlds. It isn't enough resolution to be significantly greater than human vision and have no grid effects, but also has a flat MTF curve that extends waaay further than appears natural.
Folks who are obsessed with the resolution of their camera are also more likely to resist softening the MTF curve, so are essentially pushing everything into the digital realm and having the image resemble the physical world the least. I find that "cinematic" videos on YT shot in 4K are the most digital / least analog / least cinematic images, with those shot in 1080p normally being better, and with the ones shot in 6K or greater being the best (because up until recently those were limited to people who understand that sharpness and sharpening aren't infinitely desirable). The advantage that 4K has over 1080p is that the compression artefacts from poor codecs tend to be smaller, and are therefore less visually offensive and more easily obscured by un-sharpening in post. Ironically, a flat MTF curve is just like if you filmed with ultra-low-noise film and then performed a massive sharpening operation on it. The resulting MTF curve is the same. I'm happy to provide more info if you're curious. I've written lots of posts around various aspects of this subject. Yep, massively overenthusiastic amateur here. I mostly limit myself to speaking about things that I have personal experience with, but I work really hard behind the scenes, shooting my own tests, reading books, doing paid courses, and asking questions and listening to professionals. I challenge myself regularly, fact-check myself before making statements in posts, and have done dozens / hundreds of camera and lens tests to try and isolate various aspects of the image and how it works. I have qualifications and deep experience in some relevant fields. I also have a pretty good testing setup, do blind tests on myself using it, and (sadly!) rank cameras in blind test by increasing order of cost! 😂😂😂 I'm happy to be questioned, as normally I have either tested something myself, or can provide references, or both. 
Sadly, most people don't have the interest, attention span, or capacity to go deep on these things, so I try and make things as brief as possible, so they end up sounding like wild statements unless you already understand the topic. Unlike many professionals, I manage the whole production pipeline from beginning to end and have developed understandings of things that span departments and often fall through the cracks or involve changing something in one part of the pipeline and compensating for it at a later point in the image pipeline. Anything that spans several departments would rarely be tested except on large budget productions where the cinematographer is able to shoot tests and then work with a large post house, which unfortunately is the exception rather than the norm. Ironically, because I shoot with massively compromised equipment in very challenging situations, I work harder than most to extract the most from my equipment by pushing it to breaking and beyond and trying to salvage things. Professional colourists are, unfortunately, used to dealing with very compromised footage from lower-end productions, but they are rarely consulted before production to give tips on how to maximise things and prevent issues.

1 point
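To put rough numbers on the Nyquist point made earlier in this post, here is a small sketch of the highest spatial frequency a pixel grid can represent, which is one cycle per two pixels. The helper name and the frame widths are assumptions for illustration (1920 for 1080p, 3840 for UHD 4K, 12288 for a 12K frame), not figures taken from the post.

```python
def nyquist_cycles_per_picture_width(width_px: int) -> float:
    """Highest representable spatial frequency for a row of pixels.

    One full cycle (a light line plus a dark line) needs at least two
    pixels, so the limit is half the number of pixels across the frame.
    """
    if width_px <= 0:
        raise ValueError("width must be a positive pixel count")
    return width_px / 2


# Assumed frame widths, used only to illustrate the scale of the limits.
ASSUMED_WIDTHS = {"1080p": 1920, "UHD 4K": 3840, "12K": 12288}

for name, width in ASSUMED_WIDTHS.items():
    limit = nyquist_cycles_per_picture_width(width)
    print(f"{name}: {limit:.0f} cycles per picture width")
```

This only describes the sampling limit of the grid; it says nothing about how lenses, OLPFs, or in-camera processing shape contrast below that limit.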

-

Ahah, no, of course it is on the original files, I never pixel peep on converted files. I don't like the digital look at all, like on a smartphone (when everything is oversharpened), but I don't like digital processing making the image artificially soft or sharp either. I sometimes need to keep all the fine details for my videos. I don't want them to have digital sharpening, I just want the same details as a raw photo without any digital processing. If I really need a soft-looking image, I think the 1080P of my GH5 with a bit of un-sharpen is perfect, no need for a 4K or 6K camera. If I un-sharpen a video file that looks like a raw photo, as on the GH5, the result is good, because all the details are there. But when I un-sharpen a file like the GH6's, it just doesn't look as good in my opinion, especially in low light; it looks more as if the image is upscaled. My S5II also has bad processing going on internally compared to previous S cameras: bad edge sharpening even in V-log, making the fine details less thin, and also chroma noise reduction that is more or less significant depending on the profile used. Of course, most of the time and depending on your screen, you need to crop in order to see the difference. Digital processing like the Intelligent Detail Filter on the GH6 can certainly help with moiré, but nothing comes close to an OLPF like on the S1H for the nicest image quality. I even prefer to use my GH5 in low light compared to the GH6 below 1600 ISO. Sure, the GH6 is cleaner, but something just looks off about the fine detail rendering. Of course, downscaling the file to 1080P or viewing it on a small screen doesn't show a big difference between the two cameras. Again, it's really when cropping that we can see that the detail rendering is not the same. But it is not so simple, because the colors of the GH6 are really really nice. I just love their new 25MP M43 sensor, it has natural and rich tones in video (and photos of course), nicer than on my S5II IMHO.
The last time I loved the colors of a camera as much was with the Samsung NX1. My G9II has more or less the same colors as the GH6, but on the cooler side, and more magenta overall. Nothing that can't be fixed in post though.

1 point

-

Have you considered a C500 MK2? 6K, internal ND, great low-light performance, great latitude and good dynamic range, internal RAW and 10-bit capability. Pretty well designed package that works great for building up or building down, good battery life. To me, IQ/usability wise it would be the C500 MK2 or the RED Monstro. In that price range they are the top performers. With the Monstro you have to deal with 8K but you have tons of compression options. You're getting better dynamic range and color depth over the C500. The only downsides are no internal NDs, probably more expensive media (redmags), and higher power consumption (though not terrible). I see some mentions of the S1H. I think it's a great bang for your buck camera. Great battery life, great sensor. They sell used now for incredibly cheap. Downsides are that the HDMI out latency is terrible, the rolling shutter is slow, there's no internal 12-bit/RAW, and color isn't the greatest compared to higher end cameras. Again, you can get great images out of it and for a hobby camera it would excel. If you are looking for a more high end experience I just don't think that would be it. In terms of value it is incredible though. I might also suggest an ARRI Alexa XT, but that might be too far into the too heavy spectrum. It's quite a beast.

1 point

-

Additionally, it's easy to look at residual noise on the timeline and turn up our noses, but by the time you have exported the video from your NLE, then it's been uploaded to the streaming platform, then they have done goodness-knows-what to it before recompressing it to razor-thin bitrates, much of what we were seeing on the timeline is gone. The "what is acceptable and what is visible" discussion needs to be shifted to what is visible in the final stream. Anything visible upstream that isn't visible to the viewer is a distraction IMHO.

1 point

-

I'm actually not that fussed by 8-bit video anymore, assuming you know how to use colour management in your grades. If you are shooting 8-bit in a 709 profile you can transform from rec709 to a decent working space, grade in there, then convert back to 709. Assuming the 709 profile is relatively neutral, this gives almost perfect ability to make significant exposure / WB changes in post, and by grading in a decent working space (RCM, ACES) all the grading tools work the same as with any other footage. The fact you're going from 8-bit 709 capture to 8-bit 709 delivery means that the bit-depth is mostly kept in the same place and therefore doesn't get stretched too much. The challenge is when you're capturing 8-bit in a LOG space, or a very flat space. This is what I faced when recording my XC10 in 4K 300Mbps 8-bit in C-Log. I have spoken in great detail about this in another thread. This was a real challenge and forced me to explore and learn proper texture management. Texture management isn't spoken about much online, but it includes things like NR (temporal, spatial), glow effects, halation effects, sharpening / un-sharpening, grain, etc. I found with the low-contrast 8-bit C-Log shots from the XC10, that by the time I applied very modest amounts of temporal NR, spatial NR, glow, and un-sharpening, that not only was I left with a far more organic and pleasing image, but the noise was mostly gone. It's easy for uninformed folks to look at 8-bit LOG images like the XC10 and think they're vastly inferior to cameras where NR isn't required, but this isn't true - the real high-end cinema cameras are noisy as hell in comparison to even the current mid-range offerings, and professional colourists are expected to know about NR. A recent post in a professional colour grading group I am in was about NeatVideo and the post mentioned that NR was essential on almost every professional colour grading job. 
I'd almost go so far as to say that if you can't get a half-decent image from 8-bit LOG footage then you couldn't grade high-end cinema camera footage either. There are limits though, and things like green/magenta macro-blocking in the shadows were evident on shots where I had under-exposed significantly, but on cameras that have a larger sensor than the 1" XC10 sensor, and if exposed properly, these things are far less likely to be real issues.

1 point
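The point above about 8-bit LOG footage being "stretched" can be illustrated with a small sketch. It assumes, purely for illustration, that a flat image only occupies code values 64 to 192 of the 0 to 255 range and is then expanded to full range in the grade; the function name and those numbers are hypothetical, not measurements from the XC10.

```python
def distinct_levels_after_stretch(low: int, high: int, bits: int = 8) -> int:
    """Count distinct output codes after a linear contrast stretch.

    Input codes low..high (inclusive) are mapped linearly onto the full
    0..(2^bits - 1) range and rounded back to integers. The result can
    never exceed the number of input codes that were actually used.
    """
    if not 0 <= low < high <= 2**bits - 1:
        raise ValueError("need 0 <= low < high <= max code value")
    max_code = 2**bits - 1
    scale = max_code / (high - low)
    outputs = {round((code - low) * scale) for code in range(low, high + 1)}
    return len(outputs)


# Hypothetical flat capture using only the middle half of the code range.
print(distinct_levels_after_stretch(64, 192))
# A capture that already fills the range loses nothing to the stretch.
print(distinct_levels_after_stretch(0, 255))
```

With these assumed numbers, only about half of the 256 output codes are ever hit after the stretch, which is one way to see why flat 8-bit captures are more prone to banding and blocking than 8-bit 709 captures that go to 709 delivery.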

-

Is my memory wrong, or can't you have two external sources plus two internal scratch mics? That's a good enough compromise in my opinion.

1 point

-

I've been talking about OIS and IBIS having this advantage over EIS for years..... sadly, most people don't understand the differences enough to even know what I'm talking about.

1 point

-

Will The Creator change how blockbusters get filmed?

Davide DB reacted to Llaasseerr for a topic

This vfx approach has been used for over 15 years. That level of in-camera "photographed" realism was pioneered by ILM in films like War of the Worlds, Pirates 2 and Transformers. What the director is really doing is thinking back to his methodology with his first film and wanting to get back to that immediacy and creative spirit, but with a bigger vfx budget. As has been described, for him the rig was obviously fundamental to that. And I now understand how much they would have saved on shipping lights, cranes, on hotel budgets etc due to the need for less crew and gear - also crediting the high ISO there.

1 point

-

Help me decide: Canon C300 Mark III or Sony FX9

kye reacted to Jedi Master for a topic

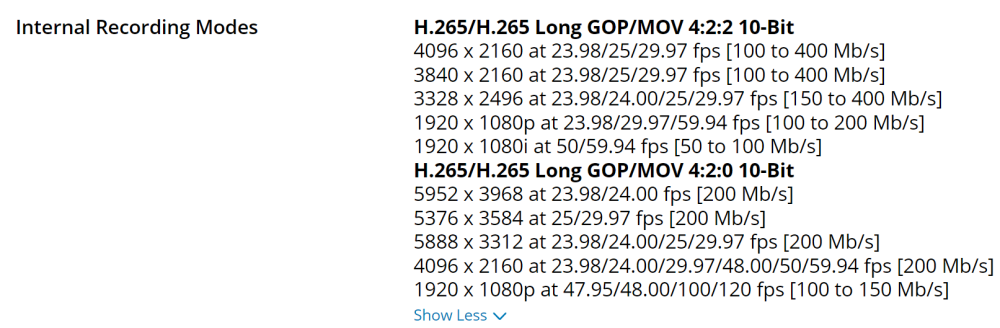

Now that I've got the ACES workflow more or less under control in DaVinci, I went back and redid the S1H footage I downloaded and I like the results. I'd expect the BS1H would produce similar results since it uses the same sensor as the S1H. Is the L mount used on the BS1H strong enough to support typical cine lenses? Anyone know if the BS1H can do internal recording using an H.264 codec? The specs on B&H's website indicate it can only do H.265. This is a concern to me as although I have a very capable PC (32 cores, 128GB RAM, beefy Nvidia GPU, and RAID-0 SSDs), H.265 footage plays and scrubs sluggishly in DaVinci while H.264 footage is smooth. I'd hate to have to transcode everything before editing it.

1 point

-

Absolutely, but when will it be here? I mostly have EF lenses, though I may consider the Sony FX9 II if that comes along sooner and has the better features/price. The FX9 already looks great with the in-camera 10 bit: And, with the RAW expander, it reproduces some beautiful colors: Sony's advantage is that they don't need to introduce a new mount at this stage and have a better native and 3rd-party lens selection.

1 point

-

Help me decide: Canon C300 Mark III or Sony FX9

eatstoomuchjam reacted to kye for a topic

The more you look at something, the more you notice. When I first started doing video, I couldn't tell the difference between 60p and 24p, now I can tell the difference between 30p and 24p! BUT, having said that, my wife is pretty good at telling very subtle differences in skin tones and has spent exactly zero time looking at colour grading etc, so we all start off seeing things differently as well.

1 point

-

Looks like available from scratch...

1 point

-

^ In that case I can still safely recommend a Pocket 4K.

1 point

-

For me it's more about making sure that I only buy things that I'm 100% confident I will use constantly, or that aren't available to rent if I need them for just one project. And, more so, if I don't use them enough, reselling them ASAP. The TCO of my not-super-useful accessories seems bigger than the TCO of my cameras and lenses.

1 point

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

ac6000cw reacted to John Matthews for a topic

My Priorities for Panasonic:

1) Release a VERY SMALL M43 camera body with PDAF and full-sensor readout in 4k NOW!
2) Release a GH7 with variable ND and PDAF.
3) Release a high-end video camera with variable ND and PDAF for L mount.
4) Release a pancake (28mm or 40mm or both) lens for L mount.

1 point

-

😁 Well, well, this certainly was a long read that took quite a few days.... I have to say it was quite a ride to read. In my case, I'm still using my NX cameras but pretty stock; it wasn't until last month that I found that there were "hacks" for both the NX1 and NX500 and decided to get up to speed and do some shooting. Sadly, I have not been able to find much around. The old NXKS2 hack is nowhere to be found (can someone share it?). I'd like to experiment with that before trying Vasile's method. It seems I missed the boat on accessories like cages, or adapters like the NXL, that sadly are not popping up in an Amazon or eBay search or anywhere. It was strange as well to see that many of the comparison videos linked here were taken down in one way or another. I still feel very happy with my NX lineup in 2023. I wonder how it compares to whatever others have upgraded to in the past years, as I still feel it can hold up for a while, in my humble stock mode. Now I'm anxious to try the hacks from 2016 🙂

1 point