Axel

Members

Posts

1,900

Everything posted by Axel

-

Not mine. Why is it that none of the clips published so far has that 'cinematic', 'organic', 'natural' look that we have been after for ages? I appreciate this discussion because, as the OP wonders, why would anybody ever wish for another camera? And that's the answer: every camera must have weak points where competing products do better. You see, on paper, the C300 Mark II is a perfect camera (I don't desperately need raw). But I rented the current version for a week and (besides the 8-bit, which doesn't count for much, because it was good 8-bit) it had a serious handicap (for me): a really shitty EVF, with too small an image, and too uncomfortable to press to my face as an additional stabilization point, which is my habit when I shoot handheld. If there is an EVF, it must feel right, or I won't have it. For example: my old GH2's EVF only needed an adapted Zacuto eyepiece ($17), my Pocket (with a really shitty display) a Zacuto display loupe (~$110 for the early buyers) to make it at least usable. There were times when we thought this race for better specs must come to an end now, this or that camera has it all. Duh! With the 4.6K URSA Mini I thought, who would ever need better codec options, better audio options, greater resolution, better ergonomics? Let's sit and wait to see what downsides the first testers discover.

-

I agree. For anyone who grades his films in FCP X anyway (and many have proven that you can do that!), Color Finale surely is a good investment. It's easy to use, it's elegant, and it fits best into FCP X.

-

Exactly. Gives me time to save some money. And being a BMPCC owner myself, I already know I could handle this camera, which in many respects is much more ergonomic, despite the missing ND filters. What sample video are you talking about? The Cpt. Hook clip with the 4.6K sensor in the URSA on the BM page? Why, couldn't be better, could it? No comparison to an FS7, which doesn't have raw or ProRes out of the box, which doesn't have a global shutter and, I'm sure, no 14-something stops of DR. See what Hook wrote about it on the BM forum: "With DR, so far in my experience i think it's safe to say that whatever you rate the Pocket Camera to be, add roughly 2 stops. 1 stop in the highlights, and another stop in the shadows. I can't wait for people to get this and go shoot some incredible footage with it!" I'd say, according to the specs and what we saw in the NAB videos, I want the 4.6K version from the start, with the BM EVF. It's still much less than a fully equipped FS7. People forget that a Speed Booster or, optionally, very expensive (and usually slow) Sony lenses are needed then. Whereas most already have access to some good EF lenses or can figure out in advance which are best. Yeah. As others wrote, we have to weigh the better DR against the better lowlight. I see that with my Pocket. My older cameras or my D3300 have better lowlight, but are we talking about cinema cameras here? Of the two advantages, better DR and better lowlight, I'd choose the DR at once. That's why I want the 4.6K (resolution is overrated, as I often wrote).

-

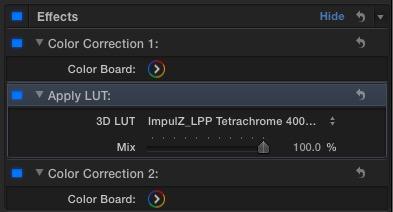

You always could have multiple color boards in FCP X - with "+". Color Finale is nice, particularly in that it doesn't slow down performance. But it isn't 'Resolve in FCP X'. Apart from the integrated LUT Utility it adds no new possibilities. It's still not keyframeable, and the '6 vectors' are actually less powerful than FCP X's color mask. That's Resolve, in comparison. FCP X with or without Color Finale is best if you want to enhance your clips quickly and intuitively. FCP X is the fastest editor, suitable for many, many clips that need to be viewed and edited. If, on the other hand, you are working for hours on just one take, these advantages no longer count. Because your options in FCP X would be limited - and because you are slow anyway ...

-

Good news for the Mac community. It seems they soon won't be condemned to do their compositing in costly AAE or Motion. Here is the link: https://www.blackmagicdesign.com/videos/fusion The main advantage of Fusion is its node structure, which is clearly a better way to organize complex comps and applied filters, effects, trackers, keyers and parameters than layers and tracks with awkward boxes and pop-up menus. Let's hope it's no April Fool's ...

-

Instead of putting my most ambitious plans into action, I keep planning, postponing their actual realization. I'm making plans, I'm performing tests. That means I actually distrust my ideas, I don't really believe in them. At the end of the day, they are hot air. Because creativity is all about solving problems, finding ways to overcome constraints - with wit and spirit and with what you have, not with 'specs'. Improvisation is better than unobtainable (mostly hollow) perfection. If I think I can only do it with this or that piece of equipment, I will fail, always. Once I have that precious gadget, I reckon the bar has risen again. It's me. Any camera is insufficient to capture my fancies, if I am. Any camera will do, if I do. The first sentence:

-

I like the slogan of the German Smart car ad: reduce to the maximum. As for the BM camera setup: well, if you need steadily moving shots, something like this is required. You could do it with a conventional steadicam, but that wouldn't reduce much. They could have used a Nebula with a BMPCC and the new smartphone-sized SmallHD, but this was probably the BMPC. When downgrading you have to accept compromises as well, or it's the wrong thinking.

-

Welcome. Or five minutes, but in earnest ... Why not? God forbade it. One might find that 23'55" of the day were devoted to distractions.

-

Same with me. As a big fan of Chris Marker, I love this thoughtful, stream-of-consciousness-like poetry. I realize I understood ALL (in essence) just by getting tuned to the voice, almost music by itself (BTW: What was that music?). New Yorkers don't have a difficult dialect for foreigners to understand, they just speak (and think?) too fast. The same is said of Berliners, where Andrew lives. They also create the most new idioms. I particularly liked the editing. And how you treated sound(s).

-

I am also getting used to low-contrast images because I see them in the log files. But even with an 8-bit image as the target I can have definite blacks and whites without destroying too much of the dynamic range. Of the two photos above the first is better. The future is HDR TV, like with Dolby Vision. In this simulation you see how this affects the reception of images: an HDR image tone-mapped to 8-bit out of seven differently exposed shots, for example, has preserved more than 20 stops, but compressed into 5 stops (you usually can't distinguish shades of grey below 16). So everything has definition (outlines), but similar luma values. We now grade with higher contrasts to avoid images looking too flat on terrible LDR displays. We bend curves. So the wise thing to do, if you think your films should be 'future-proof', is to shoot in 10, 12 or 14-bit, grade for 8-bit now and keep the original ungraded material for the future.
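To put rough numbers on the bit-depth argument, here is a minimal sketch. It assumes an idealized, purely logarithmic encoding that spreads all code values evenly over the stops; real log curves and display gamma behave differently. The function name is made up for illustration, and the 20 stops are simply the figure from the example above.

```python
def code_values_per_stop(bit_depth: int, stops: float) -> float:
    """Average number of code values available per stop, assuming an
    idealized log encoding that spreads all codes evenly over the range."""
    return (2 ** bit_depth) / stops


# Compare how finely each bit depth could describe 20 stops (assumed figure).
for bits in (8, 10, 12, 14):
    per_stop = code_values_per_stop(bits, 20)
    print(f"{bits}-bit: {per_stop:.1f} code values per stop")
```

Under these assumptions an 8-bit file has only a handful of values left for each stop, which is roughly why it makes sense to keep the higher-bit original for later regrading.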

-

Inspiring. Visuals are better than words to make your ideas flow for filmmaking. They are more impatient. Once you can see them there appears a render bar above them ... Another playful method is sticking together moodboards, collages of things one feels should be in the movie, colors, locations, expressions on faces. Whether you are trained to paint or not: painting (best in watercolors) frees creativity. While you mess about, the muses will kiss you.

-

If objective truth or an objective fact is something we need to agree on, I'd say it's a delusion. An artificial frame, the more abstract the more it forces people to confess to it. A human disease. Literally much ado about nothing, but the ado includes wars and genocides. Think about it. Can there be anything more negligible than, say, the question which NLE or OS is best? Apply this to any dissensions between individuals, groups or societies. Apply it to your own inner conflicts. Don't worry about things, so-called 'facts', notions. Understand that others are influenced by alien forces. Do the right thing. Use The Force, Luke!

-

We can't of course know what Murch told you in private, but unless everything he wrote about his experiences with NLEs was actually written by an uninformed ghostwriter, the quotes above were correct. And you contradict yourself a bit, since you also mentioned the 'missed frames' issue that happens when quickly scrubbing or skimming over clips. Nothing. I am an amateur. I roundtrip with Resolve Lite, and compared to Premiere (a friend of mine uses that) it seems to work better. Can't tell about AVID. As you can see, the production for Focus used Logic Pro X, that's what I meant with 'proprietary workflow'. Since there are no tracks in FCP X, you have to assign so-called 'roles' to your audio, which is one of the tagging-tools in the project itself. Those you can export either 'as' tracks with individual clips (and then of course gaps) - afaik there is a special software that translates this for ProTools - or as full-length stems. I can't tell how Logic deals with the audio, since I never tried it. Best google for it. Of the said Focus workflow, there is also an older clip where the assistant editor talks about their experiences:

-

In his book In The Blink Of An Eye he describes how he thinks of editing film as a physical thing, like dancing. That's why he prefers to stand and move during the process rather than sit (postural deformity, peripheral circulatory disturbances, repetitive strain injury - 'mouse arm'). Apart from the bad health of most seasoned digital editors, they feel less. This applies to AVID, APP and FCP X in the same way, of course. The main difference between all track-based NLEs and FCP X is that you need to think differently. One has to accept that the main storyline ("e") is like a film strip that must contain the narration as a linear sequence with no gaps. That everything else ("q") is just added to that and has no right of its own. That's it.

-

Very hard to believe. It's just a statement. To fully exploit the possibilities of FCP X, you of course need to follow a 'proprietary' workflow. It works best with digital data from the start, which (though Kodak continues to deliver film, for now) clearly is the future. That means you have to use FCP X as DIT assistant, for which it is 100% perfect. You hot-swap Thunderbolt drives, and everybody in the team has instant access to all the media: All contributors log in and must be 'trained hands' in setting the appropriate tags, giving the editor(s) all information he/she/they can dream of. The program fits into the OS seamlessly, insofar as tags and folders (though who needs folders?) are linked in smart collections that proved to work flawlessly. It's very close to mind reading. You look for the needle in the haystack? Yoink!, you've got it. It's hard if not impossible to find an argument against improved teamwork and more responsibility (always instantly controllable by everyone) for all, which is seen as the premise for a successful modern work plan. Trained hands? And we only talked about media organization here. What about editing? I can't tell you if there is an advantage of AVID over FCP X in this respect, but I doubt it very much.

-

Most apply sharpening in post, e.g. Premiere's Sharpen effect at around '25'. Afterwards the image no longer looks that soft. Anyway, we shot the same motifs with C300, BMPCC and 5D MIII, and it's clear that of these three HD cams the 5D resolves the least detail. Not to speak of a comparison to 4k cams, as seen here: However, the winner is not so clear if the 4k is compared with raw through Magic Lantern: http://www.youtube.com/watch?v=nH5fafQQddg However, we need to see a comparison to GH4 plus Shogun 4k 10bit, which is also much easier to use. Raw with 5D and ML still means a lot of trouble! BTW: Since this site was overhauled, many things don't work well (my layout gets torn apart for whatever reason), apart from it being offline very often ...

-

Well, in the seventies the majority of directors experimented with drugs. Among others Coppola (as you can read in Biskind's book Easy Riders, Raging Bulls). Could someone with a straight mind (quotation from Inherent Vice: 'Straight is cool') have made Apocalypse Now? Kubrick, with his famous Jupiter and Beyond the Infinite sequence, stated he never tried drugs. I did, and I'd say they shouldn't be used as an escape but for the expansion of consciousness (Huxley). You have difficulty taking anybody seriously who describes himself as 'down to earth'.

-

Interesting thread and good remarks. I always felt that people who call for higher frame rates subconsciously want to stop motion. On the relativity of time (and the perception of time) I once read a good book (I think the title was Time). The author said that if you were sucked into a black hole, this would happen so fast that you wouldn't notice how all your molecules were compressed. A guy with a very good telescope, watching the tragedy from earth, would see you hovering in front of the abyss completely motionless. Every now and then, becoming old and grey, he would check the status quo, but wouldn't be able to tell if you had moved forward at all. The heart of moving pictures is the present constantly slipping away. Fleeting moments. Best captured at lower frame rates. Not long ago (relatively) I watched an experimental film about motion blur (the title was motion blur). Time lapse with successively longer exposure times. Made the cars on a highway disappear, made the fish in a fish tank disappear. A trillion fps will make us see light move, but will practically turn any living thing to stone.

-

Fun. Yes. I had a mechanical Bolex and developed the B&W films myself. Wouldn't you agree you had to get many more parameters right intuitively (w/o proper preview, w/o proper exposure assistant, w/o sound) than with any of the digital cameras of today? I also had a Kiev 6x6, which had a light meter built into the (detachable) reflex viewer. I found out for myself that guessing the right aperture and shutter (relative to the stock's speed) by simply looking at the ground glass got me better results. If we have to fight some moire now, and if that's our major pita, there's no need to despair.

-

Know your foe! Make friends with your biggest fears or they may destroy you (Bill to Kiddo, sitting at the campfire playing the flute). If I had the perfect camera with no quirks or limitations but with incomparable strengths such as 30x optical power zoom, perfect AF, AE and AWB, low light down to 10 lux to shoot night for day, whatever, I would never be able to make an informed decision. After the physical facts behind a camera's cardinal weaknesses are understood, I suggest one explores them deeper. Eventually dealing with them becomes intuition, almost psychosomatic. Sounds pompous, but is rather trivial. Take a good pan or a smooth focus transition. Could you really teach someone how to do that by describing it verbally? It's all about intuition paired with fine motor skills.

-

Working out how to avoid it. How best? By doing the contrary first, by learning how to produce moire. A lot of factors contribute to that, but in the end it all boils down to the resolution of patterns. So the obvious answer is looking for patterns. The lens is capable (sharp enough) of depicting smaller picture elements (google > circle of confusion) than the pixel grid on the sensor, never the other way around. This reduces the actual resolution drastically. If you additionally allow any in-camera sharpening, the aliasing stays the same, but the moire will pop out more (software solutions as shown above affect only this, they can only soften the existing aliasing, imo not worth the trouble/money). There are filters you can put in front of the sensor (> OLPF) that will tune the spatial frequency. They will eliminate moire. But they are expensive and they have to be made specifically for your camera. However, during your deliberate chase of moire patterns you will notice that even with a lens that is too sharp you will only be able to produce moire under certain conditions.

1. The most important is the spatial frequency of the lens, which changes depending on the aperture you choose. For just about every lens there are test charts that show this correlation in a graph. As a rule of thumb: at open aperture, the lens usually performs 'worse'. You should learn to find the aperture range that allows moire with your combo! NOTE: With some lenses, choosing an inappropriate aperture alone makes moire disappear (which is not what we intend).
2. The pattern needs a certain contrast. As we all know, contrast is highest on a sunny day at noon and lowest in a dimly lit cellar. So you need to avoid wide apertures in bright light. If you are after moire, stay away from ND filters!

By understanding those two conditions, you will capture 90% of all moire that's achievable. The third condition is the relative size of the pattern itself. Whereas the first two factors are easy to manage, this one really restricts your options regarding framing, focal lengths or choice of motifs in the first place. A pain in the ass. But in reality, it's actually pretty easy to instantly recognize promising patterns. And then, all together now:

1. Turn up in-camera sharpening (this embosses otherwise barely noticeable moire and bakes it into the image, best advice!)
2. Find the aperture at which your lens performs best (also depends on focal length with zooms)
3. Change your position during shooting ever so slightly. Doesn't need much practice, you'll know intuitively pretty soon, it actually doesn't limit your choices.
4. Don't be afraid of moire-free images! Cameras with very high moire efficiency have been around for almost a decade now. It's lunacy, but many filmmakers actually lost their skills to capture moire at some point. Cinema features shot with those cameras have been released, and nobody complained about missing rainbows. People got used to the clean images, so don't worry.
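Why the relative size of the pattern matters so much can be sketched with the sampling rule behind aliasing. This is only a simplified one-dimensional model with one sample per pixel; it ignores the OLPF, the lens's actual resolving power and the Bayer pattern, and the function name is invented for illustration.

```python
def alias_frequency(pattern_freq: float) -> float:
    """Apparent frequency (cycles per pixel) after sampling on a grid of
    one sample per pixel. Frequencies above the Nyquist limit of 0.5
    fold back to a lower, false frequency: the moire pattern."""
    return abs(pattern_freq - round(pattern_freq))


# Patterns below 0.5 cycles/pixel come through as they are; finer ones fold back.
for f in (0.3, 0.45, 0.6, 0.9):
    folded = alias_frequency(f)
    status = "aliased" if f > 0.5 else "resolved"
    print(f"{f:.2f} cycles/pixel -> appears as {folded:.2f} ({status})")
```

In this model a slight change of position or focal length shifts the pattern's frequency on the sensor, which is why moving a little can make the false pattern appear or vanish.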

-

Nothing against an occasionally necessary stabilization in post. But only for 5 % of the footage at the most. Affordable gimbals are in the world, and abusing 4k for 'reframing' is detestable. Watch this: http://vimeo.com/111900328

-

For lack of a histogram on my BMPCC (it came with a FW update only a week later), I exposed a whole wedding (almost four hours of footage) to the right, with ultraflat ProRes 10-bit LOG, using only 95% zebra. Most shots were fine, as expected (I did a lot of tests before), but some shots had weird plastic-like skin tones that I couldn't fully rescue in post. I have shot weddings with various cameras before, and of course I always EdTTC or used auto exposure (which does the same). That said, I do very often like the skin colors of DSLR video, but not so much the skin's gradation. I propose you really perform many tests with ETTRd skin and match them before you announce the method to be superior.

-

Yes, it's the same skin color at first glance. Yet it looks as if the skin was softened in the ETTR image. You really can't judge that if you take just one shot. Shoot an entire sequence with two or more people hit by the light sources in different angles. Then try to match those in post. One looks perfect, the other 'posterized' (old Photoshop effect), and you can't do anything about it. Carpets and vases have no 'memory colors'. They can be reduced to, say, five nuances, and nobody will notice. A face will need - I don't know - fifteen? to look alive.
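What 'posterized' means in numbers can be shown with a small sketch: a smooth ramp of tones is quantized to a fixed count of levels. The five and fifteen levels are just the guesses from the post above, not measured thresholds, and the function name is made up for illustration.

```python
def posterize(value: float, levels: int) -> float:
    """Quantize a value in [0, 1] to one of `levels` evenly spaced steps."""
    step = round(value * (levels - 1))
    return step / (levels - 1)


# A smooth ramp of 256 tones standing in for a gradient across a cheek.
ramp = [i / 255 for i in range(256)]

for levels in (5, 15):
    distinct = sorted({posterize(v, levels) for v in ramp})
    step_size = 1 / (levels - 1)
    print(f"{levels} levels -> {len(distinct)} distinct tones, step size {step_size:.3f}")
```

The fewer the levels, the bigger the jump between neighbouring tones, which a carpet can hide and a face cannot.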