-

Posts

1,839 -

Everything posted by jcs

-

GH4 simultaneous audio recording while in 96fps mode

jcs replied to DesignbyAustin's topic in Cameras

For sync, use a real clapper board or an app. Use your cellphone for audio; the quality is good enough if you're on a budget. Shooting at 60p provides audio when not in VFR mode. Isn't that slow enough? In your NLE, sync the audio spike from the clapper sound to the visual of the clapper closing (or the screen flash if using an app).

-

The 25mm F0.95 Voigtlander does look gorgeous. The metal build is excellent, the focus is smooth and very high quality. It works out to a 25mm*2.3(crop) = 57.5mm F2.2 full-frame equivalent. I find the Canon 50 F1.4 with an EF to E mount adapter (I use the MB IV) looks even better on the A7S vs. the GH4 + Voigtlander. For those on an ultra low budget, the Canon 50 F1.8 is optically excellent for $125 (focus ring and build quality are not the best). The Commlite Smart adapter for ~$92 looks like a good deal. Perhaps the fastest lens with top image and build quality for the price would be the Nikon 50 F1.2 AI-S for $700 and a $15 adapter. A bit less than the ~$1000 for the Voigtlander, and on FF much faster. Zeiss has a 50 F1.4 for ~$600 (Nikon mount). Used, there are a lot more options, e.g. a Yashica 50mm F1.9 in C/Y mount for $50 used + a $14 C/Y to E-mount adapter. If clean and in good mechanical condition, used older lenses can have more character, as optical imperfections actually help with the 'unreality' of filmed images. Such lenses can be specialty use only, however they could save time in post instead of grading to look vintage/organic.
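
The equivalence arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name is made up for this example, and the 2.3 crop factor is simply the figure used in this post.

```python
def full_frame_equivalent(focal_mm, f_number, crop):
    """Return (equivalent focal length, equivalent f-number) on full frame.

    Both values are multiplied by the crop factor, as in the post above.
    """
    return focal_mm * crop, f_number * crop


# Voigtlander 25mm F0.95 with the 2.3x crop figure quoted in the post
focal, aperture = full_frame_equivalent(25, 0.95, 2.3)
print(f"{focal:.1f}mm F{aperture:.1f}")  # 57.5mm F2.2
```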

-

Right on- a camera test with people and skin tones! Looks pretty good, especially for the price. What's the raw bit depth? Raw is fine for narrative, but it would be more versatile with on-camera deBayering and efficient compressed codecs. If it shot ProRes or similar, it might be a good ARRI alternative.

-

For the GH4 I use the Panasonic 12-35 F2.8, Voigtlander 25 F0.95, and Panasonic 35-100 F2.8, used the most in that order. IS and autofocus (for run & gun and steadicam) are very helpful. For low light with some form of rig, the Voigtlander 25 F0.95 can look really nice.

-

The GTX960 looks pretty solid. In order to use the hardware H.265 decoder, the transcoder app must use the Windows DXVA2 API (this means a native DirectShow implementation vs. command line as with ffmpeg). IMO the DirectShow API is the most complex API in Windows (as in hard to use to create reliable apps!). lol- the original architect has left Microsoft and now works for Twitter which, ironically, is one of the simplest and easiest to use apps out there! Apps that already use DirectShow will be able to support this feature fairly easily (perhaps even VirtualDub).

For those who dual-boot Windows/Mac, check out Macvidcards: http://www.macvidcards.com/index.html . They mod fast PC cards to work in both Windows and OSX. It's unclear if the H.265 acceleration would be exposed on the OSX side (through AVFoundation), but it's certainly possible in the future.

TMPGEnc- used to use it a long time ago. Nice to see they are still around. It supports CUDA H.264 hardware encoding, but they don't indicate any form of hardware decoding. Curious that they're also using x264- thought it was GPL licensed (as in can't be used in closed source apps).

I added ProRes support today and am currently adding multiple parallel job support. Also experimenting with ALL-I 10-bit 422 H.264 as well as IPB 10-bit 422 H.264 (works OK in Premiere Pro CC). Since H.264 can be hardware decoded, and H.264 10-bit 422 is a newer implementation vs. ProRes (a form of MJPEG), it might provide more efficiency as well as play back faster in NLEs which hardware decode H.264.

-

It means that if the licensing fees for these codecs are reasonable, and there's a market to recoup the cost of licensing and development time, I can relatively quickly create a transcode tool which is much faster than anything based on ffmpeg.

-

iFFMPEG is a GUI which calls ffmpeg (a command line tool and separate download). All tools which use ffmpeg will run at the same speed in most cases (where each tool is running the same number of jobs at once). GUI tools which call ffmpeg (which can't be included with the product due to licensing) can be relatively low cost as they don't need to use a licensed SDK, such as MainConcept. There's also typically a royalty required for each sale, which isn't a big deal but adds more bookkeeping complexity (will need to review in more detail, but it looks like there's no royalty for H.265 on the first 100K sales, after that it's $.20 per copy, max of $25M per year).

There was a time when ffmpeg was the fastest, highest quality tool for H.264 (and possibly H.265). I haven't researched this much recently, however it appears that there may be much faster solutions available today:

http://www.codeproject.com/Articles/819588/Real-time-End-to-End-H-HEVC-Solution-for-Intel

http://www.strongene.com/en/downloads/downloadCenter.jsp - Real-time H.265 decoding on mobile devices too (perhaps also on such devices which shoot 4K).

While H.265 is a lot more complex than H.264, compilers and tools are taking much better advantage of existing CPUs and GPUs: using all the cores and using them more efficiently. The Strongene encoder is only 2x slower when encoding H.265 than when encoding H.264 (likely what is used, or something similar, in the NX1). Decoding speed isn't listed but it will be much faster than encoding. MainConcept states real-time encoding at 1080p30 for H.265, and faster than real-time H.265 decoding up to UHD60p (no hardware specs listed): http://www.mainconcept.com/products/sdks/codec-sdk/video/h265hevc.html

As of today, there are software technologies that can encode and decode H.265 in real-time, at about 2x slower than H.264. This means that on a machine that can encode and decode 2 H.264 streams in real-time, the same machine can encode and decode 1 H.265 stream in real-time.

If there's interest, I can research what it costs to license these SDKs (and royalties, etc.) to see if it's worth creating a custom fast-parallel-batch transcoder for H.265 to H.264 and ProRes.
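
To make the numbers in this post concrete, here is a rough Python sketch of the royalty and throughput arithmetic. The royalty terms are only the unverified figures quoted above (still to be reviewed in more detail), and the function names and the flat 2x slowdown are assumptions for illustration.

```python
def h265_royalty_cents(units_sold, free_units=100_000,
                       cents_per_unit=20, annual_cap_cents=2_500_000_000):
    """Estimate a yearly royalty in cents from the terms quoted in the post.

    Assumed terms: no royalty on the first 100K copies, $.20 per copy
    after that, capped at $25M per year.
    """
    billable = max(0, units_sold - free_units)
    return min(billable * cents_per_unit, annual_cap_cents)


def realtime_h265_streams(h264_streams, slowdown=2.0):
    """H.265 streams a machine can handle if H.265 costs `slowdown`x H.264."""
    return int(h264_streams / slowdown)


print(h265_royalty_cents(150_000) / 100)  # 10000.0 (dollars)
print(realtime_h265_streams(2))           # 1
```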

-

Ed- thanks for the file. It appears to be a component for Edius along with ffmpeg dlls (but no command line tool). If there's a command line tool to convert H.265 to HQX, I can create a parallel batch processor for it.

Chris- today I created an ffmpeg proxy to intercept the calls from mlrawviewer. ffmpeg is called in a way that uses a pipe for input, where mlrawviewer feeds ffmpeg uncompressed video frames, one at a time, which ffmpeg then compresses and writes to disk. So I can't just copy the command line arguments and run the jobs in another process. While I could read from the pipe as fast as mlrawviewer writes to it (caching the data then feeding it to another instance of ffmpeg), it looks like the AmaZe debayer used by mlrawviewer is the main bottleneck. Given this bottleneck and the nature of how mlrawviewer works (e.g. load a file, set WB, exposure, and gamma curve, then export to disk), the fastest workflow without major changes to mlrawviewer itself (written in python) is to run multiple copies of mlrawviewer, processing the first file in the first viewer, the 2nd file in the 2nd viewer, and so on, cycling which viewer is used as you go along. On my 12-core MacPro running Win7 with a GTX 770 (modded to run on OSX as well), I see 80+% CPU utilization and about 60% GPU utilization running two copies of mlrawviewer at once. So 3 copies would probably be the max on this machine.

I found that ffmpeg has native MLV support, going back to April of last year: http://www.magiclantern.fm/forum/index.php?topic=11566.25 , however after testing it today with an ffmpeg build I compiled last month from the latest code, it's not complete: same results as reported in the ML thread. The output looks dark because it's linear video (no gamma applied), however it also doesn't finish processing the files, so it's not usable yet. Once MLV works with ffmpeg, a parallel batch processor will be possible.
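
As a rough illustration of the pipe-based pattern described above, here is a minimal Python sketch. It is not the actual command line mlrawviewer passes to ffmpeg: the rgb24 pixel format, the `prores_ks` encoder choice, and all function names are assumptions. The last helper shows the "cycle files across viewers" idea.

```python
import subprocess


def build_pipe_encode_cmd(width, height, fps, out_path):
    """Build an ffmpeg command that reads raw RGB frames from stdin."""
    return [
        "ffmpeg", "-y",
        "-f", "rawvideo",        # input is headerless raw frames
        "-pix_fmt", "rgb24",     # assumed layout: 3 bytes per pixel
        "-s", f"{width}x{height}",
        "-r", str(fps),
        "-i", "-",               # "-" tells ffmpeg to read from stdin (the pipe)
        "-c:v", "prores_ks",     # one of ffmpeg's ProRes encoders
        out_path,
    ]


def encode_frames(frames, width, height, fps, out_path):
    """Feed frames (bytes objects) to ffmpeg one at a time over a pipe."""
    cmd = build_pipe_encode_cmd(width, height, fps, out_path)
    proc = subprocess.Popen(cmd, stdin=subprocess.PIPE)
    for frame in frames:
        proc.stdin.write(frame)
    proc.stdin.close()
    return proc.wait()


def assign_round_robin(files, viewers):
    """Cycle files across viewer instances: 1st to viewer 0, 2nd to viewer 1, ..."""
    buckets = [[] for _ in range(viewers)]
    for i, name in enumerate(files):
        buckets[i % viewers].append(name)
    return buckets
```

Because the frames only exist inside the feeding process, the command line alone can't be replayed elsewhere, which is why running several viewer instances side by side is the simpler route.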

-

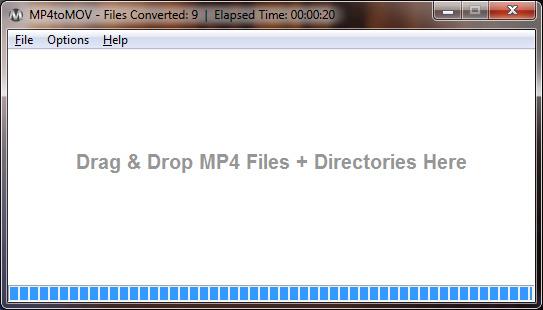

Hi Ed- here's the Windows version: http://brightland.com/w/mp4tomov-for-windows/ . Currently it quickly rewraps MP4s to MOVs (batched). Adding support for H.265 to H.264 or ProRes would be fairly quick. Looking at the supported codecs for ffmpeg- I didn't find HQX or anything from Grass Valley. HQX looks like a version of MJPEG (similar to ProRes) optimized for Edius. I examined the HQX downloads at Grass Valley- didn't see any command line tools. Creating a custom tool with DirectShow is doable (in order to use the Windows HQX codecs), however would take a bit of time. VirtualDub supports the command line- if it's possible to use VirtualDub command line to process your files, I can add support very quickly.

-

I released a high-performance tool to convert MP4s to MOVs to allow Resolve to use the A7S's audio. This app could be updated to also support fast H.265 to H.264/ProRes for the NX1 as well as other conversions provided by ffmpeg. mlrawviewer for 5D3 RAW could be modified to use this system as a parallel batch processor to greatly reduce time converting MLV files to ProRes and even 422 10-bit H.264 (similar to XAVC spec). Let me know your thoughts regarding updating these tools as well as any other desired tools & features.

-

A Windows version was requested- it's now available: http://brightland.com/w/mp4tomov-for-windows/ The OSX version is a compiled script, the Windows version is a native C++ app which includes an option to keep or delete the originals, provides progress info, progress bars, and performance stats.

-

I think in this case he meant it as a compliment- you're both on the same page. What most of us want are cameras with accurate skin tones out of camera. From there we can more easily match cameras, have no surprises in post, and can tweak color for emotion (ending up no longer accurate/realistic and instead lending to the cinematic mood of the story).

-

MattH- iffmpeg is a GUI for ffmpeg. ffmpeg has full source code available and is relatively easy to compile on Linux/OSX (it's better to cross-compile for Windows from Linux or OSX!). I'm currently wrapping up a fast batch converter for Windows (uses ffmpeg). If there's interest I can look into adding H.265 to H.264/ProRes conversion with full-range ffmpeg support. Also thinking about providing an add-on for mlrawviewer to improve 5D3 RAW workflow performance.

-

It's perhaps more fair to say the FS700 at $8600 (with lens) was a compelling rival to the C300 ($15k no lens). The C300 wins in absolute detail (slightly), better skin tones out of camera, better usability, and a smaller package. The FS700 wins in slomo (up to 240fps 1080p-ish (up to 480 and 960fps at lower resolutions/cropping)), full frame support and superb low light with a Speedbooster. For fast turn-around, the C300's colors were (and still are) a major selling point. The FS700 can look great, but takes more work in post. If we add an Odyssey 7Q to the FS700 (bringing the price up to around $11K), this combo provides superior 10-bit ProRes, continuous 2K 240fps 12-bit raw, 120fps 4K (including raw bursts), 4K ProRes, and along with Slog2 puts it well past the C300 in terms of final image quality possible (including skin tones with post work). The new FS7 brings 4K 10-bit 422, up to 180fps 1080p internally, and along with improved color science, isn't just a rival for the C300, it far surpasses it. The FS7 is even doing well matched up with the ARRI Alexa/Amira! I'd hold off on the FS7 until Sony does at least one firmware update, however Sony is on the right track- giving us what we're asking for (as they have with the A7S). Samsung (NX1), Panasonic (GH4), and Sony are eager for our business- let's keep giving it to them. The most challenging aspect of a modern digital camera is skin tones: we notice right away when they aren't good. Resolution, moire, dynamic range, highlights/shadows- most people don't notice so much. When skin tones are off, everyone notices. When skin tones look great- everyone loves the image. That's why the 5D3, even with H.264 is still popular with a relatively soft image. 
I can understand there's a lot more work for camera tests shooting models/actors, however a camera test without skin tones, the most important test for the viewing public, doesn't really show how most people, both pros and consumers, will use the cameras (cats & dogs not included). To minimize the workload, setting the cameras to the best in-camera settings possible (or even selecting the best stock options- no tweaks), setting correct WB and exposure, and shooting in the same conditions makes a great camera test when comparing two or more cameras (no color work in post other than matching levels on the scopes, and converting all cameras to Rec709).

-

Perhaps post some sample images (and perhaps links to originals somewhere) so we can compare ourselves and share the results?

-

sam- yeah the FS7 is looking better & better as more footage is posted. A7S- Pro and Cinema appear to be variants on the Rec709 profile with differing levels of saturation (primary change) and a red bias (Pro) vs. green bias (Cinema), etc. SGamut can look OK in post using one of the SGamut LUTs. I'm still experimenting to see if there's any advantage to using SGamut + LUTs. The Amira is supposed to be exactly the same as Alexa: sensor and color science. Andrew- it's a camera test the way working DPs shoot: Gene (FS7) and Nate (Amira) are both pro DPs working in LA. The cameras were both set to default settings, and then compared in post with default settings to get both cameras to Rec709 for delivery. No grading was done to show what the cameras look like with... no grading. A second video with slight grading to match the footage would be helpful. In any case, it's clear that the FS7 and Amira (and Alexa) could be cut together. When the Odyssey 7Q came out, Gene was kind enough to bring his FS700+O7Q to our studio and do some very helpful tests (his in-camera FS700 skin tones look pretty good too: https://vimeo.com/63245456). We ultimately went with a GH4 and m43 native lenses for 4K run & gun shooting, however now that the O7Q is about the same price as a Shogun (on sale), it's a bit more interesting for 4K studio shoots (can work with FS700, GH4, A7S). If Canon doesn't step up with a competitive 4K camera, the FS7 might be the best deal for in-camera 4K+slomo+decent-color for 2015. Ebrahim- yeah, a helpful test with limited variables. As noted, a follow up with minor color tweaks would be helpful- here's a frame after tweaking to match (only global changes- no masks or secondaries): I'm not a colorist and can understand why the best are highly paid- it will take some time to become an expert. On my X-Rite i1Display calibrated Dell 3007 and Dell 2405 monitors these two frames look reasonably close. The remaining differences can be fixed with secondaries/masking. 
As is I wouldn't have a problem cutting between these two cameras. As part of this exercise, I will say the Amira was easier to work with, even after H.264 compression (I pulled a frame from Gene's uploaded original on Vimeo). It looks like both cameras were rolling at the same time- which forms a stereoscopic 3D image (cameras were a bit too far apart, but it works): If you relax your eyes and look into the page until you see 3 images, then focus on the center image: it will be 3D (hyper stereo).

-

Other than the magenta bias for the FS7 and green bias for the Amira, the FS7 looks pretty decent in color and highlight tonality (no CC other than what is noted). Once Sony fixes the firmware issues, the FS7 looks to be a decent camera for the price. In the comments it looks like Nate sold his F55 to get an Amira. Even though the F55 can now closely match the Alexa in post, clients are asking for ARRI so he switched to Amira.

-

What's The Best Camera For Shooting A Low Budget Movie?

jcs replied to fuzzynormal's topic in Cameras

A low-budget film is between $625k and $2.5 million. Even Alexa, F65, and Phantom (for super slomo) could be used if they provide value that other cameras don't have and can be justified in the budget (these cameras and lenses would be rented). Otherwise, RED Epic/Dragon and F5/F55 level cameras are common. Ultra low budget goes down to $200k- RED Epic/Scarlet, C100/300, and Blackmagic are common (and even the 5D). For 'no budget'- whatever camera you have access to. Using a cell phone, as with Tangerine and the Bentley ad, is a marketing angle. http://www.backstage.com/advice-for-actors/secret-agent-man/costs-low-budget-films/

-

Makes sense for a machine-control-feedback loop. With low torque relative to mass (inertia tensor of the 'body'), the control system can't react fast enough, constantly under- then overshooting the target. (I wrote software to control a physics-based medical simulation trainer a few years ago for laparoscopy of the lower GI. It used pneumatics, but the control loop is similar to electromagnetic solutions.) At some point we'll have options to buy modular parts so we can custom build exactly what we need (already available to some extent for the bigger gimbals). My minimum combo would be the A7S with the 18200 lens- 1013 grams. I have a GH4 with the 12-35 F2.8 but wouldn't want to give up the low light provided by the A7S. Would also be nice to be able to use the Canon 16-35 F2.8L or 24-105 F4L with the MB adapter on the A7S. Hopefully there will be more pistol grip sized gimbals with bigger motors soon. Something made out of carbon fiber composite would be pretty cool.
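
The under/overshoot behavior can be reproduced with a toy simulation. This is a sketch under stated assumptions, not a model of any real gimbal: a single rotation axis, a PD controller with made-up gains, and a motor whose torque simply clips at `max_torque`.

```python
def simulate_gimbal(max_torque, inertia=1.0, kp=100.0, kd=20.0,
                    target=1.0, dt=0.001, steps=10_000):
    """Return the peak overshoot (radians) of a torque-limited PD loop."""
    angle, velocity, peak = 0.0, 0.0, 0.0
    for _ in range(steps):
        # Torque the controller asks for (proportional + damping terms)
        demand = kp * (target - angle) - kd * velocity
        # The motor can only deliver up to +/- max_torque
        torque = max(-max_torque, min(max_torque, demand))
        velocity += (torque / inertia) * dt
        angle += velocity * dt
        peak = max(peak, angle)
    return max(0.0, peak - target)


print(simulate_gimbal(max_torque=1000.0) < 0.001)  # True: strong motor settles cleanly
print(simulate_gimbal(max_torque=1.0) > 0.3)       # True: weak motor overshoots
```

With the same gains, only the torque limit changes: the weak motor can't brake the mass in time, so it sails past the target before coming back.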

-

Is 1000g max accurate? The Sony A7S with the SEL18200 is 489g+524g=1013g. Curious how it behaves at or slightly over the limit. I use iOS for mobile- would be more inclined to write something in Objective-C/C++. For motion control, could do any cubic curve or physics-based algorithm with feedback. It's Bluetooth, so just need the spec for the device (working sample code would be helpful too- sometimes specs don't match implementations).
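
For a sense of what a cubic curve for motion control could look like, here is a small Python sketch (the post mentions Objective-C/C++; Python is used here only to keep the example short). The smoothstep curve is just one possible cubic, and the function names are hypothetical; nothing here reflects the device's actual Bluetooth protocol.

```python
def cubic_ease(t):
    """Smoothstep cubic: 0 at t=0, 1 at t=1, zero slope at both ends."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def pan_angle(start_deg, end_deg, t):
    """Interpolate a pan move along the cubic ease curve (t in 0..1)."""
    return start_deg + (end_deg - start_deg) * cubic_ease(t)


payload_g = 489 + 524  # A7S body + SEL18200, figures from the post
print(payload_g, payload_g > 1000)  # 1013 True
print(pan_angle(0.0, 90.0, 0.5))    # 45.0
```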

-

Pretty cool- will it work with iOS too?

-

When comparing the A7S to 5D3 RAW, it's clear that recording in a linear color space with lots of bits (14 in this case) is much easier to deal with. Over- or under- exposure is relatively easy to handle in post as long as blacks and whites don't clip. The challenge with the A7S is that unless carefully set up and exposed, color behavior is non-linear, which means unexpected color changes happen with over- or under- exposure. As you may have discovered, PP6, which is CINE2 gamma and CINEMA Color Mode is one of the best stock all-around settings. Setting Color Phase toward magenta slightly can be helpful. Tweaking Color Depth can be helpful in some situations but doesn't really work well in the general case. I'm putting together a guide for matching the A7S to 5D3 RAW for skin tones. I've also created a simple app for OSX to help with editing A7S files in Resolve when audio is needed.
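
A minimal sketch of why linear data is easy to correct: an exposure change is just a multiplication, and nothing is lost unless values hit the clip point. This assumes 14-bit linear code values and ignores the sensor's black level offset; the function name is made up for the example.

```python
WHITE_LEVEL = 2**14 - 1  # 16383, the largest 14-bit code value


def adjust_exposure(linear_values, stops):
    """Scale linear values by 2**stops, clipping at the white level."""
    gain = 2.0 ** stops
    return [min(WHITE_LEVEL, round(v * gain)) for v in linear_values]


print(adjust_exposure([1000, 4000, 12000], 1))   # [2000, 8000, 16383]
print(adjust_exposure([1000, 4000, 12000], -1))  # [500, 2000, 6000]
```

Only the last value in the +1 stop case is damaged (it clips); everything else keeps its relative color and tonality.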

-

Currently Resolve can't handle the A7S's MP4 files with audio (uncompressed PCM audio). A solution is to rewrap the MP4 files into an MOV container using ffmpeg:

    ffmpeg -i <A7S>.mp4 -vcodec copy -acodec copy <A7S>.mov

Some of the GUIs for FFMPEG may be able to perform this function. I created a very simple and easy to use drag & drop app for OSX that allows adding the app to the Dock, whereby you can simply drag & drop files or directories to quickly convert MP4s into MOVs with no transcoding: runs about as fast as a file copy. http://brightland.com/w/mp4tomov-for-osx/
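
For anyone who prefers scripting it, the rewrap command can be batched with a few lines of Python. This is only a sketch, not how the drag & drop app is implemented: the function names and the default of 4 parallel jobs are assumptions, and it expects `ffmpeg` to be on the PATH.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def build_rewrap_cmd(src):
    """Same stream-copy command as above: MP4 in, MOV out, no transcoding."""
    src = Path(src)
    return ["ffmpeg", "-i", str(src), "-vcodec", "copy", "-acodec", "copy",
            str(src.with_suffix(".mov"))]


def rewrap_all(folder, jobs=4):
    """Rewrap every .mp4 in `folder`, running up to `jobs` ffmpeg processes at once."""
    sources = sorted(Path(folder).glob("*.mp4"))
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        codes = pool.map(
            lambda s: subprocess.run(build_rewrap_cmd(s)).returncode, sources)
        # Map each source file to ffmpeg's exit code (0 means success)
        return dict(zip(sources, codes))
```

Calling `rewrap_all("/path/to/clips")` writes a `.mov` next to each `.mp4`; since nothing is re-encoded, disk speed is the likely limit rather than CPU.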

-

http://www.4kshooters.net/2014/08/20/12-cameras-blind-test-bmpc-4k-bmpcc-gh4-arri-alexa-red-epicdragon-6k-sony-f55-fs700-kineraw-mini-canon-c500-5d-mark-iii-1dc/

When viewed on the internet, the differences between many cameras may not be significant. Many of the examples could look better when looking at skin tones. The differences show the challenges of working with color, even by experienced colorists.

- Note the banding on the red cylinder on the left on the 1DC vs. 5D3 RAW (none). However the 5D3 RAW has green skin (easily fixable)
- KineRaw looks pretty good next to Alexa (would expect the Alexa to look better)
- The Dragon skin tones look much better than Epic
- F55 skin tones look pretty good
- FS700 skin tones look flat but color is OK
- BMPCC Speedbooster looks much better than without SB (noise)
- GH4 looks OK (kind of flat color), lower noise than BMPCC with SB

-

Does the 1DC do 60p in S35 mode? The A7S works really well in APS-C mode at 60p: excellent low-light and reduced RS, and I haven't noticed any significant aliasing issues: https://www.youtube.com/watch?v=aq8m2FaVL1U . Reviewing FS700 footage recently (regarding the FS7): Sony provides pro-quality mic-preamps and ADCs- really handy when recording straight into the camera. Using something like any of the Sound Devices solutions into the 1DC will sound good, as it does with the 5D3, however it adds a lot more bulk and complexity.