-

Posts

2,531 -

Everything posted by sanveer

-

Everything is an eventuality. 8k is happening, regardless of how overrated it may be, and how unreasonable and resource heavy. They cannot create a new body design except for their studio or higher-end cameras. If they created a new camera type, lots of loyalists would throw a tantrum. I sometimes wonder whether Blackmagic should have picked up IBIS, an articulating screen, good battery life, PDAF and weather sealing from Olympus, before Olympus let its camera division go in a junk sale. Imagine a smaller and better designed Blackmagic camera, more along the lines of a FF camera like the S1H, with internal BRAW, an articulating 3.5-inch display, good battery life and IBIS, 6k or 8k, for $2500-3000. People who feel reluctant to pick one up now would see how much easier it would be without a ton of accessories. In many ways, I felt Blackmagic and Olympus should have explored a joint venture opportunity. I guess now only the patents and licences of Olympus are up for sale, so it's probably a little late.

-

They'll probably put that 8k FF sensor into the Pocket Camera Body. Everything else will probably remain the same.

-

IMHO the GH6 could be a seriously formidable camera with just a few good tweaks to the sensor and PDAF. If it only does 4k at up to 120fps (and perhaps 1080p at 240fps), has at least 1 stop better dynamic range for video, has 14-bit RAW photo and about 1.5-2 stops of better low light, it should be more than sufficient for the next generation of filmmakers and usable for another 3-4 years. Having RAW via HDMI or having some flavour of internal compressed RAW (BRAW) would be great. 8k is just overkill, causing the camera to turn into a barbecue machine and eating unnecessarily into memory. Plus good 4k can easily be upressed to 8k, if one absolutely needs it (which is, like, not right now). The colour science and highlight handling need tweaking and the V-Log L needs to go. Luckily it doesn't suffer from overheating, and so many other issues. Panasonic should keep the price as low as or lower than the GH5. They could do this by re-using the GH5's basic body structure (or using the G9's body structure) and re-using some of the hardware. If they get PDAF, and these features, and the GH6 is priced at $1500-1750, it could easily eat into everyone else's market. Both the size and the price of M43 have become difficult to justify after the launch of the A7 III and the X-T3, and with the ever increasing size of M43 bodies (E-M1X and G9) and lenses (all the Olympus f1.2 pro primes). Suddenly $2000 seems like a good price for many cameras. Blackmagic makes great cameras, but they lack reliability, weather-sealing, decent battery life, IBIS and decent autofocus. Also the 5 inch screen seems like a wasted effort if it cannot be seen during the day. Panasonic just needs to consolidate its video prowess.

-

I completely agree with that viewpoint. Not sure why Panasonic isn't making autofocus their biggest priority right now. Almost everything else seems acceptable (dynamic range, colour science, codec, even low light and other things which could be improved for better usability but aren't unusable) and some things are also exceptionally well executed (IBIS, unlimited recording times, HDMI albeit with a slight lag, ergonomics especially for the G9 and GH5 etc). Also, while PDAF (and the Dual Pixel version of it) is usable for stills and video on Canon and Sony, it is far from actually being usable from a creative perspective. Which means the algorithms and the options to make creative choices in the in-camera software interface are mostly missing, right now, across all manufacturers. Once Panasonic fixes the speed and accuracy of the autofocus, it could lead the way for the correct creative implementation of autofocus, with the sole purpose of making focus pulling usable, creatively, in single camera operations for tiny crews on low budget features. I am guessing there would be at least a 3x3 (if not 5x5) option necessary to fix this.

-

The tech team in Panasonic should be embarrassed. Or maybe they're not announcing it to prevent slowing down sales of current cameras?

-

Oh ok. I didn't know that. It's a rather poor software design, then.

-

Canon EOS R5 has serious overheating issues – in both 4K and 8K

sanveer replied to Andrew Reid's topic in Cameras

Tell us, you're an alien. Or you were living in a cave long before Covid-19 afflicted the world. I saw it on Newsshooter.com too. I am curious how much longer it lasts with it, and how it affects the image. Suddenly, I feel, all of us Panasonic M43 users take reliability for granted: the fact that the GH5/GH5s can record for hours, like other GH cameras before them. No overheating rubbish. We can even record whole concerts with the battery grip, even popping one battery out while the one in the camera continues (and swapping between SD cards), and have been able to for as long as we can remember, like 3-3.5 years now.

-

They already have a webcam mode for a few of their cameras (GH5, GH5s, and probably G9).

-

Canon EOS R5 has serious overheating issues – in both 4K and 8K

sanveer replied to Andrew Reid's topic in Cameras

Apparently overheating isn't the only issue. The rolling shutter is going to be great too, apparently. Though we should wait for the actual reviews to come out.

-

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

sanveer replied to Andrew Reid's topic in Cameras

I haven't used it on an ILC, but I remember using it on my Samsung S7 smartphone, where it had some creative editing with the sound. It alternated between video slowed down in post and video played in real time. When it played the sound in real time, it sounded like regular 24fps. I am guessing at slowed speeds it may sound like some strange animal yawning.

-

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

sanveer replied to Andrew Reid's topic in Cameras

120fps with sound is actually pretty useless. But some ILC apparently does record sound with it. Not sure which one it is.

-

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

sanveer replied to Andrew Reid's topic in Cameras

Yes. 120fps doesn't include audio.

-

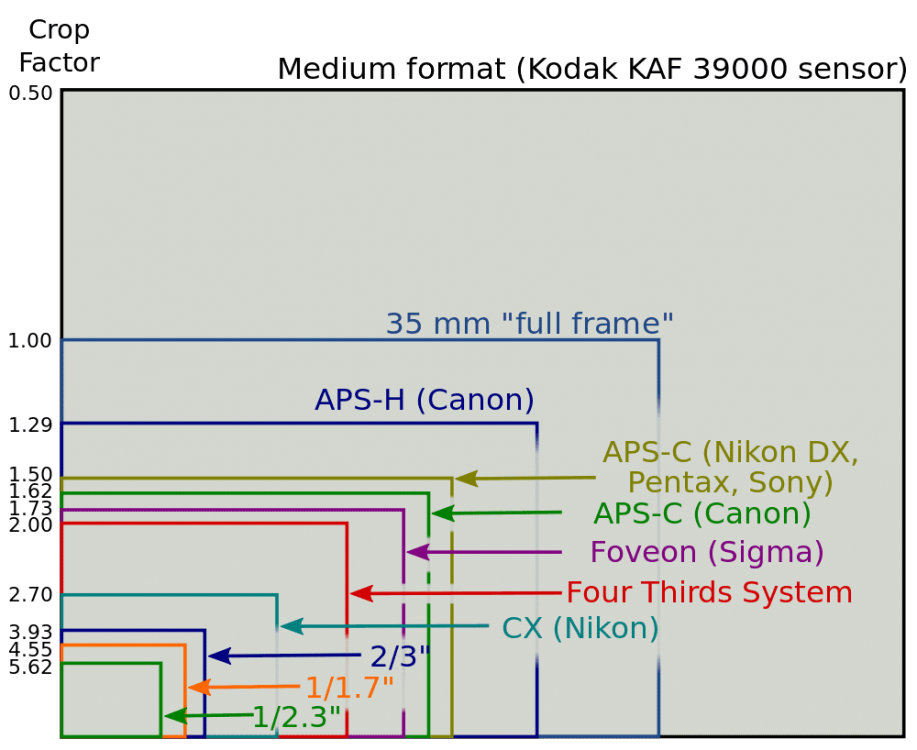

The whole crop factor thing is interesting, because within APS-C (and APS-H) alone, there are like 4 different crop factors (from 1.73x to 1.29x). I also clearly remember the debate about the GH2 having a 1.86x crop, instead of a 2.0x crop (for video, instead of for photos?). I also read somewhere that the Panasonic GH5s has an even wider crop (1.80-1.83x?). I am not sure the argument about the sensor of the GH5s being too large for IBIS seems correct, then. Panasonic could, then, easily fit such a multi-aspect ratio sensor (MARS) inside the next GH camera (GH6). They could keep the MP count at 20, since I am guessing that with the latest sensor tech, the low light video performance should be extremely similar to the GH5s. The GH5s doesn't seem very good for stills in low light, surprisingly, so the GH6 could have an advantage over it here (apart from the IBIS and higher MP count). Also, since the actual area of the sensors isn't much different between APS-C and M43 (most noticeably varying in width, rather than height), especially for MARS, the actual dynamic range and low light performance, too, theoretically, shouldn't be too different.
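For anyone who wants to check these crop numbers themselves, here is a rough sketch of the usual calculation: crop factor is the full-frame diagonal divided by the sensor diagonal. The sensor dimensions below are approximate, commonly quoted figures that I am assuming for illustration, not official specs for any particular camera, and the function names are just made up for this example.

```python
import math

# Reference 35mm full-frame dimensions in millimetres.
FULL_FRAME_MM = (36.0, 24.0)


def diagonal(width_mm: float, height_mm: float) -> float:
    """Length of the sensor diagonal in millimetres."""
    return math.hypot(width_mm, height_mm)


def crop_factor(width_mm: float, height_mm: float) -> float:
    """Full-frame diagonal divided by this sensor's diagonal."""
    return diagonal(*FULL_FRAME_MM) / diagonal(width_mm, height_mm)


# Approximate, commonly quoted sizes (assumed values, for illustration only).
SENSORS = {
    "Full frame": (36.0, 24.0),
    "APS-H (Canon)": (27.9, 18.6),
    "APS-C (Sony/Nikon/Fuji)": (23.5, 15.6),
    "APS-C (Canon)": (22.3, 14.9),
    "Four Thirds": (17.3, 13.0),
}

for name, (w, h) in SENSORS.items():
    # Print crop factor alongside area so the formats can be compared.
    print(f"{name}: crop {crop_factor(w, h):.2f}x, area {w * h:.0f} mm^2")
```

A multi-aspect sensor or a video crop mode uses a different active area, so plugging in the active width and height for that mode (if you can find them) would give the effective crop for that mode.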

-

Hopefully Panasonic starts coming clean about the future of M43, and especially the GH6. If it's still on, it should be future proofed, like the GH5, for another 3-4 years. Full V-Log, better low light by 1.5-2 stops, 4k at 120-180fps, at least 1.5 stops more video dynamic range, way better video autofocus (PDAF finally), 6k at up to 60p, 14-bit photo, 3.5-inch LCD, much lower lag on HDMI. (Slightly bigger sensor?)

-

Wow. Amazing. That's superb news.

-

I was also curious about the lag on HDMI cables, on both ILCs and TVs (especially as compared to SDI). It appears the lag is not due to the HDMI cable (or standard). It's due to the processing before the feed. Which probably means that if camera makers don't process the output going to the HDMI, and if the hardware driving the HDMI is good enough, there really shouldn't be much lag (not enough to be noticeable). I wish Panasonic would look into this for the next ILC (GH6?), as should all other ILC makers.

-

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

sanveer replied to Andrew Reid's topic in Cameras

Thanks @Andrew Reid and @Anaconda_ The menu is extremely clean, and the screen appears larger and more square than regular LCDs. I guess the next level of the ILC video wars is beginning. It almost feels like the GH5 announcement 3.5 years back. I guess once the Sony A7S III specs are announced, too, people may start feeling the full thrill. It's Video ILC love in the time of Corona.

-

Canon 9th July "Reimagine" event for EOS R5 and R6 unveiling

sanveer replied to Andrew Reid's topic in Cameras

What do the 8k and 4k D and U stand for?

-

Interesting. I am wondering whether a larger LCD would have been a better idea. Or using that money for mini XLR and better heat management, and cost cutting perhaps, since the new sensor and the redesigned body for the 10-bit video are already going to push the costs up substantially, along with the other features.

-

Whoa. That's over 4k resolution on a tiny EVF. I wonder what the refresh rate would be? Edit: I saw that it's dots, not pixels. 4 dots make up a single pixel. So yeah, much less, but still almost double what's in the best ILC EVFs available right now.

-

It now gets up to 20 hours of life from 4 x AA batteries. If it has so many inputs, could monitoring have been made easier? Maybe that's on the app(s)?

-

Is there any proof you've done any manner of filmmaking, professional or otherwise?

-

Dynamic range is how much information you can see in the shadows and highlights. All of that may not be usable in post, and depending upon the signal-to-noise ratio, it could vary greatly. Exposure latitude is how much of that you can further push in post without degrading the image enough to make it unusable. It has to do with the sensor and, more importantly, the codec bit depth. A 14-bit codec would always be a (little) better than a 12-bit one, a 12-bit one would be better than a 10-bit one, etc. I am guessing the placement of middle grey would also govern the latitude, and whether the highlights or the shadow information are better protected. Sensor size, to some extent, would also govern how much latitude is available in the final image. Please correct me if I am wrong.
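To put rough numbers on the bit depth part, here is a small sketch that just counts how many code values per channel each bit depth gives you. It only shows the arithmetic (2 to the power of the bit depth); it assumes nothing about any particular codec or camera, and more code values does not by itself guarantee more usable dynamic range or latitude, since noise and the encoding curve matter too. The function name is made up for this example.

```python
def tonal_levels(bit_depth: int) -> int:
    """Number of distinct code values per channel at a given bit depth."""
    return 2 ** bit_depth


baseline = tonal_levels(10)
for bits in (10, 12, 14):
    levels = tonal_levels(bits)
    # Compare each depth against 10-bit to show how quickly levels grow.
    print(f"{bits}-bit: {levels:,} levels per channel "
          f"({levels // baseline}x the 10-bit count)")
```

Each extra 2 bits gives 4 times as many levels to spread across the same range, which is one reason heavier grading tends to hold up better on higher bit depth footage.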

-

Remember the infamous Michael Woodford case, of him exposing the over US$1.7 billion scam at Olympus, involving fake auditing and sequestering money (a euphemism for illegal wire transfers to the Cayman Islands, among other places)? It appears that Olympus probably never recovered from that, because when the scam was exposed, they had to isolate one of their 3 businesses. So it was probably prudent to let their camera imaging business bite the bullet. Maybe Olympus was just a sinking ship. It may have merely been a question of when.

-

The IBIS may be common or similar for most Japanese companies, including the one in Fuji (which had similar tech, but smaller sized components, since it sports larger sensors). I am guessing Olympus may have been licensing it to some of them. True. Though Olympus has Full Frame lens patents, which they could have used for the L-Mount Alliance. Except, perhaps, they would have to figure out which lenses would be covered by Sigma and which by Olympus (?).