
Everything posted by Hans Punk

-

That is more for a violent camera shake (not subtle gate weave)... the mag is just a good place to grab onto! I heard of a music video where the director got a bit overexcited and actually tore the mag off doing this.

-

Yeah, HFR and HDR demos are incredible when showcased properly. Through work I've recently been to a few private demos and also seen some unreleased laser hardware and screens in Germany (very impressive). I just fear that by the time HDR hits consumer TVs, the implementation may be hobbled by default processing of non-HDR content that most consumers just accept as 'normal', but that is nowhere near how the filmmaker intended it to be shown (like the example pic in my previous post). It will be that awkward transition period where things take time to settle before all content can be displayed as intended. It will be fine; I just hate the hype and bullshit when anything hits the consumer market... remember 3D TVs, anyone? I have much higher hopes for the next generation of theater projection. The upcoming Avatar films will probably be a considerable driving force for the next roll-out of projection upgrades worldwide, if Jim Cameron succeeds with the HDR/HFR glasses-free 3D solution he is ultimately aiming for.

-

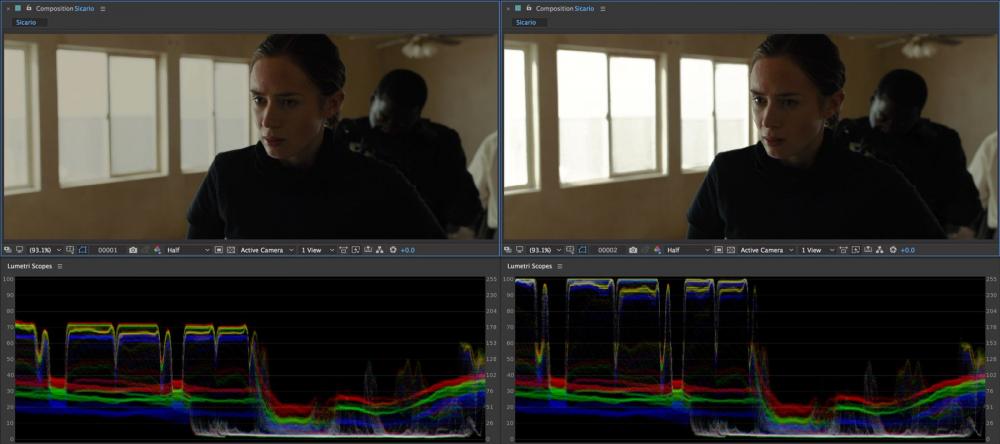

Yes, Red Giant has strengths and weaknesses in its collection. I must admit I mostly use a wiggle expression for light post handheld moves (often 2 instances offset, with manual keyframes added to a parent null), or shoot my own handheld move with tracking markers on a wall, then track it and apply that to the footage. Misfire has a couple of good settings that look very good when dialed down and layered at low opacity... but again, it is all about subtlety and layering multiple elements. Yes, separating channels for grain is the way to go... analyzing real grain in its separate channels is the best way to mimic it digitally, or to set the correct intensity if using overlay grain footage. HFR is inevitable, I think, and I'm slowly warming to the idea, as soon as it gets used correctly on an immersive film project. Let's see if Avatar 2-100 has it, forcing theaters to upgrade to HFR 3D projection systems, hopefully without a 'half-lamp' option for brightness. HDR is what concerns me at the moment... it seems to be more of a required delivery format for the next-gen HDR-capable streams from the likes of Netflix. It looks like it may be the new 'motion smoothing' curse that people hate... but worse. Looks like many dynamically lit scenes in films will have forced highlight recovery turned up to 11, causing muddy and crap results. Example of an actual muddy HDR stream vs what Deakins shot and Mitch Paulson graded. Frightening.
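For anyone wanting to try the per-channel grain analysis, here is a minimal sketch. It assumes you have sampled pixel values from an evenly lit, defocused patch of a real film scan, so that most of the variation is grain rather than image detail; the sample values are made up for illustration. The standard deviation of each channel then gives a rough target intensity for matching digital grain per channel.

```python
"""Sketch: estimate grain strength per colour channel from a flat patch.

Assumption: `patch` is a list of (r, g, b) floats in 0..1 sampled from an
evenly lit, defocused area of a film scan, so variation is mostly grain.
"""
from statistics import pstdev


def grain_per_channel(patch):
    """Return the population standard deviation of each channel."""
    reds = [p[0] for p in patch]
    greens = [p[1] for p in patch]
    blues = [p[2] for p in patch]
    return {"r": pstdev(reds), "g": pstdev(greens), "b": pstdev(blues)}


# Hypothetical sample values, not taken from a real scan.
sample = [(0.50, 0.50, 0.50), (0.52, 0.51, 0.56), (0.48, 0.49, 0.44)]
print(grain_per_channel(sample))
```

In practice you would sample many more pixels and compare the numbers against the same measurement taken on your grain overlay or plugin output.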

-

Totally agree. The human brain has become so attuned to audio and visual input that lots of research is still to be done. Doug Trumbull's research into the physiological effects of higher frame rates, done many years ago, is particularly interesting, as it concludes that a very high frame rate (way higher than the disastrous 48fps Hobbit) has a truly immersive effect on the viewer when projected at the correct brightness. I suspect the growing generation of gamer kids may be more accommodating of the mass adoption of high-fps movies in the future. With the correct implementation, this could well be the future of filmmaking and presentation. Then future kids will probably want to apply motion blur to footage to give it a nostalgic filmic feel. That is maybe worth remembering: modern film is still clinging (rightly or wrongly) to established rules of frame rate and presentation. Silent 16fps black and white film used to be the cutting edge back in the day... so it is probably just a matter of time until we go through another 'advancement' of similar impact.
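A rough way to see why high frame rates look so different: with a rotary shutter, each frame's exposure time (and therefore how much motion blur it carries) is (shutter angle / 360) / fps. The sketch assumes a 180 degree shutter at every rate, which is a common convention rather than anything stated above; it just shows how quickly per-frame blur shrinks as the frame rate goes up, which is the blur those future kids would have to add back.

```python
def exposure_time(fps, shutter_angle=180.0):
    """Per-frame exposure time in seconds for a rotary shutter.

    exposure = (shutter_angle / 360) / fps
    """
    if fps <= 0 or not 0 < shutter_angle <= 360:
        raise ValueError("fps must be positive and angle in (0, 360]")
    return (shutter_angle / 360.0) / fps


# Assumed 180 degree shutter for every rate (illustrative only).
for fps in (16, 24, 48, 120):
    t = exposure_time(fps)
    print(f"{fps:>3} fps @ 180 deg -> 1/{round(1 / t)} s")
```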

-

The dirty little secret is that everything about film is fake. Film projection is itself a magic trick... tricking the brain into thinking that a rapid succession of still images is continuous motion. It is only the brain that interprets this as the experience of motion within 'motion pictures'. I totally see some merit in introducing old analogue artifacts from the film days into digital cinema, but I think the key is to be subtle about it if applying them at the post stage. I think that, for some, part of the 'connection' to an immersive viewing experience is a reminder that what is being viewed has gone through a 'process'. What were once unwanted visual artifacts are now welcome visual cues of the 'good old days of film'. A comparison could be the trend of anamorphic flares being provoked so heavily in films these days. When these lenses were first used in cinema, any flare was considered far too distracting, an obvious technical error to be either cut out or suppressed as much as possible. It is only in hindsight, after a technical 'advancement', that many analog artifacts start to carry positive or nostalgic associations for people. I have some old Hi8 and MiniDV footage from years ago; it is now the new Super 8 in terms of provoking nostalgia. It looks quite awesome now because it is so detached from the look of modern digital video... but the real reason I like it now, I think, is that it is associated with memories of being a teenager. That is the power of the moving image, regardless of format. A grungy Grindhouse film effect is easy to achieve in post, but it quickly gets boring unless it is integral to the intended look. Unfortunately, most film look presets default to similarly heavy-handed settings that, when left as is, give a very 'fake' feel. Subtle layering of grain, weave and fading can give nice results that do not stand out as ugly, but help make the image more akin to the 'warm' feel of older film.
I often use real film scans of grain to overlay digital footage, and there is nothing wrong with that... it is dialed down very low and often layered and offset 2-3 times to give the image a near-invisible bit of dancing texture that helps take the clinical feel away from digital, and also adds a sense of sharpness to shots where focus was not tack sharp. Adding post effects such as weave/grain/speckle/dust is totally fine in my book if it complements the visuals and does not distract from the subject. I'd say at least 70 percent of the 'film look' in digital cinema these days is dictated by lens choice and the remaining 30 percent is down to the grade and finishing. Modern multi-coated lenses tend to bake in a contrast and colour that is very hard to convincingly adjust afterwards, even when dealing with footage from high dynamic range cameras. This is why so many vintage cine primes are still being used on features, giving a more 'organic' feel to the image at the source of the capture process. I think too many people assume that they can take digital footage shot with kit lenses and accurately emulate film in post with one or two simple clicks of a LUT. The truth is that you need to consider lens choice and exposure, and have enough latitude in your camera 'negative' to first colour correct and THEN start to grade and finish the image. The irony is that just before film started to die as the primary cinema acquisition and projection method, it was hitting the pinnacle of impeccably clean, sharp and pristine stocks that could deliver amazing results when combined with the flexibility of a modern DI. Motion picture cameras themselves have hardly changed in 100 years of design. Film was never a format that wanted the analog artifacts that many now seem to miss; it was always striving to be as clean and perfect as possible.
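Here is a minimal sketch of the "dial it down, layer and offset 2-3 times" idea, written to show the maths rather than replace a compositor. Assumptions not in the post: frames are 2-D lists of floats in 0..1, the grain plate is the same size as the frame and centred on 0.5 (neutral grey), offsets wrap at the edges, and the opacity and offset values are illustrative. An overlay blend leaves the image untouched wherever the grain is neutral grey, so only the grain's deviation from 0.5 shows through.

```python
"""Sketch: composite a film-grain plate over a frame several times at low
opacity, each copy offset, using an overlay blend.

Assumptions: 2-D lists of floats in 0..1, plate same size as frame and
centred on 0.5, offsets wrap around. Values are illustrative only.
"""


def overlay(base, blend):
    """Standard overlay blend for a single value in 0..1."""
    if base < 0.5:
        return 2.0 * base * blend
    return 1.0 - 2.0 * (1.0 - base) * (1.0 - blend)


def shifted(plate, dx, dy):
    """Return the plate shifted by (dx, dy) pixels with wraparound."""
    h, w = len(plate), len(plate[0])
    return [[plate[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]


def apply_grain(frame, plate, offsets=((0, 0), (3, 1), (1, 4)), opacity=0.1):
    """Overlay one offset copy of the plate per entry in `offsets`, each mixed
    back over the running result at `opacity`."""
    out = [row[:] for row in frame]
    for dx, dy in offsets:
        layer = shifted(plate, dx, dy)
        for y, row in enumerate(out):
            for x, base in enumerate(row):
                mixed = overlay(base, layer[y][x])
                row[x] = base + opacity * (mixed - base)
    return out
```

Keeping each copy at low opacity means every layer only nudges the image slightly, and the offsets stop the copies lining up into an obviously repeated pattern.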
Makers of vinyl records never wanted crackles and pops to be heard on a first play; it is only through the passage of time and the advancement of technology that these audible artifacts became nostalgic and added a layer of pleasant experience for the listener. When CD was introduced, the horrible compression of audio dynamic range made the same songs sound sterile and lifeless compared to vinyl (the technically inferior format)... but many gravitated to the 'crackle and pop' as an identifiable 'warm feel' artifact that they missed from the previous technology. I feel a similar thing may have happened with film. Film can technically outperform digital to this day, especially in overall latitude and handling of highlight roll-off, and especially when talking about large format... but the Alexa and Alexa 65 are pretty much there in terms of what traditionalist film people consider a 'filmic' image.

-

Yes, many presets default to settings that may not be ideal (often too strong). The trick is to play around with the settings to tune them to taste. Sometimes you can apply an effect to an adjustment layer, dial the opacity of that layer down, and apply copies of the effect to more adjustment layers in an offset fashion, so as to build up an influence that is subtle and does not look mechanically generated by the computer. The same goes for grain/dirt/scratches... subtlety is often key. As mentioned earlier by someone else, After Effects has a very simple expression called 'wiggle', and there are many good tutorials online that explain how to apply it. Also as mentioned here before, motion tracking real footage is an idea worth trying, but it is not necessarily a more realistic method unless you find a perfect range of motion that you can track and loop without the repetition being noticeable. Two adjustment layers with a small wiggle expression applied will often give a nice, random and subtle move that will nicely achieve the gate weave effect, or simply add a bit of life to locked-off shots. I used to work at a large Kodak facility, batch processing photographic film back in the day. Digital stills were a novelty back then, yet we already had digital-to-film scanners to give customers physical prints of their images. It was that strange period when Kodak could not understand (or did not want to believe) where the market was going. Within two years of my being there, the plant closed and everything went digital. I then had a short stint at a cine lab, processing and scanning film rushes for features and commercials... the process and machines were very similar. Then I bought my first proper camera, an Arri 2C 35mm. Using connections I'd made at work to get stock, I could actually shoot 35mm film for short projects and get it telecined to tape for about the same cost as hiring an HD camera back then.
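To illustrate the two-layer wiggle approach outside After Effects, here is a sketch that imitates the feel of wiggle(): random targets placed a set number of times per second, joined with smooth cosine interpolation. This is not Adobe's actual noise algorithm, and the frequencies, amplitudes and seeds are illustrative assumptions. Summing a slow, slightly larger wiggle with a faster, smaller one gives the layered, less regular sway described above.

```python
import math
import random


def make_wiggle(freq_hz, amp_px, seed, duration_s=10.0):
    """Return f(t) -> offset in pixels, valid for 0 <= t < duration_s.

    Random targets in [-amp, amp] are placed `freq_hz` times per second and
    joined with cosine interpolation. Imitates the feel of After Effects'
    wiggle(); it is not Adobe's algorithm.
    """
    rng = random.Random(seed)
    n = int(duration_s * freq_hz) + 2
    targets = [rng.uniform(-amp_px, amp_px) for _ in range(n)]

    def f(t):
        pos = t * freq_hz
        i = int(pos)
        frac = pos - i
        w = (1 - math.cos(frac * math.pi)) / 2  # smooth ease between targets
        return targets[i] * (1 - w) + targets[i + 1] * w

    return f


# Two small, independent wiggles summed, like two adjustment layers.
layer_a = make_wiggle(freq_hz=2.0, amp_px=0.6, seed=1)
layer_b = make_wiggle(freq_hz=5.0, amp_px=0.3, seed=2)

fps = 24
weave_x = [layer_a(n / fps) + layer_b(n / fps) for n in range(fps * 4)]
```

Keeping the amplitudes below a pixel or so keeps the result in 'barely noticeable' territory; in After Effects you would get a similar layering with two wiggle expressions on separate layers.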
Now I've transitioned to post-production work (visual effects) and often try to incorporate the analogue methodology whenever I can. Most of what people think they love about the 'film look' is often too nostalgic in my opinion... the artefacts of bad handling/transfer/age of print etc. The real power of film is its latitude and colour rendition when selecting negative and print stocks. Thankfully digital has reached a very respectable stage, where good lens choice and a talented colourist can keep most film traditionalists happy. Besides, if you shoot film these days, 99.9% of the time your film is projected digitally. Film projection, and the degradation of prints through many cycles of showings in theatres, is what had a huge influence on viewers' idea of the 'film' effect.

-

Sounds like you are describing 'gate weave'. When a film frame passes through a projector, the sprocket holes (perforations) outside the image area control the vertical motion of the frames through the projector. Over time, a film can become warped or the perforations can wear, causing the frame to appear to move side to side. It can also be caused by a worn mechanical movement in the camera not keeping perfect registration with the perforations on the negative, or by the film itself being warped by heat. A good plugin for exactly this (and other old film effects) for Premiere/AE is Red Giant's Misfire: http://www.redgiant.com/user-guide/universe/misfire/ or you could see if you can customise the parameters in this free camera shake plugin: http://premierepro.net/editing/deadpool-handheld-camera-presets/ Gate weave is primarily a horizontal motion (like a soft sway left and right) that can have different speeds. You could possibly keyframe your clips with Bézier curves to mimic the effect, using Premiere's built-in Motion controls. The most 'scientifically accurate' way to achieve the effect would be to shoot on film with a worn movement in a camera that does not have double-claw pull-down... or you could purposely introduce movement during the telecine scan.
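The keyframed Bézier approach can be sketched like this. It assumes each keyframe has flat (zero-velocity) handles, in which case a 1-D cubic Bézier segment reduces to a smoothstep ease; Premiere's own handle behaviour can differ, so treat this as an approximation. The keyframe times and pixel offsets are hypothetical, spaced unevenly so the sway has different speeds.

```python
def bezier_ease(p0, p1, s):
    """1-D cubic Bezier from p0 to p1 with flat handles (controls = p0, p1).

    Algebraically this equals p0 + (p1 - p0) * (3s^2 - 2s^3), i.e. smoothstep.
    """
    c0, c1 = p0, p1  # flat handles: control points sit on the keyframe values
    u = 1.0 - s
    return u**3 * p0 + 3 * u**2 * s * c0 + 3 * u * s**2 * c1 + s**3 * p1


def sway_at(t, keys):
    """Horizontal offset at time t from sorted (time_s, offset_px) keyframes."""
    if t <= keys[0][0]:
        return keys[0][1]
    for (t0, x0), (t1, x1) in zip(keys, keys[1:]):
        if t <= t1:
            return bezier_ease(x0, x1, (t - t0) / (t1 - t0))
    return keys[-1][1]


# Hypothetical keyframes: left/right sway with uneven timing (different speeds).
keys = [(0.0, 0.0), (0.4, 0.8), (1.1, -0.6), (1.5, 0.5), (2.3, -0.7), (2.8, 0.0)]
```

Sampling `sway_at(n / 24, keys)` per frame gives the horizontal offset to apply; the easing stops the motion from snapping at each keyframe.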

-

@EnzoNgu Maybe look into matte box ring adapters combined with a clamp-on filter mount. If you can measure all the dimensions needed, I'm sure RAF would be able to make something up for you, or modify something else he's already made. IMHO, it's worth getting something decently constructed to properly secure that nice piece of Zeiss: https://www.rafcamera.com/adapters/for-matte-boxes/95mm-diameter?p=1

-

It indeed looks like either part of an optical printer, or something reconfigured to or from print duplication use. I've seen this lens being re-sold 2 or 3 times by different sellers without much time between auctions, which raises alarm bells as to its real practicality for filmmaking (and where is footage of it at infinity and at variable stops?). No doubt the optics are top notch, but I'd want to be assured that it can hit infinity focus with that aperture inside (adding space between the optics), since I presume the lens was always intended for close-up duplication, as part of a specific optical printer or telecine machine. I suspect that the variable aperture inside the lens is not going to make the image much sharper when coupled with a taking lens, but rather cut the light transmission to the taking lens and dramatically increase vignetting. In a nutshell, if it was the wonder lens it is supposed to be, where is example footage of it resolving infinity focus, and of its results when stopping down with its internal aperture? EDIT: Found an expired eBay auction that sold for $250 that confirms my hunch... "This is is multiple lens assembly that was used at Deluxe Film Laboratories in Hollywood for 35mm anamorphic conversion to flat formats for theatrical trailers. It should not be confused with camera or projector lenses. Printing lenses are ulta sharp intermediate lenses designed to resolve 35mm camera original high resolution color negative without distortion. The assembly consists consists of three separate lenses, each of which may be separated for individual use: Isco Lens VII - Base Lens; Isco Anamophis Printing Lense 2x with Manual IRIS Ring (Like F-Stop); Isco Lens III - Output Lens" I highly doubt the Isco printing lens can hit infinity without stopping it down to a crazy small aperture. You can see from the original configuration that it is basically used at near-macro focus distances, as part of an optical conversion chain.
If it could indeed be used for filmmaking, I would be interested to hear how on earth you would achieve infinity focus. I think the current seller on eBay is a member of this forum, @Hunterj11; perhaps they can shed some light on this lens?
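The infinity-focus worry can be sanity-checked with the thin-lens equation, 1/f = 1/u + 1/v (u = subject distance, v = lens-to-image distance). For infinity focus v = f; for 1:1 copying, u = v = 2f, so a lens set up for near-macro printing sits roughly one extra focal length away from the film. This is a simplified sketch: real printing lenses are thick multi-element designs, and the 50 mm focal length in the usage is a hypothetical value, not the Isco's actual spec.

```python
def image_distance(f_mm, subject_mm):
    """Thin-lens image distance v from 1/f = 1/u + 1/v (u = subject distance)."""
    if subject_mm == float("inf"):
        return f_mm
    if subject_mm <= f_mm:
        raise ValueError("subject must be farther than one focal length")
    return 1.0 / (1.0 / f_mm - 1.0 / subject_mm)


def extension_needed(f_mm, subject_mm):
    """Extra lens-to-film distance beyond infinity focus (v - f)."""
    return image_distance(f_mm, subject_mm) - f_mm


# Hypothetical 50 mm lens: infinity vs 1:1 copy distance.
print(extension_needed(50, float("inf")))  # 0 mm extra
print(extension_needed(50, 100))           # about 50 mm extra at 1:1
```

If a lens is physically built around that extra spacing, it cannot simply be refocused to infinity; stopping down only adds depth of field around a focus plane that is still in the wrong place.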

-

Assuming you mean the 5D Mk III? If so, I can reply with what I've personally found so far; other shooters here no doubt have more insight.

It depends on resolution settings and the detail of the scene being recorded. On the most recent high-resolution crop mode build, the clever MLV Lite compression adjusts the data rate to squeeze out as much record time as possible, but if your scene has a high amount of detail it can spike the data rate and restrict record duration. Lexar or Komputerbay CF cards are most commonly used; 1000x or 1066x Komputerbay cards have never personally let me down and are pretty cheap. I can shoot up to 3.5K continuous (scene detail and aspect ratio dependent) on Komputerbay cards, with shorter record durations at higher resolutions. 1080p raw is pretty bulletproof on the 5D Mk III and is the easiest to shoot with; it is the higher resolutions that always have quirks or limitations. That is to be expected when you realize what the camera is doing to enable raw video recording (which Canon never intended to be possible).

It can be made very easy using the MLVFS workflow. Basically, it acts as a virtual drive that presents the MLV files as .dng files with a Resolve-compatible header... no transcodes needed. Search MLVFS in the ML forum for instructions to set it up and run it. MLV Dump also works great, with a very simple operation to 'virtually' convert MLV files to .dng. There are many software options for both PC and Mac to process or view the camera files... people seem to have different workflows, tips and tricks, which are also evolving with the firmware.

Short answer is no. You can get lower-res, choppy frame rate playback with the non-crop 3.5K/4K ML builds. The most recent high-resolution builds have live view seriously choked or purposely broken (when in crop mode) so that enough processing power is freed up for the higher-resolution recording (from my understanding).
If shooting 1080p raw, you can also record an .h264 proxy file at the same time, then select the proxy (as normal) for real-time playback. This is a good option for confidence or client playback, as well as a backup if shooting an interview, for example. The audio from the .h264 can also be useful as scratch sound to help sync the raw video to a separate sound recording.

Yes. On the 5D Mk III you can monitor a 'mirrored' live view display from the camera, or the HDMI output will act as a separate (clean) monitor feed. The options depend on which of the two ML firmware builds you choose to run. With the new high-resolution crop mode, I personally use the 123 build, which lets me use the rear camera LCD live view as the framing reference (non-real-time live view)... and the HDMI feed to my monitor displays a zoomed-in (but real-time) preview, which gives a nice punch-in view to use for focus. It's a quirky workaround, but it can be made to work quite well.

Lots of gotchas... and part of the fun is to work them out, or creatively work around them. Developments and tweaks are continuously being made to the ML firmware builds, so what is an issue today may have a solution tomorrow. If you can code, you can become a developer and get a feature tested, approved and then committed into a working build for others to test and use. It is a cool community of people that have managed to make great things come out of Canon DSLRs. To use ML and get the most out of it, some research and reading of the ML website/forum is required... that is not necessarily for everyone, and it depends on how much time you can afford to spend. The ML builds and forum-developed workflows are so much more refined than a few years ago, so it is very accessible to those who are willing to work with something that does not necessarily come 'ready to run' out of the box.
Any detailed questions are best searched on the ML forum, virtually EVERY question has already been asked and answered there, as well as detailed explanations as to how to get your camera up and running pretty quickly. http://www.magiclantern.fm/forum/
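Why record time depends on resolution and scene detail comes down to simple arithmetic: uncompressed raw data rate = width x height x bit depth x fps / 8 bytes per second. The sketch below adds a scene-dependent compression ratio (standing in for what MLV Lite's lossless compression achieves on a given scene) and a deliberately simplified buffer model: if the card cannot keep up, the camera's buffer fills at (data rate - write speed) until recording stops. The write speed, buffer size and compression ratios are hypothetical parameters, not measured 5D Mk III or card figures.

```python
def raw_data_rate_mb_s(width, height, bit_depth, fps, compression_ratio=1.0):
    """Raw video data rate in MB/s (1 MB = 1,000,000 bytes).

    compression_ratio > 1 models lossless compression; the real ratio varies
    with scene detail (hypothetical parameter).
    """
    bytes_per_second = width * height * bit_depth * fps / 8
    return bytes_per_second / compression_ratio / 1_000_000


def record_seconds(rate_mb_s, write_mb_s, buffer_mb):
    """Simplified model: continuous (inf) if the card keeps up, otherwise the
    buffer fills at (rate - write) MB/s until it is full."""
    if rate_mb_s <= write_mb_s:
        return float("inf")
    return buffer_mb / (rate_mb_s - write_mb_s)


rate = raw_data_rate_mb_s(1920, 1080, 14, 24)  # about 87.1 MB/s uncompressed
print(round(rate, 1))
```

A detailed scene compresses less, so its effective rate rises; once it exceeds what the card can sustain, record time drops from continuous to however long the buffer lasts.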

-

Hackers inject Mac virus into Handbrake Video Encoder

Hans Punk replied to Andrew Reid's topic in Cameras

Looks like VLC and other media players might have an open back door for hackers to exploit: Subtitling systems contain 'widespread' security threat

One unit per device (so in my case, 4). If syncing one camera to one external audio recorder, then you would need 2. I'm not affiliated with the company, so I have nothing to gain from pushing the product, but they are very good in my experience and provide sync very effectively. The price point is very reasonable considering the alternative solutions on the market. You can see the wide range of sync cables on the product page to check which devices/cameras they support (virtually everything with an audio/line-in input). https://shop.tentaclesync.com/
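Roughly how timecode sync lines things up once every device has a synced unit: each clip carries a start timecode, and the offset between two clips is just the difference in frames. This sketch assumes non-drop-frame timecode at an integer frame rate (25 fps here), and the start timecodes are made-up examples; in practice the sync software does this step for you.

```python
def tc_to_frames(tc, fps=25):
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError("frame field out of range for this fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def sync_offset(camera_start_tc, recorder_start_tc, fps=25):
    """Frames after the camera clip's start at which the recorder clip should
    be placed (negative means the recorder started first)."""
    return tc_to_frames(recorder_start_tc, fps) - tc_to_frames(camera_start_tc, fps)


# Hypothetical clip start times from a camera and an audio recorder.
print(sync_offset("10:00:00:00", "10:00:02:12"))  # 62 frames
```

This is also why you need one unit per device: the maths only works if every clip's timecode comes from the same shared clock.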

-

Nope, but they will work with NX. I've used them to sync an Amira and 2x 5D3s to an audio recorder on a doco... they are very versatile little things that are virtually camera agnostic.

-

Here are a few Iscorama Pre-36 shots thrown together for those interested. I took my camera and Rama to a local car meetup with this thread in mind. My injured back prevented me from getting the low-angle dynamic shots and close-ups I wanted, but you get the idea. A sunny day with lots of chrome is always a good test to see if a lens has any CA or highlight/contrast edge bloom issues. There are some fine aliasing/line-buzzing artefacts due to Warp Stabilizer being used on almost all shots (no tripod, and I was high on painkillers). I'm sure the Bolex can match the Iscorama (perhaps beat it) on sharpness, but as I've always noticed with the Rama, it tends to be simply as sharp/clean as the taking lens you use with it. My other Kowa lenses with Core DNA attached could have achieved similar results, but not at such a wide aperture on a 58mm on FF, and definitely not in such a compact and light form factor as the Iscorama.

-

Iscorama Pre-36 with Helios 44 @ f2.8 (oval aperture) on Canon 5D3

To get that sharp on my (sharpness-tweaked) Kowa Inflight or Kowa B&H with Core DNA attached, I'd need to stop down to at least f5.6. Sharpness is indeed often overrated, but it's sure nice to have and can always be softened very nicely in Resolve. The Iscorama still has the perfect balance of sharpness with an organic feel due to its vintage coating. More modern Cinelux-type lenses are as sharp, yet look very clinical (to my eyes at least).

-

Some really nice compositions in there... you have a photographer's eye.

-

Any tie-down/ratchet straps will work... they can be found at most DIY places. Magic arms with clamps at both ends are good for support, and C-stand knuckles and rods can make lots of funky rigs possible... but that may be overkill for your needs. Maybe try the tripod and straps first as a test, as 2-3 decent straps will cost under 20 quid. Put some carpet or padding between the seat and tripod if cranking the straps super tight (to stop any dents/rips in the seat upholstery). If that solution does not look practical, maybe look into that headrest mount.

-

Either way, I would test as much as you can before the shoot. Unless you're monitoring wirelessly away from the car, you won't be able to see 'live' the amount of shake or vibration that gets picked up. It's well worth testing and reviewing footage to make sure any rig solution is working. You should do well at minimising shake if using a very wide lens like you mentioned. Strapping a hefty lump (tripod and head) firmly to a large fixed point (the passenger seat) is going to give better stability... it may not look as pretty, but it should do the job. Any fixed single clamp/bracket/arm is often perfect for GoPro-weight cameras, but anything heavier tends to make the rig vibrate. Ideally you may want to ratchet-strap the collapsed tripod to the rear of the passenger seat and maybe use additional straps/clamps to door handles or other fixed features inside the car, as long as they are not in view of your lens.

-

Did something similar a while back for a passenger POV and had no time to pre-rig anything. I ended up removing the passenger seat headrest and ratchet-strapping the tripod (folded closed) to the back of the passenger seat. The tripod head and camera were then free to occupy the space where the headrest used to be. A bonus of the setup was that the tripod head could also be panned and tilted by an operator in the back of the car, approximating the head turns of our passenger's POV during a car chase. For your needs it could be a similar setup... but with an extension or longer plate on the tripod to give the camera a bit more overhang, closer to where the passenger's head would be. Magic arms are good, but not the best for camera support on their own if the car is moving... they tend to vibrate. Magic arms (used in multiples) are great for additional support and cranking down a grip for 'on the fly' rigs like this. If the car is going to be moving, it is generally best to strap down a hi-hat or tripod solution where multiple points of support from straps and clamps can be employed... something where vibration can be minimised as much as possible.

-

I just got a reply from Brian Berkey (very nice guy). He said he does not have the exact model number for the full spec sheet to hand, but here are the important details for wiring. The Berkey motor comes with 4 bare wires for the user to solder to most common 4-pin connectors.

NEMA 14, 1A, 2.7V
Black: A+
Green: A-
Red: B+
Blue: B-
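When soldering those four wires to a connector, the main thing is keeping each coil's pair together (A+/A- and B+/B-); the pin order on the connector itself depends on your controller, so check its documentation. The sketch records the colour mapping from Brian's reply and groups it by coil. It also makes a rough Ohm's law estimate of winding resistance, assuming the 2.7V figure is the rated voltage across one winding at the rated 1A.

```python
# Wire colours as reported by Brian Berkey for the Berkey NEMA 14 motor.
BERKEY_WIRES = {"Black": "A+", "Green": "A-", "Red": "B+", "Blue": "B-"}


def coil_pairs(wires):
    """Group wire colours by coil letter, e.g. {'A': [...], 'B': [...]}."""
    pairs = {}
    for colour, phase in wires.items():
        pairs.setdefault(phase[0], []).append(colour)
    return pairs


RATED_VOLTS = 2.7
RATED_AMPS = 1.0
# Ohm's law estimate of winding resistance per phase (assumption): R = V / I.
phase_resistance_ohms = RATED_VOLTS / RATED_AMPS

print(coil_pairs(BERKEY_WIRES))   # {'A': ['Black', 'Green'], 'B': ['Red', 'Blue']}
print(phase_resistance_ohms)      # 2.7
```

A multimeter across Black/Green and Red/Blue should show continuity within each pair and none between pairs, which is a quick way to confirm the mapping before soldering.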

-

Awesome...thanks Jim!

-

Cool. Pretty sure the Berkey motor is 12V 1A... but I think I'm running it at 6V with no torque issues. I've contacted Brian Berkey to get a proper spec sheet. Through luck, I wired a custom cable to integrate it with my Dynamic Perception NMX controller and it works perfectly. I can't really tell if the Berkey motor is massively better than your solution, but I know that a lot of people in the motion control world use them because they are compact and can be configured in many ways... I can get the gear within 1mm of the camera body when using pancake lenses or (as seen in the following pictures) when I remount lenses that have a focus gear very close to the lens mount. Also, if integrating it into your controller and encoder is an option, there are a few owners out there with Berkey motors on custom live-action/animation motion control rigs who would also appreciate encoder-driven control that can act as a slave to rotational input.
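Encoder-driven 'slave' control boils down to converting the encoder's position into a target motor position and stepping by the difference on each reading. This is a hypothetical sketch: the encoder resolution, 200 full steps per revolution (typical of 1.8 degree steppers, not confirmed for the Berkey motor), 16x microstepping and 1:1 gearing are all assumed values.

```python
def encoder_to_steps(encoder_counts, encoder_cpr, motor_steps_per_rev=200,
                     microsteps=16, gear_ratio=1.0):
    """Map an encoder position (counts) to a target motor position (microsteps).

    All defaults are assumptions: 1.8 degree motor, 16x microstepping, 1:1 gears.
    """
    revs = encoder_counts / encoder_cpr
    return round(revs * motor_steps_per_rev * microsteps * gear_ratio)


def follow(encoder_positions, **kwargs):
    """Yield the step delta the motor must move for each new encoder reading."""
    current = 0
    for counts in encoder_positions:
        target = encoder_to_steps(counts, **kwargs)
        yield target - current
        current = target


# Hypothetical 1024-count encoder turned half a revolution, then a full one.
print(list(follow([0, 512, 1024], encoder_cpr=1024)))  # [0, 1600, 1600]
```

Working from absolute targets rather than accumulating deltas means rounding errors do not build up as the focus wheel is turned back and forth.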
