Posts: 957


Everything posted by maxotics

-

In the article it says it has no internal 4K. I wonder if Sony is playing coy here, maybe offering it as a paid add-on like on the X70. Yeah, that pleased a lot of people! Why call it an RX0? Is it part of their consumer line? A camera for many different applications will be a jack of all trades, master of none. It's also similar to their snap-on phone cameras, which failed miserably. And why does the $360 FDR-X3000 have 4K and this thing wouldn't? For the past 2 years Sony has been pitch-perfect. Not looking good.

-

You're probably looking under HFR. They don't list it because at this point Sony takes 4K for granted in all of their cameras. It's essentially a stripped-down RX10/RX100.

-

It can record 4K internally, as far as I know, but there's a 5-minute limit per clip in 4K, or something like that.

-

Yes, once one actually looks at RAW frames from Canon cameras, as you will with ML, one sees those focus pixels in the frames, which lead to the "pink pixel" problem. There can never be a perfect camera! I suspect that Canon adds more red to their filters, which reduces dynamic range but gives a more pleasing image for many people, whereas Nikon goes for wider dynamic range with neutral colors, which aren't as "psychologically" appealing. Since even 4:2:2 video shares one color (chroma) sample between every two horizontal pixels, it's no wonder that dual-pixel effects on color are not noticed in video. What I wonder is whether dual-pixel autofocus, because it requires pixels made slightly differently (my guess), makes it more difficult to get 4K, and definitely 4K with focus peaking (which is Nikon's problem). Bottom line: for Nikon to keep its lead in still-photography dynamic range, I can't see how it can implement Canon's video compromises, EVER. Mirrorless may get them to video features similar to Canon's, but it will be at the expense of their still-photography technology, which one can best appreciate by shooting with a D810/D850.
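For reference, here is a small Python sketch of what the J:a:b notation means in samples per frame. It uses the standard definitions (4:2:2 halves chroma horizontally, 4:2:0 halves it both ways) and is plain arithmetic, not a model of any particular camera's pipeline.

```python
# Chroma samples kept per frame under common J:a:b subsampling schemes.
# Luma (Y) is stored for every pixel; only the two chroma planes (Cb, Cr) shrink.

# (horizontal divisor, vertical divisor) applied to each chroma plane
SCHEMES = {
    "4:4:4": (1, 1),  # full chroma resolution
    "4:2:2": (2, 1),  # one chroma sample per 2 pixels horizontally
    "4:2:0": (2, 2),  # one chroma sample per 2x2 block of pixels
}


def _ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def chroma_samples(width: int, height: int, scheme: str) -> int:
    """Samples in ONE chroma plane (Cb or Cr) of a width x height frame."""
    h_div, v_div = SCHEMES[scheme]
    return _ceil_div(width, h_div) * _ceil_div(height, v_div)


def pixels_per_chroma_sample(scheme: str) -> int:
    h_div, v_div = SCHEMES[scheme]
    return h_div * v_div


if __name__ == "__main__":
    for name in SCHEMES:
        print(f"{name}: {chroma_samples(1920, 1080, name):,} chroma samples per plane, "
              f"shared by {pixels_per_chroma_sample(name)} pixel(s) each")
```

So in 4:2:2 each color sample is shared by two pixels, and in 4:2:0 by four, while brightness detail stays at full resolution.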

-

Out of left field, you might get a Sony FDR-X3000 and a Rode VideoMicro, because the X3000 action cam has a MIC-IN. Its internal mic is pretty good too. The image stabilization is amazing. If anything, the camera is too small; I often don't pay attention and get crooked footage. You could look at it as a small audio recorder with 4K, or time-lapse, or slow-mo. You can put 10 of those in your pocket and still forget you have them. Here's a video with and without the Rode VideoMicro. As you can hear, it does very well at picking up sound in front of it, even with a lot of background noise. The camera has a tripod mount on the bottom, so I just attach the mic with a double-male 1/4-inch screw. BTW, I just happened to start working on a lens attachment for close-up filming, so if you get one, let me know and I'll send you a prototype.

-

Sorry! It's another "What camera?" question.

maxotics replied to Ricardo Constantino's topic in Cameras

Leave it to @cantsin to throw a wrench into the question: video RAW (or a ProRes equivalent) against 8-bit anything (even the A7S). If the OP uses stills only for the web (2K), then I agree with Cantsin that the BM should be on the list for sure. Looking at the question that way, the 7D with ML RAW is another option.

-

Musicians? Where most stuff happens in low light? I'm with @noone. If EVER there was a camera made for that world, it is the A7S. Why? There's only so much software engineering (noise reduction) that one can do around small pixel sizes. The tech is probably at its limit. The pixel pitch of the A7S is 8.4 microns; the GH5's is 3.3. Let me put this in perspective. You want to race two cars with the same transmission. Do you go with 8 litres or 3 litres? This isn't to say the GH series cameras don't have their strengths, like running all night, flexible software and working with inexpensive glass (which may be more important if you're recording whole shows). A7S... beg, borrow, or steal. Otherwise, any other full-frame.

-

I couldn't agree with you more! I mean, triple AMEN! I have never worked with a camera SDK that seemed like it was designed by even half-competent developers (unlike the main ML devs). Indeed, recently I wanted to control Sony cameras. Did I load up the SDK? NOPE. Been down that road, with unanswered questions on lifeless forums. So I installed the USB Camera Remote and used a library that basically simulates key presses on the application's interface. When it comes to SDKs, all the camera makers are pathetic.

Now this may seem like a tangent, but I want to prove a point. Adobe Premiere just revamped its whole TITLE-making process. THREW OUT THE WHOLE THING. Now there is a "Graphics" interface. When you create a title you get bountiful properties and methods in the Graphics pane. It's been built from the ground up. Should be perfect, right? Yet it has everything but the TEXT OF THE TITLE! In order to change the text of the title you have to click on the "Text" tool on the timeline. Is the text of a graphic a property of a timeline? WTF! It leaves me speechless! My brain freezes trying to comprehend how Adobe management could let something like that pass. It's a KLUDGE of monumental incompetence.

My 2 cents: there are very, very few software developers who have a real talent for programming, just like there are very, very few bloggers who have a talent for writing about cameras. The world runs on computers. There are still a fair number of managers at Canikon who weren't brought up on computers. In short, there is a chronic shortage of talent. If Adobe can't get enough good talent, what hope does everyone else have? Look at ML: of the 28,000 members, my guess is there are only 50 or so people with hard-core programming skill. And if there are more, they don't have the time. Put another way, I bet 8 out of 10 software guys at Canon don't even understand what the ML devs do. It's over their heads.

-

Consumer cameras are optimized for size and weight, which means they must compromise power somewhere. YES, Canon cameras can shoot RAW. Yes, RAW makes the camera more powerful. But NO, the cameras can't shoot RAW without risk of sensor or other electronics failure. The camera gets UNNATURALLY hot! NO, you can't shoot 10 minutes of RAW and then expect to shoot 100 normal photos on your trip to Italy (the battery will be dead). NO, you can't just use any memory card to shoot RAW (try explaining why to most people). Bottom line, un-crippling the camera creates risk, heat, battery strain, consumer confusion, etc., etc. Let me explain it another way. You're one kind of high-end user. To you, Canon is crippling the camera. There's another kind of user, "John Q. Public", who just wants the camera to work as they expect it to, as the camera is advertised. If you imagine any of your normal friends or family shooting RAW on a consumer camera, I think you'll see all the issues that will come up, and they won't blame their own lack of knowledge, but Canon. Most people who try RAW photography still end up in nightmare valley! No, Canon isn't crippling their cameras. Don't you think they'd love to advertise their cameras as having RAW? Say they set it to 5 minutes of shooting time only. They'd get lots of great camera press. Two days later their help desk would be swamped with calls and everyone would complain that they're crippling the camera at 5 minutes!!!! Or look at Samsung and H.265. Where's their "thank you" card for not crippling their camera with yesterday's CODEC? Nope, all they got for their troubles was people not buying the camera because they couldn't be bothered to install an extra piece of software! "Expectations lead to resentment." All successful businesses, like Canon, keep that in mind.
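To put the memory-card point in numbers, here is a rough Python data-rate estimate. The 1920x1080 frame, 14 bits per photosite and 24 fps are assumed example values (hypothetical, not a specific camera's spec), and the 24 Mbit/s comparison stream is likewise just an illustrative consumer bitrate.

```python
def raw_bytes_per_second(width: int, height: int, bits_per_sample: int, fps: float) -> float:
    """Uncompressed Bayer RAW: one sample per photosite, no compression."""
    bits_per_frame = width * height * bits_per_sample
    return bits_per_frame / 8 * fps


def mbit_to_bytes_per_second(mbit_s: float) -> float:
    """Convert a decimal Mbit/s bitrate to bytes per second."""
    return mbit_s * 1_000_000 / 8


if __name__ == "__main__":
    raw = raw_bytes_per_second(1920, 1080, 14, 24)  # assumed example values
    compressed = mbit_to_bytes_per_second(24)       # assumed consumer bitrate
    print(f"RAW: {raw / 1e6:.1f} MB/s, compressed: {compressed / 1e6:.1f} MB/s, "
          f"ratio ~{raw / compressed:.0f}x")
```

Under those assumptions RAW needs roughly 87 MB/s of sustained writing, about 29 times the compressed stream, which is why an ordinary card that handles normal video can fall over.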

-

Me neither! I remember when I first joined this forum everyone was looking forward to the next GH camera with a higher bitrate. What will the GH5 do now, 400? Years ago, a couple of posters would say something along the lines of, "The C100 has a great image so I don't care about bits or CODECs." I was watching "Abstract" on Netflix a while back, and the Roku was buggy, so it was showing the bitrate: it hovered around 19 Mbit/s. They're shooting on RED Epic Dragons with anamorphic primes, RAW I'd assume, at 16 GB a minute. But in the end, all I see is 19 Mbit/s. So the question is, if a camera can give you 19 Mbit/s and it LOOKS like "Abstract", what difference does it make how many megabits are recorded in the forest? Anyway, MJPEG is the "RAW" version of JPEG compression. It is frame by frame, unlike video CODECs that calculate compression across a series of frames. That is, MJPEG is the camera's BEST stills compression technology at 24 or 30 frames a second. Why wouldn't one want that? I mean, how can you say that's bad and the 400 Mbit/s nonsense (sorry) of the GH5 is good?
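Two quick Python sketches. The first checks the ratio implied by the numbers above (16 GB a minute captured versus 19 Mbit/s delivered, using decimal gigabytes). The second is a toy illustration of frame-by-frame versus across-frames compression, using zlib on synthetic frames as a stand-in; real MJPEG and H.264/H.265 use DCT-based coding and motion compensation, not zlib, and the function names are hypothetical.

```python
import random
import zlib


def bitrate_ratio(gb_per_minute: float, delivered_mbit_s: float) -> float:
    """Source data rate divided by delivered rate (decimal units: 1 GB = 8,000 Mbit)."""
    source_mbit_s = gb_per_minute * 8_000 / 60
    return source_mbit_s / delivered_mbit_s


def make_frames(n: int, size: int = 4096, seed: int = 0) -> list:
    """Toy 'video': a noisy first frame, then each frame changes one 64-byte block."""
    rng = random.Random(seed)
    frame = bytearray(rng.randbytes(size))
    frames = [bytes(frame)]
    for i in range(1, n):
        start = (i * 64) % (size - 64)
        frame[start:start + 64] = rng.randbytes(64)
        frames.append(bytes(frame))
    return frames


def intra_size(frames) -> int:
    """Each frame compressed on its own (the MJPEG / all-intra idea)."""
    return sum(len(zlib.compress(f)) for f in frames)


def inter_size(frames) -> int:
    """First frame on its own, then only the byte-wise change from the previous frame."""
    total = len(zlib.compress(frames[0]))
    for prev, cur in zip(frames, frames[1:]):
        delta = bytes((c - p) % 256 for p, c in zip(prev, cur))
        total += len(zlib.compress(delta))
    return total


if __name__ == "__main__":
    print(f"16 GB/min vs 19 Mbit/s: ~{bitrate_ratio(16, 19):.0f}x")
    frames = make_frames(10)
    print("intra-only bytes:", intra_size(frames))
    print("inter (delta) bytes:", inter_size(frames))
```

With the post's numbers the source stream is about 112 times the delivered one. The delta approach wins on mostly static content, but every delta depends on the frame before it; all-intra streams are bigger, yet each frame stands on its own.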

-

I can't for the life of me imagine any sane person buying the D850 primarily for video. Canon's dual-pixel autofocus is more than a piece of software; it is a combination of sensor technology (each pixel is split into two photodiodes that double as focus receptors) and processing. They've been working on it for a long time and it keeps getting better and better. What this article tells me is that Nikon is becoming more of a weather-sealed, professional stills-photography ecosystem built on Sony sensors. As Andrew pointed out, there are too many sensor similarities to Sony's other cameras. When will Nikon build dual-pixel autofocus for video? Looks like never, or at this point it's too late. As for 4K, as someone above pointed out, 4:2:0 shouts chroma-compromised. I recently bought a Canon C100 for $1,500. Don't flame me, but for video it makes my Sony A6300 seem like just TOO MUCH WORK. The D850 has only made up some 4K ground, on paper, against the Sony A7S line or the Panny GH line. In the real world, fuggedaboutit. In video, NOTHING BEATS sensors with fat pixels (in a 4K space), like the Canon Cinema line or the Sony A7S. Panasonic does a nice job turbo-charging those MFT sensors for video. But use a GH camera for professional stills photography? Again, don't flame me. We went on vacation recently and I shot a lot with Sony's FDR-X3000 action cam. That is one cool camera! It even has mic-in, so with a Rode VideoMicro mini-boom you can get what the D850 can do in good light just as well, actually better. The X3000 has kick-butt image stabilization. Nikon put out a similar action cam recently. That didn't go well for them. Nikon just doesn't have the institutional video chops to compete head-on in video, anywhere. As it has been from the beginning, video on Nikon is only an emergency feature for photojournalists. Unless I'm missing something, the D850 offers little over the D810 except a few more pixels. All that said, I wouldn't shoot Sony professionally for stills. The D850 is nothing to trifle with on the photography front.
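Using the pixel pitches quoted in the thread (8.4 µm for the A7S, 3.3 µm for the GH5), here is the "fat pixel" argument as Python arithmetic. It assumes square pixels with equal fill factor and quantum efficiency and compares per-pixel light at the same exposure; it ignores read noise and any downsampling to the output resolution.

```python
import math


def pixel_area_ratio(pitch_a_um: float, pitch_b_um: float) -> float:
    """Ratio of photosite areas, assuming square pixels and equal fill factor."""
    return (pitch_a_um / pitch_b_um) ** 2


def ratio_in_stops(ratio: float) -> float:
    """Express a light-gathering ratio in photographic stops (powers of two)."""
    return math.log2(ratio)


def shot_noise_snr_ratio(area_ratio: float) -> float:
    """Photon shot noise: SNR grows with the square root of the collected light."""
    return math.sqrt(area_ratio)


if __name__ == "__main__":
    area = pixel_area_ratio(8.4, 3.3)  # A7S vs GH5 pitches in microns, as quoted
    print(f"area: {area:.2f}x, {ratio_in_stops(area):.2f} stops, "
          f"SNR: {shot_noise_snr_ratio(area):.2f}x")
```

That works out to roughly 6.5 times the area per pixel, a bit under 2.7 stops, and about 2.5 times the shot-noise SNR per pixel.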

-

No. You can take great photographs with any camera. But almost all serious "model" photographers use Nikon or Canon full-frame, if not medium format if they can afford it. Not Sigma; they're too slow. That's a fact, take from it what you will. Sigmas appeal to two types of photographer, in my experience: 1) the ones who come from film and are very fussy about color (though Foveon has trouble with red), and 2) photographers who are super picky about printing and deplore Bayer color artifacts. The question for you is whether full-frame is good enough that you don't need the extra clarity of a Sigma. So I suggest you borrow or rent a full-frame camera and try it out, even an old one. If you don't see a difference between that and your GH5, then I'm fairly certain you'd find the Sigma camera a complete frustration. As much as I love the Sigma look, if size/weight isn't a factor, a Nikon D810 is close enough for me. IF I want something small with the best look possible, and I can take my time, then Sigma is the way to go.

-

Quattro sensors achieve higher resolution by splitting the top (blue) layer into 4 pixels, sort of like Bayer's use of extra green pixels to favor apparent resolution over color. There are some (like me) who believe the Merrills are still superior because they don't add mosaic issues into the image. I can't see Foveon replacing Bayer. As @Shirozina mentioned above, Bayer sensors could already do better against Foveon by thickening their filters. Or they could go back to CCD. The fact is, only a small minority of photographers look to maximize color in native-ISO shooting. Someone mentioned that Foveons are dynamic-range challenged. Couldn't agree more. However, capturing a wide dynamic range is immensely overrated. As others will point out, within the 6 stops of DR Foveon likes to play in, it crushes other cameras color-wise, IMHO. Our eyes can only perceive 6 stops of DR at a time. One of the problems with Foveon, as I understand it, is that although the sensor has three layers, they aren't as distinct as Bayer filters. Photons move through the silicon, and a lot of computation must be done to calculate what color each layer is registering. They've been working on this for a long time. I don't see any magic algorithm in sight. Foveon sensors have always been slow. They don't get faster, just a little bit less slow! These cameras are labors of love for Sigma; my guess is that they either lose money or don't make any. If they really cared about reaching the public, they'd license a different lens mount, or make it easier to create adapters. Normally, I would say that these cameras have nothing to do with video. However, the kind of images they can capture in the right conditions are the closest thing to film one can get. An image from a Sigma is THE image you want your video equipment to produce. These cameras can make you fall in love with photography again.
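A minimal NumPy sketch of why overlapping layers cost computation and noise. The response matrix is entirely hypothetical (not Sigma's data): each layer reading is modeled as a linear mix of blue, green and red light, color is recovered by inverting that mix, and the same inverse shows how per-layer noise gets amplified. Real Foveon processing is certainly more involved than a single 3x3 solve.

```python
import numpy as np

# HYPOTHETICAL layer responses (rows: top, middle, bottom layer; columns: blue,
# green, red light). Real Foveon curves differ; the point is only that each layer
# responds to a broad, overlapping band rather than one clean color.
LAYER_RESPONSE = np.array([
    [0.60, 0.30, 0.10],  # top layer: mostly blue
    [0.25, 0.50, 0.25],  # middle layer: mostly green
    [0.10, 0.30, 0.60],  # bottom layer: mostly red
])


def layer_signals(bgr):
    """What the three stacked layers would read for a given (B, G, R) light mix."""
    return LAYER_RESPONSE @ np.asarray(bgr, dtype=float)


def unmix(signals):
    """Recover (B, G, R) from the three layer readings by solving the linear system."""
    return np.linalg.solve(LAYER_RESPONSE, np.asarray(signals, dtype=float))


def noise_gain():
    """Std-dev multiplier on each output channel for equal, independent layer noise."""
    inv = np.linalg.inv(LAYER_RESPONSE)
    return np.sqrt((inv ** 2).sum(axis=1))


if __name__ == "__main__":
    bgr = np.array([0.2, 0.5, 0.3])
    print("recovered:", unmix(layer_signals(bgr)))
    print("noise gain per channel (B, G, R):", noise_gain())
```

The more the layer responses overlap, the closer the matrix gets to singular and the larger these noise gains become.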

-

Get a used C100 and something like the Canon EF-S 17-55mm f/2.8. Even without much light, it will shoot beautiful images indoors. Use your MFT camera outside for b-roll.

-

That's why I'm not rushing to buy one. I've had enough networking protocols for a lifetime. You'll see in the video that you can configure it a bit through your browser.

-

Saw this on YouTube. Fascinating. NOT FOR THE TECHNICALLY FAINT OF HEART. But if you're comfortable with TCP/IP, then this is very interesting. Essentially, what Danman has figured out is that this HDMI extender transmits the HDMI signal in standard TCP/IP packets. He discovered that VLC, ffmpeg, etc. will read the stream fairly well, as will most other software that can display a network stream. From there, you can save to any video CODEC they support (which is almost every one). There are various hacks to get around some issues (like multicasting to your whole network) which seem pretty well documented. I suggest watching the YouTube video by OpenTechLab. The only gotcha for you EOSHDers is the latency, which is about a second and a half. https://blog.danman.eu/new-version-of-lenkeng-hdmi-over-ip-extender-lkv373a/ Here's what I believe is the right thing on eBay. Remember, you want the TRANSMITTER, not the receiver. http://www.ebay.com/itm/LKV373A-V3-0-HDMI-Extender-100-120M-HDMI-Extender-Over-Cat5-Cat6-Support-1080P/191750919298?ssPageName=STRK%3AMEBIDX%3AIT&var=490770381286&_trksid=p2055119.m1438.l2649
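For anyone who wants to try this without VLC, here is a minimal Python sketch for dumping a UDP multicast stream to a file. Which multicast group and port the LKV373A V3.0 actually uses, and whether its payload is bare MPEG-TS or wrapped in RTP, are assumptions to check against Danman's write-up; the function names are hypothetical and not from his post.

```python
import socket
import time

TS_PACKET_SIZE = 188  # MPEG transport stream packet length
TS_SYNC_BYTE = 0x47   # every TS packet starts with this byte


def build_membership_request(group: str, interface: str = "0.0.0.0") -> bytes:
    """Packed struct ip_mreq: multicast group address, then local interface address."""
    return socket.inet_aton(group) + socket.inet_aton(interface)


def looks_like_mpeg_ts(datagram: bytes) -> bool:
    """True if the payload is whole 188-byte packets that each start with 0x47."""
    if not datagram or len(datagram) % TS_PACKET_SIZE:
        return False
    return all(datagram[i] == TS_SYNC_BYTE for i in range(0, len(datagram), TS_PACKET_SIZE))


def record(group: str, port: int, path: str, seconds: float) -> int:
    """Join a multicast group and append every UDP payload to `path` for `seconds`."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_membership_request(group))
    sock.settimeout(1.0)  # wake up regularly so the deadline is honoured
    written = 0
    deadline = time.monotonic() + seconds
    try:
        with open(path, "wb") as out:
            while time.monotonic() < deadline:
                try:
                    payload, _sender = sock.recvfrom(65535)
                except socket.timeout:
                    continue
                out.write(payload)
                written += len(payload)
    finally:
        sock.close()
    return written
```

For example, `record("239.255.42.42", 5004, "capture.ts", 30)` would capture 30 seconds, where that group and port are an assumed address; use whatever the write-up or a packet capture shows for your unit. The resulting file can then be opened in VLC or ffmpeg. If `looks_like_mpeg_ts` rejects the datagrams, they probably carry an extra header (such as RTP) that would need stripping first.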

-

That's exactly the kind of question I want to answer. Unfortunately, this data is so noisy I can't. If I can eventually get good data, then it would be wicked cool (at least to me) to see exactly how changes in the knee/black gamma settings change the tonality distribution.

-

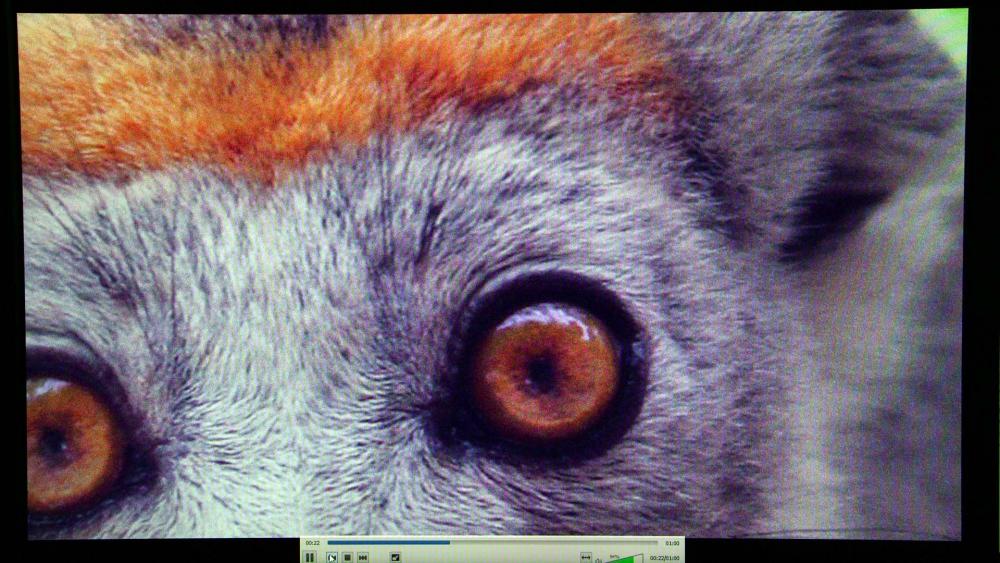

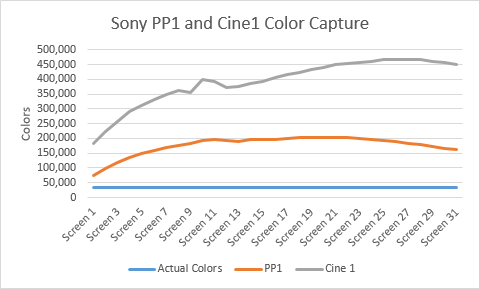

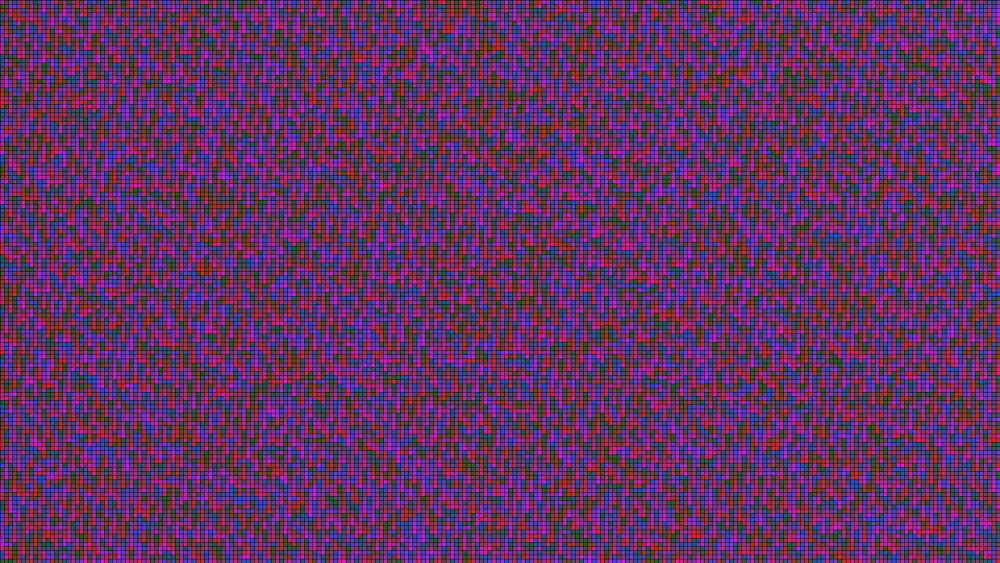

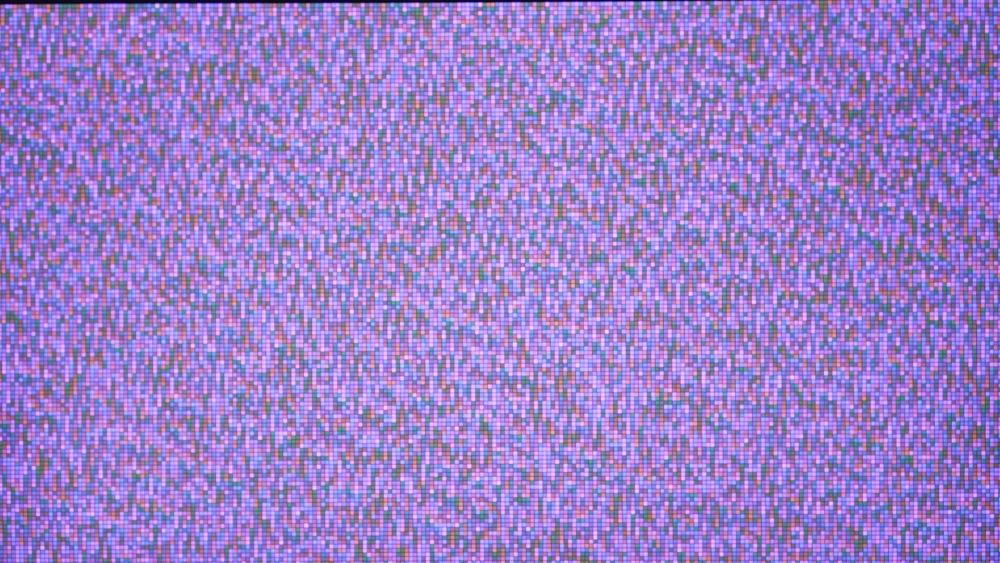

First, Bioskop and CPC, thanks for joining the conversation. Because of the way LOG extends ISO, I've never doubted for a second that there'd be more noise in the image. "Add[ing] a colour chart to the test" is exactly what I've been trying to do. First I used one-shot charts, where I noticed that aggressive LOG recording gammas reduced the pre-grading color palette by a very large amount. The gentler LOGs are another story. Some people in the thread believe the LOG method of capturing color improves perceptual image quality, even if there is data loss compared to the "linear" profile. In the end, they may be right! But I want to see for myself through some tests.

In order to test the color issue in more depth (AGAIN, THANK YOU BIOSKOP for recognizing this should be a TO DO for anyone interested in this subject), I set out to compare how different gamma settings capture all 16 million colors (assuming the camera is 8-bit). First, I sliced apart full-RGB images, which you can get here: https://allrgb.com/. The images above compare capturing part of this image on a monitor in EOS Cinema on the C100 (letting Photoshop auto-grade) and in EOS Standard. If I'm just using my eye, I can't see much "artistic" difference between EOS Standard and Cinema. So I can see how, in the "real world", filmmakers like @Oliver Daniel find this subject too technical, because the differences aren't great enough to affect the final product.

I then wanted more control over the colors. So I wrote some Python/PIL scripts to generate 32 1920x1080 frames of all colors (4 pixels per color), where the colors are sorted by luminosity, because what I really want to know is where in the DR LOG shooting plays games, so to speak. Unfortunately, the aliasing issues of shooting RGB screens with RGB sensors have set me back. There are too many artificial colors and too much noise for me to get at what I want. Here are graphs of the screens and of shooting them with the A6300 in PP1 and PP5:

[Graphs: the original BMP of 32,410 colors; the 32 frames of these, captured for 5 seconds apiece by the A6300 in PP1 and in PP5]

The data suggests that PP5, or Cine 1, is capturing more color than Sony's PP1, which is pretty close to its standard profile (I believe). So is @cantsin correct? Is there an improvement in color using a LOG profile? I still don't know. Because both captures are recording more colors than the screen is meant to display (it should create only 32,401 colors in our eye), due to re-mixing of RGB values, I can't tell which colors each gamma recording is getting from the proper RGB mixes and which are essentially false aliasing colors. To my eye, Cine 1 is losing color fidelity, but I can't prove it with the data I have.

To continue, I will need to create larger, probably 1-inch blocks of color on the monitor and average them down to a hopefully noise-insignificant value. I'll also need to write code to put that data in a database and compare displayed color to captured color. I don't know if I'm going to continue here. That method will need hours of capture time (to go through the screens of color). The really shrewd here might know what I could do IF I could do this, so that is some motivation. We'll just have to see.
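Here is a stdlib-only Python sketch of the frame-generation step. It is not the original PIL scripts: it assumes BT.709 luma weights as the "luminosity" sort key and 2x2 blocks for "4 pixels a color", writes frames as binary PPM, and counts the unique colors in a frame, which is the measure being compared here. All function names are hypothetical.

```python
def rec709_luma(rgb) -> float:
    """Luma weights from ITU-R BT.709 (assumed here as the 'luminosity' sort key)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def palette(step: int = 8) -> list:
    """Every `step`-th code value per channel: a regular sample of the 24-bit cube."""
    values = range(0, 256, step)
    return [(r, g, b) for r in values for g in values for b in values]


def frames_sorted_by_luma(colors, width: int = 1920, height: int = 1080, block: int = 2) -> list:
    """Place colors darkest-first as block x block squares, filling frames in order.

    Each frame is a bytearray of packed 8-bit RGB, row-major. Cells left over in
    the last frame stay black.
    """
    ordered = sorted(colors, key=rec709_luma)
    per_row = width // block
    per_frame = per_row * (height // block)
    frames = []
    for start in range(0, len(ordered), per_frame):
        frame = bytearray(width * height * 3)
        for i, (r, g, b) in enumerate(ordered[start:start + per_frame]):
            x0, y0 = (i % per_row) * block, (i // per_row) * block
            for y in range(y0, y0 + block):
                offset = (y * width + x0) * 3
                frame[offset:offset + block * 3] = bytes((r, g, b)) * block
        frames.append(frame)
    return frames


def write_ppm(path: str, frame: bytearray, width: int, height: int) -> None:
    """Binary PPM (P6): a simple format most image tools and ffmpeg can read."""
    with open(path, "wb") as f:
        f.write(f"P6 {width} {height} 255\n".encode("ascii"))
        f.write(frame)


def count_unique_colors(frame) -> int:
    """Distinct RGB triplets in a packed frame (e.g. a decoded camera capture)."""
    return len({bytes(frame[i:i + 3]) for i in range(0, len(frame), 3)})
```

For example, `frames_sorted_by_luma(palette(8))` lays out 32,768 colors (every 8th code value per channel) on a single 1920x1080 frame. Run on a decoded capture, `count_unique_colors` also counts noise and aliasing colors, which is exactly the problem described above.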

-

I don't know how many times I need to say that I, too, believe all LOG recording gammas have their place in the filmmaker's toolkit. A really experienced, serious filmmaker is probably not going to use a camera limited to 8-bit video, as @cantsin has pointed out, and can use a LOG gamma in a scene near Rec709 and not worry about it too much. For one to say, "in real-world filming the benefits of using X outweigh its weak spots" is a subjective judgement, right? The benefits may be worth it to you, not to someone else. We can never know what we'll want when we shoot. I'm trying to figure out the differences between LOG and non-LOG shooting in the A6300, for example. I've done tests similar to the one you suggest, but I wouldn't be telling anyone here anything they don't already know! There are some disputes about where the noise comes from, but no one disputes that LOG shooting introduces noise somewhere. And most don't feel the noise is a big problem. Aren't you even a little bit interested in JUST HOW MUCH mid-tone information is lost in LOG? Even if it's small, and even if, no matter what the number, you're going to keep shooting it, wouldn't you like to know? I would. Unfortunately, it's proving very difficult to get at that data. I've spent hours writing scripts and developing techniques, but am hitting technical difficulties which many try to help me solve by saying, 'don't bother, we know everything.' I'd be fine with that if they shared their statistics. Just tell me the numbers. How much noise is introduced by various LOG recording gammas? How much color information is lost in the mid-tones in LOG? How aggressive will a LOG need to be to introduce visible banding?

-

Please don't quit. That's not what I want. We spoke our minds offline; let's move forward! Everyone will be mad at me if you quit. Just to give you an idea what I'm going through: I want to use the monitor as a test reference for a color palette. If I used a printed page, I'd have to light it to mimic 5 stops of DR. Also, I'd have to deal with the dithering of a printer, which would create color artifacts. Okay, so I'm using a monitor. Now I have to deal with the fact that each color is made of three subpixels (red, green and blue). Here's a fun video I did on that subject: It would be impossible, I'd think, to get a one-to-one monitor-to-camera pixel relationship. Somehow, I need each pixel in the camera to blend the right few pixels on the monitor. A "rabbit hole" indeed! Anyway, I'm just going to have to hope for a crude approximation of color capture from the camera, using a monitor showing colors displayed along a DR curve. AGAIN, IF ANYONE HAS A BETTER WAY TO DO THIS, PLEASE POST! So far, I've shot 4K footage that showed more color in S-LOG2 than in PP1. I know that is impossible from the data of these images, so it's all a type of noise. When I think about it, that should be expected in 4K, which is a greater resolution than my screen. (Yeah, I do one stupid thing after another.) Anyway, I'm off to deal with that issue and some others. That's where I'm at. Here's more of the kind of brain damage I must deal with: http://www.alanzucconi.com/2015/09/30/colour-sorting/
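One way to sketch the "crude approximation" idea in Python: average each large displayed patch over many camera pixels so subpixel aliasing and noise wash out, then store displayed versus captured colors in SQLite (the database step mentioned in the earlier post) and compare. The function names and table layout are hypothetical. Comparing LOG values directly against displayed values mostly measures the tone curve, so both sides would need converting to a common space first; this sketch skips that step.

```python
import math
import sqlite3


def block_average(frame, width: int, height: int, block: int) -> list:
    """Mean RGB of each non-overlapping block x block tile of a packed 8-bit RGB frame.

    Returns rows of (r, g, b) float tuples; partial tiles at the edges are ignored.
    Averaging many camera pixels per patch suppresses noise and subpixel aliasing.
    """
    n = block * block
    rows = []
    for ty in range(height // block):
        row = []
        for tx in range(width // block):
            sums = [0, 0, 0]
            for y in range(ty * block, (ty + 1) * block):
                for x in range(tx * block, (tx + 1) * block):
                    i = (y * width + x) * 3
                    for c in range(3):
                        sums[c] += frame[i + c]
            row.append(tuple(s / n for s in sums))
        rows.append(row)
    return rows


def store_patches(conn, displayed, captured, profile: str) -> None:
    """Record intended (displayed) vs measured (captured) patch colors per profile."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS patches ("
        "profile TEXT, idx INTEGER, dr REAL, dg REAL, db REAL, cr REAL, cg REAL, cb REAL)"
    )
    conn.executemany(
        "INSERT INTO patches VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
        [(profile, i, *d, *c) for i, (d, c) in enumerate(zip(displayed, captured))],
    )
    conn.commit()


def mean_error(conn, profile: str) -> float:
    """Average RGB distance between displayed and captured colors for one profile."""
    rows = conn.execute(
        "SELECT dr, dg, db, cr, cg, cb FROM patches WHERE profile = ?", (profile,)
    ).fetchall()
    return sum(math.dist(r[:3], r[3:]) for r in rows) / len(rows)
```

Storing each profile's captures under its own name (say "PP1" and "PP5") lets the same query compare profiles patch by patch.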

-

We'll see! I'm not arguing one way or another. My hypothesis is that the manufacturers have already taken that into account in their standard profiles, so they don't lose the sensor's brightest stops. Yes, one could say LOG does not add noise. But whether it comes from adding, or from "the appearance", the question remains of how much color is captured across the total tonal range. I'm not at the point where we can look at this issue, but YOU HAVE A GOOD ONE. And I'm not ignoring it. Thanks!

-

Only if shooting in a scene that would best fit a Rec709 profile. No, I haven't concluded anything! Based on some initial tests against 1 million sample colors, it looks like LOG loses about 35% of color in a Rec709 case. I am posting here for people to help me get to the bottom of this. I posted another quick test looking at "banding", which would be expected IF LOG lost 35% of color in a Rec709 case. Do any of you go out and buy a camera because the guy at the store says it captures the best image IF you know how to grade it? Of course not. Everyone here tests their cameras and looks for new techniques to get better images. That's all I'm doing. My acid test would be if, after all the work is done, someone like @Oliver Daniel would say, "that's useful information to keep in mind."
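A toy Python model of the banding question. It assumes the BT.709 transfer function for a default profile and a made-up 14-stop pure-log curve (not S-Log2, C-Log or Cine1), records a smooth ramp two stops either side of middle grey in 8 bits, grades the log version back to Rec709, and counts how many distinct display levels survive. The percentage it prints depends entirely on the assumed curve and is no substitute for the measured 35% figure.

```python
import math

MID_GREY = 0.18


def rec709_oetf(L: float) -> float:
    """ITU-R BT.709 transfer function; scene-linear L in [0, 1] -> signal in [0, 1]."""
    return 4.5 * L if L < 0.018 else 1.099 * L ** 0.45 - 0.099


def toy_log_encode(L: float, stops: float = 14.0) -> float:
    """HYPOTHETICAL log curve: `stops` stops below 1.0 spread evenly over [0, 1]."""
    L = max(L, 2.0 ** -stops)
    return min(1.0, (math.log2(L) + stops) / stops)


def toy_log_decode(V: float, stops: float = 14.0) -> float:
    return 2.0 ** (V * stops - stops)


def to_code(V: float, bits: int = 8) -> int:
    return round(V * (2 ** bits - 1))


def display_levels(via_log: bool, lo: float, hi: float, samples: int = 100_000) -> int:
    """Distinct 8-bit Rec.709 display codes reaching the viewer for a smooth ramp lo..hi."""
    codes = set()
    for i in range(samples):
        L = lo + (hi - lo) * i / (samples - 1)
        if via_log:
            # Record in 8-bit log, then "grade" back to linear for a Rec.709 display.
            L = toy_log_decode(to_code(toy_log_encode(L)) / 255)
        codes.add(to_code(rec709_oetf(L)))
    return len(codes)


if __name__ == "__main__":
    lo, hi = MID_GREY / 4, MID_GREY * 4  # two stops either side of middle grey
    direct = display_levels(False, lo, hi)
    graded = display_levels(True, lo, hi)
    print(f"direct Rec.709: {direct} levels, via toy log: {graded} levels "
          f"({100 * (1 - graded / direct):.0f}% fewer)")
```

Fewer distinct levels across the same smooth ramp is what shows up on screen as banding once the footage is graded.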

-

I'm not questioning your knowledge, @cantsin. I'm questioning where and when you apply it. I already joke with friends that I didn't understand the simple difference between S-LOG and C-LOG: that they are Sony and Canon. Just because I'm woefully ignorant there, however, doesn't make me ignorant about how cameras work or what LOG does. I want your help. But please, wait for your pitch. Then hit it out of the park. No need to swing at everything (and yes, I should follow my own advice!)

-

On what f-ing camera, Cantsin? What if I don't have a camera with a 10- or 12-bit CODEC? Again, you're bringing up a theoretical question OUTSIDE the scope of the post. For a person shooting 8-bit video, what are the trade-offs between the camera's default settings and LOG?

-

Cantsin, you're not giving me any respect. One can poke holes in anyone's question. You're not working with this post, you're working against it. Instead of addressing the core issue I brought up, you keep lecturing me, as if I'm some newbie film student, about DR in the real world and the eye, all stuff I've studied in some depth and have decided is not relevant to this question. Your bringing them up 50 times doesn't convince me, sorry. FOR ALL THOSE READING THIS, PLEASE READ THIS: In video, LOG allows you to capture more dynamic range from the physical world. For example, point your camera outside on a partly cloudy day at someone wearing a gray shirt under an umbrella, and it will capture both detail in a gray cloud and detail of the person’s gray shirt under the umbrella. If you take out a light meter and measure the difference in brightness between the cloud and the shirt, you might find a difference of 15 stops between them. If you shot the person with the camera’s default settings and exposed for their gray shirt, the cloud would be washed out and not visible. The reason is that a camera is configured, from the factory, to record 16 million shades of color across around 5 stops of brightness. The cloud’s brightness would have been beyond that range. The drawback of LOG is that it captures less color in the middle tones of the image, and it will add noise because the values at the extreme ends of the dynamic range are noisier. Whether this is acceptable for you is a complex and difficult question to answer. What you’re up against is the fixed bit depth of your camera’s recording. Whether you shoot LOG or the camera’s default settings, you can only record 24 bits of data per pixel, 8 bits for each primary color of red, green and blue. Your video palette is 16 million colors (2^24 = 16,777,216), whether you use the camera’s default settings or LOG. That cannot be changed.
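To make the "fixed 24 bits" point concrete, here is a Python sketch counting how many of the 256 code values per channel a curve spends on each stop below white. The BT.709 transfer function stands in for a camera's default profile (real profiles add knees and other tweaks), and the log curve is a hypothetical 14-stop curve, not any manufacturer's.

```python
import math

BITS_PER_CHANNEL = 8
PALETTE = 2 ** (3 * BITS_PER_CHANNEL)  # 16,777,216 RGB combinations per pixel


def rec709_oetf(L: float) -> float:
    """ITU-R BT.709 transfer function, used here as a stand-in for a default profile."""
    return 4.5 * L if L < 0.018 else 1.099 * L ** 0.45 - 0.099


def toy_log_encode(L: float, stops: float = 14.0) -> float:
    """HYPOTHETICAL log curve spreading `stops` stops below 1.0 evenly over [0, 1]."""
    L = max(L, 2.0 ** -stops)
    return min(1.0, (math.log2(L) + stops) / stops)


def codes_per_stop(encode, n_stops: int = 6) -> list:
    """8-bit code values a curve spends on each stop, brightest stop first."""
    top = 2 ** BITS_PER_CHANNEL - 1
    counts = []
    for s in range(n_stops):
        bright, dark = 2.0 ** -s, 2.0 ** -(s + 1)
        counts.append(round(encode(bright) * top) - round(encode(dark) * top))
    return counts


if __name__ == "__main__":
    print(f"palette per pixel: {PALETTE:,} colors")
    print("Rec.709-style:", codes_per_stop(rec709_oetf))
    print("toy 14-stop log:", codes_per_stop(toy_log_encode))
```

The BT.709 curve spends several times as many codes on the brightest stops as the toy log curve, which spreads roughly 18 codes evenly across each of its 14 stops. Under these assumptions, that is the mid-tone trade-off in numbers.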
A camera’s default settings maximize the quality of image the camera can record that will match the dynamic range of your monitor, cell phone, TV or tablet. A camera’s default profile, out of the box, is the best image it can get under ideal lighting, whether the camera has LOG or not. When you shoot LOG, the 16 million color shades you get have a different quality compared to the 16 million shades you get in the camera’s default setting. So understanding how LOG differs from the camera’s default capture of 16 million shades is what we’re going to discuss today. When we talk about dynamic range, we want to be careful which type of dynamic range we’re dealing with. There is the dynamic range of your monitor, which really isn’t dynamic at all, but usually fixed at about 6 stops of brightness. Then there is the dynamic range of our physical world, which ranges from absolute darkness to light so bright that looking at it would blind you. If you took philosophy in school, you may remember Plato’s cave. He used a cave as an analogy to separate what we can study from what we cannot, which are the metaphysical questions. Similarly, our displays are what we see in our cave. The physical world can never be shown on our cave wall. All we can do is record the physical world, as best we can, on a device, and come back and show it. Imagine that your viewers are people who have never left the cave. All they see is what we show on the wall, or their display. If that display only has 6 stops of dynamic range, what would it mean to them if we said “our video recorded 12 stops of dynamic range”? If you think about this for a few minutes you will have that “ah-ha” moment. If you keep trying to explain to them what the 12 stops mean, at some point they’ll say, “All I see are 6 stops. If you say your video has 12 stops, well, that’s too metaphysical for me, dude! You need another beer.”

I don’t want to study what a camera can record in a metaphysical sense, but what it can do in the practical world of display technology. I need some images to show on the display which do everything it is capable of. To do this, I have acquired images with all 16 million colors we have in a 24-bit color space. Because the monitor can only display about 2 million at a time, I have to break them up into separate images and assemble those into a video that will show all colors when played in its entirety. What I want to do is quantify the palette of colors that are captured using various camera settings within our viewers’ real-world experience. Yes, we don’t shoot monitors for our content. But most scenes, especially indoors, are within 5 stops of dynamic range. Even outside, on a cloudy day, dynamic range is narrow. There are DEFINITELY scenes that call for LOG. However, how should we choose in scenes that could go either way? Can we acquire data that helps us make a better decision?
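The number of frames needed follows from simple arithmetic, sketched in Python below. The 5 seconds per frame comes from the earlier A6300 test; the function name is hypothetical.

```python
import math


def frames_needed(width: int, height: int, pixels_per_color: int,
                  bits_per_channel: int = 8) -> int:
    """Frames required to show every RGB combination once at the given block size."""
    total_colors = 2 ** (3 * bits_per_channel)  # 16,777,216 for 8-bit RGB
    colors_per_frame = (width * height) // pixels_per_color
    return math.ceil(total_colors / colors_per_frame)


if __name__ == "__main__":
    for ppc in (1, 4):
        n = frames_needed(1920, 1080, ppc)
        print(f"{ppc} pixel(s) per color: {n} frames, {n * 5} s at 5 s per frame")
```

At one pixel per color, nine 1920x1080 frames cover all 16,777,216 colors; at 4 pixels per color it takes 33.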