
Everything posted by tupp

-

Well, you seem to live in a world in which you make up your own "realities" about what I am saying, and that's okay, but posting insinuations based on those fantasies is a whole other thing. Regardless, such fantasies have no relation to the fatal problems with Yedlin's test.

However, just to make it clear, I never suggested that chroma subsampling should be avoided in resolution tests. In fact, I implied the opposite by pointing out that 4:2:0 cameras are more common than the Alexa65, and thus, according to your logic, we shouldn't use an exceedingly uncommon camera such as the Alexa65:

Of course, chroma subsampling essentially is a reduction in color resolution (and, hence, color depth), but it doesn't really affect a resolution test, as long as the resulting images have enough resolution for the given resolution comparison. Again, none of this discussion on cameras/sensors has any bearing on the fatal problems with Yedlin's test.

Classic projection and irony. Slipshod tests like Yedlin's are for those who need confirmation of their bias.
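A rough way to see why 4:2:0 is a reduction in color resolution rather than in overall resolution: the luma (brightness) plane keeps the full frame size, while each chroma plane is halved horizontally and vertically. This is a minimal Python sketch, assuming even frame dimensions and the usual divisors for each scheme; the function and table names are illustrative only.

```python
# Sketch: luma vs. chroma plane sizes under common J:a:b subsampling schemes.
# Assumption: frame dimensions are even, so the integer division is exact.

SUBSAMPLING = {
    # scheme: (horizontal chroma divisor, vertical chroma divisor)
    "4:4:4": (1, 1),
    "4:2:2": (2, 1),
    "4:2:0": (2, 2),
}


def plane_sizes(width: int, height: int, scheme: str) -> dict:
    """Return (width, height) of the luma plane and of each chroma plane."""
    h_div, v_div = SUBSAMPLING[scheme]
    return {
        "luma": (width, height),  # brightness detail is never subsampled
        "chroma": (width // h_div, height // v_div),  # each of the Cb and Cr planes
    }


if __name__ == "__main__":
    for name in SUBSAMPLING:
        print(name, plane_sizes(3840, 2160, name))
```

For a 3840x2160 frame, 4:2:0 leaves the luma plane at 3840x2160 and each chroma plane at 1920x1080.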

-

What I get is that there are some hare-brained notions floating around this thread on what cameras can and cannot be used in a resolution test, notions which have absolutely no bearing on the fatal flaws suffered in Yedlin's test.

No. I rejected the test primarily because:

And again, the test doesn't "involve" interpolation:

Incidentally, Yedlin's test is titled "Camera Resolutions," because it is intended to test resolution -- not post "interpolation." A 1-to-1 pixel match is required for a resolution test, and Yedlin spent over four minutes explaining the importance of a 1-to-1 pixel match at the very beginning of his video. In addition, you even explained and defended Yedlin's supposed 1-to-1 match... that is, until I showed that Yedlin failed to achieve it. Yedlin's haphazard test accidentally blurred the "spatial" resolution of the images, which makes it impossible to discern any possible difference between resolutions (Yedlin's downscaling/upscaling convolutions notwithstanding). What is it that you do not understand about this simple, basic fact?

Not that this matters to the fact that Yedlin's test is fatally flawed, but you seem stuck on the notion that the results of using a camera with an interpolated sensor vs. using a camera with a non-interpolated sensor will somehow differ resolution-wise -- even if both cameras possess the very same effective resolution. However, the reality is that the particular camera that is used is irrelevant, as long as the captured images meet the resolution requirements for the comparison. If a non-interpolated camera sensor has the same effective resolution as an interpolated sensor, then cameras with either sensor are equally qualified to shoot images for the same resolution test.

No, I didn't. You are making that up. In addition, how do you make sense of your notion that a 6K camera necessarily involves interpolation while a 4K camera doesn't?
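A minimal sketch of why the 1-to-1 pixel match matters: if a viewer or export resamples by anything other than exactly 1.0, a filter such as linear interpolation spreads a single-pixel detail across its neighbors. The function name, the pixel-center convention and the choice of linear interpolation are assumptions for illustration; this is not Yedlin's actual pipeline, whose settings are unknown.

```python
def resample_linear(row, scale):
    """Resample a 1-D row of pixel values by `scale` with linear interpolation.

    Each output pixel center is mapped back into source coordinates
    (pixel-center convention) and the two nearest source pixels are blended.
    """
    out_len = round(len(row) * scale)
    out = []
    for i in range(out_len):
        src = (i + 0.5) / scale - 0.5             # output center -> source position
        src = min(max(src, 0.0), len(row) - 1)    # clamp at the edges
        left = int(src)
        right = min(left + 1, len(row) - 1)
        frac = src - left                          # blend weight toward `right`
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out


if __name__ == "__main__":
    row = [0, 0, 0, 255, 0, 0, 0, 0]  # one white pixel on black
    print(resample_linear(row, 1.0))  # true 1:1, nothing blended
    print(resample_linear(row, 1.5))  # non-integer scale, the pixel smears
```

At a scale of 1.0 the row comes back unchanged; at 1.5 the single white pixel becomes three partially lit pixels (roughly 127, 212 and 42) and no pixel reaches full 255.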
Well, then who cares about Yedlin's test that used an exceedingly uncommon camera? You said:

No, it doesn't. The most common digital video cameras shoot with 4:2:0 color (chroma) subsampling. However, it is unlikely that anyone who would go to the trouble and expense of renting an Alexa65 package (along with all of the effort to achieve an appropriate high-end production value) would do any kind of subsampling at all. So, no -- the Alexa65 doesn't "share the same chroma subsampling properties of most cameras."

The actual number of projects that are down-sampled is likely unknown, but, in regards to down-sampling, again:

So, Yedlin's results are further misleading in the sense that his "2K" image down-sampled from 6K is not the same as the more common down-sample of 4K to 2K, nor is it the same as 2K shot with a 2K camera.

Using your logic from earlier in the thread, the results from an uncommon, high-end Alexa65 cannot possibly be applicable to those from the cameras that most of us use, because our cameras are lower quality. On the other hand, the Ursa 12K -- with a non-Bayer sensor -- is also a high-quality imaging device with twice the resolution of the Alexa65. Are you saying that the Ursa 12K -- with a non-Bayer sensor -- isn't good enough for a resolution test? Regardless, such notions of what cameras can and cannot be used in a resolution test have absolutely no bearing on the fatal flaws suffered in Yedlin's test.

Really? Well, it seems like the red herring is actually your hypothetical test with a Foveon sensor that "does not share the same colour subsampling properties of most cameras," because the title of Yedlin's video is "Camera Resolutions," which indicates that his test compares differences in "perceptible" resolution -- not differences in color nor color "subsampling."

-

If we follow your reasoning, then who cares about Yedlin's comparison? He used an exceedingly uncommon camera for his test. You have to rent an Alexa65, and doing so is extremely expensive. Furthermore, on my entire continent there are only five locations where the Alexa65 is available for rent. I am not sure which cameras are uncommon in your mind, but I would bet that there are more Foveon cameras in use than Alexa65s. Likewise for X-Trans cameras, scanning-back cameras and Ursa 12Ks. You said yourself, "I'm not watching that much TV shot with a medium format camera," and the Alexa65 is a medium format camera! So, why should anyone care about Yedlin's test when he used such an uncommon camera?

You seem stuck on the notion that the results of using an uncommon camera vs. using a common camera will somehow differ resolution-wise -- even if both cameras possess the very same effective resolution. However, the reality is that the particular camera that is used is irrelevant, as long as the captured images meet the resolution requirements for the comparison. If an uncommon camera has the same effective resolution as a common camera, then both cameras are equally qualified to shoot images for the same resolution test. It's a simple concept that's easy to grasp.

Well, please explain how your notion of an SD resolution comparison is applicable to our discussion on a comparison of higher resolutions.

-

Setting aside the fact that this is the first time you have made that particular claim regarding "the whole point of the test," a 1-to-1 pixel match is crucial for proper perceptibility in a resolution comparison. Yedlin spent over four minutes at the beginning of his video explaining that fact, and you additionally explained and defended the 1-to-1 pixel match. @slonick81 and I were able to achieve a 1-to-1 pixel match within a compositor viewer, but Yedlin did not do so. So, Yedlin failed to provide the crucial 1-to-1 pixel match required for proper perceptibility of 2K and higher resolutions.

Right. I keep missing the point that the comparison is between 2K and higher resolutions:

You have fascinating and imaginative interpretation skills. What gave you the notion that anyone referred to "a camera that no-one ever uses"? Again, it is irrelevant whether the starting images were captured with a common or uncommon camera, as long as those images are sharp enough and of a high enough resolution, which I previously indicated here:

So, as long as there is enough sharpness and resolution in the starting images, the resolution test is "camera agnostic" -- as it should be. In addition, the test should be "post image processing" agnostic, with no peculiar nor unintended/uncontrolled side-effects. Unfortunately, the side-effects of pixel blending and post interpolation are big problems with Yedlin's test, so the results of his comparison are not "post image processing" agnostic and only apply to his peculiar post set-up and rendering settings, whatever they may be.

Now, what was that you said about my "missing the point" on "determining if there is a difference between 2K and some other resolution"?

-

Well, I posted an image above of a compositor with an image displayed within its viewer, which was set at 100%. Unlike Yedlin, I think that I was able to achieve a 1-to-1 pixel match, but, by all means, please scrutinize it.

There is no need for any such resolution tests to apply to any particular camera or display. I certainly never made such a claim.

-

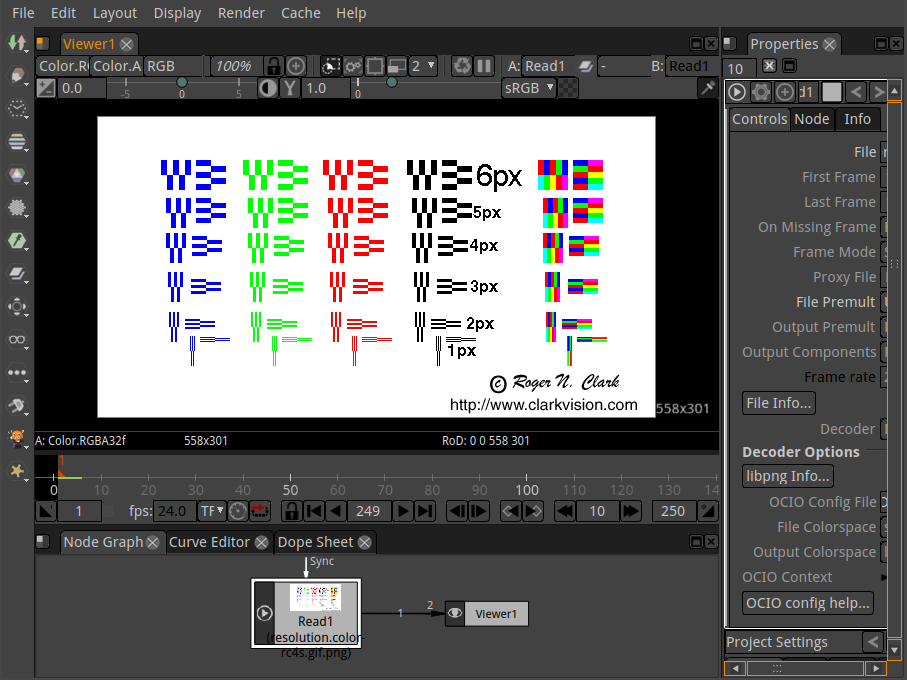

Wait. You, too, are concerned about slipshod testing? Then, how do you reconcile Yedlin's failure to achieve his self-imposed (and required) 1-to-1 pixel match?... you know, Yedlin's supposed 1-to-1 pixel match that you formerly took the trouble to explain and defend:

As I have shown, Yedlin did not get a 1:1 view of the pixels, and now it appears that the 1:1 pixel match is suddenly unimportant to one of us. To mix metaphors, one of us seems to have changed one's tune and moved the goal posts out of the stadium.

Also, how do you reconcile Yedlin's failure to even address and/or quantify the effect of all of the pixel blending, interpolation, scaling and compression that occur in his test? There is no way for us to know to what degree the "spatial resolution" is affected by all of the complex imaging convolutions of Yedlin's test.

There is absolutely no need for me to try and make... such an attempt. I merely asked you to clarify your argument regarding sensor resolution, because you have repeatedly ignored my rebuttal to your "Bayer interpolation" notion, and because you have also mentioned "sensor scaling" several times. Some cameras additionally upscale the actual sensor resolution after the sensor is interpolated, so I wanted to make sure that you were not referring to such upscaling and, hence, ignoring my repeated responses.

Absolutely. I haven't been posting numerous detailed points with supporting examples. In addition, you haven't conveniently ignored any of those points.

Demosaicing is not "just like" upscaling an image. Furthermore, the results of demosaicing are quite the opposite of the results of the unintended pixel blending/degradation that we see in Yedlin's results. Also, not that it actually matters to testing resolution, but, again: some current cameras do not use Bayer sensors; some cameras have color sensors that don't require interpolation; monochrome sensors don't need interpolation. It's not just "technically" correct -- it IS correct.
Not everyone shoots with a Bayer sensor camera. What is "missing the point" (and is also incorrect) is your insistence that Bayer sensors and their interpolation somehow excuse Yedlin's failure to achieve a 1-to-1 pixel match in his test.

You are incorrect. Not all camera sensors require interpolation.

No, it is not a problem for my argument, because CFA interpolation is irrelevant and very different from the unintentional pixel blending suffered in Yedlin's comparison. Yedlin's failure to achieve a 1-to-1 pixel match certainly invalidates his test, but that isn't my entire argument (a point on which I have corrected you repeatedly). I have made two major points:

No. The starting images for the comparison are simply the starting images for the comparison. There are many variables that might affect the sharpness of those starting images: they may have been shot with softer vintage lenses or with a diffusion filter, or, if they were taken with a sensor that was demosaiced, a coarse or fine algorithm might have been used. None of those variables matter to our subsequent comparison, as long as the starting images are sharp enough to demonstrate the potential discernibility between the different resolutions being tested.

You don't seem to understand the difference between sensor CFA interpolation and the unintended and uncontrolled pixel blending introduced by Yedlin's test processes, which is likely why you equate them. The sensor interpolation is an attempt to maintain the full, highest resolution possible utilizing all of the sensor's photosites (plus such interpolation helps avoid aliasing). In contrast, Yedlin's unintended and uncontrolled pixel blending degrades and "blurs" the resolution. With such accidental pixel "blurring," a 2K file could look like a 6K file, especially if both images come from the same 6K file and if both images are shown at the same 4K resolution.
Regardless, the resolution of the camera's ADC output or the camera's image files is a given property that we must accept as the starting resolution for any test, and, again, some camera sensors do not require interpolation. Additionally, with typical downsampling (say, from 8K to 4K, from 6K to 4K, or from 4K to HD), the CFA interpolation impacts the final "spatial" resolution significantly less than it impacts the original resolution. So, if we start a comparison with a downsampled image as the highest resolution, then we avoid the influence of sensor interpolation.

On the other hand, if CFA interpolation impacts resolution (as you claim), then shooting at 6K and then downsampling to 2K will likely give different results than shooting at 6K and separately shooting the 2K image with a 2K camera. This is because the interpolation cell of the 2K sensor is relatively coarser/larger within the frame than the interpolation cell of the 6K sensor. So, unfortunately, Yedlin's comparison doesn't apply to actually shooting the 2K image with a 2K camera.

Except you might also notice that the X-Trans sensor does not have a Bayer matrix. You keep harping on Bayer sensors, but the Bayer matrix is only one of several CFAs in existence. By the way, the Ursa 12K uses an RGBW sensor, and each RGBW pixel group has 6 red photosites, 6 green photosites, 6 blue photosites and 18 clear photosites. The Ursa 12K is not a Bayer sensor camera.

It is likely that you are not aware of the fact that if an RGB sensor has enough resolution (Bayer or otherwise), then there is no need for the type of interpolation that you have shown. "Guess what that means" -- there are already non-Foveon, single-sensor RGB cameras that need no CFA interpolation.
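The point about the 2K sensor's interpolation cell being coarser within the frame is simple arithmetic. This sketch assumes a 2x2 Bayer-style cell and two hypothetical sensors exactly 6144 and 2048 photosites wide covering the same field of view. These are round illustrative numbers, not the Alexa65's actual dimensions.

```python
# Sketch: how large one CFA interpolation cell is relative to the frame.
# Assumptions (hypothetical): a 2x2 Bayer-style cell, and sensors exactly
# 6144 and 2048 photosites wide covering the same field of view.

def cell_fraction_of_width(sensor_width_px: int, cell_px: int = 2) -> float:
    """Fraction of the frame width spanned by one interpolation cell."""
    return cell_px / sensor_width_px


six_k = cell_fraction_of_width(6144)
two_k = cell_fraction_of_width(2048)

linear_ratio = two_k / six_k    # how much wider the 2K cell is, frame-relative
area_ratio = linear_ratio ** 2  # how much more of the frame area it covers

if __name__ == "__main__":
    print(f"6K cell: {six_k:.6f} of frame width")
    print(f"2K cell: {two_k:.6f} of frame width")
    print(f"2K cell is {linear_ratio:.1f}x wider, {area_ratio:.1f}x larger in area")
```

Under these assumptions the 2K cell spans three times as much of the frame width (nine times the area) as the 6K cell, which is why a 2K image downsampled from 6K need not match one shot natively on a 2K sensor.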
However, regardless of whether or not Yedlin's source images came from a sensor that required interpolation, Yedlin's unintended and uncontrolled pixel blending ruins his resolution comparison (along with his convoluted method of upscaling/downscaling/Nuke-viewer "cropping-to-fit").

You recklessly dismiss many high-end photographers who use scanning backs. Also, linear scanning sensors are used in a lot of other imaging applications, such as film scanners, tabletop scanners, special effects imaging, etc.

That's interesting, because the camera that Yedlin used for his resolution comparison (you know, the one which you declared is "one of the highest quality imaging devices ever made for cinema")... well, that camera is an Alexa65 -- a medium format camera. Insinuating that medium format doesn't matter is yet another reckless dismissal.

Similarly reckless is Yedlin's dismissal of shorter viewing distances and wider viewing angles. Here is a chart that can help one find the minimum viewing distance at which one does not perceive individual display pixels (best viewed at 100%, 1-to-1 pixels):

If any of the green lines appear as a series of tiny green dots (or tiny green "slices") instead of a smooth green line, you are discerning the individual display pixels. For all of those who see the tiny green dots, you are viewing your display at what Yedlin dismisses as an uncommon "specialty" distance. Your viewing setup is irrelevant according to Yedlin. To make the green lines smooth, merely back away from the monitor (or get a monitor with a higher resolution).

Wait a second!... what happened to your addressing the Foveon sensor? How do you reconcile the existence of the Foveon sensor with your rabid insistence that all camera sensors require interpolation? By the way, demosaicing the X-Trans sensor doesn't use the same algorithm as that of a Bayer sensor.

I have responded directly to almost everything that you have posted.
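The chart is the practical test, but the same idea can be estimated on paper: find the distance at which one display pixel subtends a given visual angle. This sketch assumes the commonly cited rule of thumb of about 1 arcminute for normal (20/20) acuity, square pixels, and a hypothetical 27-inch 16:9 display; real eyes and viewing conditions vary.

```python
import math


def pixel_pitch(diagonal: float, width_px: int, height_px: int) -> float:
    """Size of one (square) pixel, in the same unit as `diagonal`."""
    return diagonal / math.hypot(width_px, height_px)


def min_viewing_distance(diagonal: float, width_px: int, height_px: int,
                         acuity_arcmin: float = 1.0) -> float:
    """Distance at which one pixel subtends `acuity_arcmin` of visual angle.

    Closer than this, an eye with that acuity can in principle resolve
    individual pixels; farther away, they blend into a smooth image.
    """
    pitch = pixel_pitch(diagonal, width_px, height_px)
    angle = math.radians(acuity_arcmin / 60.0)
    return pitch / (2 * math.tan(angle / 2))


if __name__ == "__main__":
    # Hypothetical 27-inch 16:9 displays.
    for w, h in [(3840, 2160), (1920, 1080)]:
        d = min_viewing_distance(27, w, h)
        print(f"{w}x{h}: about {d:.0f} in ({d * 2.54:.0f} cm)")
```

With these assumptions, a 27-inch 4K display comes out to roughly 21 inches, and a 27-inch 1080p display to roughly twice that; anyone sitting closer is in the "specialty" range that the chart reveals.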
Perhaps you and your friend should actually read my points and try to comprehend them. Yedlin didn't "use" interpolation -- the unintentional pixel blending was an accident that corrupts his tests. Blending pixels blurs the "spatial" resolution. Such blurring can make 2K look like 6K. The amount of blur is a matter of degree. To what degree did Yedlin's accidental pixel blending blur the "spatial" resolution? Of course, nobody can answer that question, as that accidental blurring cannot be quantified by Yedlin nor anyone else. If only Yedlin had ensured the 1-to-1 pixel match that he touted and claimed to have achieved... However, even then we would still have to contend with all of the downscaling/upscaling/crop-to-fit/Nuke-viewer convolutions. I honestly can't believe that I am having to explain all of this.

Yes. There is no contradiction between those two statements. Sensor CFA interpolation is very different from accidental pixel blending that occurs during a resolution test. In fact, such sensor interpolation yields the opposite effect from pixel blending -- sensor interpolation attempts to increase actual resolution while pixel blending "blurs" the spatial resolution. Furthermore, sensor CFA interpolation is not always required, and we have to accept a given camera's resolution inherent in the starting images of our test (interpolated sensor or not). Yedlin's accidental blurring of the pixels is a major problem that invalidates his resolution comparison. In addition, all of the convoluted scaling and display peculiarities that Yedlin employs severely skew the results.

Well, it appears that you had no trouble learning Natron!

That could be a problem if the viewer is not set at 100%.

I am not sure why we should care about that nor why we need to reformat. Why did you do all of that?
All we need to see is the pixel chart in the viewer, which should be set at 100%, just like this image (view image at 100%, 1-to-1 pixels):

That could cause a perceptual problem if the viewer is not set at 100%.

I converted the pixel chart to a PNG image.

I perceive an LED screen, not a projection.

It seems that the purpose of Yedlin's comparison is to test if there is a discernible difference between higher resolutions -- not to show how CG artists work.

This statement seems to contradict Yedlin's confirmation bias. In what should have been a straightforward, fully framed, 1-to-1 resolution test, Yedlin shows his 1920x1080 "crop-to-fit" section of a 4K image within a mostly square Nuke viewer (which results in an additional crop), and then he outputs everything to a 1920x1280 file, which suffers from accidental pixel blending. It's a crazy and slipshod comparison.

That's actually two simple things. As I have said to you before, I agree with you that a 1:1 pixel match is possible in a compositor viewer, and Yedlin could have easily achieved a 1-to-1 pixel match in his final results, as he claimed that he did. Whether or not Yedlin's Nuke viewer is showing 1:1 is still unknown, but now we know that the Natron viewer can do so.

In regards to the round-multiple scaling not showing any false details, I am not sure that your images are conclusive. I see distortions in both images, and pixel blending in one. Nuke is still a question mark in regards to the 1-to-1 pixel match.

A "general impression" is not conclusive proof, and Yedlin's method and execution are flawed.

Again, I make no claim for or against more resolution. What I see as "wrong and flawed" is Yedlin's method and execution of his resolution comparison.

Likewise, but it appears that you have an incorrect impression of what I argue.
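On "the amount of blur is a matter of degree": when a blend is known and controlled, one rough way to put a number on it is to measure how much contrast survives in a pattern of alternating single-pixel lines. The 3-tap blend below is a hypothetical stand-in chosen for illustration. It cannot recover the unknown, unrecorded blending in Yedlin's pipeline; it only shows how quickly even mild blending erases single-pixel detail.

```python
def michelson_contrast(values):
    """(max - min) / (max + min); 1.0 = full contrast, 0.0 = flat grey."""
    hi, lo = max(values), min(values)
    return (hi - lo) / (hi + lo) if hi + lo else 0.0


def blend_3tap(row, weight):
    """Mix each pixel with its two neighbors using weights [w, 1-2w, w].

    A hypothetical, known blend standing in for "some pixel blending";
    edge pixels reuse themselves as the missing neighbor.
    """
    n = len(row)
    out = []
    for i in range(n):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        out.append(weight * left + (1 - 2 * weight) * row[i] + weight * right)
    return out


if __name__ == "__main__":
    pattern = [0, 255] * 8  # alternating single-pixel black/white lines
    for w in (0.0, 0.1, 0.25):
        interior = blend_3tap(pattern, w)[1:-1]  # skip edge effects
        print(f"weight {w}: contrast {michelson_contrast(interior):.2f}")
```

With weight 0.1, about 60% of the contrast survives; with weight 0.25, the interior of the pattern becomes a uniform mid-grey, so the single-pixel lines disappear entirely.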

-

Pixels "impact" adjacent pixels? Sounds imaginative. Please explain how that works in the "real world."

Yedlin's comparison is far from perfect. In general:

Actually, they don't, especially if one shoots in black and white.

Irony: ... and... ... and...

Okay, not that it actually matters to this resolution discussion, but you have been hinting that there is a difference in resolution between what the camera sensor captures and the actual resolution of the rest of the "imaging pipeline." So, let's clarify your point: Are you saying that camera sensors capture images at a lower resolution than the rest of the "imaging pipeline," and, at some point in the subsequent process, the sensor image is somehow upscaled to match the resolution of the latter "imaging pipeline"? Is that what you think? The camera's resolution is determined at the output of the ADC or from the recorded files. It is irrelevant to consider any interpolation or processing prior to that point that cannot be adjusted.

Also, you seem to insinuate that cameras with non-Bayer sensors are uncommon. Regardless of the statistical percentage of Bayer matrix cameras to non-Bayer cameras, that point is irrelevant to the general discernibility of different resolutions perceived on a display. Even Yedlin did not try to argue that Bayer sensors somehow make a difference in his resolution comparison.

Again... irony: ... and...

One could ask the exact same question of you. My answer is that it is important to point out misleading information, especially when it comes in the form of flawed advice from a prominent person with a large, impressionable following. In addition, there are way too many slipshod imaging tests posted on the Internet. More folks should be aware of the current prevalence of low testing standards.

Not that such commonality matters or is even true, nor that your 4K/2K point actually makes sense, but lots of folks shoot with non-Bayer matrix cameras.
For instance, anyone shooting with a Fuji X-T3 or X-T4 is not using a Bayer sensor. Any photographer using a scanning back is not using a Bayer sensor. Anyone shooting with a Foveon sensor is not debayering anything. Anyone shooting with a monochrome sensor is not debayering their images.

Well, we need to clarify exactly what you are hinting at here (see the above paragraph mentioning the "imaging pipeline").

Coincidentally, in our most recent "equivalence" discussion, someone linked yet another problematic Yedlin test.

I am beginning to agree with that.

-

I applaud you for making your single-pixel "E's!" Likewise, I couldn't find the original version of that pixel chart, but the black integer rulings are clean. I apologize that I forgot to mention to concentrate on the black pixel rulings, as I did earlier in the thread:

I had also linked another chart that is a GIF, so it doesn't suffer from lossy compression, and all rulings of any color shown are clean, but it lacks the non-integer rulings:

Natron is free and open source, and is available on most platforms.

Creating something in a compositor and observing the work at "100%" within a compositor viewer might differ from seeing the actual results at 1-to-1 pixels. It is important to also see your actual results, rather than just a screenshot of a viewer with an enlarged image.

We seem to agree on many things, but we depart here. I am not sure how such a sizeable leap of reasoning is possible from the shaky ground on which Yedlin's comparisons are based.

"Resolution lines" usually refers to a quality of optical systems, while the resolution of a digital video "codec" involves pixels, but I think I know what you are trying to say. I agree that Yedlin is not trying to test the capability of any codec/format to store a given amount of pixels. There is absolutely no reason for such a test.

... which is precisely what we are discussing in this thread.

This issue has been covered in earlier posts.

Why would anyone test "non-perceived" resolution? "Projected?" -- not necessarily. "Perceived?" -- of course. Why do you say "projection"? I am not "perceiving" a projection.

Any crop can be a "1:1 portion," but for review purposes it is important to maintain standardized aspect ratios and resolution formats. For some reason, Yedlin chose to show his 1920x1080 "crop-to-fit" from a 4K image within an often square software "viewer" (thus forcing an additional crop), and then he outputted everything to a 1920x1280 file. WTF?! It's a crazy and wild comparison.
By the way, the comparisons mostly discussed so far in this thread involve an image from a single 6K camera. That image has been scaled to different resolutions.

Again, I make no claims as to whether digital resolution may be more or less important than other factors, but I argue that Yedlin's comparisons are useless in providing any solid conclusions in that regard. Yedlin went into his comparison with a significant confirmation bias. Your statement above acknowledges his strong leanings: "his idea sounds valid - starting from certain point digital resolution is less important then other factors." By glossing over uncontrolled variables and by ignoring significant potential objections, Yedlin tries to convert his bias into reality, rather than conducting a proper, controlled and objectively analyzed test. He suggests that there is no perceptual difference between 6K and 2K. He tries to prove that notion by downscaling the original 6K image to 2K, then comparing the original 6K and downscaled 2K images cropped within a 4K node editor viewer, and then outputting a screen capture of that node editor viewer to a video file with an odd resolution -- all of this is done without addressing any blending/interpolation/compression variables that occur during each step. Again, WTF?!

He is heavily reframing the original image within his node editor viewer.

I wasn't asking about the whole idea of his work. You said:

I was asking you to cite (with links to Yedlin's video) those specific reasons covered by Yedlin.

That notion may or may not be correct, but Yedlin's convoluted and muddled comparison is inconclusive in regards to the possible discernibility of different resolutions.

Thank you for creating that image. It is important for us to see such images with a 1-to-1 pixel match -- not enlarged.
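One quick way to check whether a given resize can even in principle keep whole pixels intact is to reduce the size ratio to an exact fraction: only 1:n and n:1 ratios map pixels onto whole pixels. This is a sketch; the example conversions are hypothetical widths/heights, not Yedlin's recorded settings, and an integer ratio is necessary but not sufficient, since the resampling filter still decides whether pixels blend.

```python
from fractions import Fraction


def scale_factor(src_px: int, dst_px: int) -> Fraction:
    """Exact ratio of destination to source size along one axis."""
    return Fraction(dst_px, src_px)


def whole_pixel_ratio(src_px: int, dst_px: int) -> bool:
    """True for integer upscales (1 -> n) or integer downscales (n -> 1).

    An integer ratio is necessary for keeping pixels intact, but not
    sufficient: the resampling filter still decides whether pixels blend.
    """
    f = scale_factor(src_px, dst_px)
    return f.numerator == 1 or f.denominator == 1


if __name__ == "__main__":
    # Hypothetical one-axis conversions for illustration.
    for src, dst in [(3840, 1920), (6144, 2048), (4096, 1920), (1080, 1280)]:
        f = scale_factor(src, dst)
        print(f"{src} -> {dst}: x{f} ({float(f):.4f}), whole-pixel: {whole_pixel_ratio(src, dst)}")
```

UHD to HD (1/2) and a 6144-to-2048 downscale (1/3) are whole-pixel ratios, while 4096 to 1920 (15/32) and 1080 to 1280 (32/27) force every output pixel to be a blend of source pixels.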
It appears that your use of the term "technical resolution" includes some degree or delineation of blended/interpolated pixels, not unlike those shown by the non-integer rulings in the first pixel chart that I posted. I am not sure if such blending/interpolation is visually quantifiable (even considering the pixel chart). Nevertheless, introducing any such blending/interpolation unnecessarily complicates a resolution comparison, and Yedlin does nothing to address nor to quantify the resulting "technical resolution" introduced by all of the scaling, interpolation and compression possibly introduced by the many convoluted steps of his test.

On the other hand, you have given a specific combination of adjusted variables (+5% scale, 1° rotation, slight skew), variables which Yedlin fails to record and report. However, how do we know that the "technical resolution" of your specific combination will match that of, say: a raw 4K shoot, edited in 4K in Sony Vegas with no stabilization nor scaling and then delivered in 4K ProRes 4:4:4?; someone shooting home movies in 4:2:0 AVCHD, edited on MovieMaker with sharpness and IS set at full and inadvertently output to some odd resolution in a highly compressed M4V codec?; someone shooting an EOSM with ML at 2.5K raw and scaled to HD in editing in Cinelerra with no IS and with 50% sharpening and output to an h264, All-I file? Do you think that just because all of these examples may or may not use some form of IS, sharpening and compression, they will all yield identical results in regards to the degree of pixel blending/interpolation? Again, just because Nickelback and the Beatles both use some form of guitars and drums, that doesn't mean that their results are the same.

If one intends to make any solid conclusions from a test, it is imperative to eliminate and/or assess the influence of any influential variables that are not being tested.
Yedlin did not achieve his self-touted 1-to-1 pixel match required to eliminate the influence of pixel blending, scaling, interpolation and compression, and he made no effort to properly address nor quantify the influence of those variables.

-

Thank you for taking the time to create a demo. I agree that achieving a 1-to-1 pixel match when outputting is easy, and I stated so earlier in this thread:

Indeed, with vigilant QC, a 1-to-1 pixel match can be maintained throughout an entire "imaging pipeline." However, Yedlin did not achieve such a pixel match, even though he spoke for over 4 minutes on the importance of a 1-to-1 pixel match for his resolution comparison.

I do appreciate your support, but your presentation doesn't seem conclusive. Perhaps I have misunderstood what you are trying to demonstrate. After opening your demo image in a separate tab, the only things that seem to be cleanly rendered without any pixel blending are the thinner vertical white line (a precise three pixels wide) and the wider horizontal white line (a precise six pixels wide). The other two white lines suffer from blending. Everything else in the image also suffers from pixel blending. If you make another attempt, please use this pixel chart as your original image, as it gives more, clearer information:

If the chart rulings labeled "1" are a clean, single pixel wide, then you have achieved a 1-to-1 pixel match.

Not that it matters, but there is no doubt that in "real life" many shooters capture at a certain resolution, maintain that same resolution throughout the "imaging pipeline" and then output at that very same resolution. Judging from quite a few of the posts on the EOSHD forum, many people don't even use lower-res proxy files during editing.

Regardless, when testing for differences in discernibility between various resolutions, it is important to control all of the variables other than resolution, as other variables that run wild and uncontrolled can muddle any slight discernibility distinction between different resolutions. If we allow such variables to eliminate the discernibility differences in the results, then what is the point of testing different resolutions?
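The "vigilant QC" step can be partly automated: take a lossless capture (e.g. PNG) of the output and search it for the original chart, pixel for pixel. If the chart is found unchanged somewhere in the frame, the chain was 1-to-1 at that point; any blended ruling breaks the match. This brute-force sketch works on plain 2-D lists of grey levels and assumes a lossless capture. A lossy codec changes pixel values, so it would fail this exact check even if the geometry were correct.

```python
def find_exact_match(source, frame):
    """Return (row, col) where `source` appears pixel-for-pixel in `frame`, or None.

    Both arguments are 2-D lists of pixel values (e.g. 8-bit grey levels).
    A match means every source pixel survived with no blending or
    rescaling, i.e. a 1-to-1 pixel match at that position.
    """
    sh, sw = len(source), len(source[0])
    fh, fw = len(frame), len(frame[0])
    for r in range(fh - sh + 1):
        for c in range(fw - sw + 1):
            if all(frame[r + y][c + x] == source[y][x]
                   for y in range(sh) for x in range(sw)):
                return (r, c)
    return None


if __name__ == "__main__":
    chart = [[255, 0, 255], [0, 255, 0]]    # tiny stand-in for a pixel chart
    frame = [[0] * 6 for _ in range(5)]     # captured output, all black
    for y, line in enumerate(chart):        # paste the chart at row 1, col 2
        for x, value in enumerate(line):
            frame[1 + y][2 + x] = value
    print(find_exact_match(chart, frame))   # found at (1, 2)
    frame[1][2] = 191                       # simulate one blended pixel
    print(find_exact_match(chart, frame))   # no longer found
```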
The title of Yedlin's comparison video is "Camera Resolutions," and we cannot conclude anything about differing resolutions until we eliminate the influence of all variables (other than resolution) that might muddle the results. Judging from the video's title and from Yedlin's insistence on a 1-to-1 pixel match, Yedlin's comparison should center on detecting discernibility differences between various resolutions. However, it appears that we agree that Yedlin is actually testing scaling methods and not resolution, as I (and others) have stated repeatedly:

Once again, I appreciate your support. On the other hand, even if the subject of Yedlin's comparison is scaling methods and other image processing effects, his test is hardly exhaustive or conclusive -- there are zillions of different possible scaling and image processing combinations, and he shows only three or four. Considering the countless possible combinations of scaling and/or image processing, it is slipshod reasoning to conclude that there is no practical difference between resolutions just because Yedlin used some form of scaling/image processing and in "real life" others may also use some form of scaling/image processing. Such coarse reasoning is analogous to the notion that there is no difference between Nickelback and the Beatles, because, in "real life," both bands used some form of guitars and drums. There are just too many possible scaling/image-processing variables and combinations in "real life" for Yedlin to properly address, even within his lengthy video.

Shooting and outputting in the same resolution probably isn't rare. I would guess that doing so is quite common. However, until someone can find some actual statistics, we have no way of knowing what the actual case is. In addition, I would guess that most digital displays are not projectors. Certainly, there might be existing statistics on monitor sales vs. projector sales.
In resolution tests, all such variables should be controlled/eliminated so that they do not influence the results. Please cite those reasons covered by Yedlin. Again, we agree here, and I have touched on similar points in this thread: Did you just recently learn that some camera manufacturers sometimes "fudge" resolution figures by counting photosites instead of RGB pixel groups? Did you forget that not all 4K sensors have a Bayer matrix?: Also, did you forget that I have already covered such photosite/pixel-group counting in this thread?: Not that it matters, but there are plenty of camera sensors that have true 4K resolution. Indeed, the maximum resolution figure for any current monochrome sensor cannot be "fudged," as there is no blending of RGB pixels with such a sensor. The same could be true of Foveon sensors, as the RGB receptors are all in the same photosite. I make no claims in regard to resolution in "the abstract." I simply assert that Yedlin's comparison is not a valid resolution test. In light of Yedlin's insistence on a 1-to-1 pixel match and his use of what he calls "crop to fit" over "resample to fit," I am not sure that Yedlin shares your notion of the context of his comparison. Also, what kind of camera and/or workflow is not "real"? I would guess that one could often discern the difference between 4K and 2K, but I will have to take your word on that. I am not "debating differences between resolutions." My concern is that folks are taking Yedlin's comparison as a valid resolution test. Well, if that is so, then Yedlin's test is not valid, as his comparison shows no difference between "6K" and "2K" and as his "workflow" is exceedingly particular. The thing is, in any type of empirical testing one usually has to be "100% scientific" to draw any solid conclusions. If one fails to properly address or control any influential variables, then the results can be corrupted and misleading.
In a proper test, the only variable(s) allowed to change should be the one(s) being tested. Yedlin's made-up terms are not really consequential to what is being discussed in this thread. Regarding the lack of tightness of Yedlin's argument/presentation, I suspect that there are a few posters in this thread who disagree with you. I am not at odds with Yedlin's semantics, nor am I arguing semantics. What gave you that notion? The method and execution shown in Yedlin's presentation are faulty to the point that they misinform folks in a way that definitely could matter to their own projects. If you think the topics that I argue don't matter for your own projects, that is fine, but please do not unfairly single me out as the only one who is discussing those topics. There are at least two sides to a discussion, and if you look at every one of my posts in this thread, you will see that I quote someone and then respond. I merely react to someone else who is discussing the very same topic that evidently doesn't matter to you. Why have you not directly addressed those others like you have done with me? By the way, I do not "enjoy" constantly having to repeat the same simple facts that some refuse to comprehend and/or accept. Great! I am happy that you have made your decision without relying on Yedlin's muddled comparison. There will likely be less of a gradient with a lower resolution. The viewing conditions in Yedlin's demo are not "normal": he uses a framing that he calls "crop to fit," which is not the normal framing; we see the entire comparison through the viewer of Yedlin's node editor; and the image suffers at least one additional pass of pixel blending/interpolation that would not be present under normal conditions. It is obvious when he zooms in here that the actual resolution of the two compared images is identical, as the pixel size does not change. However, there might be a difference in the interpolation and/or "micro contrast." Okay, but the demo is flawed.
Don't forget to verify a 1-to-1 pixel match within the NLE timeline viewer with a pixel chart. If the NLE viewer introduces its own blending/interpolation, try making short clips and playing them back-to-back in a loop with a player that can give true 1-to-1 full-screen 4K.
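A pixel chart check like the one described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: frames are plain nested lists of 8-bit gray values, the chart is a pure black/white ruling pattern, and the helper names (`make_chart`, `blended_pixel_count`, `shift_half_pixel`) are hypothetical, not part of any NLE or library. It only shows the principle: a chart that arrives with a true 1-to-1 match contains nothing but its original two levels, while a viewer that samples between pixels produces intermediate grays.

```python
# Minimal sketch: frames are nested lists of 8-bit gray values (an assumption).
# All helper names here are hypothetical, not from any NLE or library.

def make_chart(width, height, ruling_width):
    """Alternating black (0) and white (255) vertical rulings, each ruling_width pixels wide."""
    return [[0 if (x // ruling_width) % 2 == 0 else 255 for x in range(width)]
            for _ in range(height)]


def blended_pixel_count(frame, levels=(0, 255)):
    """Count pixels whose value is not one of the chart's original levels."""
    return sum(1 for row in frame for v in row if v not in levels)


def shift_half_pixel(frame):
    """Simulate a viewer sampling halfway between neighbouring pixels (linear blend).
    The last pixel in each row is blended with itself, so it keeps its value."""
    return [[(row[x] + row[min(x + 1, len(row) - 1)]) // 2 for x in range(len(row))]
            for row in frame]


chart = make_chart(8, 2, 1)  # single-pixel rulings, like the chart's "1" rulings
print(blended_pixel_count(chart))                    # 0  -> only pure black and white
print(blended_pixel_count(shift_half_pixel(chart)))  # 14 -> intermediate grays appear
```

In practice one would compare a screen capture of the viewer against the original chart file; if the blended count is non-zero for a chart that contains only integer-width rulings, the pipeline is not delivering a 1-to-1 match.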

-

There is a way to actually test resolution, which I have mentioned more than once before in this thread: test an 8K image on an 8K display, test a 6K image on a 6K display, test a 4K image on a 4K display, etc. That scenario is as exact as we can get, and that setup actually tests true resolution. Huh? I am not sure how that scenario logically follows from your notion that "there is no exact way to test resolutions," even if you ignore the simple, straightforward resolution testing method that I have previously suggested in this thread. What you propose is not actually testing resolution. Furthermore, it is likely that the "typical image pipeline" for video employs the same resolution throughout the process. In addition, if you "zoom in," you have to make sure that you achieve a 1-to-1 pixel match with no pixel blending (as stressed by Yedlin) if you want to be sure that you are truly comparing the actual pixels. However, zooming in sacrifices color depth, which could skew the comparison. You don't say? He also laid out the required criterion of a 1-to-1 pixel match, which he did not achieve and which you dismiss. TWO points: Yedlin's downscaling/upscaling method doesn't really test resolution, which invalidates the method as a "resolution test"; and Yedlin's failure to meet his own required 1-to-1 pixel match criterion invalidates the analysis. Yedlin took the very first 4 minutes and 23 seconds of his video to emphasize the 1-to-1 pixel match and its importance. Such a match is required for us to view "true, 4K pixels," as stated by Yedlin. If we can't see the true pixels, we can't conclude much about the discernibility of resolutions. Without that 1-to-1 pixel match we might as well just view the monitor through a 1/2 Promist filter. Yedlin didn't achieve that 1-to-1 pixel match, as you have already admitted. No. I stated that there is some form of blending and/or interpolation happening.
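The difference between a clean zoom and a blended one can be illustrated with a one-dimensional sketch. It assumes a row of 8-bit gray values; `zoom_nearest` and `zoom_linear` are hypothetical helpers, and linear interpolation is used only as a stand-in for whatever filter a given viewer actually applies. Pixel replication at a whole-number factor keeps every edge hard, while a fractional factor with interpolation invents intermediate values that were never in the source.

```python
def zoom_nearest(row, factor):
    """Whole-number zoom by pixel replication: each source pixel becomes `factor` pixels."""
    return [v for v in row for _ in range(factor)]


def zoom_linear(row, factor):
    """Zoom by any factor using linear interpolation between neighbouring source pixels."""
    n_out = int(len(row) * factor)
    out = []
    for i in range(n_out):
        # Map the centre of output pixel i back into source coordinates.
        src = (i + 0.5) / factor - 0.5
        src = min(max(src, 0.0), len(row) - 1.0)  # clamp at the row edges
        lo = int(src)
        hi = min(lo + 1, len(row) - 1)
        t = src - lo
        out.append(round(row[lo] * (1 - t) + row[hi] * t))
    return out


row = [0, 255, 0, 255]          # single-pixel black/white rulings
print(zoom_nearest(row, 2))     # [0, 0, 255, 255, 0, 0, 255, 255]
print(zoom_linear(row, 1.5))    # contains grays between 0 and 255
```

Note that `zoom_linear(row, 2)` also produces grays, because it interpolates even at a whole-number factor; a whole-number zoom only preserves the pixels if the viewer replicates them rather than filtering them.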
I suspect that the blending/interpolation occurs within the viewer of Yedlin's node editor. He should have made straight renders for us to view, and included a pixel chart at the beginning of those renders. Yes, but, again, the problem could begin with Yedlin's node editor viewer. I did not describe it as "scaling." As I have said, it appears to be some form of blending/interpolation. Compression could contribute to the problem, but it is not certain that it is doing so in this instance. Not that I claimed that it was "scaling," but whether or not we commonly see images "scaled" has no bearing on conducting a true resolution test. Compare differences in true resolutions first, and discuss elsewhere the effects of blending, interpolation, compression and scaling. It is plain what Yedlin meant by saying right up front in his video that a rigorous resolution test uses a 1-to-1 pixel match to show "true 4K pixels." Evidently, it is you who has misinterpreted Yedlin. Again, if you think that a 1-to-1 pixel match is not important to a resolution test, you really should confront Yedlin with that notion.

-

No, it doesn't. Different image scaling methods applied to videos made by different encoding sources yield different results. The number of such possible combinations is further compounded when adding compression. We can't say that Yedlin's combination of camera, node editor, node editor viewer, peculiar 1920x1280 resolution and NLE render method will match anyone else's. ... Two points... two very significant points. Even though you quoted me stating both of them, you somehow forgot one of those points in your immediate reply. On the other hand, it takes only a single dismissed point to bring catastrophe to an analysis or endeavor, even if that analysis/endeavor involves development by thousands of people. For example, there was an engineer named Roger who tested one part of a highly complex machine that many thousands of people worked on. Roger discovered a problem with that single part that would cause the entire machine to malfunction. Roger urged his superiors to halt the use of the machine, but they dismissed his warning -- his superiors were not willing "to throw away their entire analysis and planned timetable based on a single point." Here is the result of the decision to dismiss Roger's seemingly trivial objection. Here is Roger's story. Is it a fact that the sky is blue?: Regardless, a single, simple fact can debunk an entire world of analysis. To those who believe that the Earth is flat, here is what was known as the "black swan photo," which shows two oil platforms off the coast of California: From the given camera position, the bottom of the more distant platform -- "Habitat" -- should be obscured by the curvature of the Earth. So, since the photo shows Habitat's supports meeting the waterline, flat earthers conclude that there is no curvature of the Earth and, thus, that the Earth must be flat.
However, the point was made that there is excessive distortion from atmospheric refraction, as evidenced by Habitat's crooked cranes. Someone also provided a photo of the platforms from near the same camera position with no refractive muddling: This photo without distortion shows that the supports of the distant Habitat platform are actually obscured by the Earth's curvature, which "throws away the entire analysis" made by those who assert that the Earth is flat. Here is a video of 200 "proofs" that the Earth is flat, but all 200 of those proofs are rendered invalid by the single debunking of the "black swan" photo. Likewise, Yedlin's test is muddled by blended resolution and by a faulty testing method. So, we cannot conclude much about actual resolution differences from Yedlin's comparison. By the way, you have to watch the entire video of 200 flat earth proofs (2 hours) "to understand it." Enjoy! I certainly do. Please explain: what does humility have to do with fact? Incidentally, I did say that Yedlin was a good shooter, but that doesn't mean that he is a good scientist. It doesn't matter if Yedlin is the King of Siam, the Pope or an "inside straight" -- his method is faulty, as is his test setup. Yedlin failed to meet his own required criteria for his test setup, which he laid out emphatically and at length at the beginning of his video. Furthermore, his method of downscaling (and then upscaling) and then showing the results only at a single resolution (4K) does not truly compare different resolutions.

-

So what? Again, does that impress you? Furthermore, you suggested above that Yedlin's test applies to 99.9999% of content -- do you think that 99.9999% of content is shot with an Alexa65? Well, you left out a few steps of upscale/downscale acrobatics that negate the comparison as a resolution test. Most importantly, you forgot to mention that he emphatically stated that he had achieved a 1-to-1 pixel match on anyone's HD screen, but that he actually failed to do so, thus, invalidating all of his resolution demos. You are obviously an expert on empirical testing/comparisons...

-

Yedlin's resolution comparison doesn't apply to anything, because it is not valid. He is not even testing resolution. Perhaps you should try to convince Yedlin that such demonstrations don't require 1-to-1 pixel matches, because you think that 99.9999% of content has scaling and compression, and, somehow, that validates resolution tests with blended pixels and other wild, uncontrolled variables.

-

Yes, and I have repeated those same points many times earlier in this discussion. I was the one who linked the section in Yedlin's video that mentions viewing distances and viewing angles, and I repeatedly noted that he dismissed wider viewing angles and larger screens. How do you figure that I missed Yedlin's point in that regard? I am not sure what you mean here, nor why anyone would ever need to test "actual" resolution. The "actual" resolution is automatically the "actual" resolution, so there is no need to test it to determine whether it is "actual." Regardless, I have used the term "discernibility" frequently enough in this discussion that even someone suffering from a chronic reading comprehension deficit should realize that I am thoroughly aware that Yedlin is (supposedly) testing differences perceived between different resolutions. Again, Yedlin is "actually" comparing scaling methods with a corrupt setup. I am not sure how 1-to-1 pixels can be interpreted in any way other than every single pixel of the tested resolution matching every single pixel on the screen of the person viewing the comparison. Furthermore, Yedlin was specific and emphatic on this point: Emphasis is Yedlin's. It's interesting that this basic, fundamental premise of Yedlin's comparison was misunderstood by the one who insisted that I must watch the entire video to understand it -- while I only had to watch a few minutes at the beginning of the video to realize that Yedlin had not achieved the required 1-to-1 pixel match. It makes perfect sense that 1-to-1 pixels means that every single pixel of the test image matches every single pixel on one's display -- that condition is crucial for a resolution test to be valid. If the pixels are blended or otherwise corrupted, then the resolution test (which automatically considers how someone perceives those pixels) is worthless. It's obvious, and, unfortunately, it ruins Yedlin's comparisons.
Well, I didn't compare the exact same frames, and it is unlikely that you viewed the same frames if you froze the video in QuickTime and VLC. When I play the video of Yedlin's frozen frame while zoomed in on the Natron viewer, there are noticeable dancing artifacts that momentarily change the pixel colors a bit. However, since they repeat an identical pattern every time that I play the same moment in the video, those artifacts are likely inherent in Yedlin's render. Furthermore, blending is indicated by the fact that the general color and shade of the square's pixels and of the pixels immediately adjacent to it show less contrast with each other. In addition, the mottled pixel pattern of the square and its nearby pixels in the ffmpeg image generally matches the pixel pattern in the Natron image, while both images are unlike the drawn square in Yedlin's zoomed-in viewer, which is very smooth and precise. When viewing the square with a magnifier on the display, its edges certainly look blended -- just like the non-integer rulings on the pixel chart in my previous post. I suspect that Yedlin's node editor viewer is blending the pixels, even though it is set to 100%. Again, Yedlin easily could have verified a 1-to-1 pixel match by showing a pixel chart in his viewer, but he didn't. ... and whose fault is that? Then perhaps Yedlin shouldn't misinform his easily impressionable followers by making the false claim that he has achieved a 1-to-1 pixel match on his followers' displays. On the other hand, Yedlin could have additionally made short, uncompressed clips of such comparisons, or he could have provided uncompressed still frames -- but he did neither. It really is not that difficult to create uncompressed short clips or stills with a 1-to-1 pixel match, just as was done with the pixel charts above.
I am arguing that his resolution test is invalid primarily because he is actually comparing scaling methods, and even that comparison is rendered invalid by the fact that he did not show a 1-to-1 pixel match. Regarding Yedlin's and your dismissal of wider viewing angles because they are not common (nor recommended by SMPTE 🙄): again, such a notion reveals bias and should not be a consideration in any empirical test designed to determine discernibility/quality differences between different resolutions. A larger viewing angle is an important, valuable variable that cannot be ignored -- that's why Yedlin mentioned it (but immediately dismissed it as "not common"). Not that it matters, but there are many folks with multi-monitor setups that yield wider viewing angles than what you and Yedlin tout as "common." Also, again, there are a lot of folks who can see the individual pixels on their monitors, and increasing the resolution can render individual pixels indiscernible. Have you also forgotten about RGB striped sensors, RGBW sensors, Foveon sensors, monochrome sensors, X-Trans sensors and linear scanning sensors? None of those sensors has a Bayer matrix. It matters when trying to make a valid comparison of different resolutions. Also, achieving a 1-to-1 pixel match and controlling other variables is not that difficult, but one must first fundamentally understand the subject that one is testing. Nope. I am arguing that Yedlin's ill-conceived resolution comparison, with all of its wild, uncontrolled variables, is not valid. That's not a bad reason to argue for higher resolution, but I doubt that it is the primary reason that Arri made a 6K camera. I can't wait to get a Nikon, Canon, Olympus or Fuji TV! Cropping is a valid reason for higher resolution (but I abhor the practice).
My guess is that Arri decided to make a 6K camera because the technology exists, because producers were already spec'ing Arri's higher-res competition and because they wanted to add another reason to attract shooters to their larger-format Alexa. Perhaps you should confront Yedlin with that notion, because it is with Yedlin that you are now arguing. As linked and quoted above, Yedlin thought that it was relevant to establish a 1-to-1 pixel match and to provide a lengthy explanation in that regard. Furthermore, at the 04:15 mark in the same video, Yedlin adds that a 1-to-1 pixel match: Emphasis is Yedlin's. The point of a resolution discernibility comparison (and of other empirical tests) is to eliminate/control all variables except for the ones that are being compared. The calibration and image processing of home TVs and/or theater projectors is a whole other topic of discussion that needn't (and shouldn't) influence the testing of the single independent variable of differing resolution. If you don't think that it matters to establish a 1-to-1 pixel match in such a test, then please take up that issue with Yedlin -- who evidently disagrees with you!

-

Keep in mind that resolution is important to color depth. When we chroma subsample to 4:2:0 (as is likely with your A7SII example), we throw away chroma resolution and, thus, reduce color depth. Of course, compression also kills a lot of the image quality. Yedlin also used the term "resolute" in his video. I am not sure that it means what you and Yedlin think it means. It is impossible for you (the viewer of Yedlin's video) to see 1:1 pixels (as I will demonstrate), and it is very possible that Yedlin is not viewing the pixels 1:1 in his viewer. Merely zooming "2X" does not guarantee that either he or we are seeing 1:1 pixels. That is a faulty assumption. Well, it's a little more complex than that. The size of the pixels that you see is always the size of the pixels of your display, unless, of course, the zoom is sufficient to render the image pixels larger than the display pixels. Furthermore, pixels suffer blending and/or interpolation if the image pixels do not match those of the display 1:1, or if the image pixels are larger than those of the display without being a whole-number multiple of the display pixels. Unfortunately, all of the images that Yedlin presents as 1:1 most definitely are not a 1:1 match, with the pixels corrupted by blending/interpolation (and possibly compression). When Yedlin zooms in, we see a 1:1 pixel match between the two images, so there is no actual difference in resolution in that instance -- an actual resolution difference is not being compared here nor in most of the subsequent "1:1" comparisons. What is being compared in such a scenario is merely scaling algorithms/techniques. However, any differences even in those algorithms get hopelessly muddled due to the fact that the pixels that you (and possibly Yedlin) see are not actually a 1:1 match, and are thus additionally blended and interpolated. Such muddling destroys any possibility of making a true resolution comparison. No.
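The chroma resolution given up by 4:2:0 subsampling is easy to count. The sketch below assumes the usual meaning of the J:a:b notation (4:2:2 halves chroma horizontally; 4:2:0 halves it both horizontally and vertically), and the function name is hypothetical. It only counts samples; it makes no claim about how much of that loss is visible in a given image.

```python
def sample_counts(width, height, scheme):
    """Return (luma samples, samples per chroma plane) for a frame."""
    # (horizontal divisor, vertical divisor) applied to each chroma plane (Cb, Cr)
    divisors = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}
    dx, dy = divisors[scheme]
    luma = width * height
    chroma = (width // dx) * (height // dy)
    return luma, chroma


for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    print(scheme, sample_counts(3840, 2160, scheme))
# 4:2:0 UHD: 8294400 luma samples, but only 2073600 (1920x1080) per chroma plane
```

In other words, each chroma plane of a 4:2:0 UHD frame carries only HD resolution.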
Such a notion is erroneous, as the comparison method is inherently faulty and as the image "pipeline" Yedlin used unfortunately is leaky and septic (as I will show). Again, if one is to conduct a proper resolution comparison, the pixels from the original camera image should never be blended: an 8K captured image should be viewed on an 8K monitor; a 6K captured image should be viewed on a 6K monitor; a 4K captured image should be viewed on a 4K monitor; a 2K captured image should be viewed on a 2K monitor; etc. Scaling algorithms, interpolation and blending of the pixels corrupt the testing process. I thought that I made it clear in my previous post. However, I will paraphrase it so that you might understand what is actually going on: There is no possible way that you ( @kye ) can observe the comparisons with a 1:1 pixel match to the images shown in Yedlin's node editor viewer. In addition, it is very possible that even Yedlin's own viewer, when set at 100%, is not actually showing a 1:1 pixel match to Yedlin. Such a pixel mismatch is a fatal flaw when trying to compare resolutions. Yedlin claims that he established a 1:1 match because he knows that it is an important requirement for comparing resolutions, but he did not achieve a 1:1 pixel match. So, almost everything about his comparisons is meaningless. Again, Yedlin is not actually comparing resolutions in this instance. He is merely comparing scaling algorithms and interpolations here and elsewhere in his video, and those scaling comparisons are crippled by his failure to achieve a 1:1 pixel match in the video. Yedlin could have verified a 1:1 pixel match by showing a pixel chart within his viewer when it was set to 100%. Here are a couple of pixel charts: If the charts are displayed at 1:1 pixels, you should easily observe with a magnifier that all of the black pixel rulings with integer widths (1, 2, 3, etc.) are cleanly defined, with no blending into adjacent pixels.
On the other hand, with a 1:1 match, all of the black pixel rulings with non-integer widths (1.3, 1.6, 2.1, 2.4, 3.3, etc.) should show blending on their edges. Without such a chart, it is difficult to confirm that one pixel of the image coincides with one pixel in Yedlin's video. Either Steve Yedlin, ASC was not savvy enough to include the fundamental verification of a pixel chart, or he intentionally avoided verification of a pixel match. However, Yedlin unwittingly provided something that proves his failure to achieve a 1:1 match. At 15:03 in the video, Yedlin zooms way in on a frozen frame, and he draws a precise 4x4 pixel square over the image. At the 16:11 mark in the video, he zooms back out to the 100% setting in his viewer, showing the box at the alleged 1:1 pixels. You can freeze the video at that point and see for yourself with a magnifier that the precise 4x4 pixel square has blended edges (unlike the clean-edged integer rulings on the pixel charts). However, Yedlin claims there is a 1:1 pixel match! I went even further than just using a magnifier. I zoomed in on that "1:1" frame using two different methods, and then I made a side-by-side comparison image: All three images in the above comparison were taken from the actual video posted on Yedlin's site. The far-left image shows Yedlin's viewer fully zoomed in when he draws the precise 4x4 pixel square. The middle and right images are zoomed into Yedlin's viewer when it is set to 100% (with an allegedly 1:1 pixel match). There is no denying the excessive blending and interpolation revealed when zooming in on the square or when magnifying one's display. No matter how finely one can change the zoom amount in one's video player, one will never be able to see a 1:1 pixel match with Yedlin's video, because the blending/interpolation is inherent in the video. Furthermore, the blending/interpolation is possibly introduced by Yedlin's node editor viewer when it is set to 100%.
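Why integer-width rulings stay clean at 1:1 while non-integer rulings blend can be shown by box-filtering (area-averaging) a ruling onto a pixel grid. This is a simplified model, assuming a white background, a black ruling and a renderer that sets each pixel to its uncovered fraction; `render_ruling` and `is_clean` are hypothetical names, and real chart generators may use other filters. The same model also shows that an integer-width feature (like a 4x4 square) only stays clean if it lands exactly on pixel boundaries.

```python
def render_ruling(width_px, start=0.0, canvas=8):
    """Area-average a black ruling [start, start + width_px) onto a white row of `canvas` pixels.
    Each pixel's value is 255 times the fraction of that pixel NOT covered by the ruling."""
    end = start + width_px
    row = []
    for x in range(canvas):
        covered = max(0.0, min(x + 1, end) - max(x, start))
        row.append(round(255 * (1 - covered)))
    return row


def is_clean(row):
    """True if every pixel is pure black or pure white (no blended edge)."""
    return all(v in (0, 255) for v in row)


print(is_clean(render_ruling(2)))             # True:  integer width on the pixel grid
print(is_clean(render_ruling(1.3)))           # False: non-integer width blends an edge
print(is_clean(render_ruling(4, start=0.5)))  # False: integer width, half-pixel offset
```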
Hence, Yedlin's claimed 1:1 pixel match is false. By the way, in my comparison photo above, the middle image is from a TIFF created by ffmpeg, to avoid further compression. The right image was made by merely zooming into the frozen frame playing in the viewer of the Natron compositor. Correct. That is what I have stated repeatedly. The thing is, he uses this same method in almost every comparison, so he is merely comparing scaling methods throughout the video -- he is not comparing actual resolution. What? Of course there are such "pipelines." One can shoot with a 4K camera, process the resulting 4K files in post and then display in 4K, and the resolution never increases or decreases at any point in the process. Are you trying to validate Yedlin's upscaling/downscaling based on semantics? It is generally accepted that a photosite on a sensor is a single microscopic receptor, often filtered with a single color. A combination of more than one adjacent receptor with red, green, blue (and sometimes clear) filters is often called a pixel or pixel group. Likewise, an adjacent combination of RGB display cells is usually called a pixel. However you choose to define the terms or to group the receptors/pixels, it will have little bearing on the principles that we are discussing. Huh? What do you mean here? How do you get those color value numbers from 4K? Are you saying that all cameras are under-sampling compared to image processing and displays? Regardless of how the camera resolution is defined, one can create a camera image, process that image in post and then display it, all without any increase or decrease of the resolution at any step in the process. In fact, such image processing with consistent resolution at each step is quite common. It's called debayering... except when it isn't.
There is no debayering with: an RGB striped sensor; an RGBW sensor; a monochrome sensor; a scanning sensor; a Foveon sensor; an X-Trans sensor; a three-chip camera; etc. Additionally, raw files made with a Bayer matrix sensor are not debayered. I see where this is going, and your argument is simply a matter of whether we agree to determine resolution by counting the separate red, green and blue cells or by counting RGB pixel groups formed by combining those adjacent red, green and blue cells. Jeez Louise... did you just recently learn about debayering algorithms? The conversion of adjacent photosites into a single RGB pixel group (Bayer or not) isn't considered "scaling" by most. Even if you define it as such, that notion is irrelevant to our discussion -- we necessarily have to assume that a digital camera's resolution is given either by the output of its ADC or by the resolution of the camera files. We just have to agree on whether we are counting the individual color cells or the combined RGB pixel groups. Once we agree upon the camera resolution, that resolution need never change throughout the rest of the "imaging pipeline." You probably shouldn't have emphasized that point, because you are incorrect, even if we use your definition of "scaling." There are no adjacent red, green or blue photosites to combine ("scale") with digital Foveon sensors, digital three-chip cameras and digital monochrome sensors. Please, I doubt that even Yedlin would go along with you on this line of reasoning. We can determine the camera resolution merely from the output of the ADC or from the camera files. We just have to agree on whether we are counting the individual color cells or the combined RGB pixel groups. After we agree on the camera resolution, that resolution need never change throughout the rest of the "imaging pipeline." Regardless of these semantics, Yedlin is just comparing scaling methods and not actual resolution.
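The two counting conventions being argued over can be made concrete with simple arithmetic. This sketch assumes an RGGB Bayer mosaic and, purely for illustration, treats each non-overlapping 2x2 block as one RGB pixel group; actual debayering algorithms usually interpolate a full RGB value for every photosite instead, so the photosite count is the figure normally quoted. The function name and the 4096x2160 example are hypothetical.

```python
def bayer_counts(photosites_w, photosites_h):
    """Count photosites and non-overlapping 2x2 RGGB groups on a Bayer sensor."""
    photosites = photosites_w * photosites_h
    groups_w, groups_h = photosites_w // 2, photosites_h // 2
    groups = groups_w * groups_h
    # Within each RGGB group: one red, two green and one blue photosite.
    per_color = {"R": groups, "G": 2 * groups, "B": groups}
    return {
        "photosites": photosites,
        "pixel_groups": groups,
        "group_grid": (groups_w, groups_h),
        "per_color": per_color,
    }


print(bayer_counts(4096, 2160))
```

Under the 2x2-group convention, a sensor described by its 4096x2160 photosite count yields a 2048x1080 grid of RGB groups; for a monochrome sensor there is no color mosaic to group, so the two counts coincide.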
When one is trying to determine whether higher resolutions can yield an increase in discernibility or in perceptible image quality, it is irrelevant to consider the statistics of common or uncommon setups. The alleged commonality and feasibility of the setup is a topic that should be left for another discussion, and such notions should influence neither any scientific testing nor the weight of any findings of the tests. By dismissing greater viewing angles as uncommon, Yedlin reveals his bias. Such dismissiveness of important variables corrupts his comparisons and conclusions, as he avoids testing larger viewing angles and merely concludes that larger screens are "special." Well, if I had a 4K monitor, I imagine that I could tell the difference between a 4K and a 2K image. Not that it matters, but close viewing proximity is likely much more common than Yedlin realizes and much more common than your web searching shows. In addition to IMAX screens, movie theaters with seats close to the screen, amusement park displays and Jumbotrons, many folks position their computer monitors close enough to see the individual pixels (at least when they lean forward). If one can see individual pixels, a higher-resolution monitor of the same size can make those individual pixels "disappear." So, higher resolution can yield a dramatic difference in discernibility, even in common, everyday scenarios. Furthermore, a higher-resolution monitor with the same size pixels as a lower-resolution monitor gives a much more expansive viewing angle. As many folks use multiple computer monitors side by side, the value of such a wide view is significant. Whatever. You can claim that combining adjacent colored photosites into a single RGB pixel group is "scaling." Nevertheless, the resolution need never change at any point in the "imaging pipeline." Regardless, Yedlin is merely comparing scaling methods and not resolution.
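Whether individual pixels are visible at a given desk or seat can be estimated from the angle each pixel subtends. The sketch below assumes a roughly 23.5-inch-wide (about 27-inch diagonal, 16:9) monitor viewed from 24 inches, and uses the common rule of thumb that normal visual acuity resolves about one arcminute; both numbers are illustrative assumptions, and the function name is hypothetical.

```python
import math

ACUITY_ARCMIN = 1.0  # rule-of-thumb threshold for normal visual acuity (assumption)


def pixel_arcminutes(screen_width, horizontal_pixels, distance):
    """Angle subtended by one pixel, in arcminutes.
    screen_width and distance must use the same unit (inches here)."""
    pitch = screen_width / horizontal_pixels
    return math.degrees(2 * math.atan(pitch / (2 * distance))) * 60


for label, pixels in (("HD", 1920), ("4K", 3840)):
    angle = pixel_arcminutes(23.5, pixels, 24)
    visible = angle > ACUITY_ARCMIN
    print(f"{label}: {angle:.2f} arcmin per pixel, individually visible: {visible}")
```

With these assumed numbers, HD pixels come out at roughly 1.75 arcminutes (above the threshold) and 4K pixels at roughly 0.88 (below it), which is the "disappearing pixels" effect described above.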
Well, we can't really draw such a conclusion from Yedlin's test, considering all of the corruption from blending and interpolation caused by his failure to achieve a 1:1 pixel match. How is this notion relevant or a recap? Your statistics and what you consider to be likely or common in regard to viewing angles/proximity are irrelevant in determining the actual differences in discernibility between resolutions. Also, you and Yedlin dismiss sitting in the very front row of a movie theater. That impresses you? I am not sure how that point is relevant (nor how it is a recap), but please ask yourself: if there is no difference in discernibility between higher resolutions, why would Arri (the maker of some of the highest-quality cinema cameras) offer a 6K camera? Yes, but such points don't shed light on any fundamental differences in the discernibility of different resolutions. Also, how is this notion a recap? You are incorrect, and this notion is not a recap. Please note that my comments in an earlier post regarding Yedlin's dismissal of wider viewing angles referred and linked to a section at 0:55:27 in his 1:06:54 video. You only missed all of the points that I made above in this post and in earlier posts. No, it's not clear. Yedlin's "resolution" comparisons are corrupted by the fact that the pixels are not a 1:1 match and by the fact that he is actually comparing scaling methods -- not resolution.

-

Oh, that is such a profound story. I am sorry to hear that you lost your respect for Oprah. Certainly, there are some lengthy videos that cannot be criticized after merely knowing the premise, such as this 3-hour video that proves that the Earth is flat. It doesn't make any sense at the outset and it is rambling, but you have to watch the entire 2 hours and 55 minutes, because (as you described the Yedlin video in an earlier post) "the logic of it builds over the course of the video." Let me know what you think after you have watched the entire flat Earth video. Now, reading your story has moved me, so I watched the entire Yedlin video! Guess what? -- The video is still fatally flawed, and I found even more problems. Here are four of the video's main faults:

1. Yedlin's setup doesn't prove anything conclusive in regard to perceptible differences between various higher resolutions, even if we assume that a cinema audience always views a projected 2K or 4K screen. Much of the discernibility required for such a comparison is destroyed by his downscaling a 6K file to 4K (and also to 2K and then back up to 4K) within a node editor, while additionally rendering the viewer window to an HD video file. To properly make any such comparison, we must at least start with 6K footage from a 6K camera, 4K footage from a 4K camera, 2K footage from a 2K camera, etc.
2. Yedlin's claim here that the node editor viewer's pixels match 1-to-1 to the pixels on the screen of those watching the video is obviously false. The pixels in his viewer window don't even match 1-to-1 the pixels of his rendered HD video. This pixel mismatch is a critical flaw that invalidates almost all of his demonstrations that follow.
3. At one point, Yedlin compared the difference between 6K and 2K by showing the magnified individual pixels. This magnification revealed that the pixel size and pixel quantity did not change when he switched between resolutions, nor did the subject's size in the image. Thus, he isn't actually comparing different resolutions in much of the video -- if anything, he is comparing scaling methods.
4. Yedlin glosses over the factor of screen size and viewing angle. He cites dubious statistics regarding common viewing angles, which he uses to draw the shaky conclusion that larger screens aren't needed. Additionally, he avoids considering the fact that larger screens are integral to any discussion of resolution -- if an 8K screen and an HD screen have the same pixel size, at a given distance the 8K screen will occupy 16 times the area of the HD screen. That's a powerful fact regarding resolution, but Yedlin dismisses larger screens as a "specialty thing."

Now that I have watched the entire video and have fully understood everything that Yedlin was trying to convey, perhaps you could counter the four problems of Yedlin's video listed directly above. I hope that you can do so, because otherwise I just wasted over an hour of my time that I cannot get back.
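A quick arithmetic check of the "16 times the area" figure, as a minimal sketch. It assumes "HD" means 1920x1080 and "8K" means 7680x4320 (DCI variants differ slightly) and that both screens share the same pixel pitch, so physical area scales with pixel count. The 0.5 mm pitch and 3 m viewing distance are hypothetical values chosen only to show how much wider the 8K screen appears from the same seat.

```python
import math

# Assumed frame sizes (not stated in the post): HD = 1920x1080, "8K" = 7680x4320.
HD = (1920, 1080)
UHD_8K = (7680, 4320)


def screen_size_mm(resolution, pixel_pitch_mm):
    """Physical width and height of a screen whose pixels are pixel_pitch_mm wide."""
    w, h = resolution
    return w * pixel_pitch_mm, h * pixel_pitch_mm


def area_ratio(res_a, res_b):
    """Ratio of screen areas when both screens share the same pixel pitch.

    With a common pitch, physical area scales with pixel count, so the pitch cancels out.
    """
    return (res_a[0] * res_a[1]) / (res_b[0] * res_b[1])


def horizontal_view_angle_deg(width_mm, distance_mm):
    """Horizontal angle subtended by a flat screen viewed head-on from its center."""
    return math.degrees(2 * math.atan(width_mm / (2 * distance_mm)))


if __name__ == "__main__":
    pitch = 0.5        # hypothetical pixel pitch in mm
    distance = 3000.0  # hypothetical viewing distance in mm
    print(area_ratio(UHD_8K, HD))  # 16.0
    for name, res in (("HD", HD), ("8K", UHD_8K)):
        width, _ = screen_size_mm(res, pitch)
        print(name, round(horizontal_view_angle_deg(width, distance), 1), "degrees")
```

Each dimension is 4 times larger, so the area is 4 x 4 = 16 times larger; the viewing angle grows by less than 4 times because of the arctangent.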

-

The video's setup is flawed, and there are no parts in the video which explain how that setup could possibly work to show differences in actual resolution. If you disagree and think that you "understand" the video better than I do, then you should have no trouble countering the three specific flaws of the video that I numbered above. However, I doubt that you actually understand the video or the topic, as you can't even link to the points in the video that might explain how it works. I see. So, you actually have no clue about the topic, and you are just trolling.

-

Good for you! Nope. The three points that I made prove that Yedlin's comparisons are invalid in regard to comparing the discernibility of different resolutions. If you can explain exactly how he gets around those three problems, I will take back my criticism of Yedlin. So far, no one has given any explanation of how his setup could possibly work. Yes. I mentioned that section and linked it in a previous post. There is no way his setup can provide anything conclusive in regard to the lack of any discernibility between different resolutions. He assumes that the 1-to-1 pixel view happens automatically, in a section that I linked in my previous post. Again, here is the link to that passage in Yedlin's video. It is impossible to get a 1-to-1 view of the pixels that appear within Yedlin's node editor -- those individual pixels were lost the moment he rendered the HD video. So, most of his comparisons are meaningless. No, he doesn't show a 1-to-1 pixel view, because it is impossible to actually see the individual pixels within his node editor viewer. Those pixels were blended together when he rendered the HD video. In addition, even if Yedlin were able to achieve a 1-to-1 pixel match in his rendered video, the downscaling, or the downscaling and subsequent upscaling, performed in the node editor destroys any discernible difference in resolutions. He is merely comparing scaling algorithms -- not actual resolution differences. Furthermore, Yedlin reveals to us what is actually happening in many of his comparisons when we see the magnified view. The pixel size, the pixel number and the image framing all remain identical while he switches between different resolutions. So, again, Yedlin is not comparing different resolutions -- he is merely comparing scaling algorithms. That is at the heart of what he is demonstrating. Yedlin is comparing the results of various downscaling and upscaling methods. He really isn't comparing different resolutions. Yedlin is not comparing different resolutions -- he is merely comparing scaling algorithms. You need to face that fact. Yedlin is a good shooter, but he is hardly an imaging scientist, and he certainly is no pioneer. There are just too many flaws and uncontrolled variables in his comparisons to draw any reasonable conclusions. He covers the same ground and makes the same classic mistakes as others who have preceded him, so he doesn't really offer anything new. Furthermore, as @jcs pointed out, Yedlin's comparisons are "way too long and rambly." From what I have seen in these resolution videos and in his other comparisons, Yedlin glosses over inconvenient points that contradict his bias, and his methods are slipshod. In addition, I haven't noticed him contributing anything new to any of the topics which he addresses. Indeed... I don't think that Yedlin even understands his own tests. Well, I don't understand how a resolution comparison is valid if one doesn't actually compare different resolutions. You obviously cannot explain how such a comparison is possible. So, I am not going to risk wasting an hour of my time watching more of a video comparison that is fatally flawed from the get-go.
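A minimal sketch of why rendering a viewer window into a video file blends pixels unless the mapping is exactly 1-to-1. It assumes linear interpolation and a hypothetical 2000-pixel-wide viewer region rendered into a 1920-pixel-wide HD frame; neither the filter nor the viewer width comes from Yedlin's video. A row of alternating black and white pixels is the harshest case, since any blending produces in-between values.

```python
def resample_linear(row, out_len):
    """Resample a row of pixel values to out_len samples using linear interpolation.

    Output pixel centers are mapped back into input coordinates; edges are clamped.
    """
    n = len(row)
    scale = n / out_len
    out = []
    for j in range(out_len):
        x = (j + 0.5) * scale - 0.5   # output pixel center in input coordinates
        x = min(max(x, 0.0), n - 1)   # clamp to the valid input range
        i0 = int(x)                   # left neighbour (x >= 0, so int() floors)
        i1 = min(i0 + 1, n - 1)       # right neighbour
        t = x - i0                    # fractional distance between the neighbours
        out.append(row[i0] * (1 - t) + row[i1] * t)
    return out


def is_one_to_one(row, out_len):
    """True if every output value is an unblended copy of some input value."""
    original_values = set(row)
    return all(v in original_values for v in resample_linear(row, out_len))


if __name__ == "__main__":
    stripes = [0, 1] * 1000                # hypothetical 2000-px viewer row of 1-px stripes
    print(is_one_to_one(stripes, 2000))    # True: a scale of exactly 1 blends nothing
    print(is_one_to_one(stripes, 1920))    # False: neighbouring pixels get mixed
    print(resample_linear(stripes, 1920)[:4])
```

Nearest-neighbour resampling would avoid in-between values, but with a non-integer ratio it drops or duplicates whole pixels instead, so the result still isn't a 1-to-1 view.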

-

You are incorrect. Regardless, I have watched enough of the Yedlin videos to know that they are significantly flawed. I know for a fact that:

1. Yedlin's setup cannot prove anything conclusive in regard to perceptible differences between various higher resolutions, even if we assume that a cinema audience always views a projected 2K or 4K screen. Much of the discernibility required for such a comparison is destroyed by his downscaling a 6K file to 4K (and also to 2K and then back up to 4K) within a node editor, while additionally rendering the viewer window to an HD video file. To properly make any such comparison, we must at least start with 6K footage from a 6K camera, 4K footage from a 4K camera, 2K footage from a 2K camera, etc.
2. Yedlin's claim here that the node editor viewer's pixels match 1-to-1 to the pixels on the screen of those watching the video is obviously false. The pixels in his viewer window don't even match 1-to-1 the pixels of his rendered HD video. This pixel mismatch is a critical flaw that invalidates almost all of his demonstrations that follow.
3. At one point, Yedlin compared the difference between 6K and 2K by showing the magnified individual pixels. This magnification revealed that the pixel size and pixel quantity did not change when he switched between resolutions, nor did the subject's size in the image. Thus, he isn't actually comparing different resolutions in much of the video -- if anything, he is comparing scaling methods.

In one of my earlier posts above, I provided a link to the section of Yedlin's video in which he demonstrates the exact flaw that I criticized. Somehow, you missed the fact that what I claimed about the video is actually true. I also mentioned other particular problems of the video in my earlier posts, and you missed those points as well. So, no "straw man" here. As I have suggested in another thread, please learn some reading comprehension skills, so that I and others don't have to keep repeating ourselves. By the way, please note that directly above (within this post) is a numbered list in which I state flaws inherent in Yedlin's video, and please see that with each numbered point I include a link to the pertinent section of Yedlin's video. You can either address those points or not, but please don't keep claiming that I have not watched the video. Given that I actually linked portions of the video and made other comments about other parts of the video, logic dictates that I have at least watched those portions of the video. So, stating that I have not watched the video is illogical. Unless, of course, you missed those points in my post, in which case I would urge you once again to please develop your reading comprehension. Actually, neither you nor any other poster has directly addressed the flaws in Yedlin's video that I pointed out. If you think that I do not understand the videos, perhaps you could explain what is wrong with my specific points. You can start with the numbered list within this post.

-

Agreed, but what is the point of all the downscaling and upscaling? What does it prove in regard to comparing the discernibility of different resolutions? How can we compare different resolutions if the differences in resolution are nullified at the outset of the comparison? In addition, is this downscaling and then upscaling something that is regularly done in practice? I have never heard of anyone intentionally doing so. Also, keep in mind that what Yedlin actually did was to downscale from 6K/4K to 2K, then upscale to 4K... and then downscale back to 1080. The delivered videos both on Yedlin's site and in your YouTube links are 1080. I honestly do not understand why anyone would expect to see much of a general difference after straight downscaling and then upscaling, especially when the results are rendered back down to HD. Please enlighten me on what is demonstrated by doing so. Furthermore, Yedlin merely runs an image through different scaling nodes in editing software while peering at the software's viewer, and I am not sure that doing so gives the same results as actually rendering an image to a lower resolution, then re-rendering it back to a higher resolution. Of course, there are numerous imaging considerations that transcend simple pixel counts. That issue has been examined endlessly on this forum and elsewhere, and I am not certain whether Yedlin adds much to the discussion. By the way, my above quote from @jcs came from a 2-page "detail enhancement" thread on EOSHD. The inventive and original approach introduced within @jcs's opening post gives significant insight into sharpness/acuity properties that are more important than simple resolution. In that regard, the 1+ hour video on resolution by Steve Yedlin, ASC is far surpassed by just six short paragraphs penned by JCS, EOSHD.
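A toy one-dimensional sketch of what a downscale-then-upscale round trip does to detail. It assumes a box (area-average) filter for the downscale and linear interpolation for the upscale, with a 2:1 factor (roughly 4K to 2K and back); these filters are stand-ins chosen for illustration, not the scaling nodes Yedlin actually used. Single-pixel stripes collapse to flat gray and cannot be recovered, while a wider bar survives with softened edges, so what a round trip shows depends heavily on the chosen filters and on the scale of the subject detail.

```python
def box_downscale(row, factor):
    """Downscale by averaging each non-overlapping run of `factor` pixels (box filter)."""
    if len(row) % factor:
        raise ValueError("row length must be a multiple of factor")
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]


def resample_linear(row, out_len):
    """Resample a row to out_len samples with linear interpolation, clamping at the edges."""
    n = len(row)
    scale = n / out_len
    out = []
    for j in range(out_len):
        x = min(max((j + 0.5) * scale - 0.5, 0.0), n - 1)  # output center in input coords
        i0 = int(x)
        i1 = min(i0 + 1, n - 1)
        t = x - i0
        out.append(row[i0] * (1 - t) + row[i1] * t)
    return out


def round_trip(row, factor):
    """Downscale by `factor`, then upscale back to the original length."""
    return resample_linear(box_downscale(row, factor), len(row))


def max_abs_error(a, b):
    """Largest per-pixel difference between two equal-length rows."""
    return max(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    fine = [0, 1] * 6                      # 1-px stripes: the finest possible detail
    coarse = [0] * 4 + [1] * 4 + [0] * 4   # a 4-px-wide bar: coarser detail
    print(round_trip(fine, 2))             # flat 0.5 everywhere: the stripes are gone
    print(round_trip(coarse, 2))           # the bar survives with softened edges
    print(max_abs_error(fine, round_trip(fine, 2)),
          max_abs_error(coarse, round_trip(coarse, 2)))
```

Swapping in a different downscale or upscale filter changes these numbers, which is the sense in which such a round trip mainly exercises the scaling methods.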

-

Then Tupp said that he didn't watch it, criticised it for doing things that it didn't actually do, then suggests that the testing methodology is false. Your interpretation of my interaction with your post here is certainly interesting. However, there is no need for interpretation, as one can merely scroll up to see my comments. Nevertheless, I would like to clarify a few things. Firstly, as I mentioned, I merely scanned the Yedlin videos for two reasons: I immediately encountered what are likely flaws in his comparisons (to which you refer as "things that it didn't actually do"), and Yedlin's second resolution video is unnecessarily long and ponderous. Why should one waste time watching over an hour of long-winded comparisons that are dubious from the get-go? Yedlin expects viewers to judge the differences in discernibility between 6K, 4K and 2K footage rendered to a full HD file on the viewers' own monitors. Also, we later see some of the footage downscaled to 2K and then upscaled to 4K. Unfortunately, even if we are able to view the HD file pixels at 1-to-1 on our monitors, most of the comparisons are still not valid. His zooming in and out while switching between downscaled/upscaled images is not equivalent to actually comparing 8K capture on an 8K monitor with 4K capture on a 4K monitor with 2K capture on a 2K monitor, etc. In regard to my claims of flaws in the video which are "things that it didn't actually do," here is the passage in the video that I mentioned, in which the pixel size and pixel quantity did not change, nor did the subject's size in the image. By the way, I disagree with his statement that the 2K image is less "resolute" than the 6K image when zoomed in. At that moment in the video, the 2K image looks sharper than the 6K image to me, both when zoomed in and zoomed out. So much for the flaws in the video "that it didn't actually do." As for the laborious length of some of Yedlin's presentations, I am not the only one here who holds that sentiment.
Our own @jcs commented on the very resolution comparisons that we are discussing: Please note that this comment appeared in an informative thread about "Multi-spectral Detail Enhancement," acuity and sharpness, which is somewhat relevant to this thread regarding resolution. Nice comments! Thank you for your thoughtful consideration of my points!

-

Thank you for the recommendation! I don't see any flaw in his strategy, but it also seems rather generic. Not sure if I should spend an hour watching a video that can be summed up in a single sentence (as you just did). Also, I hesitate to watch a lengthy video that has fundamental problems inherent in its demonstrations (such as showing resolution differences without changing the pixel size or the pixel number).

-

Very cool! Your rig reminds me of @ZEEK's EOSM Super 16 setup. It shoots 2.5K, 10-bit continuously or 2.8K, 10-bit continuously with ML at around 16mm and Super 16 frame sizes.

-