Towd

Members

Posts: 117


Everything posted by Towd

-

I've thought about using that mode, but I still prefer to capture in max quality 5k open gate or C4k in 10 bit. Then again, I typically don't do super fast turnarounds and just run proxies out overnight on all my footage using Premiere. I can totally see the appeal for home movies and such, and 3k seems like a nice sweet spot for a 1080p product. One thing I have evolved into for slow motion is the 1080p 10 bit at 60fps over one of the 4k 60fps modes in 8 bit. I very much prefer all the 10 bit modes to the 8 bit ones on the GH5, and as your test shows, the 1080p is quite good. The 8 bit modes grade kind of crummy, especially on things like skies and clouds approaching clipping. Oh, but just to note, I shoot V-Log for everything-- just to keep things consistent.

-

Nice tests! Well worth downloading the ProRes file, as the YouTube compression really plays hell with all the stress tests. To my eye they all looked pretty solid. Maybe someone can pixel peep some motion blur variations, or I'm totally missing something. The only thing that popped out to me was some exposure variation on the trees blowing when using the open gate modes. But since everything else looked perfectly matched up, I'm guessing that was just some shoot variation on that test. As a total gut check, I probably liked the 1080p All-I and C4k All-I the best, but if it was a blind test, I'd probably fail at identifying them. Also, thanks for 24p on all the tests! 👍

-

Really nice to hear that the Veydra guys seem to have worked things out and are endorsing the Meike lenses. I picked up a 25mm T2.2 version of the Meike design when I first heard they were available and my curiosity got the best of me. I absolutely love that lens, but had held off on getting more until I knew what the story was with Meike. I may need to add a 12mm and a 50mm to complete a small set for my GH5 soon. With the Extra Tele converter mode that punches in 1.4x, I'd have a very compact 3 lens set that covers 12mm, 17mm, 25mm, 35mm, 50mm, and 70mm for about the cost of one Veydra! 😃
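For anyone who wants to check the six focal lengths, here is a minimal sketch of the arithmetic. It assumes the Extra Tele Converter punch-in is a flat 1.4x, as stated in the post; the function name is made up for illustration.

```python
def effective_focal_lengths(primes_mm, punch_in=1.4):
    """Return each prime's native focal length plus its punched-in equivalent.

    punch_in is the crop multiplier of the tele converter mode
    (1.4x here, taken from the post; other modes may differ).
    """
    lengths = []
    for focal in primes_mm:
        lengths.append(focal)             # native field of view
        lengths.append(focal * punch_in)  # same lens with the 1.4x punch-in
    return sorted(lengths)


if __name__ == "__main__":
    for focal in effective_focal_lengths([12, 25, 50]):
        print(f"{focal:.1f}mm")
```

The 12mm with the punch-in works out to 16.8mm, which is where the rounded 17mm in the list comes from.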

-

Sounds good to me! I agree and totally get it. To be honest, I'm going to be really surprised if we discover any reasonable prosumer camera or higher that is recording frames at inconsistent rates. Yes, a cheapo camera or phone might be doing something like a 3:2 pulldown or some drop frame process while recording at a different frame rate. Who knows! I may be wrong, and I'd love to find out if some manufacturer has been sneaking that by us. But, if there is some kind of drop frame processing happening, I would imagine it would be in a very consistent pattern-- like 60 hz converted to 24fps. I really can't see a camera being so dodgy that it is inconsistently recording exposures at subtly different rates from frame to frame to frame. I know in time-lapse mode on some still cameras there can be minor variations, but I'm going to be floored if we discover this in a video recording mode. My personal theory is that most motion cadence issues have to do with motion blur artifacts, incorrect or variable shutter angles (like from aperture priority shooting), rolling shutter, or playback issues on the viewer's side. In regards to 24 vs 25 fps... Isn't PAL a regional standard for like the local news? I'm pretty sure 24 fps is the global film/cinema standard. You are doing the tests, so I'll get by with whatever, but seriously... 25 fps.... really??? 😎 I can run some more tests if it helps. It's pretty fast on my end. I think you can get a 15 or 30 day trial if you want to try it. It's pretty useful, if a little obtuse. In regards to IBIS, I think we are certainly introducing errors into our measurements by using it. Could be interesting to test jitter/cadence with and without it though. I'd just note that this is pretty much exactly how motion blur records to film, if we consider that the holy grail. Everything else I agree with... camera shake is jitter, and trying to discern some kind of motion cadence from it is probably optimistic at best. @BTM_Pix Thanks for this link!
I had a feeling somebody somewhere must have invented a variable ND soft shutter. And I had a strange feeling you'd be the one to know about it! Very interesting. 😊

-

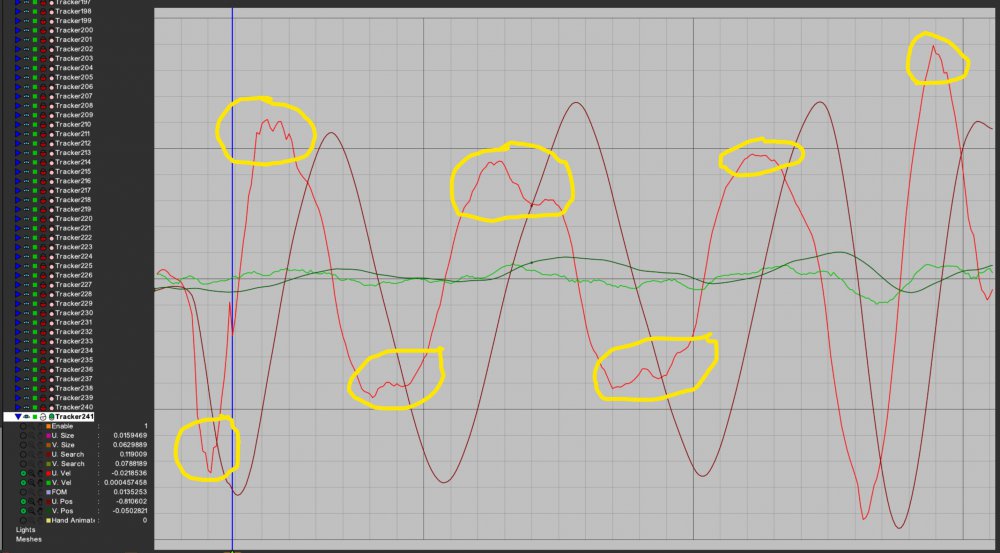

Thinking about it some more, I could see where these marked velocity changes in the middle of the pan could possibly be seen as motion cadence problems, and they should correspond to what Kye was graphing in his plots-- that is, the change in X on each frame. But to my eye they are fairly inconsistent in smoothness on each pan. Some pans were really smooth and some have a bit of random jumpiness, which leads me to believe they are probably random noise introduced by the IBIS, or subtle irregularities in Kye's panning.
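A rough sketch of the "change in X on each frame" measurement, for anyone who wants to try it on their own tracking data. It assumes you have exported one tracked X position per frame as a plain list; the function names and the 2 pixel tolerance are made up for illustration and are not taken from SynthEyes or from Kye's plots.

```python
import statistics


def per_frame_velocity(x_positions):
    """Change in X between consecutive frames, in pixels per frame."""
    return [b - a for a, b in zip(x_positions, x_positions[1:])]


def flag_bumps(velocities, tolerance=2.0):
    """Indices where velocity strays from the median by more than `tolerance`.

    The median stands in for the 'intended' pan speed, which is only a
    reasonable assumption for a pan that is meant to be constant.
    """
    median = statistics.median(velocities)
    return [i for i, v in enumerate(velocities) if abs(v - median) > tolerance]


if __name__ == "__main__":
    # Hypothetical tracked positions: steady 10 px/frame with one hiccup.
    xs = [0, 10, 20, 30, 45, 50, 60]
    vel = per_frame_velocity(xs)
    print("velocities:", vel)
    print("bump frames:", flag_bumps(vel))
```

A hand-held pan will never be perfectly constant, so this only separates isolated jumps from the overall trend. It cannot say whether a jump came from the camera, the IBIS, or the operator.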

-

Okay, so I looked at the video you posted and ran it through a 3D tracking/camera solving app I own called "SynthEyes". Here is the test object I tracked: And this is a graph of its 2D motion. From what I'm seeing, the horizontal motion seems pretty smooth. The bright red is velocity changes in X and the dark red (brown) is the position changes. Even the velocity changes seem pretty smooth. You mention having the IBIS turned on. I think I'd definitely turn that off because who knows what it is adding to the test. I noticed specifically on the frame where the blue vertical line is (where I had the play head) there is a big bump in velocity. That was probably the floating sensor adjusting to the fact that you were changing the direction of your pan from left to right around that time, and it stuttered a bit-- confused about what to lock to. I also resampled the footage down into a 1080p MXF video for playback on my 1080p 120hz monitor and to be honest it looked really smooth. One thing I noticed when trying to play back the original file was a lot of stuttering because my PC couldn't decode the 10 bit UHD in real time. That would definitely lead to cadence issues if you are having a similar issue. It's really important that you're not dropping frames during playback. Before we had realtime playback of video, animators used to use flipbook software to load all their frames into RAM to ensure they were not dropping frames. You might want to try that to ensure you are not dropping frames during playback if you are unsure. There is an open source one I use occasionally called DJV that is pretty good. It won't play back 30 minutes of UHD video because you have to load everything into RAM, but it might work in a pinch for short clips. Link: https://darbyjohnston.github.io/DJV/ Anyway, I'm still interested in more tests, but from my end, I think the motion on the GH5 looks pretty good and smooth.
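To put a number on why a RAM flipbook only works for short clips, here is a back-of-the-envelope sketch. It assumes frames are held uncompressed as 8 bit RGB (3 bytes per pixel). That is an assumption for illustration only; how DJV actually stores frames in memory is not something the post states.

```python
def flipbook_ram_bytes(width, height, fps, seconds,
                       channels=3, bytes_per_channel=1):
    """Bytes needed to hold every frame of a clip uncompressed in RAM."""
    frames = round(fps * seconds)
    return width * height * channels * bytes_per_channel * frames


if __name__ == "__main__":
    uhd = (3840, 2160)
    for label, seconds in [("10 second clip", 10), ("30 minute clip", 30 * 60)]:
        size = flipbook_ram_bytes(*uhd, fps=24, seconds=seconds)
        print(f"{label}: {size / 1e9:.1f} GB")
```

Under those assumptions a ten second UHD clip at 24 fps already needs several gigabytes, and thirty minutes lands around a terabyte, which is why it only works "in a pinch for short clips".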

-

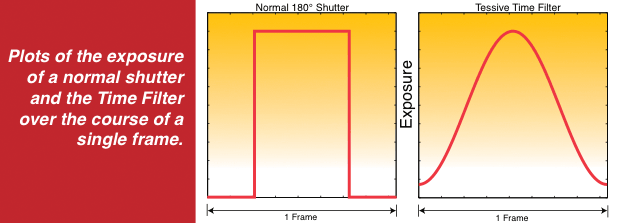

I love this! Honestly, I thought you'd need a more controlled test, but you do seem to be picking up some random motion with these uncontrolled panning tests. It would be nice if you had some kind of accurately repeatable motion that could be run consistently for each test. Maybe even a metronome, or a spinning fan blade if you zoomed in enough to get a nice measurable motion sweep in each frame. I also think that a high speed shutter is great for tracking the inconsistent motion, but it may be helpful to repeat the test with a 180 degree shutter. I think one of the key components of proper "motion cadence" is good motion blur. We should see motion blur that streaks through half the distance traveled in each frame if it is being recorded correctly. To this point, I could see a high contrast scene, where a bright area is moving into the shoulder of the exposure and the motion blur becomes less apparent, as an example of something people might perceive as bad motion cadence. Just one of many possible examples of what would constitute bad motion blur. I think you are referring to this pic from the BTM_Pix link: I tried to look up what this system he is referring to is, but the link didn't seem to be working. But I believe he is referring to some kind of post processing motion blur system, as you could then synthesize whatever kind of exposure curve you wanted digitally from a high sample of frames. To the best of my knowledge there is no camera shutter that ramps up its photo sites' sensitivity over time. What I mean is that while film or digital exposure is a logarithmic process of recording light that ramps up, you still have a rolling shutter, global shutter, or spinning mechanical shutter where every discrete point on an exposed image is either being fully exposed or off.
It could be argued that there is a microsecond when a mechanical shutter is only partially obscuring a pixel, but I think the effect would be so minimal it would disappear into noise. I guess you could also reproduce that Tessive time filter with some kind of graduated ND mechanical shutter, but I've never heard of that. So while exposure is logarithmic and mapped to an S-Curve, every discrete pixel is either fully on and recording or off, and I've never heard of any kind of "ramping shutter". If there is one out there, I'd love to read about it! So trying not to get too distracted, my point is that photo sensors in cameras are either on or off, like in the first square wave picture. What gives motion blur its gradual ramping from on to off is how much time the object being recorded spends lighting that pixel during the time the photo site is "on", no matter the shutter being used. I hope I'm making sense. I really love your tests. One small request though-- can you do your tests in 24 or 23.976 fps? 25 fps is a weird format. Yes, I know you PAL guys love it because it is *close* to cinema, but it really is a TV standard and not a cinema standard. Also, I don't have any monitors that will do 50, 75, or 100 hz playback. But if that's all you have, I'll make do with PAL. 😜
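The square-wave-shutter point can be sketched in a few lines. This is a simplified 1D model, not a simulation of any real sensor: a bright bar moves at constant speed, the photo site is either fully on or fully off, and a pixel's exposure is just the share of the open time that the bar spends over it. All names and numbers are made up for illustration.

```python
def blur_length(speed_px_per_frame, shutter_angle=180.0):
    """Distance the object travels while the shutter is open, in pixels."""
    return speed_px_per_frame * shutter_angle / 360.0


def pixel_exposure(pixel, lead_start, width, speed, shutter_angle=180.0):
    """Fraction of the open-shutter time that a bright bar covers `pixel`.

    lead_start: position of the bar's leading edge when the shutter opens.
    width:      bar width in pixels (the bar extends behind the leading edge).
    speed:      pixels per frame, assumed positive (moving toward +x).
    Time is measured in frame intervals, so 180 degrees is 0.5 frames open.
    """
    open_time = shutter_angle / 360.0
    t_enter = (pixel - lead_start) / speed          # leading edge reaches pixel
    t_exit = (pixel - lead_start + width) / speed   # trailing edge leaves pixel
    lit = max(0.0, min(t_exit, open_time) - max(t_enter, 0.0))
    return lit / open_time


if __name__ == "__main__":
    # 20 px wide bar moving 20 px/frame with a 180 degree shutter.
    print("blur length:", blur_length(20), "px")
    for px in range(-20, 11, 5):
        print(px, round(pixel_exposure(px, 0, 20, 20), 2))
```

Even though the shutter in this model is a hard on/off square wave, the printed exposures ramp linearly at the leading and trailing edges over 10 px, half the distance traveled per frame.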

-

This was an interesting read. For Kye, watching on a good display is definitely going to affect your perception of whatever you are viewing. If you are testing 24 fps material, I think reviewing your work on a monitor set to 72hz or 120hz would be ideal. You may be able to go into your graphics card or display settings and make an adjustment there. As my 2 cents, I'd just suggest trying to get really granular with measuring individual issues that could affect the overall motion cadence. In the thread above they go into a lot of issues regarding motion blur and rolling shutter, but I think the good thing is that a lot of that could be checked by doing measurements on individual frames to see if dynamic range, exposure, codecs, etc. are having an effect on the visible motion blur.
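A quick sketch of why 72hz and 120hz are the comfortable choices for 24 fps material: they are whole multiples of 24, so every frame is held for the same number of refreshes. The model below assumes integer rates and that each refresh simply shows whichever source frame is current at that instant. Real players and displays may behave differently, and the function name is made up.

```python
from collections import Counter


def repeat_pattern(refresh_hz, fps, frames=4):
    """How many refreshes each of the first `frames` source frames is held for."""
    # Refresh k happens at time k / refresh_hz and shows frame floor(k * fps / refresh_hz).
    shown = Counter((k * fps) // refresh_hz for k in range(refresh_hz))
    return [shown[n] for n in range(frames)]


if __name__ == "__main__":
    for hz in (60, 72, 120):
        print(f"{hz} hz:", repeat_pattern(hz, 24))
```

At 60hz the hold times alternate between 3 and 2 refreshes (the familiar pulldown judder), while 72hz and 120hz hold every frame for 3 and 5 refreshes respectively.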

-

I'm really interested in seeing the results of your test, and I've thought about this myself. I think shooting something high speed that is repeatable would be interesting, to see if there is any unusual timing-- like recording frames at 60 hz, but then dropping frames and just writing 24. Maybe shooting an oscilloscope running a 240 or 120 hz sine wave or something, so you could measure if the image is consistent from frame to frame. Another option for checking if frames are recorded at consistent intervals would be to use a 120hz or higher monitor and record the burned in time code from a 120 fps video. This might be enough to see if frames are being recorded at a consistent 24 fps and not doing something strange with timing. You'll probably have to play around with shutter angles to record just one frame.... or you could add some animated object (like a spinning clock hand) so you could measure that you are recording 2.5 frames of the 120 fps animation, as would be correct for a 180 degree shutter recorded at 24fps. A 240hz monitor and 240fps animated test video would be even better, as you could check for 5 simultaneous frames. Anyway, just throwing out some ideas. The only other things I can think of that could be characterized as bad motion cadence might be bad motion blur artifacts, bad compression artifacts, and rolling shutter. These might show up with a "picket fence" type test by panning or dollying past some kind of high contrast white and black strips. At the very least it would be interesting to see if the motion blur looked consistent between cameras with identical setups.
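The 2.5 and 5 frame figures fall straight out of the exposure time. A minimal sketch, assuming an ideal shutter and a monitor that shows each test-video frame for exactly one refresh (the function name is made up):

```python
def source_frames_per_exposure(source_fps, camera_fps=24.0, shutter_angle=180.0):
    """How many frames of the on-screen test video fit into one camera exposure.

    Exposure time is (shutter_angle / 360) / camera_fps seconds, and the
    screen shows source_fps frames per second during that window.
    """
    return source_fps * shutter_angle / (360.0 * camera_fps)


if __name__ == "__main__":
    for src in (120, 240):
        n = source_frames_per_exposure(src)
        print(f"{src} fps test video: {n} frames per 24 fps / 180 degree exposure")
```

If a camera recorded a consistently different count from frame to frame, that would point to odd timing or a shutter angle that is not what the menu says.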

-

Of course any information regarding Panasonic's thoughts regarding the future of Micro Four Thirds would be welcome. Maybe specifically, with Panasonic possibly becoming the sole standard bearer for the MFT format, have they considered extending the standard to cover an S35 image area? Like an MFT+ spec. Or possibly, have they considered releasing a GH series camera with a multi aspect sensor that scales from the standard MFT area up to S35? I believe JVC did something like this. For still photography a larger s35 sensor could also allow for a larger 1:1 aspect ratio image area that would still fit inside the normal MFT image circle as a side benefit for Instagram shooters and other photographers who like that format.
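A rough check of the 1:1 idea. It assumes the commonly quoted nominal Four Thirds sensor size of 17.3 x 13.0 mm and treats the sensor diagonal as the image circle diameter. Both are assumptions for illustration; real lens coverage varies, and nothing here comes from Panasonic.

```python
import math

# Nominal Four Thirds sensor dimensions in mm (assumed, commonly quoted values).
MFT_WIDTH_MM = 17.3
MFT_HEIGHT_MM = 13.0


def image_circle_diameter(width_mm, height_mm):
    """Smallest circle that covers a rectangle: its diagonal."""
    return math.hypot(width_mm, height_mm)


def largest_square_side(circle_diameter_mm):
    """Side of the largest square that fits inside a circle."""
    return circle_diameter_mm / math.sqrt(2)


if __name__ == "__main__":
    d = image_circle_diameter(MFT_WIDTH_MM, MFT_HEIGHT_MM)
    side = largest_square_side(d)
    print(f"image circle: {d:.2f} mm")
    print(f"largest 1:1 area inside it: {side:.2f} x {side:.2f} mm")
    print(f"1:1 crop of the standard sensor: {MFT_HEIGHT_MM} x {MFT_HEIGHT_MM} mm")
```

Under those assumptions a taller sensor could offer a square of roughly 15.3 mm per side, compared with the 13 mm square you get by cropping the standard frame.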

-

Whenever I read a long thread regarding the merits or shortcomings of the MFT format I'm reminded of a conversation I had with a DP quite a few years ago about Super 35 vs Academy ratio framing. For historical context every VFX heavy movie that was shot on film was typically shot Super 35 with spherical lenses even if its final projected format would be widescreen 2.39. Of the twenty or so films I've worked on that originated from scanned film, only one was shot with Anamorphic lenses even though the vast majority were slated for widescreen delivery. Anyway, the big concern back then was minimizing film grain and had less to do with the depth of field and blurry backgrounds, so you'd typically want to use as much of the negative as possible to reduce the film grain before scanning it. However, in talking with a DP one day, he told me that when a feature wasn't slated for a lot of VFX, they would often just frame the project in Academy Ratio with room on the negative for where the sound strip would go. In this way you would go Negative -> Interpositive -> Internegative -> Print without mixing in an optical pass to reduce the super 35 image into the Academy framing for the projector. The reason for this according to the DP was that the optical pass would introduce additional grain, so shooting S35 was kind of a wash in that you were trading the larger film area of S35 for framing directly for the Academy area on a projector and skipping the optical reduction that introduced more grain than just the steps in making a print. Of course now with cheap film scanning, Digital Intermediates, and of course digital cameras, everyone just shoots using the S35 area. 
My understanding from the conversation is that if you were shooting something like "Star Wars" that would have a bunch of opticals anyway, you'd shoot S35 even before the days of digital film scanning, but if you were shooting some standard film it was common for the DP to just frame inside the Academy ratio area of the film strip and skip the optical reduction. Is there anyone with more experience than myself with shooting old films who can confirm this? It really has me curious. The reason is that the Academy Ratio on a projector is defined at 21mm across, while a multi aspect MFT sensor on something like the GH5s is 19.25mm across if you shoot DCI. When you consider projector slop, or the overscan on an old TV for the action safe area, the difference in the exposed film or sensor area seen by an audience seems negligible-- and thus so does the perceived DOF, or lack thereof, between the two formats. Anyway, just wanted to share this, since I have never seen it discussed anywhere. And maybe it's just me, but I'm really curious as to whether the vast bulk of films shot from the 1930s and into the 90s were actually using the Academy Ratio area of the negative for framing. Also, hope I'm not derailing the conversation regarding Olympus too much, but as we're discussing the MFT format of Olympus cameras as a possible reason for its lack of sales, this seemed apropos. https://en.wikipedia.org/wiki/Academy_ratio https://en.wikipedia.org/wiki/Super_35
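To put the two widths side by side, here is a small sketch using only the figures quoted in the post (21mm for the Academy projection area, 19.25mm for the GH5s in DCI). It compares horizontal width only and ignores aspect ratio differences; the helper names are made up.

```python
ACADEMY_WIDTH_MM = 21.0    # figure quoted in the post
GH5S_DCI_WIDTH_MM = 19.25  # figure quoted in the post


def width_ratio(wide_mm, narrow_mm):
    """Horizontal crop factor of the narrower format relative to the wider one."""
    return wide_mm / narrow_mm


def matching_focal_length(focal_mm, ratio):
    """Focal length on the wider format giving the same horizontal field of view."""
    return focal_mm * ratio


if __name__ == "__main__":
    ratio = width_ratio(ACADEMY_WIDTH_MM, GH5S_DCI_WIDTH_MM)
    narrower_pct = (1 - GH5S_DCI_WIDTH_MM / ACADEMY_WIDTH_MM) * 100
    print(f"crop factor vs Academy: {ratio:.2f}x")
    print(f"GH5s DCI is {narrower_pct:.1f}% narrower")
    print(f"25mm on the GH5s frames like {matching_focal_length(25, ratio):.1f}mm on Academy")
```

A crop factor of about 1.09x is small enough that projector slop or TV overscan could plausibly swallow it, which is the point being made.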

-

They don't show how to set up Raw, but she does mention ProRes and ProRes Raw at the 45 second mark. She says something to the effect that it "preserves the camera's raw sensor data". So, it sounds to me like it's still on the table. Interesting times. Queued up here:

-

Panasonic S1 V-LOG -- New image quality king of the hill

Towd replied to Andrew Reid's topic in Cameras

Sony, Nikon, and Canon don't shoot 10 bit internally until you step up to their pro cinema line. The rest is irrelevant.

-

Panasonic S1 V-LOG -- New image quality king of the hill

Towd replied to Andrew Reid's topic in Cameras

Every camera has trade offs. Why is it so hard to believe that some people value the autofocus ability of a camera at near the bottom of the list of their priorities if they are comfortable with manual focusing? Maybe some day we'll have an A.I. living in our cameras that takes natural language instructions from a director and always pulls focus correctly and smoothly and can follow the play of a scene in real time better than any human focus puller. Until that day comes, for many of us the camera's acquisition format and image quality will matter much more. In addition, for many of us it is just fun and satisfying to pull focus manually and capture a scene the way we intend without relying on whatever the camera decides to do. I have a feeling even if some magical A.I. does come along one day, many people will still enjoy using manual focus for the spontaneous creative control it provides.

-

I'm with you. I find the m43 format to be just outstanding for video work and really enjoy the smaller form factor, so I'd love to see some of the imaging mojo of the S1 compressed into a new m43 product. I just realized the 10-25mm zoom is possibly releasing this month, which may be too soon for a GH6 announcement. Maybe we'll hear more this fall. Here's a link to a video where the director of Panasonic's imaging department discusses the possibility of new m43 models. (It's at the 2 minute mark.) He doesn't come out and confirm anything, but he reaffirms Panasonic's commitment to m43 and states that they are looking into products based on the format. So, I can only assume a GH6 is in the mix... Not to derail an S1 thread though, he does have some interesting thoughts regarding DFD vs phase detect focusing in all Panasonic cameras toward the end. From what he says, he seems open to considering other focusing solutions if that will give Panasonic better performance in the future, but he believes contrast AF is the most accurate and that is why it is the system they currently use.

-

From my own results, I ended up picking the only three samples that were shot in 4k. Based on my test observations, I discarded samples that had line skipping or aliasing artifacts that were apparent either on the floating platform or in the fine hairs on the end of Gunpowder's nose. There were also a few samples that seemed excessively soft. All in all, my take is that these camera makers use a fairly rough form of downsampling to achieve HD. At least on the cameras used in this test. From what I've seen of the S1 in other tests, it shoots amazing HD. A few of the tests had some pretty muddy looking colors. I wonder if that was due to the variable NDs. Not really sure. The colors presented were a secondary concern in my judgement because I personally feel that as long as there is decent color separation, a final look can be achieved with some quick tweaks in post. I really appreciate the test. And I know they take a lot of time. My own takeaway though is that the hardware, and what format you shoot a camera in to squeeze the best quality out of it, does have a real impact on the final results.

-

I'm not sure the GH5 has the hardware to pull all the tricks the S1 can for autofocus, but it would be nice to see at least some of the S1 features trickle down into a GH5 update. Personally, I'm less interested in purchasing a full frame camera, but I think what we're seeing in the S1 may be a good indication of what to expect in a GH6. Would love to see some kind of Varicam color engine with 10 bit color and full V-Log in all of its shooting modes. Hopefully we'll hear something about it when Panasonic releases the 10-25mm lens into the wild. I know I heard somewhere that Panasonic has begun work on it.

-

Panasonic bringing the heat with DFD autofocus. Also, why does Pany's 1080p look more detailed than downscaled 4k from the A7III in the second half of the video? Anyone think that's the lenses or sharpness settings? Or is there just some crazy good imaging mojo in the S1?

-

I think I liked E, I and K the best. But the colors out of E were probably my favorite of those three. Some of the others had nice colors as well, but were soft or had aliasing in them. Maybe I missed another good one, it was a lot of cameras. Fun test!

-

Wow! That is really an impressive diversity of styles. And I bet they never spent any time arguing about 2k vs. 4k or what sensor size they were shooting on.

-

Oh, it's mostly just a for-fun lens and way beyond practical. I currently own a Nikon 75-300mm F/4.5-5.6. It's a total shit lens-- on or off my speedbooster. Tons of chromatic aberration and very soft. I've had it for like 15 years, and it has never impressed. My other telephoto, which is much nicer, is an Olympus 40-150mm F/4-5.6 that I picked up as my first native m43 lens when I bought my GH5-- on sale for $100 US. This is... no joke, a really decent lens for a plastic-fantastic bargain bin lens. Puts the Nikon to shame, and it fits in a front pocket. It's currently my only autofocus m43 lens, and does a half decent job for its size. So yeah, I'm interested in a nice long tele for my growing micro four thirds passion.

-

I've been toying with an investment in that lens, the 100-400mm, or the fixed 200mm, so I'd love to hear more about your experience with it long term. The 50-200mm definitely seems the most practical while the fixed 200mm is probably the least. I've generally come to the conclusion that I'll be shooting Panasonic (GH series) and not Olympus long term, and so will probably favor Panasonic glass. And Panasonic seems to include lens stabilization more often than Olympus. Yeah, I was being overly harsh there to reviewers. I think that trolls however love to find holes in a reviewer's conclusions, so the reviewers play it cautious. I do enjoy the whistle clean image aesthetic that seems to be the growing trend in video. With Neat Video or other degrainers however, it's very achievable to get a clean image even up to 3200 on the GH5-- but it will soften the image some, and of course you'll be losing some dynamic range. That said, I love me some grainy 16mm scanned film. Probably one of my favorite looks.

-

Yeah, people completely blow out of proportion the noise on the GH5. With some minimal work in post it cleans up really nicely-- especially if you are delivering in 2k it's just a non-issue. I think a lot of reviewers just discount it to avoid trolls who might discredit their standards. None of these people I think have ever worked with film or the early generation digital cinema cameras that were noisy as hell.

-

That's the digital stabilizer that shifts the image on the sensor. Kind of like an in camera version of post stabilization. I prefer to just use the mechanical sensor stabilization and lens stabilization if a lens has it, and add digital stabilization in post. If you need it and don't want to bother with the post work, it adds a little more smoothing to your video. One difference between the mechanical and e-stabilization though is that the regular sensor stabilization will help correct for the motion blur in micro jitters, but e-stabilization will not.

-

Nikon Z6 features 4K N-LOG, 10bit HDMI output and 120fps 1080p

Towd replied to Andrew Reid's topic in Cameras

Yeah, I remember reading somewhere that the cost for a full frame chip is only $100-$150 more than an APSC. Why would Nikon want to split their time catering to that market when they need to stay busy filling out an offering of full frame lenses? If you want to shoot super 35 on a Nikon, just use the crop mode. I think the APSC format was a compromise due to the limitations of the technology of the time. Nikon and Canon are probably happy to leave it behind on their new generation of mounts. I won't be surprised to see full frame Nikons and Canons in the $500-$600 range in a few years. Long term, I kind of see the market shaking out into M43 for those who want small travel cameras with a few more features and options than smartphones, full frames with crop modes for the mid range, and medium format for crazies who want to go for a niche look. Hell, sometime in the next 10 years I wouldn't be surprised to see an IMAX digital sensor for big budget and special venue projects.