Everything posted by BTM_Pix

-

Long shot, but are both of the USB cables you've tried definitely data cables rather than charge-only ones?

-

If the adapter's pins are exposed to the camera mount, there will still be negotiation between the camera and the adapter, and that could be what is causing it. I have two Fotodiox EF to M42 adapters that I've just tried on my S5ii with the MC21. The EF to M42 adapter that has contacts on it does exactly as you describe with the really slow startup, and the one without contacts starts instantly and goes straight to the IBIS selection screen. Fotodiox has some dire warnings about using their L mount adapters with Leica bodies (the MC21 has this problem too with my SL and T and has to have the pins taped over), so I'm not sure they are fully compliant with the L mount protocol, which may be complicated further with later cameras and any changes they have made.

-

If you are stacking it with the Fotodiox, try putting some electrical tape over the contacts on the Fotodiox. In my experience, the delay you are seeing is the camera trying to handshake (multiple times) with the adapter to get lens information so it can automatically set the IBIS focal length. Taping over the contacts will stop those attempts and you should go straight to the set focal length process. If that works, stacking a dumb EF to L mount adapter onto the CY to EF adapter will provide a permanent solution, minus the variable ND that the Fotodiox was providing. If you don't need to use an electronically controlled EF lens on the same day (or even at all), then the tape solution will suffice.

-

I dunno. I honestly think that the same techniques and skill they were applying to work within the parameters of the medium then would have been adapted and transferred to work within the parameters of the new medium now. Of course, I'm not saying it doesn't look good. Far from it, actually. But, in my opinion, it's because they are proper productions and, again, it's the skills of everyone and everything from the back of the lens forwards that are making the biggest contribution to that. In those terms, and it's only my opinion of course, the difference made by what is behind the lens capturing the image is almost negligible compared to the difference made by what is coming into it. I've shot absolutely horrible looking stuff on both film and digital so I should know 😉

-

If you google the net worth of the family, then they could make a decent fist of buying a fair chunk of Arri in its entirety, let alone one of their cameras 😉

-

There were over 500 people involved in the creation of Born On The Fourth Of July. It was nominated for 8 Academy Awards. The writer, director, editor, cinematographer, composer and sound department have all won at least one Academy Award. The actual camera and film stock only captured the end result of the direction, production design, lighting, composition and performance of what was in front of it. As such, it was the least influential aspect of what you were watching. Resolution matters, "colour science" matters, but being realistic matters most of all. Waft the same camera loaded with the same film stock around without any of what they had in front of it and it will look as shit as if you did it with whatever the next great thing in cameras is. We've all got the means now to capture a great image but still lack everything that goes into creating that image in the first place. In this case, what we would be lacking would be the other 499 people.

-

On mine, I can get it to take an inordinate amount of time to release when the AF is in Tracking mode, and it will still occasionally do it when I switch it to Area, but it's not consistent and sounds like a different issue to the one you are having anyway. By the by, I sometimes don't think the AF ON is performing properly, but I need to look into that further. You could try using the Save/Restore Camera Setting function to save a copy of each camera's settings onto its respective SD card, then swap the cards and load the settings on each to see if the behaviour transfers between cameras. If Unit 02 doesn't start misbehaving in the same way as Unit 01 when it is using Unit 01's settings, then that would imply a hardware fault. Similarly, after saving the settings, you could do a factory reset on Unit 01, check its behaviour with defaults, then reload your settings from the card to see if it starts doing it again, which would imply something within the custom settings you have made.

-

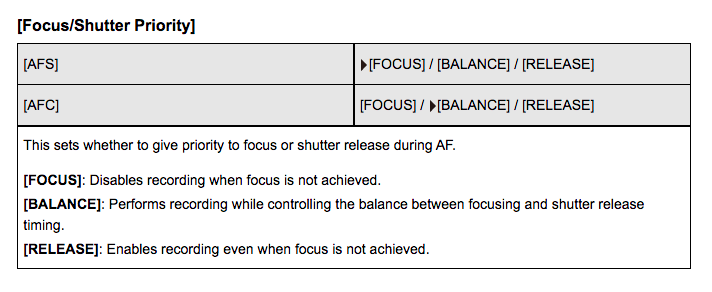

I'm guessing you've ruled this out, so forgive the sucking eggs advice, but it could be the Focus/Shutter Priority settings you have in combination with the AF type? Set to Focus, it can give the impression of too long a press while focus is not locked, and set to Release, it can give the impression of a hair trigger. I've found that if the AF is in Tracking mode then you can be holding the shutter fully down for a loooong time before the shutter releases. The quickest way to test both would be to put them both in Release priority.

-

It has a great feature set for the purpose but, for me, more so as a holiday camera. The magnetic mounting pendant is very good for staying in the moment of what you are doing on holiday whilst still filming. The pre-record function is particularly good for using it in that way as you won't actually have to worry about missing anything. Let's face it, trying to do holiday videos with a "real" camera is a pain in the arse for EVERYONE concerned and is actually a contradiction in terms, as it's no holiday when you are titting about with lenses and ND filters and offloading footage, let alone carrying it all and making sure it's safe. It always ends up with the bulk of the footage coming from the first day and tailing off to nothing after a few days as it's too much hassle to keep up. The AI editing is good too, not just for taking the tedium out of doing the editing but likely for taking the tedium out of the actual end result for whoever has to watch your holiday videos. No full-size HDMI port means it will be a hard pass for a lot of people on here, of course.

-

If I was Blackmagic, I'd skip the L mount and be beating a path to Nikon's door to use the Z mount. Natively, you can get fast Z primes up to 800mm whereas L mount primes top out at 135mm, so there is a much broader range there, but it's the adaptability where it really scores. There are adapters for EF with autofocus and E mount with autofocus; even any manual focus lens in M mount or a deeper mount can have autofocus too, and there is even PL mount with an integral variable ND filter. Last but by no means least, there is F mount with full autofocus, something which no other mount can offer. Between these adapters, a customer for this speculated BM camera could use pretty much any lens they've acquired over the years with full autofocus. Minus X mount and of course L mount itself but, weirdly, with the MFT to E mount you can even use those lenses too. Z mount is hands down the most versatile mount around*, with the added bonus of BM not having to deal with Leica who, let's not forget, wouldn't do firmware to make their cameras compatible with an adapter from one of the actual members of the alliance. My MC-21 still isn't, and won't ever be, compatible with my Leica T or SL. Nikon are unlikely to get into the true cinema camera market, so there is less perceived challenge versus Panasonic, who are very likely to want to do so with an L mount cinema offering in the near future. *This amazing and comprehensive compatibility didn't, of course, stop me somewhat hypocritically not buying into Z mount and instead spending all the money I'd intended for it on increasing my L mount lens collection 🙂

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

BTM_Pix replied to newfoundmass's topic in Cameras

You can assign it to toggle on/off in "Fn Button Set". The down position on the dial, as he is using it, is Fn10: https://eww.pavc.panasonic.co.jp/dscoi/DC-S5M2/html/DC-S5M2_DVQP2839_eng/0112.html

-

RED made a product for the EPIC called the Motion Mount which does ND and global shutter emulation. @Andrew Reid has one and it is fantastic.

-

Help me on an eBay hunt for 4K under $200 - Is it possible?

BTM_Pix replied to Andrew - EOSHD's topic in Cameras

One major thing that the LX10/15 has over the RX100 series is the f1.4 maximum aperture at the wide end. The RX100 series have the sLog profiles, but this is mitigated on the LX10/15 if you install my Cinelike D hack. I actually prefer just having Cinelike D, as it's easier to deal with in a casual camera than sLog, which has to be exposed at ISO1600. You can add ND to both of course using stick-on filter adapters, but having to reduce from ISO100 instead of ISO1600 is a lot less problematic in terms of not getting X pattern artefacts etc. Everyone should just get an FZ2000/2500 though, as it really is a powerhouse and, if we were brutally honest with ourselves, probably as much of a camera as many of us actually need 😉 It's a bit chunky though, so a combination of that and the LX10/15 would be great for travel, where the LX could be used when discretion is required, at minimal additional weight and with the bonus of the flexibility of two matched cameras (using Cinelike D) instead of one.

-

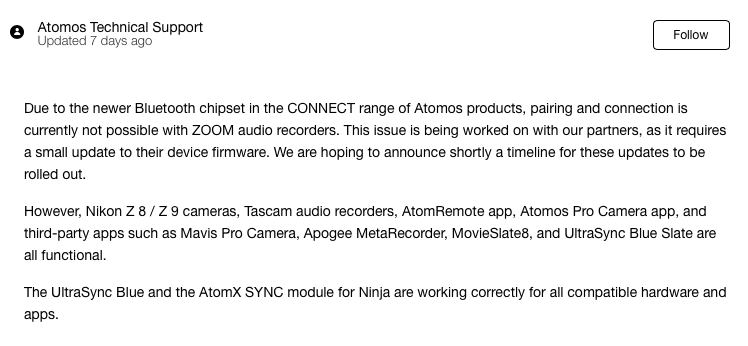

Yeah, adding an UltraSync Blue will resolve my woes if Zoom don't update their firmware but I wouldn't rule out Atomos breaking compatibility with that either. Curious how it works with the Tascam X6/X8 though as I'd put good money on their overpriced BT adapter being one and the same product as Zoom's overpriced BT adapter. Anyhow, I'm off to test what else doesn't work on the Connect now 🙂

-

It is the original AtomX Sync module that has been discontinued rather than the Connect. Ironically, of course, the AtomX Sync works perfectly fine with the Zoom products 🙂 The Connect does have a lot more utility over and above the timecode stuff though, so it's not a dead loss, and I'm hopeful that Zoom will do the required firmware update. But absent any definite commitment from them, I can likely see an UltraSync Blue having to enter the equation.

-

Now it is a surprise - DPReview is closing

BTM_Pix replied to Marcio Kabke Pinheiro's topic in Cameras

It's more Raw Patrol than Paw Patrol here.

-

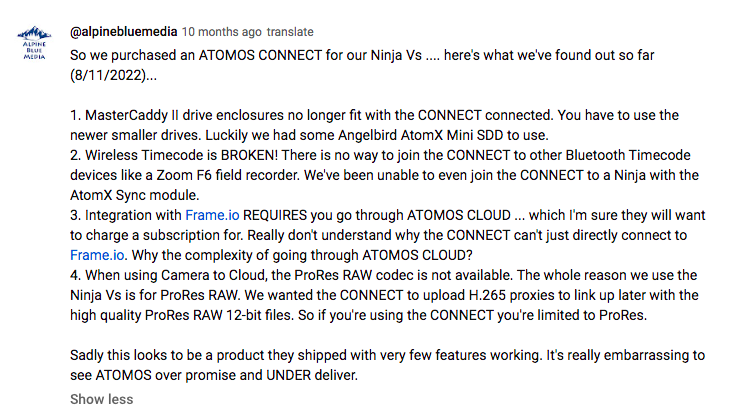

Right, so I've unpacked it, connected it and had a go at syncing a Zoom F2-BT and a Zoom H3-VR to it. Absolutely nothing happening with either of them. I updated the firmware on my Ninja V, which in turn updated the firmware on the Connect. I updated the firmware on both Zoom devices, then verified that BLE works correctly on both of them with their respective Zoom Bluetooth control apps. Still nothing. Then I came across this comment on Atomos's YouTube channel under a promo for the Connect. That was from 10 months ago, so you'd imagine that if there was an issue Atomos would have addressed it by now, particularly as the new firmware update that I put on the Ninja V for it was from only a week ago... I then found this absolute bombshell in the Atomos FAQ section, also, you will note, from a week ago. Unbelievable. They had been pimping the fuck out of the Connect with AtomX Switcher module bundle for the whole of May and casually forgot to point out until last week (the deal ended at the end of May) that it doesn't work with any Zoom recorders, despite it being known from that YouTube comment for ten months. That will only be the recorders that the vast majority of people (myself included) have bought this thing to work with, then. This incompatibility thing is a bit fishy as well, considering that the Zoom products do work with Atomos' own UltraSync Blue and AtomX Sync modules, which of course themselves also work with the Connect. What the fuck is up with that company? As I said in a previous post, there is always something with them, isn't there? In this case, it looks like you have to buy an UltraSync Blue to act as a bridge if you want to use Zoom products, or wait for some vague possible maybe firmware update from Zoom.

-

Because of ProRes RAW support, Atomos have development relationships with all of the popular camera brands (minus BM of course 🙂 ) and most of these new generation cameras have BLE so you'd think it would be something they would be pushing, particularly as there is no conflict with the manufacturers having their own solution. You could argue maybe that they aren't interested in facilitating timecode solutions to 3rd party recorders as a way of enabling better sound for their cameras when most of them are pushing their own overpriced audio add on kits (or the Tascam compatible versions in the case of Canon and Fuji). When I get round to setting up the Connect, I will, of course, be taking a great interest in analysing the connection and communication of the BLE link to the Zoom products to see if there is anything "interesting" that I might be able to use....

-

Sigma 16-28mm f2.8 L mount

I haven't really had much time to do anything with it, but these are example grabs from 4K footage with the new-to-me Panasonic S5ii from the first couple of days after I picked it up. Initial impressions are that it is fast focusing and sharp and, although it is absolutely not distortion free (particularly at the wide end), it should definitely be considered by anyone with an E/L mount camera who needs a fast, constant aperture, very wide zoom.

-

My Connect and AtomX Cast bundle has shown up, so I'm going to have a play with it over the next couple of days with a couple of Zoom products. Only supporting two BLE devices (via a new firmware update, no less!) is a bit of a nonsense though. If they are going to move forward with it, then they could definitely do with a new version of either the Blue or the One that has both BLE and LTC, and I'm also hoping Atomos can persuade the likes of Panasonic to add support for BLE timecode into their cameras as Nikon have done.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

BTM_Pix replied to newfoundmass's topic in Cameras

So after what can only be described as a Devon Loch moment (ask your Dad) in the absolutely nailed-on, not-going-to-change-my-mind purchase of a Nikon Z8, I now find myself joining you as a fellow Panasonic S5ii owner. I had been struggling with the sunk cost fallacy aspect of buying the Z8 as a way of stopping my collection of pro Nikon glass gathering dust in the Peli cases, but eventually bit the bullet and accepted reality. The Z8 would indeed have given them an extended life, but I'm not going to be hauling big f2.8 teles and zooms around with me any more, so I would eventually have had to cave and build up a Z mount collection to go with it. Financially, that would be out of the question anyway, and I couldn't ignore the value proposition of the S5ii route.

So, after a bit of judicious choice of a combination of used and new offers and bundles, I've managed to acquire all of the following for roughly the same price as the Z8 and one of its CFexpress cards:

- Panasonic S5ii
- Panasonic 20-60mm f3.5-5.6
- Panasonic 35mm f1.8
- Panasonic 50mm f1.8
- Panasonic 85mm f1.8
- Sigma MC-21 EF-L adapter
- Sigma 16-28mm f2.8
- Sigma EVF for my Fp

As I have other L mount cameras (Leica SL and Sigma Fp), this collection will benefit all of them. As I say, the value of getting not just a camera but three fast-ish primes, a very interesting kit zoom, a fast ultra wide zoom, the EF adapter and the long awaited completing part of my Fp puzzle couldn't be ignored. And all of them are light and compact compared to what I would've been rocking on the Z8. Essentially, I went in for what I thought at my age might be a forever camera and walked out with my forever lens mount instead.

First impressions are that I don't know enough about it yet to have any. That's not to say I haven't used it, but it's on all default settings, I haven't really read the manual and I'm here on holiday, so I haven't put in (and won't put in) the work to get a better feel for it. I'll also defer judgement until after the imminent firmware update. On a surface level, I've found the IBIS to be fairly impressive, but the AF, whilst a huge leap forward over the other L mount cameras that I've got, can be a bit of a mixed bag in lower light, particularly the face detect. Again, I've got everything on default, so I'm sure it can be tuned to be better. What I will say as well is that the Sigma 16-28mm f2.8 is worth a look for anyone contemplating it.

-

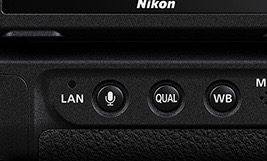

Yes, the voice memo has been a standard feature on pro bodies for many years, operated, as per the Z9 here, by a dedicated button on the back panel. Yes, you could do that, and there will be dedicated photo editors on the other end of your LAN cable who will do that for you even without the voice prompt (which you would mainly use for player identification for the caption anyway). Not everyone has the luxury of an editing team though, and not every match is covered that way either. In those instances when you are on your own, having it in camera is the best solution, and by best I mean fast, because it's all about getting the image onto the photo desk of the newspaper as quickly as possible. A bigger file (as the unedited version would be) has speed implications all along the way: ingest into the laptop, editing and export, and most importantly getting it out of the stadium, when you won't always have the luxury of wired internet and will often have to tether off your phone. Another less obvious advantage is in navigating the image on the back of the camera for player identification and focus checking. If you're using your 400mm as a 600mm because the opposite goal is so far away, then you have one less button press to get it to the right size on playback on the camera. I know that sounds trivial but it isn't (particularly in gloves in the pouring down rain), and every second counts when you might have to quickly analyse a 30 shot burst to find the best one. What a pity the Z8/Z9 only do it in video mode then, at least in the current firmware.

-

My point was about the exposure, field of view, AF consistency and optical degradation. If I am using my 400mm at f2.8/ISO6400 and 1/1250th and either crop in post to get to an 800mm FOV or use the Hi-Res Zoom, then the exposure won't change, the AF won't change and the optical quality won't change. If I attach my TC-2.0x, then my exposure WILL change (by two stops), the AF will degrade and, as good (relatively) as the Nikon TC is, the optical quality does take a hit. So the lenses will always be f2.8 and my exposure remains unchanged (which is hugely important when even category A stadiums for night matches are very tight when balancing high shutter speeds and ISO), and my FOV needs are met in a more dynamic way. A 70-200mm becomes a whole lot more useful when an extra 100mm can be activated without having to commit to having it on all the time. Will they be "real" f2.8 in terms of absolute equivalence ALL the way throughout the range once they've gone beyond their base focal length? Nope. But the compromise in those areas when using a clear image zoom type of function is absolutely minimal compared to losing two stops of light and AF performance when using TCs as and when you need extra reach on tap. HOWEVER….. Having said ALL of that, I've just discovered to my researching shame that, unlike the Sony (and JVC) options, the Hi-Res Zoom feature is ONLY available in video mode, not stills! So disregard everything I've said on the subject from a sports photography point of view as it is moot 🙂
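For anyone wanting to check the two-stop figure, here is a minimal Python sketch of the arithmetic. It assumes the usual rule of thumb that a teleconverter multiplies both the focal length and the f-number by its magnification, and that light lost in stops is 2 × log2 of that factor (since light scales with the square of the f-number). The function name `teleconverter` is purely illustrative; cropping in post, by contrast, leaves the f-number (and so the exposure) untouched.

```python
import math


def teleconverter(focal_mm: float, f_number: float, multiplier: float):
    """Illustrative helper (hypothetical name): effect of a teleconverter.

    Returns (effective focal length, effective f-number, stops of light lost).
    Assumes the TC scales focal length and f-number by the same factor.
    """
    eff_focal = focal_mm * multiplier
    eff_f = f_number * multiplier
    # Exposure depends on the square of the f-number, so the loss in stops
    # is log2(multiplier ** 2) == 2 * log2(multiplier).
    stops_lost = 2 * math.log2(multiplier)
    return eff_focal, eff_f, stops_lost


# 400mm f2.8 with a TC-2.0x: 800mm at f5.6, two stops lost
print(teleconverter(400, 2.8, 2.0))  # (800.0, 5.6, 2.0)
```

The same helper shows why a 1.4x converter is usually described as costing "one stop": `teleconverter(70, 2.8, 1.4)` gives a loss of roughly 0.97 stops.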