Posts: 5,964

Everything posted by BTM_Pix

-

Atomos released new firmware late last year for the Ninja V+ to enable this with the FS5, FS7 and FS700 when you add the AtomX SDI module.

-

This is the same way people get the Sigma MC21 to "work" on Leica, by taping over the pins (for similar reasons in terms of the camera blocking it), but it then becomes a dumb adapter with no control of AF, aperture and IS. So, yes, you could do that with the Meike/Viltrox/Kolari etc. but you lose all of that in the process. That may or may not be an issue depending on what you are mounting on it (e.g. just using EF as an intermediate mount for older manual lenses) or whether you only use manual focus anyway. But the best way I can see to have your cake and eat it, in terms of keeping all of that functionality, insuring yourself against future firmware blocking and not having the dramatic colour casts at higher ND strengths, is to do what @ntblowz does: use the official adapter but swap out the NDs for the Breakthrough versions. It's more expensive in the short term but not as costly as having an adapter that is suddenly rendered useless.

-

Yes, great point, that combination definitely looks like the best and safest way ahead.

-

No, both the Kolari and Meike adapters also provide electronic control for AF etc. A simple handshake ID check between camera and adapter/lens can tell the camera the identity of whatever is connected to it and compare that with an approved list, at which point the camera can adopt a policy of "if you're not on the list, you're not coming in" and prevent any operation until you remove it. Of course, this isn't currently active in any of the firmware, as people are happily using these products, but it's obviously only an update away should Canon choose to do it. Given that the Canon version fares badly in every comparative review I've seen, these 3rd party products must be damaging not only Canon's sales but its reputation too. The colour shift and IR pollution performance of the Canon version in this review is one example.
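To make the "approved list" idea concrete, here is a minimal, purely hypothetical sketch of how such a gate could work. The `AccessoryID` fields, the `APPROVED` set and the `enforce` flag are all invented for illustration; this is not Canon's actual mount protocol or firmware logic.

```python
from dataclasses import dataclass

# HYPOTHETICAL model of an ID-allowlist gate. Field names, codes and the
# enforce flag are invented for illustration, not taken from any real mount.


@dataclass(frozen=True)
class AccessoryID:
    maker_code: int  # hypothetical manufacturer field reported in the handshake
    model_code: int  # hypothetical model field reported in the handshake


# Hypothetical allowlist that a firmware update could ship with.
APPROVED = {
    AccessoryID(0x01, 0x1001),
    AccessoryID(0x01, 0x1002),
}


def accept_accessory(reported: AccessoryID, enforce: bool) -> bool:
    """Return True if the camera should enable AF/aperture/IS for this accessory.

    enforce=False models today's behaviour (everything is accepted);
    enforce=True models a hypothetical future policy of
    "if you're not on the list, you're not coming in".
    """
    if not enforce:
        return True
    return reported in APPROVED


if __name__ == "__main__":
    third_party = AccessoryID(0x7F, 0x0042)  # hypothetical third-party adapter
    print(accept_accessory(third_party, enforce=False))
    print(accept_accessory(third_party, enforce=True))
```

The `enforce` flag is the whole point of the sketch: the same handshake can be harmless today and become a lockout after a single firmware update, without any change to the adapter itself.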

-

It could be because Viltrox are the only ones that have acquiesced and gone public about it, or that they were first on the list and the others will follow. If it is aimed solely at Viltrox then it could be that Canon consider that actual wrongdoing has happened in terms of the acquisition of the protocol and/or lens design. It's a tough call because on the one hand there's the temptation to buy them before they disappear (B&H already show the Viltrox as discontinued) but on the other hand they might well be rendered useless by a future firmware update. I suppose it comes down to whether you have a Canon camera with a settled/finished firmware version, so you don't have to upgrade to a future firmware that could contain a countermeasure.

-

Project Greenlight might fit the bill. I've only watched the first season as I'm not sure if the other seasons aired in the UK. https://en.wikipedia.org/wiki/Project_Greenlight To be honest, I'm not sure the first one did either and I may have watched it via a "non-traditional" method 🙂 It's based on a competition, but once that is out of the way after the opening episode or two and they've picked the winning script, it follows the whole process through to the film being released.

-

The regular Metabones version is actually 20% cheaper than the Canon one. https://www.bhphotovideo.com/c/compare/Metabones_T_Speed_Booster_Ultra_0.71x_Adapter_for_Canon_Full-Frame_EF-Mount_Lens_to_Canon_RF-Mount_Camera_vs_Canon_Mount_Adapter_EF-EOS_R_0.71x/BHitems/1526307-REG_1595393-REG The Cine version with the locking mount is $20 cheaper than the regular Canon one too, and Canon has no comparable version. On that basis, if I was in the market for an EF>RF converter, I think Metabones has them beat on price for both (significantly so on the regular one) and beat on a significant feature with the Cine version, which would surely make them a threat to Canon's sales of their own adapters? If anything, the near decade-long history of the Metabones Speed Boosters would also take away any issues of "brand respectability" versus Viltrox, so would make them even more of a threat in my view. On the product page, Metabones state "Disclaimer: we are NOT licensed, approved or endorsed by Canon." so we can rule out that being a difference too. It's almost as if Canon have granted an exclusive license to use RF products to a company with a very long track record of litigious actions, isn't it? So perhaps Metabones are going to be treated the same and they just haven't opened the letter from Canon yet 🙂

-

It will be interesting to see if this also applies to the Viltrox speed boosters and if so will Canon go after Metabones with the same vigour. It could well be that the contention here from Canon is more related to alleged theft/cloning by Viltrox than it is about reverse engineering.

-

The German magazine Photografix has obtained a statement from Canon Germany confirming that they have requested Viltrox to cease and desist. Full article here: https://www.photografix-magazin.de/canon-statement-rf-objektive-dritthersteller/

The translated statement is:

"SHENZHEN JUEYING TECHNOLOGY CO. LTD manufactures autofocus lenses for Canon's RF mount under the brand name 'Viltrox'. Canon believes that these products infringe its patent and design rights and has therefore requested the company to cease all acts that infringe Canon's intellectual property rights." (Canon Germany)

-

You could argue that you are better off with EF lenses for all mirrorless bodies too. Canon RF and M, Fuji-X and G, Sony-E, L, MFT, Nikon Z and even Hasselblad-X mounts all have credible electronic adapters for EF, so if you use mixed bodies, or even just to soften the blow if you make a wholesale change from one brand to another, having lenses with such universal compatibility is a real boon. Not to mention the massive choice of 1st and 3rd party options, as well as the cost benefits of the 2nd hand market. In the case of Canon, it also immunises you from potential shithouse behaviour of firmware blocking of other adapters because, of course, they make their own EF-RF adapter so they can't block that.

For similar reasons, I would recommend that anyone looking at the new wave of full frame cine primes and anamorphics gets them in EF mount where possible, as it gives the same option of mounting on all the above cameras but, crucially, the adapters will be purely physical with no electronics (you don't need that anyway as the lenses are all manual) so they can't be shut out by firmware.

The broader perspective here is that if you picked up something like the 24-105mm f4 L with the 5D Mark II at the start of the "revolution" almost a decade and a half ago, then that lens will have followed you on whatever journey you've been on ever since, no matter whether you flipped between cropped MFT, APS-C and now full frame on whatever brand you have chopped and changed to. EF lenses have survived every scorched earth "game changer" flip to a new system that we've all made and are still very much standing. EF is very much the cockroach of the lens mounts! Or, more generously, it is the most forgiving of all lens mounts, because no matter which ill-advised and ultimately disastrous camera system change you've made, it will always be loyally by your side every step of the way. Hang on, it might be a fucking jinx 😉

-

Without reverse engineering, you couldn't pick up a €100 Canon camera that shoots RAW or have a Canon EOS-R5 that doesn't pretend it is overheating. Reverse engineering a product to create a new interoperable product isn't fundamentally illegal either. Threatening the manufacturer of such a product that you will activate a firmware mechanism to render their products unusable, causing them huge financial losses in refunding customers, probably is though. I'm not saying that is what has happened in this case, of course. Nor am I suggesting that it is the case with Fotiodiox's L mount adapter and its dire warnings, in bold capital letters, of instant destruction if you put it on a Leica camera either. Of course, to take a more generous view, it can easily be argued that they are merely protecting their customers from damaging their own cameras with these attractive third party products, which they have no inclination to make first party versions of, either at all or at a reasonably competitive price.

-

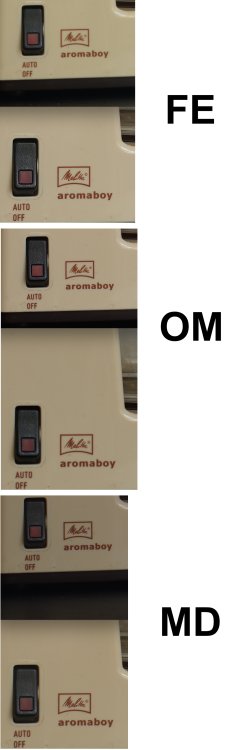

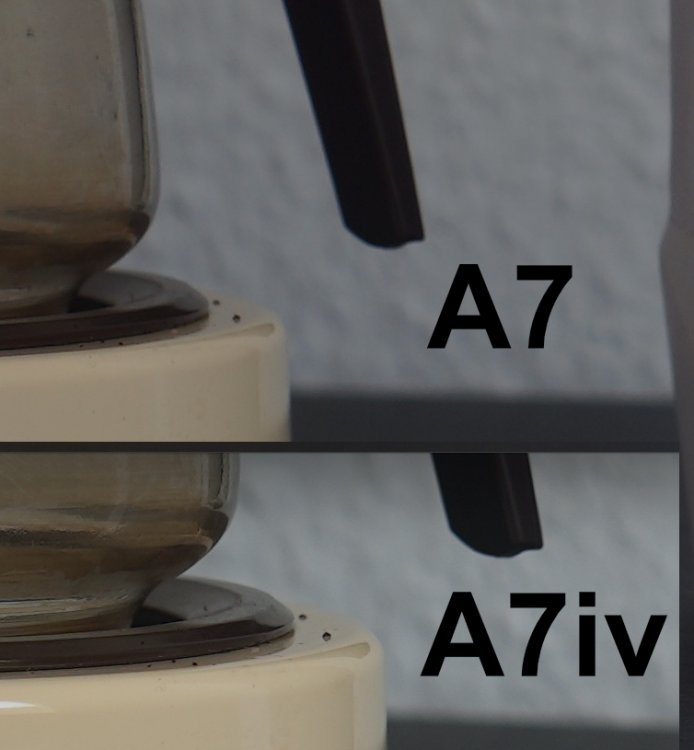

I have to say that the two images are so completely different in terms of composition and light that it's difficult to make any comparison between them. In the absence of a focus calibration tool, the keyboard on the desk could act as an OK substitute if you place it next to the coffee machine like this crude representation. That way there will be evenly spaced, legible markers in front of and behind the focus point to compare between images. By the way, I note from the EXIF of the A7iv file that it appears to think stabilisation is on?
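As a rough guide to how far apart those markers need to be, the standard thin-lens depth-of-field approximation gives the near and far limits of acceptable sharpness around the focus point. This is a sketch only: the 50 mm focal length, f/1.8 aperture, 1 m distance and 0.03 mm circle of confusion (a common full-frame convention) used below are assumptions, not values taken from the two test images.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = 0.03) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness, in mm (thin-lens approximation).

    coc_mm is the circle of confusion; 0.03 mm is a common full-frame convention.
    """
    # Hyperfocal distance: H = f^2 / (N * c) + f
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # focused at or beyond hyperfocal: sharp to infinity
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


if __name__ == "__main__":
    # Assumed example: 50 mm lens at f/1.8 focused at 1 m.
    near, far = depth_of_field_mm(50, 1.8, 1000)
    print(f"in focus from {near:.0f} mm to {far:.0f} mm")
```

With those assumed values the zone runs from roughly 980 mm to 1021 mm, i.e. only about 2 cm either side of the focus point, which is why small, evenly spaced markers like keyboard keys make focus differences easy to see.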

-

Be careful stopping down, as you may run into diffraction, which will soften the image and muddy the comparison further. If you want to test this in a more structured and controlled way then you will need to use a focus calibration tool such as the Spyder. That way you can see precisely how much is in focus ahead of and behind the focus point. They aren't particularly cheap (about €60) but that might be a bargain in terms of your own time spent trying to get to the bottom of things. However, it is pretty simple to make one yourself using a guide such as this one. http://www.parallax-effect.ca/2013/03/12/the-ghettocal-my-diy-lens-calibration-tool-for-micro-adjustment-enabled-dslr-cameras/
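For a rough sense of where diffraction starts to bite, the diameter of the Airy disk (out to the first dark ring) is about 2.44 × wavelength × f-number. The sketch below compares that with the pixel pitch. The 550 nm wavelength, the 5.1 µm pitch (assumed to approximate a 33 MP full-frame sensor such as the A7iv's) and the "disk spans two pixels" threshold are all assumptions; the threshold is only one common rule of thumb, not a hard limit.

```python
# Rough diffraction check. Airy disk diameter to the first minimum:
#   d = 2.44 * wavelength * N   (N = f-number)

WAVELENGTH_UM = 0.55  # assumed: green light, 550 nm, expressed in micrometres


def airy_disk_diameter_um(f_number: float, wavelength_um: float = WAVELENGTH_UM) -> float:
    """Airy disk diameter in micrometres for a given f-number."""
    return 2.44 * wavelength_um * f_number


def diffraction_onset_f_number(pixel_pitch_um: float, pixels_per_disk: float = 2.0,
                               wavelength_um: float = WAVELENGTH_UM) -> float:
    """f-number at which the Airy disk spans `pixels_per_disk` pixels.

    pixels_per_disk=2 is an assumed rule of thumb, not a hard limit.
    """
    return pixels_per_disk * pixel_pitch_um / (2.44 * wavelength_um)


if __name__ == "__main__":
    pitch_um = 5.1  # assumed pixel pitch, roughly a 33 MP full-frame sensor
    for n in (2.8, 5.6, 8, 11, 16):
        print(f"f/{n}: Airy disk ~ {airy_disk_diameter_um(n):.1f} um")
    print(f"rule-of-thumb onset ~ f/{diffraction_onset_f_number(pitch_um):.1f}")
```

Under those assumptions the crossover lands at around f/7.6, so stopping down much past f/8 to "fix" focus differences is likely to trade one kind of softness for another.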

-

I would actually recommend focusing on the texture of the switch, as that is the giveaway in the examples above.

-

I've pulled out the switch section in each comparison to show that the focus point is slightly different within each test. In some it favours the A7 and in others the A7iv in terms of appearing sharper, but at the actual focus point for each camera within each comparison image they are both much of a muchness. If you take the MD one, for example, the switch is sharper on the A7 because that is where the focus point is, whereas with the A7iv it looks softer. But if you then compare the rim of the jug in each, you'll see that it is reversed, as that is the focus point for the A7iv.

-

I missed this when it was announced a few months ago, but Meike have released an EF>M adapter that has a drop-in filter slot. It ships as a package with both the variable ND and clear filters, has a polariser available too and, of course, supports all AF and IS functionality. At €165, I'd say it's very reasonably priced for what it will add to EOS-M rigs, but of course that's all relative when it costs more than the insanely cheap prices the camera itself goes for these days! I know for many people using EF lenses goes against the grain of using such a small camera, but the flip side is that with this adapter and a compact EF-S all-in-one zoom like the Tamron 18-200mm or Sigma 18-250mm (both under €200 used), the EOS-M can become a stabilised, RAW-shooting, pick-it-up-and-go camcorder for under €500.

-

I was hoping people didn't miss the reference and think Waaaaffffferrrr was a brand of lens adapters.

-

This is the biggie from my point of view. I have that MFT Meike cine lens and the waaaaffffferrrr thin MFT>E mount adapter lets me use it on my A6500 because, as you say, the lens is really an APS-C lens anyway. Giving it triple duty with this adapter would be fantastic.

-

Or in the shell of one of the Samsung Android-based compacts?

-

If it was Royal Mail then it's probably worth asking them if it's still there so you can do a re-shoot 😉

-

Ulanzi (and a few others, if I remember rightly) made an old-school ground glass DOF adapter that let you put EF lenses on your phone. A bit of an unwieldy monstrosity when you put the EF lens on, and the ground glass didn't spin either, but it did the job in terms of providing an approximation of "the look" that had us all buying Canon HV20s and the like and mounting them upside down on even more unwieldy rigs before the 5D Mark II et al handed everyone the keys to that kingdom!

So, whilst not the same as a native mount, a C-mount version of something like that should be feasible and obviously smaller. It would also be usable on multiple phones, and whilst I know the EU keep changing warranty terms in favour of the consumer, I think even they would consider ripping the camera out and fitting a new mount "problematic" when it comes to a refund, so it solves that problem too 😉

I can't see how it would be beyond the bounds of possibility to make the ground glass at least vibrate or even spin, considering how companies like TechArt make MF to AF adapters with motors that are absolutely tiny. The world has definitely moved on in the 12 years or so since the DOF adapter movement in terms of doing this sort of thing DIY, so a 3D printed prototype would be easy and cheap. The minimum focus distance of some smartphones could be an issue for focusing on the ground glass, so there will always be a certain amount of bulkiness to it. But that's just all the more space to put the variable electronic ND in, isn't it?

-

LOG, IBIS, 10bit, AF, does it really make for better footage?

BTM_Pix replied to Andrew Reid's topic in Cameras

More a case of IBS rather than IBIS as I'm sure he has been shitting himself ever since.