HockeyFan12

Members · 887 posts

Everything posted by HockeyFan12

-

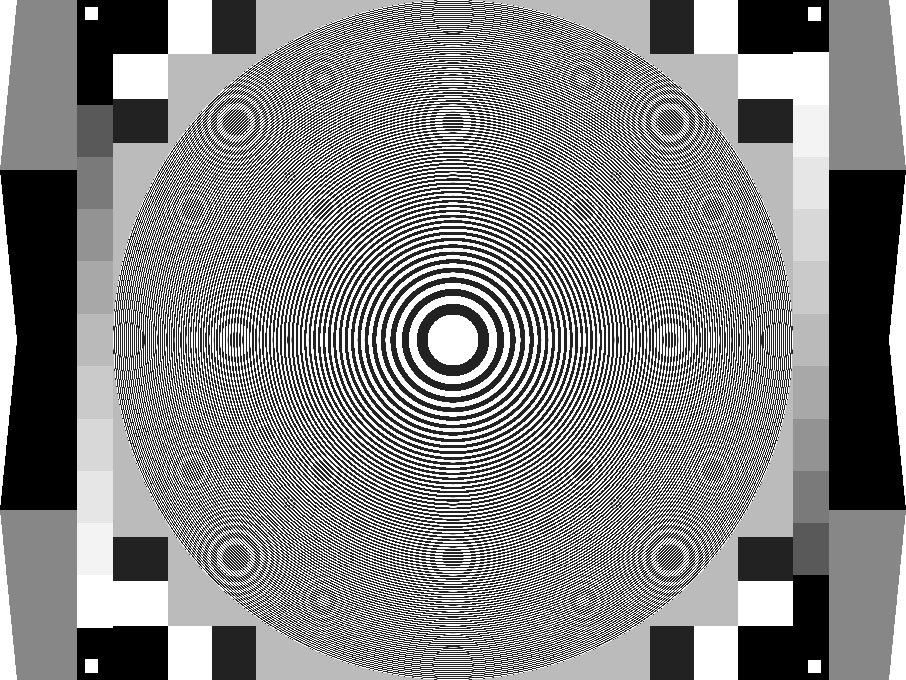

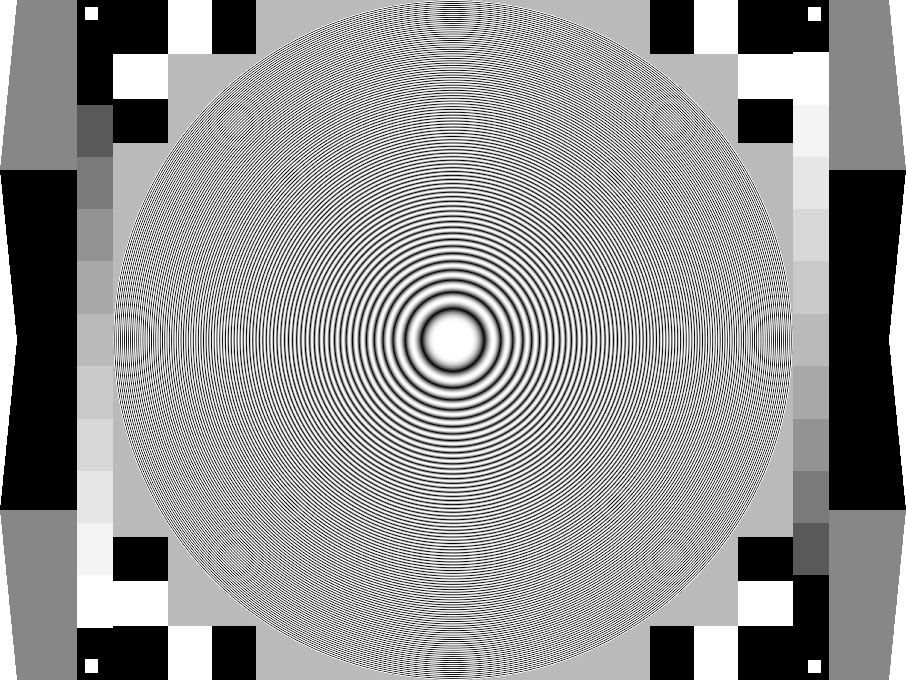

For further comparison: the square wave zone plate downscaled with nearest neighbor vs. the sine wave zone plate downscaled with nearest neighbor. As expected, the square wave plate appears to alias at every edge due to its infinite harmonics (even if the interference patterns aren't really visible in the lowest frequency cycles). The sine wave zone plate begins to alias much later.

So yes, the Gaussian blur (OLPF) does fix things when applied aggressively, but much of the aliasing in the downscaled square wave test chart is due to square wave lines (black and white with no gradient) being of effectively infinite frequency before they are blurred, not because those lines' fundamental frequencies exceed the threshold dictated by Nyquist.

My only point is that observing aliasing in square wave zone plates and correlating it directly with Nyquist's <2X rule is misguided. Nyquist applies to square wave test charts, but it predicts aliasing at every edge (except in the presence of a low pass filter, as seen above), as every edge is of infinite frequency. On a sine wave chart, by contrast, a 4k monochrome sensor can resolve up to 2k sinusoidal line pairs, i.e. up to 4k lines, or 4k pixels.

Of course, the actual system is far more complicated on most cameras, and every aspect of that system is tuned in relation to every other. But I would still argue that 2X oversampling works so well for Bayer because it provides full resolution R and B channels, and more simply because any amount of oversampling will generally result in a better image. Not because 2X coincidentally lines up with the <2X rule in the Nyquist theorem, which applies to sine waves, not square pixels.

(Fwiw, I haven't worked much with the C300 Mk II, but I do remember that the C500 had worse false detail problems than the Alexa, Epic, and F55, though its RAW files also appear considerably sharper per-pixel. Canon may have erred on the side of a too-weak OLPF. The C200 test chart looks really sharp.)
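To make the harmonic argument concrete, here is a minimal Python sketch. It assumes ideal point sampling (nearest neighbor, no OLPF, no lens blur) and measures frequency in cycles per frame width; it is an illustration of the folding math, not how the images above were made. The helper names are hypothetical.

```python
import math

def alias_frequency(f: float, fs: float) -> float:
    """Apparent frequency after point sampling at fs samples per frame width.

    Frequencies are in cycles per frame width. The sampled spectrum repeats
    every fs and mirrors around fs/2 (Nyquist), so fold f into [0, fs/2].
    """
    f = f % fs
    return min(f, fs - f)

def square_wave_harmonics(f0: float, fs: float, count: int):
    """First `count` harmonics of a +/-1 square wave: (n, true f, apparent f, amplitude)."""
    rows = []
    for k in range(count):
        n = 2 * k + 1                      # square waves contain odd harmonics only
        f = n * f0
        rows.append((n, f, alias_frequency(f, fs), 4 / (math.pi * n)))
    return rows

# A 500-cycle (1000-line) chart on a 4096-pixel row, no OLPF: the fundamental
# and 3rd harmonic sit below the 2048-cycle Nyquist limit, but the 5th and up
# fold back to frequencies that were never in the chart.
for n, f, fa, amp in square_wave_harmonics(500, 4096, 6):
    status = "ok" if fa == f else "aliased"
    print(f"harmonic {n:2d}: {f:6.0f} -> {fa:6.0f} cycles, amplitude {amp:.3f}, {status}")
```

The fundamental never comes close to Nyquist here; all of the aliased energy comes from the overtones, whose amplitudes fall off only as 1/n.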

-

It does appear that way, and it makes sense that it would: the higher the sampling frequency, the less aliasing. The Nyquist theorem does apply to images, and every time you increase the resolution of the sensor by a linear factor of two, you increase the resolution before apparent aliasing by about that much too (other aspects of the acquisition chain notwithstanding). I'm not debating that. It's just that 2X oversampling for sensors is based on what works practically, not on the 2X number in the Nyquist theorem.

Most resolution charts are printed in high contrast black and white lines (4k means 4k lines), which represent square waves, whereas Nyquist concerns line pairs (4k means 2k line pairs or 2k cycles), which are represented as sine waves, which look like gradients. If you have fewer than 2048 line pairs (fewer than 4k lines) in a sinusoidal gradient rather than in black and white alone, a 4k monochrome sensor can resolve them all. So a 4k monochrome sensor can resolve UP TO, but not quite, full 4k resolution in sinusoidal cycles without aliasing (<1/2 frequency in cycles * 2 pixels per cycle, so long as those cycles are true sine waves, = <1 frequency in pixels).

So under very specific circumstances, a 4k monochrome sensor can resolve UP TO 4k resolution without aliasing... but what a 4k sensor can resolve in square wave cycles is another, more complicated question entirely, because square waves are of effectively infinite frequency along their edges.

All I'm really getting at is that Nyquist concerns sine waves, not square waves, but pixels are measured without respect to overtones. If we're strictly talking sine waves, then a monochrome 4k sensor can resolve up to 2k sinusoidal cycles, which equals up to 4k half cycles, or 4k pixels. If we're strictly talking square waves, then any sensor should theoretically be prone to aliasing along high contrast edges at any frequency, because black and white lines have harmonics of infinite order... but that's where the OLPF etc. come in.
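The cycles-vs-lines bookkeeping can be checked numerically. A minimal pure-Python sketch, assuming ideal point sampling of a hypothetical 4096-pixel monochrome row: a sinusoidal grating above the 2048-cycle limit produces exactly the same pixel values as a lower one (sign-flipped, i.e. shifted by half a cycle), which is what aliasing means.

```python
import math

N = 4096  # pixels across a hypothetical 4k monochrome row

def sample_sine(cycles: float, n_pixels: int = N):
    """Point-sample a sinusoidal grating with `cycles` line pairs across the row."""
    return [math.sin(2 * math.pi * cycles * x / n_pixels) for x in range(n_pixels)]

def lines_from_cycles(cycles: int) -> int:
    """One sinusoidal cycle (line pair) = one dark line + one light line."""
    return 2 * cycles

# Just under Nyquist: 2047 line pairs = 4094 lines, all representable.
print(lines_from_cycles(2047), "lines below the 2048-cycle limit")

# Above Nyquist: a 2096-cycle grating samples to exactly the negative of a
# 2000-cycle grating (the same grating shifted half a cycle), so the sensor
# cannot tell them apart. That is aliasing.
below = sample_sine(2000)
above = sample_sine(N - 2000)
max_diff = max(abs(a + b) for a, b in zip(below, above))
print(f"max |below + above| = {max_diff:.1e}")
```

The identity behind it is sin(2π(N − f)x/N) = −sin(2πfx/N) for every integer pixel x.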

-

As of last year that was pretty much true for me. This year, I'm being asked to work on an increasing amount of 4k content.

-

You'd think so. So did I. But we're dealing with more complicated systems than "divide by two." Frequency in the Nyquist-relevant sense is a sine wave. With sound, the appearance and sound of a lone fundamental sine wave are well known to anyone with a background in subtractive synthesis. In terms of images, however, we usually think in square waves. Compare the images I posted of a sinusoidal zone plate and a standard square wave plate.

Resolution charts generally represent square waves, which contain infinitely many higher order harmonics and thus are theoretically of infinitely high frequency at any given high contrast edge. In theory, a square wave resolution chart is liable to alias at any given frequency, and in practice it might alias at low fundamental frequencies if the contrast is great enough (see Yedlin's windows on the F55). Yes, the higher the acquisition resolution the less aliasing there is in general, but the "oversample by two" dictum works so well more because it lets the B and R in the Bayer grid reach full resolution than because it fulfills the specific terms of the Nyquist theorem in a monochrome sense.

As you correctly state, 4k pixels can represent a maximum of 2k line pairs: one black pixel and one white pixel per line pair. But 2k line pairs times two lines per pair equals 4k lines. And I think we can agree that one black pixel and one white pixel are two distinct pixels... so 4k linear pixels can represent 4k linear pixels... which still only represents 2k cycles (line pairs). Nyquist holds true. And in practice, a 4k monochrome sensor can indeed capture up to 4k resolution with no aliasing... of a sinusoidal zone plate. When you turn that zone plate into square waves (black and white lines rather than gradients), you can induce aliasing sooner because you're introducing higher order harmonics, NOT because the fundamental is surpassing the 2k cycle threshold. Again, 4k image resolution measures pixels; 2k line pairs measures pairs of pixels.

Nyquist holds true. But the reason you see aliasing on those test charts with the C300 Mk II and not the F65 is not that Nyquist demands you oversample by two (it's really more like Nyquist converts pixel pairs to signal cycles), but that those test charts' lines contain harmonics of infinite order, and oversampling in general helps prevent aliasing at increasingly high frequencies. As predicted by math and science.
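For reference, these are the standard Fourier series behind the "infinite harmonics" argument, for a ±1 square wave s(x) and a ±1 sawtooth w(x) of fundamental frequency f_0 (cycles per frame width), sampled at f_s pixels per frame width:

$$
s(x) = \frac{4}{\pi}\sum_{k=1}^{\infty}\frac{\sin\big(2\pi(2k-1)f_0 x\big)}{2k-1},
\qquad
w(x) = \frac{2}{\pi}\sum_{n=1}^{\infty}\frac{(-1)^{n+1}\sin\big(2\pi n f_0 x\big)}{n}
$$

$$
\text{a component at } m f_0 \text{ is captured without aliasing only if } m f_0 < \frac{f_s}{2}
$$

The square wave has only odd harmonics and the sawtooth has every harmonic, but both series are infinite, so for any f_0 > 0 some harmonics always exceed f_s/2 (for a 4k row, f_s = 4096 and the limit is 2048 cycles). The amplitudes fall off only as 1/m, so without a low pass filter the aliased energy is small but never zero.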

-

I'm not an expert on projector optics and I don't have a tv that big, so I can't say. My projector seems about as sharp as my plasma at a similar FOV, but I believe plasmas are actually softer than most LCDs, so it makes sense that it would be less sharp than big tvs. You must have very good eyes if you can see the difference at a normal viewing distance. I can't tell a big difference, and I still have better eyes than most (I think they're still 20/20), so I just assume most others can't tell either. Truth be told, I bet most people with exceptional (20/15 or better) vision can see a significant difference in some wide shots, and a very significant difference in text, animated content, graphics, etc., even at a normal viewing distance and with a tv as small as 64", if not smaller. I wonder if I could tell the difference with white on black text. I bet I could. Even in a double blind test. So to that extent it's just my preferences in terms of diminishing returns that lead me to the opinion that 4k isn't necessary or interesting. If I still had such good vision and had a great tv and money to burn, I might get into the 4k ecosystem, too.

-

Low pass filter, yes. My bad. I'm not saying that Nyquist doesn't apply to sensors. Not at all. Let's forget about Bayer patterns for now and focus on monochrome sensors and Nyquist. What I'm saying is that the idea (which I've read online a few times, and which you seem to be referencing in the paragraph about the C300) that a 4k monochrome sensor can only resolve up to 2k resolution without aliasing (because we can resolve up to half the frequency) is misguided. A 4k monochrome sensor can in fact resolve up to full 4k resolution without aliasing on a sinusoidal zone plate (1/2 frequency in line pairs * 2 lines per line pair = 1 frequency in lines). So you can resolve up to 4096 lines of horizontal resolution from a 4k sensor without aliasing (up to 2048 line pairs).

On a monochrome sensor, if you framed up and shot up to 4096 alternating black and white lines, they should not alias on that sensor so long as they were in a sinusoidal gradient pattern rather than strictly hard-edged black and white, and so long as they filled the entire frame. But that same sensor will exhibit aliasing under otherwise identical conditions on a black and white (square wave) zone plate of that same resolution/frequency, because square wave lines contain overtones of effectively infinite frequency. It's the overtones, not the fundamental frequencies, that cause aliasing in this case. So a 4k black and white square wave zone plate will cause a 4k sensor (monochrome or Bayer) to alias, but not because Nyquist dictates that you need less than half that resolution. The sensor can resolve up to that full resolution in sine waves (1/2 * 2 = 1); it's just that black and white line pairs (square waves) contain infinitely many higher order overtones, and of course those overtones will far surpass the frequency limit of the sensor.

I'm not saying Nyquist doesn't apply to sensors, just that its application is often misunderstood. The 2X scaling factor is not a magic number, and there are reasons Arri went with a 1.5X scaling factor instead (which coincides closely with Bayer's 70% efficiency; 1.5 * 0.7 = 1.05, just over 1). That's all I'm arguing. I think we just misunderstand each other, though. Everything you've written seems right except for the focus on the 2X scaling factor.
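A sinusoidal zone plate makes the sine-vs-square comparison easy to reproduce. Below is a hypothetical Python generator (the names and the cos(k*r^2) form are my own choices, not any standard chart's spec). The local frequency of cos(k*r^2) at radius r is k*r/pi cycles per pixel, so the sine plate reaches Nyquist at r = pi/(2k), while the hard-thresholded square plate's nth harmonic gets there at r = pi/(2nk), i.e. its 3rd harmonic at one third of the radius.

```python
import math

def nyquist_radius(k: float, harmonic: int = 1) -> float:
    """Radius (pixels) where `harmonic` of cos(k*r^2) reaches 0.5 cycles/pixel.

    The phase is k*r^2, so the local frequency is (2*k*r) / (2*pi) = k*r/pi
    cycles per pixel; the nth harmonic runs n times faster. Solving
    n*k*r/pi = 0.5 gives r = pi / (2*n*k). Measured along the x or y axis;
    diagonals alias a little later on a square pixel grid.
    """
    return math.pi / (2 * harmonic * k)

def zone_plate(size: int, k: float, square: bool = False):
    """Centered size x size zone plate with values in [-1, 1].

    square=True hard-thresholds the gradient into black/white rings, which
    adds odd harmonics of every order (the square wave case).
    """
    c = (size - 1) / 2
    plate = []
    for y in range(size):
        row = []
        for x in range(size):
            v = math.cos(k * ((x - c) ** 2 + (y - c) ** 2))
            if square:
                v = 1.0 if v >= 0 else -1.0
            row.append(v)
        plate.append(row)
    return plate

# Choose k so the sine plate just reaches Nyquist at the edge of a 512-px plate.
k = math.pi / (2 * 256)
print(nyquist_radius(k))               # sine plate: ~256 px, the plate edge
print(nyquist_radius(k, harmonic=3))   # square plate's 3rd harmonic: ~85 px
sine_plate = zone_plate(64, k)         # small size keeps this pure-Python demo fast
square_plate = zone_plate(64, k, square=True)
```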

-

You're right and I'm wrong. I misread your post. Again, I'm not an engineer, just interested in how things work. I thought what you were implying is what I used to (incorrectly) think: a 4k sensor can only resolve 2k before it begins aliasing, because Nyquist says you can resolve 50% of the frequency before aliasing. And... a lot of great 2k images come from 4k downconversion... so... it makes sense...

The truth, however (I think), is that a 4k sensor can resolve all the way up to 4k without aliasing... because there are two pixels in every line pair and 2 * 1/2 = 1. But... this is only so long as that 4k image represents, for instance, a 4k zone plate with resolution equivalent to fewer than 2048 sinusoidal gradients between black and white (line pairs, but in sinusoidal gradients rather than lines). But the zone plates in the links above are black and white line pairs. Hence infinitely many odd order harmonics (these are square waves rather than sawtooth waves: a square wave contains only the odd harmonics, while a sawtooth contains every harmonic).

So yes, the F65 looks just great, but those resolution charts will theoretically cause problems for any sensor so long as the resolution isn't being reduced by the Airy disk and/or lens flaws. Because black and white zone plates like the one above are of theoretically infinite resolution, you need a low pass filter elsewhere in the acquisition chain or anything will alias. Anyhow, I just think it's interesting that non-sinusoidal resolution charts represent, in theory, infinite resolution at any given frequency because of the higher order harmonics, and that anti-aliasing filters are, ultimately, fairly arbitrary in strength, tuned more to taste than to any given algorithm or equation, and only one small part of a big MTF chain.

Nature abhors a straight line, let alone a straight line with infinite harmonics, so in most real world use the aliasing problems seen above will not present themselves (though fabrics, some manmade materials, and SUPER high contrast edges will remain problematic; I see a lot of aliasing in fabrics with the Alexa and C300, the cameras I work with most, as well as weird artifacts around specular highlights). In conclusion, you're right and I am definitely wrong as regards my first post; I misread what you wrote. And if I'm also wrong about any of what I've written in this one, I'm curious to learn where I have it wrong, because ultimately my goal is to learn, and in doing so avoid misinforming others.

This also explains why the F3 and Alexa share a similar sensor resolution less than twice the (linear) resolution of their capture format. Bayer sensors seem to be about 70% efficient in terms of linear resolution, and 2880 is slightly greater than 1920/0.7 (about 2743). Canon's choice to oversample by a factor of 2 with the C300 was, I think, just due to their lack at the time of a processor that could debayer efficiently enough; nothing to do with Nyquist.
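The Bayer arithmetic in these posts (1.5 * 0.7, and 2880 vs 1920/0.7) is easy to check. A minimal sketch, treating the 70% linear efficiency figure as the rough rule of thumb quoted in the thread rather than a measured constant; the function names are hypothetical.

```python
# Rough linear-resolution efficiency of a Bayer sensor, as estimated in the
# posts above. It's a rule of thumb, not a measured constant.
BAYER_EFFICIENCY = 0.7

def effective_width(sensor_px: float, efficiency: float = BAYER_EFFICIENCY) -> float:
    """Approximate luma detail (in pixels) a Bayer sensor of this width delivers."""
    return sensor_px * efficiency

def min_sensor_width(output_px: float, efficiency: float = BAYER_EFFICIENCY) -> float:
    """Photosites needed across the frame to deliver `output_px` of real detail."""
    return output_px / efficiency

print(round(min_sensor_width(1920)))   # 2743, so 2880 clears 1920 with a little margin
print(effective_width(2880) >= 1920)   # True (about 2016 effective pixels)
for factor in (1.5, 2.0):
    # oversampling factor times efficiency: about 1.05 for 1.5X, 1.4 for 2X
    print(factor, round(factor * BAYER_EFFICIENCY, 2))
```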

-

There's a widespread misunderstanding of Nyquist sampling theory when it comes to images, and I think you might be following that widely-publicized misunderstanding. If not, my apologies, but I think it's such an interesting topic (which I was totally wrong about for years) that I will butt in: Nyquist does not apply to image sensors the way many people think it does. A 4k sensor actually CAN resolve a full 4k (well, technically anything less than 4k, so 99.99%) signal, and fully. It's only Bayer interpolation and the presence of anti-aliasing filters that reduce this number in a meaningful way.

What it boils down to is that a line pair represents a full signal wave. Yes, you can only fully capture fewer than 2048 line pairs in 4k without aliasing, as per Nyquist. But that's still 4096 lines. So... with a Foveon or monochrome sensor you can capture full 4k with no aliasing on a 4k sensor. Really! You can! (Assuming you also have a low pass filter with 100% MTF below 4k lines and 0% MTF above. Which... doesn't exist... but still.)

The other point of confusion is the idea that a line pair on a normal resolution chart represents a sine wave. It doesn't. And THAT is 99% of the reason why there's aliasing on all these test charts. It represents a square wave, which has infinitely high overtones. So MTF should be measured with a sinusoidal zone plate only, as the Nyquist theorem applies to sine waves specifically (well, it applies to anything, but square waves are effectively of infinite frequency because they contain infinitely many odd order harmonics). Since most resolution charts are lines (square waves) rather than sinusoidal gradients, even the lowest resolution lines are actually of effectively infinite frequency. Which might be another reason why you see such poorly reconstructed lines and false colors around the very high contrast areas of the window in Yedlin's test in the other thread.

To that extent, the use of anti-aliasing filters is more just "whatever works" for a given camera to split the difference between sharpness and aliasing, and not correlated with Nyquist in any specific way. Bayer patterns, I believe, remove a little less than 30% of linear resolution, but in practice the image looks a lot sharper than 70% due to advanced algorithms and due to aliasing providing the illusion of resolution... So the resolution issue requiring oversampling is due to anti-aliasing filters and Bayer pattern sensors, and balancing things out between them so you get a sharp enough image with low enough aliasing. It's not Nyquist eating half your spatial resolution. I'm no engineer by any means, and I have made this mistake in the past and now feel guilty for spreading misinformation online.

Also, I'm normally an 8-bit-is-fine-for-me-and-probably-for-everyone type person, but for next generation HDR wide gamut content you need 10 bit color and a wide gamut sensor. I think Netflix is going for a future-proof thing, and perhaps it is due to legal. That is a very astute comment. It's not an aesthetic choice, but a legal one. Otherwise, anything could be called "true 4k." (Fwiw, you can include small amounts of B cam footage shot on other cameras or even stock footage.)
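The idealized filter described above (100% MTF below the cutoff, 0% above, i.e. a brick-wall low pass) can be sketched by simply dropping every harmonic of the square wave at or above Nyquist. This is a minimal Python illustration under that assumption, not a model of any real OLPF; the function names are hypothetical.

```python
import math

def kept_harmonics(f0: float, cutoff: float):
    """Odd harmonics of a square wave with fundamental f0 that lie below cutoff."""
    if f0 <= 0:
        raise ValueError("fundamental must be positive")
    kept = []
    n = 1
    while n * f0 < cutoff:
        kept.append(n)
        n += 2
    return kept

def bandlimited_square(x: float, f0: float, cutoff: float) -> float:
    """+/-1 square wave after an ideal brick-wall low pass at `cutoff`.

    x is position in frame widths; f0 and cutoff are in cycles per frame width.
    """
    total = sum(math.sin(2 * math.pi * n * f0 * x) / n for n in kept_harmonics(f0, cutoff))
    return 4 / math.pi * total

# A 500-cycle chart on a 4096-pixel row: only harmonics 1 and 3 survive below
# the 2048-cycle Nyquist limit, so sampling this filtered row cannot alias.
row = [bandlimited_square(x / 4096, 500, 2048) for x in range(4096)]
print(kept_harmonics(500, 2048))   # [1, 3]
```

The filtered wave still overshoots near each edge (the Gibbs phenomenon), so even a perfect brick-wall filter changes how the chart looks.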

-

It's basically just a film emulation LUT except that it's incredibly good and comprehensive. It has transforms to make the Alexa look like 5219. Procedural grain that's very realistic. Halation that accurately emulates film. Just a great looking film look script.

-

IMO the Blackmagic 4k definitely does not have an image up to Netflix standards. Gnarly fixed pattern noise, aliasing, poor dynamic range, etc. keep it away from serious use. If you are getting great images with it, more power to you, and it has its place. But I would rate that image quite low, below even the 2.5k and nowhere near what Netflix is looking for. The 4.6k is pretty good, though! I would expect they'd include it. Anyhow, if you have any tips on getting a better image out of the BM4k I would be glad to hear them, because I do use one from time to time and am admittedly a frustrated novice with it.

Marketing, not image quality, is definitely behind the Alexa's exclusion, imo. But yeah, technically the Alexa 4k is a little softer than the F55. The noise pattern feels a little wide, and there are some hints of unsharp mask. It's great from a subjective perspective and would be my choice every time, but in the lab it would fail to meet their standards. But the thing is, it doesn't matter unless Netflix is producing the show. They'll buy originals (tv shows and movies alike) that are shot at 1080p. So they would acquire a feature shot on the Alexa but not produce one. They'd acquire one shot on film but would not produce one. So feel free to shoot on film... if you're footing the bill and hoping Netflix will pick it up later, which they very well might.

Does the F55 have a different RAW output than the F5? Maybe it is the wide gamut BFA. That makes a lot of sense. When I first used the F5 (a month or two after its release, so the firmware was early and you could tell) I thought the image was awful, but I used it again a few years later with the Kodak emulation LUT and it's way better than it once was. I was recently working on a Netflix show that was shot on F55 RAW and again was not impressed with the image from a technical perspective, and even the color didn't blow my mind, but it did seem a lot better than the F5 (which I still dislike).

Now that the footage is out there and I see the graded footage online, I think it looks very good, so the colorist did a good job and I was wrong about the F55, I think. It's possible Netflix knows what it's talking about, but I'm still surprised by how well the F55 holds up. (I would place it way above any Blackmagic camera except maybe the newest 4.6k, for instance, but below the Alexa or Varicam, and yet it seems to look just as good in the final product. F55 RAW is surprisingly good.) Likewise, I rate the C500 poorly but above the F5 and FS700. But... at that point you're sort of picking arbitrarily. The C500 is sharper, cleaner, with better color, slightly worse DR, maybe more aliasing? Not dramatically better by any means. Maybe it has the wide gamut BFA they need and it's as simple as that.

-

Sure, but at a normal resolution they still look pretty similar. Remember, he's punched in 4X or something by that point, and you're watching on a laptop screen or iMac with a FOV equal to a 200" tv at standard viewing distance. So yeah, on an 800" tv I would definitely want 6k; heck, even on a 200" tv I would. But the biggest screen I've got is a 100" short throw projector or something, so the only place where I can see pixels with it is in graphics and text.* I've also been watching a lot of Alexa 65-shot content on dual 4k 3D IMAX at my local theater, and tbh I can never tell when they cut to the B cam unless it's like a GoPro, and then I can REALLY tell. :/

Furthermore, I don't think you need RAW video. That's another marketing myth. Anything high end (C300 Mk II, Varicam, Alexa, even arguably the shitty F55 etc.) is a total wash in terms of RAW vs ProRes. Honestly I'm most impressed that he got the F55 to look that good! And I want that Nuke script!

I think the bigger lesson is that you can't apply logic against marketing. Red staked its reputation on a 4k camera that in practice had a much softer image than the C300 (the original Red pre-MX was softer per-pixel than the 5D Mark III, by a lot! I think the Genesis/F35 was sharper despite being 1080p)... and it worked! Netflix and YouTube are pushing hard with heavily compressed 4k... although at least YouTube lets you use the Alexa. 4k LCD panels are gaining some traction in the low end market, where the image suffers from a million other problems and the screens are pointlessly small. It's like the megapixel wars. Most digital photographers aren't even printing, let alone printing wall-sized (and those I know who do print wall-sized were satisfied with 12MP). But the big number works well for marketing departments, and that trickles up to everyone. No one who matters to marketing will watch this video anyway.

*I thought my projector's LCDs were misaligned until I noticed that what appeared to be chromatic aberration or misalignment was really just sub-pixel antialiasing! I think 4k would be a big benefit for cartoons, text, etc. Anything with really sharp lines. And for VR I think ultra high resolutions will matter a lot. I love my Rift, but the image is 1990s-level pixelated. I'm not big on VR video, though.

-

That's fair. I would do almost anything if I were paid enough. But the matte box thing is an insult.

-

The Leitax adapters are incredible. You can shim them so the lenses are as good as native mount. If you're shooting anamorphic and zero play and proper collimation are necessary, these are worth the price, but they're REALLY expensive. I just use relatively cheap generic adapters from eBay (not the worst, but certainly not even close to the best), but I'll use either an FF or a lens support to support the lens. They still have some give. I've heard good things about the Redrock adapters, but I don't think you can shim them. So they work if you don't mind the focus marks being a bit off, but if you're spending that kind of money maybe it's better to go Leitax.

-

You can read about Brewster's angle here: https://en.wikipedia.org/wiki/Brewster's_angle But if you're dumb like me you can just assume reflections will approach next to nothing (when properly addressed with a polarizer) near Brewster's angle, which for glass is roughly 56º from the surface normal (about 34º from the surface itself)... so straight on it won't do much good. Maybe no good at all. It won't make the sunglasses see-through (that's impossible), but if the sunglasses are polarized too, it might make them look even darker, potentially emphasizing the reflections a little more? Wearing all black is good advice. I get asked to paint this stuff out in post all the time, so that's another option. But usually in that case it's reflections of lights on process trailers, and they're more glaring than the image you posted.
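For the curious, Brewster's angle follows directly from the refractive indices: tan(θ_B) = n2/n1, measured from the surface normal. A minimal Python sketch, assuming n = 1.5 as a typical value for glass (real glasses vary a little). At normal incidence (straight on) the reflected light is essentially unpolarized, which is why a polarizer does little there.

```python
import math

def brewster_angle_deg(n1: float, n2: float) -> float:
    """Incidence angle (from the surface normal) at which p-polarized light is not reflected.

    Light travels from a medium of index n1 into one of index n2; tan(theta_B) = n2 / n1.
    """
    return math.degrees(math.atan2(n2, n1))

glass = brewster_angle_deg(1.0, 1.5)  # air to glass; n = 1.5 is an assumed typical value
print(f"{glass:.1f} deg from the normal, {90 - glass:.1f} deg from the surface")
```

At that angle the reflection is almost entirely s-polarized, so a polarizer rotated to block it can nearly eliminate the reflection; the further the camera is from that angle, the less it helps.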

-

I've often disagreed with you, but I agree with you here. Your argument is in the spirit of the ongoing "use cheap gear" thread. You should use the medium in an ontologically sound way. Video is fast and ephemeral. Film is slow and physical. Good videos use the difference to their advantage and don't apologize.

In recent history, but before video's ubiquity, Zodiac used video very well. Not by apologizing for a smaller sensor or whatever else, but by allowing Fincher to shoot 100s of takes and comp together just the ones that suited that particular film. After the more fringe artists you cite but before the Alexa's prominence, a few other artists used video unapologetically well: Channel 101 (look at the illustrious alumni from that group of great storytellers; they also influenced Awesome Show and the SNL Digital Shorts); Dogme 95; Chronicle (a very good genre film); and even the toweringly innovative cult classic Werewolf: The Devil's Hound, which used tons of coverage to more than make up for a lack of resources elsewhere. Video is cheap, cameras small. They owned it. A+

Video is not film. It's not worse. It's not better. It is different. Let it be different. Embrace it. If it's your medium, use it. If it's not, shoot film, which I love the hell out of. To some extent I think film schools are harmful in our era. They teach you the old way, which was great... but now we're in a faster moving age. Even Vine videos are a far more worthwhile medium than most gave them credit for being. A lot of good filmmaking in a given six seconds.

And horses for courses. I can see why JJ Abrams shot film on Star Wars. It made sense. It's nostalgic. I can see why a lot of art films are shot on 16mm. It's physical. As a viewer, I mostly far prefer it. But if digital is what inspired you to get into production, don't apologize. Shoot the hell out of it. It's its own thing. Your voice is your own.

Shoot video as video, not as an apology for film, and you've taken a step 95% of A-list DPs refuse to take. It clips. It wobbles. Love the wobble. Love the clip. Love the pause cut. Love the meme. Love the vloggers. Love Snapchat. Love Vine. Love what you do, whatever you're doing.

-

Again, I agree to disagree. I've never even seen stereo audio captured on set (always a 416 or Schoeps or something, with lavs, mixed for stereo and 5.1 after), but I understand that it's useful for some stuff. My experience is in narrative, but for ambient recordings I guess binaural is valuable. I listen to a lot of binaural recordings for music and they're great, and I wish more narrative film were mixed like that, actually.

I also strongly disagree about the value of ETTR except on a camera-by-camera and case-by-case basis. I think it benefits the Red, for instance, even to the point of using color filters when possible to expose each channel to the right, and fixing both white balance and exposure in post, assuming you want a "slick" commercial look. Whereas Rob Zombie's DP is a friend of a friend, and he intentionally exposes the Red at 3200 ISO to muddy it up, as he finds that look preferable to a post grain pass for "gritty" footage. And I just find the color vs saturation values and even the hue vs lum shifts (I know I'm talking in Resolve terminology and not camera terminology, but I stopped following camera tech closely after film was phased out) with most log colorspaces in particular to be inconsistent enough that (excepting maybe the Alexa) you're better off rating normally, or at the very least consistently, whereas ETTR is inconsistent by nature. Sony's F55 LUT threw me for a loop here in particular. And with inexpensive cameras that shoot 8 bit, ETTR is the surest way to generate banding, even in cameras that normally don't suffer from it.

That said, it's a case-by-case thing, but the cases where I would consider exposing with ETTR are vanishingly rare, especially because having your vfx artists work with inconsistently exposed plates and your colorist do so many transforms adds such a huge headache in post. I can see overexposing a stop or two consistently when possible, much as I would rate film 1/3 stop slower than it is, or using ETTR with particularly difficult exposures and fixing it in post rather than bracketing and comping or something. But while I disagree strongly on both of those points, I strongly agree that it's valuable having and sharing different viewpoints. Readers will see whose goals mirror their own, and structure their needs around those who have similar goals and who have found effective ways to deal with them. So I think your post is really valuable; I'm just offering a counterpoint from someone with a different perspective, one that's more rooted in narrative I assume, for better or worse. I think I have more concern for "good enough" than for "good," so for me ETTR isn't worth the hassle, but for others it definitely will be. (I'm also extremely lazy, and that's a huge factor in my workflows, often the biggest one.)

-

I couldn't disagree much more strongly. That kind of rigging takes away everything good about a dSLR and adds nothing but headaches. The only exposure aid I need is my 758Cine (heck, with zebras I don't even need the spot meter), and the only sound I would use from an on-camera mic would be for syncing dual system sound. Even with the best pre-amps in the world, your mic is still in the wrong place if it's on-camera. I can see the handle or a small cage being useful for balance, or maybe a loupe being helpful in daylight, but beyond that I don't see the point.

That said, not everyone agrees with me! If it works for you, it works for you. As I mentioned before, a union AC I worked with did the same thing with a C300 when shooting a Super Bowl ad, rigging it out like crazy... and it is not designed to be rigged out. I asked what the point was when the ergonomics are great (for me) out of the box, but apparently the operator wanted a large 435-like form factor, as it was what he was used to, having a film background. He wanted the thing to weigh 50 pounds. But for me the smallest rig is the best one. If I wanted to weigh it down I'd tape a barbell to it.

I do often get asked to use a matte box or something so clients and insecure actors will feel like the small camera is a real "cinema camera," but nothing irritates me more than this request. "Is that a real camera?" "Yes. Are you a real actor? If so, it'll show in your performance. If it's a real camera, it'll show in the footage." But when I get that request it doesn't even reflect on the camera, it reflects on me. If you think you know more about my job than I do, you've already lost confidence in me just by asking for a larger camera. Project your insecurities elsewhere. I'm doing my job well; worry about doing the same. Ugg... actors. So insecure it even rubs off on the camera department.

I'm not saying I completely disagree, though. The Blackmagic cameras, for instance, have such poor ergonomics that they need to be rigged up. Just offering a dissenting opinion. Each will have his or her own preferences. (And for mirrorless still cameras like the GH5 and A7S I do think the ergonomics are so poor that they often benefit from having a small cage and an HDMI clamp, but beyond that I don't see the point.)

-

Is Sustainable Independent Filmmaking Possible?

HockeyFan12 replied to Jonesy Jones's topic in Cameras

I think it's very possible, but it's difficult. I think Rocket Jump has the closest thing to a good model that there is. They are absolutely cleaning up. But at the beginning, when you're small, it's going to be very hard to monetize. If you can move from a day job with freelance supplementing it, to freelance with creative work supplementing it, to creative work paying the bills, to creative work making you rich... then you just lived the American dream. If you have a vision, go for it. I have no advice except to look to Rocket Jump, and to only do it if you have a vision and you're willing to fail but so desperate to succeed you don't worry about setbacks and failures. Look to Patreon and YouTube channels, too. And let me know what you find out! And start small. Gall's law is truth!

-

how to simulate the original "shake" of the old movies shot on film?

HockeyFan12 replied to Dan Wake's topic in Cameras

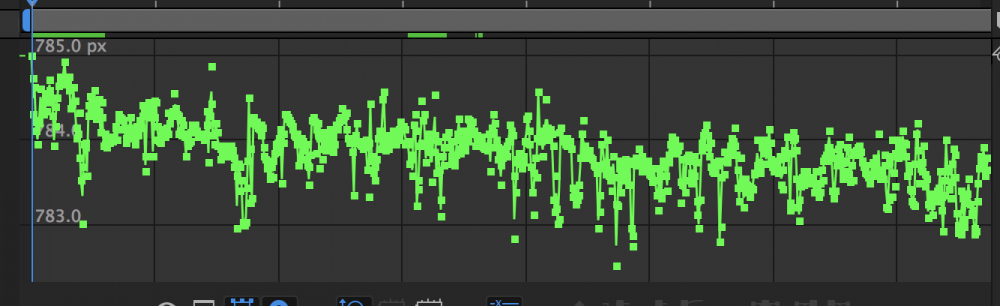

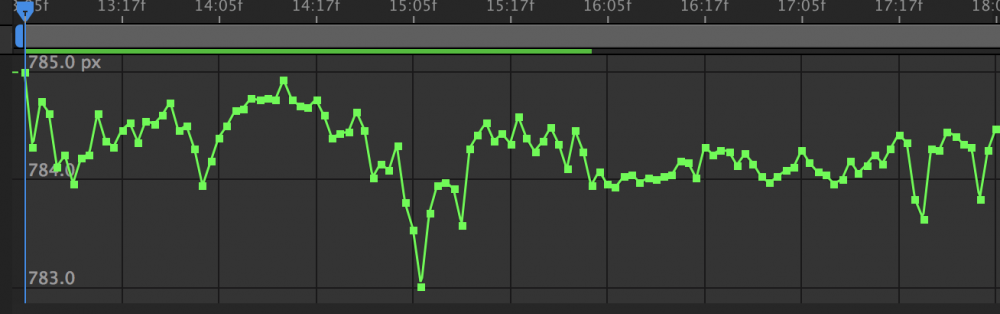

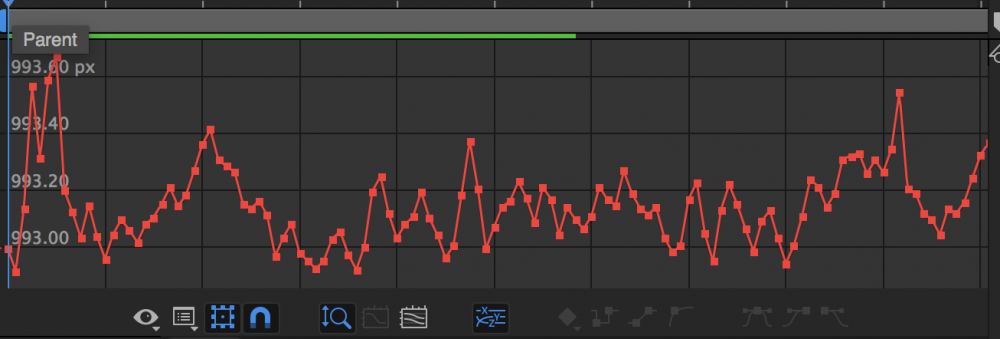

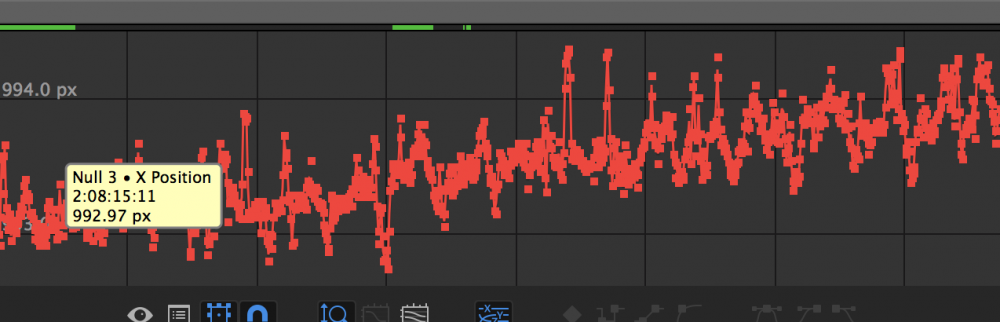

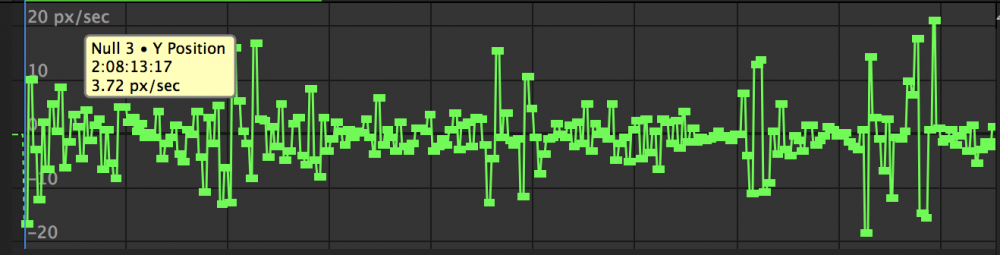

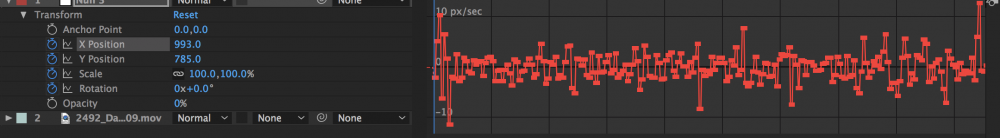

Yes, exactly. The power consumption is massive. It is actually the cooling that contributes more to the power consumption than the display itself. As LED efficiency improves, the need for cooling should decrease. But I think we've hit an asymptote, or are closing in on one, as regards LED efficiency, so I suspect that consumer HDR will still get really crazy good but it won't reach the mind-blowing levels that made me want to immediately sell my Panny plasma... However, on a small enough screen (on an AR or VR device) I think a true HDR image might be viable. The closer we get the screen to the eye, the less total light output we need for the same brightness, and dramatically so: for the same field of view, the screen's area shrinks with the square of its distance from the eye. That's why HDR tech in cinemas isn't great. And it won't be. True HDR (10,000 nit plus) really is incredible, powerful, and immersive tech. As is VR... which has a tiny screen... Just saying...

As regards the original question, I'm working with a high quality 16mm scan now. I love film, but I have to say the headache is huge. There is gate weave, but it's very subtle. I can't see it at 100%, but when I zoom in it's like ugg... so I need to do a position track on every lock off! I think most people here would be surprised by how incredibly subtle it is. The Red Giant plug-in is about 100X too strong. Whether you want to emulate it or not is up to you, but it's crazy subtle. I would like to come up with a procedural After Effects expression, but the shape of the gate weave is difficult to translate into an equation. Bizarrely, the Y weave is slightly greater than the X weave, and it doesn't seem to correlate with it at all. Same frequency, greater quantity... I think. At first glance, the two dimensions seem to move pretty independently? Note that those are speed and not position graphs, but I think they illustrate gate weave in a clearer way. Value graphs seem to show multiple frequencies, even more chaotic than the speed graph. I do think a procedural option is viable, but it's more than a simple wiggle expression.
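Here is a rough sketch of what a procedural weave might look like, in Python rather than as an After Effects expression: a sum of a few slow sine waves with random phases, using the same periods on both axes but independent phases per axis and a slightly larger Y amplitude, per the observations above. Every number in it (amplitudes in pixels, periods in frames, weights) is a hypothetical placeholder to tune against a real scan, not something measured.

```python
import math
import random

def gate_weave(frames, seed=0, x_amp=0.15, y_amp=0.2, periods=(97.0, 31.0, 7.3)):
    """Return a list of per-frame (dx, dy) offsets in pixels.

    HYPOTHETICAL parameters: x_amp / y_amp (pixels) and periods (frames) are
    placeholders to tune by eye against a real scan, not measured values.
    Both axes share the same periods, but each axis gets its own random
    phases, so X and Y move independently; Y gets the larger amplitude.
    """
    rng = random.Random(seed)  # seeded so the weave is repeatable between renders
    phases_x = [rng.uniform(0, 2 * math.pi) for _ in periods]
    phases_y = [rng.uniform(0, 2 * math.pi) for _ in periods]
    weights = [1.0 / (i + 1) for i in range(len(periods))]  # slow drift dominates
    norm = sum(weights)  # keeps |offset| <= amplitude

    def axis(t, phases):
        return sum(w * math.sin(2 * math.pi * t / p + ph)
                   for w, p, ph in zip(weights, periods, phases)) / norm

    return [(x_amp * axis(t, phases_x), y_amp * axis(t, phases_y)) for t in range(frames)]

weave = gate_weave(48)
# Frame-to-frame differences correspond to the "speed" view of the weave.
speeds = [(b[0] - a[0], b[1] - a[1]) for a, b in zip(weave, weave[1:])]
```

Apply the offsets as a per-frame translation of the plate. Swapping the sines for smoothed random noise would be an equally reasonable design choice if a real scan shows no repeating periods.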
Also, grain is interesting. It is indeed per-channel. And highlights never blow out, but when they even get close they have a beautiful orange glow. That's difficult to emulate in post without a lot of work, so mind your highlights. As regards grain, a b&w overlay doesn't do the trick. You need to regrain each channel. With digital, the green channel has the finest grain texture, then red and blue have bigger grain texture and more grain, too. With film, it seems the size of grain is more consistent between channels (makes sense when you consider three equal layers vs Bayer sensors favoring green), but the quantity is still greater in the blue channel. There doesn't seem to be any correlation between the grain pattern in each channel, though. It looks like three random, really nice grain textures. So you should offset the time of your film grain scan to regrain each channel, imo; they don't seem correlated at all. And just boost the blue grain's opacity relative to the others.
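A minimal sketch of that per-channel regrain idea in plain Python: each channel pulls its grain from a different, time-offset frame of the same grain plate, and blue gets a higher opacity. The noise "plate" here is a hypothetical stand-in for a real grain scan, and the offsets and opacities are placeholder values, not measurements.

```python
import random

def make_grain_plate(frames, height, width, seed=0):
    """HYPOTHETICAL stand-in for a scanned grain plate: zero-mean noise frames.

    In practice you would load a real film grain scan here.
    """
    rng = random.Random(seed)
    return [[[rng.uniform(-1.0, 1.0) for _ in range(width)] for _ in range(height)]
            for _ in range(frames)]

def regrain_frame(rgb, t, plate, offsets=(0, 7, 13), opacities=(0.04, 0.04, 0.06)):
    """Add grain to frame t, pulling each channel from a different plate frame.

    rgb: height x width list of (r, g, b) floats in [0, 1].
    offsets: per-channel frame offsets into the plate (placeholders), which
        decorrelate the R, G and B grain patterns.
    opacities: per-channel grain strength (placeholders); blue is boosted.
    """
    n = len(plate)
    out = []
    for y, row in enumerate(rgb):
        new_row = []
        for x, px in enumerate(row):
            new_row.append(tuple(
                min(1.0, max(0.0, px[c] + opacities[c] * plate[(t + offsets[c]) % n][y][x]))
                for c in range(3)
            ))
        out.append(new_row)
    return out

plate = make_grain_plate(24, 4, 4)
grey = [[(0.5, 0.5, 0.5)] * 4 for _ in range(4)]
grained = regrain_frame(grey, 0, plate)
```

A simple additive blend is used here for clarity; an overlay or soft light blend would be a reasonable alternative in a compositing app.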

Tbh I kind of hope they do.

-

Can you tap to focus with the wifi app? This would make a great Ronin M B cam for the C-series except wireless FFs are a pain...

-

Looks good! Of course f1.4 makes a big difference, doesn't it... How did you find the AF on the XC10? I've found that the DJI focus is a brilliant product, but getting it and a cinema camera and everything rigged up, and getting your AC set up with a wireless monitor system, is such a pain. There are two kinds of Ronin shots I shoot, mostly: following someone from behind, and pushing in on their face from the front. The face stuff I bet the XC10 could handle... but can you follow someone from behind okay in AF? The AF examples I see online look a bit dodgy, but I haven't seen many. The focus is deep, at least, I guess...