
Everything posted by kye

-

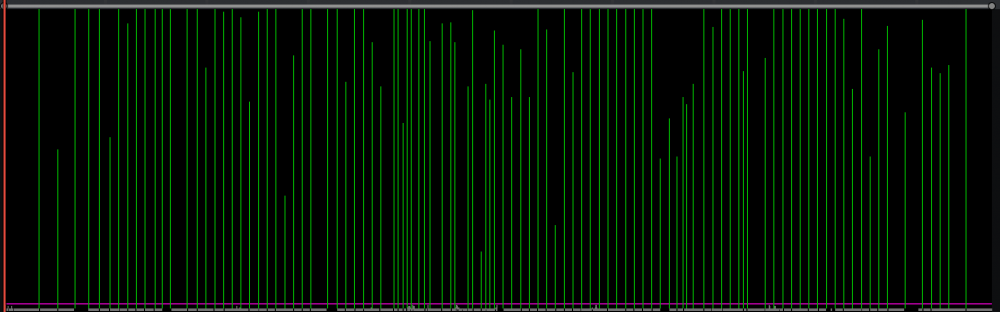

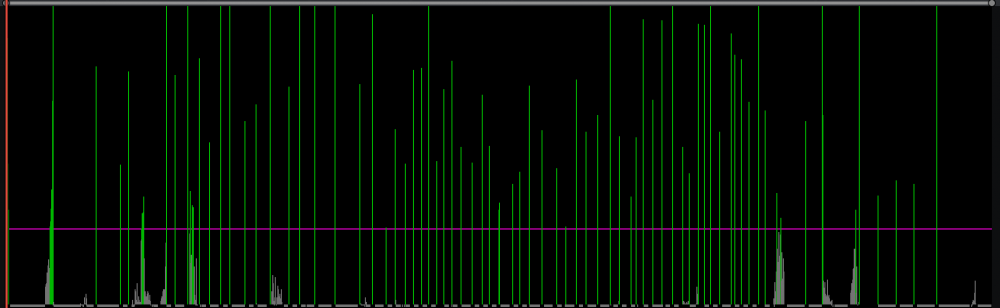

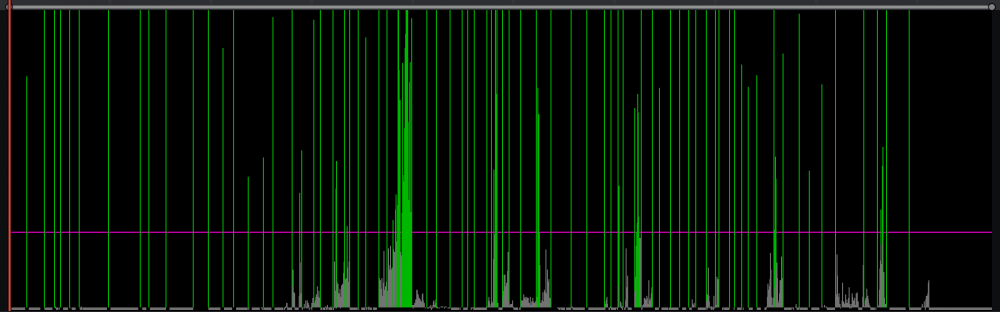

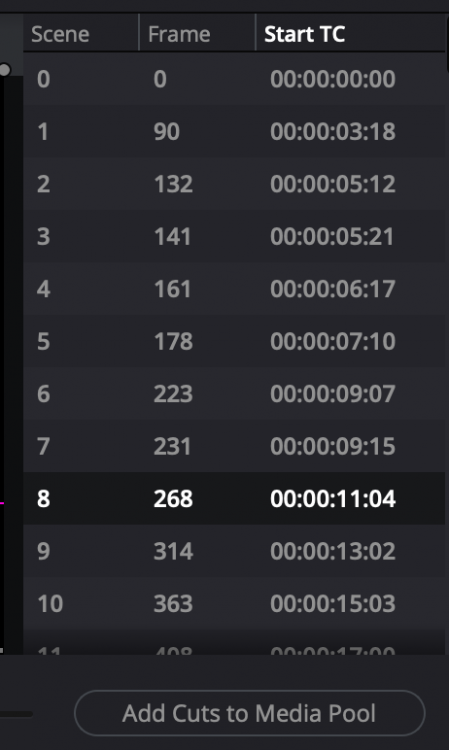

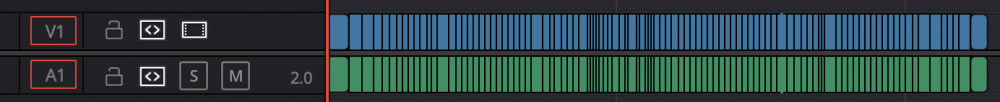

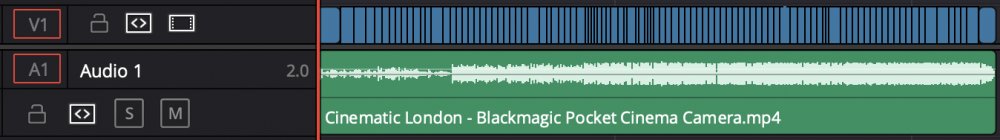

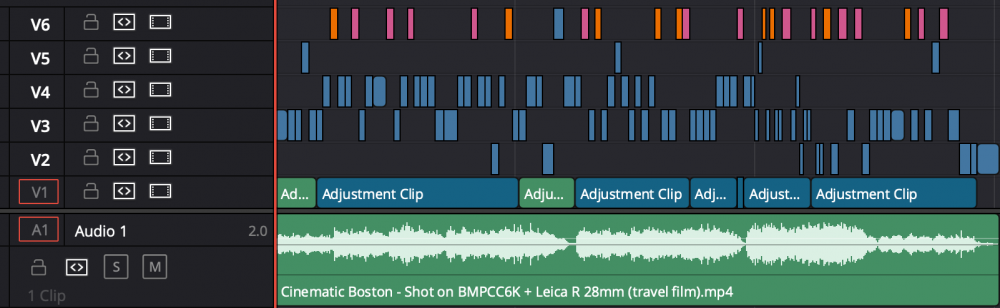

As I gradually get more serious about learning the art of editing, I've discovered it's a very under-represented topic on social media. There are definitely some good resources, but compared to cinematography or colour grading, they're much more difficult to find, especially if your interest isn't purely narrative film-making. A good strategy is to search for editors by name, as often the good stuff is just called "<name> presents at <event>" with no mention of editing or even film at all. However, you can search for editor after editor and find nothing useful at all.

As such, I've now started analysing other people's edits directly, hoping to glean interesting things from their work. My process is this.

Step 1: Download the video in a format that Resolve can read

I use 4K Video Downloader for Mac, but there are tonnes of options. You're probably violating terms of service by doing this, so beware.

Step 2: Use the Scene Cut Detection feature in Resolve

Resolve has this amazing function that not many people know about. It analyses the video frame-by-frame and tries to guess where the cut points are by how visually different one frame is from the previous one. It's designed for colourists to be able to chop up shots when given a single file with the shots all back-to-back. This isn't a tutorial on how to use it (the manual is excellent for this) but even this tool shows useful things.

Once it has analysed the video, it gives you the window to review and edit the cut points. Here's a window showing a travel video from Matteo Bertoli:

What we can see here is that the video has very clear cuts (the taller the line the more change between frames) and they occur at very regular intervals (he's editing to the music), but that there are periods where the timing is different.

Let's contrast that with the trailer for Mindhunter:

We can see that there's more variation in pacing, and more gradual transitions between faster and slower cutting.
Also, there are these bursts, which indicate fading in and out, which is used throughout the trailer. These require some work to clean up before importing the shots to the project.

Lastly, this is the RED Komodo promo video with Jason Momoa and the bikers:

There are obviously a lot of clean edits, but the bursts in this case are shots with lots of movement, as this trailer has some action-filled and dynamic camera work.

I find this tool very useful to see pace and timing and overall structure of a video. I haven't used it yet on things longer than 10 minutes, so not sure how it would go in those instances, but you can zoom in and scroll in this view, so presumably you could find a useful scale and scroll through, seeing what you see.

This tool creates a list of shots, and gives a magic button... Then you get the individual shots in your media pool.

Step 3: "Recreation" of the timeline

From there you can pull those shots into the timeline, which looks like this:

However, this wouldn't have been how they would have edited it, and for educational purposes we can do better.

I like to start by manually chopping up the audio independently from the video (the Scene Cut Detection tool is visual-only after all). For this you would pull in sections of music, maybe sections of interviews, speeches, or ambient soundscapes as individual clips. If there are speeches overlapping with music then you could duplicate these, with one track showing the music and another showing the speeches. Remember, this timeline doesn't have to play perfectly, it's for studying the edit they made by trying to replicate the relevant details.

This travel video had one music track and no foley, so I'd just represent it like this:

I've expanded the height of the audio track as with this type of music-driven edit, the swells of the music are a significant structural component to the edit.
It's immediately obvious, even in such a basic deconstruction, that the pace of editing changes each time the music picks up in intensity, that once it's at its highest the pace of editing stays relatively stable and regular, and then at the end the pace gradually slows down. Even just visually we can see the structure of the story and journey that the video takes through its edit. But, we can do more.

Wouldn't it be great to be able to see where certain techniques were used? Framing, subject matter, scenes, etc etc? We can represent these visually, through layers and colour coding and other techniques.

Here is my breakdown of another Matteo video:

Here's what I've done:

- V6 are the "hero" shots of the edit. Shots in orange are where either Matteo or his wife (the heroes of the travel video) are the subject of the video, and pink are close-ups of them
- V5 is where either Matteo or his wife are in the shot, but it doesn't feature them so prominently. IIRC these examples are closeup shots of Matteo's wife holding her phone, or one of them featured non-prominently in the frame, perhaps not even facing camera
- V4 and below do not feature our heroes...
- V4 either features random people (it's a travel video so people are an important subject) prominently enough to distinguish individuals, or features very significant inanimate objects
- V3 features people at a significant enough distance to not really notice individuals, or interesting inanimate objects (buildings etc)
- V2 are super-wide shots with no details of people (wides of the city skyline, water reflections on a river, etc)
- V1 is where I've put in dummy clips to categorise "scenes", and in this case Green is travel sections shot in transport or of transport, and Blue is shots at a location

V2-V6 are my current working theory of how to edit a travel film, and represent a sort of ranking where closeups of your heroes are the most interesting and anonymous b-roll is the least interesting.
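
Once shots are sorted onto tracks like this, the breakdown can also be tallied outside the NLE. The following is only a rough sketch of that idea: the shot list, the durations and the function names are made up for illustration and are not taken from the actual timeline. It adds up screen time per track so you can see how much of the edit each category gets.

```python
from collections import defaultdict

# Hypothetical shot list for illustration: (track, colour, duration in seconds).
# None of these values come from the actual breakdown.
SHOTS = [
    ("V6", "orange", 2.0),
    ("V2", None, 1.5),
    ("V6", "pink", 1.0),
    ("V4", None, 2.5),
    ("V3", None, 1.0),
]


def screen_time_by_track(shots):
    """Sum the duration of all shots on each track."""
    totals = defaultdict(float)
    for track, _colour, duration in shots:
        totals[track] += duration
    return dict(totals)


def share_by_track(shots):
    """Express each track's screen time as a fraction of the total."""
    totals = screen_time_by_track(shots)
    overall = sum(totals.values())
    return {track: seconds / overall for track, seconds in totals.items()}


totals = screen_time_by_track(SHOTS)
shares = share_by_track(SHOTS)
for track in sorted(totals, reverse=True):
    print(f"{track}: {totals[track]:.1f}s ({shares[track]:.0%})")
```

The same tally works for any other categorisation (colour, lens, scene) by grouping on a different field of the tuple.
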
You should adapt this to be whatever you're interested in. You could categorise shots based on composition, which characters are in the shot, which lens was used, if there was movement in the shot, if there was dialogue from the person in-shot or dialogue from the person not-in-shot or no dialogue at all, etc etc.

Remember you can sort between tracks, you can colour code, and probably other things I haven't yet tried. NLEs have lots of visual features so go nuts.

Step 4: Understand what the editor has done

Really this depends on what you're interested in learning, but I recommend the following approach:

- Make a list of questions or themes to pay attention to
- Focus on just one question / theme and review the whole timeline just looking at this one consideration

I find that it's easy to review an edit and every time you look at the start you notice one thing (eg, pacing), and then in the next section you notice another (eg, compositions), and then at the end you notice a third (eg, camera movement). The problem with this is that every time you review the video you're only going to think of those things at those times, which means that although you've seen the pacing at the start you're not going to be noticing the compositions and camera movement at the start, or other factors at other times. This is why focusing on one question or one theme at a time is so powerful: it forces you to notice things that aren't the most obvious.

Step 5: Look for patterns

We have all likely read about how in many films different characters have different music - their "theme". Star Wars is the classic one, of course, with Darth Vader's theme being iconic. This is just using a certain song for a certain character.

There are an almost infinite number of other potential relationships that an editor could be paying attention to, but because we can't just ask them, we have to try and notice them for ourselves. Does the editor tend to use a certain pacing for a certain subject?
Colour grade for locations (almost definitely, but study them and see what you can learn)? Combinations of shots?

What about the edit points themselves? If it's a narrative, does the editor cut some characters off, cutting to another shot while they're still talking, or immediately after they've stopped speaking, rather than lingering on them for longer? Do certain characters get a lot of J cuts? Do certain characters get more than their fair share of reaction shots (typically the main characters would, as we care more about what main characters think than what secondary characters feel while they're talking)?

On certain pivotal scenes or moments, watch the footage back very slowly and see what you can see. Even stepping through frame-by-frame can be revealing and potentially illuminate invisible cuts or other small tweaks. Changing the timing of an edit point by even a single frame can make a non-trivial aesthetic difference.

Step 6: Optional - Change the edit

Change the timing of edits and see what happens. In Resolve the Scene Cut Detection doesn't include any extra frames, so you can't slide edit points the way you normally would be able to when working with the real source footage, but if you pull in the whole video into a track underneath the individual clips you can sometimes rearrange clips to leave gaps and they're not that noticeable. This obviously won't create a publishable re-edit, but for the purposes of learning about the edit it can be useful.

You can change the order of the existing clips, you can shorten clips and change the timing, etc. You could even re-mix the whole edit if you wanted to, working within the context of a severely limited set of "source footage" of course, but considering that the purpose of this is to learn and understand, it's worth considering.

Final thoughts

Is this a lot of work? Yes. But learning anything is hard work - the brain is lazy that way.
Also, this might be the only way to learn certain things about certain editors, as it seems that editors are much less public people than other roles in film-making.

One experiment I tried was instead of taking the time to chop up and categorise a film, I just watched it on repeat for the same amount of time. I watched a 3.5 minute travel film on repeat for about 45 minutes - something like a dozen times. I started watching it just taking it in and paying attention to what I noticed, then I started paying attention to how I felt in response to each shot, then to the timing of the shots (I clapped along to the music paying attention to the timing of edits - I was literally repeating out loud "cut - two - three - four"), I paid attention to the composition, to the subject, etc etc.

But, I realised that by the time I had watched a minute of footage I'd sort-of forgotten what happened 30 shots ago, so getting the big picture wasn't so easy, and when I chopped that film up, although I'd noticed some things, there were other things that stood out almost immediately that I hadn't noticed the dozen times I watched it, despite really paying attention.

Hopefully this is useful.
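
For anyone who wants to play with the idea behind Step 2 outside of Resolve, here is a rough sketch in Python. It is not a description of how Resolve's Scene Cut Detection works internally. It simply assumes you already have a per-frame "difference" score, treats any frame above a threshold as a cut, and then turns the cuts into shot lengths so the pacing can be looked at as numbers. The function names, the threshold and the sample scores are all made up for illustration.

```python
def find_cuts(diff_scores, threshold):
    """Return frame indices whose difference from the previous frame exceeds threshold.

    diff_scores[i] is a hypothetical measure of how different frame i is
    from frame i - 1. Index 0 has no previous frame, so it is ignored.
    """
    return [i for i, score in enumerate(diff_scores) if i > 0 and score > threshold]


def shot_lengths(cuts, total_frames, fps):
    """Convert cut positions (frame indices) into shot durations in seconds."""
    boundaries = [0] + list(cuts) + [total_frames]
    return [(end - start) / fps for start, end in zip(boundaries, boundaries[1:])]


# Made-up scores for a 12-frame clip with two obvious spikes.
scores = [0.0, 0.02, 0.03, 0.01, 0.90, 0.02, 0.04, 0.03, 0.02, 0.85, 0.01, 0.02]
cuts = find_cuts(scores, threshold=0.5)
lengths = shot_lengths(cuts, total_frames=len(scores), fps=24)
print(cuts)
print([round(seconds, 3) for seconds in lengths])
```

A single fixed threshold like this is the simplest possible choice. Fades and shots with lots of movement produce runs of medium-sized scores rather than one clean spike, which is presumably why those sections show up as bursts and need manual clean-up.
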

-

@webrunner5 makes good points. The resale value of tech is minimal. I also have an XC10 that lies unused and I should sell, but I have accepted that I will only recoup a small percentage of what I paid for it. In terms of what you should buy, it really is dependent on what you shoot, how you shoot it, and what you're planning to do with the images. Lots of knowledgeable people here willing to give you advice, but the more you can tell us about your needs the more useful and tailored that advice will be.

-

The way that people behave online (both good and bad) is predictable if you understand basic psychology, inter-personal communication channels, and the current way that the internet is designed. History tells us that technology always comes before our ability to understand and manage it, so anything new wreaks havoc before we gradually work it out and restore some degree of balance. The acceleration of technology means that there are more things expanding unchecked than ever before, and it can be a rough ride. Times are also tough during COVID, when people are more likely to be stressed and venting online, rather than being calm and forgiving. A good strategy is to monitor what you do in life and pay attention to those things that make you feel better about yourself and those that don't, and then moderate or remove those negative influences. Ultimately we're all battling the temptations of our more base instincts... Do I eat salad or a burger? Do I read a book or scroll social media? Do I spend time with people or watch TV? Etc etc.

-

I'd also really like to have more visible markers, but I'm not aware of any way to adjust them. I have previously looked through all the menus, but didn't find anything. Maybe @Andrew Reid can shed some light?

-

As @TomTheDP said, it's about the bitrate not the resolution. Here's a test I did to see what is visible once it's gone through the streaming compression.

The structure of the test was that I took 8K RAW footage (RED Helium IIRC) and exported it as Prores HQ in different resolutions and then put them all onto a 4K timeline and uploaded to YT. This simulates shooting in different resolutions but uploading them all at 4K with an identical bitrate, so removes the streaming bitrates from the equation.

I really can't tell any meaningful difference until around 2K when watching it on YT. The file I uploaded to YT fares slightly better, but even that was compressed, and a 4K RAW export would likely be more resolving still.

Scenario 1: The resolution wars

- Manufacturers increase the resolution of their cameras to entice people to buy them
- File sizes go through the roof, requiring thousands of dollars of equipment upgrades to store and process the footage
- Features like IBIS, connectivity, colour science, reliability, take a back seat
- Shooting is somewhat frustrating, the post process more demanding, upload times gruelling
- We watch everything through streaming services where the compression obliterates the effects of the extra resolution (see the above test) and things like the colour are on full display

Scenario 2: The quality wars

- Manufacturers increase the quality of their cameras by going all-in on features like great-performing IBIS, full-connectivity, almost unimaginably good colour science, and solid reliability to entice people to buy them
- File sizes stay manageable without requiring expensive upgrades
- Shooting is easy and the equipment convenient and pleasant to use, the post process is smooth, upload times are reasonable
- We watch everything through streaming services where the resolution is appropriate to the compression and things like the colour are on full display, creating a wonderful image regardless of how the images are viewed

The first is the business model of the mid-tier cameras - huge resolutions and basic flaws. The second is the business model of the high-end companies like ARRI and RED. The OG Alexa and Amira are still in demand because the second scenario is still in demand. Shoot with an Amira and while the images aren't super-big-resolution, the equipment is reliable with great features and the quality of the resolution you do have is wonderful.

When I went 4K I was talking about future proofing my images, but I eventually worked out that the logic is faulty. I shoot my own family, so I could make the argument that my content is actually far less disposable than commercial projects but resolution doesn't matter in that context either, and even if it did the difference between 2K and 8K will be insignificant when compared with the 500K VR multi-spectral holographic freaking-who-knows-what they'll have at that point.

I regularly watch content on streaming services that was shot in SD 4:3 - oh the horror! Oh, but hang on, you hit play and the image quality instantly reveals itself, sometimes even showing glitches from the tape it was digitised from, but then someone starts talking and instantly you're thinking about what they're saying and what it means, and the 0.7K resolution and 4:3 aspect ratio disappears.

Well said. It's about the outcome and understanding the end goal.

I was hoping that by posting a bunch of real images that it would put things into perspective and people would understand that resolutions above 4K (and maybe above 2K) really aren't adding much to the image, but come at the expense of improving other things that matter more.
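
To put rough numbers on how quickly the pixel count grows with each step up in resolution, here is a small worked calculation. The frame sizes are an assumption (DCI-style dimensions; other definitions of "2K", "4K" and "8K" exist), and the names in the code are only illustrative. Uncompressed data per frame scales with pixel count, while real file sizes also depend on the codec and bitrate, so treat this as an indication of scale only.

```python
# Assumed DCI-style frame sizes; other definitions of "2K", "4K" and "8K" exist.
RESOLUTIONS = {
    "2K": (2048, 1080),
    "4K": (4096, 2160),
    "8K": (8192, 4320),
}


def pixel_count(name):
    """Number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def relative_to_2k(name):
    """How many times more pixels per frame than the assumed 2K frame."""
    return pixel_count(name) / pixel_count("2K")


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels, {relative_to_2k(name):.0f}x the pixels of 2K")
```

Each doubling of the "K" number quadruples the pixels per frame, so under these assumptions an 8K frame carries 16 times as many pixels as a 2K frame.
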

-

It does seem like a long time. @Grimor - how hot are the bags when they're finished in the microwave?

-

It will be very interesting to see. Who knows how much of a limiting factor their sensor has been up until now. Obviously it's excellent, but it's also positively ancient in tech terms.

-

I shoot travel, run and gun, guerrilla style, in available light, no re-takes, for personal projects, but within that context, I've done both. I have a target aesthetic that I want to achieve with my work and I know it mostly up-front, although the location does influence it. The quality of the light is one thing I try to capture and emphasise in post, for example. I own all my own equipment, but am pushing it to the limits and sometimes beyond. I'm consistently trying new equipment, techniques, and balancing various trade-offs. For example, with my GH5, I traded auto-focus capability for 10-bit internal files. This means I am manually focusing live in unpredictable environments. I have ordered a couple of lenses even this week to trial, and will sell a few pieces of gear once I've fully tested them. I have no control over what I shoot, but absolutely want to create continuity in the edit between days and locations. In addition to this, I mix footage from three types of cameras across 3 different brands, and shoot in different frame rates which have different codecs. These all have to match in the edit and are sometimes intercut. I haven't been in a colour suite in a professional sense as I do everything and am the client as well as entire production team, but I can colour grade fast. I've taught myself to colour grade by essentially making myself study. A senior colourist told me years ago that there is no shortcut, and that "it takes 10 years to get a decade of experience". I took that to heart. One exercise I did, which is relevant to your question, was I pulled in about 200 random shots (representing a ~10 minute edit) across all the footage I have, including over a dozen cameras and dozens of locations, and then graded it under a time constraint, perhaps equating to something like 30s per shot. I did this exercise many times. The first "set" of times I did this, I did the entire grade using only a single tool. 
I did the whole thing using only the LGG wheels, then only the contrast/pivot/offset controls, then only curves. I can't remember if there were others - I can't think of any 🙂 Then I looked at the footage and studied what I liked about each look. How well the footage matched, how easy they were to use, etc. Then I did the exercise again, using whatever tools I wanted. I studied the results again. Then I did it again, this time with a target look. I did warm, dreamy, vintage, modern, etc etc. Studied the footage again.

That really covers both 1 and 2, because I've graded as many different types of footage as I can get my hands on, including my own footage as well as downloaded footage, both from the manufacturers themselves, as well as independent cinematographers uploading source footage for people to play with.

I don't post much of my own work here because it's of my family and friends who mostly don't want to be shared publicly online to people they don't know. I do make de-personalised versions of the edits sometimes, but TBH the last thing I want to do once I've finished an edit is to pull out the shots featuring the people I know and then re-cut the whole thing again. I've posted 35 videos to YT since Jan 2021, but almost all are private. They are a mixture of finished projects, equipment tests and colour grading tests. But just for fun, here's one I quite like:

No actually, someone else said it, another person agreed, and I mentioned it. My comments were reserved to Canon's compressed codecs, not their RAW files.

Obviously colour is a matter of perception, taste, but it's also a matter of culture. Colour grading in other countries is often done quite differently, if you're not aware of this then do a bit of searching - it's quite an interesting topic.
I could say the same line back to you - if you're not seeing the difference between Canon colour science and ARRI colour science then "That statement alone kind of disqualifies you from any serious color science debate"!!!!

Of course, this kind of "I hereby declare that your opinion isn't valid because it isn't the same as mine" is the challenge with these conversations, and sadly, it's normally the fanatics that want to cancel you because you have a more nuanced opinion.

"You don't think Canon is perfect? Well, you obviously failed second grade math and were locked out of home by your parents - be gone heathen!"

"You think we don't need 24K? Well, you obviously don't understand film-making, and progress is progress so get out of the way, and don't you know that there was once a situation where that came in handy, and don't you know that your computer will be able to handle those files in the year 2048 anyway... so, how about that new lens that is so optically perfect the factory keeps muddling up the lens housings for the finished lenses lol lol lol".

At the end of the day, I just see that the equation doesn't add up... people are watching 2K masters shot on vintage lenses in cinemas, but if you question why the average Canon user needs 8K then somehow "it's the future" is meant to mean something, but I look at the average image and resolution above 4K is about as relevant to creating them as the colour of the tripod was on set.

-

In a sense there are two fundamental approaches, and two fundamental looks.

The first combination is to get an organic look by doing it in camera. This used to be the only option before the VFX tools really became available, and would involve choosing the production design / lighting / filters / lenses / camera / codec to be the "optimal" combination to render the aesthetic. It's still the default approach and will be for a long time to come I would suggest. This is really the scenario that we're talking about here, and where I question the "more is more" mentality that people have, because it just doesn't line up with the images above. Sure, you can shoot a vintage zoom lens on a 12K camera, but if your target aesthetic is the 1940s then the lens won't resolve that much, and so there's no advantage to the resolution over shooting in 2K Prores, but you'll pay for the resolution choice with storage costs and media management and processing time.

The second combination is to get an organic look by doing it in post. This is really only feasible recently due to the availability of VFX tools, and would involve choosing an authentic production design / lighting but using filters / lenses / camera / codec to be the highest optical resolution possible, so that the target aesthetic can be created in post. This is the scenario of Mindhunter, and is what I would call a specialist VFX application. The advantage is that you can control the look more heavily, but it's a lot of work. This is why the UMP12K exists.

The last combination is to get as clinical / modern a look as possible. This will require the most resolution and highest resolving lenses possible. This creates soul-less images and probably none of us here are interested in this.

So the two fundamental approaches are "cameras and lenses should be neutral and technically perfect" and the other approach is "cameras and lenses should be pleasing and technically imperfect, in the ways that I choose".
You keep saying I have a black and white view, but I'm not sure what the third option would be - some people want "the right amount" and other people want "more more more more more more more". I would imagine that this will eventually plateau and those people might say "enough already" when we're at 200K or something stupid, but in the meantime it just gets less and less practical for the majority of people. ....and yes, I do believe that most people don't want more pixels, but are forced to buy cameras with more pixels in order to get the minor upgrades to the things that they actually care about.

-

AF I can live without, but I rely heavily on IBIS for the situations I shoot in because I use unstabilised manual primes, often vintage.

The Komodo is absolutely a better image, no doubt. I really wish it worked for how I shoot; despite the used-car prices, I'd be super interested. I can't say I'm that enamoured with the cropping it does for higher frame rates though.

GH5 battery life is fine.

In a sense, I'm looking for:

- Colour science on the level of BM 2012 (sadly still limited to BM 2012 and pro-level cinema cameras)
- The IBIS, practicality and reliability of GH5 2017
- An EVF with decent focus-peaking
- Prores downsampled from full sensor resolution

I wouldn't say no to dual-gain ISO either, but I get by with fast primes so it's not a deal breaker. Essentially it's a GH5 with the colour science and codec from BM 2012.

-

Lens emulation is essentially a VFX operation, so happy to acknowledge that extra resolution is useful in that case.

In terms of how much I've read that article, my answer is that I've read that article more than you have. This is because I have attempted lens emulations in post myself, quite a lot actually, and that article (and the excellent accompanying video which shows the node tree in Baselight) was one of the rare examples I could find of someone talking about the subject in really any useful detail at all. I've read it many times, watched the video many times, taking notes and following along as I went, experimenting and learning. Studying.

It's a fascinating topic, and quite a controversial one judging from the response I got on another forum when I started a discussion around it. I won't link to it out of courtesy to Andrew, but googling it should yield results if you're curious - the ILM guy spoke about how they go about emulating lenses for matching composites in VFX and even shared a power grade he mocked up for the discussion, very generous and always fascinating to get to see the workings of people with deep expertise.

You still haven't pointed out a shot in the above that requires high resolution, except for the one VFX example I included.

I'm obsessed with colour science in this discussion because it's the weak link of cameras, and they're not really improving it (don't confuse change with progress). Canon can add as many versions as they like to their colour science, but the R3 RAW images were the spitting image of 5D3 ML RAW files, which I see quite regularly. That level of colour science was very good in 2012, but still isn't ARRI 2012, let alone ARRI 2022.

Sony have greatly improved their colour science, absolutely. They took it from "WTF" to "Canon 2012." It's an improvement to their previous cameras, but doesn't surpass what I've been able to buy all along from other brands.
BM changed sensors from Fairchild to Sony, which was a large step backwards, and have improved their colour science up to version 5, which is now about the same level as Canon 2012. Still not like the images from the Fairchild sensor.

Your point about people watching on iPads reinforces my argument for colour science - the smaller the image is the less resolution matters, but it doesn't diminish the importance of colour science.

10-bit LOG and RAW is great. The best cameras in 2012 had RAW, and 10-bit Prores which is aesthetically nicer than h264/5. No improvement there, just a decade of manufacturers playing catchup.

It's an interesting statement that recent mirrorless can compete or match ARRI or RED. My grading experience with the files says no, but ok.

My grading skills, yes, let's get personal! You are ABSOLUTELY right that the weak link is my colour grading skills. Absolutely. If I had the total cumulative colour grading knowledge that ARRI have devoted to colour science, my grades would be as good as ARRI. Yes, that's definitely true. The problem is that there's no-one on earth who can claim that.

Are you familiar with the path that ARRI have taken with colour science and the involvement and experience of Glenn Kennel? If not, it's a fascinating topic to read more about. Colour and Mastering for Digital Cinema by Glenn Kennel is hugely useful, and the chapter on film emulation is spectacular and was a revelation, and released several years prior to the first Alexa. ARRI have probably devoted 100+ years of expert development into their colour science since then.

So when someone points an Alexa at something and records a scene, then takes it into post and applies the ARRI LUT, they're bringing hundreds of years of colour science development to bear. And no, when someone shoots a scene with a Canon camera and then I bring the footage into post and apply the Canon LUT, my skills are not sufficient to bridge the difference. That's the job of the manufacturers.
But when I pull in Alexa 2012 footage and Canon 2021 footage into Resolve, apply the manufacturers' LUTs, the Canon 2021 doesn't look as nice as the Alexa 2012. Like I've heard the colourists say many times, they can't work miracles, so unless you're shooting with the best cameras (which they mostly specify as ARRI and RED) you can't expect them to deliver the best images.

Yes, that's right. The resolution doesn't really matter unless you're doing VFX, which lens emulation in post is definitely an example of. It's 2D VFX instead of 3D VFX, but it's an effect, and it's digital.

Not sure what your point is here. My point is that higher resolutions are a niche thing, and I wish that the manufacturers would focus on things like colour science, which isn't a niche application and applies to all images. Sadly, the resolution-obsessed videographers keep buying the manufacturers' BS so the manufacturers aren't encouraged to actually make progress that applies to everyone.

-

I'd prefer to use the BM box - I don't suppose Apple have allowed there to be an HDMI-in adapter for the iPad yet? I'm not holding my breath though...

Also, do you know of anyone using the iPad as a dedicated viewer for editing? I'm keen to hear if people find it large enough. Walter Murch famously puts little silhouettes of people on the side of his viewing monitor to remind him of the scale of the screen in a cinema:

This helps him keep perspective on what the viewing experience is like for an audience and inform decisions about how much is required and when something will be good enough.

Obviously I'm not editing for cinema, and according to @Django people watch videos on my non-existent 7" iPad, but I'd like to have the screen big enough to ensure things are visible. I have a tiny 3.5" monitor for the BMMCC, but I'm thinking that isn't large enough(!) so wondering what is a sensible minimum size.

-

They're both FF aren't they? The ARRI website lists the ALEXA Mini (not LF) as being 3.4K, so I'd imagine that Tom was referring to 4K on S35.

That's the standard for cinema, still.

That's a BS criterion that has nothing to do with imaging.

Again, please point to the one that NEEDED 4K. or 6K. or 8K. or 12K...... for anything relating to the actual image.

It certainly won't matter to anyone watching on my 7" iPad as I don't have a 7" iPad. (You keep making statements referring to my behaviour, and getting it wrong. Are you secretly bugging someone's house, and thinking it's mine? Very strange!)

It also doesn't matter to me watching content on my 32" UHD display at about 5' viewing distance. When I was sizing my display before buying I reviewed the THX and SMPTE cinema standards and translated their recommended viewing angle to my room so that I'd have the same angle as sitting in the diamond zone in the cinema. The two standards were a bit different, so I chose a spot about half-way between them.

At this distance, under controlled conditions, the difference between 2K and 4K high-bitrate images (ie, not streamed) is not visible. Yes, I performed my own tests and shot my own footage as well as watching comparisons from others. With streaming content there is a huge difference between a 2K stream and a 4K stream due to the bitrates involved, but switching between a 2K source and 4K source is not visible, even when the stream is 4K. Yes, my display is colour calibrated. Yes, my display is set to industry cinema brightness. Yes, my room is set to industry standard ambient light levels and is also colour calibrated. These were tests using high-resolution lenses and controlled conditions, not the kind of images shown above, which obscure and de-emphasise resolution deliberately for creative purposes.
So, no, it's not visible to the faceless people somehow watching my non-existent 7" iPad, it's not visible to me in a highly calibrated environment with a cinema-like angle-of-view, and it's basically not visible to people who are watching a 4K TV that isn't within arm's reach. It's only visible to people who pixel-peep instead of thinking about the creative aspects. Yes, it does, and yes, they do. I've done as many blind camera tests as I can find, and done them properly under controlled blind conditions. I did so because I was genuinely interested in learning what I saw, not what I think I saw based on my own prejudices. I learned two things:

1. I judge a camera's image based on its colour
2. This technique has, without fail, ranked more expensive cameras above cheaper cameras

So, back to the tech. It's been a decade since we had OG BM cameras and the 5D with ML, but no improved colour science in the sub-$4K price range. None. Zero improvement. In a decade. Your statement is correct "Tech moves forward. So do requirements". Unfortunately, the number one consideration I have, and the consideration that accurately predicts the price of the camera (unlike other considerations from others) hasn't improved. It's not like it couldn't be done. ARRI improved the colour science of the Alexa, which was already world's best, but we're still stuck with 5D level colour. You appreciate resolution and so it looks like the tech is improving, and it has - DSLR sized cameras going from 2K RAW to 8K RAW is a 16x improvement in pixel count. I value colour, and DSLR sized cameras haven't made much progress at all. The R5C and R3 look like 5D images, with a ton more resolution and a touch more DR. I'm not asking for a 16x improvement, I'm asking for a 2x improvement, or a 3x improvement.
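The 16x figure is a pixel-count ratio rather than a linear one, which a quick calculation makes explicit. A minimal sketch, assuming "2K" means 1920x1080 and "8K" means 7680x4320 (actual RAW frame sizes differ from camera to camera, so treat these as placeholders):

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a frame."""
    return width * height


# Assumed frame sizes, for illustration only.
two_k = (1920, 1080)
eight_k = (7680, 4320)

linear_factor = eight_k[0] / two_k[0]
pixel_factor = pixel_count(*eight_k) / pixel_count(*two_k)

print(f"Linear resolution increase: {linear_factor:g}x")
print(f"Pixel count increase: {pixel_factor:g}x")
```

The linear increase is the square root of the pixel-count increase, which is why the headline number grows so much faster than the visible detail along any one edge of the frame.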

-

No-one is forcing 8K on me? Brilliant! Please direct me to the aisle with the 2.5-3K RAW cameras that don't crop. I'd also assume, since we had these a decade or so ago that they'll have improved the colour science and made them crazy good at low light and improved the DR instead of adding lots of resolution. Thanks.

-

Please point to the one that NEEDED 4K. or 6K. or 8K. or 12K. Lots of talk about cameras around here, and lots of talk about cameras needing more resolution. Meanwhile I'm watching the content that is the best of the best and barely seeing a frame where the minuscule amount of detail 4K provides wasn't completely overwhelmed by the lens properties, either physical or virtual. The desire for finer detailed images from non-cinema cameras is opposed to having emotional images, and comes at the cost of things that would actually make a difference. The people who talk about more resolution being better fall into two camps: those who are making images like the above and feel that a bump in resolution might be slightly advantageous, or the people who don't understand that images like the above exist and talk about resolution like it's an end-goal in itself.

-

Interesting. I would have thought that the silica gel would have made the container too dry, but apparently not. Seems like a good solution, and worth trying if humidity is a problem. I wonder if silica gel can be dried and reused? Maybe by putting it out in the sun on a hot day, or something similar?

-

The people you're talking about are cinematographers telling a story. They're not talking about lens resolution, 8K, or cropping in post. Every time a new camera comes out there is the same challenge.... People that are seeing cameras get more expensive, have more pixels (that they don't need or want), and seeing that the colour or features aren't any better. Then there are the videographers who want as much resolution as possible, want as clinically sharp lenses as possible, don't know or care about truly great colour science, and will swallow the ever-increasing cost of the new cameras. The former seem to be happy to acknowledge that the latter wants more resolution (along with VFX departments and VR), the latter seem unable to acknowledge that the former's needs are valid, or even make any sense. This is the source of the conflict. This is how videographers shoot. They want to be able to extract many compositions from a single angle. They want to be able to get zoom-lens functionality from a single prime. It could be because they're running multiple cameras at once, or operating in a small space without room for many cameras, or the logistics of pumping out videos at break-neck pace dominate. The ability to re-frame in post requires resolution and it requires having the sharpest most neutral/bland lenses possible, otherwise things look funny if you're zooming into the frame near an edge and getting asymmetrical lens distortions. They want the image to be as flexible in post as they can, so the image SOOC needs to have the least amount of character possible. I wish. Too heavy, too expensive, and too large for how/where I shoot. There is a problem in the camera market.
Essentially, there are no modern, DSLR-sized cameras with great colour (for which I set the standard of the Alexa from a decade ago):

- if you eliminate modern, you get the OG BMPCC and BMMCC
- if you eliminate DSLR-sized, you get cinema cameras (Komodo, ALEXA Mini, UMP12K, etc)
- if you eliminate great colour, you get the modern mirrorless cameras (P4K, P6K, R5, C70, A7S3, S1H, XT-4, etc)

The problem is that we had the OG BM cameras, but they abandoned the colour of the Fairchild sensor. Notice that price wasn't a variable in the above? There is literally no small, modern camera with great colour - even if you were willing to pay $20K for one. I think that the manufacturers are actively prejudiced against non-narrative smaller productions. They reserve the great image quality for people that can use a rigged-up cinema camera (slow and picky to work with), and if you're not in that upper echelon of high-art then screw you, you get the modest colour science of Canon from 10 years ago that used to be special then and everyone has now.

-

Yes, I suspect I'll end up with one of those travel screens, as Andrew reviewed. In fact the one that Andrew reviewed looked great, except I think I'd prefer one that's smaller. That's why this thread - to see if others have feedback. Technically you're right, that I'd have to carry a calibration device, which I have and could do. The challenge is that the rigours don't stop there. You need to calibrate it to each environment, but you need to have colour calibrated ambient light of a specific brightness, lest you not interpret contrast correctly. So I'd have to travel with several calibrated lights, and then I'd have to position them to achieve the correct ambient brightness. Luckily, my work is just personal and for YT so near enough is good enough. Having a portable calibrated display isn't really the issue, really I'm just looking for a decently sized screen to view the video on. In that sense it's actually more for editing than it is for colour grading. I have a 13" MBP, but you can only make the viewer so large by adjusting the size of the menus etc. I have the BM Speed Editor controller, and you can edit with it in full-screen mode, but it's tough to not be able to see the timeline or any menus or controls at all, so that doesn't work so well in many of the stages of editing. As I said, colour isn't that critical for me. I asked about calibration on the colourist forums and there was an interesting reply saying "I find an i1 display pro using xrite software gets my GUI monitor pretty close to my Flanders broadcast monitor. So for hobbyist work it will be fine." I figure that if a pro said "pretty close" when talking about a normal monitor managed through the GUI then a reasonable quality normal monitor that is calibrated should be enough for me! Also, I only work on 1080p timelines, and the BM UltraStudio I have is limited to 1080p, so I only need a 1080p monitor. 
I do this not only because 4K doesn't really matter, but also to reduce the load on my machine for editing. Once again, I'm using 1080p to avoid a cost of 4K that no-one here will even acknowledge exists.. 🙂

-

It's a videographer vs cinematographer thing. The videographer's priority is to work with consumers/brands to create clean/modern looking videos that make them money. The cinematographer's priority is to work with imperfections to create emotion that supports a story. That's why videographers talk about specs, and cinematographers talk about the look of the image. This might help:

-

I was referring to the original Alexa. The one that's 10 years old and is barely breaking five-figures second hand now. Rather odd that you think you know which videos I'm looking at, but that's beside the point. I've graded Canon Log footage from a few of the Canon cine cameras, ProRes from a few Alexa models both current and legacy, and ProRes from my BM OG BMPCC and BMMCC. The footage from the BM cameras feels similar in the grade to the Alexa in a way that the Canon footage did not. REDRAW has the same feeling as the ARRIs. There is an effortless quality to the colours and a predictability to the image, like all the sliders are working properly. In contrast, the highest quality footage from the GH5 and many other similar cameras from other brands makes the controls feel like something has been knocked out of alignment. Like the adjustments aren't what you asked for, and the footage is fragile rather than robust. You take skintones and push the WB and they fall apart, looking fake and somehow corrupted, rather than simply looking warmer or cooler. Strange casts appear and you fight with the footage rather than create with it. The Canon footage was somewhere in-between. Obviously not nearly as poor as the pro-sumer cameras, but it had a tinge to it that was definitely noticeable. It's an emotional thing. My brain wasn't unhappy grading the Canon cinema camera footage, but grading the other footage is an emotional process where the results are joyous in some way, but the Canon falls flat. This is why I've mentioned previously, potentially in another thread, that BM gave us magic in 2012 and Canon still isn't giving it to us a decade later.

-

The catastrophe isn't getting RAW, it's forgetting how good cameras used to look. A decade on, and cameras have 16x as many pixels, but worse colour science. Like @Andrew Reid said "Nothing unique at all about the images, could have been shot on just about anything else.". This is why I said that "the expectation of cameras to even have that level of colour is gone". You look at the images from that camera and think "looks great - yay Canon colour science" but many others think "blah.. another generic look". ARRI created the 65 and improved their colour science from the original Alexa models. Canon hasn't even closed the gap between them and the original Alexa.

-

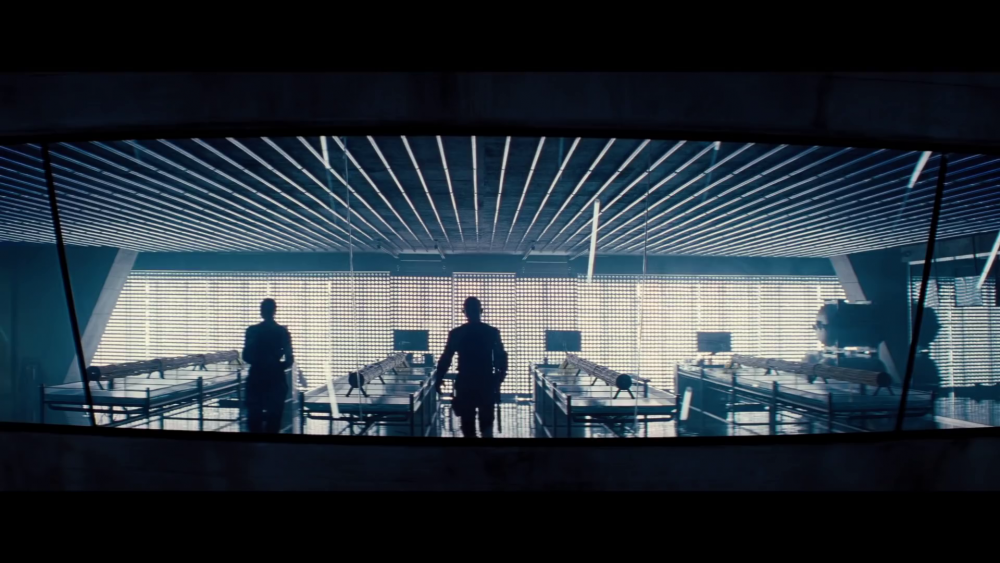

We're all talking about aesthetics. We're talking about aesthetics when we talk about the "look", but we're talking about it when we talk about specifications too. A debate rages about what is "enough" resolution, "enough" sharpness, "nicer" bokeh... what is "cinematic"... what is "visible"... what is "practical". This thread is a reality check against the warped concepts that stills photographers and their camera-club specifications-obsessions have given us. Because, for the most part, better objective measurements are mostly worse subjectively. It's our imperfections that create our humanity, and it's analog imperfection that creates emotional images.

Baseline

First, let's establish a baseline. Here are some test images from ARRI that are designed to showcase the technology, not a creative aesthetic. Note the super-clean image, lack of almost all lens-distortions (except wide angle distortion on the wide lens, which is actually super-wide at 12mm). If you were there, this might be what it actually looked like. Those were grabs from a 4K YouTube upload, but lots of trailers aren't uploaded in 4K, so here is a still from a 1080p YouTube video that ARRI uploaded in 2010.

Now, without further ado....

The Aesthetic - The Chilling Adventures of Sabrina

These are obviously very distorted, and I chose frames that were especially so. This should instantly disabuse you of the idea that somehow Netflix demands "pristine" images - these are filthy as hell, but this is appropriate to the subject matter, which is about witchcraft, the occult, demons, and literally, hell. Whenever I hear someone say "oh I can't believe how terrible that lens is - look at the edge softness" I just laugh. The person may as well be saying "not only don't I have a clue about film-making, but my eyes also don't work either.. please ignore everything else I say from now on".

The Aesthetic - Sex Education

A show with a deliberately vintage vibe, the look is suitably vintage, with some pretty wicked CA.
One thing that's interesting is the last shot, which was either a drone with a vintage lens on it, or it was doctored in post, because it has pretty severe CA - look at the bottom right of the frame above the Netflix logo. Also note how nothing looks sharp - the first image should have had something in-focus, but softness of this level is deliberate because once again, the last shot is a deep-focus shot with a stopped-down aperture and should be super-sharp but isn't.

The Aesthetic - No Time To Die

Some shots are softer than others, but note the amazing barrel distortion and edge softness on the middle two shots. In case you missed it, here's the star of this $400M movie in a pivotal scene from the movie: Are there lenses that could have made this shot more "accurate"? Sure - just scroll up to the ARRI shots which look pristine (and they're ZOOMS!). This was deliberate and is consistent with the emotion and narrative. The Aesthetic.

The Aesthetic - The Witcher

Sharp when it wants to be, oversharp too - see the second image, but with anamorphic bokeh for the look. Ironically, a fantasy story of witchcraft and monsters, using cleaner more modern looking glass. The complete opposite approach of Sabrina. Note on the second-last image the vertical anamorphic bokeh, and then look at the last image and note the "swirl" in the bokeh. I doubt this was accidental.

The Aesthetic - You

Sharp and clean when it wants to be, and other times, really not. Appropriate for the subject matter.

The Aesthetic - Squid Game

Clean, sharp but not too sharp, neutral colour palette, but note the vertical lines on the edges of the frame aren't straight? Subtle, and perhaps not deliberate, but picture it in your mind if they didn't flare out.. it makes a difference, deliberate or not.

The Aesthetic - Bridgerton

Clean, spherical, basically distortion free, but sharp? No. Go look at that Witcher closeup again for some contrast.
The Aesthetic - The Crown

Clean and relatively distortion free, but lots of diffusion, haze, and low-contrast when required. The Crown is a masterpiece of the visuals matching the emotional narrative of the story, which is made extra difficult as the story is set in reality, and the emotional tone is so muted that had lesser people been involved in making it any subtleties may well have simply been bland rather than subtle but deep.

The Aesthetic - Mindhunter

Perhaps the most interesting example here. After looking at the previous images the above might seem completely unremarkable, except that this look was created in post, and I mean completely in post: More here: https://filmmakermagazine.com/103768-dp-erik-messerschmidt-on-shooting-netflixs-mindhunter-with-a-custom-red-xenograph/#.YftoaC8RrOQ and here: https://thefincheranalyst.com/tag/red-xenomorph/ (there's a great video outlining the lens emulations in post in this one).

That's enough for now. Hopefully now you can appreciate that "perfectly clean" optically is actually only perfect for "perfectly clean" moments in your videos. Sure, if you're out there doing corporate day in and day out then it might seem like "clean" is the right way, or if you're in advertising or travel where neutral reigns, but when it comes to emotion, it's about choosing the best imperfections to suit the desired aesthetic.

-

It's even worse when it slows down at the end and then speeds up again. Some careful EQ could help with the noise when it's moving but all bets are off at the ends. It does seem like that might just be how it is though, rather than being faulty. One trick I've used in the past in hifi tweaking is to stick blu-tak to various surfaces to see if that helps. If something is vibrating then it will damp it, and it's easily removed and doesn't do any damage, so is quick and easy to use to diagnose what might be happening. Just be careful not to put it in the way of something that is moving, although if it does hit something then it deforms relatively easily so is a forgiving material. If you find surfaces that are vibrating then there are other solutions like adhering sheets of foam rubber and other absorbent materials. Anything that doesn't move can often just be damped by putting blu-tak around it, as long as it doesn't get hot then it should be fine.

-

I'm gradually building my portable editing and grading setup, and have just bought the BM UltraStudio Monitor 3G, which is a Resolve-controlled thunderbolt-in / HDMI-out device the size of a large matchbox. This necessitates using an external monitor. The question is, what is the right physical size? Does anyone use a portable monitor as an external reference monitor for editing / colour grading? I'm thinking that a normal 5" or 7" monitor designed for on-camera duties is probably too small, as you can't see enough detail in the image (for colouring at least) and wouldn't be larger than the Viewer window on the UI anyway, but a 15" reference monitor would likely be cumbersome to travel with.

-

I agree, mostly. I think that increased dynamic range is actually a good thing, as film clips really nicely and it takes more DR to emulate that in digital than it did in chemistry, but it seems to have come at the expense of colour science, rather than supporting it, as I think it has the potential to do. IIRC, film is mostly linear in the middle sections, with large rolloffs at each end.

A camera that took its 14 stops of DR and applied a contrast curve like this, and applied nice colour saturation and tonality, and output that as a 709-style 10-bit profile in-camera would be great. Such a profile could apply the normal orange/teal colour separation and cool-shadows / warm-highlights that is the basis for almost all colour profiles. This would give the camera a very usable SOOC file that would have more analog highlight and shadow rolloffs, potentially with some adjustment in-camera of the overall contrast and the black-levels perhaps. Such a profile wouldn't clip anything, and would have the benefit that if you wanted to colour grade in post, there would be more bits in the middle levels where the skintones etc are.

A camera that took its 14 stops of DR, applied nice colour, and applied a completely perfect log curve and output that as a log-style 10-bit profile in-camera would also be great. This would allow a completely neutral encoding that would enable the maximum flexibility of files in post for adjustments that severely push the image in terms of exposure and grading. This would make the camera a very usable and flexible camera for grading in post.

These things are completely possible, just not done. More's the pity.
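To make the difference between the two profiles concrete, here is a rough Python sketch of both encodings. It is purely illustrative and not any manufacturer's actual curve: the function names are invented, the 14 stops are assumed to sit symmetrically around middle grey (7 above, 7 below), and a tanh S-curve stands in for the "linear in the middle, rolloff at each end" shape. The `contrast` value is an arbitrary assumption.

```python
import math

HALF_RANGE_STOPS = 7.0  # assumed: 14 stops split evenly around middle grey
MAX_CODE = 1023         # top code value of a 10-bit file


def _clamp(stops: float) -> float:
    """Limit exposure to the sensor's assumed range."""
    return max(-HALF_RANGE_STOPS, min(HALF_RANGE_STOPS, stops))


def log_encode(stops: float) -> int:
    """Pure log curve: every stop gets the same number of code values."""
    normalised = (_clamp(stops) + HALF_RANGE_STOPS) / (2 * HALF_RANGE_STOPS)
    return round(normalised * MAX_CODE)


def film_style_encode(stops: float, contrast: float = 0.35) -> int:
    """S-curve: steeper around middle grey, soft rolloff at both ends.

    Dividing by tanh at the range limit means the extremes land exactly
    on 0 and MAX_CODE, so the full range is kept without a hard clip.
    """
    s_curve = math.tanh(contrast * _clamp(stops)) / math.tanh(contrast * HALF_RANGE_STOPS)
    return round((0.5 + 0.5 * s_curve) * MAX_CODE)


def codes_in_stop(encode, centre_stops: float) -> int:
    """Code values spent on the one-stop span centred on centre_stops."""
    return encode(centre_stops + 0.5) - encode(centre_stops - 0.5)


for name, encode in (("log", log_encode), ("film-style", film_style_encode)):
    mid = codes_in_stop(encode, 0.0)
    top = codes_in_stop(encode, HALF_RANGE_STOPS - 0.5)
    print(f"{name}: {mid} codes in the middle stop, {top} in the top stop")
```

Under these assumptions the log curve spreads code values evenly across all 14 stops, while the S-curve concentrates them in the mid-tones and compresses the extremes, which is the "more bits in the middle levels" trade-off described above.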