
Everything posted by kye

-

I think it's actually worse than this. On the one hand we have people saying that 8K is more visible than 4K, when 4K isn't particularly visible over 2K even when the screen is cinema sized, and on the other hand, more and more people are watching videos on their portable devices, which have absolutely TINY screens with 4K or less resolution. Soon we'll have people saying that 24K is absolutely mandatory and in the same sentence saying that everyone is watching videos on a postage stamp. The cognitive dissonance is palpable. (unlike the aesthetic of the image because the camera and lenses are all pristine, and therefore, soul-less!)

-

I would be curious to see data on this. Did you link to something previously? I keep hearing people say that Netflix has these amazing requirements, but then I keep hearing about shows that didn't meet the criteria and were purchased anyway after they were made, and then I hear that much (most?) of what is on Netflix is purchased after the fact, rather than made for Netflix in the first place. I think it's easy to get a misleading view of what is actually on Netflix because it's always promoting its own content and the higher budget shows they've bought. I find that if you search for random words then you find entire universes of content that you'd never know was there. Often they reveal swathes of content that wasn't made specifically for them, and is actually very low budget.

Actually, the driver for increased resolution cinema cameras is coming from the VFX department that is involved in more and more productions these days. It's a continuing debate that has been around since cameras started going above 2K. This video shows the dynamic, which is still relevant today, but note that this video was from 2014!

You're right that it's horses-for-courses. I think the unfortunate thing is that spec-obsessed videographers fail to understand the aesthetic catastrophe that has occurred during the last decade of camera development. This is now partially offset by the proliferation of RAW, but that comes with "hidden" costs. I put "hidden" in quotations, because the costs are staggering and completely obvious, it's just that proponents of specifications fail to see them, and do so not only willingly, but deliberately. The cost is literally tens of thousands of dollars.

This is true but is perhaps the most useless and misleading statement, because it misses an entire section of film-makers. In a debate over specs there are often two camps - the "specs are good, give me more" camp, and the "we don't need more, we need better" camp. The former is normally commercial videographers who only care about selling modern-looking images to uneducated clients. The latter is people who are chasing the aesthetic of cinema, part of which is a softness of image that is life-like and human, rather than artificially clean and modern. The premise of your statement is that only the people in the second group who are winning awards are allowed to express an opinion. This is simply untrue. There are a number of people on these forums, and I count myself as one of them, who are interested in an aesthetic other than "clean/modern". An interest in vintage lenses is a sign of this desire, an interest in older cameras is a sign of this desire, and there are others too. There are multiple FB groups full of people who make fun of the borderline worship of the Sigma 18-35 lens, BM P4K and P6K cameras, and the whole "it's good because <insert specification here>" argument. The sad thing, and I think the thing that causes so much conflict, is that the specification-driven clean/modern aesthetic videographer folks don't understand they're only one audience in a sea of other people with deeply different needs, and so tend to shut down others as if anything other than their style of film-making isn't a valid style of film-making (unless you're winning an Oscar....)

Completely agree. In fact, the manufacturers not only managed to convince us that more resolution is better, they managed to convince us that spectacular images are not expected. In the years after the OG BMPCC and BMMCC were created, people loved the colour and took that level of image to be a new standard. Now, a decade on, not only do the multi-thousand dollar cameras not meet that level of colour science, but the expectation of cameras to even have that level of colour is gone.

-

Ironically, I see him as being on the more useful side of the spectrum...

- He's consistent
- His motivations and commercial relationships are pretty obvious
- He is well aligned with his audience
- He is knowledgeable about equipment and normally mentions when he has or hasn't used / tested the equipment he's talking about
- He shows the equipment warts-and-all and his skills in using it warts-and-all (this is exceptionally useful compared to the PR people that heavily edit and don't show equipment failing)
- He doesn't talk outside his experience (he doesn't, for example, claim to know what a professional cinematographer would want, or a wedding / corporate videographer, etc etc.. this is the trap lots of camera YT falls into)
- He's entertaining

I'd suggest that if you're not a fan then it's probably because either:

- You're not his target audience
- You don't like his style / humour

....and if so, that's fine. But don't confuse that with being a bad reviewer.

-

This video might be of interest if you haven't already seen it. But I'm not sure how large you want to rig your camera though, which is Matteo's main stabilisation strategy IIRC.

-

That was a long time ago, but IIRC I cleaned all the lenses that really needed it, and all the lenses are intact, so.. I think so? 🙂

With that in mind, I bought an inexpensive but highly-rated hygrometer. The maximum humidity over the last 24 hours or so is 47%. I'll keep an eye on it but perhaps a dry cabinet is not necessary here.

This is my approach. I cleaned the lenses that had stuff in the optical path (fungus, dirt, grease, whatever) and then just made sure that any remaining fungus wouldn't continue to grow.

One thing that comes to mind as a cheaper solution is to make a DIY "dry-ish cabinet". Simply get a container / cupboard / something that mostly seals and then put something into it that will keep the humidity in it lower than the threshold. I was thinking that perhaps rice might be a good material to act as a de-humidifier. If you put a few kg in a cloth bag, or an oven baking tray, etc, then you could put it in the bottom of the cabinet where it will gradually absorb the humidity in the cabinet. Once it saturates, you could dry it out again by putting it near a heat source for a few hours to re-charge it. Maybe an oven on the lowest setting (many ovens will go down to below boiling point). Of course you'd have to keep an eye on the humidity every few days etc.

I very much doubt that the long fuzz was something else - how would it have gotten into the lens? Small particles might have gotten there from hundreds of focus adjustments and the lens being rotated this way and then that way, but something that was pressed into the shape of the lens wouldn't have fallen or been blown into the mechanism, especially if it was being pressed against the sides of the chamber it was in.

Good to hear that you cleaned it and got it back together, and bummer to hear about the other lens that you didn't manage to. Many of the lenses that I bought didn't have any videos / articles available on how to tear down, and some of them I didn't fully dismantle.
Often you could pull a group out of the lens and the group would be fine, so no point in taking that apart unless there was stuff inside it. For those I'd clean the outer elements and if it looked OK I'd just put it back. When you see teardowns from people that really know what they're doing you might notice that they take detailed records when disassembling, will put marks on parts to show the rotation and orientation of various parts, etc. I tried to do that, and occasionally had to refer back to reference something or other. There's definitely an art to it. I mostly avoided removing aperture mechanisms, and on some lenses I put them back together again and had parts left over. They all worked fine afterwards though, which was a bit surprising! Those aperture mechanisms sure are complicated and extremely fiddly.

-

I haven't played with this side of Resolve, but I'd say it's likely. MIDI is a very standardised protocol, and one of the most used and longest running standards around I think, so it's likely that most MIDI controllers will provide a significant benefit.

-

I think a few are doing "education" and the rest are on the wrong side of "infotainment" and sliding into confirmation-bias and hearsay. I think there's a broader challenge with this stuff, which is that there needs to be a balance between information and entertainment. Take books for example: a pulp fiction novel provides entertainment but no information, and a textbook contains all information and no entertainment, but sadly the textbook also provides no benefit unless there is enough motivation provided to make the person actually read and understand it. I have many dense books that I haven't read due to lack of motivation, unfortunately.

It's harder than you'd think. It requires:

- critical thinking
- an understanding about matching variables
- familiarity with lens design and history
- capability, capacity, and motivation to prepare and plan the video
- a willingness to go out of their comfort zone
- motivation to do anything except whatever half-baked thought happens to occur to them at that moment (or is provided to them by a vendor, a forum, or some other flaky information source....)

-

If you really want to go down the rabbit hole, I'd suggest searching in scientific resources that deal with the biology of fungus itself. It may well be too complicated a topic, given the vast array of fungus varieties / species / strains and how different they're likely to be, but it's worthwhile going to the right sources.

I figured that the key was making sure the humidity was low enough to stop it from growing, and then just to remove any clumps that were visible (IIRC none were visible in the images) so it was more for peace of mind. My fungus lenses were all cheap eBay ones, so I just pulled them apart and cleaned them as best as I could, using some dish soap, some distilled water, and some cotton tips and a blower.

I'm absolutely not an expert, far from it, but this thread of mine might be useful.. if even just to make you feel better 🙂 I posted a number of pics in there from my lenses. I'd imagine they're worse than yours, but funnily enough were still optically fine.

-

I think the internet has gone through a number of phases at this point:

- Who is going digital? Can it ever be as good as film?
- Digital is great - now it's even sharper than 35mm film! GIVE ME THE SHARPEST LENSES IN THE WORLD!!!!
- Wow, all this resolution and sharp lenses look kind of clinical and dead. Hmmm.
- Digital needs to be tamed by vintage lenses. It's all about CZ, FD, Takumars, Leica R, and the Soviet lenses. These are the ones worth talking about.
- Mirrorless is great, now I can adapt any lens I like. Plus, those CZ and Leica Rs are so ridiculously expensive. Weren't there other brands too? I vaguely remember some other brands.....

We're in the last one now, basically. There are people in the vintage lens groups pulling Konica sets together and cine-modding them, but they're not so common. Vintage zooms are amongst the worst quality lenses ever made, optically at least, so they're the least likely to get any attention.

-

Another thing worth noting: there's this line of logic that somehow "fungus is infectious". You hear this thinking when people talk about "getting rid of" the fungus, "keep the lens isolated", "prevent it from spreading". This is false. The Zeiss page I linked to earlier said that fungus is everywhere. They were downplaying it. Source: https://www.uni-mainz.de/presse/13180_ENG_HTML.php

Fungus is already in your lens. Lenses are probably assembled in a clean room, but any part of the lens that isn't completely sealed will soon get fungus into it. Lots of lenses expand when focusing, which pulls in air. If there are 1000 spores in every cubic meter of air, that's about one spore per litre, so routine focusing will pull spores into your lens sooner or later. The reason it's not a problem most of the time is because it's not growing.
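
A rough sanity check of the spore numbers above, as a sketch only. The 1000 spores per cubic meter figure is the one used in the post; the 20 mL of air drawn in per focus throw is purely a hypothetical value I've picked for illustration (real lenses will differ), and the function names are made up for this example.

```python
# Back-of-envelope estimate: spores drawn in by a lens that "breathes" when focusing.
SPORES_PER_M3 = 1000       # density used in the post
ML_PER_M3 = 1_000_000      # 1 cubic meter = 1,000,000 mL


def spores_per_motion(swept_volume_ml, spores_per_m3=SPORES_PER_M3):
    """Average number of spores pulled in by one focus motion."""
    return spores_per_m3 * swept_volume_ml / ML_PER_M3


def motions_per_spore(swept_volume_ml, spores_per_m3=SPORES_PER_M3):
    """Average number of focus motions needed to pull in one spore."""
    return 1 / spores_per_motion(swept_volume_ml, spores_per_m3)


ASSUMED_SWEPT_ML = 20.0    # hypothetical air volume per focus throw, not a measured value

print(spores_per_motion(ASSUMED_SWEPT_ML))
print(motions_per_spore(ASSUMED_SWEPT_ML))
```

Under those assumptions a single motion brings in only a small fraction of a spore on average, so it's the accumulation over normal use (plus higher spore counts in some environments) that gets spores inside, which fits the point that the spores being there isn't the issue, the growing is.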

-

Reminds me of a book I just read - In the Weeds: Around the World and Behind the Scenes with Anthony Bourdain by Tom Vitale. Some crazy crazy stories in there. Great book.

-

Yeah, when I first started buying vintage lenses (and I was buying the suuuuper cheap eBay ones) I shone a light through a lens for the first time and just about had a heart attack. It wasn't until I shone a light through some other lenses that were fine that I realised that basically all lenses look like a garbage dump when looked at with that kind of scrutiny, but probably give images that are fine. Interesting about the little spiderweb illusions from bright light, I think I have seen these before too. I suspect it's something to do with the water in your eyes? I remember blinking and that having an effect on them, but maybe I'm mis-remembering. Only a few days? That's incredibly fast! The first thing I'd suggest that anyone buy is a combo thermometer / hygrometer so you can see temp and humidity. In my case where I live is very dry so I was fine and I just store lenses normally in a drawer or on a shelf, so no cause for extra equipment. You also want to make sure you don't under-humidify them, as the articles are very specific about that being bad for the lenses. I think things start to dry out in the lens? But it's best to be able to see what you're doing so you don't go too far one way or the other.

-

Sorry to hear it. There is so much misinformation about fungus online that it's practically impossible to get good information. I caution you against the, likely inevitable, wrong replies you will receive in this thread. To that end, don't listen to what I have to say either - get information from trusted sources. I'd suggest this page from Zeiss: https://www.zeiss.com/consumer-products/us/service/content/fungus-on-lenses.html Here are some interesting bits from that page, which I've highlighted in bold: Fungus is everywhere. Humidity is normally the issue. The Sony page is also useful: https://www.sony.com/electronics/support/articles/00062800 I've bought and cleaned a number of vintage lenses, some with fungus and some without. Depending on how long the fungus has been in your lenses, there might still be minimal damage. At first they just grow on the surface, which doesn't really do any harm at all. Left longer they can damage coatings, which wouldn't have a huge optical effect, especially if on elements further away from the sensor (which are much more out of focus). Eventually they eat into the glass, which is the most severe, but this may very well take years or decades. I would suggest evaluating each lens by stopping it all the way down and taking pictures of a blank surface (like a wall or ceiling) and seeing if there are any spots visible. You'd be amazed at how disgusting a lens can appear but yet how unaffected the images from it can be.

-

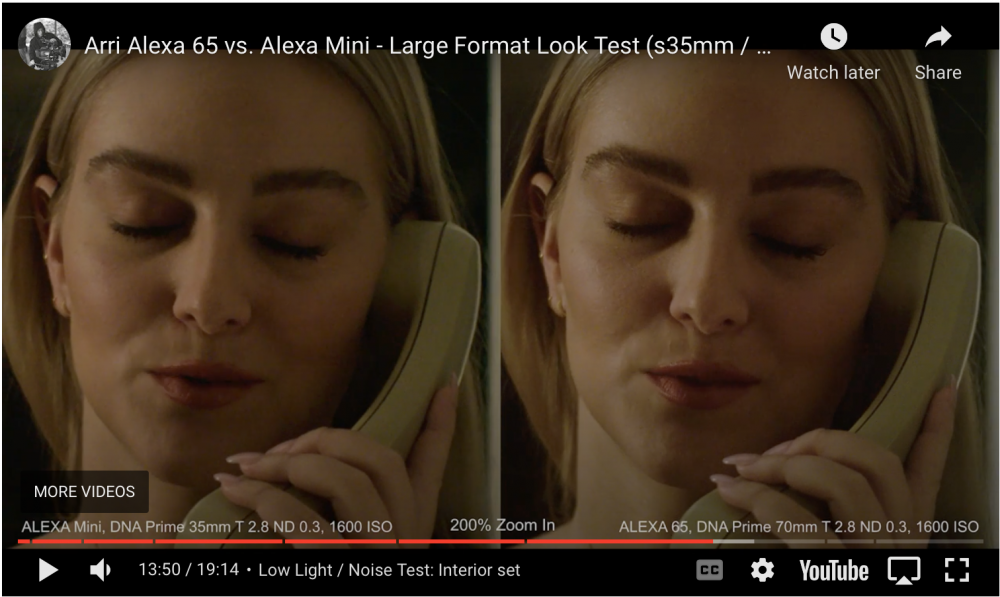

I'm having devious thoughts for a separate thread, but it will require some filming, so might take a bit. I think one key aspect of the computational stuff is having a depth map, which can be used to generate realistic anamorphic bokeh, which currently I don't think there are good simulations of really. Certainly this is one aspect where the stupendous resolutions will help - doing 3D edge detection on an 8K image is likely to generate a much better result than at native 2K or similar. The sheer weight and scale of the image from the 65 in that ARRI video is just wonderful. No doubt whatsoever. What was interesting to me was how "imperfect" the lens is on the edges, with smear and vignetting etc. It makes a wonderful look, but normally the high-end optics are as clean as they can make them, but not clinical. Sure, the 65 sensor is larger, but if you're making custom glass then I still don't see why you can't just scale everything in a lens design up proportionately and get a larger image circle. Maybe there's something I'm missing there though - optical design is definitely outside my expertise.

-

Not a martyr at all. You asked a question and I answered it. If you think I'm off-topic then I guess you're just not seeing how it connects. No wonder you're not following. You ask what does colour science have to do with lenses. Let me ask - what did that quote have to do with colour science? You're right that the look of a specific lens is linked with the sensor that you use it on, but I am talking about the aesthetic effects of a lens attribute. For example, barrel distortion. Lots of lenses have that attribute. Luckily it's one we can add and manipulate in post. So is vignetting. So is contrast. So is halation. So is sharpness and so is resolution (in the sense we can reduce it in post). Depending on how sophisticated we want to be, we can stretch all these parameters to develop a 'look'. They're actually quite easy to adjust in most software, so it's not a super-esoteric kind of thing. It's simple enough that a person could develop some looks they enjoy and just save them as pre-sets, and apply them during an editing session. I've heard you mention several times that you're a fan of the Cooke look. While I'm not suggesting you can emulate that in post, what I am suggesting is that you could take a lens that was objectionable, perhaps because it is too perfect (like many complain the Sigma 18-35 is, for example) and apply similar adjustments to push it in the direction of a Cooke. No, it probably won't fool anyone, but perhaps it gives an aesthetic that suits a project, and maybe gives a look that's desirable. In fact, by adjusting the parameters individually, you could even tailor a look to a project where no lens in existence would give that exact combination. The show "The Chilling Adventures of Sabrina" on Netflix has a dark other-worldly vibe with huge amounts of lens distortions, which I suspect come from old anamorphics with huge and very compromised squeeze factors. 
While us amateurs are not going to be emulating anamorphic bokeh in post any time soon, the wild distortions are certainly possible with a few nodes in Resolve. This is the relevance of the Yedlin quote that @Django didn't seem to read / understand. By identifying individual lens attributes, isolating them and their influences and how we might emulate them in post, and by understanding which ones and how much give what aesthetic effects, we get creative control, rather than being limited to simply "shopping" for the look we want from any specific lens. I've shown this kind of thing on the forums before. Perhaps the people who resist an analytical approach simply don't understand what is possible? IIRC that was simply matching the colours ( @Django oh no! - looks like colour science might have something to do with lenses!! gasp, cough, splutter). The more we understand the elements of an aesthetic the more empowered we are to manipulate it and create it ourselves, which gives us greater potential for creativity. Isn't that what we're all trying to achieve?
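
To make the "adjust the parameters individually" idea a bit more concrete, here's a minimal sketch of two of those attributes as maths. It assumes a single-coefficient radial distortion model and a simple quadratic vignette falloff; both are simplifications I've chosen for illustration, not how Resolve or any particular plugin does it, and `k1` and `strength` are hypothetical knobs. It only maps coordinates and gains; a real implementation would also resample the image.

```python
def barrel_distort(x, y, k1):
    """Radially distort a point given in normalised coordinates (0, 0 = image centre).

    With this sign convention a negative k1 pulls points toward the centre,
    more so the further out they are, which is the barrel look.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2
    return x * scale, y * scale


def vignette_gain(x, y, strength):
    """Brightness multiplier that falls off with the square of the distance from centre."""
    r2 = x * x + y * y
    return max(0.0, 1.0 - strength * r2)


# A "look" is then just a saved set of parameters (values here are arbitrary).
look = {"k1": -0.1, "strength": 0.3}

for point in [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]:
    moved = barrel_distort(point[0], point[1], look["k1"])
    gain = vignette_gain(point[0], point[1], look["strength"])
    print(point, moved, gain)
```

The centre of the frame is untouched and the edges get pulled in and darkened, and because each attribute is its own parameter you can dial them independently, which is the whole point of isolating them.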

-

It sounds like you're suggesting that I get into the weight-loss challenge too, with all my "bloated debates and become bloated themselves". 🙂 I'd enjoy more discussion about footage and lenses too. Maybe you should post some? I've posted multiple threads including lens tests, camera threads, and colour science / profiles. You've only created three new threads since mid-last year and one of them is this thread, which just seems to be complaining about the other people on the forum... If you want the forums to be better, then go right ahead and start a good conversation.

-

I'm happy to discuss the relative merits and aesthetics of lenses, but that can be done without sensor size being part of it....... but sensor size was the premise of this whole thread, so that's what I was talking about. I have a very particular interest in learning about how this stuff actually works. Fluffy discussions that conflate various things together and confuse one thing with the next prevent tangible things from being learned. I'm getting philosophical here, but you questioned my approach to discussions, so it's relevant. Steve Yedlin put it wonderfully in his spectacular essay "On Colour Science": That applies to all of film-making, not just colour, so let's unpack that a little. "identify, isolate and understand any of these underlying attributes so that we can manipulate them meaningfully for ourselves" The idea here is to identify things we like and things we don't like. Things that work for a particular aesthetic. Then we isolate the underlying attribute. We do this all the time when actually making films. We film something from two different angles and then choose the one we like better. We do this in isolation by looking at a frame, taking a step or two to the left and looking at it again. We position the subject in the centre of the frame, then position them to the right and then the left, examining the effects of changing just one isolated variable. We do this in post by choosing a warm or cooler WB, but putting more or less contrast. etc etc. If we made a list of every individual aspect that is decided when making a film, most of us would have some idea of the effects of these and could talk about them. We then understand these underlying attributes so we can manipulate them for ourselves. I'd imagine that most people into cinema in one way or another would realise that a romantic comedy would be high-key, warmer WB, less sharpened, smoother camera movement, slower editing, happier music. 
A horror/thriller would be low-key, cooler WB, sharpened harsh image, jerky camera movement, fast paced editing, with ominous music. This is about understanding the aesthetic impacts of these individual aspects of film-making. To turn back to this discussion, or others where you feel I'm being particularly "dense or obtuse" it's actually about trying to drill down using this identify/isolate/understand approach. As far as I can tell, I'm one of the people working hardest on these forums to actually pull apart the specific elements of film-making and understand them. If there are others, they're not sharing their efforts or findings much. @BTM_Pix is an exception of course. This idea of trying to isolate variables is one of the fundamental principles of science, and its taken us from the dark ages to space exploration. Not quite all of human knowledge, but a good deal of it, and it's not anally-retentive about details just for the hell of it, it's because it actually matters. But, I get it. If you're not trying to drill down and get to the specifics, then it's fun to just hang out and say things with loose words and may or may not be true, and just agree with each other for the sake of being nice. I'm not here, posting detailed responses in a thread about the look of various sensor sizes to chew the fat and hang. I'm here to try and learn WTF is actually going on. This discussion has actually been useful for me, counter to how it might appear, I've learned a great deal in terms of sharpening my understanding of the topic, further developing my understanding of how much other people actually know, and the overall psychology of humanity. I also get it that most people here are just looking at cameras as a thing you buy to shoot, edit a bit, adjust the colour a little, export, get paid and move on. From a business perspective it makes total sense. Choose the best package and then just go use it. That's not my approach. 
I spend more time in post than most, and am willing to put in more effort to develop my skills for post as well. If I can do that and get an advantage then that's worth it for me. My "obtuse" posts are trying to sharpen the conversation to be more specific (seemingly against the wishes of the other participants) to understand concrete things. Sorry if you were just here to speak loosely and draw conclusions without having your logic checked, but that's not what I thought the focus of these forums was. The entire idea of the DSLR revolution was trying to get the best results we can with modest affordable equipment, but that takes specific knowledge, and that requires rigour and questioning.

-

I did read your post. The fundamental challenge is that it's not possible to do a comparison without changing so many other variables that you can't see past them to actually see the effects of sensor size. Your post acknowledges that it wouldn't be possible to do a better test, but that doesn't mean that the best test is of a high enough standard to be useful. How would you know? What if once you match these things perfectly there was no difference? Can you tell me without any doubt that there even is any difference? No. Because no-one has seen a test where all the other variables have been eliminated. This thread is about the difference between sensor sizes, and you're trying to say that you can extrapolate this test to all sensors of different sizes despite there being an array of differences of other variables, but I have tried to eliminate the other variables and I'm saying that the difference in look was so reduced that it wouldn't be possible to tell if there was any actual difference or not. In a sense you and I are disagreeing about the impact of the lens distortion, colour science, vignetting, etc, but you haven't seen the altered version. That's why I was being a bit sarcastic. You're talking about the look of something you haven't seen. You're literally arguing with no experience of something against someone with hours of experience of it. Yes, this is called crop factor. This isn't new or useful information - it's literally part of the maths of how an optical system works. Yes, I agree. In fact, I can speak from experience here because when I matched the Mini to the 65 (which required adding quite significant amounts of barrel distortion and vignetting) the Mini looked like it had so much more mojo too. It's an amazing observation, but when you take two camera setups and put a lens on one that has lots of mojo, that cameras images will have more mojo. I thought this was a sensor size discussion? Instead you're talking about the lenses. 
You're kind of saying that the FF look is using much nicer lenses. But that's not a FF look at all - every format benefits from using nicer lenses. This is a faulty assumption. When you match DoF between sensors, you have to use faster lenses on the smaller sensor. There are many variables at play here, and mostly they're overtaken by the various low-light capabilities of the various sensor technologies. A FF sensor with one native ISO will be absolutely killed in a low-light test by a MFT sensor with dual-native ISO. We also don't know what differences there are in processing between the two cameras. The only conclusions you can draw from this is that the ALEXA Mini has worse low light than the Alexa 65. It's not directly applicable to any other camera. Actually, it's a very specific and singular test that showcases the look of two particular cameras, and two particular sets of lenses. There are so many variables different between the two of them, even an economist would throw their hands up in the air before declaring that somehow multivariate analysis would yield anything useful. If I did a test between a P4K with Master Anamorphics and the worst FF camera and lens ever made you wouldn't be happy if I drew any conclusions from that at all. I'd conclude that MFT had higher dynamic range, lower noise, was incredibly sharper, had a much more cinematic look, more mojo, etc etc etc. 
You'd claim that the test wasn't fair, and I'd just say:

"it's a very thorough and revealing test that showcases just about everything to consider"

"sounds to me like you're focusing too much on the exposure, color science etc"

Of course, these replies wouldn't be that logical because I've picked two camera/lens combinations that aren't really a level playing field, I haven't adjusted for any differences that aren't directly related to the sensor size, and I'm claiming that the differences in lenses and colour science etc somehow don't factor into the equation in any meaningful way, or not to the extent that it would overwhelm the test. Based on my P4K Master Anamorphics vs crap FF test, you wouldn't say that it would apply to a Panasonic GF3 + kit lens vs Sony Venice + Zeiss Super-Speeds test, because in that comparison there's no way that the GF3 would win in any way at all. In this sense, a comparison of two cameras and two lens sets is simply not enough to extrapolate from.
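
On the earlier point that matching DoF means using faster lenses on the smaller sensor, the usual rule of thumb is that both focal length and f-number scale by the crop factor. A minimal sketch, assuming that rule of thumb holds (same framing, same subject distance) and using a nominal crop factor of 2.0 for MFT; the function name is just for this example.

```python
def matching_setup(ff_focal_mm, ff_f_number, crop_factor):
    """Focal length and f-number on the smaller sensor that roughly match
    the field of view and depth of field of the given full-frame setup."""
    return ff_focal_mm / crop_factor, ff_f_number / crop_factor


MFT_CROP = 2.0  # nominal crop factor, assumed for illustration

focal, f_number = matching_setup(50.0, 2.8, MFT_CROP)
print(focal, f_number)
```

So a 50mm at f/2.8 on FF is roughly matched by a 25mm at f/1.4 on MFT, which means the smaller sensor is collecting light through a faster lens and can run a lower ISO. That's before the sensor technology differences even come into it, so a single low-light comparison between two specific cameras doesn't tell you much about sensor size in general.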

-

@Django @PannySVHS You guys are hilarious. I wonder if you can tell me what the colour science of the next Canon camera will be like? I mean, I know you haven't seen it, but you also haven't seen the Mini adjusted in post to match the 65, and you're confidently speaking about that! Maybe you can compare the GH6 to the next Canon camera? If I promise to take a video of the lottery numbers next week, can you tell me what that video contains? That'd be great, thanks!

-

@Django @PannySVHS I really truly would encourage you to compare the footage yourself. There are SO MANY tiny differences between them, and they're often too subtle to see unless you're flipping between them in an NLE. However, once you equalise them, or get close even, you'd be amazed at how much the gap closes. Some differences aren't that subtle though. Like you said - let's look at 13:50. The 65 is quite obviously brighter. Guess what. Making an image brighter makes it nicer! A common trick in colour grading is to track a window over the main subject in a shot and add a bit of contrast and raise the brightness. Even a subtle touch of this changes the entire composition of an image, it's such a powerful effect. Here, you get it for free from the camera/lens/grade. There are a dozen other things different between the shots, mostly too subtle to see, but they add up. Doing the comparisons yourself is a masterclass in aesthetics, and why this isn't a good comparison for sensor size.

-

That's a fascinating video, but probably for all the wrong reasons. I spent over a dozen hours when it was released with a range of shots from it, adjusting them in post to be a more equal match. By the time you take the Alexa Mini shots and adjust the vignette, barrel distortion, contrast, colour, diffusion, sharpening, and cropping to make the frames much more comparable, the differences are greatly diminished between the s35 and LF sensors. I HIGHLY HIGHLY HIGHLY recommend that everyone downloads the video, pulls it into Resolve and tries to adjust the images to be more similar. I learned an incredible amount about imaging from this exercise. It really gave me an appreciation for how much better the colour science is on the 65 than the Mini - despite the Mini being spectacular in its own right to begin with. ARRI truly did an incredible job in its development. It's a difficult thing to match them as the vignetting is very non-linear and so all I could do was approximate it. I went the full-way and spent hours adjusting one image while it was on top of the other with a "difference" blending mode so I was only seeing the mathematical difference between the images, trying to line up everything perfectly to minimise the errors. I performed individual colour grades on different parts of the image in this mode, eliminating tints and shifts in the colour science, etc etc. Most of the adjustments were non-linear in this sense. Unfortunately I couldn't get them to match closely enough to "see through" the various optical differences and answer the question if there was a difference between the sensors. It's a great test to demonstrate the difference between the two cameras, but a terrible test to show the effects of only the sensor size. As great as an Alexa Mini is, the 65 is a far superior image, so in a way it's like comparing a GH1 kit-lens combo with a Sony Venice Masterprime combo and saying the difference is due to the sensor sizes.
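
For anyone who hasn't used a "difference" blend before, the idea is just a per-pixel subtraction, so a perfect match shows as black and anything left over is the mismatch you still need to grade out. A minimal sketch in plain Python, assuming images are nested lists of (r, g, b) tuples; this illustrates the concept only and isn't Resolve's implementation, and the function names and tiny sample "images" are made up.

```python
def difference_blend(img_a, img_b):
    """Per-pixel, per-channel absolute difference of two same-sized images."""
    return [
        [tuple(abs(ca - cb) for ca, cb in zip(px_a, px_b))
         for px_a, px_b in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]


def mean_abs_error(img_a, img_b):
    """Single number summarising how far apart the two images are."""
    diff = difference_blend(img_a, img_b)
    values = [channel for row in diff for pixel in row for channel in pixel]
    return sum(values) / len(values)


# Two hypothetical 1x2 images: first pixel matches, second has a slight colour shift.
mini = [[(100, 100, 100), (200, 180, 160)]]
sixty_five = [[(100, 100, 100), (190, 180, 170)]]

print(difference_blend(mini, sixty_five))
print(mean_abs_error(mini, sixty_five))
```

Matching then becomes a case of adjusting one image until the difference view gets as dark as possible, which is why it's so good at exposing small tints and geometric offsets that you'd never spot side by side.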

-

They're around, but were always quite expensive... good luck!

-

That makes sense, as there are definitely more classic FF stills lenses around than other formats. Your "no math involved" logic isn't so straightforward though, in cinema at least. For years I consumed content designed for consumers where FF was the reference. I learned that a 50mm lens was a 'normal' lens as its focal length was similar to the diagonal of a 35mm frame and compares to how the mind 'sees', and I also learned that the 50mm is a very common lens for shooting films. I learned that S35 was the standard format for film and all about 3-perf and 4-perf and Vistavision and ..... umm, hang on! When we learn that The Godfather was shot purely on a 40mm lens, that's on film, so is a 58mm FF FOV - right? and Psycho and its 50mm is a 72mm FF FOV - right? I genuinely don't know how many times I heard about a 50mm lens and thought "that's a 50mm FF FOV". https://www.premiumbeat.com/blog/7-reasons-why-a-nifty-50mm-lens-should-be-your-go-to-lens/ Maybe your comment "no math involved" is right. But instead of math being required, maybe what we get in its place is confusion rather than clarity! This is another one that I'm not sure about. If this were true then a 10mm lens on a S16mm camera should be unwatchable, yet it looks similar to a 28mm lens on FF. In fact a 10mm lens was a pretty common lens back in the day - so common that almost anyone can afford to buy an Angenieux! https://www.ebay.com/itm/154807066202?hash=item240b385e5a:g:-BAAAOSwehBh4xlM
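
The conversions above are just focal length multiplied by a crop factor, which can be sketched in a few lines of Python. The crop factors here are rough assumptions I picked so the results line up with the figures quoted above (about 1.44 for S35 and about 2.8 for S16); the real number depends on exactly which gate or sensor dimensions you compare.

```python
# Approximate crop factors relative to full frame (assumed values, not exact).
CROP_FACTORS = {
    "FF": 1.0,
    "S35": 1.44,  # assumption, consistent with 40mm -> roughly 58mm
    "S16": 2.8,   # assumption, consistent with 10mm -> roughly 28mm
}


def ff_equivalent_focal_length(focal_mm, fmt):
    """Focal length on full frame giving roughly the same field of view."""
    return focal_mm * CROP_FACTORS[fmt]


examples = [
    ("The Godfather", 40, "S35"),
    ("Psycho", 50, "S35"),
    ("10mm on S16", 10, "S16"),
]
for name, focal_mm, fmt in examples:
    equivalent = ff_equivalent_focal_length(focal_mm, fmt)
    print(f"{name}: {focal_mm}mm on {fmt} is about a {round(equivalent)}mm FF FOV")
```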

-

The quote from Ozark presents a common view - "the FF look is having shallower DoF". Or a fast wide image. I don't want to say that it's wrong, but it's kind of not actually about sensor size, but about lenses. The myth is that you can't get shallow DoF on crop sensors, but the reality is that nothing is stopping this from happening except that they don't make the lenses required (eg, fast wides) or they're so expensive that the people who can afford them just shoot FF anyway (eg, Voigtlanders). It's like if I bought a set of paint-brushes and only bought black and white paints, painted in black and white for ages, and then said "these paint brushes have a 'look' - they lack a certain lifelike quality to the images". Obviously this would be ridiculous as the lifeless quality would come from the paint I used with the brushes, not the brushes themselves. Of course, there's not much point in saying that an iPhone sensor can do anything a FF sensor can do, all it needs is a lens that no-one has ever designed or built or sold, because that's ignoring the fact you need a lens to make an image. I imagine that much of this reasoning (of shallow DoF) is historical, from before fast glass was everywhere, so it's less relevant now, I think. The same type of logic could be argued for other factors as well, like DR, noise, etc, which differed between sensors due to limits of the technology rather than the physical size of the sensor having some fundamental limitation. Having said all that, I'm still wondering if there are other factors that sensor size contributes to that might be causing an aesthetic difference. I'm curious to hear any other thoughts you might have.
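
To put rough numbers on the "they don't make the lenses required" point, here is a small Python sketch. It assumes the usual equivalence rule of thumb, where both the focal length and the f-number are divided by the crop factor to get a similar field of view and depth of field on the smaller format. The function name and the crop factors are my own illustrative assumptions, not measurements.

```python
def lens_to_match_full_frame(ff_focal_mm, ff_f_number, crop_factor):
    """Lens a smaller format would need to roughly match a FF lens.

    Uses the common rule of thumb: divide both the focal length and the
    f-number by the crop factor (assumed, approximate equivalence).
    """
    return ff_focal_mm / crop_factor, ff_f_number / crop_factor


# Assumed crop factors: 2.0 for MFT, about 2.8 for S16.
for label, crop in [("MFT", 2.0), ("S16", 2.8)]:
    focal_mm, f_number = lens_to_match_full_frame(28, 2.8, crop)
    print(f"{label}: 28mm f/2.8 on FF needs about {focal_mm:.1f}mm f/{f_number:.1f}")
```

A fairly ordinary fast-ish wide on FF turns into something like a 10mm f/1.0 on S16, which is exactly the kind of lens that either doesn't exist or costs a fortune.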

-

I'd be curious to see which tests you're talking about that get different results. Not questioning that you've seen it, but curious as to what other factor is probably causing what you saw. The quality of camera comparison tests is very very poor, even with professional cinematographers testing out high-end cameras. One of the things these people love to do is dial in 5600K on each camera and just assume that's the same WB, and when you look at the footage it's clearly nowhere near the same. They then talk about what they're seeing and completely ignore the fact they've stuffed up the whole test. Other people do that and then WB in post, but this ignores the colour science that might be applied if the footage wasn't shot in RAW. I see basic errors that invalidate tests time and time again. What you saw was probably someone messing up the test like that. There's a reason that R&D departments of huge companies have enormous budgets - doing proper testing where everything is controlled properly is extremely difficult. It's called "science", and it's an entirely different field of study that even professional scientists get wrong much of the time, let alone cinematographers who may well have never even studied science in high-school, let alone understood the fundamentals of experiment design and the scientific method.