Everything posted by kye

-

I did find it odd that the DR only improved when it was downscaled in post rather than in-camera. I can't think of why that would be the case... any ideas?

-

Seems to me that a killer app for a camera like this is that "it can do it all, when you need it" so you'd shoot whatever resolution / frame rate / bitrate that was appropriate for the shot. It's kind of a "just-in-case" camera. So if you were shooting a corporate then you'd shoot 4K, but if you needed a green screen you might shoot 12K just for those shots to be able to get a super-clean key in post. At that point, shooting 4K with it wouldn't require any better a lens than any other camera shooting 4K. Compared to something like the P4K which requires external everything (power, audio, etc) this camera wouldn't be that much more difficult or demanding to use, would it? Apart from the crazy demands of 12K files, what demands do you think this camera has that an R5 or P6K don't have?

-

Once again, I'm talking about innovation and you're talking about small incremental improvements already on offer.

Currently, hybrid cameras offer:

- good enough two channel recording
- no safety channel
- no XLR support

You're talking about:

- better two channel recording
- no safety channel
- XLR support

I'm suggesting:

- 6+ channels of recording
- internal support for safety channels

You're right that most hybrid shooters wouldn't pay more for what you're talking about, but if you put my suggestions in front of most hybrid shooters I'd suggest they'd be interested in them. Being able to have more than two channels (or more than one channel with a safety track) would be something most people would appreciate.

I understand that good practice recording interviews is to have a lav on each person, but use an overhead shotgun and on-camera shotgun as backups in case of issues. These can be useful even if someone says something but rustles their clothes against the mic at the same time.

One of the killer tricks for cinematic footage is to put ambient sounds underneath the music - you can barely notice them consciously but you sure notice if you turn those tracks off. I understand this is big for weddings and events, especially when they're paying thousands of dollars per minute for the final 'cinematic edit'.

Even me, who just makes home videos, would love to be able to record a stereo ambience track along with a shotgun track (with safety). Currently I download free ambience tracks to put under the music / shotgun tracks in the edit, but I'd much prefer to capture my own in realtime.

In terms of "camera makers will do whatever they need to do to stay in business even if that means trickling out features like better audio one feature at a time" - do you really think that's what Olympus did? Do you think that Nikon is doing that? This site and many others are virtually a live-stream of the death of the consumer camera market, which is in sharp contrast to the explosion of demand. I'd suggest that most camera companies are staying in business despite their protective strategies, rather than because of them.

-

I understand your points and agree that most users are "perfectly happy" with the paltry choices they have been provided, however:

- How many of those "perfectly happy" users would like these features if they were available? I'd say almost all of them, but they're happy because the manufacturers have managed their expectations
- Even if they wouldn't complain, people often don't ask for things until they get PR... think of how many people are talking about 6K and 8K but weren't talking about that 5 years ago, but our eyes, the size of TVs, and the type of content hasn't really changed - should we have suggested that 6K and 8K aren't good because people weren't picketing the streets demanding them?
- No-one knew they wanted an iPad... "hi, would you like a laptop computer that can only run one program at once and doesn't have a keyboard?" "um..... no?" ... 425 Million iPads have been sold since 2010.
- Things like 32-bit recorders are getting lots of great press because they solve a problem - they don't need a safety track and don't need careful gain selection. This is a great example of something that people weren't demanding but now it's available it is getting lots of interest. This is the kind of innovation that camera manufacturers aren't doing.

I think your understanding of the camera market is a bit flawed. You've indicated that hybrid cameras are used more for taking photos than video, and that the video-first people use larger cine cameras. As someone who finds camera size to be a significant factor, and therefore uses hybrid cameras only for video, I think you're missing a trick.

Think about ARRI and the Alexa Mini cameras. ARRI thought that they'd only be used for drones and odd mounts, but people realised that the smaller size had all kinds of advantages and so even ARRI, who are very in-touch with their user-base, were surprised that size mattered so much to people. I think this is a common reaction, misunderstanding size.

If you asked your "true video" people who would like a smaller camera with the same features, I think they'd almost all universally want that. So that really rules out the "just use a camera 10x the size" argument.

-

Actually, if it's two cameras with the same lens then there won't be a difference in background compression at all. But I get what you're saying - that had the OP used identical cameras and different lenses then there would have been a difference..

-

Yes, and they didn't use it.

XLR is the connector type for a type of balanced audio connection. Balanced audio is great for long cable runs and for its ability to eliminate interference. It would be impractical to run USB signals over large distances (the USB standard and cabling aren't designed for this). However, if a camera manufacturer provided a USB audio interface on their cameras then it would mean that any audio interface by any company would work with any camera. The cable runs from the mics to the audio interface box would still need to be XLR.

I get that they're trying to protect their cinema lines, but this is also stupid as those don't even have half the features I mentioned.

Customer: "Hi, I really like this tiny camera you're selling, it's very light and fits in my pocket, but can I get more audio inputs please?"

Manufacturers: "If you want more audio inputs then oh boy we have the right camera for you - here is a 10kg cinema camera that doesn't even fit in a backpack"

Customer: "WTF?!?!"

The video industry is run by a bunch of dinosaurs and is ripe for disruption. It's no coincidence that the media consumption of the average person is up through the roof and the bottom line of almost all camera companies is through the floor. They should be asking themselves "how can we give the customer as much of what they want as possible" but instead they're asking "how can we give the customer the least while not losing them as a customer".

The equation is really simple - if someone can make money doing it then you could have done it instead and you could have been making that money instead of them.

This is why I mentioned that the camera companies have the advantage of synced audio. If a camera company makes a recorder and a third party makes a recorder, then for the customer the two products have very different costs:

- buy the camera company audio interface (up-front financial cost)
- buy the audio company audio interface (up-front financial cost, cost of separate batteries, logistics of charging them separately, risk of lost shots due to audio issues, cost of syncing audio - hardware or in-post)

So what is the business case? It's that there is an entire industry making a profit from selling the second, and no-one except you can offer the first, which has large and ongoing advantages for the customer, potentially worth thousands of dollars. Any company that can't understand that deserves to go bankrupt.

-

@Anaconda_ The differences mentioned above will be real, but you can compensate for them in post, and even if you didn't, almost no-one would recognise them anyway.

If you're unsure, get any camera and any fast prime and shoot a test clip wide open and another stopped down a few stops. If the lens is fast then the wide-open shot should be softer. Now pull them onto a timeline, put a grade on them, and play with sharpening the wide-open shot and potentially softening the stopped-down shot (a small radius blur applied at a low opacity is a good trick and simulates the halation from a soft lens without actually blurring the detail).

You can also experiment with using different amounts of diffusion on the cameras. If you do this then likely the lenses won't be identical, in which case, choose which lens goes on which camera according to which pairing makes them look more similar.

If your question is actually a "should I use this approach" question, then the answer is yes.
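For anyone wondering why the "small radius blur at a low opacity" trick softens without killing detail, here is a rough sketch in Python. It is only a toy: a 1-D row of pixel values stands in for the image, a box blur stands in for whatever blur your editor uses, and the 0.2 opacity and the function names are my own assumptions, not anything from a specific NLE.

```python
def box_blur(signal, radius=1):
    """1-D box blur with edge clamping (a stand-in for a small-radius blur)."""
    n = len(signal)
    out = []
    for i in range(n):
        # Clamp indices so the window repeats the edge pixel at the borders.
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def blend(original, processed, opacity):
    """Mix the processed layer over the original at the given opacity (0..1)."""
    return [(1.0 - opacity) * o + opacity * p
            for o, p in zip(original, processed)]


# A hard edge: dark pixels followed by bright pixels.
edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

fully_blurred = box_blur(edge)                   # what a 100% blur would give
softened = blend(edge, fully_blurred, 0.2)       # blur layer at 20% opacity

print(fully_blurred)
print(softened)
```

At 20% opacity the step across the edge only drops from 1.0 to roughly 0.87, whereas the full blur drops it to about 0.33, so the edge stays readable and just picks up a faint glow around it.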

-

Awesome step in the right direction.

To me, camera companies are both archaic and stupid the way they treat audio. Syncing audio in post is a critical function (audio sync issues are completely intolerable) and yet the camera companies have done nothing, literally zero, to try and take market share from the external audio market, despite the fact they have the advantage of being able to sync to the image.

To give an idea about the ridiculous state of in-camera audio, here's what I would like:

- the TRS (stereo) for the mic should be a TRRS and record three channels of audio
- a combination hot-shoe / top handle or "battery grip" style add-on with XLRs and high-end low-noise pre-amps
- every channel should be low-noise pre-amps
- at least one channel should offer a virtual safety channel, where the single audio input is split to two channels - one gets digitised at that level and the other gets a 20dB attenuation and then is digitised

The way it stands, you can't record two mics with one of them having a safety channel, you can't record a stereo signal as well as a mono signal, and on most hybrid cameras the audio is so poor you can't even use a non-powered mic.

Almost every hybrid camera has stereo internal mics and a stereo mic input, and yet they can't record all 4 channels at once, and the internal mics are often useless (recording digital interference or recording the lens focusing mechanism etc).

Audio is significantly more important than image, and yet camera companies basically go "we're not going to use audio pre-amps that cost 80c more - instead we'll make the customer spend hundreds and hundreds of dollars to buy external audio interfaces / recorders and spend time in post syncing the audio on every project". It's like they don't even know what "integration" means.
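To show how little is involved in the virtual safety channel idea from the list above, here is a minimal sketch in Python. It assumes an idealised converter that simply clips at full scale (1.0), and the function names and sample values are made up for illustration. The only real fact in it is the maths: a 20dB attenuation is a factor of 10^(-20/20) = 0.1 in amplitude.

```python
def db_to_gain(db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


def clip(x, full_scale=1.0):
    """Idealised converter: anything beyond full scale is clipped."""
    return max(-full_scale, min(full_scale, x))


def split_with_safety(samples, pad_db=-20.0):
    """Return (main, safety) tracks from one mono input.

    main   - the input digitised at the set level
    safety - the same input attenuated by pad_db, then digitised
    """
    gain = db_to_gain(pad_db)
    main = [clip(s) for s in samples]
    safety = [clip(s * gain) for s in samples]
    return main, safety


# Hypothetical input where someone shouts: the third sample is 4x full scale.
source = [0.1, 0.5, 4.0, -2.0]
main, safety = split_with_safety(source)

print(main)    # the loud samples are clipped on the main track
print(safety)  # the safety track keeps them intact, 20dB lower

# In post, raising the safety track by 20dB recovers the unclipped peak.
recovered = [s / db_to_gain(-20.0) for s in safety]
print(recovered)
```

The cost of the safety track is that its noise floor sits 20dB closer to the signal, which is why it is only swapped in for the moments where the main track clipped.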

-

Nice link - I vaguely remember seeing that some time before. The promo video was quite amusing, my favourite part is the end where the wind is going through her hair and all the trees in the background are motionless lol.

-

I like the feel of MF, but I don't film myself so I don't have to worry about it. I've also noticed OOF shots regularly on all but the highest budget productions. I'm rewatching some favourite 90s shows and I see the odd shot where they missed focus, even shots without any action at all, and the show is in SD! You wouldn't have thought it would have been that hard to focus in SD, but monitoring was probably terrible in comparison to today's options, so there you go. I think it's an anxiety-inducing thing because when AF goes wrong, it can go SERIOUSLY wrong, and that's what people are afraid of.

-

Humans move instinctively, and quite frankly, anyone saying anything worth listening to will probably move too much simply because they're emotionally engaged in what they're saying. I don't think that's a practical option, unless you want to replicate the early days of moving pictures where everyone stood still like they were dead and skewered because that's what they were used to from having their portraits taken with low ASA film and having to stand motionless for 30s.

But you're right that it is an option, here's a revised list:

- Set focus and only shoot boring videos where nothing ever moves
- Get some shots out of focus
- Shoot with deep DoF
- Shoot with an operator
- Shoot with soft 1080p
- Spend a lot of money

-

Seems to me that there's a feedback loop going on here...

1. People want shallow DoF for the "cinematic" look
2. People use AF to record themselves without an operator
3. AF makes mistakes and sometimes these end up in the final edit
4. People watch videos and notice that the focus is off sometimes
5. People who watch those videos and want to make their own content bump up "focus" as a priority
6. Brands market the crap out of AF as a feature
7. People decide that AF is the solution to focus issues
8. Go to 2

So the loop goes around and around and we end up with people knowing that AF is the solution without even knowing what the problem is.

I think the reality is that you have to choose one of the below:

- Get some shots out of focus
- Shoot with deep DoF
- Shoot with an operator
- Shoot with soft 1080p
- Spend a lot of money

-

Lots of gimbals offer remote functionality IIRC. Maybe a combination of dumb tripod, gimbal with remote control, and wireless monitoring / control is the way to go?

-

There is an argument to be made for using faster lenses with these older cameras, based on their lower bitrate codecs. Simply put, the limited bitrate struggles when given too much motion and detail to try and replicate. This is why video of snow-storms, waves, etc often descends into mush - the bitrate doesn't hold up when the frame has lots of detail and motion. Placing the camera on a tripod is a great way to limit the motion - when the background doesn't move then the codec can spend its bitrate on what is moving and give it a higher image quality. It's the same with having shallower Depth of Field, as it makes more of the frame out-of-focus and therefore much easier to describe, so the compression gives those parts less bitrate and is able to put more bitrate into the things that are in focus. It might seem rather strange to pair these older cheaper cameras with super-fast super-expensive lenses, but that's how the math works out.

-

The crop factor looks to be completely ridiculous...

-

The GH5 has so many features that the only thing more shameful than the other manufacturers not matching it is that people bought the more recent cameras that failed to meet the standard it set. It's not the best camera in the world by any stretch, but it screws customers the least, and might even be the camera that cripples the hardware the least. BM's cameras also don't cripple the hardware too much. I imagine that's why both the GH and Pocket camera lines are so popular.

In terms of what people want from a camera, mostly the feature lists show how immature people are as cinematographers and how little they understand film-making, even to the point where they understand it so little that they don't even know that their feature requests make them look like idiots.

One thing that strikes me is that an internal fixed ND is great for getting things in the ballpark, but I'd still need a vND to dial in the exposure properly. For me, and others shooting fast or in less controlled lighting, I don't want to adjust the aperture to fine-tune the exposure and can't dial up and down all the lights to get it right on, so internal NDs on their own don't give enough control.

How do you find that most people deal with this situation? ie, where they want to shoot exteriors at a wider aperture and thus need more ND than a single vND can give? Do they put on a fixed ND and a vND? I have both but find stacking them very cumbersome.

-

What is it that the S-line has that the GH6 won't have? My impression of what the market wants, apart from PDAF, isn't much beyond the C4K 10-bit 422 unlimited of the GH6. In fact, the GH groups/forums that I'm in are so populated with people who just bought a GH5 (and more join every day) that it's hard to get past the newbie questions, and these people are so happy to get a camera with even the GH5 specifications.

-

I'm not surprised that BM hasn't made in-roads to the "full production" side of film-making. There is a lot of hyperbole and irrationality when it comes to cameras and the economics of them. Most importantly, to this conversation anyway, are:

- Once you have enough cast/crew that you have to schedule your shooting days then you're probably paying enough (even just in logistics) that the hire of a high-end camera isn't that much of your budget so you can afford whatever camera you want
- I agree with @barefoot_dp's comments about getting hired - if you're doing this to make money then start with that as the goal and work backwards, which looks like the FS7 or FS5ii are much safer bets

BM makes cameras with great specs for considerably cheaper than the existing incumbents in the market, but this only really matters to people outside the above two situations, such as:

- Amateur film-makers who are shooting micro budget films with people donating their time, so need to own a camera because they can't afford to book camera rentals and then have cast/crew cancel on them last minute when it's too late to get a refund on equipment hire
- People self-funding documentary work where the phone could ring and they need to get in a car and go in order to capture the action as it happens
- Film students or people who are producing their own projects and who will use their camera enough for it to pay for itself over a few projects
- Hobbyists who shoot at a moment's notice or shoot a little each day for many days

I'm a hobbyist, so I care absolutely about what things cost and all the technical and image quality aspects of a camera, but in a business it should be viewed in a completely different way.

-

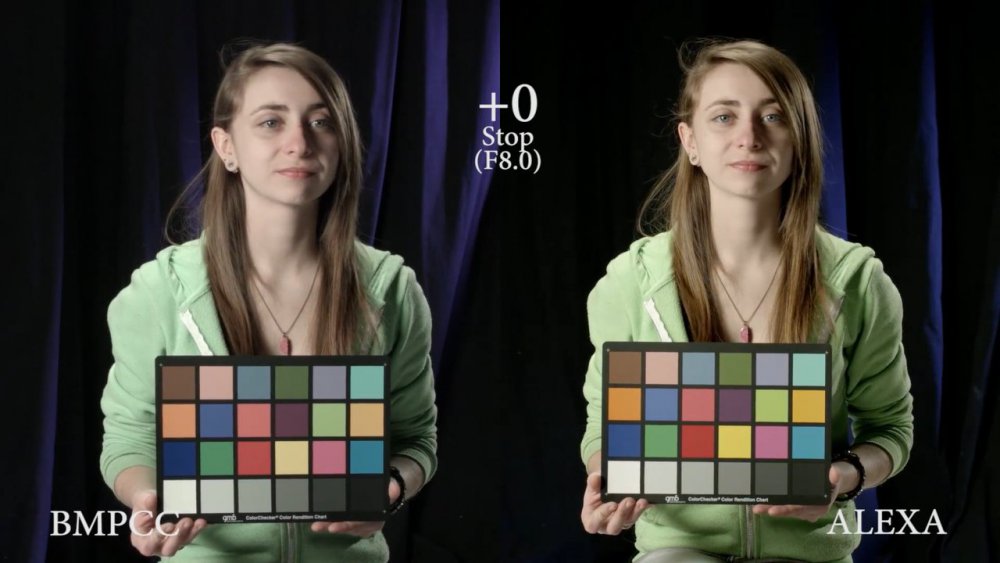

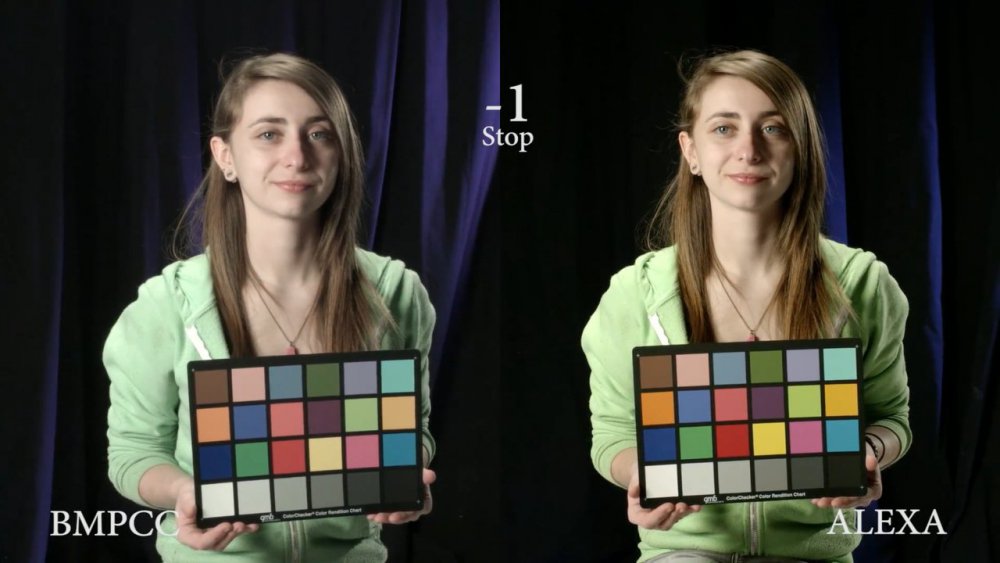

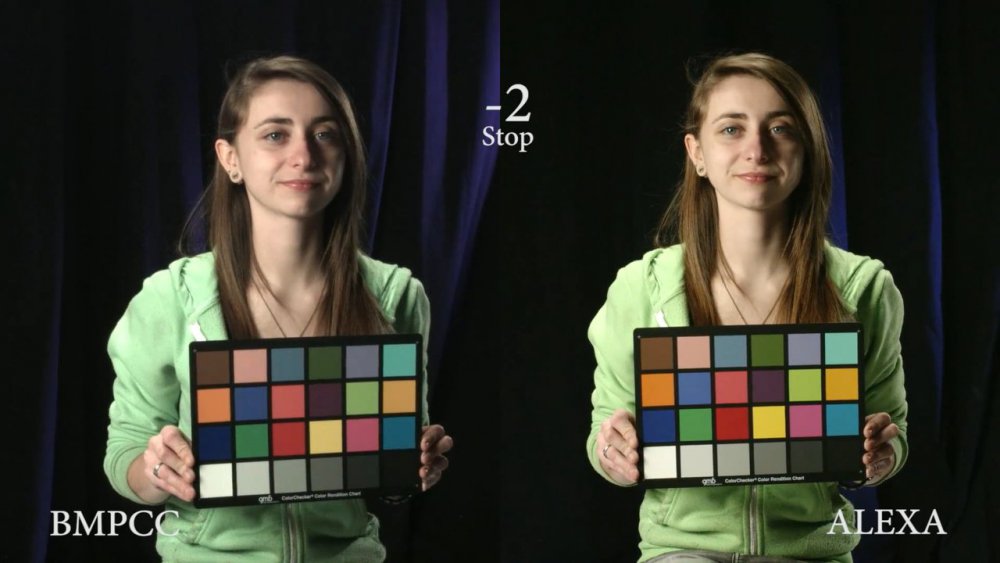

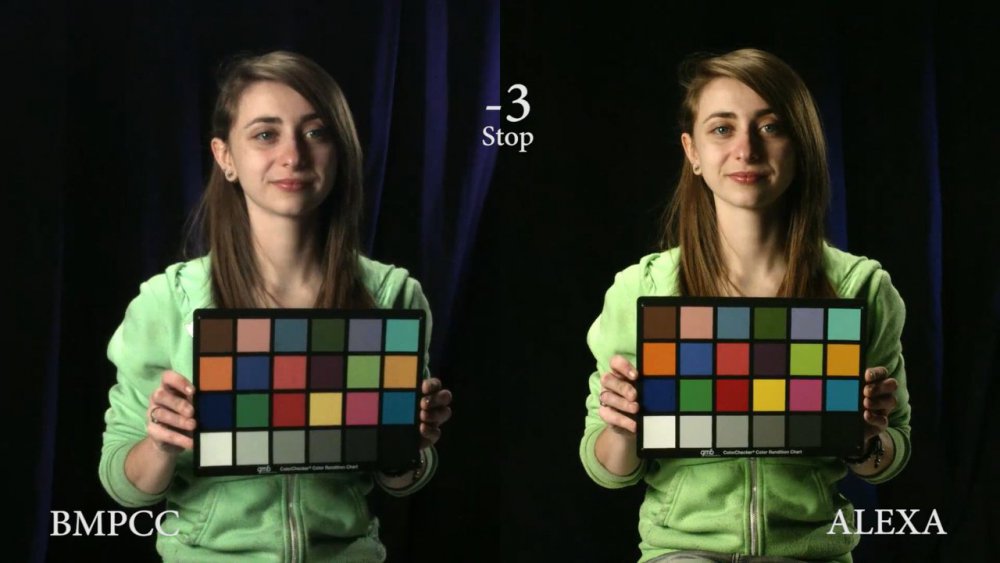

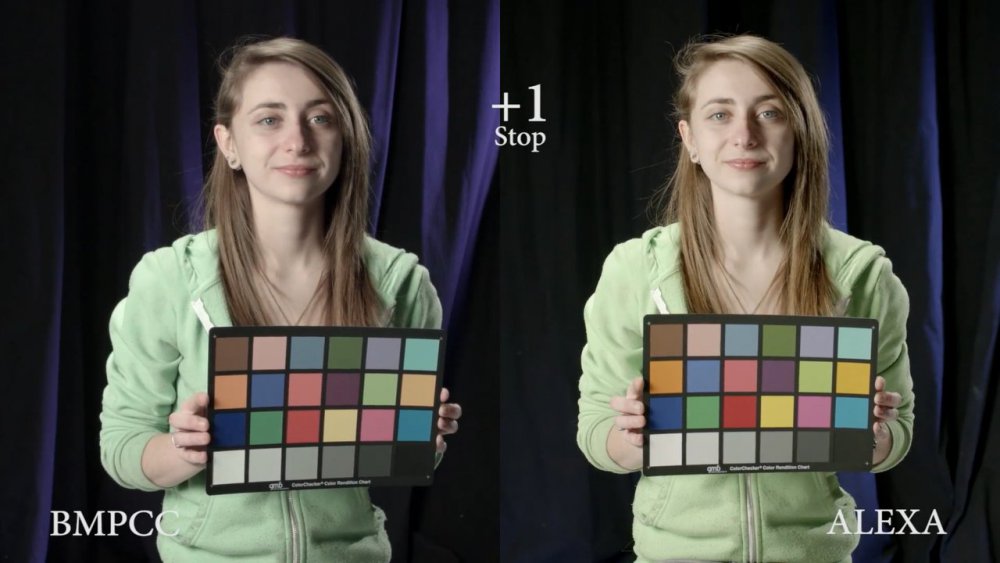

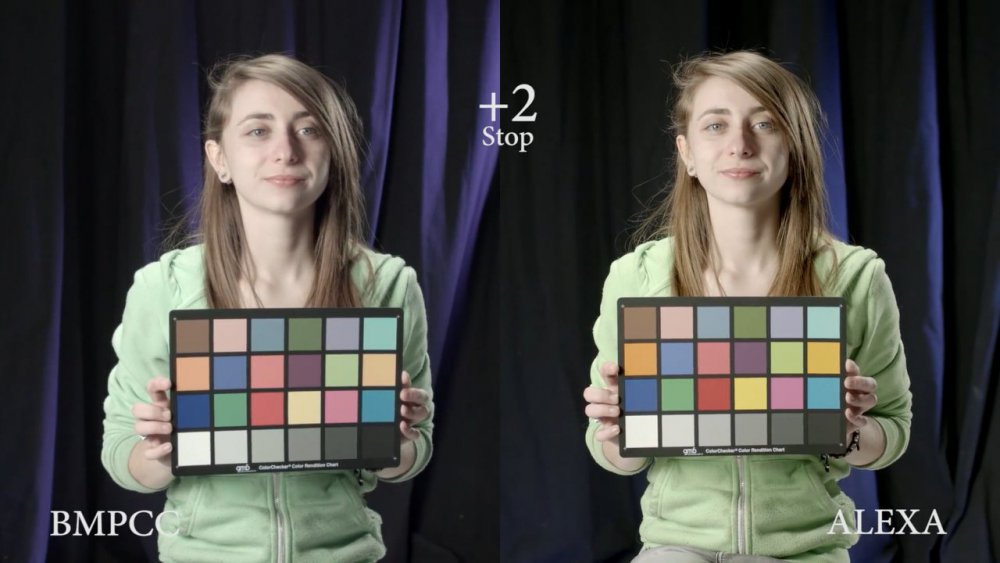

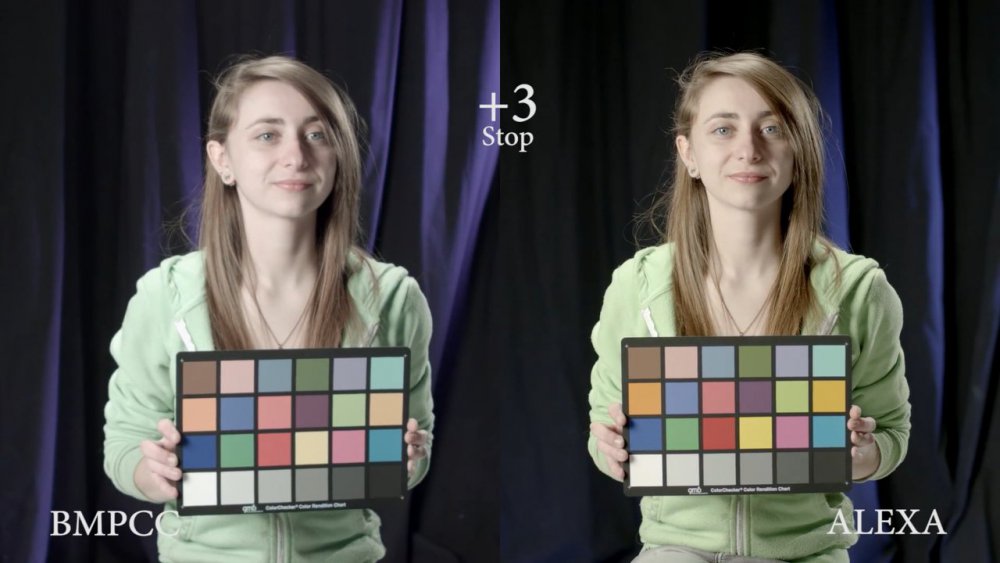

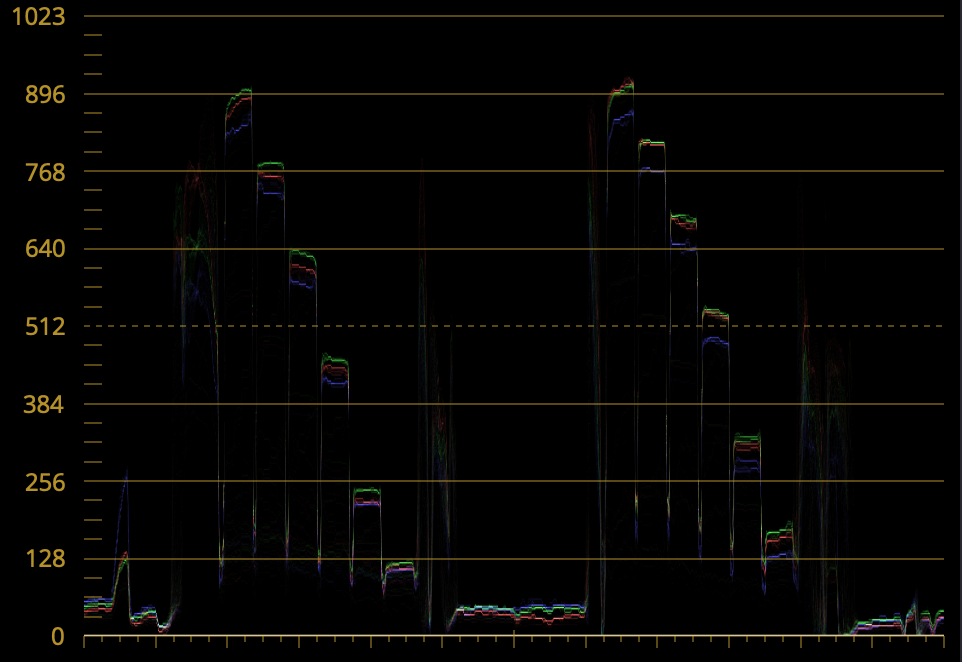

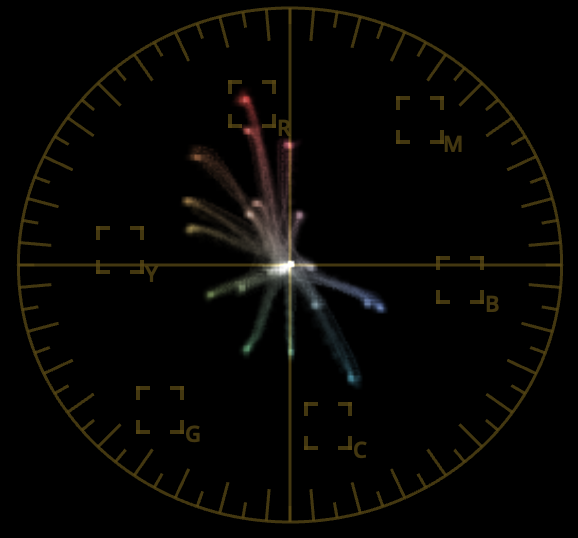

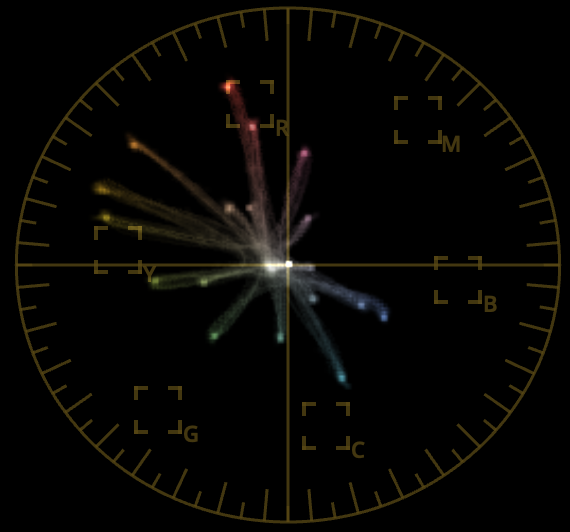

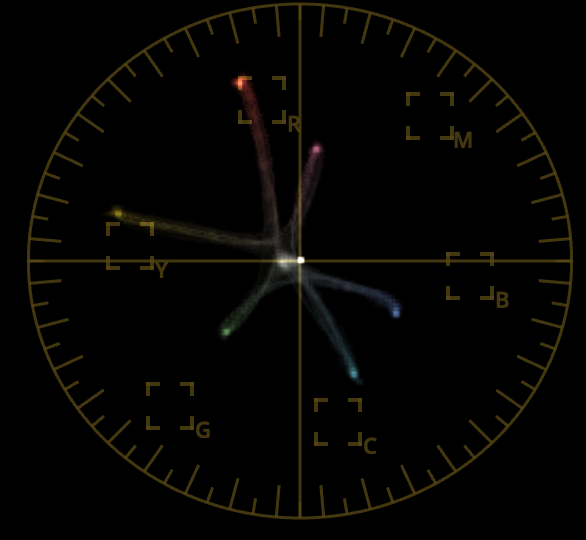

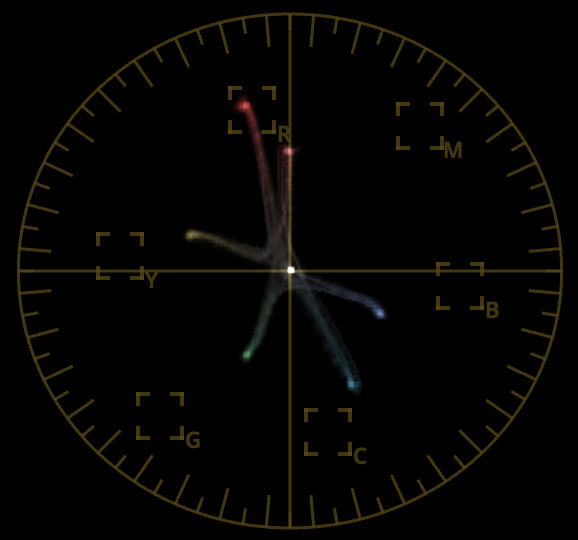

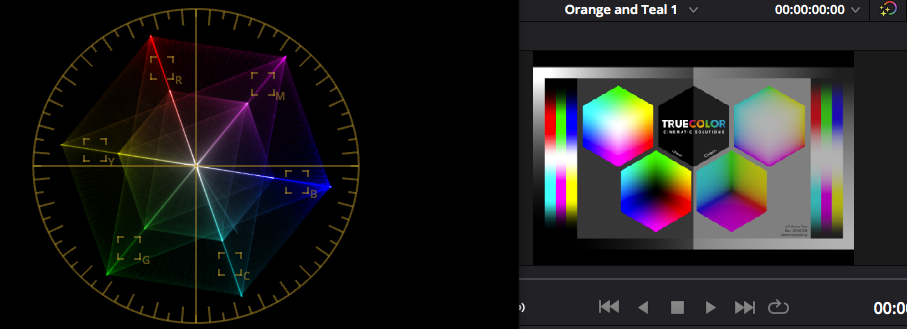

Thanks to @TomTheDP for finding this comparison between the Original BMPCC and Alexa, and thanks to Alain Bourassa for performing the test! As @Matt Kieley says, the BMPCC and BMMCC have the same sensor, so it's a proxy for the BMMCC as well.

Not a bad match, considering that this appears to have no adjustments, and shots have different White Balances:

The WB comparison:

Latitude tests:

What I see from this comparison is:

- Obviously the Alexa has more latitude, no surprises there
- Both comparisons vary quite a bit in tone and levels between the shots (this is an indication to me that the shots were taken quickly and the results not processed too much, so I think it's a good indicator of what things are like when used for real rather than in meticulous lab environments)
- The BMPCC fails at +4 and the Alexa doesn't, but both look reasonable at -3, so the highlights are where the Alexa's increased DR is revealed
- The BMPCC looks very usable across the whole range and this reflects my impressions of shooting with it

Vectorscope comparisons, with colours extended 2X in the scope for ease of viewing..

BMPCC Primaries:

Alexa Primaries:

BMPCC chart:

Alexa chart:

For reference, this is a colour chart I shot with my GH5. The absolute level of saturation can't be compared as I can't remember what I did in post, but check out how different the overall shape is, which is much closer to 'correct':

I suggest this is a very good result, especially considering how old and how cheap this camera is, even after the price has risen since COVID lockdown and nostalgia shopping.

-

You're spending a lot of money on such a decision, and will have to live with it, so I suggest getting a bit organised and taking some time to think about it systematically. My advice is this:

- Make a list of all the differences between the cameras (ie where they differ significantly), including your own personal feelings and impressions as well as specs
- Find the top 5 (or top 10) items that are most important to you, considering things that impact the final image as well as things that impact how easy and pleasant it is to use as well as speed and efficiency (which affect your income)
- Weigh these factors up and see what comes out of that analysis, it may be relatively equal with pros and cons on both sides, or may be more clear cut
- Check how you feel about that result. It's often that we don't know how we truly feel until a decision is made, and then we are either relieved about it, or have a negative reaction as we were wanting it to go the other way.

I find personally that I can think about things over time, reading more info and gathering opinions as food for thought, and that eventually I develop an understanding about what I value and what might be the right direction to go in. But if I do something like the above using pen and paper then it reduces that thought process from weeks to days because it forces you to see clearly.

I'd also suggest that you consider business and personal experience aspects more than tech specs. A camera that makes beautiful images might be nice, but if you hate your life as you slowly go broke, well, the image would have to keep you warm at night for it to be worth that price...

-

I have no idea what they were doing there, but your confusion between "absence of evidence" and "evidence of absence" is a pretty basic logic flaw, and one that pretty much prevents reasonable discussion, especially when I told you where to go to look at the evidence that I have seen. Let's get back to the BMMCC and stop assuming that our own personal lack of experience or understanding somehow translates to the rest of the world.

-

What are the specific things you're looking for in the XT3/4? If you're wanting to match cameras it's always easier to start with similar cameras, or at least from the same brand in order to have a similar style in colour science. The EOS-M series cameras are mirrorless and have an APSC sensor that can take a speed booster to use FF lenses, but also have a port of ML so you can get RAW out of them, plus it will be Canon colour science so should match much better. Of course, if you were after features that the EOS-M lacked then I'd understand why that might not be a good fit.

-

Most of the BTS shots were from the Cinematography Database YT channel, but were embedded deep within his videos. The irony wasn't lost on me at the time, he was saying how everyone thought they weren't being used, but then he was only including the evidence hidden in the middle of other videos. I remember at the time he was doing lots of lighting breakdown videos and so would be sharing stills from whatever BTS footage he could find, often of people being interviewed on set or other little snippets where you could see lighting rigs etc, and he'd go off on a little tangent and mention the XC10 seen in the background of the lighting setup he was breaking down. It went on for 6-12 months IIRC, so to find them you'd have to watch a year's worth of his videos unfortunately.

It was very difficult to tell what they might have been using them for. Considering that they were designed to match exposure settings and colour profiles with other Canon cameras, and had a fixed lens, and internal media, all you had to do was to run a timecode into them and it could literally be an XC10 sitting on a tripod being used for a real shot. There would be no way to know from the rig what it was being used for. An A7S3 sitting on a tripod with the kit lens would typically mean it wasn't being used for anything significant, but if it had an anamorphic lens, matte box, monitor, etc then you'd know it was being used more seriously. Often they were pointed at the set, and sometimes from interesting angles, so maybe they were being used for the odd random angle, or maybe as a webcam to the control room, who knows. Regardless, they were on set and being used for something..

Lots of older cameras are still being sold as refurbished units, but not available new.

Comparing the BMMCC with the F5 I think is appropriate and really signifies the pedigree. It was good at release and is still good now. 
The unique form-factor of the BMMCC means it doesn't really have any direct competition, and typically the other options are GoPros or Zcam Z1, but neither of those are even in remotely the same league, let alone serious competitors.

Studying colour science is only possible in direct comparison of the same scene, or using a colour checker. Otherwise who knows what is the camera and what is the scene. If you have any stills from the S1 that include a colour checker then I'd be curious to see a waveform and vector scope of them.

If I didn't care about size, weight, or cost then I'd be a fan of the P6K too, and probably own a P6K Pro. Of course, I'd be shooting 1080p Prores HQ downsampled from the whole sensor, but that's just me. The FX6 seems like an interesting camera too, and capable of a great image. Of course, almost everything can make great looking images if you're a colourist of Juan's capability, and although I haven't experienced it myself, apparently the Alexa is a complete pain to shoot with, especially as a solo operator, so if you can get 90% of the image from a P6K then you're going to reach for it almost every time I would imagine.

-

I should also say that I shot a few outings (walk on the beach / visit to a park / etc) in Prores LT and even Prores Proxy and what was interesting was that although you can see the compression artefacts, the 'feel' of the footage didn't change, it still felt malleable and cooperative.

I'd be tempted to say that the linear response and overall cooperative colours aren't matched by other cameras because they do something in their colour science to tint or otherwise process the shadows differently to the mids and highlights, which is why when you underexpose or overexpose and then adjust levels in post you don't get the same kind of image as if you shot it with the right exposure. However, I'm not sure if it's true. In theory if you process all colours the same, regardless of their luma value, then you should be able to pull up underexposed shots or pull down overexposed shots and still keep the same colours.

But it's more complicated than that to get a "neutral feeling image", because it doesn't work like that with magenta/green shifts in WB, like I obviously have in the above. If you have a WB control that essentially moves the whole vector scope around then you have to process all hues the same otherwise you'll bend the colour response.

For example, imagine a scene where there is a white wall lit by three lights, one is neutral, one has a CTO gel and is mostly on one side and the other has a CTB gel and is on the other side. If you WB the camera properly, you should get a straight line on the vector scope going from orange to cyan through the middle of the scope. Now imagine we introduce a magenta shift in the WB of the camera. We'll get a straight line on the vector scope, but it won't go through the middle of the scope, it will pass on the magenta side of the centre. Here's the kicker - the BMMCC rotates and desaturates hues on the magenta and green sides of the vector scope, which will bend that line. 
If we then adjust that line in post we won't have to pull it towards green as far because the magenta got desaturated (quite a lot actually) and that will mean we still have magenta in the warm and cooler parts of the image - the middle of the wall will look neutral but the sides will look magenta. If we adjust further towards green the sides of the wall will be warm/cool but not magenta or green, but now the middle of the wall will be green.

I haven't done this test, but I do know that the camera desaturates the magenta and green parts of the vector scope. It's actually quite a long way from neutral, you'd be amazed at how something so distorted could look so nice.

I don't have the BMMCC one handy, but this is what the Kodak 2383 film emulation LUT in Resolve does, which the BMMCC and Alexa would be doing similar things to. Unprocessed vs processed:

Note that hues are rotated, saturation is compressed, blues and greens are desaturated and yellows are quite saturated.

Having said all that, I don't know what the BMMCC is doing because if you shoot something and then adjust the green/magenta WB it doesn't feel like it's falling apart or that colours are going off in that way. Maybe they are but I just haven't noticed, or maybe there is some 'buffer' built into the skin tone areas so that if you get the WB wrong the skin tones will behave predictably when you try and recover them in post. Who knows.
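The white wall thought experiment can be put into numbers with a toy model in Python. To be clear about the assumptions: this is not the BMMCC's actual colour science (which I don't know), the vector scope is simplified to a flat plane with the warm/cool direction along x and magenta along +y, and the 0.4 shift and 0.5 desaturation strength are invented values purely for illustration.

```python
import math


def desaturate_magenta(x, y, strength=0.5):
    """Hypothetical hue-dependent desaturation.

    Saturation is scaled down more the closer the hue is to pure
    magenta (the +y direction); hues 90 degrees away are untouched.
    """
    sat = math.hypot(x, y)
    if sat == 0.0:
        return 0.0, 0.0
    toward_magenta = max(0.0, y / sat)      # 1.0 at pure magenta
    scale = 1.0 - strength * toward_magenta
    return x * scale, y * scale


def wall_points(magenta_shift, n=5):
    """White wall from one gelled side (x=-1) to the other (x=+1),
    with a uniform WB error pushing everything toward magenta."""
    xs = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    return [(x, magenta_shift) for x in xs]


shifted = wall_points(0.4)                      # straight line, off-centre
camera = [desaturate_magenta(x, y) for x, y in shifted]

# Post correction: a uniform shift toward green until the MIDDLE is neutral.
centre_y = camera[len(camera) // 2][1]
corrected = [(x, y - centre_y) for x, y in camera]

for (x, y) in corrected:
    print(f"warm/cool {x:+.2f}   magenta/green {y:+.3f}")
```

In this toy the middle of the wall lands on zero after the correction while both sides are left with a positive (magenta) residual, which is the bent line described above. Pulling further toward green would fix the sides and push the middle green.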

-

The response of the BMPCC / BMMCC is strange in that it isn't strange. You adjust a control and the adjustment gets made and your eyes see what they were expecting to see. That might sound strange, so let me provide some context.

My first attempts at colour grading were trying to match low quality footage (GoPro, iPhone footage, etc) shot in mixed lighting. Even using relatively advanced techniques, and animating various adjustments over the course of a shot, it was hard to get things to match, and footage would break. It was enormously frustrating.

I then bought the XC10, which I now know I didn't use properly, as I used it with some auto-settings and so was seeing its poor ISO performance, as well as sometimes trying to bring up shots that were underexposed etc. This was similar to trying to adjust the bad quality shots, and also got bad results. The 'feeling' is that the footage is very fragile and that any adjustment will break it, and the feeling is also that the sliders in Resolve were fighting with me all the time. You adjust WB and instead of the image getting neutral and good, the colours go from being too blue or too purple to just being awful, so you make localised adjustments and the closer you get to neutral the more it looks like you shot the whole thing at ISO 10,000,000.

Then I bought the GH5 and it was like a revelation. The 10-bit footage could be pushed and pulled and you basically can't break it even if you try ridiculous things. The 'feel' of the footage is the same as watching a colourist grade footage from a RED or Alexa on a YT video - they pull up a slider and the image just does it without falling apart. The footage comes to life when you adjust it, rather than falling apart. 
However, when you're shooting on auto-WB (like I do) and every shot requires manual WB adjustments in post, which occasionally have to be animated too if the lighting changed during the shot, there is still an element of the colours not cooperating, and kind of feeling like they're degrading under your controls. It's night and day better than the XC10 shot outside its sweet spot, but still not ideal.

Then I bought the BMMCC and it was a revelation. I've shot both RAW and prores and really pushed and pulled the footage in post and the problems are just gone. You push and pull it and it just does what you tell it, the footage doesn't break and the colours don't feel like you're fighting them. Adjusting colours feels more like you've been given a spectacular image that has been deliberately degraded in post to look flat and dull and that you're un-doing the degrading treatment. The image gets better as you play with it and get it to where you want it.

I posted this video earlier in the thread and it's useful as a reference point:

I shot that earlier in the year, in Prores HQ with a fixed WB of 5500K and ETTR. The footage was nice, but didn't match shot for shot (my vND has a green tint) and some shots are obviously exposed very differently to each other depending on if I was protecting the sky or not.

One thing you will notice in post is that there is a small knee to roll-off the highlights, but otherwise it's a very linear response across the whole range. So if you shoot a shot 3 stops under the exposure of another shot and adjust them in post to match (either by lowering the brighter one or raising the darker one) then you'll get very similar looking images, taking into account the raised noise floor and clipping of course. It's like an Alexa in that respect, with a broad and very neutral response, unlike other cameras. 
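As a rough illustration of why a linear response makes the "3 stops under, then match in post" case work, here is a small Python sketch. It assumes an idealised sensor working on scene-linear values with a hard clip at 1.0 and no noise or highlight knee, which is a simplification of any real camera, and the function names and scene values are made up.

```python
def expose(scene, stops, clip=1.0):
    """Idealised linear sensor: scale by 2**stops, then clip at full scale."""
    return [min(v * 2.0 ** stops, clip) for v in scene]


def adjust(values, stops):
    """Exposure adjustment in post on linear values (a plain gain)."""
    return [v * 2.0 ** stops for v in values]


# Hypothetical scene-linear values: deep shadow, shadow, mid grey, bright.
scene = [0.01, 0.05, 0.18, 0.6]

normal = expose(scene, 0)
under = expose(scene, -3)        # shot 3 stops under
matched = adjust(under, +3)      # raised 3 stops in post

print(normal)
print(matched)                   # same values as the normal exposure

# Where the two shots do differ: a highlight at 2x full scale.
print(expose([2.0], 0))                  # clipped in the normal exposure
print(adjust(expose([2.0], -3), +3))     # preserved in the underexposed one
```

The darker shot comes back to the same values because the gain in post is the exact inverse of the exposure change. A camera that treats shadows differently to mids and highlights breaks that symmetry, and in practice the lifted shot also brings its noise floor up by the same 3 stops.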
Here are the shots on the timeline with a 709 conversion and not much else:

and here's the final grade:

As you can see, I've played a lot with the colours, and especially the green/magenta balance that is the key to a great sunset. Hopefully that helps to explain what "the footage just does what you tell it" actually means in practice.

I agree. I feel there's a whole other world out there that's invisible due to NDAs and "professional appearances". During my time owning the XC10 I really had this made clear to me, as on the one hand the entire keyboard warrior camera forum world was saying how the XC10 was a disaster and that no-one would ever use it, and simultaneously I was subscribed to a YT channel where the guy was posting a BTS still from some high-end production or other where an XC10 was visible on set in the background. Of course, if you google "XC10 <movie name>" then there are no hits, so it seems like it doesn't exist, but the pictures are unmistakable.

So a camera can have huge press because it's YT camera reviewer flavour of the month, and yet a consumer camera could be in-use daily on high-budget sets across the world and you'd never hear a peep about it. The amateurs make all the noise and essentially the pros move silently. I've heard people say that this is false and that there's heaps of info about what happens on-set available online, but when you see the XC10 in the BTS pics of a half-a-dozen feature films and not a single google hit across any of them, you know that there's an entire world of professional use that's simply invisible.

I suspect the BMMCC is firmly in this camp. After all, name another camera that was released in 2012 and is still available as a current product by a major manufacturer...