
Everything posted by kye

-

.....and is FF and 8K. What price would we pay for such a thing? Easy - history teaches us this lesson time after time... 100Mbps 8-bit codec with 11 stops of DR.

-

Makes sense. Let's hope they decide to focus on image instead of specs...

-

I think @scotchtape nailed it - it's about AF.

Step 1: work out if you want AF or not, then buy the Canon if you do and the P6KPro if you don't.

Step 2: set up some test scenes with a colour checker (or some brightly coloured objects), shoot them with both cameras, and then work on a preset colour grade that will match the colours in post.

Step 3: go shoot stuff.

-

I got that from watching - the idea that there's a normal public street and a secret garden right next to each other was a cool idea to start the teaser with and pulls you in.

-

The 14mm really surprised me from that shoot actually. The edit you guys saw was only a subset of the larger video I shot, which included lots of shots of my wife who was there with me (she's internet shy), and the lens looks great for people. Specifically, at the ~40mm equivalent focal length, the rendering of detail at a normal subject distance with the lens wide-open is quite flattering, it's borderline as long a focal length as you'd like to use hand-held in a small rig without OIS, and the DoF was shallow enough (or even more so) to create some subject separation.

My follow-up to that video was to do more tests with the 14-42mm f3.5-5.6 kit lens to see what the DoF and subject separation is like on that slightly slower lens, and I also bought a 12-35/2.8 to try that too.

I think that using these lenses (any of the ones I mentioned above) wide-open is probably a good balance: a softer rendering to avoid moire and not have too digital a look, DoF shallow enough to separate your subject, but not so shallow that you can't manually focus relatively easily, especially on a smaller screen.

I can imagine that I might end up with these setups:

P2K with 7.5/2 and 14/2.5 as a truly "pocketable" setup

M2K with 7.5/2 and 12-35/2.8 as a larger rig for slow-motion

P2K / M2K with my 17.5/0.95 as a low-light solution

-

As @fuzzynormal suggested, proxies are the best approach. In terms of habits dying hard, which I understand, it sounds like you're at one of the rare points where you're going to change your habits anyway, so I'd suggest considering what will be the best investment in the long-term.

Sure, proxies are a different way of working, but:

If you render to a Digital Intermediate then you're adding an extra encoding step unnecessarily and taking the hit to the image quality - I'd even question if shooting 6K with an extra conversion lowers the quality to 4K levels... a test I'd suggest you try before committing to a DI workflow.

When you archive your project you will either have to delete the DI (meaning you can't load your project and hit play), store both (more than doubling the storage requirements because h.265 is much more efficient than Prores for the same quality), or delete the originals, in which case you'll both increase the size of the media you store and be stuck forever with a degradation in quality.

When you work with proxies you can just delete them at the end (if you want to) and the project will still be intact, or if you don't delete them they won't take up much space as they'll be much smaller.

You can edit on virtually any computer you like, as you can make your proxies whatever resolution you want.

Yes, there are some operations best done on the full-resolution files (like stabilisation and adjusting image texture such as NR and halation / grain effects etc) but these typically don't have to be done real-time and they can be done at the end of a project during the mastering phase. The only obstacles I've seen for these are 1) if you are colour grading live with a client in the room, or 2) if your computer is so under-powered that things like the new AI functions just won't work regardless of how long you leave them to process, but these may not apply to your situation.

The only thing worse than having to spend $4K on a new camera is having to spend an additional $4K on a new machine that can handle the extra K's in the image.... oh, and just wait for h.266 - it's coming, and it will make editing h.265 seem like editing, well, h.264 in comparison!
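For anyone who wants to see what making a proxy actually involves, here is a minimal Python sketch that builds an ffmpeg command for a small h.264 proxy. It assumes ffmpeg is installed and on your PATH. The helper name `proxy_command`, the 720-pixel height, the CRF value and the example file names are all illustrative assumptions rather than anything from a particular NLE, and most editors can generate proxies internally anyway.

```python
import subprocess
from pathlib import Path


def proxy_command(source, proxy_dir, height=720, crf=23):
    """Build an ffmpeg command that writes a small h.264 proxy of `source`.

    Hypothetical helper: the name, default height and CRF are assumptions.
    """
    source = Path(source)
    target = Path(proxy_dir) / (source.stem + "_proxy.mp4")
    return [
        "ffmpeg",
        "-i", str(source),
        # Scale to the requested height; -2 keeps the aspect ratio
        # and rounds the width to an even number.
        "-vf", f"scale=-2:{height}",
        "-c:v", "libx264",
        "-preset", "fast",
        "-crf", str(crf),
        "-c:a", "aac",
        str(target),
    ]


if __name__ == "__main__":
    cmd = proxy_command("A001_clip.mov", "proxies")
    print(" ".join(cmd))
    # Uncomment to actually run the encode (requires ffmpeg and an
    # existing "proxies" folder):
    # subprocess.run(cmd, check=True)
```

Because the proxy is written to its own folder with a predictable name, it can be deleted at the end of the project without touching the originals.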

-

My question was deliberately ridiculous. I asked it that way because your answer is obvious - that you want a camera more than you want the crypto you have that totals that value. This is the basis for any exchange economy as division-of-labour and specialisation is the foundation of civilisation.

The fact you own crypto and know it's worth money sort of implies that you're aware that you can sell some for fiat currency and turn that into a camera via a normal store, but the way you worded your question indicates you don't want to go via a fiat currency, and also that you kind of wanted to hide that aspect of it, or at least not lead with it. This has certain implications, most significantly:

Your question is actually "how can I buy a camera with crypto while bypassing the whole fiat currency system", and begs the question of why you are so interested in bypassing it.

I own some crypto myself, not a lot of it, but enough to be familiar with the waters, so to speak, and it's a rather strange and involved question, thus my deliberately ridiculous answer.

To actually go back to your question, I heard PayPal was implementing a feature that allowed you to pay a vendor in fiat currency but to pay PayPal in crypto, so if that's been rolled out already then you can use that mechanism to buy almost anything from almost anyone.

-

Why trade an appreciating asset for a depreciating one?

-

They can always implement a haptic feedback engine that can emulate it, assuming there is enough demand. Not sure if you've used a MacBook Pro touchpad or an iPhone home button, but they're pressure sensitive and don't click, however the illusion that they do click is (at least to me) completely flawless. I didn't realise they weren't physical buttons until I tried them when the devices were powered off, and I literally got a small shock because I genuinely thought they were physically clicking. Even now if I happen to press when the device is off I am still surprised.

...who are fuelled by the continuous appetite for more resolution. That's the thing about capitalism, it's a feedback loop from the consumer to the manufacturers, and then back again through marketing. If we all just stopped voting for huge-resolution cameras with our wallets, they'd eventually stop selling them. Do most people do that? No.

-

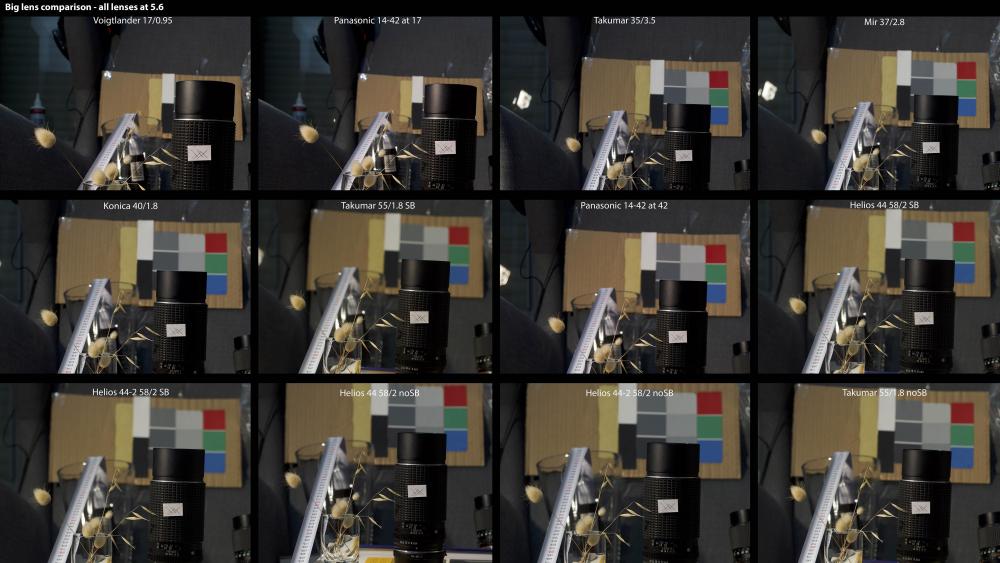

Music Video with Bmpcc4K in CNDG and Bmmcc with old 16mm Russian Lenses

kye replied to alvaromedina's topic in Cameras

Great stuff! You are taking on a mammoth challenge by trying to match the Fairchild sensor from the Micro with the Sony sensor of the P4K. I would suggest the differences are in colour, texture, and resolution.

To match the colour, I'd suggest building a test scene with a number of colour swatches and lighting it with a high-CRI light, then shooting the same scene with both cameras. After that you can adjust one camera to match the other. I'd suggest matching the WB first, the levels second, and then paying attention to the hue, saturation, and luminance of the colours third. That should get you into the ballpark.

In terms of resolution, I'd suggest filming a test scene with some high-contrast edges and fine detail. One thing I have used in the past is a few grass seeds that have been backlit as they tend to have little hairs and other textures, like this:

Once you have that scene, shoot with both cameras, match the framing between them, and be sure to stop the lens down (I'd suggest 4 stops from wide-open) to get the sharpest image hitting the sensor. Then, in post, match the cameras by either adding sharpening to the Micro so it perceptually matches as closely as possible to the P4K, or adding a small-radius blur to the P4K, potentially adding the blur at a lower opacity than 100% (experiment with it).

Lastly, we deal with texture. I'd suggest doing the above matching first, then leaving this one last. Texture is a funny thing, and is very subjective, but I'd suggest experimenting with some combination of adding NR and film grain. Maybe you don't need any NR but maybe you will.

Obviously matching the Micro to the P4K will be very different to matching the P4K to the Micro, but it's probably worthwhile doing both so you have these things worked out later on when you want to use these on real projects.

It's also worth noting that you won't be able to match these exactly, and that it won't matter. Firstly, you won't be shooting the exact same angle with both cameras (and I'd suggest avoiding that if you can), secondly, on a real project you would probably match the cameras and then put a look over the top, which will partially obscure any differences that you didn't manage to match, and thirdly, you don't have to match cameras at all, you only have to match them so that the differences don't draw too much attention to themselves and break the viewer out of the experience of the content of your edit.

Good luck!!

-

I shot prores, which does a good job of minimising the aliasing, and also the 14mm isn't tack sharp wide open. The setup for that video was this:

So the image pipeline was:

vND -> IR/UV Cut -> 14mm at f2.5 -> Micro -> Prores

A number of people online say that there is a special synergy between the P2K / M2K and the 14/2.5 lens, so it could be that. Certainly as a 40mm equivalent prime it's a nice focal length, although it's at the longer end of what you'd want to hand-hold unless you had a bigger rig.

I'm also looking at options with OIS like my 14-42/3.5-5.6 kit lens, the 14-42/3.5-5.6 PZ pancake lens, and also perhaps the 12-35/2.8 so will see how I go. It would be great if there was a wider zoom with OIS, but the crop factor is working against us here.

I have bought a Black Promist filter since I shot this as well, so I'm keen to try that out on a real location too.

-

This is my concern too. Hopefully I have dissuaded them from your arguments sufficiently.

Once again, you're deliberately oversimplifying this in order to try and make my arguments sound silly, because you can't argue against their logic in a calm and rational way.

This is how a camera sensor works:

Look at the pattern of the red photosites that is captured by the camera. It is missing every second row and every second column. In order to work out a red value for every pixel in the output, it must interpolate the values from what it did measure. Just like upscaling an image.

This is typical of the arguments you are making in this thread. It is technically correct and sounds like you might be raising valid objections. Unfortunately this is just technical nit-picking and shows that you are missing the point, either deliberately or naively.

My point has been, ever since I raised it, that camera sensors have significant interpolation. This is a problem for your argument as your entire argument is that Yedlin's test is invalid because the pixels blended with each other (as you showed in your frame-grabs) and you claimed this was due to interpolation / scaling / or some other resolution issue. Your criticism then is that a resolution test cannot involve interpolation, and the problem with that is that almost every camera has interpolation built-in fundamentally.

I mentioned Bayer sensors, and you said the above. I showed above that Bayer sensors have fewer red photosites than output pixels, therefore they must interpolate, but what about the Fuji X-T3? The Fuji cameras have an X-Trans sensor, which looks like this:

Notice something about that? Correct - it too doesn't have a red value for every pixel, or a green value for every pixel, or a blue value for every pixel. Guess what that means - interpolation!

"Scanning back" you say. Well, that's a super-broad term, but it's a pretty niche market. I'm not watching that much TV shot with a medium format camera. If you are, well, good for you.

And finally, Foveon. Now we get to a camera that doesn't need to interpolate because it measures all three colours for each pixel:

So I made a criticism about interpolation by mentioning Bayer sensors, and you criticised my argument by picking up on the word "debayer" but included the X-Trans sensor in your answer, when the X-Trans sensor has the same interpolation that you are saying can't be used! You are not arguing against my argument, you are just cherry picking little things to try and argue against in isolation.

A friend PM'd me to say that he thought you were just arguing for its own sake, and I don't know if that's true or not, but you're not making sensible counter-arguments to what I'm actually saying.

So, you criticise Yedlin for his use of interpolation: and yet you previously said that "We can determine the camera resolution merely from output of the ADC or from the camera files." You're just nit-picking on tiny details but your argument contains all manner of contradictions.
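To make the interpolation point concrete, here is a minimal Python sketch of an RGGB Bayer layout. It counts how many photosites actually measure red, then fills in the missing red values by averaging the nearest measured ones. The function names and the simple 3x3 averaging are my own assumptions for illustration; real debayering algorithms are considerably more sophisticated.

```python
def bayer_colour(row, col):
    """Colour measured at a photosite in an RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def red_fraction(height, width):
    """Fraction of photosites that measure red."""
    red = sum(
        1
        for r in range(height)
        for c in range(width)
        if bayer_colour(r, c) == "R"
    )
    return red / (height * width)


def interpolate_red(mosaic):
    """Estimate red at every pixel from the measured red sites in a 3x3 window.

    `mosaic` is a list of rows of raw values, one value per photosite.
    This plain averaging is an illustrative assumption, not a real
    camera's algorithm.
    """
    height, width = len(mosaic), len(mosaic[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if bayer_colour(r, c) == "R":
                out[r][c] = float(mosaic[r][c])  # measured, keep as-is
                continue
            neighbours = [
                mosaic[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, height))
                for cc in range(max(c - 1, 0), min(c + 2, width))
                if bayer_colour(rr, cc) == "R"
            ]
            out[r][c] = sum(neighbours) / len(neighbours)  # interpolated guess
    return out
```

`red_fraction` comes out at 0.25 for any even-sized sensor, so three out of every four red values in the output are guesses made from neighbouring photosites.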

-

I think the answer is simple, and comes in two parts:

They aren't film-makers. The professional equivalent of what YouTubers do would be ultra-low-budget reality TV. Hardly the basis for understanding a cinema camera.

They don't understand the needs of others, or that others even have different needs. If someone criticises a cinema camera for "weaknesses" that ARRI and RED also share (eg, lack of IBIS) then it shows how much they really don't know.

I recently bought a P2K, but was considering the FP and waited for the FP-L to come out before making my decision. The Sigmas aren't for me for a number of reasons, but I don't think they're bad cameras, just that they're not a good fit for me.

-

Thanks! I keep banging on about how the cameras used on pro sets are invisible because they often don't get talked about, whereas YouTubers talk about their cameras ad nauseam. Well, do these look familiar? They switched from GoPros to the 4K BMMCC Studio camera, and piped them into these: http://www.content-technology.com/postproduction/c-mount-industries-rides-on-aja-ki-pro-ultras-for-carpool-karaoke/ There's a reason that the BMMCC is still a current model camera.

-

@tupp Your obsession with pixels not impacting the pixels adjacent to them means that your arguments don't apply in the real world. I don't understand why you keep pursuing this "it's not perfect so it can't be valid" line of logic. Bayer sensors require debayering, which is a process involving interpolation. I have provided links to articles explaining this but you seem to ignore this inconvenient truth. Even if we ignore the industry trend of capturing images at a different resolution than they are delivered in, it still means that your mythical image pipeline that doesn't involve any interpolation is limited to cameras that capture such a tiny fraction of the images we watch they may as well not exist. Your criticisms also don't allow for compression, which is applied to basically every image that is consumed. This is a fundamental issue because compression blurs edges and obscures detail significantly, making many differences that might be visible in the mastering suite invisible in the final delivered stream. Once again, this means your comparison is limited to some utopian fairy-land that doesn't apply here in our dimension. I don't understand why you persist. Even if you were right about everything else (which you're not), you would only be proving the statement "4K is perceptually different to 2K when you shoot with cameras that no-one shoots with, match resolutions through the whole pipeline, and deliver in a format no-one delivers in". Obviously, such a statement would be pointless.

-

Those cookies look mighty tasty!

-

Yes and no - if the edge is at an angle then you need an infinite resolution to avoid having a grey-scale pixel in between the two flat areas of colour. VFX requires softening (blurring) in order to not appear aliased, or must be rendered where a pixel is taken to have the value of light within an arc (which might partially hit an object but also partially miss it) rather than at a single line (which is either hit or miss because it's infinitely thin).

Tupp disagrees with us on this point, but yes.

I haven't read much about debayering, but it makes sense if the interpolation is a higher-order function than linear from the immediate pixels.

There is a cultural element, but Yedlin's test was strictly about perceptibility, not preference.

When he upscales 2K->4K he can't reproduce the high frequency details because they're gone. It's like if I described the beach as being low near the water and higher up further away from the water, you couldn't take my information and recreate the curve of the beach from that, let alone the ripples in the sand from the wind or the texture of the footprints in it - all that information is gone and all I have given you is a straight line.

In digital systems there's a thing called the Nyquist frequency, which in digital audio terms says that the highest frequency that can be reproduced is half the sampling rate, ie, the highest frequency is when the data goes "100, 0, 100, 0, 100, 0". In the image the effect is that if I say that the 2K pixels are "100, 0, 100" then that translates to a 4K image with "100, ?, 0, ?, 100, ?", so the best we can do is simply guess what those pixel values were, based on the surrounding pixels, but we can't know if one of those edges was sharp or not. The right 4K image might be "100, 50, 0, 0, 100, 100" but how would we know? The information that one of those edges was soft and one was sharp is lost forever.
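The pixel example above can be played out in a few lines of Python. This is only a sketch under simple assumptions: the downsample keeps every second sample with no filtering (a real scaler would low-pass first), the upsample is plain linear interpolation, and the function names are made up for illustration.

```python
def downsample_2x(samples):
    """Keep every second sample (point sampling, no filtering)."""
    return samples[::2]


def upsample_2x_linear(samples):
    """Double the sample count, guessing each missing value from its neighbours."""
    out = []
    for i, value in enumerate(samples):
        # At the end of the row there is no right-hand neighbour, so repeat.
        nxt = samples[i + 1] if i + 1 < len(samples) else value
        out.append(value)
        out.append((value + nxt) / 2)
    return out


soft_then_sharp = [100, 50, 0, 0, 100, 100]    # the "right" 4K row from above
sharp_then_sharp = [100, 100, 0, 0, 100, 100]  # a different 4K row

low_a = downsample_2x(soft_then_sharp)
low_b = downsample_2x(sharp_then_sharp)
print(low_a, low_b)               # two different 4K rows, one 2K row
print(upsample_2x_linear(low_a))  # the upscaler's best guess
```

Both 4K rows collapse to the same 2K row, and the linear guess coming back up matches neither of them, which is the "lost forever" part.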

-

I think perhaps the largest difference between video and video games is that video games (and any computer generated imagery in general) can have a 100% white pixel right next to a 100% black pixel, whereas cameras don't seem to do that. In Yedlin's demo he zooms into the edge of the blind and shows the 6K straight from the Alexa with no scaling and the "edge" is actually a gradient that takes maybe 4-6 pixels to go from dark to light. I don't know if this is to do with lens limitations, sensor diffraction, OLPFs, or debayering algorithms, but it seems to match everything I've ever shot.

It's not a difficult test to do.. take any camera that can shoot RAW and put it on a tripod, set it to base ISO and aperture priority, take it outside, open the aperture right up, focus it on a hard edge that has some contrast, stop down by 4 stops, take the shot, then look at it in an image editor and zoom way in to see what the edge looks like.

In terms of Yedlin's demo, I think the question is if having resolution over 2K is perceptible under normal viewing conditions. When he zooms in a lot it's quite obvious that there is more resolution there, but the question isn't if more resolution has more resolution, because we know that of course it does, and VFX people want as much of it as possible, but can audiences see the difference? I'm happy from the demo to say that it's not perceptually different.

Of course, it's also easy to run Yedlin's test yourself at home as well. Simply take a 4K video clip and export it at native resolution and at 2K, you can export it lossless if you like. Then bring both versions in and put them onto a 4K timeline, and then just watch it on a 4K display, you can even cut them up and put them side-by-side or do whatever you want. If you don't have a camera that can shoot RAW then take a timelapse with RAW still images and use that as the source video, or download some sample footage from RED, which has footage up to 8K RAW available to download free from their website.
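If you'd rather put a number on the edge test than eyeball it, here is a rough Python sketch that counts how many pixels an edge takes to get from dark to light. It assumes you have already read one row of pixel values across the edge out of your image editor, and that the row runs dark-to-light without much noise. The function name and the 10% / 90% thresholds are my own assumptions, not a standard.

```python
def edge_width(row, low=0.1, high=0.9):
    """Count the pixels an edge takes to rise from `low` to `high` of its range.

    `row` is a list of pixel values running across a dark-to-light edge.
    """
    darkest, brightest = min(row), max(row)
    span = brightest - darkest
    if span == 0:
        return 0  # flat row, no edge to measure
    normalised = [(v - darkest) / span for v in row]
    # Last pixel still at or below the low threshold...
    start = max(i for i, v in enumerate(normalised) if v <= low)
    # ...and first pixel at or above the high threshold.
    end = min(i for i, v in enumerate(normalised) if v >= high)
    return max(end - start, 0)


cg_edge = [0, 0, 0, 100, 100, 100]             # rendered: black next to white
camera_edge = [5, 6, 20, 45, 70, 90, 96, 97]   # made-up values for a soft edge

print(edge_width(cg_edge), edge_width(camera_edge))
```

The `camera_edge` values are invented purely to show the shape of the calculation, so substitute a row sampled from your own shot.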

-

I've been trying to pull this apart for a long time, or maybe it just seems like a long time, it's hard to tell!

I get the sense that the difference is a culmination of all the little things, and that the Alexa does all of the things you mention very well, and most cameras don't do these things nearly as well. Further to that, each of us has different sensory sensitivities, so while one person might be very bothered by rolling shutter (for example) the next person may not mind so much, etc.

Also, the "lesser" cameras, like the GH5, will do some things more poorly than others, for example the 400Mbps ALL-I 10-bit 4K mode isn't as good as an Alexa, but it's significantly better than something like the A7S2 with its 100Mbps 8-bit 4K mode. And finally, the work you are doing will require different aspects, like dynamic range being more important in uncontrolled lighting and rolling shutter being more important in high-movement scenes and (especially) when the camera is moving a lot.

So in this sense, camera choice is partly a matter of finding the best overlap between a camera's strengths, your own sensitivities / preferences, and the type of work you are doing.

Furthermore, I would imagine that some cameras exceed the Alexa's capability, at least in some aspects. These examples are rarer, and it depends on which Alexa you are talking about, but if we take the original Alexa Classic as the reference, then the new Alexa 65 exceeds it in many ways. I believe RED has models that may meet or exceed the Alexa line in terms of Dynamic Range (it's hard to get reliable measures of this so I won't state that as fact) and I'm sure there are other examples.

There are other considerations beyond image though, considering that the subject of the image is critical, and I couldn't do my work at all if I had an Alexa, firstly because I couldn't carry the thing for long enough, and secondly because I'd get kicked out of the various places that I like to film, which includes out in public and also in private places like museums, temples, etc which reject "professional" shooting, which they judge by how the camera looks.

Everything is a compromise, and the journey is long and deep. I've explored many aspects here on the forums though, and I'm happy to discuss whichever aspects you care to discuss as I enjoy the discussions and learning more. Many of the threads I started seem to fall off, but often I have progressed further than the contributions I have made in the thread, often because I came back to it after a break, or because I've developed a sense of something but can't prove it, so if you're curious about anything then just ask 🙂

-

A 4K camera has one third as many photosites as a 4K monitor has emitters. This means that debayering involves interpolation, and means your proposal involves significant interpolation, and therefore fails your own criteria.
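The arithmetic behind that, as a quick Python sketch. It assumes UHD dimensions (3840x2160), a Bayer sensor with one single-colour photosite per pixel position, and a display with separate red, green and blue emitters for every pixel. Displays with other subpixel layouts would give a different ratio, and the function names are just for illustration.

```python
def photosite_count(width, height):
    """A Bayer sensor has one single-colour photosite per pixel position."""
    return width * height


def emitter_count(width, height, subpixels_per_pixel=3):
    """An RGB display has separate red, green and blue emitters per pixel."""
    return width * height * subpixels_per_pixel


w, h = 3840, 2160  # UHD "4K", assumed for illustration
sites = photosite_count(w, h)
emitters = emitter_count(w, h)
print(sites, emitters, sites / emitters)
```

That works out to 8,294,400 measured values feeding 24,883,200 displayed ones, so two thirds of what the monitor shows has to be interpolated.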

-

Based on that, there is no exact way to test resolutions that will apply to any situation beyond the specific combination being tested. So, let's take that as true, and do a non-exact way based upon a typical image pipeline. I propose comparing the image from a 6K cinema camera being put onto a 4K timeline vs a 2K timeline, and to be sure, let's zoom in to 200% so we can see the differences a little more than they would normally be visible. This is what Yedlin did.

A single wrong point invalidates an analysis if, and only if, the subsequent analysis is dependent on that point. Yedlin's was not.

No he didn't. You have failed to understand his first point, and then subsequently to that, you have failed to realise that his first point isn't actually critical to the remainder of his analysis.

You have stated that there is scaling because the blown up versions didn't match, which isn't valid because:

different image rendering algorithms can cause them to not match, therefore you don't actually know for sure that they don't match (it could simply be that your viewer didn't match but his did)

you assumed that there was scaling involved because the grey box had impacted pixels surrounding it, which could also have been caused by compression, so this doesn't prove scaling

and actually neither of those matter anyway, because even if there was scaling, basically every image we see has been scaled and compressed

Your "problem" is that you misinterpreted a point, but even if you hadn't misinterpreted it, what you saw could have been caused by other factors, and even if it wasn't, those factors aren't relevant to the end result anyway.

-

That could certainly be true. Debayering involves interpolation (like rescaling does) so the different algorithms can create significantly different amounts of edge detail, which with high-bitrate codecs would be quite noticeable even if the radius of the differences was under 2 pixels.

-

Upgrade paths are always about what you want and value in an image. IIRC you really value the 14-bit RAW (even over the 12-bit RAW) so I'd imagine that any upgrade would have to also shoot RAW? A friend of mine shoots with 5D+ML and apart from going to a full cinema camera, you're going to find that the alternatives all have some problem or other that would be a downgrade from the 5D. The 5D+ML combo isn't perfect by any means, but other cameras haven't necessarily even caught up yet, let alone being improvements - it's still give and take in comparison.

-

GH5 does this via custom modes. I don't use it for stills really, but I have custom modes for video that have different exposure modes (ie, I have custom modes that are Aperture priority and ones that are Manual mode).