Everything posted by kye

-

Yes, full-sized HDMI port. It's funny - I have four cameras and (IIRC) all / most of them have full-sized HDMI connectors: Canon 700D, Canon XC10, GH5, and BMMCC. Of course, this is pure coincidence.

Yes, I'm told it has heaps of Mojo, but I'm yet to really get to the bottom of that.

Same for me - it's crazy that the BMMCC is so much smaller than the GH5 and yet, rigged up, it's considerably larger.

The C70 is an odd one. I suspect that most people who invest that amount and shoot with something that big would add a cage / monitor / audio something anyway, so it's kind of modular by use rather than by design. Technically you're right, although a C70+lens+mic would be as large as my BMMCC rig!

Setup times are important, absolutely. I saw a great video of some folks who went to Antarctica with a top-of-the-line cinema setup (something like a C700 or Venice with a huge all-in-one cine zoom), and they didn't want to have to rig up while standing on a beach with the penguins, etc. Their solution (not new) was to get a hard equipment case that could be modified to fit the rig in its fully assembled state. They used the case for accessories during the travel legs, but once they were on the ship they reconfigured it to house the assembled rig (IIRC it was minus the matte box) and then used that for going ashore. Then they just put the matte box on the front, put it on the tripod and they were off to the races.

For my purposes I keep my setup (GH5 + Rode VMP + wrist-strap) in one hand the whole time, so I can turn it on and hit record while raising it up to my face, where the other hand does manual focus and I get the shot. I've included a number of shots in my final edits that were time-stretched out to a couple of seconds because the time between my acquiring focus and the moment ending was under 10 frames, and sometimes only a couple of frames.

The Komodo does look pretty cool. Z-Cam is interesting.
I've watched a number of reviews and sample footage from Sidney Baker Green on YT (https://www.youtube.com/user/sidneybakergreen) who has a Z-Cam and is a pretty good colourist. His take on it was that the image is pretty good, but the product isn't that refined yet and has small foibles, and the user experience isn't that great with things like the manuals not being kept up-to-date and support really being via user groups. I've really liked the images I've seen from them. Assuming they continue and get more refined over time they look like a pretty good brand.

-

Seems like lots of new affordable modular cinema cameras have come out, with some cool specs like 4K120 or 4K60 10-bit, or even just FF video without a crop, overheating, or crippled codecs. So, who is going modular? How are you finding the transition? My most recent acquisition was a BMMCC, my first modular setup, and I found that getting used to having a separate monitor, needing multiple batteries, cables and cable management, as well as having to rely on a rig just for ergonomics, were all a bit of a PITA actually. Plus, despite the BMMCC being really small and paired with a tiny monitor, the whole rig gets large pretty quickly. And considering that we can't talk about modular cameras without showing awesome pictures of rigs, here's the BMMCC in my sunset configuration:

-

Lenses can be matched in post if you're willing to put in a bit of effort. Here's an example where I match a Samyang to a Lomo... unedited: and matched: If you put a look over the whole film, that will also help to even things out.
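As a very rough starting point for a lens match like this, one option is to shift and scale each RGB channel of one lens's footage so its mean and spread match the other lens's. This is a simplified form of statistical colour transfer (Reinhard-style transfer works in a perceptual colour space; this sketch assumes plain RGB), it is not necessarily how the Samyang/Lomo match above was done, and the function name is hypothetical. It works best when both frames show the same scene under the same light.

```python
import numpy as np


def match_channel_stats(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Hypothetical helper: give each RGB channel of `source` the same mean and
    standard deviation as the matching channel of `reference`.

    Both inputs are float arrays in [0, 1] with shape (height, width, 3).
    """
    src = source.reshape(-1, 3)
    ref = reference.reshape(-1, 3)
    src_mean, src_std = src.mean(axis=0), src.std(axis=0)
    ref_mean, ref_std = ref.mean(axis=0), ref.std(axis=0)
    # Guard against division by zero on a perfectly flat channel.
    scale = ref_std / np.maximum(src_std, 1e-8)
    matched = (src - src_mean) * scale + ref_mean
    return np.clip(matched, 0.0, 1.0).reshape(source.shape)


# Usage with stand-in frames (real use: one frame from each lens of the same scene).
rng = np.random.default_rng(1)
samyang = rng.uniform(0.30, 0.50, size=(120, 160, 3))
lomo = rng.uniform(0.40, 0.60, size=(120, 160, 3)) * np.array([1.05, 1.0, 0.9])
matched = match_channel_stats(samyang, lomo)
```

Matching means and spreads only gets the overall tint and contrast close; hue-specific differences, such as how each lens renders skin, still need targeted adjustments.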

-

Sounds like you know what you want. I'm no expert on what the alternatives to the Irix are, but I know that when you're going as wide as this there aren't that many options to choose from.

-

That link is banned, and also not useful because it links to the original source, which has since been taken down. Luckily, the internet never forgets:

https://web.archive.org/web/20180407123236if_/http://juanmelara.com.au/blog/re-creating-the-600-brim-linny-lut-in-resolve

https://static1.squarespace.com/static/580ef820e4fcb54017eba692/t/5a560498ec212d5f4a972d25/1515589920801/The_Brim_Linny_Rebuild.zip

https://web.archive.org/web/20190227062207/http://juanmelara.com.au/s/The_Brim_Linny_Rebuild.zip

-

What focal length do you actually need? ie, what precise focal length, rather than just wider than 18mm. I'm a huge fan of super-wide-angle lenses, and my second most used lens is a 15mm FF equivalent, but you want to hit the sweet spot. If you go wider than your sweet spot then you will either crop and throw away pixels, or you will get closer and get more wide-angle-distortion. If you go narrower than your sweet spot then you will have to go further back to get the framing you want, and if that's not possible then you won't get everything in frame that you want to. When I was in the market for a super-wide, I got my GoPro, worked out what FOV it was equivalent to, and then did a bunch of test shooting with it to emulate the kinds of shots I wanted to get. I then experimented with cropping to simulate various longer focal lengths (easy to do - double the focal length is half the width/height of the frame) and worked out where the sweet spot was for me. If you're unsure but want to buy something now, get the Tokina 11-16mm second hand, shoot a bunch with it, work out what focal lengths you want, then sell it and buy what you need.
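The cropping trick above is simple arithmetic: keeping a fraction of the frame width is equivalent to dividing the focal length by that fraction. The sketch below also converts focal lengths to horizontal field of view, assuming a rectilinear lens on a 36mm-wide full-frame sensor. A GoPro's wide modes are heavily barrel-distorted, so treat any "equivalent" focal length for one as approximate. Function names are illustrative only.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0


def cropped_equivalent_focal_length(focal_mm: float, kept_width_fraction: float) -> float:
    """Focal length simulated by cropping to `kept_width_fraction` of the frame width.

    Keeping half the width (0.5) simulates double the focal length.
    """
    return focal_mm / kept_width_fraction


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float = FULL_FRAME_WIDTH_MM) -> float:
    """Horizontal field of view of a rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


# Explore crops from a 15mm full-frame-equivalent test shot.
for kept in (1.0, 0.8, 0.6, 0.5):
    f = cropped_equivalent_focal_length(15, kept)
    print(f"keep {kept:.0%} of width -> {f:.1f}mm, {horizontal_fov_deg(f):.1f} deg")
```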

-

What focal lengths and sensor size are you looking for? and what 'look' do you want, ie, the modern look with lots of contrast and sharpness, or a softer rendering?

-

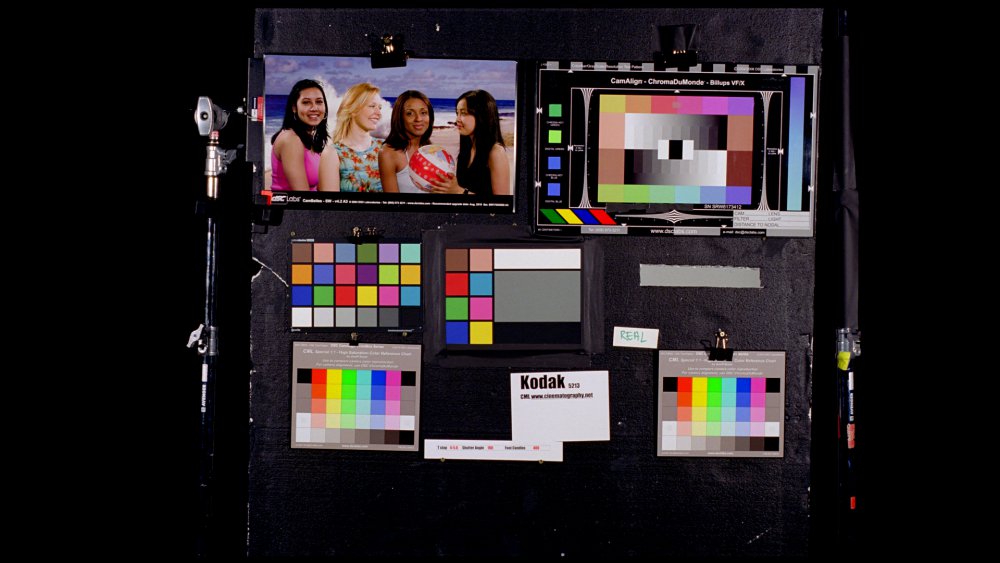

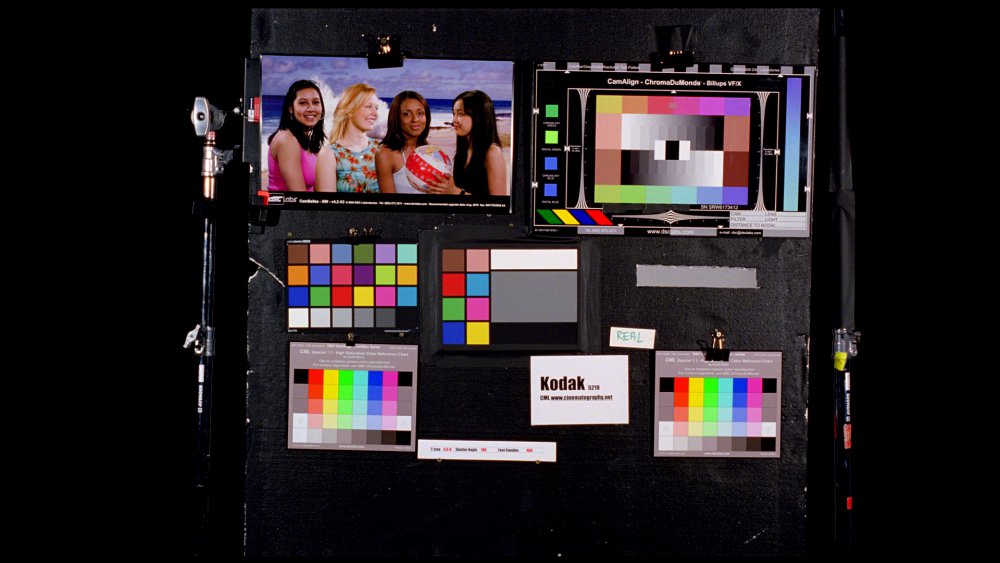

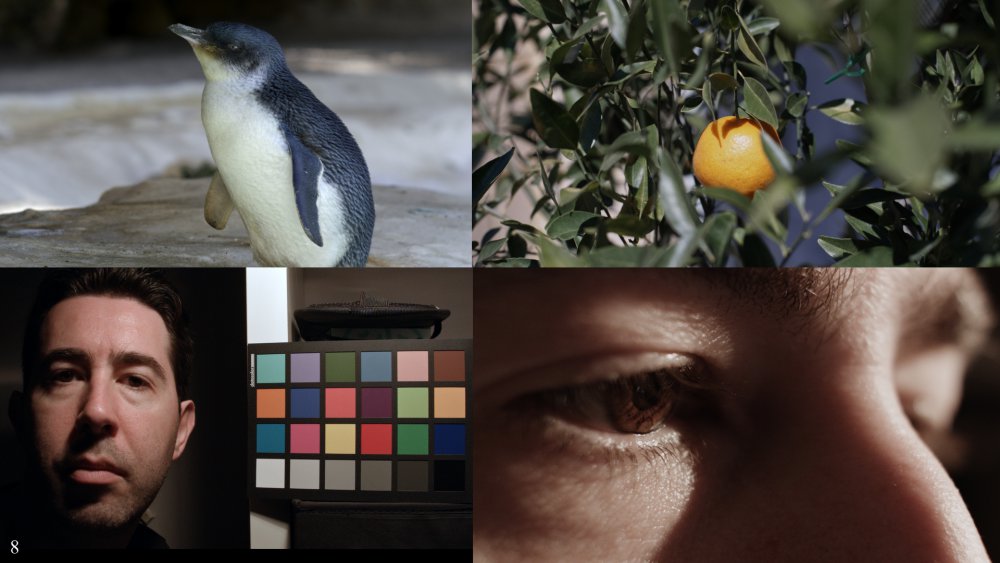

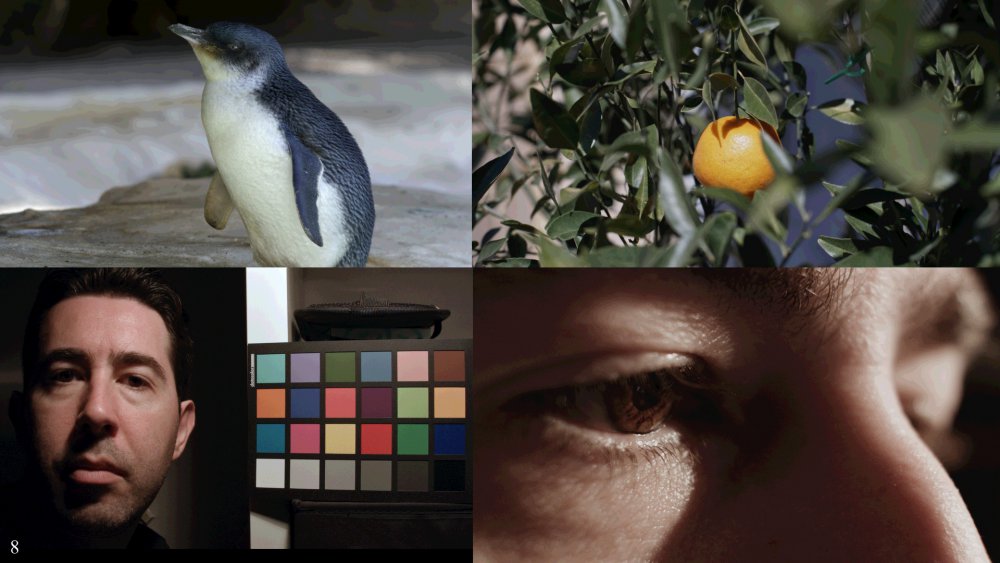

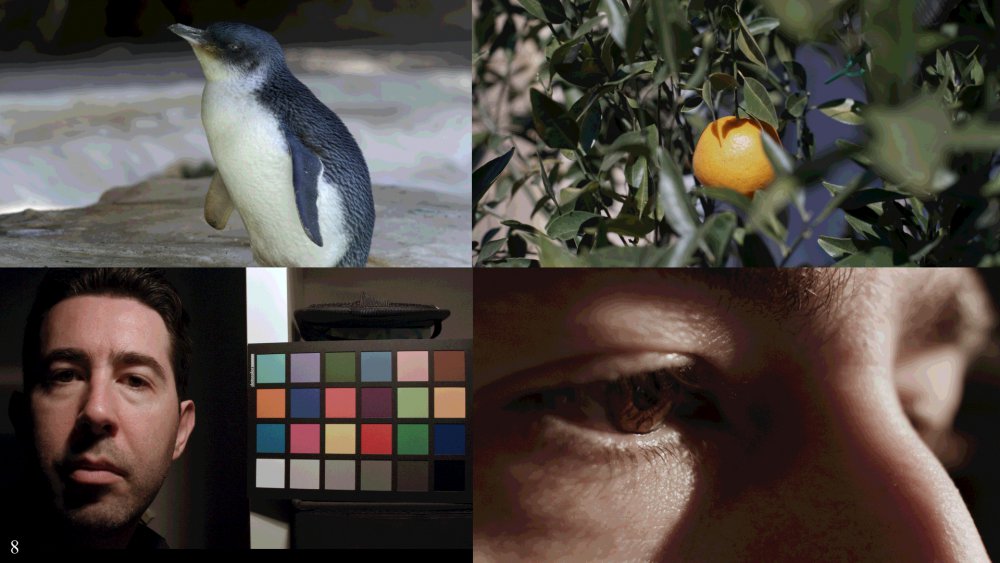

No, no makeup team for me! The people I'm filming have much more variability - more like in this image: That's also a useful image for testing LUTs and seeing what they do to skintones BTW. Nice looking images. The DB certainly had a cult following. Interesting. There were also some great sample shots from the UMP with skintones, I should look through them for good examples. I agree that thickness can happen with low- and high-key images, and with saturated and not-so-saturated images too. A few things keep coming up, and I think I'm starting to fit a few of them together. One is the ability to render subtle variations in tone, and yet we're looking at all these test images in 8-bit, and some in less than 8-bit, and this doesn't seem to be a limiting factor. I wonder if maybe we're thinking about colour subtlety and DR and bit-depth the wrong way. I mean literally, that we think we want more of these things, but that actually maybe we want less. Take this image for example: This image is contrasty and saturated. In fact, it's very contrasty. If you were looking at this scene in real life, these people wouldn't have so much variation in luminance and saturation in their skintones - that baby would have to have been sunbathing for hours, but only on the sides of his face and not on the front. In that sense, film and its high contrast are actually expanding and amplifying subtle luma differences, and when we increase contrast we increase saturation too, so it's amplifying those subtle hue variations as well. One thing I've noticed about film vs digital in skintones is that digital seems to render people's skintones either on the yellow side, on the pink side, or in the middle and not that saturated. Film will show people with all those tones all at once. This guy is another example of a decent variation of hues in his skin:
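The "more contrast means more saturation" point can be checked with a toy model. Below, a per-channel linear contrast around mid-grey is applied to an RGB triplet, and chroma is measured as the spread between the largest and smallest channel. Unless a channel clips, the spread grows by exactly the contrast gain, so a contrasty curve also pushes colour differences apart. The sample skin value and the 1.5x gain are made-up numbers, and real film curves are S-shaped rather than linear.

```python
def apply_contrast(rgb, gain, pivot=0.5):
    """Per-channel linear contrast around `pivot`, clipped to the [0, 1] range."""
    return tuple(min(1.0, max(0.0, pivot + (c - pivot) * gain)) for c in rgb)


def chroma(rgb):
    """Spread between the largest and smallest channel (the HSV/HSL notion of chroma)."""
    return max(rgb) - min(rgb)


skin = (0.70, 0.50, 0.40)            # hypothetical warm skin tone, display-referred
punchy = apply_contrast(skin, 1.5)   # roughly (0.80, 0.50, 0.35)
print(chroma(skin), chroma(punchy))  # the spread grows from about 0.30 to about 0.45
```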

-

I'd suggest using multiple layers of stabilisation. As has been said before, gimbals are great but lose the horizon over time and when cornering, and the super-duper-stabilisation modes on the GoPro and Osmo Action etc will also lose the horizon if kept out of level for some time (which is inevitable considering that roads are sloped to drain water away and to corner nicely). Due to these factors, I'd suggest a very solid mount (to eliminate wind buffeting), combined with a very short shutter speed (to eliminate motion blur and RS wobbles from bumps), combined with the in-camera floaty-smooth modes, combined with stabilisation in post.
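To put rough numbers on the short-shutter part of that suggestion: the blur from a bump is roughly how far the camera rotates during one exposure, converted to pixels. This sketch uses a pixels-per-degree approximation that ignores lens projection, and the 20°/s jolt, 3840px frame width and 100° field of view are assumed example values, not measurements.

```python
def blur_pixels(angular_speed_deg_s: float, shutter_s: float,
                frame_width_px: int, hfov_deg: float) -> float:
    """Approximate blur streak length, in pixels, caused by the camera rotating
    at `angular_speed_deg_s` while the shutter is open for `shutter_s` seconds."""
    px_per_degree = frame_width_px / hfov_deg   # linear approximation across the frame
    return angular_speed_deg_s * shutter_s * px_per_degree


# Assumed example: a 20 deg/s jolt on a 3840px-wide frame with a 100 deg field of view.
for shutter in (1 / 50, 1 / 250, 1 / 1000):
    blur = blur_pixels(20, shutter, 3840, 100)
    print(f"1/{round(1 / shutter)} s -> {blur:.1f} px of blur")
```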

-

My images were all GH5, with the second one using the SLR Magic 8mm f4, and the others using the Voigtlander 17.5mm f0.95. I don't think anyone would suggest the GH5 was designed for low light, and the boat image is actually significantly brighter than it was in real life. The floodlights in the background were the only lighting and we were perhaps 75-100m offshore, so the actual light levels were very low. I was gobsmacked at how good the images turned out considering the situation, but they're definitely not the controlled lighting situation that they're being compared to. The scopes are very interesting, and the idea that the good ones transition smoothly is fascinating and very different to the GH5 images. That is a spectacular image, and looks pretty thick to me! It clearly has some diffusion applied (I'd say heavily) and I wonder how much that plays into the thickness of the image. Diffusion is very common in controlled shooting. Just for experimentation's sake, I wonder if adding Glow helps us thicken things up a bit? Original image posted above: With a heap of Glow applied (to accentuate the effect): Thicker? It makes it look like there was fog on the water 🙂 I can't match the brightness of the comparison image though, as in most well-lit and low-key narrative scenes the skin tones are amongst the brightest objects in frame, whereas that's not how my scene was lit. No examples offhand, just more anecdotal impressions I guess. I must agree that the bright punchy colours in that image don't look the best. The colours in these two don't look the best to me either. I've been watching a lot of TV lately and the skin tones that I'm really liking seem to have a very strong look, but I'm yet to find what that maps out to in concrete terms.
I suspect that the hues are very well controlled between the yellow and pink ends of the spectrum without either going too far, and the saturation also seems to be very well controlled with lots of skin area being quite saturated but the saturation being limited, in that it goes quickly up to a certain point but doesn't go much beyond that. The skintones I'm used to dealing with in my own footage are all over the place in terms of often having areas too far towards yellow and also too pink, and with far too much saturation, but if you pull the saturation back on all the skin then when the most saturated areas come under control the rest of the tones are completely washed out. I am focusing a lot on skin tones, but that's one half of the very common teal/orange look, so the scene will be skin tones, maybe some warmer colours, and then the rest will be mostly cool in temp. I've been taking screen grabs whenever I see a nice shot and plan on pulling a bunch of them into Resolve and studying the scopes to see if I can see what is going on, and if I can learn from that.

-

Indeed. Reminds me of this article, Art Adams again: https://www.provideocoalition.com/film-look-two/ Long story short, film desaturates both the highlights and the shadows because on negative film the shadows are the highlights! (pretty sure that's the right way around..) I definitely think it's in the processing. I view it as there being three factors:

1) things that simply aren't captured by a cheaper camera (eg, clipping)
2) things that are captured and can be used in post without degrading the image below a certain threshold (ie, whatever image standards you or your client have)
3) things that aren't captured well enough to be used in post (eg, noisy shadows beyond redemption, parts of the DR that break if pushed around too much)

Obviously if you expose your skin tones in a range that is either completely lost (eg, clipped) or can't be recovered without exposing too much noise or breaking the image, then there's nothing you can do. What I am interested in is the middle part, where a properly exposed image will put the important things, for example skin tones. Anything in this range should be able to be converted into something that looks great. Let's take skin tones - let's imagine that they're well captured but don't look amazing, and that the adjustment to make them look amazing won't break the image. In that case, the only thing preventing the ok skin tones from looking great is the skill in knowing what transformations to make to get there. Yes, if the skin tones are from a bad codec and there is very little hue variation (ie, plastic skin tones) then that's not something that can be recovered from, but if the hues are all there but just aren't nice, then they should be able to be made to look great. This is where it's about skill, and why movies with professional colourists involved often look great.
Of course, ARRI has built a lot of that stuff into their colour science too, so in a sense everything shot with an ARRI camera has had a first pass from some of the world's best colour scientists, and is already that much further ahead than other offerings. Many others aren't far behind on colour science, but in affordable cameras it's rare to get the best colour science combined with a good enough sensor and codec. That was something I had been thinking too, but thickness is present in brighter-lit images too, isn't it? Maybe if I rephrase it: higher-key images taken on thin digital cameras still don't match higher-key images taken on film. Maybe cheap cameras are better at higher-key images than low-key images, but I'd suggest there's still a difference. Interesting images, and despite the age and lack of resolution and DR, there is definitely some thickness to them. I wonder if maybe there is a contrast and saturation softness to them, not in the sense of them being low contrast or low saturation, but more that there is a softness to transitions of luma and chroma within the image? In other news... I've been messing with some image processing and made some test images. Curious to hear if these appear thick or not. They're all a bit darker, so maybe fall into the exposure range that people are thinking tends to be thicker.
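One way to mimic the "film desaturates both the highlights and the shadows" behaviour from the Art Adams article is to scale saturation down as luma approaches black or white. This is a minimal sketch under assumed values: Rec. 709 luma weights applied to display-referred RGB, plus hypothetical 0.15/0.85 knee points with a linear roll-off. It is not how any particular film emulation works.

```python
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def roll_off_saturation(rgb, low_knee=0.15, high_knee=0.85):
    """Blend a colour towards its own grey as luma nears black or white.

    Midtones between the knees are untouched; at pure black or white the
    colour becomes fully neutral. Expects display-referred RGB in [0, 1].
    """
    luma = sum(w * c for w, c in zip(REC709_WEIGHTS, rgb))
    if luma < low_knee:
        keep = luma / low_knee                      # fade saturation into the shadows
    elif luma > high_knee:
        keep = (1.0 - luma) / (1.0 - high_knee)     # fade saturation into the highlights
    else:
        keep = 1.0
    # Blending towards luma keeps luma itself unchanged (the weights sum to 1).
    return tuple(luma + (c - luma) * keep for c in rgb)


midtone = (0.60, 0.40, 0.30)
highlight = (1.00, 0.90, 0.80)
shadow = (0.10, 0.05, 0.02)
print(roll_off_saturation(midtone))    # essentially unchanged
print(roll_off_saturation(highlight))  # pulled towards neutral
print(roll_off_saturation(shadow))     # pulled towards neutral
```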

-

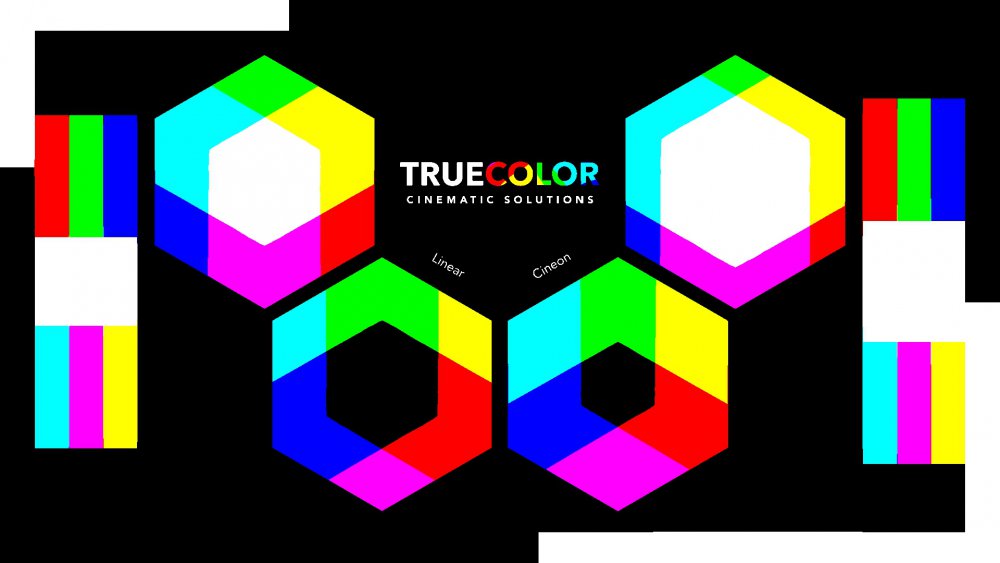

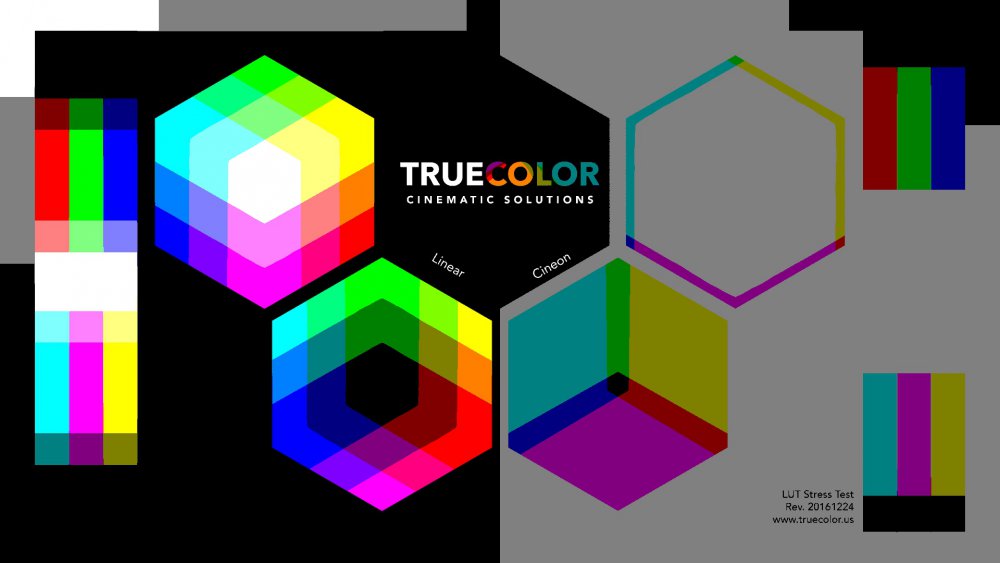

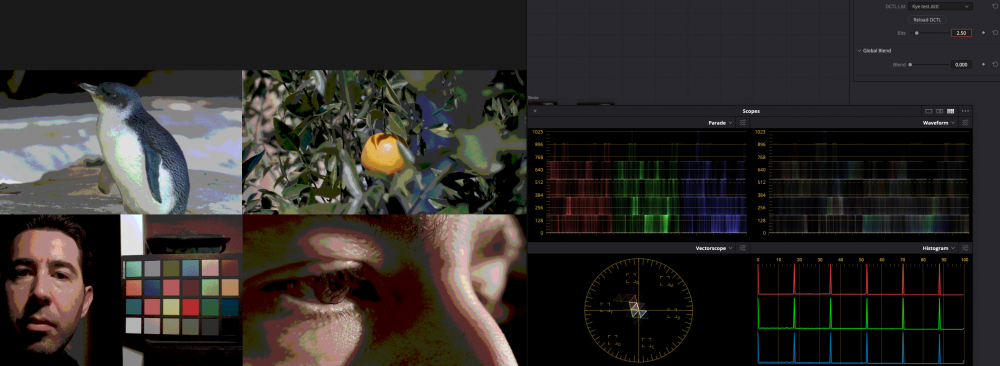

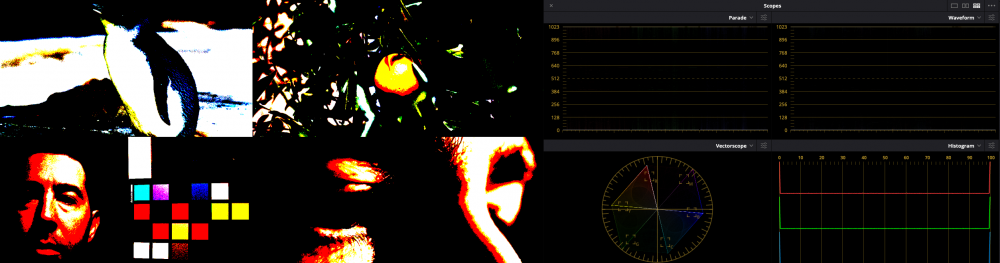

I'm still working through it, but I would imagine there is an infinite variety. Certainly, looking at film emulations, some are quite different to others in what they do to the vectorscope and waveform. What do you mean by this? OK, one last attempt. Here is a LUT stress test image from truecolour. It shows smooth gradations across the full colour space and is useful for seeing if there are any artefacts likely to be caused by a LUT or grade. This is it taken into Resolve and exported out without any effects applied. This is the LUT image with my plugin set to 1-bit. This should create only white, red, green, blue, yellow, magenta, cyan, and black. This is the LUT image with my plugin set to 2-bits. This will create more variation. The thing to look for here is that all the gradual transitions have been replaced by flat areas that transition instantly to another flat area of the next adjacent colour. If you whip one of the above images into your software package I would imagine you'd find that the compression might have created intermediary colours on the edges of the flat areas, but if my processing were creating intermediate colours then they would be visible as separate flat areas, and as you can see, there are none.
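The plugin's own code isn't shown here, so as an assumption, this is one simple way a bit-depth reduction like this could work: quantise every channel to 2**bits evenly spaced levels. At 1 bit each channel can only be 0 or 1, which gives exactly the eight colours listed above; at 2 bits there are 4 levels per channel and up to 64 colours. Fractional settings such as 4.5 bits are handled here by rounding 2**bits to a whole number of levels, which may not match what the actual plugin does. The function names are hypothetical.

```python
import numpy as np


def reduce_bit_depth(image: np.ndarray, bits: float) -> np.ndarray:
    """Hypothetical bit-crusher: quantise a float RGB image in [0, 1] so each
    channel only takes round(2**bits) evenly spaced values."""
    levels = max(2, round(2 ** bits))   # e.g. 1 bit -> 2 levels, 4.5 bits -> 23 levels
    steps = levels - 1
    return np.round(np.clip(image, 0.0, 1.0) * steps) / steps


def distinct_colours(image: np.ndarray) -> int:
    """Count the unique RGB triplets in an image."""
    return len(np.unique(image.reshape(-1, 3), axis=0))


rng = np.random.default_rng(0)
test_image = rng.random((256, 256, 3))   # stand-in for the LUT stress-test image
print(distinct_colours(reduce_bit_depth(test_image, 1)))   # 8 colours (2 levels per channel)
print(distinct_colours(reduce_bit_depth(test_image, 2)))   # up to 64 colours (4 levels per channel)
```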

-

Read the book. The title isn't an accident.

-

and some time after that maybe they'll be able to take my photos of 2-3+ hours of vigorous whiteboard discussions and actually make people work together better! Now that would be the magic of cinema.... Everything has pros and cons. Typically the people who think something is bad are either unaware of what was gained, or don't value those gains as highly as the things that were lost. Most often the claim is that things were better in the good old days, but what that doesn't take into account is that the good old days for the 60-year-old were when they were in their early 20s, which is what the 80-year-old was referring to as "the end of civilised society" at the time. The things we value, and basically everything that our lives and the world are made of, are the accumulation of all the barbarity and demonic possession of civilised society through the entire history of time. See this for some examples: https://www.mentalfloss.com/article/52209/15-historical-complaints-about-young-people-ruining-everything There's a great book on social media use that you might find interesting called It's Complicated: The Social Lives of Networked Teens by Danah Boyd, which was an incredible read and actually suggested that teens aren't addicted to their phones, but to each other, and that when teens are looking at their phones it's much more likely to be in aid of communicating with each other than when an adult is looking at their phone. A Google search found a PDF of the whole thing on the author's website, so if you are looking then apparently it's easy to access.

-

Ok, now I understand what you were saying. When you said "a digital monitor 'adds' adjacent pixels" I thought you were talking about pixels in the image somehow being blended together, rather than just that monitors are arrays of R, G, and B lights. One of the upshots of subtractive colour vs additive colour is that with subtractive colour, saturation peaks at a lower luminance level than it does in an additive model. To compensate for that, colourists and colour scientists and LUT creators often darken saturated colours. This is one of the things I said that I find almost everywhere I look. There are other things too. I'm sure that if you look back you'll find I said that it might contribute to it, and not that it is the only factor. Cool. Let's test these. The whole point of this thread is to go from "I think" to "I know". This can be arranged. Scopes make this kind of error all the time. Curves and right angles never mix, because when you're generating a line of best fit with a non-zero curve inertia or non-infinite frequency response you will get ringing in your curve. What this means is that if your input data is 0, 0, 0, X, 0, 0, 0 the curve will have non-zero data on either side of the spike. This article talks about it in the context of image processing, but it applies any time you have a step-change in values. https://en.wikipedia.org/wiki/Ringing_artifacts There is nothing in my code that would allow for the creation of intermediary values, and I'm seeing the right behaviour visually at lower bit-depths when I look at the image (as shown previously with the 1-bit image), so at this point I'm happy to conclude that there are no values in between and that it's a scoping limitation, or that they're being created by the jpg compression process.
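Here is a small illustration of that ringing effect. It draws a Catmull-Rom spline through the samples 0, 0, 0, 1, 0, 0, 0. Catmull-Rom is just one common smooth interpolator, chosen as an assumption; which curve a given scope actually draws isn't known here. The curve passes through every sample exactly, yet it dips below zero on both sides of the spike even though no input value is negative.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Catmull-Rom spline value between p1 (t=0) and p2 (t=1)."""
    return 0.5 * (2 * p1
                  + (-p0 + p2) * t
                  + (2 * p0 - 5 * p1 + 4 * p2 - p3) * t ** 2
                  + (-p0 + 3 * p1 - 3 * p2 + p3) * t ** 3)


def smooth_curve(samples, points_per_segment=4):
    """Interpolate a smooth curve through `samples`, repeating the end samples as padding."""
    padded = [samples[0], *samples, samples[-1]]
    curve = []
    for i in range(1, len(padded) - 2):
        for k in range(points_per_segment):
            curve.append(catmull_rom(*padded[i - 1:i + 3], k / points_per_segment))
    curve.append(samples[-1])
    return curve


spike = [0, 0, 0, 1, 0, 0, 0]
curve = smooth_curve(spike)
print(min(curve))   # -0.0703125: the curve undershoots zero next to the spike
```

Any tool that joins quantised values with a smooth line can show in-between and overshooting values like these, even when the data itself only contains the quantised levels.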

-

@Andrew Reid considering how good these things are getting, is it now possible to start ranking phones against cheaper cameras? All the discussion is phones vs phones or cameras vs cameras, but I'm curious when we can start comparing them directly. Or maybe it's obvious and I'm out of the loop because I'm still using my iPhone 8.. It will be a great day when the image quality of a consumer device mimics Zeiss Master Primes. Cameras are tools, and currently the best tools are inaccessible to the vast majority of people who would like to create with them. Democratisation of image quality is good for art, good for creators, and good for society in general. The only people it's not good for are technical elitists, or people who are obsessed with equipment and don't care about art. You do realise that if my camera gets better then yours doesn't get worse, right? And smartphones don't crawl from your nightstand and inject you with heroin while you're sleeping... improving the camera in a phone will help you to get access to traditional camera equipment, not hinder it, and owning a phone doesn't automatically mean that you are forced at gunpoint to browse social media. These things are tools. Agreed. It's a tough job to convince many people that a photographer is more than a camera-transportation-device, but the more that smartphones get better and people take photos and realise they're not that great even though the picture quality seems fine, the more they'll realise that there is a skill component to things. The best way to educate the public is to give them the tools and then watch as they discover it's not so easy. This transition from photographers being technicians who restrict access to the equipment to photographers being artists has been occurring for a very long time.
It used to be that accessing and operating an 8x10 camera was out of reach for most people, then it was lenses and lights, then with digital it was megapixels and lenses, now it's just lenses, and once fake bokeh is convincing there will be no technical or ownership barriers at all, which is why the good photographers are the ones who specialise in lighting, composition, posing, set design, concepts, etc. It's pretty easy to make an Alexa look like a home video. The equipment won't save the amateurs from needing to be artists.

-

+1 for a normal picture profile, like @TomTheDP says. This will mean controlling your lighting and DR in the scene and white-balancing meticulously. It would also help the colourist immensely if you shot a colour chart, preferably in every setup if you can. 8-bit is fine if it's starting in a 709-like space, and the A73 isn't that bad when you consider that feature films have mixed GoPro footage in with cinema cameras!

-

Perhaps these might provide some background to subtractive vs additive colour science. https://www.dvinfo.net/article/production/camgear/what-alexa-and-watercolors-have-in-common.html https://www.dvinfo.net/article/post/making-the-sony-f55-look-filmic-with-resolve-9.html Well, I would have, but I was at work. I will post them now, and maybe we can all relax a little. No bit-crunch: 4.5 bits: 4.0 bits: In terms of your analysis vs mine, my screenshots are all taken prior to the image being compressed to 8-bit jpg, whereas yours was taken after it was compressed to 8-bit jpg. Note how much the banding is reduced on the jpg above vs how it looks uncompressed (both at 4.0 bits): Here's the 5-bit with the noise to show what it looks like before compression: and without the noise applied: Agreed about 'more than the sum of their parts' as it's more like a multiplication - even a 10% loss over many aspects multiplies quickly over many factors. Not a fan of ARRIRAW? I've never really compared them, so wouldn't know. Indeed, and that's kind of the point. I'm trying to work out what it is.
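To put numbers on the "more like a multiplication" point: if each of $n$ aspects of an image retains 90% of what a better camera delivers, and the losses compound multiplicatively (an assumption), the overall result is $0.9^n$:

$$0.9^{3} \approx 0.73, \qquad 0.9^{5} \approx 0.59, \qquad 0.9^{10} \approx 0.35$$

So five aspects that are each only 10% worse add up to a shortfall of about 41% rather than 10%.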

-

It does hark back to Deezid's point. Lots more aspects to investigate here yet. Interesting about the saturation of shadows - my impression was that film desaturated both shadows and highlights compared to digital, but maybe when people desaturate digital shadows and highlights it's always done overzealously? We absolutely want our images to be better than reality - the image of the guy in the car doesn't look like reality at all! One of the things that I see that makes an image 'cinematic' vs realistic is resolution, and specifically the lack of it. If you're shooting with a compressed codec then I think some kind of image softening in post is a good strategy. I'm yet to systematically experiment with softening the image with blurs, but it's on my list. I'll let others comment on this in order to prevent groupthink, but with what I've recently learned about film, which one is which is pretty obvious. When you say 'compression', what are you referring to specifically? Bit-rate? bit-depth? codec? chroma sub-sampling? Have you noticed exceptions to your 10-bit 422 14-stops rule where something 'lesser' had unexpected thickness, or where things above that threshold didn't? If so, do you have any ideas on what might have tipped the balance in those instances? Additive vs subtractive colours and mimicking subtractive colours with additive tools may well be relevant here, and I see some of the hallmarks of that mimicry almost everywhere I look. I did a colour test of the GH5 and BMMCC and I took shots of my face and a colour checker with both cameras, including every colour profile on the GH5. I then took the rec709 image from the GH5 and graded it to match the BMMCC as well as every other colour profile from the GH5. In EVERY instance I saw adjustments being made that (at least partially) mimicked subtractive colour.
I highly encourage everyone to take their camera, point it at a colourful scene lit with natural light and take a RAW still image and then a short video clip in their favourite colour profile, and then try to match the RAW still to the colour profile. We talk about "just doing a conversion to rec709" or "applying the LUT" like it's nothing - it's actually applying a dozen or more finely crafted adjustments created by professional colour scientists. I have learned an incredible amount by reverse-engineering these things. It makes sense that the scopes draw lines instead of points, that's also why the vector scope looks like triangles and not points. One less mystery 🙂 I'm happy to re-post the images without the noise added, but you should know that I added the noise before the bit-depth reduction plugin, not after, so the 'dirtying' of the image happened during compression, not by adding the noise. I saw that. His comments about preferring what we're used to were interesting too. Blind testing is a tool that has its uses, and we don't use it nearly enough.

-

I've compared 14-bit vs 12-bit vs 10-bit RAW using ML, and based on the results of my tests I don't feel compelled to even watch a YT video comparing them, let alone do one for myself, even if I had the cameras just sitting there waiting for it. Have you played with various bit-depths? 12-bit and 14-bit are so similar that it takes some pretty hard pixel-peeping to be able to tell a difference. There is one, of course, but it's so far into diminishing returns that the ROI line is practically horizontal, unless you were doing some spectacularly vicious processing in post. I have nothing against people using those modes, but it's a very slight difference.

-

It might be, that's interesting. I'm still working on the logic of subtractive vs additive colour and I'm not quite far enough along to replicate it in post. Agreed. In my bit-depth reductions I added grain to introduce noise to get the effects of dithering: "Dither is an intentionally applied form of noise used to randomize quantization error, preventing large-scale patterns such as color banding in images. Dither is routinely used in processing of both digital audio and video data, and is often one of the last stages of mastering audio to a CD." Thickness of an image might have something to do with film grain, but that's not what I was testing (or trying to test anyway). Agreed. That's why I haven't been talking about resolution or sharpness, although maybe I should be talking about reducing resolution and sharpness, as maybe that will help with thickness? Obviously it's possible that I made a mistake, but I don't think so. Here's the code: Pretty straightforward. Also, if I set it to 2.5 bits, then this is what I get: which looks pretty much like what you'd expect. I suspect the vertical lines in the parade are just an algorithmic artefact of quantised data. If I set it to 1 bit then the image looks like it's not providing any values between the standard ones you'd expect (black, red, green, blue, yellow, cyan, magenta, white). Happy to hear if you spot a bug. Also, maybe the image gets given new values when it's compressed? Actually, that sounds like it's quite possible.. hmm. I wasn't suggesting that a 4.5-bit image pipeline would give that exact result, more that we could destroy bit-depth pretty severely and the image didn't fall apart, thus it's unlikely that thickness comes from the bit-depth. Indeed there is, and I'd expect there to be! I mean, I bought a GH5 based partly on the internal 10-bit! I'm not regretting my decision, but I'm thinking that it's less important than I used to think it was, especially without using a log profile like I also used to do.
Essentially the test was to go way too far (4.5 bits is ridiculous) and see if that had a disastrous effect, which it didn't seem to. If we start with the assumption that cheap cameras create images that are thin because of their 8-bit codecs, then by that logic a 5-bit image should be razor thin and completely objectionable, but it wasn't, so it's unlikely that the 8-bit property is the one robbing cheap cameras' images of their thickness.
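The dithering idea quoted above can be demonstrated numerically. This sketch quantises a smooth ramp to 3 bits, once as-is and once with uniform noise one quantisation step wide added first. That is rectangular dither, an assumption here; the tests above used grain in Resolve instead. Averaging many independently dithered copies stands in for the eye averaging noise over space and time, and recovers the ramp far more closely than the banded version does.

```python
import numpy as np


def quantise(x: np.ndarray, bits: int) -> np.ndarray:
    """Round values in [0, 1] to 2**bits evenly spaced levels."""
    steps = 2 ** bits - 1
    return np.round(np.clip(x, 0.0, 1.0) * steps) / steps


rng = np.random.default_rng(0)
ramp = np.linspace(0.0, 1.0, 1000)   # a smooth gradient, like a sky
bits = 3
step = 1 / (2 ** bits - 1)

banded = quantise(ramp, bits)        # hard steps: visible banding

# Rectangular dither: add noise spanning one quantisation step before quantising,
# then average many dithered copies to mimic how the eye integrates noise.
dithered = np.mean(
    [quantise(ramp + rng.uniform(-step / 2, step / 2, ramp.shape), bits) for _ in range(200)],
    axis=0,
)

print(np.abs(banded - ramp).mean(), np.abs(dithered - ramp).mean())
```

Each individual dithered copy still contains only the 8 quantised levels; the gradient only reappears once the noise is averaged out, which is the whole point of dither.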

-

The question we're trying to work out here is what aspects of an image make up this subjective thing referred to by some as 'thickness'. We know that high-end cinema cameras typically have really thick-looking images, and that cheap cameras typically do not (although there are exceptions). Therefore this quality of thickness is related to something that differs between these two scenarios. Images from cheap cameras typically have a range of attributes in common, such as 8-bit, 420, heavy compression, cheaper lenses, less attention paid to lighting, and a range of other things. However, despite all these limitations, the images from these cameras are very good in some senses. A 4K file from a smartphone has a heap of resolution, reasonable colour science, etc, so it's not like we're comparing cinema cameras with a potato. This means that image thickness must be fragile. Otherwise consumer cameras would capture it just fine. If something is fragile, and is only just on the edges of being captured, then if we take a thick image and degrade it in the right ways, the thickness should evaporate with the slightest degradation. Given that I can take an image and output it at 8-bits and at 5-bits without there being a night-and-day difference, I must assume one of three things:

1. the image wasn't thick to begin with
2. it is thick at both 8-bits and 5-bits, and therefore bit-depth doesn't matter that much
3. it is thick at 8-bit but not at 5-bits, and people just didn't notice, in a thread specifically about this

I very much doubt that it's #3, because I've had PMs from folks who I trust saying it didn't look much different. Maybe it's #1, but I also doubt that, because we're routinely judging the thickness of images via stills from YT or Vimeo, which are likely to be 8-bit, 420, and highly compressed. The images of the guy in the car that look great are 8-bit.
I don't know where they came from, but if they're screen grabs from a streaming service then they'll be pretty poor quality too. Yet they still look great. I'm starting to think that maybe image thickness is related to the distribution of tones within an HSL cube, and some areas being nicer than others, or there being synergies between various areas and not others.

-

If I'm testing resolution of a camera mode then I typically shoot something almost stationary, open the aperture right up, focus, then stop down at least 3 stops, normally 4, to get to the sweet spot of whatever lens I'm using, and to also make sure that if I move slightly that the focal plane is deep enough. Doing that with dogs might be a challenge!
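For reference, each stop multiplies the f-number by $\sqrt{2}$, so stopping down $n$ stops from a starting aperture $N_0$ gives the values below (the example starting aperture of f/1.4 is an assumption; marked f-numbers are rounded):

$$N_n = N_0 \left(\sqrt{2}\right)^{n}, \qquad 1.4 \times \left(\sqrt{2}\right)^{3} \approx 3.96 \approx f/4, \qquad 1.4 \times \left(\sqrt{2}\right)^{4} = 1.4 \times 4 = 5.6$$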

-

Actually, the fact that an image can be reduced to 5 bits and not be visibly ruined means that the bits aren't as important as we all seem to think. A bit-depth of 5 bits is equivalent to taking an 8-bit image and only using 1/8th of the DR, then expanding that out. Or, shooting a 10-bit image and only exposing using 1/32 of that DR and expanding that out. Obviously that's not something I'd recommend, and I did apply a lot of noise before doing the bit-depth reduction, but the idea that image thickness is related to bit-depth seems to be disproven. I'm now re-thinking what to test next, but this was an obvious thing to test and it turned out to be wrong.
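The arithmetic behind those two comparisons, counting code values per channel:

$$\frac{2^{8}}{2^{5}} = \frac{256}{32} = 8, \qquad \frac{2^{10}}{2^{5}} = \frac{1024}{32} = 32$$

With a linear encoding those slices would correspond to $\log_2 8 = 3$ and $\log_2 32 = 5$ stops; with the gamma or log curves cameras actually record, the mapping from code values to stops is different.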

-

What's today's digital version of the Éclair NRP 16mm Film Camera?

kye replied to John Matthews's topic in Cameras

Which do you agree with - that film has poor DR or that Canon DSLRs have? I suspect you're talking about film, and this is something I learned about quite recently. In Colour and Mastering for Digital Cinema by Glenn Kennel he shows density graphs for both negative and print films. The negative film graphs show the 2% black, 18% grey and 90% white points all along the linear segment of the graph, with huge amounts of leeway above the 90% white. He says "The latitude of a typical motion picture negative film is 3.0 log exposure, or a scene contrast of 1000 to 1. This corresponds to approximately 10 camera stops". The highlights extend into a very graceful highlight compression curve. The print-through curve is a different story, with the 2% black, 18% grey and 90% white points stretching across almost the entire DR of the film. In contrast to the negative film where the range from 2-90% takes up perhaps half of the mostly-linear section of the graph, in the print-through curve the 2% sits very close to clipping, the region between 18% and 90% encompasses the whole shoulder, and the 90% is very close to the other flat point on the curve. My understanding is that the huge range of leeway in the negative is what people refer to as "latitude" and this is where the reputation film has of having a large DR comes from, because that is true. However, if you're talking about mimicking film then it was a very short period in history where you might shoot on film but process digitally, so you should also take into account the print film positive that would have been used to turn the negative into something you could actually watch. Glenn goes on to discuss techniques for expanding the DR of the print-through by over-exposing the negative and then printing it differently, which does extend the range in the shadows below the 2% quite significantly. I tried to find some curves online to replicate what is in the book but couldn't find any. 
I'd really recommend the book if you're curious to learn more. I learned more in reading the first few chapters than I have in reading free articles on and off for years now.
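The figures in the Kennel quote are consistent with each other: log exposure is base 10, while one stop is a factor of 2, i.e. $\log_{10} 2 \approx 0.301$ in log exposure.

$$10^{3.0} = 1000, \qquad \log_2 1000 = \frac{3.0}{\log_{10} 2} \approx \frac{3.0}{0.301} \approx 9.97 \text{ stops}$$

That rounds to the "approximately 10 camera stops" given in the book.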