Posts: 7,835

Everything posted by kye

-

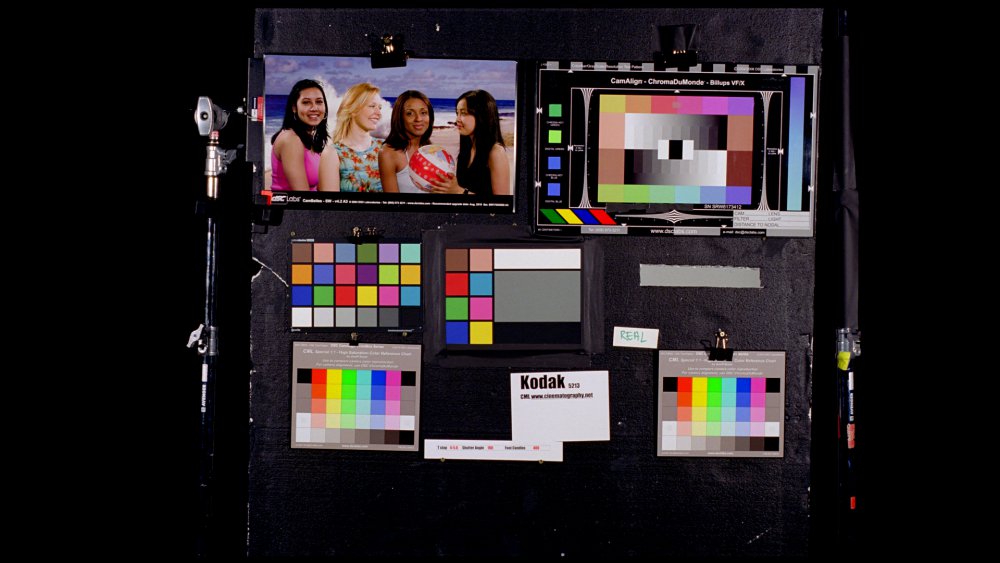

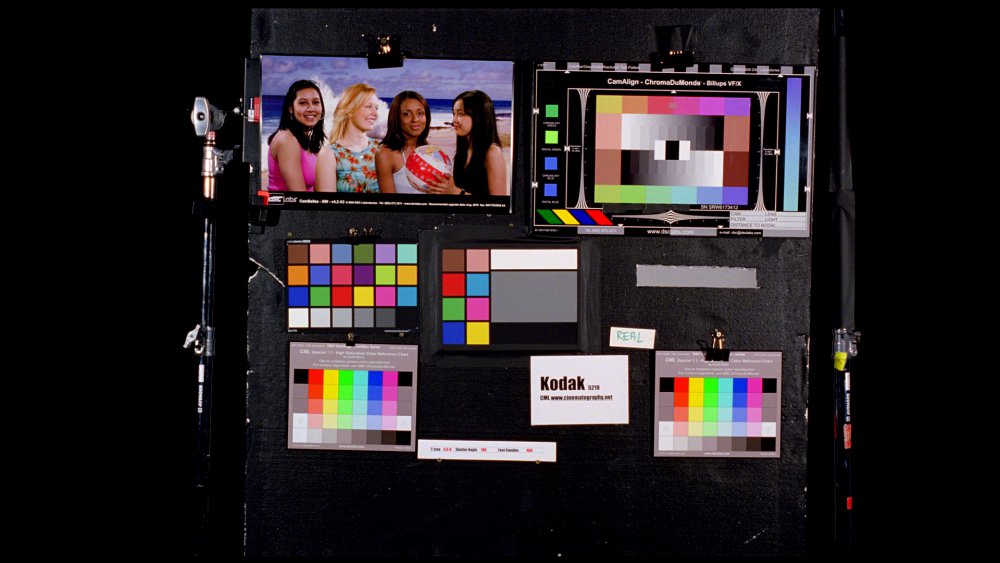

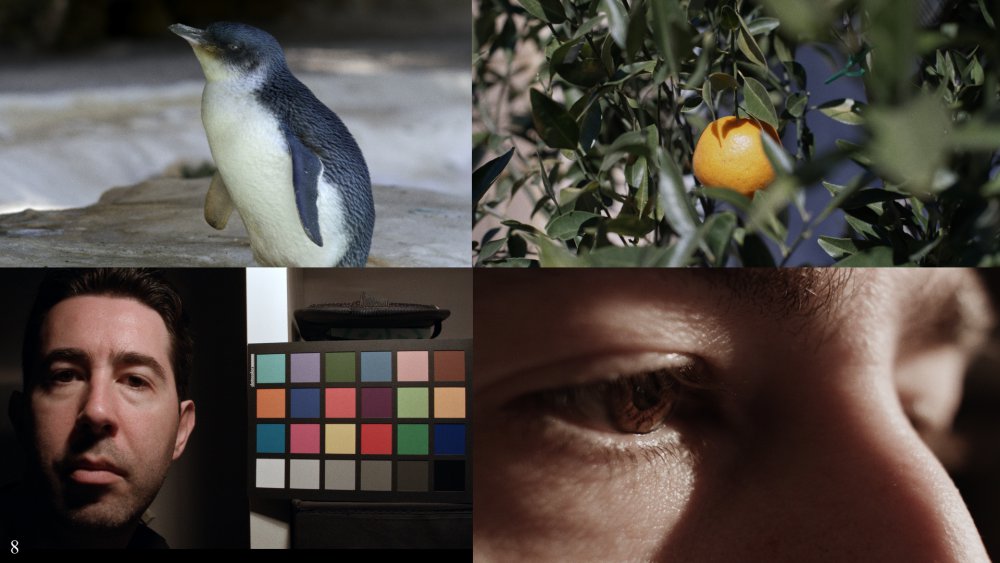

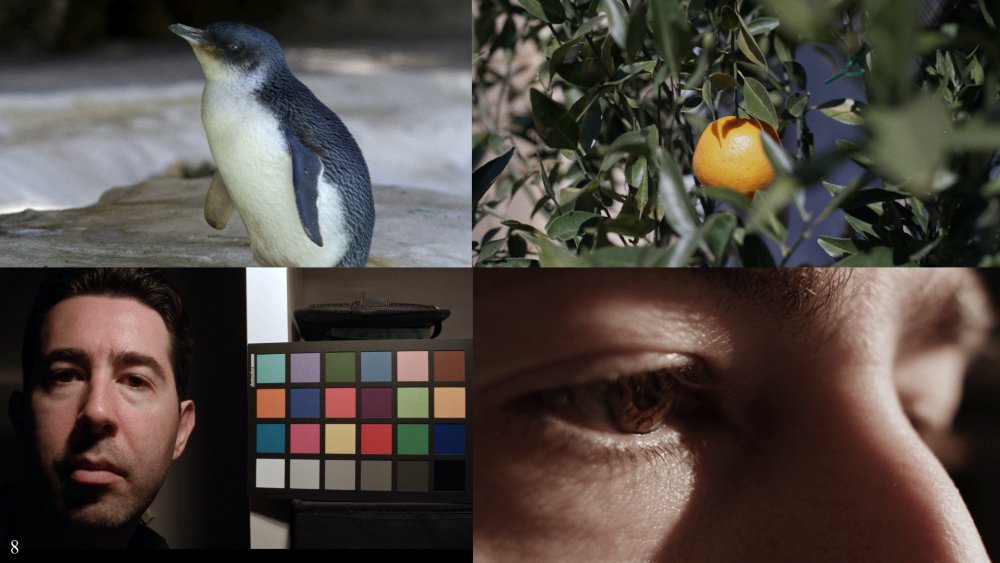

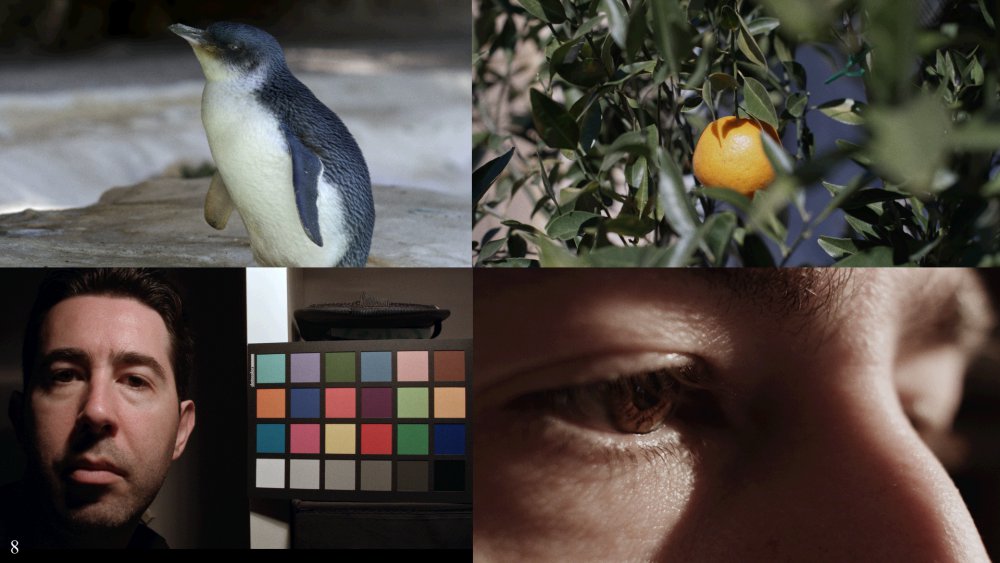

That's what I thought - that there wasn't much visible difference between them, and so no-one commented. What's interesting is that I crunched some of those images absolutely brutally. The source images were (clockwise starting top left) 5K 10-bit 420 HLG, 4K 10-bit 422 Cine-D, and 2x RAW 1080p images, which were then graded, put onto a 1080p timeline and then degraded as follows:

1. First image had film grain applied, then JPG export
2. Second had film grain, then RGB values rounded to 5.5-bit depth
3. Third had film grain, then RGB values rounded to 5.0-bit depth
4. Fourth had film grain, then RGB values rounded to 4.5-bit depth

The giveaway is the shadow near the eye on the bottom-right image, which at 4.5 bits is visibly banding. What this means is that we're judging image thickness via an image pipeline that isn't visibly degraded much by having the output at 5 bits per channel. The banding is much more obvious without the film grain I applied, and for the sake of the experiment I tried to push it as far as I could. Think about that for a second - almost no visible difference from making an image 5 bits per channel, at a data rate of around 200Mbps (each frame is over 1Mb).
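The grain-then-round step can be sketched in Python. This is a minimal illustration, not the DCTL used for these images: it assumes normalised 0.0-1.0 channel values and `2**bits - 1` equal steps (so a fractional depth like 4.5 bits just means fewer, slightly uneven steps), it uses plain Gaussian noise as a hypothetical stand-in for film grain, and its banding measure (the longest run of identical neighbouring values along a smooth shadow ramp) is likewise an assumption for illustration.

```python
import random


def quantize_to_bits(value, bits):
    """Round a normalised channel value (0.0-1.0) to a possibly fractional bit depth.

    Assumes 2**bits - 1 equal steps; with fractional bits the top step is
    shorter, so the result is clamped back into 0.0-1.0.
    """
    steps = 2 ** bits - 1
    return min(1.0, max(0.0, round(value * steps) / steps))


def add_grain(value, strength, rng):
    """Hypothetical grain stand-in: additive Gaussian noise with std dev `strength`."""
    return value + rng.gauss(0.0, strength)


def longest_flat_run(values):
    """Longest run of identical neighbouring values: a crude banding measure."""
    longest = current = 1
    for prev, cur in zip(values, values[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


# A smooth shadow gradient (like the side of a face), sampled 1000 times.
ramp = [0.05 + 0.2 * i / 999 for i in range(1000)]
rng = random.Random(1)

for bits in (5.5, 5.0, 4.5):
    plain = [quantize_to_bits(v, bits) for v in ramp]
    grainy = [quantize_to_bits(add_grain(v, 0.02, rng), bits) for v in ramp]
    print(f"{bits} bits: longest band {longest_flat_run(plain)} samples without grain, "
          f"{longest_flat_run(grainy)} with grain")
```

Even in this toy version the noise breaks long flat bands into short runs, which is one way to see why the banding is much more obvious without grain.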

-

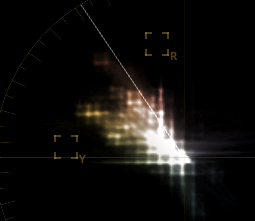

I'm not sure if it's the film stock or the 80s fashion that really dominates that image, but wow... the United Colors of Benetton of the 80s! Hopefully Ray-Bans - I think they're one of the only 80s-approved sunglasses still available.

80s music always sounds best on stereos also made in the 80s. I think there's something we can all take away from that 🙂 Great images.

Fascinating comments about the D16 having more saturated colours on the Bayer filter. The spectral response of film vs digital is something that Glenn Kennel talks about a lot in Color and Mastering for Digital Cinema. If the filters are more saturated then I would imagine that they're either further apart in frequency or narrower, which would mean less cross-talk between the RGB channels. This paper has some interesting comparisons of RGB responses, including 5218 and 5246 film stocks, BetterLight and Megavision digital backs, and Nikon D70 and Canon 20D cameras: http://www.color.org/documents/CaptureColorAnalysisGamuts_ppt.pdf

The digital sensors all have hugely more cross-talk between RGB channels than either of the film stocks, which is interesting. I'll have to experiment with doing some A/B test images. In the RGB mixer it's easy to apply a negative amount of the other channels to the output of each channel, which should in theory simulate a narrower filter. I'll have to read more about this, but it might be time to start posting images here and seeing what people think.

I wonder how much having a limited bit-depth is playing into what you're saying. For example, say we get three cameras: the first with high DR and low colour separation, the second with high DR and higher colour separation, and the third with low DR and high colour saturation. We take each camera, film something, then take the 10-bit files from each and normalise them.
The first camera will require us to apply a lot of contrast (stretching the bits further apart) and also lots of saturation (stretching them further still), the second requires contrast but less saturation, and the third requires no adjustments. This would mean the first would have the least effective bit-depth once in 709 space, the second would have more, and the third the most.

This is something I can easily test, as I wrote a DCTL to simulate bit-depth issues, but it's also something that occurs in real footage, like when I did everything wrong and recorded this low-contrast scene (due to cloud cover) in C-Log in 8-bit. Once you expand the DR and add saturation, you get something like this: It's easy to see how the bits get stretched apart - this is the vectorscope of the 709 image: A pretty good example of not having much variation in single hues due to low contrast and low bit-depth. I just wish it was coming from a camera test and not from one of my real projects!
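The RGB mixer idea from this post (subtracting a little of the other channels from each output channel to mimic a narrower filter) amounts to a 3x3 matrix. A minimal Python sketch, assuming linear RGB values and a single hypothetical strength `k` shared by all channels; it is a matrix approximation, not a real change to a sensor's spectral response:

```python
def narrow_filter_matrix(k):
    """3x3 mixer: each output channel keeps (1 + 2k) of its own input and
    subtracts k of each other channel. Rows sum to 1, so greys stay grey."""
    return [[1 + 2 * k if row == col else -k for col in range(3)] for row in range(3)]


def apply_matrix(matrix, rgb):
    """Multiply a 3x3 matrix by an (r, g, b) tuple."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in matrix)


mixer = narrow_filter_matrix(0.2)
print(apply_matrix(mixer, (0.5, 0.5, 0.5)))  # neutral grey stays neutral
print(apply_matrix(mixer, (0.6, 0.4, 0.4)))  # red moves further from green/blue
```

With `k = 0.2` the red/green gap in the second colour grows from 0.2 to 0.32. Like any saturation boost, a larger `k` also pushes existing code values (and noise) further apart, which is the same bit-depth stretching described in this post.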

-

Another comparison video... spoiler: he plays a little trick and mixes in EOS R 1080p and 720p footage with the 8K, 4K and 1080p from the R5. As we've established, there is a difference, but it's not that big once everything is downscaled to 1080p and run through YT compression.

-

What's today's digital version of the Éclair NRP 16mm Film Camera?

kye replied to John Matthews's topic in Cameras

A Canon DSLR with ML (Magic Lantern) is probably an excellent comparison image-wise. Canon / ML setups are similar to film in that they both likely have poor DR, aesthetically pleasing but high levels of ISO noise, nice colours, low resolution, and no compression artefacts. I shot quite a bit of ML RAW with my Canon 700D and the image was nice and very organic.

-

Also, some situations don't lend themselves to supports because of cramped conditions or the need to be mobile. Boats for example:

-

Depends where you go... my shooting locations (like Kinkaku-ji) are often decorated with signs like this: which restrict getting shots like this: while shooting in situations like this:

I lost a gorillapod at the Vatican because they don't allow tripods (despite it being maybe 8" tall!). Although they offered to put it in a locker for me, our tour group was going in one entrance and out the other, where the tour bus was meeting us, so there wouldn't have been time to go back for it afterwards, and the bus had already left when we got to security, so that was game over.

Perhaps the biggest challenge is that you can never tell what is or isn't going to be accepted in any given venue. I visited a temple in Bangkok and security flagged us for further inspection (either our bags or for not having modest enough clothing), which confused us as we thought we were fine, and then the people doing the further inspection waved us through without even looking at us. Their restrictions on what camera equipment could be used? 8mm film cameras were fine, but 16mm film cameras required permission. Seriously. This was in 2018. I wish I'd taken a photo of it.

With rules as vague / useless as that, applied without any consistency whatsoever, my preferred strategy is to not go anywhere near wherever the line might be, and that's why I shoot handheld with a GH5 / Rode VideoMic Pro and a wrist-strap and that's it. Even if something is allowed, if one over-zealous worker decides you're a professional or somehow taking advantage of their rules / venue / cultural heritage / religious sacred site, then someone higher up isn't going to overturn that judgement in a hurry, or without a huge fuss, so I just steer clear of the whole situation. It really depends where you go and how you shoot.
I've lost count of how many times a vlogger has been kicked out of a public park while filming right next to parents taking photos of their kids with their phones, because security thought their camera was "too professional looking", or street photographers have been hassled by private security when taking photos in public, which is perfectly legal. Even one of the YouTubers I watch, who makes music videos, was on a shoot in a warehouse in LA that almost got shut down because you need permits to shoot anything there, even on private property behind closed doors!

-

Sounds like you aren't one of the people who need IBIS, and as much as I love it for what I do, it's always better to add mass or add a rig (shoulder rig, monopod, tripod, etc) than to use OIS or IBIS. I use a monopod when I shoot sports, but I also need IBIS as I'm shooting at 300mm+ equivalent, so it's the difference between usable and not. I'd also like to use a monopod or even a small rig for my travel stuff, but venues have all kinds of crazy rules about such things and I've had equipment confiscated before (long story) due to various restrictions, so handheld is a must.

-

This is interesting. If colour depth is the number of colours above a noise threshold (as outlined here: https://www.dxomark.com/glossary/color-depth/ ) then that raises a few interesting points:

1. humans can hear/see slightly below the noise floor, and noise of the right kind can be used to increase resolution beyond bit-depth (ie, dither)
2. that would explain why different cameras have slightly different colour depth, rather than differing in power-of-two increments
3. it should be affected by downsampling - both because downsampling reduces noise and because it averages four values, artificially creating the values in-between
4. it's the first explanation I've seen that goes anywhere towards explaining why colours go to crap when ISO noise creeps in, even if you blur the noise afterwards

I was thinking of inter-pixel treatments as possible ways to cheat a little extra, which might work in some situations.

Interestingly, I wrote a custom DCTL plugin that simulated different bit-depths, and when I applied it to some RAW test footage it only started degrading the footage noticeably when I got it down to under 6 bits, where it started adding banding to the shadow region on the side of the subject's nose, where there are flat areas of very smooth gradations. It looked remarkably like bad compression, but obviously it was only colour degradation, not bitrate.

True, although home videos are often filmed in natural light, which is the highest quality light of all, especially if you go back a decade or two, when film probably wasn't sensitive enough to shoot much after sunset and electronic images were diabolically bad until very, very recently!
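The dither point can be checked numerically. A minimal Python sketch, assuming uniform noise of plus/minus half a code step (a textbook dither, not a model of any camera's ISO noise): a shade of 0.3 can't be stored exactly at 3 bits, but once noise is added before rounding, the average over many pixels comes back to roughly 0.3. Averaging many pixels is also what downsampling does.

```python
import random


def quantize(value, bits):
    """Round a 0.0-1.0 value to the nearest of 2**bits - 1 equal steps."""
    steps = 2 ** bits - 1
    return min(1.0, max(0.0, round(value * steps) / steps))


def mean(values):
    return sum(values) / len(values)


bits = 3
step = 1 / (2 ** bits - 1)   # size of one code step
true_value = 0.3             # a shade that falls between two 3-bit codes
rng = random.Random(42)

# Without noise, every pixel rounds to the same code, so averaging cannot recover 0.3.
clean = quantize(true_value, bits)

# With +/- half a step of uniform noise (dither) added before rounding, pixels land on
# the two neighbouring codes in proportion to how close 0.3 is to each of them.
dithered = [quantize(true_value + rng.uniform(-step / 2, step / 2), bits) for _ in range(100_000)]

print("without dither:", round(clean, 4))
print("dithered, averaged over many pixels:", round(mean(dithered), 4))
```

Without the noise, the result is stuck at the nearest code (2/7, about 0.286) no matter how many pixels you average.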

-

IBIS is one of those things that gets lots of hype, which means that many people think it's great and then they get past the hype and think it's worthless. I think IBIS is something that some people really do need, while other people think they need it but haven't really explored all the options, and when they realise they didn't need it, they assume no-one else does either. I've shot with and without IBIS on the same lenses at the same time (doing camera comparisons), and I also regularly shoot in situations where IBIS gives normal handheld-looking motion because it's turning footage that would be completely unusable into footage with some motion in it. IBIS is like everything else in film-making, and in life more generally. It's neither good nor evil, but somewhere in between, and is context dependent. Anyone making sweeping generalisations just doesn't understand the subject well enough to realise that everything has pros and cons.

-

It's not my terminology - it's what I've put together from reading many threads about higher-end cameras vs cheaper ones and how people try to quantify the certain X-Factor that money can buy you. I've heard it enough over the years to get a sense that it's not being used randomly, nor only by one or two isolated people. I've seen first-hand that there are people who have much more acute senses than the majority of people. Things like synaesthesia and tetrachromacy exist, along with many others that we've probably never heard of, so just by pure probability there are people who can see better than I can, hence my desire to try and work out what it might be in a specific sense.

Interesting. Downsampling should give a large advantage in this sense then. I am also wondering if it might be to do with bad compression artefacts etc. This was on my list to test.

Converting images to B&W definitely ups the perceived thickness - that was on my radar but I'm still not sure what to do about it.

Interesting concept from DxO - I learned a few things from that. Firstly, that my GH5 is way better than I thought, being only 0.1 down from the 5D3, and also that it's way above my Canon 700D, which I thought was a pretty capable stills camera. People always said the GH5 was weak for still images and I kind of just believed them, but I'll adjust my thinking!

Yes, and then streaming video compression takes the final swing with the cripple hammer. This has long been a question in my mind: how can we evaluate the differences between a $50k camera and a $100k camera using a streaming service whose compression a $500 Canon camera would look down its nose at?

I suspect that it's a case of pushing and pulling the information in post. For example, if you shoot a 10-bit image in LOG that puts the DR between 30 and 80 IRE, and then you expand that DR out to 0-100 IRE, then you've stretched your bits to be twice as far apart, so it's now the equivalent of a 9-bit image.
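The "twice as far apart, so 9-bit" arithmetic can be sketched in Python. This is a back-of-envelope model under stated assumptions: a simple linear stretch, and IRE treated as a plain percentage of the full 10-bit code range (ignoring legal-range video levels and the non-linear shape of real LOG curves). Each doubling of the stretch costs one bit, i.e. effective bits = bits + log2(fraction of the range used).

```python
import math


def effective_bits(bits, fraction_of_range_used):
    """Effective bit depth after stretching a signal that used only part of the
    code range out to the full range: each 2x stretch costs one bit."""
    return bits + math.log2(fraction_of_range_used)


def stretch_to_full_range(code, low, high, max_code):
    """Linearly map integer codes [low, high] onto [0, max_code], rounding to integers."""
    scaled = (code - low) * max_code / (high - low)
    return min(max_code, max(0, round(scaled)))


max_code = 1023                                               # 10-bit
low, high = round(0.30 * max_code), round(0.80 * max_code)    # "30-80 IRE" as codes
used = {stretch_to_full_range(c, low, high, max_code) for c in range(low, high + 1)}

print(len(used), "distinct output codes out of", max_code + 1)  # 512
print("effective bits:", effective_bits(10, 0.5))               # 9.0
```

Counting the output codes gives the same answer as the formula: 512 distinct values, which is exactly what a 9-bit image can hold, just spread across the 10-bit range with gaps between them.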
Then we select skin tones and give them a bit more contrast (more stretching) and then more saturation (more stretching) etc etc. It's not hard to take an image that was ok to begin with and break it because the bits are now too far apart. We all know that, but the thing that I don't think gets as much thought is that the image is degrading with every operation, long before it 'breaks'. Even an Alexa shooting RAW has limits, and those limits aren't that far away. Have a look at a latitude test of an Alexa and read the commentary, and you'll note that the IQ starts to go visually downhill when you're overexposing by even a couple of stops... and this is from one of the best cameras in the world! My take-away from that is that I need to shoot as close to the final output as possible, so I switched from shooting HLG to Cine-D. This means that I'm not starting with a 10-bit image that I have to modify (ie, stretch) as the first step in the workflow.

Agreed. Garbage In = Garbage Out. Like I said above, if you're having to do significant exposure changes then you're throwing bits away as the first step in your workflow.

I think there are a couple of things in here. You're right that accurate colours aren't the goal, Sony proved that. But my understanding of the Portrait Score wasn't about accuracy, it was about Colour Nuance... "The higher the color sensitivity, the more color nuances can be distinguished." What you do with that colour nuance (or lack of it) determines how appealing it will look. Of course, any time you modify an image, you're stretching your bits apart, so in that sense it's better to do that in-camera, where it's processed uncompressed and (hopefully) at full bit-depth.

-

One of the elusive things that film and images from high-end cameras have is often called "thickness". I suspect that people who talk about "density" are also talking about the same thing. It's the opposite of the images that come from low quality cameras, which are often described as "thin" "brittle" and "digital". Please help me figure out what it is, so perhaps we can 'cheat' a bit of it in post. My question is - what aspect of the image shows thickness/thinness the most? skintones? highlights? strong colours? subtle shades? sharpness? movement? or can you tell image thickness from a still frame? ...? My plan is to get blind feedback on test images including both attempts to make things thicker and also doing things to make the image thinner, so we can confirm what aspect of the image really makes the difference.

-

Which BMPCC? 2K? 4K? 6K? They're all different sensor sizes..... you need to be specific.

-

They'll likely stick with DFD, and while it is painful now, it is the future. PDAF and DPAF are mechanisms that tell the camera which direction and how far to go to get an object in focus, but not which object to focus on. DFD will eventually get those things right, and will also know how to choose which object to focus on. I see so many videos with shots where the wrong object is perfectly in focus, which is still a complete failure. This is from the latest Canon and Sony cameras. Good AF is still a fiction being peddled by fanboys/fangirls and bought-and-paid-for ambassadors.

Totally agree - as a GH5 user I'm not interested in a modular-style cinema camera, and if I was, then I'd pick up my BMMCC and use that, with the internal ProRes and uncompressed cDNG RAW.

It might sell well. The budget end of the modular cine-camera market is starting to heat up, and as people get used to things like external monitors (for example to get ProRes RAW), external storage (like SSDs on the P4K/P6K) and external power (to keep the P4K/P6K shooting for more than 45 minutes), modularity won't be as unfamiliar as it used to be. All else being equal, making a camera that doesn't need an LCD screen (or two if it has a viewfinder) should make the same product cheaper to manufacture.

-

Is the DSLR revolution finished? If so, when did it finish? Where are we now? It seems to me like it started when ILCs with serviceable codecs became affordable by mere mortals. Now we have cinema cameras shooting 4K60 and 1080p120 RAW (and more) for the same kind of money. Is this the cinema camera revolution? What is next? Are there even any more revolutions to have? (considering that we have cameras as good as what Hollywood had 10 years ago).

-

This video might be of interest in matching colour between different cameras. He doesn't get a perfect match by any means, but it's probably 'good enough' for many, and, probably more importantly, it's a simple non-technical approach using only the basic tools.

-

Oh, the other thing I recommend is to test things yourself. I've proven things wrong in 5 minutes that I believed for years and never heard anyone challenge or question. Most of the things that "everyone knows" online are pure BS, and the ratio of information to disinformation is so small that if someone is disagreeing with the majority of people, then the majority is probably wrong and maybe the minority is right. Oh, and if someone tells you something is simple, they just don't understand it enough.

-

YouTube 4K is barely better than decent 1080p... here's a thread talking about this very topic:

In terms of why your 200D 1080p is soft, that's because it's a Canon DSLR; it has nothing to do with 1080p.

My advice is this: Watch a bunch of videos ON VIMEO to see what cameras are really capable of in 1080p - you'll be amazed. If you decide you want/need 4K then so be it, but do yourself a favour and try to actually look at images instead of brand names. And yes, "4K" is a brand name - it's how TV manufacturers marketed it to get people to replace their perfectly good 1080p TVs. Most movie theatres have 2K projectors, so lots of marketing was needed to get people to buy a TV that has roughly 4 times as many pixels as a movie theatre.

Forget about Canon, or be willing to pay the Canon Hype Tax. The internet is full of people who think that Canon is the king and everything else is second class. These people are fools who don't know how to tell if a camera is any good or not, so they just check what brand it is and then go hang out with the people they know will make them feel better. Canon has great colour science - so do most other brands. Canon has great AF - so do many other brands. Canon cripples their products because the fanboys and girls will buy whatever they're selling anyway.

Go to the ARRI website, the RED website, and the BM website, download their sample clips and have a look at how plain they are. Try and colour grade them and see what you get. This should show you that the glorious images you are seeing online from the cameras you're lusting after, the Canon ones especially, are due to the skill of the operator in post, rather than the manufacturer who designed the camera.

Good luck. My journey started with me wondering why my 700D 1080p files looked so bad and thinking I needed Canon colour science and 4K to get good images. I've now deprogrammed myself and use neither Canon equipment nor 4K, but I've spent a lot of money on glass. Good luck.
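The pixel-count comparison is easy to check. A short Python sketch, assuming a full DCI 2K container (2048x1080) for the cinema projector and a 3840x2160 UHD panel for the "4K" TV; by these numbers a UHD TV has 3.75 times the pixels of a 2K projector, and exactly 4 times the pixels of 1080p.

```python
# Pixel counts for common formats (DCI 2K full container used for the cinema projector).
formats = {
    "HD 1080p (1920x1080)": 1920 * 1080,
    "DCI 2K (2048x1080)": 2048 * 1080,
    "UHD 4K TV (3840x2160)": 3840 * 2160,
}
cinema_2k = formats["DCI 2K (2048x1080)"]

for name, pixels in formats.items():
    print(f"{name}: {pixels:,} pixels, {pixels / cinema_2k:.2f}x DCI 2K")
```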

-

I've heard that VFX is a different thing entirely, and that you want RAW and as high a resolution as possible. The RAW is because you want clean green-screens, so you can pull a key without having to battle compression that blurs edges etc. The resolution is so that the tracking is as accurate as possible, so that when you composite 3D VFX into the clip, the VFX parts are as 'locked' to the movement of the captured footage as possible - VFX tracking has to be sub-pixel accurate so that the objects appear to be in the same space as the footage. Screening in 2K is probably an advantage as well, as it would mean that there is a limit to how clearly the VFX will be seen, so in that sense 2K probably covers up a bunch of sins... like SD (and then HD) hid details of the hair/makeup department's work that higher resolutions exposed.
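To illustrate what "sub-pixel accurate" can mean, here is one common textbook trick, sketched in Python: find the best whole-pixel match score, then fit a parabola through that score and its two neighbours to estimate where the true peak lies between pixels. This is an illustrative assumption, not a description of how any particular VFX tracker works, and the match scores in the example are made up.

```python
def subpixel_peak(scores):
    """Refine the best integer offset in a 1-D list of match scores to sub-pixel
    precision by fitting a parabola through the peak and its two neighbours.

    Only interior positions are considered, so a neighbour exists on each side.
    """
    i = max(range(1, len(scores) - 1), key=lambda k: scores[k])
    left, centre, right = scores[i - 1], scores[i], scores[i + 1]
    denom = left - 2 * centre + right
    offset = 0.0 if denom == 0 else 0.5 * (left - right) / denom
    return i + offset


# Hypothetical match scores whose true peak sits between pixels 3 and 4.
scores = [-(x - 3.3) ** 2 for x in range(7)]
print(subpixel_peak(scores))  # close to 3.3, not just the whole pixel 3
```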

-

@Neumann Films I posted it here because this thread is about the poor quality of the YouTube codec, rather than any commentary about the R5 or the economics or artistic value of vlogging. We all have opinions on a range of matters, but I just thought it was an interesting example, considering that Matti's audience is tech / image / camera centric, and yet even on such a channel a workflow upgrade / resolution downgrade wasn't really noticed. Even for Matti, who even the nay-sayers would suggest is heavily preoccupied with his social media engagement and comments section opinion on various camera products. For reference, I watch his content on a 32" UHD display, in a suite calibrated to SMPTE standards (display brightness is 100cd/m2, ambient light is ~10% of that luma with a colour temp of 6500K, and viewing distance within the sweet spot of viewing angle, etc). Maybe some are watching on a phone, but not everyone - one of the first things those who are caught up in the hype of things like Canon CS / 4K / cinematic LUTs / 120p b-roll etc would acquire is a big TV and/or 4K computer monitor. I know that because when I got into video that's one of the first things I did, as that was the prevailing logic online, and I definitely wasn't the only one. Threads like this one are part of my journey of un-learning all the stuff the internet is full of that is often flat-out wrong. For anyone delivering via a platform other than YouTube it's a different story. Of course, not that different if you do a little reading about how many features have been screened in 1080p in theatres without the film-makers getting a single comment about resolution, but that's a different thread entirely 🙂

-

Matti Haapoja got the Canon R5. Matti Haapoja shot in 4K and 8K. Matti Haapoja's computer absolutely choked. Matti Haapoja went back to 1080. Apparently, no-one noticed.

-

Agreed. Like everything in film-making, it's great if you can make it look nice, but if it gets in the way of the story or content, then it's wrong. I remember a wedding photographer once talking about taking group photos, and how it is critical to get everyone in focus in a group shot, which can be tricky with a large group, as the people at the edges are a different distance away. They also mentioned that the most important people at a wedding are the bride/groom, but the second most important people are the oldies, as it's very common for "the last nice photo of grandma" to have been taken at a wedding, so making sure to get them in focus should be a huge priority. It's easy to forget, and make the film equivalent of a cake made entirely of icing.
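Whether a whole group will be in focus can be estimated with the standard thin-lens depth-of-field formulas (hyperfocal distance, then near and far limits). A minimal Python sketch; the 0.03 mm circle of confusion is a common full-frame assumption, and the 50mm / f/8 / 5 m group shot is a hypothetical example.

```python
def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Near and far limits of acceptable focus (thin-lens approximation).

    coc_mm is the circle of confusion; 0.03 mm is a common full-frame assumption.
    Returns (near_mm, far_mm); far is infinity at or beyond the hyperfocal distance.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = float("inf")
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


# Hypothetical group shot: 50mm lens at f/8, focused on the middle of the group at 5 m.
near, far = depth_of_field(50, 8, 5000)
print(f"in focus from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

If the people at the ends of a curved row are closer than the near limit (or the back row is past the far limit), stopping down or focusing slightly further into the group is the usual fix.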

-

The more I pull colour science apart, the more I realise that companies like BM and Nikon have colour science either just as good as Canon or within a tiny fraction, and also that ARRI colour science isn't perfect and there are things about it I don't care for. I know that this will get me ejected from the 'colour science bro club' but I don't care about being popular and fitting in, I care about colour science and good images.