Everything posted by kye

-

The more I fall down the colour grading rabbit hole, the more I realise how deep it goes. I've developed two parallel (and perhaps incompatible) ideas about colour grading.

The first is that those of us who work on low-budget / no-budget / small-crew / no-crew productions are at a tremendous disadvantage. This is because:

A good grade starts on set, with location selection, shoot schedules, set design, costume, hair, etc., and we may have little or no control over those things. On a large-budget show, professionals who have been applying colour theory for 20 years can make sure that every object on the set supports a colour scheme that reinforces the emotional and aesthetic experience of the project, before anyone even arrives to shoot.

A good grade also benefits from great lighting design and execution. Large-budget productions can do things like place heaps of lights further away from the scene, and may have time to tailor the lighting to every setup individually.

A great camera (which most of us aren't shooting with because we can't afford one) records at higher bitrates with more robust codecs, allowing more flexibility in post. Plus, many manufacturers' colour science is nicer on their flagship cine cameras than on their budget models or hybrids. Edit: This is actually changing now, with the P4K/P6K etc. offering uncrippled codecs, bitrates, and colour science.

On top of that, and while I won't speak for others here, it's definitely something I suffer from: sometimes the cinematographer (me!) royally screws the pooch on shots!

In this sense, our job is actually much more difficult: when we sit down to grade, the footage is less robust and needs more work (in my case it often needs urgent medical attention!), so the gap between the source footage and greatness is that much larger to span.

The second idea is that it isn't the first one that dominates; rather, colouring is far more difficult than people make it out to be, and colourists are far more skilled than we (or I) give them credit for. My recent posts in the XC10 thread showing the noise in that Cinque Terre image are a case in point. It's got horrible 8-bit LOG noise, but I stumbled upon something that seems to magically rescue the image. How many techniques like that are colourists using all the time as just part of the job? Who knows.

Things I've read from colourists include: "If it's well shot I just adjust levels and primaries and the image pops into place"; "Yes, the Alexa often skews towards green, no problem, just grade it out"; and "That project had some issues so I just had to work through them with power windows and pulling keys".

I'm starting to have a sneaking suspicion that these comments (from professionals who have a reputation to uphold) mask the fact that they might regularly be getting fed awful, damaged images, where they sometimes have to pull out all the stops, maybe with a dozen or so power windows adjusting every freaking little thing, to get something that looks like there was never a problem in the first place.

I'm actually testing this theory: I'm collecting a bunch of RAW sample footage from ARRI, RED, and others and am going to start grading it. It should be a good reality check on how difficult my footage is to grade compared with the example footage these manufacturers put on their websites, which should be basically problem-free.

Anyway, one thing I once read from a colourist was "if the image looks good, it is good", so if you've got a workflow that works for you, declare victory on the project and move on with your life 🙂

-

Thanks Chris - you too! I think I've also got a solid understanding of the basics, at least on a technical level. I'm hoping more for hints on 1) how to recognise why an image might look 'funny' and what to do about it, and 2) how to take an image that looks OK and make it pop a bit more. I've picked up a bunch of ideas from various colour grading videos, but I'm hoping to find a more systematic approach. I feel like the YT colourists talk about the 'tricks' but don't cover the fundamentals, so it's like I'm cooking with rubbish ingredients and the wrong recipe, but I've got 100 suggestions for which spices to finish it off with.

-

I'd be curious too. Noam Kroll is someone I read regularly, and I've seen that he's got LUT packs and the like for sale, but I haven't really got a sense of how good they are. Almost everyone online has LUT packs for sale, except the professional colourists who talk about the fundamentals. I suspect they also know all the 'tricks' but probably don't want to give them away.

-

I was recommended the Colour Correction Handbook (Second Edition) by Alexis Van Hurkman, so I ordered it. It arrived today, and I'm having trouble remembering a time I was so excited about a book. I wasn't excited when it arrived; I only got excited when I started reading through the contents page and flicking through some of the chapters. It's like every heading is something I've been wondering about and struggling with. At first glance it looks like a good mix of technical material, techniques, and creative applications. And the best part? It's over 600 pages!

-

EOSHD testing finds Canon EOS R5 overheating to be fake

kye replied to Andrew Reid's topic in Cameras

Matti credited EOSHD in this video... but didn't link here. 46K views after being up for 15 hours.

-

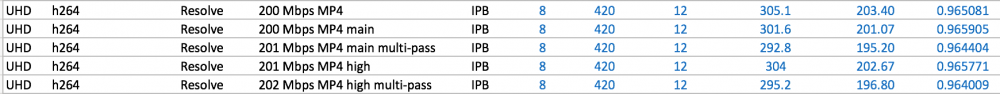

Compared to my current machine, the spec I'm looking at will be 2.6x as powerful, and that's the CPU alone. GPU benchmarks will be almost double. That's before we get to the T2 chip, which the new one has and this one doesn't. Then if I decide to upgrade my eGPU, well....

-

Exporting some trials now. It occurs to me that I was previously exporting as QuickTime -> h264, so I'm trying MP4 -> h264, which has more settings. On OSX with my dated version of Resolve, the only encoding profiles are Auto, Base, Main, and High. I also have a multi-pass mode, so I've queued up a few combinations of those. Slowly but surely...

-

Yeah, that wouldn't make sense.

-

I think we're getting our wires crossed here. Your test above is what I would expect. If you have an 18-55mm f2.8-4 zoom, you would expect it to be brighter at f2.8 than at f4. If the manufacturer decides to offer a constant-aperture 18-55 zoom, it might take that design and just make it 18-55/4, which it can do by taking the f2.8-4 design and changing it so that f2.8 isn't available at the wide end, i.e., crippling the lens.

-

The f-number is literally the ratio of the focal length to the diameter of the hole (strictly, the entrance pupil), so a constant f-number should give approximately constant exposure. I say "approximately" because exposure also depends on other things, like the transparency of the glass and internal reflections, but it should be pretty darn close. I suggest you do a test: put the camera into full manual, find a large blank surface, and zoom in and out, both at a constant aperture and at maximum aperture, and see whether the brightness of that surface changes. You should see an increase in exposure (of that reference surface) when the aperture opens up as you zoom out.
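To put numbers on this: since the f-number N is focal length divided by pupil diameter, and the light gathered scales with pupil area (i.e. with 1/N²), the exposure difference between two f-numbers is 2·log2(N2/N1) stops. A minimal Python sketch under those textbook assumptions (the function names are just for illustration, and it ignores glass transmission, as mentioned above):

```python
import math


def pupil_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """Entrance pupil diameter implied by N = focal length / pupil diameter."""
    return focal_length_mm / f_number


def stops_between(n_wide: float, n_narrow: float) -> float:
    """Exposure difference in stops between two f-numbers.

    Light reaching the sensor scales with pupil area, i.e. with 1 / N**2,
    so the difference is log2((n_narrow / n_wide) ** 2) = 2 * log2(n_narrow / n_wide).
    Transmission losses are ignored.
    """
    return 2 * math.log2(n_narrow / n_wide)


# At a constant f/4 the pupil grows in step with focal length...
print(pupil_diameter_mm(18, 4))  # 4.5
print(pupil_diameter_mm(55, 4))  # 13.75
# ...and opening up from f/4 to f/2.8 gains roughly one stop.
print(round(stops_between(2.8, 4), 2))  # 1.03
```

This is why a constant-aperture zoom needs a physically bigger opening at the long end: the pupil has to triple in diameter going from 18mm to 55mm just to hold f/4.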

-

I think what @Enjay is saying is that when you design a zoom, its maximum aperture will always be larger at the wide end, so to make a constant-aperture zoom they just design it to close down at the wide end to match the maximum aperture of the long end. In this sense, if you had a variable-aperture zoom, say an 18-55mm f3.5-5.6, you could just set it to f5.6 and zoom in and out, and you'd have the benefits of a constant aperture, as well as being able to open up wider than f5.6 in the middle and at the wide end.
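The same logic can be sketched in Python: the "constant" aperture you can hold across a variable zoom is its slowest maximum aperture, and every other focal length has some headroom beyond that. The focal-length-to-maximum-aperture table below is hypothetical, not measured from any real 18-55mm lens:

```python
import math

# Hypothetical maximum apertures for an 18-55mm f3.5-5.6 zoom at a few focal lengths.
# Real lenses step between these values in their own way; these are illustrative only.
MAX_APERTURE = {18: 3.5, 24: 4.0, 35: 4.5, 45: 5.0, 55: 5.6}


def usable_constant_aperture(max_aperture: dict) -> float:
    """Widest f-number available at every focal length, i.e. the slowest maximum aperture."""
    return max(max_aperture.values())


def headroom_stops(max_aperture: dict) -> dict:
    """Stops by which each focal length can open up beyond that constant aperture."""
    n_const = usable_constant_aperture(max_aperture)
    return {fl: round(2 * math.log2(n_const / n), 2) for fl, n in max_aperture.items()}


print(usable_constant_aperture(MAX_APERTURE))  # 5.6
print(headroom_stops(MAX_APERTURE))
```

With these made-up values the wide end has roughly 1.4 stops in hand over f5.6, which is exactly what a constant-aperture version of the same design would give away.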

-

Prores from ffmpeg is significantly different, with different bitrates and performance. Obviously this is a rabbit hole I'm going to be falling down for a while!

-

Just found out that ffmpeg can encode to Prores. The tests continue!
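For anyone wanting to try the same, here is a minimal Python sketch that builds an ffmpeg ProRes command line. It assumes ffmpeg's prores_ks encoder, whose profile numbers run from 0 (Proxy) to 5 (4444 XQ); ffmpeg also ships other ProRes encoders, and the file names are placeholders:

```python
# ProRes profile numbers accepted by ffmpeg's prores_ks encoder via -profile:v.
PRORES_PROFILES = {"proxy": 0, "lt": 1, "standard": 2, "hq": 3, "4444": 4, "4444xq": 5}


def prores_command(src: str, dst: str, profile: str = "hq") -> list:
    """Build an ffmpeg argument list that transcodes src to ProRes using prores_ks."""
    # 4444 variants carry full 4:4:4 chroma; the 422 variants use 4:2:2.
    pix_fmt = "yuv444p10le" if profile.startswith("4444") else "yuv422p10le"
    return [
        "ffmpeg", "-i", src,
        "-c:v", "prores_ks",
        "-profile:v", str(PRORES_PROFILES[profile]),
        "-pix_fmt", pix_fmt,
        dst,
    ]


cmd = prores_command("clip.mp4", "clip_hq.mov", "hq")
print(" ".join(cmd))
# To actually encode, pass the list to subprocess.run(cmd, check=True).
```

Building the arguments as a list rather than one string avoids shell-quoting problems with file names that contain spaces.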

-

This is interesting / surprising. Do you mean that the Colour Space Transform is wrong? I have found with the "cheaper" cameras (XC10, GH5) that it's unclear which colour profiles they align with, and there are no clear answers online. I just assumed that the C-Log in the XC10 would be the first version and that the colour space would be Canon Cinema Gamut, but I guess not. It was billed as fully compatible and able to be processed alongside footage from the other cine line cameras, although @jgharding mentioned above that this wasn't the case in reality.

I'm not against grading it manually, so that's not an issue. I've been wondering what the best approach to grading flat footage is. I've got about half a dozen projects in post at the moment with XC10 "C-Log", GH5 "HLG", GoPro Protune, and Sony X3000 "natural", which are all flat profiles with no direct support in CST / RCM / ACES. I was thinking one of my next tests would be to grab some shots and grade them using LGG, Contrast/Pivot, and Curves, each applied directly, after a CST to rec709, and after a CST to Log-C (etc.?), to try to get a sense of how each one handles contrast and the various curves. I was hoping to get a feel for each of them, to understand which I liked and when I might use each one.

I suspect that Curves will be the one, as it lets me compress/expand various regions of the luma range depending on the individual shot. For example, I might have two shots: one with information across the whole luma range, where I would only want gentle manipulations, and a second where the foreground is all below 60IRE and the sky is between 90 and 100IRE. In that case I might want to eliminate the 60-90IRE range and make the most of the limited range of rec709, bringing up the foreground without crushing the sky. What's that saying...
"I was put on earth to accomplish a number of things - I'm so far behind I will never die". I have sooooo much work to do.
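The 60-90IRE idea above is essentially a custom tone curve. As a rough sketch of what a Curves adjustment would be doing, here is a piecewise-linear curve in Python; the control points are made up for this example, and real grading curves are usually smooth splines rather than straight segments:

```python
from bisect import bisect_right

# Hypothetical control points (input IRE -> output IRE) for the example above:
# lift the foreground (0-60), squeeze the mostly empty 60-90 range, keep the sky near the top.
CURVE = [(0, 0), (60, 78), (90, 82), (100, 100)]


def apply_curve(ire: float, points=CURVE) -> float:
    """Piecewise-linear tone curve: interpolate between neighbouring control points."""
    xs = [x for x, _ in points]
    if ire <= xs[0]:
        return points[0][1]
    if ire >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, ire) - 1  # index of the segment's left-hand control point
    (x0, y0), (x1, y1) = points[i], points[i + 1]
    return y0 + (y1 - y0) * (ire - x0) / (x1 - x0)


for ire in (30, 60, 75, 95):
    print(ire, "->", apply_curve(ire))
```

With these points the foreground gets a 1.3x lift, the 60-90IRE band is squeezed into 4IRE, and the sky still spans 82-100IRE, so it is stretched rather than crushed.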

-

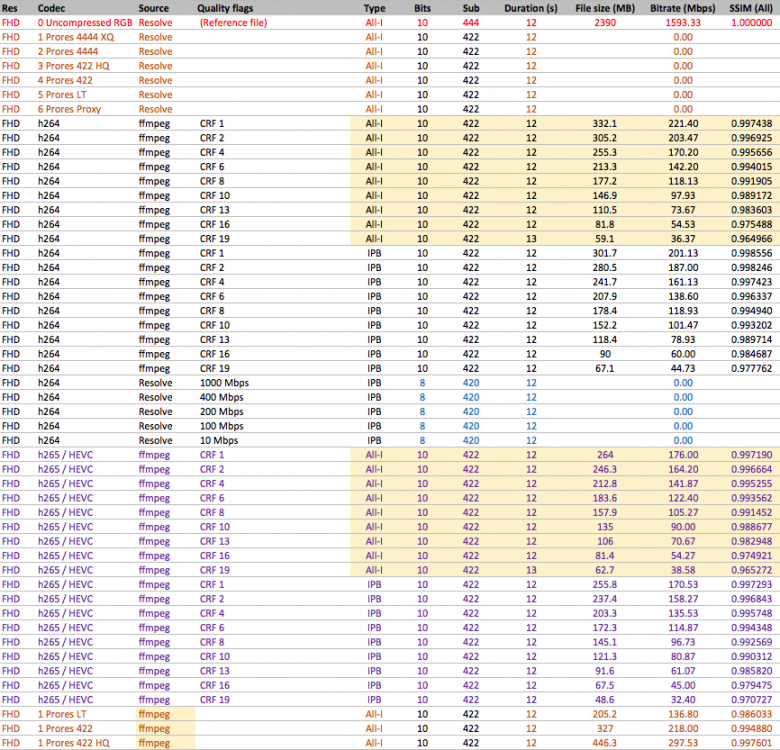

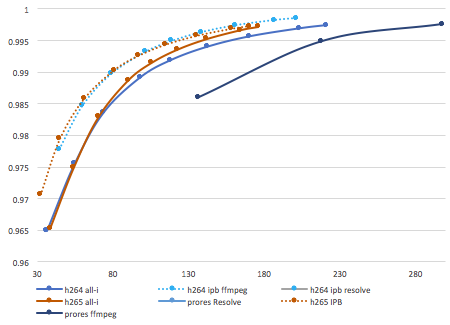

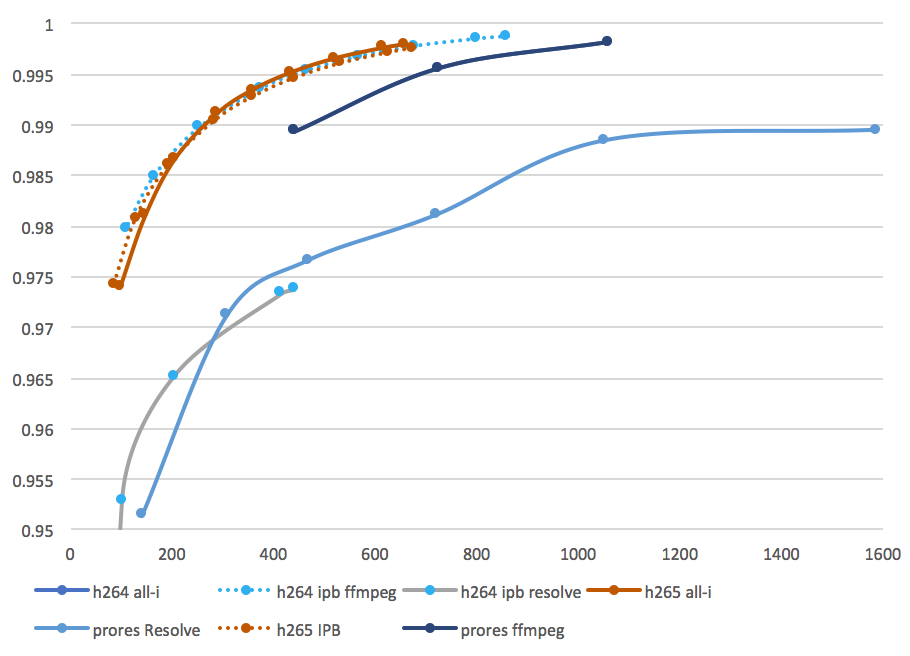

It's a bit more complicated actually, which tripped me up. You're right that it's "-intra" for h264, but the h265 conversion doesn't accept that; it just ignores it, hence my question. You want "-x265-params keyint=1" for the h265 conversion. For example: You can tell it's working because when you run it, it shows: The comparison command to render the SSIM is:

I didn't look at this specifically (and now I don't have to, since you did!) but it makes sense. In terms of an acquisition format, the choice between Prores and h264/5 doesn't really come up much if your h264 option is below ~150Mbps, which is where I went down to. Prores Proxy is 141Mbps and people don't really talk about using that in-camera, so in a sense that's below the level where the acquisition conversation happens.

It should scale perfectly if you take a bits-per-pixel approach: Prores bitrates for UHD are exactly 4x those for FHD. Thus, I would assume that you can simply translate the equivalences. I might do a few FHD tests just to check that logic, but I can't see why it wouldn't be true.

I have two remaining questions: 1) How do I get Resolve to export better h264 for upload to YT? 2) Is Resolve's Prores export that good? The h264 outputs didn't seem to be anywhere near ffmpeg's, so maybe the Prores export is weak too? I'll have to return to this and investigate further. I wonder if there's a way I can configure it to be better than 4:2:0 and 8-bit too.
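For reference, a Python sketch that assembles the kind of command lines discussed here. Only "-x265-params keyint=1" comes from the post; using "-g 1" (a keyframe interval of one) for libx264 instead of "-intra", and the exact SSIM filter invocation, are my assumptions, so check them against your own ffmpeg build. 10-bit 4:2:2 output also needs encoders built with 10-bit support:

```python
def intra_encode_command(src: str, dst: str, codec: str, bitrate: str) -> list:
    """Build an ffmpeg argument list for an all-intra 10-bit 4:2:2 encode.

    libx264: -g 1 sets the keyframe interval to 1, so every frame is an I-frame.
    libx265: the keyframe interval is passed through -x265-params keyint=1.
    """
    cmd = ["ffmpeg", "-i", src, "-pix_fmt", "yuv422p10le", "-b:v", bitrate]
    if codec == "h264":
        cmd += ["-c:v", "libx264", "-g", "1"]
    elif codec == "h265":
        cmd += ["-c:v", "libx265", "-x265-params", "keyint=1"]
    else:
        raise ValueError(f"unsupported codec: {codec}")
    return cmd + [dst]


def ssim_command(distorted: str, reference: str) -> list:
    """Compare an encode against its reference with ffmpeg's ssim filter.

    The SSIM summary is printed to ffmpeg's log; no output file is written.
    """
    return ["ffmpeg", "-i", distorted, "-i", reference,
            "-lavfi", "[0:v][1:v]ssim", "-f", "null", "-"]


print(" ".join(intra_encode_command("master.mov", "test_h265.mp4", "h265", "170M")))
print(" ".join(ssim_command("test_h265.mp4", "master.mov")))
```

Putting every frame on a keyframe is what makes these ALL-I; the bitrate then only controls how heavily each frame is compressed on its own.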

-

Unconventional choice, but my most used lights are: 1) the sun, 2) a halogen work light from the hardware store (for lens tests), and 3) an Aputure AL-M9, which I own and is super handy too. Mostly it's available lighting for me though, so the sun, but also practicals at night.

-

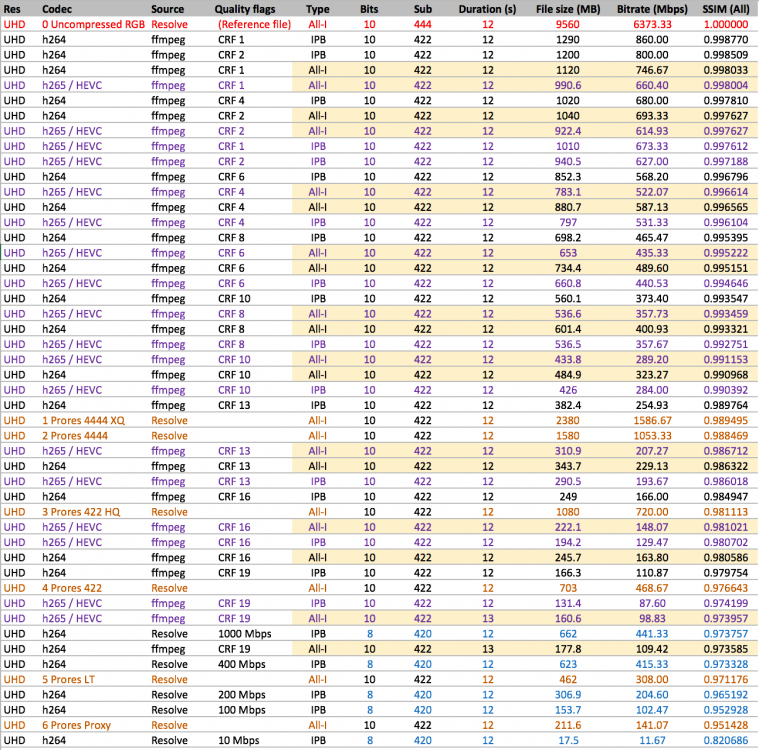

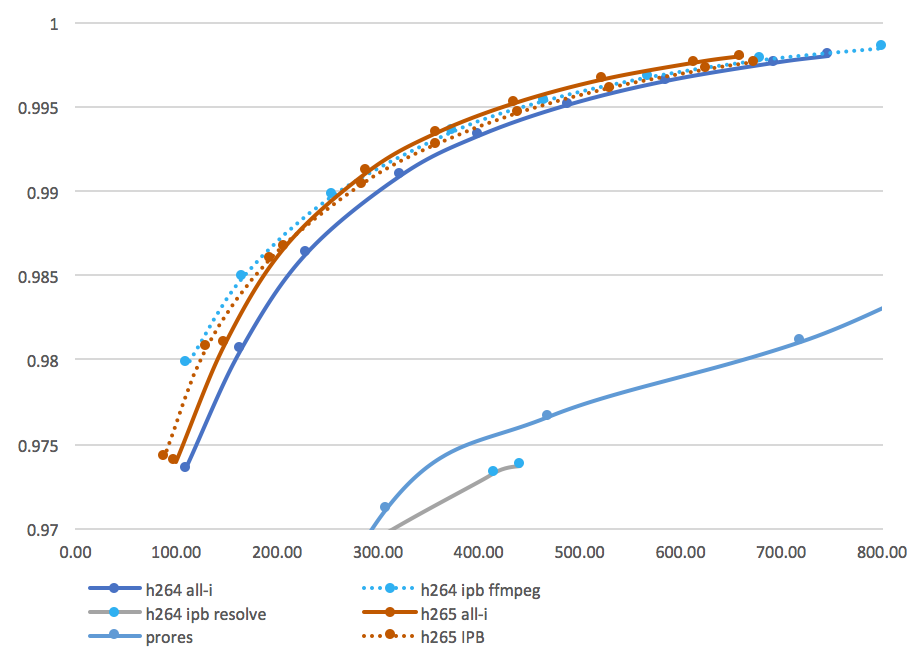

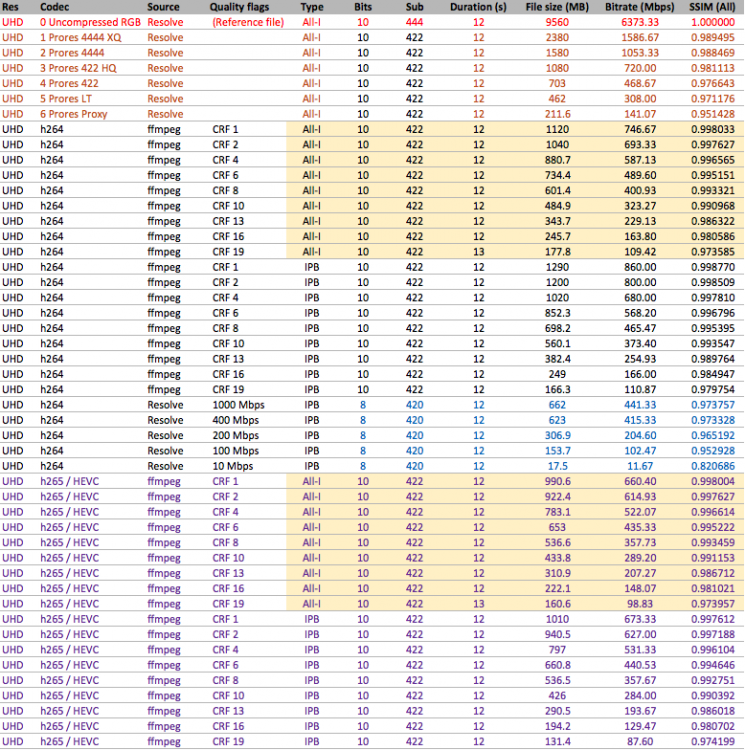

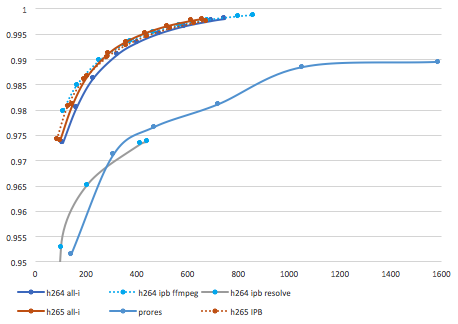

OK, here's the second attempt..... and graphed. So, it looks like:

1) UHD Prores 422 HQ from Resolve can be matched in quality by ffmpeg h264 4:2:2 10-bit ALL-I at around 170Mbps, and by h265 at a similar bitrate, which is about a 4X reduction in file size
2) UHD Prores 4444 and 4444 XQ from Resolve can be matched in quality by ffmpeg h264 4:2:2 10-bit ALL-I at around 300Mbps, and by h265 at around 250Mbps, which is about a 3-4X reduction in file size
3) There doesn't appear to be a huge difference between h264 and h265 ALL-I efficiency, maybe only a 10-15% reduction

Happy to answer any questions and to have the results challenged. Let's hope I didn't stuff anything up this time 🙂
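One way to read a "matched in quality at around X Mbps" figure off graphs like these is to interpolate the SSIM-vs-bitrate curve at the SSIM of the Prores reference encode. A minimal Python sketch, assuming SSIM rises monotonically with bitrate; the numbers below are made up for illustration and are not the results graphed above:

```python
def matching_bitrate(points, target_ssim):
    """Linearly interpolate the bitrate at which an encoder's SSIM reaches target_ssim.

    points: (bitrate_mbps, ssim) pairs from test encodes; SSIM is assumed to rise with bitrate.
    Returns None if the target is not reached within the tested range.
    """
    points = sorted(points)
    for (b0, s0), (b1, s1) in zip(points, points[1:]):
        if s0 <= target_ssim <= s1:
            if s1 == s0:
                return b0
            return b0 + (b1 - b0) * (target_ssim - s0) / (s1 - s0)
    return None


# Hypothetical test encodes and reference SSIM, for illustration only.
h264_tests = [(100, 0.9700), (150, 0.9780), (200, 0.9820), (300, 0.9870)]
prores_hq_ssim = 0.9800
print(round(matching_bitrate(h264_tests, prores_hq_ssim), 1))  # roughly 175 with these made-up numbers
```

Linear interpolation between test points is crude, so the more bitrates you test around the crossover, the more trustworthy the matched figure becomes.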

-

Thank you! That worked like a charm, unlike the h265 intra encoding, which worked exactly the same as the non-intra encoding: the SSIM comparison between an intra encode and a non-intra encode came out at 1.000000. 😂😂😂😂 Now to work out how to encode 10-bit intra. You already need a different binary for h265, and who knows what support for intra it has, or doesn't have. The commentary I read said that the h264 and h265 modules take the same arguments, but obviously there are differences.

-

I had previously considered buying a Mac Mini as a desktop plus a low-spec laptop, and the T2 chip caught my eye in that scenario. I have since realised that although the 13" MBP I'm buying is only quad-core and the Mac Mini is 6-core, the Mac Mini's cores are slower, being only 8th generation (IIRC), whereas the 13" MBP's are 10th generation, and that basically makes up the difference. I'll be getting the T2 chip regardless, and combined with my decision to go back to 1080p and use the 200Mbps ALL-I mode on the GH5, it should cut like butter. The question then becomes how it handles the footage from the Sony X3000, which is 100Mbps h264 IPB, and from my phone, which is h265. I was wondering whether I should render those to Prores HQ 1080 and grade from there, or just stick with the original files. I guess the T2 chip might mean I keep the originals of those files. Interesting times.

-

Nice colours! It needs more saturation though - see below reference image!

-

No idea. I guess bigger is better for low-light, but bigger requires bigger optics, so there would be a limit. MFT seems like it might be around that size, but who knows.

-

I don't know about you, but I'm increasingly finding that that feature rather misses the mark. Not only has math stopped being my friend ("hi, great to see you, wow, when was the last time I was in town, it was..." "STOP! Don't do it!" "...22 years ago at that bar!"), but Facebook now intermittently shows me pictures of me with my ex, tells me that it's my mum's birthday (reminding me that she's dead), or reminds me of friendships lost, etc. etc... There's a quote I found particularly amusing: in about 60 years there will be 800 million dead people on FB.

-

Agreed. We're nowhere near the end of this thing. We'll know it's actually getting desperate when the bottom falls out of the real-estate market. I don't know about you in NZ, but here in AU the market doesn't tend to drop, it just stagnates. That's partly because people refuse to sell and take the loss, and partly because of the constant bullish marketing by real-estate folks, but it goes down when people are forced to sell because they can't cut elsewhere in their living expenses to make up the difference.

-

Screw it. Goodbye 4K, hello 1080p.

Why? I can either:

1) Spend hundreds of dollars replacing my 2x256GB SD cards with 2x512GB UHS-II SD cards (to retain similar recording times)
2) Shoot 400Mbps 4K 16:9 or 3.3K 4:3 10-bit 4:2:2 ALL-I

Or:

1) Spend that money instead on upgrading the SSD in the MBP I'm about to buy, so I can edit files SOOC
2) Shoot 200Mbps 1080p 10-bit 4:2:2 ALL-I
3) Completely eliminate the proxy workflow I've had to use, with all the rendering and re-conforming of timelines between proxies and the original SOOC footage
4) Colour grade on 25% as many pixels as UHD, giving me either 4X the fps in playback or 4X the processing headroom for the same performance
5) Use Resolve's Super Scale feature when rendering for 4K YT uploads (this looks pretty good after all......)
6) Use the GH5's digital zoom instead of cropping in post (this is for when I don't have a long enough lens on the camera; the digital zoom appears to apply to the 5K sensor readout, so it isn't a crop into the downscaled 1080p image but an adjustment of the downscale that is already occurring)

All else being equal, h264 ALL-I codecs are slightly better than Prores. Prores HQ for 1080p is 176Mbps and the best 1080p mode on the GH5 is 200Mbps. Both are 10-bit, 4:2:2, and ALL-I, so all else is equal. So, if 1080p Prores HQ is good enough for feature films, then that mode should be good enough for me, since I'm only publishing to YT or watching locally on TVs. The last footage I was involved in shooting that got projected came from a PD150, so the big screen doesn't factor into my world that much lol.
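The arithmetic behind a few of these points, as a quick Python sketch. It assumes decimal gigabytes (as card makers use), ignores filesystem overhead and audio, and uses 23.976fps for the bits-per-pixel comparison, which is the frame rate the 176Mbps Prores HQ 1080p figure corresponds to:

```python
def record_minutes(card_gb: float, bitrate_mbps: float) -> float:
    """Recording time on one card (decimal GB, no filesystem or audio overhead)."""
    return card_gb * 1e9 * 8 / (bitrate_mbps * 1e6) / 60


def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: float) -> float:
    """Average bits spent on each pixel of each frame."""
    return bitrate_mbps * 1e6 / (width * height * fps)


print(round(record_minutes(256, 400), 1))  # 85.3  (4K 400Mbps on a 256GB card)
print(round(record_minutes(256, 200), 1))  # 170.7 (1080p 200Mbps on the same card)
print((1920 * 1080) / (3840 * 2160))       # 0.25, i.e. 25% as many pixels as UHD

# GH5 200Mbps 1080p ALL-I vs 176Mbps Prores HQ 1080p, both at 23.976fps
print(round(bits_per_pixel(200, 1920, 1080, 23.976), 2))
print(round(bits_per_pixel(176, 1920, 1080, 23.976), 2))
```

Halving the bitrate doubles the recording time per card, which is why the 1080p mode keeps the existing 256GB cards viable without buying 512GB replacements.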

-

Haters gonna hate.... That's top 5% of the most sensible things ever said on a camera forum. Nice to see a departure from the "based on one video I'm going to buy it / I'll never buy it" all-or-nothing sentiments 🙂

-

Does anyone know how to confirm that my h265 files are ALL-I? On the h264 files, ffprobe reports this for the ALL-I ones:

`Stream #0:0(und): Video: h264 (High 4:2:2 Intra) (avc1 / 0x31637661), yuv422p10le, 3840x2160 [SAR 1:1 DAR 16:9], 745951 kb/s, 23.98 fps, 23.98 tbr, 24k tbn, 47.95 tbc (default)`

and this for the h264 IPB ones:

`Stream #0:0(und): Video: h264 (High 4:2:2) (avc1 / 0x31637661), yuv422p10le, 3840x2160 [SAR 1:1 DAR 16:9], 860083 kb/s, 23.98 fps, 23.98 tbr, 24k tbn, 47.95 tbc (default)`

but the h265 files I rendered with -intra as an argument show this:

`Stream #0:0(und): Video: hevc (Rext) (hev1 / 0x31766568), yuv422p10le(tv, progressive), 3840x2160 [SAR 1:1 DAR 16:9], 350312 kb/s, 23.98 fps, 23.98 tbr, 24k tbn, 23.98 tbc (default)`

which seems to suggest they're progressive, but I definitely used the -intra flag. I even converted another one with the arguments in a different order, in case that was stuffing things up.
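One way to check this directly is to ask ffprobe for the picture type of every frame and confirm they are all I-frames. (Note that "progressive" in that ffprobe line describes the scan type, progressive rather than interlaced, not inter-frame compression.) A minimal Python sketch; the ffprobe options shown are standard, but decoding every frame of a long UHD file will be slow, and the helper names are just for illustration:

```python
import subprocess


def frame_types_command(path: str) -> list:
    """ffprobe arguments that print one picture type (I, P or B) per decoded video frame."""
    return ["ffprobe", "-v", "error", "-select_streams", "v:0",
            "-show_entries", "frame=pict_type", "-of", "csv=p=0", path]


def is_all_intra(ffprobe_output: str) -> bool:
    """True if every frame listed in the ffprobe output is an I-frame."""
    # Strip whitespace and any stray trailing separator from each line.
    types = [line.strip().strip(",") for line in ffprobe_output.splitlines() if line.strip()]
    return bool(types) and all(t == "I" for t in types)


def check_file(path: str) -> bool:
    """Run ffprobe on path (ffprobe must be on the PATH) and report whether it is ALL-I."""
    result = subprocess.run(frame_types_command(path),
                            capture_output=True, text=True, check=True)
    return is_all_intra(result.stdout)
```

If an encode reports any P or B lines, it is long-GOP regardless of what flags were passed on the command line.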