
Everything posted by kye

-

Blackmagic casually announces 12K URSA Mini Pro Camera

kye replied to Andrew Reid's topic in Cameras

She was smiling in a certain way when she said it. From what I understand about how they build cameras, this might actually be possible via a firmware update. This is pure speculation of course, and should be treated as such, but imagine the DR improvements!!

Blackmagic casually announces 12K URSA Mini Pro Camera

kye replied to Andrew Reid's topic in Cameras

I told the wife about this camera. She said I can get it, but I have to put every other purchase on hold for a long time, I am only allowed to buy $10k worth of storage, and once that's full, I have to go down to 640x480!

Blackmagic casually announces 12K URSA Mini Pro Camera

kye replied to Andrew Reid's topic in Cameras

Wow. I went to bed last night and woke up to this. I predicted an 8K FF camera as a semi-ridiculous joke, but I had no idea that it would turn out to be not audacious enough!!

My comments in the 1080p thread are interesting in this context though. We are playing the megapixel game, but very few manufacturers are giving high bitrates to downscaled resolutions. If this is giving 4K RAW downscaled from 12K then the image should be spectacular, while not requiring the computer to be able to push 12K pixels around. This would make it a hugely flexible camera - yes you can shoot 12K RAW if the project warrants it, but you can also shoot 4K RAW with no crop at great bitrates and bit depths for projects where post needs to be more cost effective.

Also, if the colour science is great, this could put them on the map as a serious challenge to ARRI / RED. Then they'd have to nail the reliability in order to actually take market share, but it's a shot across the bow nonetheless. BM are thinking big - they do it with Resolve every year, did it with the pocket cameras since the OG Pocket, and now at the high-end too.

BMMCC. I bought a cage for it, and it was a good deal to get the top handle as well, which I thought would be good considering the camera has no IBIS, so I got that too, but couldn't work out how to rig it up!

Awesome.... Hey - I see a photo I took in there! Cool 🙂

Obviously, this is the one I'm looking for:

It was so obvious the whole time!

Rode Video Mic Pro+, which has a reasonable shock-mount on it, but I don't want to bump it if I'm doing any more vigorous camera moves (which is what a top-handle is good for). I also don't want to have things too far forwards, as they'll get in the frame with a wide lens. I think the 7.5mm Laowa would be a great fit for it as it's got a horizontal FOV equivalent to a 22mm lens, and can focus quite close, so would be fun in a wider aspect ratio.

I don't understand why people rig the top handle at the back like this:

It means that all the weight of the rig is further forwards, so you're straining to hold it one handed, unless you're aiming at the ground that is.

There are some cool ideas in there though:

-

Does anyone know how to effectively rig up a smaller camera with a top handle, but also still keep a small monitor and shotgun mic also rigged up at the same time? And not have it become ridiculously large, and/or get handling noise on the microphone (from mounting it too close to the top handle). I've only seen effective rigs like this on larger cine cameras where there's more real-estate to play with. The only configurations I can come up with require two hands because they're not top-handle centric.

-

Haven't tried it, but if you can find the lens optical diagram for a specific lens then that might show which lens elements have coatings on them. My guess would be that there would be coatings on the inside as the flare above would be caused by light bouncing back and forth between lens elements inside the lens. That's why you often introduce reflections when you put on a filter - the light goes through the filter then reflects off the front element of the lens then reflects off the back of the filter then goes through the lens to the sensor. Can you buy flaring filters for these kinds of flares? Does anyone know? You'd have to get two curved elements with a gap between them, perhaps?

-

Then we'll all need a wheelbarrow to carry it around!!

-

I hear the difficult part is building it.....

-

Maybe the overheating is the in-camera implementation of the 'boring detector' feature in Resolve that tells you when shots are too long?

-

Do they shoot 8K RAW? Smart money these days says not to go off planet for anything less than 8K RAW.

-

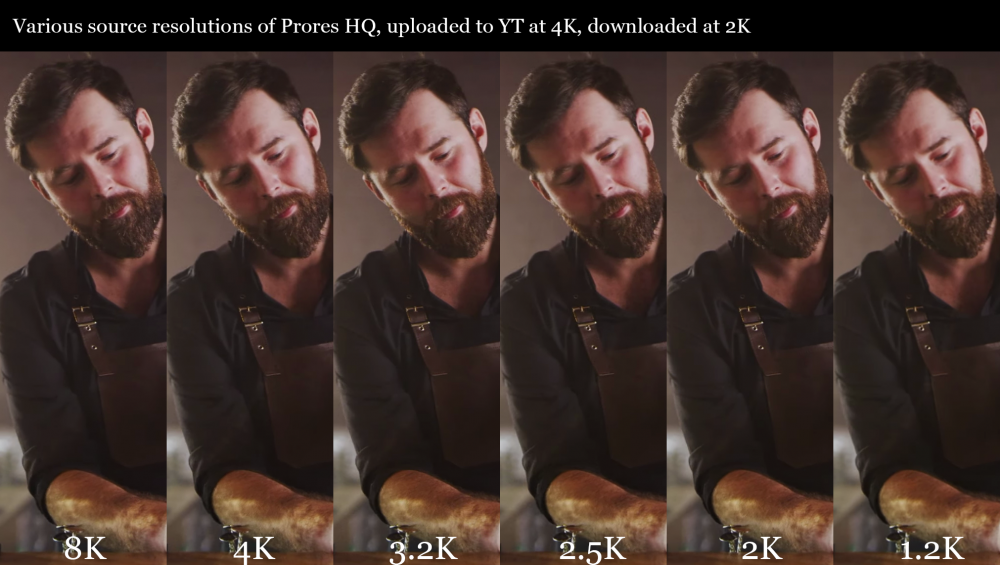

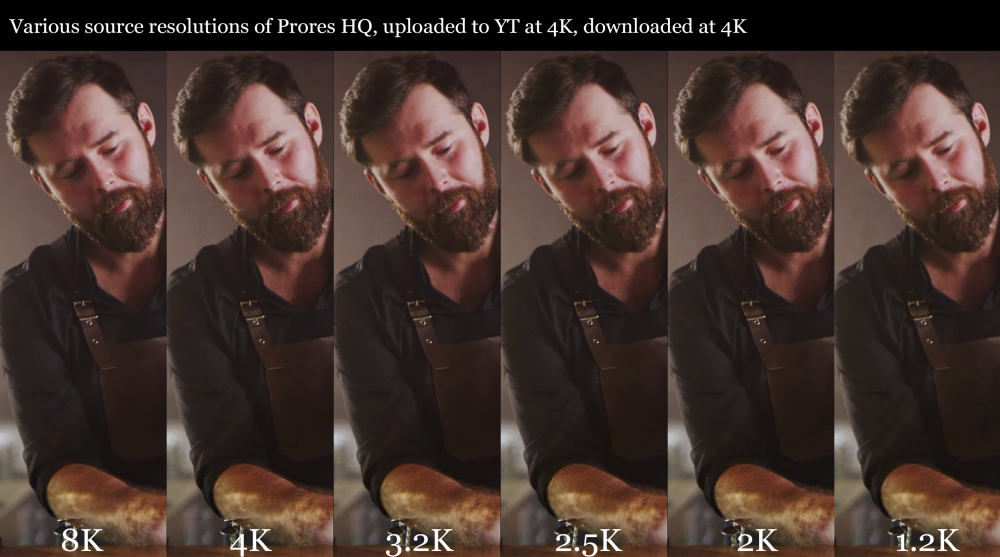

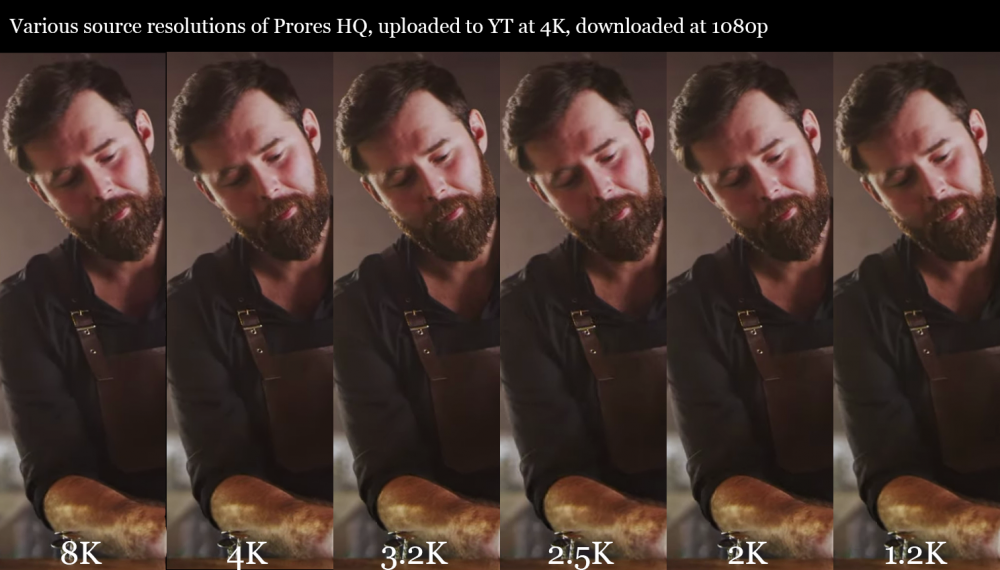

So, with all this talk about 8K RAW, it's got me thinking, and I'm contemplating going back to 1080p.

I've been thinking about all these cameras with high resolutions and ferocious data rates, and why they don't implement higher bitrates and bit-depths on the lower resolution modes.

Noam Kroll just shot a low budget feature in 2K Prores HQ on his Alexa Classic. In 4:3 no less! https://noamkroll.com/playing-against-filmmaking-trends-on-our-feature-with-arri-alexa-classic-2k-prores-hq-43-aspect-ratio/

His pipeline was RAW -> Prores HQ -> storage. Prores HQ in 1080 is around 176Mbps, is All-I, and is 10-bit. It sounds lovely. Uncompressed 1080 10-bit (4:4:4 at 24fps) is a whopping 1490Mbps, so the 176Mbps of HQ is quite a saving of data rates.

But what do I actually want? So I made a list:

- I want more bit-depth than 10-bit
  - 10-bit is fine if you're on a controlled set or have time to get your WB broadly right in camera, but for some of the horrendous situations I find myself in, having more bit-depth would help (remember how with RAW you can WB in post - well, bit depth is what enables that)
- I want high bit-rates for a good quality image
  - A good quality image means that every portion of the screen gets a decent amount of data, so this is about bit-rate. It's not about resolution, because a 100Mbps 4K file will still have half the data available for each square cm of the screen compared with a 200Mbps 1080 file
- I want files that are easy to edit in post
  - It doesn't matter if my 8K smartphone files are only 100Mbps, the computer still has to decode, process, display, and encode 16 times as many pixels as 1080

So, do I want 1080p RAW? Yes, and no. RAW has great bit-depth, much larger bit-rates than I care for, but also isn't the best that 1080 can get because it is lower resolution after debayering. Do I want 2.5K RAW? Maybe. Problem is that RAW and IBIS are very rarely found together.

What I really want is some kind of compressed, but not too compressed, intermediary file. What I really want is 1080 Prores 4444 (which is 264Mbps) or Prores 4444 XQ (which is 396Mbps), because these are 12-bit. 12-bit would do me very nicely.

So, what do we get from the manufacturers? We get ridiculous bitrates on the higher resolutions, and paltry token efforts on the lower ones. My XC10 is a classic case - 305Mbps 4K but 35Mbps 1080p. The 4K has roughly 2.2 times the amount of data per pixel of the 1080p, and roughly 9 times the amount of data per square cm of screen.

But I have a GH5, which is one of the exceptions, as Panasonic went for the jugular on the lower resolution modes as well as the higher ones, and so I'm down to the three "best" modes that will work on a UHS-I SD card:

- 5K 4:3 200Mbps Long-GOP h265
- 4K 16:9 150Mbps Long-GOP h264
- 1080p 16:9 200Mbps All-I h264

So I shot a test. That test showed me that the 5K mode is far superior, even on a 1080p timeline, but once uploaded to YT it is a different story. Considering I share my videos on YT, that's what my friends and family end up seeing.

This led me to the question of what is actually visible after it's been minced by YT. Luckily I had done a previous test where I took an 8K RAW file, and rendered out various resolution Prores HQ intermediaries, then exported each of them from a 4K timeline. That video is here:

So, I downloaded the above video in 4K, 2K, and 1080p resolutions, took screen grabs, and put them side-by-side for comparison. Here they are - you're welcome.

So, what can I see in these images?

- The 4K download is better than the 2K, which is better than the 1080p. This is hardly news, each of these is more than double the bit-rate of the next one and they're all using the same compression algorithm, that's how mathematics works.
- Watching in 4K each lower resolution is subtly worse than the previous, except for 1.2k (720p) which is way worse. That's to be expected, 2k - 1.2k is a bigger percentage drop than the other resolutions. However, things don't get "bad" until somewhere in the 2.5k - 1.2k range, depending on your tolerance for IQ.
- Moving to the other extreme, watching in 1080 they are all very similar, except for the 1.2k version, which is interesting.
- Some of these grabs also have a lower resolution one looking better than the higher one next to it. That's not an accident on my part (I checked), it really is like that. As the original video has the resolutions all in sequence in the one video, I suspect that the frames I chose were at differing distances from the previous keyframe in the stream, so that will introduce some variation.

So, what does this mean? Well, firstly, no point shooting in 8K RAW if your viewers are watching in 1080p on YT. I doubt that's news to anyone, but maybe it is to some R5 pre-orders lol. More importantly, if your audience is watching in 1080 then they're not going to notice if you used 2K intermediaries or 3.2k ones.

How can we apply this to our situations? This is more complex. In this pipeline we had 8K RAW -> X Prores -> timeline. This meant that the Prores was by far the weakest link, and Prores HQ is pretty high-bitrate compared to most consumer formats. 1080 Prores HQ is 176Mbps, but UHD Prores HQ is 707Mbps. I don't know of any cameras that shoot h264 in anything even approaching those data rates for those resolutions, so good luck with that. If you're shooting 4K 100Mbps h264 then that's the same bitrate per unit of screen area as 1.4K Prores HQ, which is pretty darn close to that 1.2k that looks awful in all the above. Obviously if your viewers are watching in 4K then it's worth shooting in the highest bit-rate you can find.

What does this mean for me? Not sure yet, I need to do more tests on the GH5 modes, and I need to think more about things like tracking and stabilisation which can use extra resolution in the edit. But I won't rule out going back to 1080p.
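
For anyone who wants to check the data-per-pixel arithmetic in this post, here is a rough sketch in Python. The bitrates are the ones quoted above; the frame sizes (UHD and Full HD) and the idea that Prores HQ bitrate scales linearly with pixel count are assumptions of mine, and the function names are just made up for illustration. With those assumptions the XC10 ratios come out at about 2.2x per pixel and about 8.7x per unit of screen area, and 100Mbps lands at roughly a 1.4K-wide Prores HQ frame.

```python
import math


def bits_per_second_per_pixel(mbps, width, height):
    """Bitrate divided by pixels per frame. Frame rate cancels out
    when comparing two modes recorded at the same frame rate."""
    return mbps * 1_000_000 / (width * height)


# Bitrates as quoted in the post; frame sizes are assumed (UHD / Full HD).
xc10_4k = bits_per_second_per_pixel(305, 3840, 2160)
xc10_1080 = bits_per_second_per_pixel(35, 1920, 1080)

# Data per pixel, and data per unit of screen area (same screen, so
# the second ratio is simply the ratio of the two bitrates).
per_pixel_ratio = xc10_4k / xc10_1080
per_screen_ratio = 305 / 35


def equivalent_prores_hq_width(mbps, ref_mbps=176, ref_width=1920, ref_height=1080):
    """Width of a 16:9 Prores HQ frame that would have the given bitrate,
    ASSUMING bitrate scales linearly with pixel count from the
    176Mbps 1080 figure quoted in the post."""
    pixels = ref_width * ref_height * mbps / ref_mbps
    return math.sqrt(pixels * 16 / 9)


print(f"XC10 4K vs 1080p, per pixel:  {per_pixel_ratio:.2f}x")
print(f"XC10 4K vs 1080p, per screen: {per_screen_ratio:.2f}x")
print(f"100Mbps ~ Prores HQ at about {equivalent_prores_hq_width(100):.0f} px wide")
```

The linear-scaling assumption looks reasonable for the numbers in the post, since 4 x 176Mbps is within a percent of the 707Mbps UHD figure.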

-

I tell you what, if they put IBIS into something I'd perk up and pay attention 🙂

-

I think I've already worked out what setup I'll use...

-

I expect that his demographic would be the overlap between "professional" photographers and people who work in other, higher paid jobs. My day job is working in tall corporate buildings and you are basically always a stone's throw away from someone who owns a 5D or other flagship camera, and at least a few L-series or equivalent lenses. Many of them have it just to shoot their families, some will shoot for the social/sports clubs they're involved in, and a few have dreams of being a professional photographer and do weddings on the side. I suspect that these are his demographic.

-

Announcing the new BlackMagic P8K - FF - 8k30 6k60 4k120 RAW- 15 stops of DR - $3000 with Resolve. I'm probably way off, but that's the trajectory they're on!

-

Here's a still from my latest video. I forced my wife to star in it, but don't worry - I used peril-sensitive-glasses as an ND filter:

-

I exported a 4K video from Resolve last night for upload to YT and thought I'd give h265 a go, as it would be less to upload, but the quality was worse than I expected, so I did another export in h264 like I normally do. Both were set to automatic quality, and the h265 file was half the size of the h264 file, but the h265 was significantly more compressed with skin textures and other low-contrast fine detail considerably worse. Has anyone noticed this?

-

Great stuff! Music video with one prime is a great idea... lots of room for creativity 🙂

-

@BTM_Pix can we be sure they're completely smooth? ie, if I put a camera on it, record a pan, analyse it and find jitter, how do I know if the jitter came from the camera or from the slider? I have the BMMCC so I could test things with that, but if I do the test above and get jitter then we won't know which is causing it. It would only be if we tested it with the Micro and got zero jitter that we'd know both were jitter-free. I'm thinking a more reliable method might be an analog movement that relies on physics. Freefall is an option, as there will be zero jitter, although I'm reminded of the phrase "it's not falling that kills you, it's landing" and that's not an attractive sentiment in this instance!! Maybe a non-motorised slider set at an angle so it will "fall" and pan as it does? Relying on friction is probably not a good idea as it could be patchy. The alternative is to simply stabilise the motion with large weights, but then that requires significantly stronger wheels and creates more friction etc.

-

We may find that there are variations, or maybe not. Typically, electronics has a timing function where a quartz crystal oscillator is in the circuit to provide a reference, but they resonate REALLY fast - often 16 million times per second, and that will get used in a frequency divider circuit so that the output clock only gets triggered every X clock cycles from the crystal. In that sense, the clock speed should be very stable, however there are also temperature effects and other things that act over a much slower timeframe and might be in the realm of the frame rates we're talking about. Jitter is a big deal in audio reproduction, and lots of work has been done in that area to measure and reduce its effects. However, audio has sampling rates at 1/44100th of a second intervals so any variations in timing have many samples to be observed over, whereas 1/24th intervals have very few data points to be able to notice patterns in. I've spent way more time playing with high-end audio than I have playing with cameras, and in audio there are lots of arguments about what is audible vs what is measurable etc (if you think people arguing over cameras is savage, you would be in for a shock!). However, one theory I have developed that bridges the two camps is that human perception is much more acute than is generally believed, especially in regards to being able to perceive patterns within a signal with a lot of noise. In audio if a distortion is a lot quieter than the background noise then it is believed to be inaudible, however humans are capable of hearing things well below the levels of the noise, and I have found this to be true in practice. If we apply this principle to video then it may mean that humans are capable of detecting jitter even if other factors (such as semi-random hand-held motion) are large enough that it seems like they would obscure the jitter. 
In this sense, camera jitter may still be detectable even if there is a lot of other jitter from things like camera-movement also in the footage.

LOL, maybe it is. I don't know as I haven't had the ability to receive TV in my home for probably a decade now, maybe more. Everything I watch comes in through the internet.

I shoot 25p as it's a frame rate that is also common across more of what I shoot with, smartphones, action cameras, etc so I can more easily match frame rates. If I only shot with one camera then I'd change it without hesitation 🙂 For our tests I'm open to changing it. Maybe when I run a bunch of tests I'll record some really short clips, label them nicely, then send them your way for processing 🙂

Yeah, it would be interesting to see what effects it has, if any. The trick will be getting a setup that gives us repeatable camera movement. Any ideas?

We're getting into philosophical territory here, but I don't think we should consider film as the holy grail. Film is awesome, but I think that its strengths can be broken down into two categories: things that film does that are great because that's how human perception works, and things that film does that we like as part of the nostalgia we have for film. For example, film has almost infinite bit-depth, which is great and modern digital cameras are nicer when they have more bit-depth, but film also had gate weave, which we only apply to footage in post when we want to be nostalgic, and no-one is building it into cameras to bake it into the footage natively.

From this point of view, I think in all technical discussions about video we should work out what is going on technically with the equipment, work out what aesthetic impacts that has, and then work out how to use the technology in such a way that it creates the aesthetics that will support our artistic vision. Ultimately, the tech lives to support the art, and we should bend the tech to that goal, and learning how to bend the tech to that goal is what we're talking about here.

[Edit: and in terms of motion cadence, human perception is continuous and doesn't chop up our perception into frames, so motion cadence is the complete opposite of how we perceive the world, so in this sense it might be something we would want to eliminate as the aesthetic impact just pulls us out of the footage and reminds us we're watching a poor reproduction of something]

Maybe and maybe not. We can always do tests to see if that's true or not, but the point of this thread is to test things and learn rather than just assuming things to be true.

One thing that's interesting is that we can synthesise video clips to test these things. For example, let's imagine I make a video clip of a white circle on a black background moving around using keyframes. The motion of that will be completely smooth and jitter-free. I can also introduce small random movements into that motion to create a certain amount of jitter. We can then run blind tests to see if people can pick which one has the jitter. Or have a few levels of jitter and see how much jitter is perceivable. Taking those we can then apply varying amounts of motion-blur and see if that threshold of perception changes. We can apply noise and see if it changes. etc. etc. We can even do things in Resolve like film a clip hand-held for some camera-shake, track that, then apply that tracking data to a stationary clip, and we can apply that at whatever strength we want. If enough people are willing to watch the footage and answer an anonymous survey then we could get data on all these things. The tests aren't that hard to design.
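
To make the synthetic test idea a bit more concrete, here is a minimal sketch in plain Python of the white-circle-on-black clip, with and without jitter. Everything in it is an assumption for illustration: the function names, the tiny 160x90 frame size, and modelling jitter as Gaussian noise on the horizontal position. It only builds the frames in memory, so getting them into an actual video file would be up to whatever tool you prefer.

```python
import random


def circle_positions(n_frames, x_start, x_end, jitter_px=0.0, seed=0):
    """Horizontal centre of the circle for each frame: constant-speed
    motion, plus optional Gaussian jitter (in pixels) on every frame."""
    rng = random.Random(seed)  # seeded so the same "jitter" can be reproduced
    step = (x_end - x_start) / (n_frames - 1)
    positions = []
    for i in range(n_frames):
        offset = rng.gauss(0.0, jitter_px) if jitter_px > 0 else 0.0
        positions.append(x_start + i * step + offset)
    return positions


def render_frame(cx, cy, radius, width=160, height=90):
    """One greyscale frame as a list of rows: 255 inside the circle, 0 outside."""
    r2 = radius * radius
    return [
        bytes(255 if (x - cx) ** 2 + (y - cy) ** 2 <= r2 else 0 for x in range(width))
        for y in range(height)
    ]


def make_clip(n_frames=24, jitter_px=0.0, seed=0):
    """A short clip of the circle moving left to right across the frame."""
    xs = circle_positions(n_frames, 20.0, 140.0, jitter_px, seed)
    return [render_frame(x, 45.0, 10.0) for x in xs]


smooth_clip = make_clip(jitter_px=0.0)   # reference: perfectly even motion
jittery_clip = make_clip(jitter_px=1.5)  # same move with ~1.5px of random jitter
```

Running `make_clip` with a few different `jitter_px` values would give the "levels of jitter" for a blind test, and motion blur or noise could then be layered on top of the same frames.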

-

Schneider Xenon full frame cine lenses. Old price €4320. New €1590!

kye replied to Andrew Reid's topic in Cameras

Wow. These lenses look like they measure very nicely too. https://www.lensrentals.com/blog/2019/05/just-the-cinema-lens-mtf-charts-xeen-and-schneider/ and the quality control looks to be excellent: https://www.lensrentals.com/blog/2017/08/cine-lens-comparison-35mm-full-frame-primes/ The drop-off in resolution towards the edges of the frame reminds me of the Zeiss CP.2.

@BTM_Pix that motion mount is AWESOME! It also, unfortunately, probably means that there are patents galore in there to stop other camera companies from implementing such a thing. An eND that does global shutter with gradual onset of exposure is such a clever use of the tech. What is interesting is how much it obscures the edges when in soft shutter mode. Square: Soft: This would really make perception of jitter very difficult as there are no harsh edges for the eye to lock onto, effectively masking jitter from the camera. And integrating ND into it as well is great. One day maybe we'll have an eND in every camera that can do global shutter, soft shutter, and combined with ISO will give us full automatic control of exposure from starlight to direct sunlight, where we simply specify shutter angle and it takes care of the rest. The future's so bright, I have to wear eNDs.

-

Welcome to the forums Jim! How is your editing going?

I feel your pain. I used to also be like this, but what turned it around for me was two things.

The first was Resolve's new Cut page. I'm not sure if you've edited in Resolve, but the process to review footage was a bit painful previously. You had to double-click on a clip in the Media pool to load it in the viewer, then JKL and IO to make a select, and I set P to insert the clip into the timeline. Then you had to navigate with the mouse to load the next clip. I could never find how to set keyboard shortcuts to get to the next clip. I suspect it might have required a numeric keypad, which my MBP doesn't have.

Then Resolve created the Cut page. There's a view in the Cut page that puts all the clips in a folder end-to-end like a Tape Viewer. Then you can just JKL and IO and P all the way through the whole footage. No using the mouse, or even having to take your fingers off those keys, and you can do it completely without looking. It sounds ridiculous but those extra key presses were adding enough friction to really make an impact.

Looking at my current project, if it took 5 seconds in total to take my hand from the JKL location to the mouse, navigate the cursor to the next clip, double-click, then put my hand back at JKL, and I had to do that 3024 times, then that's 4.2 hours just navigating to the next clip! Thinking about it like that it doesn't seem such a small thing! My suggestion would be to try and optimise your setup to have as little friction as possible, as even little things will be adding up unconsciously.

The second thing that I had forgotten when I stalled in editing was how lovely it was to look at the footage. I got to re-live my holidays, and only the best bits of them at that (we don't film the awful bits, and being cold / hot / tired / grumpy, or things being smelly, doesn't come through). Also, I've found that through the sheer quantity of footage I take, the lovely shots are inevitable and finding them is very rewarding. I do find frustrating things sometimes, like when I was in the boat in the wetlands and I missed the shot of the eagle swooping down and pulling the fish out of the water because I was filming something else in some other direction, or when I get out of sync and record the bits in-between the shots and don't record the bits when I'm aiming the camera at something cool, that's frustrating!

The other thing to keep in mind is that for our lives, and family or friends, the footage actually gets more valuable as it ages, not less valuable as it does for commercial or theatrical footage. In that sense, keep shooting because sometime later on you might pick it up and go through it. Or someone else might. I don't know about you, but if my grandparents or great-grandparents had vlogged, or recorded videos of holidays, or whatever, I'd be very interested in looking at that footage. In a sense, our own private footage is about history, not the latest trends.

Also, the longer it has been since you shot the footage, the more objective you will be in editing it. Street photographers often deliberately delayed developing their film because the longer they delayed the better they were at judging how good each shot was, rather than remembering the sentiment and context around it.

Hope that helps!

-

Great discussion!

Yeah, if we are going to compare amounts of jitter then we'd need something repeatable. These tests were really to try and see if I could measure any in the GH5, which I did. The setup I was thinking about was camera fixed on a tripod pointing at a setup where something could swing freely, and if I dropped the swinging object from a consistent height then it would be repeatable.

If we want to measure jitter then we need to make it as obvious as possible, which is why I did short exposures. When we want to test how visible the jitter is then we will want to add things like a 180 shutter. One is about objective measurement, the other about perception.

Yes, that's what I was referring to. I agree that you'd have to do something very special in order to avoid a square wave, and that basically every camera we own isn't doing that. The Ed David video, shot with high-end cameras, supports this too, with motion blurs starting and stopping abruptly:

One thing that was discussed in the thread was filming in high-framerates at 360 degree shutter and then putting multiple frames together. That enables adjusting shutter angle and frame rates in post, but also means that you could fade the first/last frames to create a more gradual profile. That could be possible with the Motion Blur functions in post as well, although who knows if it's implemented.

Sure. I guess that'll make everything cinematic right? (joking..)

Considering that it doesn't matter how fast we watch these things, it might be easier to find an option where we can just specify what frame rate to play the file back at - do you know of software that will let us specify this? That would also help people to diagnose what plays best on their system.

That "syntheyes" program would save me a lot of effort! But validates my results, so that's cool.

I can look at turning IBIS off if we start tripod work. To a certain extent the next question I care about is how visible this stuff is. If the results are that no-one can tell below a certain amount and IBIS sits below that amount then it doesn't matter. In these tests we have to control our variables, but we also need to keep our eyes on the prize 🙂

One thing I noticed from Ed David's video (below) was that the hand-held motion is basically shaky. Try and look at the shots pointing at LA Renaissance Auto School frame-by-frame:

In my pans it was easy to see that there was an inconsistent speed - ie, the pan would slow down for a few frames then speed up for a few frames. You can only tell that this is inconsistent because for the few frames that are slower, you have frames on both sides to compare those to. You couldn't tell those were slow if the camera was stopped on either side, that would simply appear to be moving vs not moving.

This is important because the above video has hand-held motion where the camera will go up for a few frames then stop, then go sideways for a frame, then stop, then.... I think that it's not possible to determine timing errors in such motion because each motion from the hand-held doesn't last long enough to get an average to compare with.

I think this might be a fundamental limit of detecting jitter - if the motion has any variation in it from camera movement then any camera jitter will be obscured by the camera shake. Camera shake IS jitter. In that sense, hand-held motion means that your camera's jitter performance is irrelevant. I didn't realise we'd end up there, but it makes sense.

Camera moves on a tripod or other system with smooth movement (such as a pan on a well damped fluid-head) will still be sensitive to jitter, and a fixed camera position will be most sensitive to it. That's something to contemplate!

Furthermore, all the discussion about noise and other factors obscuring jitter would also apply to camera-shake. Adding noise may reduce perceived camera-shake! Another thing to contemplate!

Wow, cool discussion.
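
On the idea mentioned above of shooting high frame rates at 360 degree shutter and combining frames in post, here is a rough sketch in Python of what the blending could look like. It is only an illustration under my own assumptions: the function names are made up, a "frame" is just a short list of brightness values, and the soft profile is a sine-squared ramp that I picked for convenience, not how any particular camera or the Motion Blur function in any software actually does it.

```python
import math


def shutter_weights(n_subframes, soft=False):
    """Weight for each sub-frame that gets merged into one output frame.
    Square shutter: every sub-frame counts equally.
    Soft shutter (assumed sine-squared ramp): first and last sub-frames
    are faded so the exposure starts and stops gradually."""
    if soft:
        raw = [math.sin(math.pi * (i + 1) / (n_subframes + 1)) ** 2
               for i in range(n_subframes)]
    else:
        raw = [1.0] * n_subframes
    total = sum(raw)
    return [w / total for w in raw]  # normalise so overall exposure is unchanged


def blend(subframes, weights):
    """Weighted per-pixel average of the sub-frames."""
    n_pixels = len(subframes[0])
    return [sum(w * frame[p] for frame, w in zip(subframes, weights))
            for p in range(n_pixels)]


# Toy example: a bright dot moving one pixel per sub-frame along a 9-pixel row.
subframes = [[1.0 if p == i + 2 else 0.0 for p in range(9)] for i in range(5)]

square = blend(subframes, shutter_weights(5))           # even streak, hard ends
soft = blend(subframes, shutter_weights(5, soft=True))  # streak fades in and out

print([round(v, 3) for v in square])
print([round(v, 3) for v in soft])
```

In the square case the streak has the same brightness along its whole length and stops abruptly, while the soft weights make the ends of the streak dimmer than the middle, which is the gradual profile being discussed.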