
Everything posted by kye

-

EOSHD in lockdown in Barcelona - photos from Coronavirus ghost town

kye replied to Andrew Reid's topic in Cameras

Good idea to document this decision and journey. Regardless of the decisions made in the end, the footage will be valuable on a personal level, and if they do decide to get involved then there will be a lot of demand for stories once we're over the peak of this thing. It might even help to offset some of the financial losses from cancellations. We're not on lockdown here in Australia yet, but who knows how the trajectory will go.

-

Ah yes, that sounds about right... and considering that I'd only use Vimeo for its higher quality, any video will be more than 500MB. YT, on the other hand, has no limits and doesn't buffer endlessly for no reason... Game over! Maybe we just use this thread to post entries?

-

I'm guessing that won't support YT uploads? I have vague memories of Vimeo having some kind of restriction on uploads if you aren't a paying member. I auditioned it as the platform for sharing my random camera test videos and ended up on YT because there was some problem with Vimeo.

-

Tom Antos on shooting with a single lens...

-

Considering that my trajectory has been a 4K cine camera (XC10), then a 4K non-cine camera (GH5), and my latest purchase is a 2K cine camera (BMMCC), I have enough data points to confirm that 8K is definitely not on the trajectory I'm taking!

-

Stop motion! Yes, that makes sense. It turns any 12MP camera into a 4K BEAST!!
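The joke holds up on the numbers. A minimal sketch, assuming a typical "12MP" stills sensor of 4000x3000 pixels (4:3) and UHD 4K delivery at 3840x2160, shows that cropping each still to 16:9 leaves enough pixels for a 4K frame.

```python
# Assumption: a "12MP" stills sensor is 4000x3000 (4:3); real sensors vary slightly.
STILL_W, STILL_H = 4000, 3000
UHD_W, UHD_H = 3840, 2160  # UHD "4K" delivery frame


def crop_to_16x9(width: int, height: int) -> tuple[int, int]:
    """Largest 16:9 crop that fits inside a width x height frame."""
    if width * 9 <= height * 16:
        # Frame is no wider than 16:9, so the width is the limit.
        return width, width * 9 // 16
    # Frame is wider than 16:9, so the height is the limit.
    return height * 16 // 9, height


crop_w, crop_h = crop_to_16x9(STILL_W, STILL_H)
print(f"16:9 crop of a {STILL_W}x{STILL_H} still: {crop_w}x{crop_h}")  # 4000x2250
print("covers UHD 4K:", crop_w >= UHD_W and crop_h >= UHD_H)          # True
```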

-

@Michael1 I agree. I see three factors in play. The first is that camera bitrates, DR and processing have improved to the point that 4K h264/h265 isn't that much worse than 1080 RAW (on well-lit material), especially if the compressed codec is 10-bit or more. The second is that the in-camera profiles are the result of a huge investment in colour science and processing by very skilled technicians, much more skilled than most colourists. The third is that when using in-camera profiles the compression is applied after the adjustments have been made rather than before them. This matters because most compression schemes are optimised to hide the data loss in the areas of the image we look at less or that matter less. If we record in a flat profile, then when we process the flat gamma curve we might be pulling parts of the image where the compression was more severe into the parts of the resulting rec709 image that we're more sensitive to.
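The third point can be illustrated with a toy model. This is a minimal sketch, not how any real codec works: it assumes plain 8-bit rounding as a stand-in for compression loss, a hypothetical linear "flat" curve that halves contrast around mid-grey, and a grade that doubles it back. Quantising after the adjustment (the in-camera profile case) keeps the worst-case error at about half a code value, while quantising the flat image and then grading it roughly doubles the error, because the grade stretches the loss along with the image.

```python
# Toy model: uniform 8-bit rounding stands in for compression loss (an assumption).
def quantize(x: float, bits: int = 8) -> float:
    """Round a 0..1 value to the nearest of 2**bits code values."""
    levels = 2 ** bits - 1
    return round(min(max(x, 0.0), 1.0) * levels) / levels


def flat_profile(x: float) -> float:
    """Hypothetical flat curve: halves contrast around mid-grey."""
    return 0.5 + (x - 0.5) * 0.5


def grade_to_rec709(y: float) -> float:
    """Grade in post: the exact inverse of flat_profile (doubles contrast)."""
    return 0.5 + (y - 0.5) * 2.0


# Scene values chosen so the graded result stays inside 0..1.
scene = [0.25 + 0.5 * i / 1000 for i in range(1001)]

# In-camera profile: the look is applied first, then the loss happens.
err_baked = max(abs(quantize(s) - s) for s in scene)

# Flat profile: the loss happens first, then the grade stretches it.
err_flat = max(abs(grade_to_rec709(quantize(flat_profile(s))) - s) for s in scene)

print(f"max error, in-camera look:   {err_baked:.5f}")
print(f"max error, flat then graded: {err_flat:.5f}")
```

Both paths aim at the same final image, so the only difference is where the loss sits relative to the contrast stretch.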

-

If we're stuck on lockdown, how the hell are we meant to shoot FILM and then get it developed and scanned?!?!?! Unless somehow you're in lockdown but live in a vintage film museum or something!

-

A lot of people have been asking why we're not all working remotely by now, considering the tech has been there for years and it's been obvious the barrier is humans, not machines. I wonder whether this will have significantly lowered that barrier, at least by the time we're done. It's a good idea to use the time to educate ourselves in something that will serve us well down the road. The photography / videography market is changing rapidly, and in "change or die" markets it's typical to see some people change, while the people who don't survive are often the ones who are bitter about it. There's always an upside, if we can just find it.

-

@BTM_Pix I know you didn't explicitly call for it, but "Having a couple of lens world cup shootouts to try and thin the collection" gets my vote! The One Room challenge isn't too bad either. We're not on lockdown over here, but who knows what will happen; our arc with this thing is just beginning.

-

In case anyone wants to overcomplicate their selfies, here are a couple of videos to take things to the next level...

-

No need to apologise... unless you represent the sun, that is. It's a strange thing, but the sun here in WA is stronger than on the east coast, and the Aussie sun is stronger than elsewhere, like Europe or Asia. My skin is fair, but when I was in Italy I could go out in the sun for an hour or so and not get burnt, whereas here it's more like 10 minutes. I've gotten burnt wearing a wide-brimmed hat while sitting in a chair on the side of a grassy sports field, which is completely ridiculous. Anyway, isn't it your turn to post a selfie? I have a vague recollection you might have posted one before at some point...? Great pic. Nothing broken in your lighting or composition skills!

-

This is from commuting to work at sunrise and home again in late afternoon, standing in the shade when waiting for buses, and not going anywhere outside in-between. I'm not sure where I'm meant to be wearing that hat. My indoor lighting isn't that powerful!!

-

Easy. Just pick the one that looks most like you playing the lead in an indie film called Modern Ninja, where you are John Normal, a man who achieves invisibility by being unremarkable, and whose special skills are centred around his ability to talk about local sporting events, a passionate love but mediocre understanding of politics and global affairs, and traditional, slightly close-minded values.

-

Mark loves his Takumars but just got some CZ lenses, so I'm looking forward to seeing how they compare. I think the Takumars might not be too far away from the CZs TBH.

-

Post the one that is the most cinematic....

-

Way ahead of you... this is me hanging out with my colour checker in specially arranged "mood lighting" 😂😂😂 PS, I'm not quite as sunburnt as I look, but I am pale as hell and live in Australia, sooooo......

-

Oh, and to clean their lens before recording.

-

Another tip is to tell people to hold the camera really still for 10s. I once gave a camera to some friends during an event to film, and when I looked at the footage afterwards I realised that everyone had waved it around like they were watering the lawn. I ended up pulling stills from it, as the camera only settled on anything for 3-4 frames at a time. I was mad until I remembered that I did the same thing the first few times I filmed anything; it seems to be common when people hold a camera for the first time.

If you do get vertical video then you can use several shots side-by-side in a Sliding Doors kind of way. I've deliberately shot vertically on my phone on a travel day for the express purpose of using this layout. I used it to show the same location over time, showing the shots simultaneously instead of via jump-cuts, and I also did a bunch of shots out of the window of the moving train to show the diversity of the landscape. This works for fancy b-roll, but if you get a speaking part then maybe put that one in the centre, use the audio from it, and have the ones on the sides as b-roll.

Definitely get people good mics. I've used the Rode ones that plug into the microphone port on a smartphone and they're pretty good, but IIRC the cheap knock-offs are almost as good and much cheaper.

You can use the opportunity to get creative shots too... The trick to take away from this video (which is a bit of a one-trick video) is that the camera was mounted to something stable and had the subject in a consistent location in the frame without much movement.

I think you're in danger of each person taking footage that is all the same: one person might only take landscape shots from far away, while another might only take close-ups. Some advice about getting lots of different shots (far away, close up, from above, from down low, etc.) and making them interesting would help. I suspect that people will want to shoot good footage but won't know how. Think of how many beginners say things like "I would never have thought of doing that". It's also dangerous to give people too many instructions, so I'd suggest only giving them the top ones. Maybe something like: hold your phone horizontally / point the microphone at the person talking / hold it steady and count to 5 before hitting stop / get lots of different shots / make them interesting. Good luck! If you're given enough footage you can make something useful; shooting ratios are a thing and b-roll doesn't have to be super related.
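For the Sliding Doors layout, a bit of arithmetic shows how many vertical clips fit side-by-side. This is a minimal sketch, assuming a 1920x1080 timeline and 9:16 phone clips (1080x1920) scaled to the full frame height; `side_by_side_layout` is a hypothetical helper, not a feature of any editing app.

```python
from typing import List, Optional


def side_by_side_layout(panels: int, frame_w: int = 1920, frame_h: int = 1080,
                        clip_w: int = 1080, clip_h: int = 1920) -> Optional[List[float]]:
    """Left-edge x positions for `panels` vertical clips, centred as a group.

    Each clip is scaled to the full frame height; returns None if they don't fit.
    """
    panel_w = clip_w * frame_h / clip_h  # 1080 * 1080 / 1920 = 607.5 px per clip
    total_w = panels * panel_w
    if total_w > frame_w:
        return None
    margin = (frame_w - total_w) / 2  # leftover width split between both sides
    return [margin + i * panel_w for i in range(panels)]


print(side_by_side_layout(3))  # [48.75, 656.25, 1263.75]
print(side_by_side_layout(4))  # None: four clips need 2430 px
```

Three clips fit with a little room to spare, and the middle position is where a speaking shot would go, with the outer two as b-roll.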

-

OK, I think I've got my head around a few things. The unknowns are: the UV filter, the lenses, the RGB filters on the sensor, the WB image processing on the GH5, the colour profile (3D LUT) on the GH5, and compression.

UV filter and lens: I eliminated these by testing the UV filter, and the 14mm lens vs the 14-42mm lens without the UV filter. These are within ~1% of each other, and as I'm not going to get within 1% in my matching anyway, I can eliminate them as variables between the cameras.

RGB filters on the sensor: I can work out the transformation for these by matching a RAW still image from the GH5 to an uncompressed RAW DNG from the Micro.

Compression: this is separate to colour, so I can treat it separately via resolution tests.

WB image processing on the GH5 and the colour profile: these will take some doing, but here's the plan. The camera applies the RGB filters, then applies the WB on top of that, then applies the colour profile on top of that. So what I have to do is correct the colour profile, then the WB, then convert the RGB filters from the GH5 to the Micro, and then I will have a GH5 image in Linear that matches the Micro image in Linear.

So, firstly I work out the RGB filter adjustments (via a 3x3 in Linear). Then I construct the following: <colour profile compensation> ----> <WB compensation> ----> <RGB compensation>. At first I will know the third, but not the first and second. The trick is separating them, so I can shoot the same image at two different WB settings. If one shot has the right WB and the other has the wrong WB, then I build a WB adjustment into one and not the other, and then work out a colour profile compensation that works for both WB settings. This should separate the WB adjustment from the colour profile compensation, and give an adjustment that works regardless of the WB on the clip. Hooray for year 10 algebra!
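The two-WB trick can be sketched for a single channel. This is a toy under stated assumptions, not the GH5's actual processing: the colour profile is modelled as a hypothetical log-style curve with one unknown parameter `k`, and WB as a plain gain, with all values made up. With the right inverse curve, the two shots of the same patches differ only by a constant gain ratio; with the wrong one, the ratio drifts across the patches, so the spread of that ratio can be used to pick the profile compensation that works for both WB settings.

```python
import math


def profile(x: float, k: float) -> float:
    """Hypothetical stand-in for the camera's colour profile (one channel, 0..1)."""
    return math.log1p(k * x) / math.log1p(k)


def inverse_profile(y: float, k: float) -> float:
    """Exact inverse of profile() for the same k."""
    return math.expm1(y * math.log1p(k)) / k


TRUE_K = 50.0                        # unknown to us in practice
scene = [0.05, 0.1, 0.2, 0.35, 0.5]  # hypothetical linear patch values
gain_right, gain_wrong = 1.0, 1.6    # hypothetical WB gains for the two shots
shot_right = [profile(gain_right * s, TRUE_K) for s in scene]
shot_wrong = [profile(gain_wrong * s, TRUE_K) for s in scene]


def ratio_spread(k: float) -> float:
    """How far the wrong/right ratio strays from being constant across patches."""
    ratios = [inverse_profile(b, k) / inverse_profile(a, k)
              for a, b in zip(shot_right, shot_wrong)]
    return max(ratios) - min(ratios)


candidates = [5.0, 10.0, 20.0, 50.0, 100.0, 200.0]
best_k = min(candidates, key=ratio_spread)
print("best k:", best_k)  # 50.0: only the true curve leaves a pure gain between shots
```

In practice this would be done per channel, and the real profile is likely a 3D LUT rather than a one-parameter curve, so treat this as an illustration of the algebra only.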

-

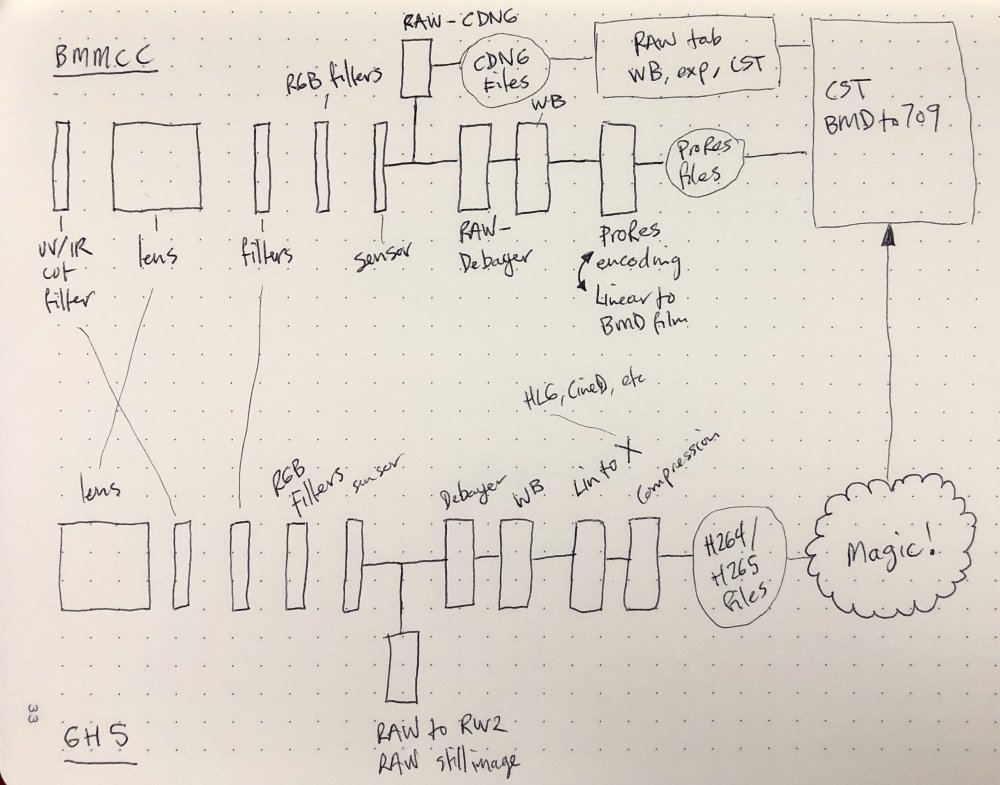

I'm now more confused. I think this is the system we're dealing with (pictured above). The challenge is that on the Micro I'm shooting RAW, so the only colour variable is the RGB filters on the sensor, but with the GH5 the variables are (something like) the RGB filters on the sensor, the WB setting, and then the colour profile, which is likely to be a 3D LUT with all sorts of unknowns in there.

A useful realisation is that I can take a RAW still image on the GH5, which should allow me to do a straight 3x3 adjustment in Linear to match them, without having to worry about the WB and 3D LUT that are baked into the video files. I should also be able to lock the GH5 down on a tripod, take a series of RAW images and video files, and then use the RAW image as a reference to isolate the WB+3D LUT from the RGB filters on the sensor, although this doesn't seem to help that much as the 3D LUT is where all the nasties are.

I suspect that the RGB filter adjustment is a 3x3 RGB mixer adjustment in Linear, that the WB adjustment is an adjustment of the levels of RGB in Linear, and that the 3D LUT is all sorts of wonkiness.

I've also worked out that I can use my monitor as a high-DR and high-saturation test-chart generator. It won't be calibrated, but that shouldn't matter, because the entire idea of this conversion is that any light that goes into either camera should result in (broadly) the same values after my conversion, so absolute calibration is irrelevant.

I've also realised that this is black-box reverse-engineering with several black boxes, where we can take guesses about how each is likely to work. Of course, Resolve isn't a black-box reverse-engineering tool, and it's very difficult to get pixel-perfect comparison images between the two cameras, which is what makes this a challenge. I'm wondering if I should be converting things in reverse order, peeling the onion as it were: undoing the 3D LUT, then the WB, then the RGB filters.
I've also realised that I should switch to the Cine-D profile instead of HLG, because although it gives a slight reduction in DR, it will let the conversion work on >30p footage too.
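The suspected model (a 3x3 mixer in Linear, WB as per-channel levels in Linear, then a profile curve) and the idea of undoing it in reverse order can be sketched as follows. This is a minimal sketch under loud assumptions: the profile is a hypothetical per-channel power curve rather than the real Cine-D 3D LUT, and every matrix, gain and patch value is made up. It also fits the 3x3 by least squares from matched linear patches, which is the role the RAW stills would play.

```python
import numpy as np

# Hypothetical forward model: sensor 3x3 -> WB gains -> profile curve.
SENSOR_M = np.array([[1.10, -0.08, -0.02],
                     [-0.05, 1.04, 0.01],
                     [0.00, -0.12, 1.12]])
WB_GAINS = np.array([1.9, 1.0, 1.5])  # made-up R, G, B gains
GAMMA = 2.4                           # stand-in for the real 3D LUT


def camera_forward(linear_rgb: np.ndarray) -> np.ndarray:
    """Rows are RGB patches. Apply the 3x3, then WB, then the profile curve."""
    mixed = linear_rgb @ SENSOR_M.T
    balanced = mixed * WB_GAINS
    return np.clip(balanced, 0.0, None) ** (1.0 / GAMMA)


def undo_camera(profiled: np.ndarray, m: np.ndarray) -> np.ndarray:
    """Peel the onion in reverse order: profile, then WB, then the 3x3."""
    linear = profiled ** GAMMA
    unbalanced = linear / WB_GAINS
    return unbalanced @ np.linalg.inv(m).T


def fit_3x3(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares 3x3 M such that dst is approximately src @ M.T."""
    x, *_ = np.linalg.lstsq(src, dst, rcond=None)
    return x.T


rng = np.random.default_rng(0)
patches = rng.uniform(0.1, 0.4, size=(24, 3))  # made-up linear scene patches

# Step 1: fit the 3x3 from matched linear patches. In this toy the reference is
# the scene itself; in the real workflow it would be the Micro's RAW values.
fitted_m = fit_3x3(patches, patches @ SENSOR_M.T)

# Step 2: undo a "video" encode in reverse order using the fitted matrix.
recovered = undo_camera(camera_forward(patches), fitted_m)
print("max round-trip error:", np.abs(recovered - patches).max())
```

The patch range is chosen so no channel goes negative or clips, which keeps the round trip exact; real footage with clipped or out-of-gamut values would not invert this cleanly.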

-

I'm a hobbyist, and I was a big champion of AF; now I'm a big champion of MF. What changed for me was this. I thought MF would be way harder to get right than it actually is (although I don't shoot in the most difficult situations). I also thought that having something perfectly in focus at all times was required. And when I was upgrading, it came down to the A7iii vs the GH5, and the only significant downside of the GH5 was poor AF, so I did a few experiments with MF and discovered that I actually like the look of MF, as it makes things more human and suits the style I prefer. I think there are situations where AF is great (doing those gimbal shots where you run around the bride/groom at a wedding, for example), but I suspect that a lot of people new to this just haven't put in the work to discover how good they are at MF and what the aesthetic really looks like. Plus, Hollywood gets shots OOF all the time.

-

@mercer very nice image - deeper colours than your normal grades but it sure works!

-

It's not personal... for me it's about trying to reduce the myths that mislead people, like "MFT lenses are soft wide open" and "FF lenses are all sharp wide open". There are MFT lenses with way more than 2K resolution wide open, and the P4K shoots 4K RAW, so there's no limitation from the codecs either.
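To put "more than 2K resolution" into lens terms, here's the back-of-envelope arithmetic. It assumes the full sensor width is used for video (a nominal 17.3 mm for MFT and 36 mm for FF; real video modes often crop), 1920 horizontal pixels, and the Nyquist limit of two pixels per line pair.

```python
def required_lp_per_mm(h_pixels: int, sensor_width_mm: float) -> float:
    """Line pairs per mm a lens must resolve for h_pixels across the sensor width."""
    return h_pixels / sensor_width_mm / 2  # two pixels per line pair (Nyquist)


for name, width_mm in [("MFT", 17.3), ("FF", 36.0)]:
    print(f"{name}: {required_lp_per_mm(1920, width_mm):.1f} lp/mm for 1920 px")
# MFT: 55.5 lp/mm for 1920 px
# FF: 26.7 lp/mm for 1920 px
```

So an MFT lens needs roughly twice the per-mm resolving power of an FF lens to hold the same 2K detail; whether a given lens clears that wide open is something to check against its MTF data or a test chart rather than assume.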

-

This conversation started with "MFT isn't as good as FF because MFT doesn't have wide lenses that are sharp enough wide open", and now we're here talking about how softness is something actively sought after by the folks who make the best images around. Apart from cropping or green screening, it sounds like your point is that MFT can't be used to make deliberately softened 2K output files because it doesn't have >30MP lenses, which just doesn't make sense.