Everything posted by kye

-

Everyone knows it takes less time editing to make a longer film.. same in writing

-

Now is the time to buy and adapt Medium Format lenses...

-

I've been doing lots of research into this recently and concluded that there is no 'best' set. They all have a certain aesthetic, and which one suits you depends on what you shoot and what you value. There are modern lenses which are sharp with lots of contrast and micro-contrast, and there are vintage lenses which can have any combination of less resolution, less contrast, or less micro-contrast, depending on the glass and coatings used.

Some links to some of the popular sets:

- Russian lenses: http://www.reduser.net/forum/showthread.php?152436-Russian-Soviet-USSR-Lens-Survival-Guide (be aware that "Russian lenses" aren't one company; they were made by several companies all competing for market share behind the Iron Curtain)
- Contax Zeiss: http://www.reduser.net/forum/showthread.php?92044-Contax-Zeiss-Survival-Guide
- Minolta Rokkor: http://www.reduser.net/forum/showthread.php?92246-Minolta-Rokkor-Survival-Guide
- Medium format and others (future-proof your tiny FF sensor!): http://www.reduser.net/forum/showthread.php?139153-Mamiya-Medium-Format-Lens-Survival-Guide and http://www.reduser.net/forum/showthread.php?160886-Lomo-70mm-format-lenses-other-65mm-70mm-format-lenses

Canon FD lenses have a great reputation, as do the Rokinon/Samyang lenses, although I'm not sure those fit your budget. Google can probably supply you with more examples.

Remember that photography is littered with glass that doesn't have enough acronyms after its name to still be in demand, but is optically excellent and fully manual, which is actually better for us, not worse! Depending on what you're after, great lenses can still be had for under $10 on eBay if you're willing to wait a bit for the extreme bargains to surface. Some lenses were so popular that, even though they're excellent, there just isn't enough demand for manual lenses to really push the price up.

I got a great 135mm f/3.5 FF lens in fully working condition for USD$3.50 plus shipping, just because there are a zillion 135mm lenses and the optical formula is simple, so they're basically all great.

-

Also, if you just spent hundreds of dollars on a Speed Booster (SB), then adding a dumb adapter to the front of it, instead of spending hundreds more on a second SB, is relatively attractive!

-

Buy a camera that is "good enough" and learn to colour grade. Seriously. I used to be like you, but then I worked out what I wanted in an image and bought the right camera and lenses. Now when I hear that there's a new 12K RAW 16-bit pocket camera with a built-in gimbal and AI drone, I don't even care. As it happens, the camera I bought was a GH5, but if you have different needs then it will be a different camera.

The other part is learning to colour grade. I was "done" with other cameras when I pulled the GH5 files into Resolve and it looked and felt like when the pros grade cinema camera footage. They pull in a shot, it looks flat because it's log, and then they push and pull it around almost mercilessly, and it just takes it and looks great. The GH5 files did that too. With all my previous cameras the files were fragile and would break given half a chance.

I'm not getting spectacular images out of the GH5 yet, but that's because I need to learn to grade better. I know the GH5 is great, and it's my grading ability that's holding things back, not the camera's limitations. Specifically, I think that's because it's 10-bit and its colour science doesn't have problems, so I know I can work with it.

Your results and preferences might be different, but when you're wishing you had something else, it's because you're not happy with what you have. There are always better cameras. We all wish we had an Alexa, but many of us are satisfied even though the Alexa has a better image.
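To put a rough number on why 10-bit files survive heavy grading better than 8-bit ones, here's a minimal sketch. It's my own illustration, not anything from Resolve or Panasonic, and it makes a few assumptions:

- A flat log shot has all its important tones in a hypothetical band from 30% to 60% of the signal range.
- The grade stretches that band to fill an 8-bit display.
- The code then counts how many distinct display levels survive the stretch.

Fewer levels spread across the same range means bigger jumps between neighbouring tones, which is what shows up as banding and "breaking" when you push a grade.

```python
from fractions import Fraction

def distinct_output_levels(bits: int, lo: Fraction, hi: Fraction) -> int:
    """Count distinct 8-bit display codes left after stretching the band [lo, hi] to full range."""
    max_code = 2 ** bits - 1
    outputs = set()
    for code in range(max_code + 1):
        v = Fraction(code, max_code)             # normalised source value, 0..1 (exact, no float error)
        if lo <= v <= hi:                        # only source codes inside the flat band
            stretched = (v - lo) / (hi - lo)     # contrast stretch of the band to 0..1
            outputs.add(round(stretched * 255))  # quantise to an 8-bit display
    return len(outputs)

LO, HI = Fraction(3, 10), Fraction(6, 10)  # hypothetical flat log band: 30%..60% of range
for bits in (8, 10):
    print(f"{bits}-bit source -> {distinct_output_levels(bits, LO, HI)} display levels")
```

Under those assumptions, the 8-bit source fills only 77 of the display levels, in steps of roughly 3 codes. The 10-bit source fills 255. Real log curves, noise and compression all change the details, so treat this as an illustration of the principle rather than a measurement of any camera.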

-

I don't really know that much about Z CAM or Cinemartin, I've only watched a few reviews of Kinefinity products, and the Digital Bolex people aren't in it anymore, so I'm hardly an expert. But they all seemed to be in the "RED used to be the plucky upstart taking it to the cinema establishment, but now they are the establishment and cost both arms and both legs, so here are the people doing that job now..." camp. Maybe they were only taking a piece of the pie from people who weren't actually in the market for a modular cinema camera (like me), but they were on the radar as one of the companies in that segment.

-

You don't say...

-

Very interesting. They've delivered cameras before, and upsampling 6K to 8K will be similar to upsampling 3.2K to 4K, which has proven successful for other manufacturers in the past. With the recent fall of Cinemartin, there is more pie for people like Z CAM.
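As a quick sanity check on "similar", here's the arithmetic using only the nominal K figures. Exact pixel widths vary between formats, so this is approximate:

$$\frac{8\text{K}}{6\text{K}} \approx 1.33\times \qquad \text{vs} \qquad \frac{4\text{K}}{3.2\text{K}} = 1.25\times \quad \text{(linear upscale)}$$

$$\left(\frac{8}{6}\right)^2 \approx 1.78\times \qquad \text{vs} \qquad \left(\frac{4}{3.2}\right)^2 \approx 1.56\times \quad \text{(pixel count)}$$

So 6K to 8K is a somewhat bigger stretch than 3.2K to 4K, but it's in the same ballpark.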

-

We're about due for the GH6....

-

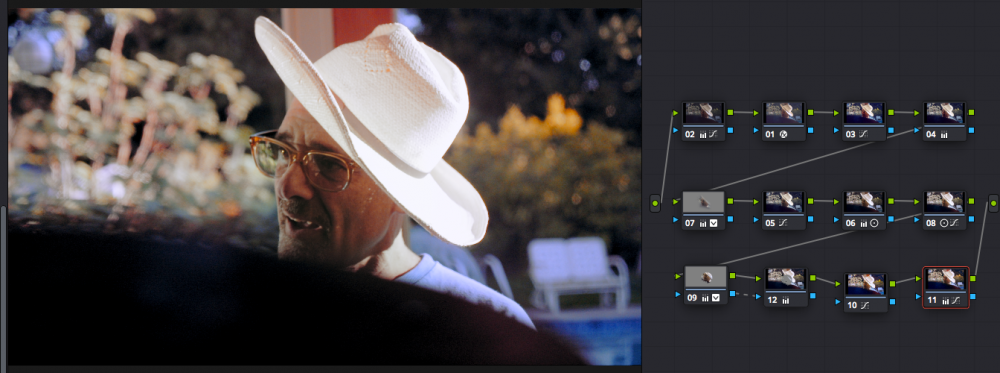

This is an interesting image showing many different frames:

-

Actually, I think that number is wrong; those do look a little dark for this scene. This article has some useful information: https://www.premiumbeat.com/blog/how-to-use-false-color-nail-skin-tone-exposure/ It shows that skin sits at around 48-52 IRE.

Some images are a bit more difficult because they're a lot more contrasty. Those levels also really change if the image is high key or low key, and some images have no skin tone at all, only shadows and highlights. I just make things look right. I wouldn't be too afraid of contrast either; Hollywood isn't afraid to absolutely crank it right up. I'd suggest pulling together a collection of reference images from skilled colourists.
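For anyone reading code values on a waveform rather than using false colour, here's a minimal sketch converting IRE to luma code values. It assumes IRE maps linearly onto the legal (video) range. Under that assumption, 0 IRE is code 16 in 8-bit or 64 in 10-bit, and 100 IRE is 235 or 940. Check how your own scopes or false-colour tool define it, because not every tool uses the same convention.

```python
def ire_to_code(ire: float, bits: int = 10) -> int:
    """Convert an IRE level to a luma code value, ASSUMING a linear legal-range mapping."""
    scale = 2 ** (bits - 8)                 # 10-bit legal-range codes are 4x the 8-bit ones
    black, white = 16 * scale, 235 * scale  # legal-range black (0 IRE) and white (100 IRE)
    return round(black + ire / 100 * (white - black))

# The skin-tone band from the article above.
for ire in (48, 52):
    print(f"{ire} IRE -> 8-bit code {ire_to_code(ire, 8)}, 10-bit code {ire_to_code(ire, 10)}")
```

Under that assumption, the 48-52 IRE skin band works out to roughly codes 121-130 in 8-bit, or 484-520 in 10-bit.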

-

The approach of skipping FF and having APS-C and Medium Format is an interesting one. Just wait a decade until everyone wants Medium Format video. All the FF companies will be behind the 8-ball, and Fuji will have been there with lenses for a decade! I have no idea how much of this video's look is due to it being an MF camera and how much is colour science, but it looks just great to my eyes.

-

Totally agree, and to take this one step further, I've discovered that in life people tend to project an image of who they want to be rather than who they are. People who project confidence are typically very insecure, and people who project wealth tend to be spending all their money on showing off and are broke. Those who show off how happy they are (e.g. on Instagram) are typically miserable.

I saw a documentary on what life is like for most billionaires, and it's pretty awful, actually. They can't trust anyone because people are after their money. They have these huge houses but are constantly renovating them to one-up their billionaire friends. They spend most of their time driving fancy cars and drinking expensive champagne, wishing they had some real friends.

So @kaylee, when you're looking at friends who are married and having kids and feeling that you're missing something, remember what their lives are actually like. Your friends are being torn apart trying to juggle careers and families and pay their mortgages. They're trying not to strangle their kids after the 4th sleepless night, with the baby crying and the toddler drawing all over the good couch with their favourite lipstick that isn't made anymore. And all the while they're wishing they could just live in a small town with their dog and have a bit of the glitz and glam of the film world.

I'm out here in the suburbs surrounded by lots of broken people who are now single parents because their relationships failed. When their kid's phone gets hacked and the nudes on it get posted to other kids, and the kid is being bullied and coming home in tears, they don't know WTF to do.

Never compare your insides with someone else's outsides.

-

Haha... you're welcome! I think that's the whole idea of these lenses. Apart from being more expensive than a body cap there's no other disadvantage, and it means you always have a lens on the camera. That puts you one step closer to the "the best camera is the one you have with you" and "always be ready to shoot" philosophy.

Considering that I shoot home videos, I always try to have my camera set up and ready to turn on and shoot in case something funny or interesting happens. Every now and then I'll run through the house to grab the camera and turn it on as I run back to film something interesting.

I also especially like that these lenses aren't great and aren't fast. They force you to concentrate on great composition, angles, lighting, and genuine content, which gives you a bit of immunity to the "shallow-DOF slow-motion music-video eye-candy b-roll-sequence of meaningless-crap" genre of film-making. Put a slow, low-resolution lens on a Raw camera like the P4K, add that emphasis on content over empty aesthetics, and you should get some great and very organic-looking images. I look forward to seeing some footage when you've had a chance to play with it.

-

Sure! Firstly, I'll preface this by saying that I'm not an expert, and also that grading is subjective. There's no absolute right or wrong, only right or wrong for the project you're doing.

Comparing my still with your two grades (your first grade, my grade, and your second grade), here's what I'm seeing:

- The hue of the skin tones seems fine and matches across all three grades.
  - I look for skin tones in that middle ground between yellow and pink, and it's there.
  - I also look for a variation in hue, because skin colour varies across different areas of the face, and yours also has that.
- It's in the contrast of the skin tones where they differ.
  - In my example the skin has far less contrast than in both of yours. I'm looking at how bright the lighter areas are compared to the darker areas. This also contributes to yours having slightly more saturation in the skin tones.
- Your second grade is much better in terms of exposure. The first one is too bright and loses skin texture.
  - I think you're meant to put skin tones at a certain gamma level (40 IRE?), and your second one is much better than your first. That said, your second and mine might both still be a bit light. I am constantly surprised by how dark skin tones are in professional situations.

Looking at the wider image of your second grade vs my first grade:

- Yours has slightly more overall contrast than mine, with the black and white points pushed a bit further apart.
- Probably the main difference is in the contrast of the mid-tones. Mine has less contrast there, which gives it a more vintage feel.
- The distribution of saturation is different too. Mine looks to have more saturation in the mid-tones (look at the red/brown in the wood), but that might be because those items are lighter in mine, and mathematically they might be similar. However, the most saturated areas of yours seem to be more saturated than the most saturated areas of mine. For example, look at the red light on the far left or the red umbrella directly above his head.
  - The saturation of the other colours appears to be similar; look at the green lights or the coloured tassels hanging above the shrine.
  - IIRC I might have deliberately played with the reds. I definitely did a Sat vs Sat to boost the saturation of mildly saturated tones without also boosting the more heavily saturated tones.
- Overall WB is very similar, and you don't appear to have tinted the shadows or highlights.

In terms of taste, I prefer mine because it's more flattering and a bit more vintage. I suspect, though, that if we were physically there, yours might be more accurate in its level of contrast. Mine kind of looks 'nicer', both in the skin tones (contrast on skin tones isn't flattering) and in the overall look. The world that yours is from looks like a harsher and less friendly place to live.

Hope that helps?
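Sat vs Sat in Resolve is a curve you draw by hand. To show numerically what "boost mildly saturated tones without boosting heavily saturated ones" means, here's a hypothetical stand-in. Two things in it are my assumptions for illustration, not how Resolve works internally:

- the curve shape, `s + strength * s * (1 - s)`
- measuring saturation as HSV saturation via Python's `colorsys`

Resolve uses its own colour model.

```python
import colorsys

def sat_vs_sat(s: float, strength: float = 0.5) -> float:
    """Hypothetical Sat vs Sat curve: lifts low/mid saturation, keeps 0 and 1 fixed."""
    if not 0.0 <= strength <= 1.0:
        # Above 1 the curve would bend back down near full saturation (non-monotonic).
        raise ValueError("strength must be between 0 and 1")
    # The lift strength*s*(1-s) peaks at s=0.5 and falls to zero at s=0 and s=1.
    return s + strength * s * (1.0 - s)

def apply_to_pixel(rgb: tuple[float, float, float], strength: float = 0.5) -> tuple[float, float, float]:
    """Apply the curve to one RGB pixel (0..1) via HSV saturation; hue and value are untouched."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb(h, sat_vs_sat(s, strength), v)

muted_wood = (0.55, 0.45, 0.40)    # hypothetical mildly saturated brown
red_umbrella = (0.90, 0.10, 0.10)  # hypothetical heavily saturated red
for name, px in (("wood", muted_wood), ("umbrella", red_umbrella)):
    before = colorsys.rgb_to_hsv(*px)[1]
    after = colorsys.rgb_to_hsv(*apply_to_pixel(px))[1]
    print(f"{name}: saturation {before:.2f} -> {after:.2f}")
```

The lift is largest in the middle of the range and shrinks to zero at full saturation. As a result, the muted wood tone gains roughly twice as much saturation as the red umbrella, which matches the behaviour described above.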

-

I'll test that theory... here are some old pics from a less-than-stellar technical setup (photos, not frame grabs). IIRC these are all from a Panasonic GF3 and the 14-42mm f/3.5-5.6 kit lens, with the camera probably on full auto. The first three were taken blind out the window of moving vehicles.

Nice... Have you considered FilmConvert? I've never used it, but I suspect it might be a way to get the kind of look you want with the fewest controls to mess with.

-

Absolutely.. BTS isn't mandatory

-

LOL. I filmed mine on my phone... you know, just saying

-

Nice. Simple and well executed

-

Ok, here's my first effort. It was filmed on an iPhone, with audio recorded on the headphones supplied with the iPhone, and the editing, voice-over and grading were done in Resolve. I've never done a voice-over before, so a big chunk of my time went on figuring out how to do one and then working out how to make Resolve do it, including reading tutorials etc. I even came up with the idea within the three-hour window, so that's cool too.

Ah crap. Just realised mine is way longer than 1 minute! Oh well.

-

Nice film!

Ha! Ringleader... lol.

-

No. Just kidding... Sure. But only because you asked so nicely!

-

Nice! After every project it's good to think about what worked, what didn't, and what you might do differently next time. Join us over in the 3 Hour 1 Minute film challenge thread.

-

First attempt is a fail; time to start again, I think. This isn't an easy task. This is where I got to. The scopes tell the story pretty nicely; these are with only a CST (Colour Space Transform) applied. One shot is in direct sun, the other is all shade. One has lots of saturated objects in it, the other has almost none. There are some heavy and complicated green/magenta shifts going on, and I suspect that the hue of the reflected light is also different.

-

Let's up the ante... Challenge #3 - colour match the first two images. You are done when you could cut from one to the other in the same scene.
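For anyone wanting a crude numeric starting point before hand-grading, one swapped-in baseline (not what anyone in this thread used) is to shift and scale each channel so its mean and spread match the reference shot. This is the basic idea behind Reinhard-style colour transfer, simplified here to plain RGB rather than the Lab-like space that method uses. The pixel lists below are hypothetical stand-ins for the two images.

```python
from statistics import mean, pstdev

Pixel = tuple[float, float, float]  # (R, G, B), each in 0..1

def match_channels(source: list[Pixel], reference: list[Pixel]) -> list[Pixel]:
    """Shift/scale each RGB channel of `source` so its mean and spread match `reference`."""
    matched = []
    for c in range(3):
        src = [p[c] for p in source]
        ref = [p[c] for p in reference]
        s_mean, s_std = mean(src), pstdev(src)
        r_mean, r_std = mean(ref), pstdev(ref)
        # A flat source channel has no spread to scale, so it is only shifted.
        scale = r_std / s_std if s_std > 0 else 1.0
        # Normalise around the source mean, rescale, re-centre on the reference mean, clip to 0..1.
        matched.append([min(1.0, max(0.0, (v - s_mean) * scale + r_mean)) for v in src])
    # Re-interleave the three channel lists back into pixels.
    return [(r, g, b) for r, g, b in zip(*matched)]

shade = [(0.20, 0.30, 0.40), (0.40, 0.50, 0.60)]  # hypothetical pixels from the shaded shot
sun = [(0.30, 0.30, 0.30), (0.70, 0.50, 0.50)]    # hypothetical pixels from the sunlit shot
print(match_channels(shade, sun))
```

This only matches overall levels per channel. It won't fix localised green/magenta shifts or differently coloured reflected light, which is a big part of why this challenge is hard.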