Everything posted by kye

-

Yeah, that FS5 video is exactly what you want - the tech doing the tedious things that you can easily describe and leaving you to do the creative things that you can't easily describe. In terms of AI creating rough-cuts, it works for things like home videos where it just picks the shots with the nicest smiles and tries to include everyone, but other types of videos like commercials, drama, music videos, corporate, etc have much more complex and subtle criteria and often editors themselves don't even know consciously why they prefer one take over another, or why a group of certain shots is better than other groups of similar shots. I've seen a number of those BTS with big-budget feature editors and they often have as many or more assistants whose job it is to log, sort, tag, and create rough cuts. Having a computer do those things, as well as laborious things like checking focus down to pixel level on every shot, would be worth money in basically every scenario.

-

Fascinating. I can feel a camera test coming on

-

Yeah, even in small cities, the world is still a big place unless you stand out in some way or are part of a very small field, like if you're a specialist or charge a spectacular rate. Sadly, human nature is quite pervasive so these aren't unique issues! You only have to be good enough to get hired for work - you don't generally have to be that good at actually doing it

-

You're welcome!! I figured there might be some extra love in there for Canon. Is Canon + ML the only hybrid setup that does 14-bit? I never focused on what bit-depth the BM cameras operated on, or what external setups could offer. I remember those 10-bit vs 12-bit vs 14-bit conversations we had where most liked 12-bit over 10, and some even preferred 14 over 12.

-

What was the main difference that you noticed? Resolution, or something else?

-

I'm a business consultant, so I spend my working days in head offices dealing with management types and office politics. I once spoke to a coworker with an HR background after we ejected someone from the building who was beyond useless. I suggested that we make a note never to hire that person again, and they said that there was some kind of HR rule and we couldn't make any kind of list like that. I don't know if that was just for that company, or a wider regulation, but that company wasn't anything special, so similar limitations are likely in other organisations. The company had around 5000 employees, so the chance that someone else in management somewhere sees that person has experience within the org and re-hires them is considerable.

But it has its upsides too - if someone only wants to work with people who agree with them then good riddance, I'll go somewhere else and actually be productive.

-

Cool topic and interesting predictions. Innovation tends to come from the intersection of technologies, less from a single technology reaching a threshold. Here's a few combinations that might be interesting:

360 cameras + AI with face-tracking + AI video editing
This could create a camera that consumers just take with them to events and it automatically records video, frames shots in post, and edits them together to deliver a finished product or products (photos and video). This might even be free if FB / Amazon / Instagram think the data gained by spying on you is worth the hardware costs, but if you're willing to pay for it then they'll happily take the data and your money.

Electronic variable ND + better ISO performance (or dual ISO, or triple ISO?)
This combination could create a camera where we control the creative parameters (SS, F-stop) and the camera automatically exposes using only non-creative controls (ND, ISO). This means you can set whatever aperture you want, 180 degree shutter, and then take exposure off your plate and think about more creative aspects of shooting. The "cinematic vlog" crowd might help with this as there's a ton of them and they care about SS and F-stop but want fast-paced shooting, and will be adding their voice to the pros who also want built-in NDs.

NLE + AI face-tracking and shot analysis
Not something people talk about, but there might be benefits to NLEs being able to analyse your footage. Immediate benefits would be logging footage by reading slates (if you have them), detecting takes (visually similar shots repeated over and over), tagging of shots with which faces appear in them, etc. Combined with smart bins this would save a lot of leg-work, especially in doc work where you have huge amounts of footage. For the less serious users (some people felt that FCPX was really iMovie, so Apple are trying to reach these people) you could also have expression detection, framing detection, and other ways to rate clips. The existing 'choose the best shots and make a sequence' technology could be incorporated, creating a rough-cut with a single button. More advanced features would be to watch you through the webcam as you view shots and further refine based upon your reactions. This could be huge as people are recording video and photos all the time, and editing your photos and choosing the best ones has been made easy but video editing is still something the average person doesn't even attempt.

-

Yeah, it's the same with most industries, maybe all of them. It's who you know, not what you know. I don't know about the film industry, but in my industry you can do very well by just being very social and talking a big game. It also helps if you don't know anything: people who are clueless tend to respond positively when clients make nonsensical requests and that makes them 'team players', but the people who actually know why those ideas are ridiculous (and are willing to admit it) are negative influences and are to be eliminated at all costs. Fun fun fun...

-

In a sense, after Exit was aired, there's no reason that Mr Brainwash wouldn't stay famous, regardless of whether he's an artist or not. Hollywood has shown us that there is plenty of room for people only being famous for being famous, and that once you kick-start that with a PR stunt of some kind (sex tape, art documentary, whatever!) then the world cares about how many times you chew each mouthful of breakfast and everything else.

The shredded Banksy should be worth more not less after it was shredded. It's physical art and now performance art.

Anyone with aspirations about what they're making feels like this. I continually see great moments happen when I wasn't filming and think about the film I could have created had I got those shots, ah well.... Keep shooting

-

Now you mention it, I kind of agree. I was preparing to refute your statement by saying that stuffing up the motion would ruin it - just like stuffing up anything else would also ruin it, but that's not true. I'm kind of typing out loud here, but you can take a cinematic film and stuff up the colours, remove the slow-motion (many films just slowed down the 24p footage in post anyway, still looked ok), you can crop the framing, etc and you don't get that instant-video kind of effect. Deep DOF doesn't ruin it, camera shake would put a dent in it perhaps, bad lighting would put a dent in it (although all kinds of natural light are fine). What else did I miss?

-

Neither did I, fun stuff! I just watched a doc called Saving Banksy on Netflix. It's about a guy who tried to save a Banksy in San Francisco from being painted over by cutting it out of the wall, and what happens after that. It's not as good as Exit but still interesting to watch and leaves you with the same WTF reaction over the system and how things just don't fit together. Recommended.

-

OMG - run! Stop down the aperture! Shorten the shutter speed! Disable all slow-motion! Bang up the ISO! Must. Protect. Camera. From. . . . Vloggers!

-

Odd, there's normally an option for these things. I have a Panasonic point-and-shoot with some ridiculous zoom (20X or 30X) and wow was it annoying before I found the "resume zoom on wake" option.

Are you saying I'm a troll? Genuine question - it's hard to understand how other people see your behaviour.

-

I was reading about it after posting and apparently there is a lot of confusion. The Wikipedia page for Mr Brainwash (link) has some interesting snippets in it. Which seems to suggest that it's all fake, however then there is this: and this: and this:

What we have is a real person, who was featured as a real artist in a fake documentary by a real artist, whose status as an artist is challenged and the real person won't say he is an artist. So that seems pretty straight-forward, he's not an artist. He reveals in a real documentary that all his work since his debut has been completed by a team of graphic designers. Definitely not an artist then.

Unfortunately, since his debut, apparently he's done the following:
Designed album covers for Madonna
Designed art to promote Rock The Vote
Done work for Michael Jackson
Might have been part of the official promotion for Red Hot Chili Peppers
Directed a video for Coke featuring Avicii
Did work for Coachella
"created a one-of-a-kind Mercedes-Benz 2015 GLA" for Mercedes, whatever the hell that means
Appeared in performances and has sold art worth something like $250K.

Does making art, selling art, or appearing as an artist make you an artist? Who knows, but I just love that even by reading the Wikipedia page you're still not sure what the hell is going on, and I think that's the whole point. The questions aren't if he's an artist, how much Banksy did, if the documentary is real or not. The questions are:
What is art?
What makes something valuable?
Do we judge people by their actions or by what they say (or don't say)?

My take-away impression from the docs was that it was kind of like a prank on the art world that they fell for, but I think that the prank is actually on humanity, because there just aren't answers to these questions.

And how shallow was the DOF on that film? About right for a documentary - nothing to do with the work I create

-

Did you ever watch a documentary called Exit Through the Gift Shop? It was a film made by Banksy about the guy who was making a documentary about him. It is an ouroboros mind-fuck and spectacular in every way. The reason that I mention it is that the guy wanted to be as famous as Banksy but without doing any of the work or getting good at art, so he did a bunch of paintings for a huge show and they were terrible, but he talked himself into thinking he was great, and then because he had sunk so much money into it people believed he must be great and so his work sold for heaps of money. In the end you're kind of left wondering WTF happened, what is good and what isn't, and how the F can anyone tell anyway. Going for the money doesn't always end badly!

-

It's not. Somehow @webrunner5 has added "YouTubers say that shallow DOF means 'cinematic'" + "cinema lenses are only F2.8 or slower" + "Philip Bloom is on YT" and come up with "owning a 0.95 lens means I'm drinking cordial" or something. I think I got bitten by a troll.

-

It depends on what you're trying to achieve. Bayhem is a style of art, but so are the styles of films like Russian Ark, or how Nicolas Cage acts. It doesn't mean we have to like them, or emulate them, or that any style is any better or worse than any other. Don't be afraid to admit you like a particular style. Study it, practice it, celebrate it, and own it... and then get paid for it.

There's a whole debate around selling out vs being a genuine artist that I think people misunderstand. If you compromise your art just to make money then that's unfortunate, but who are we to judge. And if you happen to make art that lots of people like, then there's nothing wrong with that at all.

-

I think they will continue to improve too in terms of features, but possibly not in terms of the architecture. Things like the UI will probably require a significant re-write, but that might be to our advantage because if they work on it they'd have to re-write it completely, which means that they might completely re-design it too. My vote would be to enable everything to be docked and undocked so you can move everything around.

One thing that I think modern computers are severely lacking in is UI innovation. We have GFX cards that can render a 4K virtual universe in real-time but the windowing that we use on normal applications hasn't had a single innovation (except multiple desktops) since 1988! What about having dynamic modes where the windows get bigger when in the middle and when you're not using them they settle on the sides of the screen like thumbnails? You can't argue that there isn't spare screen real estate on a 24-inch UHD display when you're working in a word processor. We give Canon shit for not delivering the full capability of their hardware - today's UI design is the equivalent of getting 240p from a 1DXmk3! Anyway, UI rant over.

In terms of adding complexity to Fairlight, that will be a long time coming I think. If you operate in the physical world then the mixing desk is still a good way to run a studio, and considering that things like recording an orchestra aren't going to change, any time soon at least, that mindset of how it works will be with us for a long time. But you're right that they could add a modular UI like that to simulate the patch bay that normally sits alongside a mixer, which is required as soon as you have more effects modules than you can have wired in at any one time.

-

Cool shorts. In terms of your style, I'm not really that sure. I don't watch enough horror or action to be able to see past the standard techniques and shots to see what is more uniquely yours. Anyone else have a more familiar eye?

-

Thanks mate! Please no!!

-

Thanks @OliKMIA - I hadn't factored in 5K vs 4K, but as you say, it's splitting hairs, and the whole thing is downscaled anyway. Especially for me considering I don't do green-screen, I deliberately use softer lenses and love the film-look of the output, and I typically just publish 1080p to YT. I'd trade-off resolution for bit-depth and DR any day of the week. I guess the whole point of this thread was to understand if there were any hidden down-sides I didn't know about. Apart from shifting the ETC mode from production to post-production I don't really see any issues for me. Yay!

-

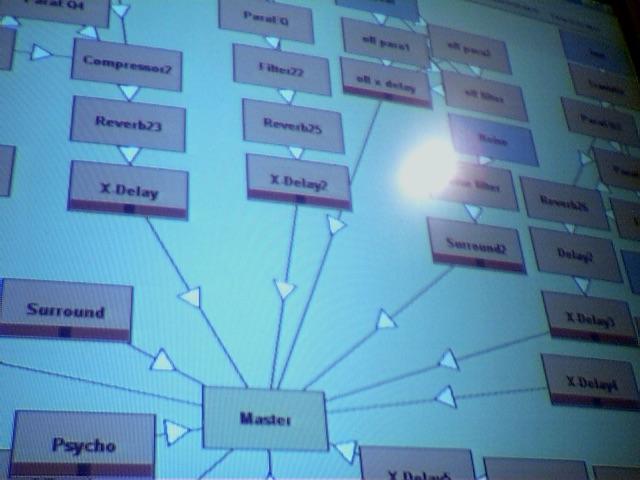

Interesting. I didn't realise that they were keeping Fusion as a standalone product, although that might not be the long-term direction. I know that in terms of integrating or modifying software significantly, certain bits are a lot easier to get working than other bits. Maybe the roadmap is to gradually add in the bits that Resolve hasn't enabled yet, or maybe not, who knows.

Yeah. I found it interesting the BM guy searched the forum to see how many times it was mentioned, so maybe they do try and respond to users. I kind of got the impression that they do, but also they have a strong strategy - integrating an NLE, Fairlight, and now Fusion into Resolve wouldn't have been them responding to forum users! It was also interesting the BM guy said that it wasn't necessary, and someone countered by pointing out that 8K support also wasn't necessary.. touché!

Agreed. I noticed a huge difference in crashing between 12.5 and 14, so I think someone really pushed for it to get more reliable. It went from crashing about every 20 minutes to maybe every 8 hours of use for me, so that's significant. One thing I can kind of sense is that a lot of the niggling bugs have been around for many versions, so I think they might be the harder-to-reach issues that come from mashing things together, rather than being isolated in one module or whatever. Things like the "I get no audio" bug, which seems to be fixed by anything from muting and unmuting in each panel to changing system output device or messing around with the database, and has been with us since v12.5 and probably before that even.

I agree it's big and blocky. I alternate between using it on a 13" laptop and using the same laptop with a UHD display. It's better on the larger display, but it's definitely not as customisable as would be ideal. I think this is due to the heritage of it being around for a long time; most other long-lived software packages look old and inflexible too.
In a sense this is something that runs through the film industry too: so many of the processes, techniques, terminology, etc are rooted in out-of-date technologies or previous limitations that are no longer present. This is really a symptom of what happens when you take a challenge (make a film), break it up into steps (shoot, develop, edit, test-screen, picture lock, sound, colour timing, distribute) and then when the technology changes you update each part individually but not the way that it was broken up in the first place. The things that the film industry talks about as being revolutionary seem completely ridiculous when viewed from an outside perspective, like the director working with the colourist to create a LUT that was used on-set to preview the look as it's shot, or being able to abandon the idea of the picture-lock by being open to making changes to the edit if the audio mixing discovers any improvements that could be made. These are obvious no-brainer things if you haven't gotten used to those limitations over time.

In terms of Fairlight I think that's the case too. Having a mixing desk approach made sense when audio equipment was all analogue, but it's always seemed restrictive to me. Here's a screenshot of the free modular software-only tracker that I was writing music with about 20 years ago. Every blue box was an audio generator and every orange one was an effect. Every arrow had a volume control and every module had an unlimited number of inputs. Every module could be opened up and adjusted with sliders for each parameter. Every slider could be automated. Anyone could develop new modules and share them as .DLL files. As computers got more powerful you could have more modules. We got to the point where it became difficult to work with because you couldn't adjust the size of the boxes on the screen and so you'd have stacks of boxes on top of each other.
I used to write tracks like the above where there was a separate effects chain for every instrument, except things like compressors where you want them to drive the whole mix. My friend used to connect every module to every other module just to see what they sounded like, and he'd get these amazing textures and tones. He was a big fan of Sonic Youth and apart from writing electronic music with me he also used to play guitar and integrate samples from that, and later on we got into glitch and heavy sampling. I think I would have gone insane if I'd been trying to write music limited to the architecture of a mixing desk, or couldn't just right-click and bring up a new module.. what do you mean every reverb instance costs $1000?????

-

Screw PT Barnum. I think you're drinking the Trump/MAGA Kool-Aid - that both the past is better than the present, and that somehow we can get there by copying random aspects of it regardless of causality.. or logic

Makes sense. Any technique is valid as long as it supports the end goal.

-

Now you're just shit stirring! So much to comment on. So I'll shit-stir back...

"He lives and dies with a 70-200mm, 100-400mm lens."
Have you ever filmed anything inside a house? If you had, you'd realise that a 70mm+ lens is only good for videos where the voice-over starts with "Has acne haunted you all your life?"

"Philip Bloom seems to do ok using tele lenses"
No he doesn't. He loves getting shallow DOF with his camera tests, which are exclusively shot at long focal lengths because he's shooting people (or cats) without their permission. When he makes a real film he uses whatever focal lengths are appropriate, and for the B-roll goes to great pains to choose angles where there's foreground, like putting the camera on the ground or peeking out from behind foliage or posts or whatever.

"but all these fast lenses are I think unessential"
Using red in painting is unessential. Film-making is unessential. So are clothes, ice-cream, and sports of any kind. If we're going to live like that then let's all just live in caves - you go first.

"30, 40, 50 years ago when movies were the I think the best"
You're right, I got it all wrong! It was the lenses that made cinema of the 70s/80s/90s greater, not the availability of cheap VFX, the 2-second attention span of modern society, or the fact that the movie on the big screen is competing with Instagram, Twitter and instant messaging.

"Kool Aide all those fast Chinese lens makers are pushing" // "wonky looking lens"
Rent some expensive fast glass - you'll be surprised! Any lens gets sharp when stopped down two stops, which means an f0.95 lens gets sharp at f1.9, when typical lenses are still blurrier than when they just woke up.

Besides, don't people think the modern look is too sharp? I know you do - you think that camera lenses were what made classic cinema better than Michael Bay, in which case, lenses being a little soft when wide open is just what the doctor ordered.

Besides, are you aware of the changes in DOF with focal length? If I have a 50mm lens at f4 and want to have the same DOF with a 24mm lens, to match a shot, you know you need faster than f1 for the same DOF at the same distance, right? What about if I need to add a bit of 3D to a super-wide landscape shot?
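
For anyone who wants to check the 50mm f4 vs 24mm numbers, here's a rough sketch in Python. It leans on the common approximation that total DOF is about 2·N·c·s²/f² (N = f-number, c = circle of confusion, s = subject distance, f = focal length), which only holds when the subject is well inside the hyperfocal distance. The function names, the 0.03mm circle of confusion and the 3m subject distance are all my own assumptions for illustration, not anything from a lens maker.

```python
def approx_dof_mm(focal_mm, f_number, distance_mm, coc_mm=0.03):
    """Approximate total depth of field in mm.

    Uses DOF ~= 2 * N * c * s^2 / f^2, which is only reasonable when the
    subject distance is much shorter than the hyperfocal distance.
    coc_mm (circle of confusion) defaults to 0.03mm, an assumed value.
    """
    return 2 * f_number * coc_mm * distance_mm ** 2 / focal_mm ** 2


def matching_f_number(focal_from_mm, f_number_from, focal_to_mm):
    """F-number needed at focal_to_mm to match the DOF of the original
    lens at the same subject distance (same approximation as above)."""
    return f_number_from * (focal_to_mm / focal_from_mm) ** 2


if __name__ == "__main__":
    needed = matching_f_number(50, 4.0, 24)
    print(f"24mm needs roughly f/{needed:.2f} to match 50mm at f/4")
    # Sanity check at an assumed 3m subject distance: both should agree.
    print(approx_dof_mm(50, 4.0, 3000), approx_dof_mm(24, needed, 3000))
```

Under those assumptions the 24mm works out to roughly f/0.92, so "faster than f1" holds up. The circle of confusion and distance cancel out of the matching calculation, so they only matter for the absolute DOF figure, not for the f-number you need.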