
Everything posted by kye

-

A really simple example might be the home videos from Minority Report. Ignoring the 3D aspect of it, right now we have the ability to shoot really wide angle and then project really wide angle. All you need is a GoPro and one of those projectors designed to sit close to the screen - existing tech right now. If you shoot 4K but project it 8 feet tall and 14 feet wide then most people sure as hell will be able to see the limits of the resolution - especially if you've shot H265 at 35Mbps!! Projecting people life-sized is a pretty attractive viewing experience, so we're not talking about some kind of abstract niche thing - we're talking about something that a percentage of the world's population would see in a big-box store and say "I want that".

I understand that. If you read my post carefully you will notice I mentioned that they might have a 24/25/30fps sync - this is different to continuous lighting. While this isn't currently available at full power, there are strobes that can recycle fast enough (eg, the Profoto D2, which can recycle in 0.03s and can already sync to 20fps bursts). All that is missing is a big enough buffer (capacitor bank) to do full power that fast.
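As a rough back-of-envelope check on the strobe argument, here's a minimal sketch that treats recycle time as the only limit: a flash needing `recycle_s` seconds to recharge can fire at most `1 / recycle_s` times per second, and it can keep up with a frame rate only if it recycles within one frame interval. The 0.03s figure is the one quoted above; the function names are hypothetical, and the sketch ignores flash duration, heat and capacitor capacity, which is presumably why real-world bursts top out below the theoretical ceiling.

```python
def max_flash_rate(recycle_s: float) -> float:
    """Theoretical ceiling on flashes per second if recycle time is the only limit."""
    return 1.0 / recycle_s


def can_keep_up(fps: float, recycle_s: float) -> bool:
    """True if the strobe recharges within one frame interval (1 / fps seconds)."""
    return recycle_s <= 1.0 / fps


recycle = 0.03  # seconds, the reduced-power recycle time quoted in the post
print(f"ceiling: {max_flash_rate(recycle):.1f} flashes per second")
for fps in (20, 24, 25, 30, 50):
    print(f"{fps} fps -> {'ok' if can_keep_up(fps, recycle) else 'too fast'}")
```

On these assumptions 24/25/30fps fits inside the recycle budget and 50fps doesn't; the remaining problem is doing it at full power.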

-

That looks really good.. thanks!

-

My impression was that it was a quick 'fix' to bypass some kind of issue. Considering how important aspect ratios are in both still and moving images, and the fact that everyone has known they're important for almost the entire history of photography, it's unlikely it was a mistake. Of course, regardless of how and why it happened, it's likely they'll fix it quietly and we'll never find out.

-

I watched that too. He makes a point, BUT he's assuming that we shoot, process, and display at the same resolution, and that we display that resolution in a 16:9 rectangle with a viewing angle aligned with the THX or SMPTE specifications. This is old thinking. We need to shoot higher than 4K if we want visually acceptable results with reasonable cropping, VR, or any kind of immersive projection.

Not if Canikon gives us an early xmas!!

I've gone a little way down this path, and there are a few adjustments. With shutter speed you can either shoot for video (the 180-degree rule), for stills (very short exposures), or a compromise. I shoot a compromise because I think the motion blur you get from something like 1/100 or 1/150 is appropriate: if something is significantly blurred then it was an action shot, and I like that in the photo, but this is personal taste.

In terms of formal posed moments, video modes aren't currently well suited to this, so you're better off just shooting stills. However, cameras could evolve in this respect (and would need to), perhaps by having something like a 'burst video mode' that shot RAW for a burst, say from 0.5s before to 0.5s after the shutter button was pushed. This combined with high ISO performance could give sufficient image quality to work fine with continuous light, or you could get continuous lighting with a burst mode, or 24/25/30fps strobe sync for that burst. These technologies are either already with us or pretty close, so it's more a case of them being combined and the market working out what photogs would use and find practical.

In terms of picking shots, I think it's actually a bit easier than with photos, but it requires a different mind-set. When you're taking photographs you're trying to notice everything and then hit the shutter at exactly the right moment to capture it. You then take those moments into post and have to choose between the shot with the nicest groom smile but a slightly forced bride smile, and the one with the better bride smile but less of a groom smile, or spend the time photoshopping the two together. With video, you're capturing every moment, and now you get to choose any moment to retrospectively 'hit the shutter button'. In this sense, you should view it as a continuous capture, not individual images.

During a moment, there are lots of micro-moments happening: the bride smiles, the groom smiles, the trees flutter, a distracting noise happens in the background, the couple hear it, the couple look puzzled while they process it, then they laugh at slightly different times. You will have captured all of those moments and all of the moments in-between, so the task is to scrub the play-head backwards and forwards to find the moment of 'peak smile'. This is kind of how sports photographers choose images from bursts - they don't think "crap, I've got to choose between 3000 images", they know that each burst contains the best moment and they just scroll through it to pick that moment.

The difference is that even in the fastest bursts from Canikon (around 10-12 fps), the magic moment in sports often falls between frames. There's a reason that flagship bodies are pushing for higher burst rates - pro shooters aren't saying "oh shit, higher burst rates make my life worse by making me choose between more images". In the future the burst video mode for flagship bodies will be much faster than normal video frame rates - it will be 50, then 100, then higher.

If you look at the iPhone burst functionality, it captures a burst and then shows you the whole burst as one image; if you tap it to edit, you can choose which frames are kept, and there's a button to discard all the rest. Apple has worked out that a burst is different to a whole string of individual images, which is more aligned with this new way of looking at this type of capability.
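To make the 'burst video mode' idea concrete, here's a minimal sketch of a pre-roll buffer: the camera always keeps the last 0.5s of frames in a fixed-size ring buffer, and a shutter press saves those plus the next 0.5s, after which you pick the 'peak' frame. Everything here (`PreRollBurst`, `pick_best`, the scoring function) is hypothetical and not any manufacturer's firmware. The `shutter_angle` helper converts the shutter speeds mentioned above into degrees: the 180-degree rule means a shutter of 1/(2 x fps).

```python
from collections import deque


def shutter_angle(fps: float, shutter_s: float) -> float:
    """Degrees of a 360-degree frame interval for which the shutter is open."""
    return 360.0 * shutter_s * fps


class PreRollBurst:
    """Hypothetical burst video mode: a rolling buffer holds the last pre_s seconds;
    a shutter press keeps those frames plus the next post_s seconds."""

    def __init__(self, fps: int, pre_s: float = 0.5, post_s: float = 0.5):
        self.post_frames = round(fps * post_s)
        self.buffer = deque(maxlen=round(fps * pre_s))  # oldest frames fall off
        self.pending = 0   # frames still to record after a press
        self.current = []  # burst being assembled
        self.bursts = []   # finished bursts

    def on_frame(self, frame):
        """Called for every frame the sensor reads out."""
        if self.pending > 0:
            self.current.append(frame)
            self.pending -= 1
            if self.pending == 0:
                self._finish()
        else:
            self.buffer.append(frame)

    def press_shutter(self):
        if self.pending > 0:
            return  # already recording a burst, so ignore the extra press
        self.current = list(self.buffer)  # the 'before' half comes from the buffer
        self.buffer.clear()
        self.pending = self.post_frames
        if self.pending == 0:
            self._finish()

    def _finish(self):
        self.bursts.append(self.current)
        self.current = []


def pick_best(burst, score):
    """'Retrospectively hit the shutter': return the frame with the highest score."""
    return max(burst, key=score)


cam = PreRollBurst(fps=50)
for i in range(100):  # stand-in frames: just their index
    cam.on_frame(i)
    if i == 60:
        cam.press_shutter()
burst = cam.bursts[0]
print(len(burst), burst[0], burst[-1])  # 50 36 85
print(pick_best(burst, score=lambda f: -abs(f - 70)))  # 70
print(f"{shutter_angle(25, 1 / 100):.0f} {shutter_angle(25, 1 / 150):.0f}")  # 90 60
```

A `deque` with `maxlen` is used because it silently drops the oldest frame on each append, so the pre-roll never grows. In a real camera the score would come from the photographer scrubbing, not a formula.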

-

Same here. It's just strange when a topic like "how can I boost the power of my laptop" comes up and people answer "buy a 24 ton supercomputer" and you're like "how on earth does that even make sense?"

-

In case anyone hasn't seen it... The video references a Kickstarter for a battery adapter that looks really useful, as it's both an external battery solution for multiple devices and a charger.

-

LOL! Yeah, I love the guessing too!

-

Cool. The more control they have over these things, and the better they are at colour, the closer they should be able to get to matching colours between cameras with different sensors. With tools like Resolve, if you have time and some dedication, you should be able to get the look you want from most cameras these days. Matching in a multi-camera setup requires a higher skill level, but I've seen youngsters on YT match many cameras passably well in a very short time using only the lift/gamma/gain (LGG) controls, and if you're willing to dive into colour checkers and do a bit of homework beforehand about how the cameras' colours differ, you can dial things in pretty quickly.
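For anyone curious what the LGG controls are doing, here's a minimal sketch using one simple textbook formulation (an assumption; Resolve's internal maths isn't documented here and may differ). It also shows the most basic kind of matching: per-channel gains computed from a grey patch so that camera B's neutral lands where camera A's does. The patch readings are made-up numbers, and a single patch only fixes balance at one brightness level; a full colour checker gives you more patches to work with.

```python
def lgg(x: float, lift: float = 0.0, gamma: float = 1.0, gain: float = 1.0) -> float:
    """One simple lift/gamma/gain formulation for a 0-1 channel value (assumed)."""
    y = gain * (x + lift * (1.0 - x))  # lift raises the blacks, gain scales the top end
    y = min(max(y, 0.0), 1.0)          # clamp to the 0-1 range
    return y ** (1.0 / gamma)          # gamma > 1 brightens the mid-tones


def match_gains(ref_grey, src_grey):
    """Per-channel gains that map the source camera's grey patch onto the reference's."""
    return tuple(r / s for r, s in zip(ref_grey, src_grey))


# Hypothetical grey-patch readings (linear RGB, 0-1) from two cameras.
cam_a = (0.46, 0.46, 0.46)
cam_b = (0.50, 0.45, 0.40)  # camera B reads warm
gains = match_gains(cam_a, cam_b)
matched = tuple(lgg(v, gain=g) for v, g in zip(cam_b, gains))
print([round(v, 3) for v in matched])  # [0.46, 0.46, 0.46]
```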

-

So in one corner we have the argument that companies without a cinema line could go all in and will make a non-crippled camera. In the other corner we have the argument of trickle-down technology where those companies with a cinema line will have already recouped the R&D costs of really fancy tech and the low-end of their range will be a combination of high performance and lower cost. Maybe we should just toss a coin to predict these things!

-

It won't be a direct competitor, no. But for some people who are in between, it might be a toss up between two options that only partially provide what they want. I know this won't be that many people though.

-

@srgkonev - thanks for sharing, that is a really cool finished video! Cameras really are like musical instruments: they create nothing while sitting on a shelf, good instruments don't improve bad players, and they only fully bloom in post production!

-

This makes me wonder what the architecture of 'colour science' inside a camera is. Does anyone know exactly how sensors are implemented? I figure it could be one of these two scenarios:

Scenario 1: Sensor gives RAW readout -> image is processed according to colour profile / image settings / codec -> file is output

Scenario 2: Sensor is configured to give 'tuned' RAW readout -> image is processed according to colour profile / image settings / codec -> file is output

If we look at what Magic Lantern does for Canon, the RAW files look quite Canon-ish. Does that mean that scenario 2 is the case? My impression is that making a sensor that can get 'nice skin tones' by changing the RAW electro-chemical properties of the photosites would be almost impossible, but maybe I'm wrong. Either way there are pretty powerful options for adjusting the image, so they've got a fighting chance of doing an impressive job matching them.
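Here's a minimal sketch of what Scenario 1 could look like, just to show where the 'look' would live: everything happens after the readout, in a colour matrix and a transfer curve. It assumes the RAW values are already demosaiced, white-balanced and linear, and the matrix and 2.4 power curve are hypothetical stand-ins rather than any manufacturer's actual profile. In Scenario 2, part of the look would already be baked into `raw_rgb` before this function ever sees it.

```python
# Hypothetical camera-to-output colour matrix; each row sums to 1 so that
# neutral greys stay neutral. Real cameras use their own calibrated matrices.
COLOUR_MATRIX = (
    (1.6, -0.4, -0.2),
    (-0.3, 1.5, -0.2),
    (0.0, -0.5, 1.5),
)


def apply_matrix(m, rgb):
    """Multiply a 3x3 matrix by an RGB triple."""
    return tuple(sum(m[i][j] * rgb[j] for j in range(3)) for i in range(3))


def encode(v: float, gamma: float = 2.4) -> float:
    """Simple power-law transfer curve (assumed stand-in for a real gamma/log curve)."""
    v = min(max(v, 0.0), 1.0)
    return v ** (1.0 / gamma)


def process(raw_rgb, matrix=COLOUR_MATRIX, gamma=2.4, bits=8):
    """Scenario 1: linear RAW -> colour profile -> transfer curve -> quantised file values."""
    linear = apply_matrix(matrix, raw_rgb)
    levels = 2 ** bits - 1
    return tuple(round(encode(c, gamma) * levels) for c in linear)


print(process((0.18, 0.18, 0.18)))  # mid-grey stays neutral (equal channels)
print(process((0.30, 0.20, 0.15)))  # a warm, skin-ish tone gets more saturated
```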

-

That video is just wonderful. Normally I remove videos when I quote posts, but it deserves a bump!! One of the things that struck me was the DR. I'm no expert, but the highlights looked soft (ie, not harsh) and the shadows looked nice too, with nice mid-tones. People keep talking about skin tones when 'colour science' gets mentioned, but more and more I think (for my eyes at least) it's the DR and highlights that really matter. As an example, the video above is in direct contrast to the video below that was shot on Canon, where the highlights look clipped / harsh / video-ish.

To be fair, Matti could have shot this on his 1DXII or his 6DII, and I'm pretty sure he doesn't shoot log, so it won't be using the full DR of the sensor. It's also some pretty difficult lighting, and he might have rushed the grade (considering he's travelling with friends and family and trying to vlog at the same time), but yeah, it's not nice. However, to be fair to the Nikon D850 video as well, there are lots of shots in there with quite challenging lighting conditions too. I was saying that my shortlist was the A7III, Pocket 4K, Canon FF ML and Nikon FF ML, but I think Nikon has just gone from an academic inclusion to an option I will take seriously!

You missed out the people waiting for the Canon FF mirrorless. The consensus is that it's unlikely Canon will do anything revolutionary, but there's still a chance, and I think quite a few people will wait to see what it looks like before ordering anything. Also, those on the fence about the Pocket 2 might wait for sample footage and reviews to start trickling in, considering the initial issues / bugs that BM had with the Pocket 1.

-

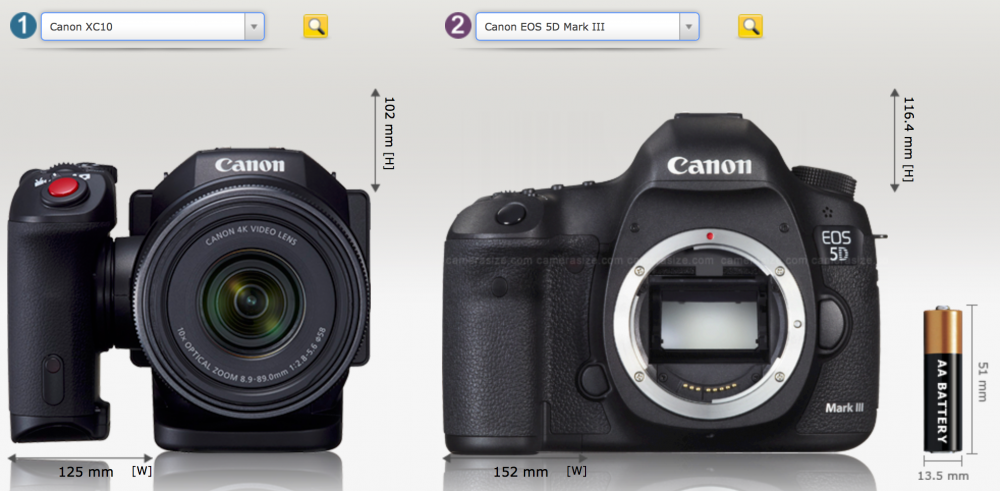

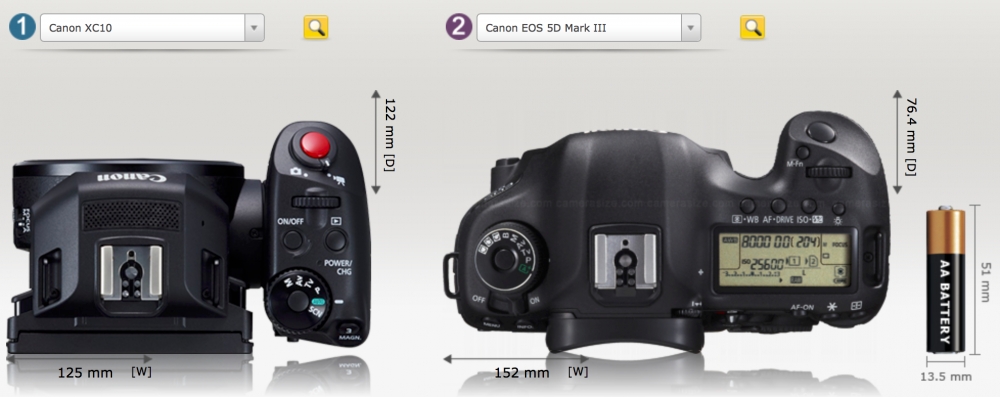

A camera in between the XC10/15 and C200 would be absolutely fantastic. The C100 is sized closer to the C200 than the XC10 but only does 1080. Those industrial cameras you mention only do 1080 and would be large and attention-grabbing by the time you rigged them up with screens and proper ergonomics. You're right about the camerasize.com images looking strange; for some reason they cropped the lens out of the top-down view!

-

I totally get your point. It'll be a hangover from the days when the cost of burning film set a fixed lower limit on making a film - you couldn't shoot a 90-minute film without exposing, processing and developing at least 90 minutes of negatives. In that sense, "No Budget" would have made sense if it was below that minimum cost. In today's world, when digital cameras are being thrown away, old computers capable of editing video are being thrown away, and editing software is available for free, you can literally make a film for nothing. In this sense, $2200 is a large amount of money because it doesn't have to be spent on equipment. It's yet another culture clash caused by technology letting all the riff-raff in! Anyway, enough tangents from me.

-

Actually, this thread is mis-named. It should be called "YouTube has come to its senses and doesn't add black bars to non-16:9 content".

-

It seems like the decision is whether you're swapping to FCPX or not. I'd suggest watching some of those "I switched from PP to FCPX" videos, some about FCPX to PP, and seeing if you can find any where people went PP -> FCPX -> PP again, and listening to the reasons why people made those switches and what their impressions were. It depends on what style of editing you do, as each editor will have slightly different strengths and weaknesses, and slightly different philosophies / approaches to things. It's about how well they fit how you like to think and what your workflow looks like. I use Resolve, not because it's "the best" (it's not) but because it fits my mindset and workflow.

-

I've enhanced the image using the latest Hollywood VFX and I can conclude it's so large because it has to fit in all the video modes. I can clearly make out 1080 RAW 12bit, 1080 RAW 14bit, 4K RAW 14bit, 4K RAW 16bit, 4K ProRes 444 HQ, 5K RAW, 5K ProRes 444 HQ, 6K ProRes 444 HQ, 8K ProRes 444 HQ, but the last two are too blurry - I'm assuming they're 10K to downsample for 8K.

-

It's not that hard to cool a sensor in a small body. The Canon XC10 has air cooling with fans, and it's a very compact body. Here it is vs a 5D mkIII body:

-

I know that's how it works @IronFilm but it just seemed a little too far from <common sense / normal humans / the real world> to leave hanging... the film industry is a very strange place where Low Budget can mean $400,000 and No Budget can mean $2,200. That puts a $100 film in the "I Haven't Eaten In A Week Budget" category, and yet it still got a $15,000 camera!

-

I'm not sure what you should choose, but here's a handy list of codecs that might inspire you? https://blog.frame.io/2017/02/13/50-intermediate-codecs-compared/ I would imagine your decision would revolve around quality vs bitrate.
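Since the decision comes down to quality vs bitrate, it helps to know what a bitrate means for storage. Here's a minimal sketch, assuming a constant bitrate and ignoring audio and container overhead. The 35 Mbps value matches the H265 figure mentioned in an earlier post; the other two are placeholder bitrates, not the published rates of any particular codec in the Frame.io list.

```python
def gb_per_hour(mbps: float) -> float:
    """Decimal gigabytes needed for one hour of video at a constant bitrate in Mbit/s."""
    bits = mbps * 1_000_000 * 3600   # bits recorded in an hour
    return bits / 8 / 1_000_000_000  # bits -> bytes -> GB


# Placeholder bitrates for comparison; look up real values per codec and resolution.
for mbps in (35, 100, 400):
    print(f"{mbps:>4} Mbps -> {gb_per_hour(mbps):6.1f} GB per hour")
```

So 35 Mbps works out to 15.75 GB per hour, and every doubling of bitrate doubles that.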

-

Plus, since Homo erectus, people have been vertical creatures... so it's almost a mystery why video began in landscape. BUT, if you consider that most TV / movies are people standing next to each other, it makes total sense. Now with the rise of vertical video, and lots of videos of a single person talking to camera, it almost seems like these might be connected?!?!!?

-

All this talk of a bazillion stops of DR reminds me of the minor miracle that these manufacturers have managed to accomplish. 10 stops of dynamic range means that, compared to the smallest amount of light a sensor can distinguish, the brightest light it can distinguish is 1024x. That doesn't sound like much, but 12 stops is 4096x, 14 stops is 16384x, and 16 stops would be 65536x!!!! Even the claimed 13 stops for the Pocket 4K is 8192x... that's huge! We really are spoiled.
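The numbers above all come from one rule: each stop is a doubling of light, so n stops span a 2^n : 1 ratio. Here's a minimal sketch of that conversion and its inverse (the function names are just for illustration):

```python
import math


def contrast_ratio(stops: float) -> float:
    """Each stop doubles the light, so n stops span a 2**n : 1 ratio."""
    return 2.0 ** stops


def stops_from_ratio(ratio: float) -> float:
    """Inverse: how many doublings fit between the darkest and brightest level."""
    return math.log2(ratio)


for stops in (10, 12, 13, 14, 16):
    print(f"{stops} stops -> {contrast_ratio(stops):,.0f}:1")
```

The last line printed is `16 stops -> 65,536:1`, and `stops_from_ratio(1024)` gives back 10.0.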

-

Now all the YouTubers who record IGTV and YT content will be hassling monitor companies for "flippy screens" as well as Sony!

-

I may be nit-picking, but there might be a difference between what customers want at home and what will make a product sell on the shop floor. There's a thing with hifi speakers about treble - manufacturers deliberately turn the treble up too high because on the shop floor it makes them sound 'detailed', so they out-sell the other speakers in the line-up. Of course, at home, in a quiet and much more absorbent environment, the speakers sound bad and will be fatiguing. Tests show that more treble is preferred in a quick test, but in a longer test more balanced speakers win out.