
Everything posted by kye

-

It's an interesting question, and I think it depends. If we fed the AI every film, TV episode, etc. along with all the data about how "good" each one is, then I think the AI would only be able to predict whether a newly created work is a good example of a previously demonstrated pattern. For example, if we trained it on every TV episode ever made and then asked it to judge an ASMR video, it would probably say that it was a very bad video, because it's nothing like a well-regarded TV show. However, if the AI were somehow able to extract an overall sense of the underlying dynamics of human perception, psychology, etc., then maybe it would see its first ASMR video and know that, although it was different to other genres, it still fit the underlying preferences humans have. I think we are getting AIs that behave like the first case, but we are training them like the second (i.e. as general intelligences), so depending on how well they manage that, we might get the second. The following quote contains spoilers for Westworld and the book Neuromancer:

-

Both can be innovative by diverging from what has already been found to be "good"; that's definitely true. The difference is that when an AI deviates, it can't tell whether the deviation is creative or just mediocre, because its only reference is how closely the new thing matches the training data. When a human deviates, they can judge whether it is good according to their own innate humanity. A human can experience something genuinely new and can tell the mediocre from the amazing; the AI can only compare with the past. This, I think, is what great artists do: they try new things, and sometimes hit upon something that is both new and good. That is innovation.

-

..and the DigitalRev TV channel just reposted about 20 of the videos. Who knows what is happening at their end.

-

Sounds relatively straightforward if you work methodically. I suggest the following:

1. Start with a working DCTL that does something similar.
2. Make a copy of it in the DCTL folder, run Resolve, get it loaded up and confirm it works.
3. Open it in a text editor. (Once you've done this you can save the DCTL file and reload it in Resolve without having to restart.)
4. Make a single change to the DCTL, reload it, and confirm it still works.
5. If it doesn't work, undo whatever change you made; you should be back to a working version, and you can have another go. Depending on your text editor, saving might clear the undo history, so maybe keep a copy of the whole thing in another document, ready to paste back in if you run into trouble.
6. Keep making incremental changes until you get it how you want it.

You should be able to copy/paste functions from other scripts, change the math/logic, add controls to the interface, etc. The key is doing it one change at a time, so that you don't spend ages making lots of changes and then even more time troubleshooting when it doesn't work.
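If your editor's undo history doesn't survive a save, a couple of tiny helpers can stand in for the "copy in another document" step. This is a minimal sketch in Python, assuming you run it yourself after each change you've confirmed works; the function names `snapshot` and `restore_latest` and the backup folder are hypothetical, not anything Resolve provides. I'd keep the backup folder outside the DCTL folder so the copies don't clutter it.

```python
import shutil
import time
from pathlib import Path


def snapshot(dctl_path: Path, backup_dir: Path) -> Path:
    """Copy a known-good DCTL into backup_dir before making the next change."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Count existing backups so names stay unique (and sortable) even when two
    # snapshots are taken within the same second.
    count = len(list(backup_dir.glob(f"{dctl_path.stem}-*{dctl_path.suffix}")))
    stamp = time.strftime("%Y%m%d-%H%M%S")
    backup = backup_dir / f"{dctl_path.stem}-{stamp}-{count:04d}{dctl_path.suffix}"
    shutil.copy2(dctl_path, backup)
    return backup


def restore_latest(dctl_path: Path, backup_dir: Path) -> Path:
    """Overwrite the DCTL with the most recent snapshot (the last working version)."""
    backups = sorted(backup_dir.glob(f"{dctl_path.stem}-*{dctl_path.suffix}"))
    if not backups:
        raise FileNotFoundError(f"no snapshots of {dctl_path.name} in {backup_dir}")
    shutil.copy2(backups[-1], dctl_path)
    return backups[-1]
```

Call `snapshot(...)` right after you've confirmed a change works in Resolve; if the next change breaks it, `restore_latest(...)` puts the last working version back and you can reload it in Resolve.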

-

I have a small amount of experience with them. What are you trying to do?

-

It seems that all the original files for the videos magically appeared on Lok's hard drive...

-

I read the link... it said: Dan Sasaki @panavisionofficial engineered anamorphic "adapter". If you look at the setup, there's a pretty large gap between the Panavision component and the camera body, easily large enough to accommodate a decent percentage of the 8mm lenses ever made. The flange distance for 8mm film is also very small, so combining those factors, I took it that it might actually be an anamorphic "adapter". I know enough about anamorphic lenses to know this setup is very common and completely plausible. Therefore, a question about the "adapter", and also about what the taking lens might be, seemed warranted. Do you have further information? I haven't seen anything so far that convinces me it's a lens rather than an anamorphic adapter, and even if it were, my original question about what lens it is still stands. Pointing me to a link that obviously doesn't provide any conclusive answers is not very helpful.

-

I absolutely agree with @Ty Harper that, with enough data, it will be able to differentiate the movies that got nominated for an Academy Award from those that didn't, those that did well at the box office from those that didn't, etc. What it won't be able to do, at least not by analysing only the finished film, is know that the difference between one movie's success and the next one's lack of it is that the director of the first was connected in the industry and the second movie lacked that level of influence. But if we give it access to enough data, it will know that too, and will tell a very uncomfortable story about how highly nepotism ranks in predicting individual successes... I also agree with @JulioD that the wisdom will be backwards-looking, but let's face it, how many Hollywood blockbusters are innovative? Sure, there is the odd tweak here or there enabled by modern production techniques, and the technology of the day changes the environment that stories are set in, but a good boy-meets-girl rom-com won't have changed much in its fundamentals, because humans haven't changed in ours. Perhaps the only thing not mentioned is that while AI will be backwards-looking, and only able to imitate / remix past creativity, humans inevitably use all the tools at their disposal. Like other tools before it, I think AI will be used by a minority of people as inspiration for creating new things and new ideas, and it will also give the creative amongst us an increased ability to realise our dreams. Take feature films, for example. Lots of people set out to make their first feature film, but the proportion that get finished is stunningly low. Making a feature is incredibly difficult. Then how many of the ones that do get made are ever seen by anyone consequential? Likely only a small fraction too. Potentially these ideas might have been great, but those involved just couldn't get them finished, or get them seen.
AI could give everyone access to this. It will also give everyone else the ability to spew out mediocre dross, but that's the current state of the industry anyway, isn't it? YT is full of absolute rubbish, so it's not like this will be a new challenge...

-

No, it's not an echo chamber, and people are free to have whatever perspectives they want. But take this thread as an example. It started off by saying that 24p was only chosen as a technical compromise, and that more is better. Here we are, 9 pages later, and what have we learned? The OP has argued that 60p is better because it's better. What does "better" even mean? What goal are they trying to achieve? They haven't specified. They've shown no signs of knowing what the purpose of cinema really is. You prefer 60p, but you also think that cinema should be as realistic as possible, which doesn't make any sense whatsoever, and you are not interested in making things intentionally un-realistic. Everyone else understands that 24p is better because they understand the goal is creative expression, not realism. If we talk about literally any other aspect of film-making, are we going to get the same argument again, where you think something is crap because you have a completely different set of goals to the rest of us? Also, the entire tone from the OP was one of confrontation and arguing for its own sake. Do you think there was any learning here? I am under no illusions. I didn't post because I thought you or the OP simply lacked information and were keen to learn and evolve your opinions. I posted because the internet is full of people who think technical specifications are the only things that matter and don't think about cameras in the context of the end result; they think of them as some sort of theoretical engineering challenge with no practical purpose. A frequently quoted parallel is that no-one cares which paintbrushes Michelangelo used to paint the Sistine Chapel except 1) painters at a similar level who are trying to take every advantage to achieve perfection, and 2) people who don't know anything about painting and think the tools make the artist.
I like the tech just as much as the next person, but at the end of the day "better" has to be defined against some sort of goal, and your goal is diametrically opposed to the goal of the entire industry that creates cinema and TV. Further to that, the entire way of thinking is different too: yours is a goal to push to one extreme (the most realistic), and the goal of cinema and TV is to find the optimum point (the right balance between things looking real and un-real).

-

The ability of an AI to make a single still image does not mean that it can generate a series of images that are coherent with each other. The ability to make a series of coherent images does not mean that it can generate a 3D world that feels like our reality. ...and even if an AI can do that, it doesn't mean that the people in that reality will move how we move. ...and even if it can do that, it doesn't mean that the movement of the people will be believable in context. ...and even if it can do that, it doesn't mean that the movement will be emotionally relevant to the situation. ...and even if it can do that, it doesn't mean that the emotional expressions will be noteworthy, let alone at an award-winning standard. There is a huge way to go. In a discussion about cinema, pointing to an image generator is like having a discussion about poetry and pointing to a video of a monkey writing basic words with a crayon. No disrespect intended here, but it really seems to me that cinema is an art form that you don't really understand, and don't really seem interested in understanding. All the discussions I've seen you participate in seem to be ones where your approach is just at odds with the entire concept. There is a huge body of discussion and debate about cinema, and even about what is and isn't cinema, but I haven't seen a perspective so remote from the others. I feel like I'm trying to talk about poetry with someone who thinks that everything should be in full sentences, that the only purpose of the written word is to communicate factual data, that the purpose of life is to optimise human productivity, and that everyone else also thinks this because it's obviously true. The discussion of details is wrong because the context is wrong, because the entire nature of what you think is going on and what we are trying to achieve is just wrong.
I don't really see how much else can be accomplished with such fundamental differences of thought, when it's pretty obvious that you weren't welcoming of the idea that other people see this very differently to you, and you're not really interested in learning about this seemingly new perspective, despite the fact that it is integral to the way the entire art form operates. I wish you the best of luck filming your ultra-realistic nature videos.

-

Sure, but how does this relate to what I posted about?

-

Not sure. I've only had it happen a few times, and although the machine became really slow, I was able to bring up a Finder window and delete some large files I could replace, wait for it to recover, then restart it and free up a bit more space. Sometimes that meant clicking on something, going to get a snack, and giving it 5 minutes to process the click. It normally only happened when I was downloading something and accidentally filled up the drive. Other times it will warn you that you don't have enough drive space (e.g. to copy files), or that drive space is low, so you can free some up before it hits the wall. It certainly teaches you to be careful, though! Both when downloading and when choosing the right-sized SSD for a new machine.
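To get a warning before the drive hits the wall, rather than after, something like the following could be run before a big download or copy. It's a minimal sketch in Python using the standard library's `shutil.disk_usage`; the 20GB margin, the example 50GB download and the function names are my own assumptions, so pick a margin that suits your machine.

```python
import shutil

GB = 1e9  # decimal gigabytes, which is how macOS Finder reports sizes


def free_gb(path: str = "/") -> float:
    """Free space, in GB, on the volume that contains path."""
    return shutil.disk_usage(path).free / GB


def safe_to_add(size_gb: float, path: str = "/", keep_free_gb: float = 20.0) -> bool:
    """True if adding size_gb of files would still leave keep_free_gb spare."""
    return free_gb(path) - size_gb >= keep_free_gb


if __name__ == "__main__":
    print(f"{free_gb('/'):.1f} GB free")
    if not safe_to_add(50.0):  # e.g. before a hypothetical 50GB download
        print("Free up some space first!")
```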

-

Of course, 24p doesn't make the magic of cinema! But the counterpoint is pretty strong: for many (most, as evidenced by this thread) the absence of 24p sure destroys it. I think one of the most overriding (and infuriating) principles at work here is that making something truly cinematic requires everything to be at a high standard; there's very little room to move on the knife edge. Of course, how far you can deviate from perfect without ruining the whole thing is different for each element. The quality of acting, production design, sound design or writing might be more or less important than other elements, and this also depends on the viewer: one person is tolerant of mediocre acting and the next will walk out of the cinema because of it. For whatever reason, these forums tend to focus the discussion on the image. At our best we talk about it with a certain emphasis over other aspects, and at our worst we talk about it like no other aspect of film-making exists. For better or worse, this often attracts people who have no concept that the other aspects even exist, and the resulting discussions are disconnected from the reality that almost everyone else lives in. I think AI will replace the farcical comic-book blockbusters that the Hollywood sausage-factory is currently configured to create, but to confuse the seemingly infinite stream of Insect-man and the Saviours of the Metaverse sequels with the entirety of "movies" is a mistake. Cinematic "realism" is a much more nuanced concept than you might think: I do want movies to be intellectually and/or emotionally "realistic". People don't react well to serious movies with shallow and contrived plot lines, nor to bad acting; these are both ways a movie fails to be realistic in ways that do matter.
I've been contemplating this concept of "realism" and, to be perfectly honest, the more I think about it the more I realise the entire concept is completely nonsensical. If we take "realism" to its logical conclusion then:

- Gone With The Wind, a movie that takes place over the course of the US Civil War and its aftermath, would have been over 5 years long, because jumping forwards in time to just look at the "important" bits isn't even remotely realistic. During screenings the audience would be made to partly starve due to the war-torn conditions.
- John Wick would be awful. Making it realistic would result in something like this: you get told to go kill John, you are nervous on your way there, you see him and run at him yelling, he almost immediately shoots you in the head, and the movie is over in 17m42s. The theatre has specially designed seats that break your legs at the 17m12s mark.
- Or, you are John Wick, and to be realistic from the perspective of almost every audience member worldwide, the 78 people in the first scene who are sent to kill you succeed. You die in 3m27s. The seats stab you from 5 different directions to simulate being shot.
- etc.

If you think these are completely preposterous, which they absolutely are, then you need to accept that some aspects of film-making are not improved by being made more "realistic". From there it is possible to start having a sensible discussion.

Human beings are exceptionally finely tuned animals when it comes to certain things like facial expressions and how things move in 3D environments, so I suspect that the uncanny valley will take a while to cross, probably a lot longer than most would imagine. However, I think it will be crossed eventually, because people are also great at personification and interpretation, as things like the Kuleshov effect show, especially if we are invested in the subject matter. With enough data, AI will get there. I find that people misinterpret AI, so here's how I suggest you think about it.
The CPU of a computer has about as much sophistication as a pocket calculator. I'm not kidding, they can literally only do binary logic operations. Modern computers are billions of tiny little pocket calculators built to go screamingly fast. AI is us programming them to analyse a bunch of input data and then produce output data that fits the pattern. ChatGPT is literally trillions of screamingly fast tiny calculators playing a game of "what comes next?" with a gargantuan database. If the tiny calculators can learn to write a doctoral thesis in English, or to make a photorealistic image of a monkey climbing a tree, then there is no logic in saying that they can analyse and mimic those things but not a nice edit. In my mind it's like saying that someone has walked 10,000 miles and made it to the outskirts of the city, but that there's no way they could ever make it to the central train station.
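To make the "what comes next?" idea concrete, here's a toy version in Python. It's a bigram model: it just counts which word followed which in its training text, then keeps picking the most common follower. It is vastly simpler than a real large language model like ChatGPT, so treat it purely as an illustration of learning a pattern from input data and producing output that fits it; the function names and the example sentence are made up.

```python
from collections import Counter, defaultdict


def train(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Play "what comes next?": repeatedly append the most common follower."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # never saw anything after this word, so stop
        out.append(options.most_common(1)[0][0])
    return out
```

Trained on "the cat sat on the mat the cat ran", `generate(model, "on", 2)` gives `['on', 'the', 'cat']`, because "cat" followed "the" twice and "mat" only once.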

-

I wasn't saying that you did all those things, just explaining the context so you might understand the reaction that some people had. The experts are human too, so don't let the human foibles that everyone inevitably has overshadow their expertise and the time and effort they put into trying to help. As far as I am aware, these forums are perhaps the best place online to discuss the video capabilities of hybrid cameras, as the vast majority of people here use hybrid cameras because they take stills as well as video. So by framing this as a cinema-camera-only discussion, you not only steered it away from the majority of the members' knowledge and experience, but you also limited it to the members who specialise in video-only cameras. Perhaps, above all, the people who participated in good faith don't react well to helping someone who deliberately misrepresented their position at the outset.

-

It sounds like you missed the point. I didn't post the video as an example of great image quality; I posted it to make the point that a lot of the techniques used in cinema are also being used in creative YouTube videos. This entire discussion has been about whether film-making should be more or less realistic, and the point many of us have been making is that almost all of the tools and techniques used in cinema deliberately make things less realistic. The video I posted used many techniques that improve the creative aspects but make the end result less realistic, including:

- cutting clips into sequences that aren't 'continuity editing' but are more emotive
- combining multiple images at once (e.g. the top-down shot in the bedroom)
- splicing in audio clips that were recorded at a different time than the visual being shown
- overlapping audio clips, foley and SFX to create a creative rather than realistic sound design
- non-realistic colour grading
- filming insert shots (like the hanging of the clothes in the closet) for the purpose of association, rather than limiting the edit to 'real' events
- production design techniques like lighting and light modifiers, smoke machines, etc.
- in-camera visual effects like the top-down shot of the medium format camera
- etc. There are likely lots more; these are just the ones I could name off the top of my head.

From this perspective, the video is an example of a great many techniques that film-makers employ to make the finished product more appealing, specifically by making the end result less realistic. You didn't like it, and that's fine, but my point was that deliberately non-realistic techniques are being used on YT, and the example shows a variety of them in use. I didn't share it because I thought everyone would like it; it was an example to discuss the techniques.

-

As someone who cares a lot about camera size, I find that discussions often ignore a number of important variables. For example, larger cameras can:

- Attract additional attention (sometimes very negative attention), making the shooting process more difficult in some situations
- Make the people in the frame act very differently when shooting candid situations, which completely overrides any image quality advantage the camera might have
- Make you feel more awkward, leading to a less enjoyable shooting experience and negatively impacting your creativity in terms of compositions, camera movement, etc.
- Make you feel more awkward, leading to people around you also being awkward (body language is a powerful thing)
- Make you more tired, leading to worse footage
- Get you blocked from shooting by security guards and other "official" folks when shooting outside controlled situations, as they will decide who is allowed to shoot and who isn't based solely on the size and appearance of the camera
- Etc.

For my family and travel shoots I have swapped from my GH5 to the GX85 purely for these reasons. The image on the GH5 is great, but a high-quality capture of compromised shooting of awkward people isn't as good as a lower-quality capture of creative shooting of relaxed people. Not by a long shot. I understand the pull of the nicer cameras / codecs / DR / colour science / etc., but I encourage you to consider the big picture: the end goal is to get the nicest possible finished edit, right?

Another factor to think about is lenses and how large they are. You'll likely be trading off elements such as:

- size
- weight
- image stabilisation
- AF performance (and even AF vs MF)
- aperture / low-light / depth-of-field
- primes vs zoom / zoom range
- cost

To this end, I have decided to move from MF primes with large apertures to a single zoom lens with a (comparatively) small aperture.
This is because, working backwards, I have found that:

- the best edit comes from having the most options in the edit
- the most options in the edit come from having 1) a greater number of shots of a scene and 2) a wider variety of shots
- a greater number of shots is enabled by working fast, which means AF instead of MF
- a greater variety of shots is enabled by having a variety of focal lengths quickly available, which means a zoom rather than primes (and the time it takes to swap lenses)

In terms of the overall aesthetic, I'm a bit of a niche player in that I want the most cinematic images I can get, but (unlike almost everyone else on the internet) I've actually looked at images from cinema and realised they don't have a crazy shallow DoF (a large percentage have everything in focus), and they aren't super-sharp either, even in a normal theatre projecting in 2K. Combine this with the ability to colour grade images nicely (even rec709 images) and it becomes very clear that while a camera like the S5iiX is a very impressive piece of equipment, it isn't even close to optimal for how I shoot. Obviously these are my thoughts and your situation will be very different, but I hope this is useful.

-

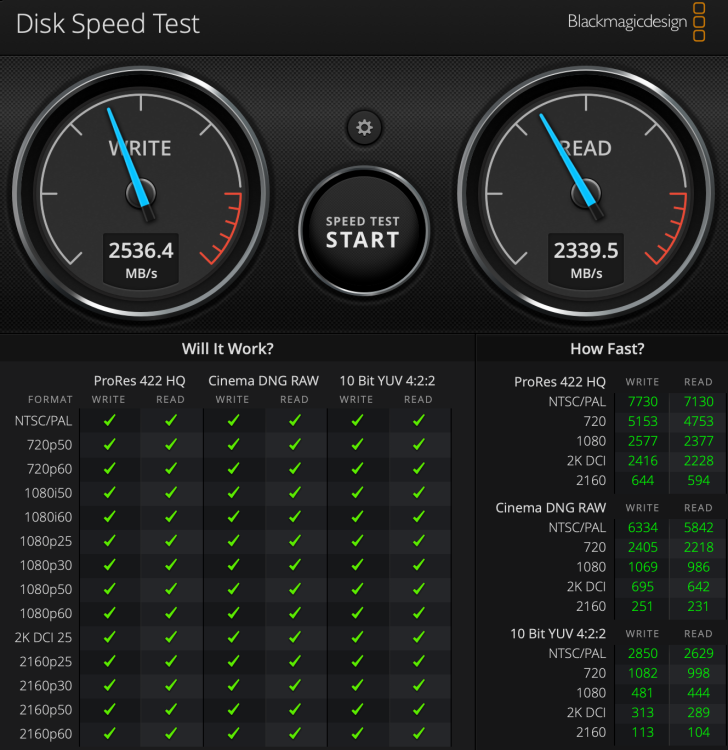

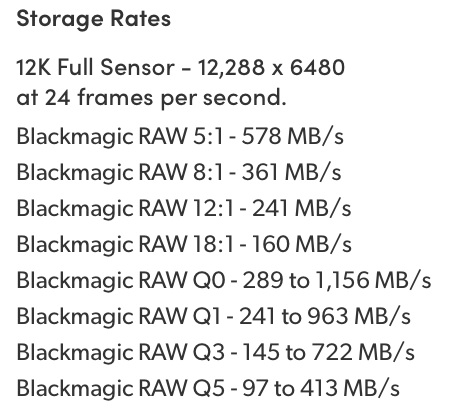

I'm curious: do you have any experience with how much 'headroom' you should allow for in disk speeds? For example, if you had an SSD that could do 2000MB/s, you wouldn't want to use it for a codec that was 2000MB/s, because if anything happened the drive could never catch back up again. Also, IPB codecs require decoding from the previous keyframe and then catching up to the frame you want, so there's overhead there too. The internal drive is also likely to be asked to do other things randomly by the OS, whereas external drives wouldn't be hassled as much (though they still would be by background processes), so I'm curious to hear how these compare in reality. I'm asking because I've never worked with codecs anywhere close to the speed of the drive, but it seems a good thing to be aware of when talking about these things.
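One way to put numbers on the "could never catch back up" point is a simple backlog model: if the drive stalls for a moment while playback keeps consuming data, the shortfall can only be repaid out of the spare bandwidth (drive speed minus codec data rate). This is a back-of-the-envelope sketch in Python under that assumption; the function name is hypothetical, and it ignores read-ahead buffers, IPB decode overhead and OS activity, so real systems will behave differently.

```python
def catch_up_seconds(drive_mb_s: float, codec_mb_s: float, stall_s: float) -> float:
    """Seconds needed to clear the backlog left by a drive stall of stall_s seconds.

    Simplified model: during the stall, playback keeps consuming codec_mb_s, so a
    backlog of codec_mb_s * stall_s builds up; afterwards only the spare bandwidth
    (drive_mb_s - codec_mb_s) is available to pay it back.
    """
    spare = drive_mb_s - codec_mb_s
    if spare <= 0:
        return float("inf")  # no headroom: the backlog never clears
    return codec_mb_s * stall_s / spare


if __name__ == "__main__":
    for codec in (1000, 1800, 2000):
        print(f"2000MB/s drive, {codec}MB/s codec, 0.5s stall: "
              f"{catch_up_seconds(2000, codec, 0.5)} s to recover")
```

With a 0.5s stall, a codec using half the drive's speed recovers in 0.5s, one using 90% of it (1800 of 2000MB/s) takes 4.5s, and one using all of it never recovers.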

-

You'd be amazed at the number of folks who drop into the forums, ask a question, and then:

- never answer follow-up questions and are never seen again
- argue with all the people trying to help them
- supply critical information many, many pages later, despite having been asked for it directly along the way
- participate in the discussion nicely, then go out and buy the wrong camera, one that was eliminated in the discussion, and complain about it because it has all the issues everyone warned them about

Hardly anyone even says 'thank you' either. This is why experts burn out from posting on forums: the trail of people who ask for help and then fight every inch of the way against those trying to provide it.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

Just watching this video and the guy says that it has the same / similar(?) colour science as his Leica Q2. I don't know if that's news to anyone? Anyway, thought I'd share in case..

-

I just watched John Wick 4 and what a movie! No spoilers, but I found it to be enjoyable, surprisingly creative and also cinematic as hell. I cannot imagine any way in which making it more realistic would improve things. I think computer games and immersive experiences definitely benefit from being more realistic, but cinema is just a fundamentally different type of experience.

-

The best form of defence is a good offence!

-

You can't upgrade an existing Mac, so it's a matter of buying the one you want, i.e. "upgrading" what you're purchasing. It depends on what you mean by "high resolution". Here's the speed test I just did on my 2020 MBP's internal SSD. It's not state of the art by a long shot, but as you can see it's green across the board for all the codecs, including 4K60 in CinemaDNG. Looking at the data rates from the UMP 12K, the highest ones for 12K 24p are 578MB/s for BRAW 5:1 and up to 1156MB/s for Q0, so in theory those should work fine too. You'd want a decent amount of headroom of course, but I suspect that processing power is more likely to be the challenge than the speed of your SSD.
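As a rough check, the quoted 12K 24p data rates can be converted to per-frame sizes and compared against a drive speed with a safety margin. This Python sketch assumes a hypothetical drive that sustains 2000MB/s and a 1.5x headroom factor; both numbers are placeholders rather than measured or recommended values, so substitute the figures from your own speed test.

```python
# 12K 24p data rates quoted above, in MB/s.
UMP_12K_24P_RATES = {"BRAW 5:1": 578, "BRAW Q0": 1156}

DRIVE_MB_S = 2000  # hypothetical sustained read speed; use your own speed test result
HEADROOM = 1.5     # assumed safety factor, not a published recommendation


def mb_per_frame(rate_mb_s: float, fps: float = 24) -> float:
    """Average size of one frame at a given data rate."""
    return rate_mb_s / fps


def fits(rate_mb_s: float, drive_mb_s: float, headroom: float = HEADROOM) -> bool:
    """True if the drive can sustain the data rate with the chosen margin."""
    return rate_mb_s * headroom <= drive_mb_s


if __name__ == "__main__":
    for name, rate in UMP_12K_24P_RATES.items():
        print(f"{name}: {mb_per_frame(rate):.1f} MB/frame, "
              f"fits with {HEADROOM}x headroom: {fits(rate, DRIVE_MB_S)}")
```

With those assumptions both pass (578 x 1.5 = 867MB/s and 1156 x 1.5 = 1734MB/s), though Q0 would fail with a 2x margin (2312MB/s).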

-

My wife bought a gaming monitor that can do 240Hz and (after much research) we figured out a way to actually get a 240Hz signal to it. I haven't played with it, but in theory I could play back video at 240fps on it, given the right software. I have no idea what it would look like, and I don't think for a moment that it would be cinematic, but it would be interesting to see. My phone shoots 240fps, so in theory I could record some video. I hate the look of 60p and also find that 30p has the same slippery look, just less severely than 60p. You've got me curious now.

-

My previous posts were really about advanced stuff and tricky workarounds. If you simply buy an SSD big enough to hold the operating system and software in the first place, then you should be fine. A good way to estimate that is to look at the total space you've used on the drive, then subtract the size of any folders that hold your own files (documents, music, etc.); what's left is how much space everything else is taking up. If you're upgrading and will be using the same software, this should be a good guide to how much space you'll need. Software installations get larger over time, of course, so build in some allowance for that, but otherwise you probably don't ever need to think about it. Probably the only things that might enter the equation are software that decides to install a bunch of sample media libraries, or something like Resolve rendering proxy files etc. For these you hope the software gives you the option to specify where to put those files, but that's not really an operating system thing, that's a software thing from Adobe or whoever.
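The "total used minus your own folders" estimate can be automated. This is a rough Python sketch; the function names are hypothetical, the list of personal folders is an assumption (adjust it to wherever you keep your own files), and the used-space figure from `shutil.disk_usage` may not exactly match what Finder shows on some systems, so treat the result as a ballpark.

```python
import os
import shutil
from pathlib import Path


def folder_size(path: Path) -> int:
    """Total bytes of the files under path (symlinks are not followed)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = Path(root) / name
            try:
                if not file_path.is_symlink():
                    total += file_path.stat().st_size
            except OSError:
                pass  # unreadable or vanished file: skip it
    return total


def os_and_software_bytes(used_bytes: int, personal_folders: list[Path]) -> int:
    """Used space minus your own files: roughly what the OS and apps occupy."""
    personal = sum(folder_size(p) for p in personal_folders if p.exists())
    return max(used_bytes - personal, 0)


if __name__ == "__main__":
    home = Path.home()
    # Hypothetical list: point this at wherever your own media and documents live.
    mine = [home / d for d in ("Documents", "Music", "Movies", "Pictures", "Downloads")]
    used = shutil.disk_usage("/").used
    print(f"OS + software: about {os_and_software_bytes(used, mine) / 1e9:.0f} GB")
```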