
Everything posted by kye

-

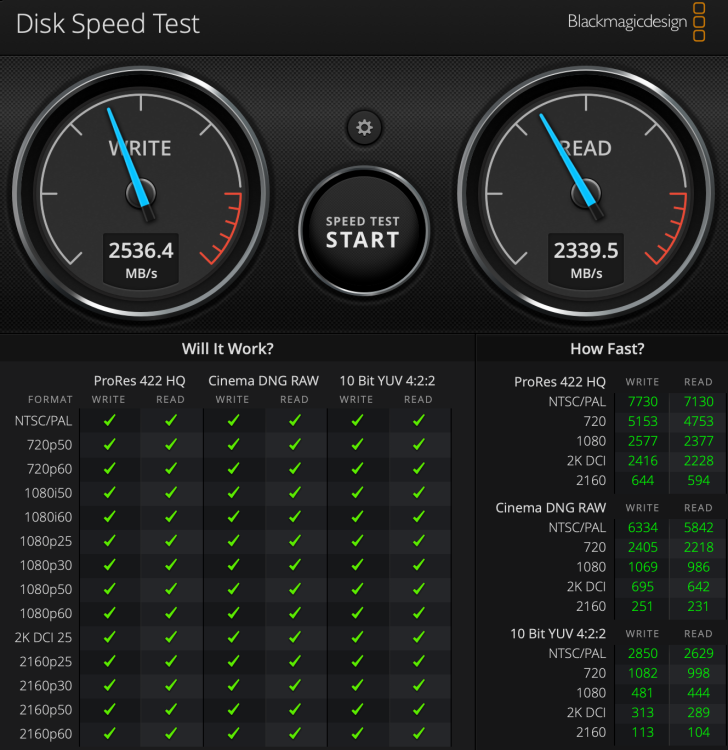

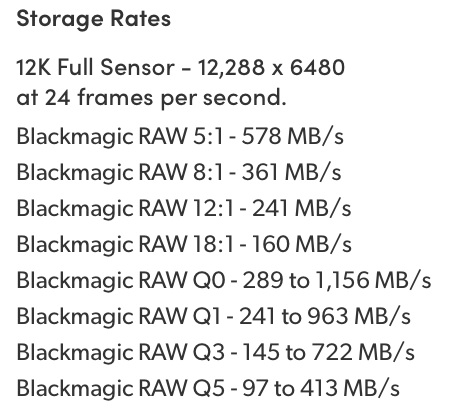

You can't upgrade an existing Mac, so it's a matter of buying the one you want, i.e. "upgrading" what you're purchasing. It depends on what you mean by "high resolution". Here's the speed test I just did on my 2020 MBP's internal SSD. It's not state of the art by a long shot, but as you can see it's green across the board for all the codecs, including 4K60 in CinemaDNG. Looking at the data rates for the UMP 12K, the highest ones for 12K 24p are 578MB/s for BRAW 5:1 and up to 1156MB/s for Q0, so in theory those should work fine too. You'd want a decent amount of headroom of course, but I suspect that having enough processing power is more likely to be the challenge than the speed of your SSD.
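
A rough way to put numbers on "headroom" is to divide the drive's sustained speed by the codec's data rate. The Python sketch below does that for the two UMP 12K figures above. The ~2500MB/s drive speed is an assumed figure (substitute your own speed-test result), and `headroom` is just an illustrative helper name.

```python
# Back-of-envelope check: how many times over does the drive's sustained
# speed cover a single stream's data rate? All figures are in MB/s.

# ASSUMPTION: ~2500 MB/s sustained read speed; replace with your own result.
DRIVE_SPEED_MBPS = 2500

# Highest UMP 12K data rates at 12K 24p, as quoted in the post.
CODEC_RATES_MBPS = {
    "BRAW 5:1": 578,
    "BRAW Q0": 1156,
}


def headroom(drive_speed: float, data_rate: float) -> float:
    """Ratio of drive speed to stream data rate; above 1.0 means it keeps up."""
    if data_rate <= 0:
        raise ValueError("data_rate must be positive")
    return drive_speed / data_rate


for codec, rate in CODEC_RATES_MBPS.items():
    print(f"{codec}: {rate} MB/s -> {headroom(DRIVE_SPEED_MBPS, rate):.1f}x headroom")
```

Playing several streams at once (e.g. multicam) multiplies the data rate, which is one reason to want that headroom in the first place.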

-

My wife bought a gaming monitor that can do 240Hz and (after much research) we figured out how to actually get a 240Hz signal to it. I haven't played with it, but in theory I could play back video at 240fps on it, given the right software. I have no idea what it would look like, and I don't for a moment think it would be cinematic, but it would be interesting to see. My phone shoots 240fps, so in theory I could record some video. I hate the look of 60p, and I also find that 30p has the same slippery look, just less severely than 60p. You've got me curious now.

-

I'm really talking about advanced stuff in my previous posts, and tricky workarounds for things. If you simply buy an SSD big enough to hold the operating system and software in the first place, then you should be fine. A good way to estimate that is to look at the total space you've used on the drive and subtract the size of any folders that hold your own files (documents, music, etc.); what's left is how much space everything else is taking up. If you're upgrading and will be using the same software, this should be a good guide to how much space you'll need. Software installations get larger over time of course, so build in some allowance for that, but otherwise you probably never need to think about it. Probably the only things that might enter the equation are software that decides to install a bunch of sample media libraries, or something like Resolve rendering proxy files etc. For these you hope the software gives you the option to specify where to put those files, but that's not really an operating system thing, that's a software thing from Adobe or whoever.
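
The estimate described above (space used on the drive minus your own files) can be sketched in Python. This is a rough sketch, not a precise accounting: the list of "your own" folders is an assumption (typical macOS home-folder names) that you'd edit to match where your files actually live, and `folder_size` / `estimate_non_user_bytes` are illustrative helper names.

```python
import os
import shutil
from pathlib import Path


def folder_size(path: Path) -> int:
    """Total size in bytes of the files under `path` (symlinks are skipped, not followed)."""
    total = 0
    for root, _dirs, files in os.walk(path):  # os.walk does not descend into symlinked dirs
        for name in files:
            file_path = os.path.join(root, name)
            if os.path.islink(file_path):
                continue
            try:
                total += os.path.getsize(file_path)
            except OSError:
                pass  # file vanished or is unreadable; ignore it for a rough estimate
    return total


def estimate_non_user_bytes(used_bytes: int, user_folder_bytes: list[int]) -> int:
    """Space taken by the OS, apps and everything else = used space minus your own files."""
    return used_bytes - sum(user_folder_bytes)


if __name__ == "__main__":
    home = Path.home()
    # ASSUMPTION: these folders hold your own files; edit the list to suit.
    mine = [home / d for d in ("Documents", "Music", "Movies", "Pictures")]
    usage = shutil.disk_usage(home)
    used = usage.total - usage.free  # roughly how full the drive looks overall
    other = estimate_non_user_bytes(used, [folder_size(p) for p in mine if p.exists()])
    print(f"OS, software and everything else: about {other / 1e9:.0f} GB")
```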

-

It depends on what you want to do with it. For most people it's great. On my MBP the external SSD tests as fast as the internal one (2500MB/s read and write), and that's over USB, not even Thunderbolt. The only challenge comes when you run out of space on the internal drive and have already moved all your files onto external drives, because various software likes to keep things in directories that you often don't get to choose. For example, if you install games through something like Steam, then I think they want to be on the local drive. You can side-step some of these things, but then you're getting into technical territory with complicated command-line stuff, which is beyond most people. One example I was thinking of when writing a previous reply was the ability in Unix to create a symbolic link from anywhere on the file system to anywhere else. This is incredibly useful for moving things to external drives to free up space on the local SSD, and doing it in such a way that the OS doesn't know and doesn't throw a fit. When you mentioned PowerShell I googled it, and it seems to support some of this stuff, which is cool - that must have come later. Also, searching for files using grep etc. was always really handy. I used PCs all through my computer science degree, so I became aware of the scripting capabilities of both DOS and Unix shells, and writing shell scripts was always so much easier than trying to do the same thing in DOS and having to download some new third-party utility to do stuff that was really straightforward in Unix.
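
As an illustration of the symbolic-link trick described above, here's a minimal Python sketch that moves a folder to another drive and leaves a symlink at the old location, so software that looks in the old place still finds its files. The function name and example paths are hypothetical; on macOS or Linux the same thing is usually done from the shell with `mv` followed by `ln -s`. If the external drive isn't connected, the link points at nothing and the software won't find its files.

```python
import os
import shutil
from pathlib import Path


def relocate_with_symlink(src: Path, dest_parent: Path) -> Path:
    """Move the folder `src` into `dest_parent` and leave a symlink at the old path.

    Programs that open the old path are transparently redirected to the new one.
    """
    src = Path(src)
    dest = Path(dest_parent) / src.name
    if src.is_symlink():
        raise ValueError(f"{src} is already a symlink")
    if not src.is_dir():
        raise NotADirectoryError(src)
    if dest.exists():
        raise FileExistsError(dest)
    # Across drives, shutil.move copies everything and then deletes the original.
    shutil.move(str(src), str(dest))
    # os.symlink(target, link_name): recreate `src` as a link pointing at `dest`.
    # An absolute target keeps the link valid regardless of where it lives.
    os.symlink(dest.absolute(), src, target_is_directory=True)
    return dest


if __name__ == "__main__":
    # Hypothetical example paths: a space-hungry folder and an external volume.
    big = Path.home() / "BigAppData"
    external = Path("/Volumes/External")
    if big.is_dir() and external.is_dir():
        print("moved to", relocate_with_symlink(big, external))
```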

-

G9ii to get external RAW in a future firmware update...

-

Come on... we all know that Jack will buy one - he can't help himself!

-

People definitely use external SSDs to augment their internal storage, but there are limitations to that kind of thing. Back when I was on Windows, you needed about a dozen third-party utilities to be able to do all the things you wanted. When I moved to the Mac I did a bunch of googling about "how do I do X in OSX" and read a bunch of tutorials on switching, and at the end of it I realised that instead of needing a dozen third-party utilities I only needed one or two. That was an interesting observation. I realise things have likely changed in the meantime as Windows gradually added features. Maybe it was just a point-in-time thing. Maybe it was just because I already knew Unix, so things like piping and regular expressions were all stuff I knew how to do. Every time there was a new version of Windows it took hours to deal with the fallout of things not working the same, and that time requirement simply stopped when I made the switch. It was something I really noticed at the time. I'm not trying to say that either one is better or worse, and if I had to switch platforms I'm sure I'd be fine and would find some new things I like as well as some annoyances. I realise things are much more cross-platform today. Probably every software package I use is available on both platforms, and the hardware might be better on the Windows side now too. I've had a constant stream of Windows laptops since then for my day job and they seem to work OK.

-

Yeah, and she said she shot with baked-in colour profiles for lots of it, and then spent 30 hours trying to colour grade it. The struggle is real!

-

I found this video when searching for the lens video above and just re-watched it. It talks about how David Fincher designed the colour palette of the movie Se7en to accentuate the story-telling aspects and heighten the climax. Spoilers for the movie Se7en from 1995, in case you haven't gotten around to seeing it yet lol. The underlying concept is that these choices are designed to provide psychological cues to the audience and heighten the excitement. Once again, none of this is remotely realistic, and it does not aspire to realism as a goal, but does the opposite for dramatic effect. For those who like cameras and recording video and watch YT but don't really have much awareness of how cinema and high-end TV shows are created, these things might be completely new concepts, and the above videos hopefully provide a glimpse behind the curtain. For those who think this stuff is purely for Hollywood and cinema, it's alive and well in the world of YT. Here's a video from Natalie Lynn, which is a great example of film-making techniques applied to personal travel videography, and is very emotive in a way that an impartial camera cannot achieve. And here's an interview and production breakdown of how it was created: Spoilers: it includes special lighting, she bought a smoke machine, spent a ridiculous amount of time in the edit, and did lots of other things. It takes a lot of work to make things look effortless.

-

Since we started using SSDs, RAM limitations have been much less of an issue, but WOW does OSX fall apart if you run out of SSD space! It becomes a struggle to even use the computer to delete some files and recover; things just unravel. I moved from PC to Mac in 2012, and at the time the experience was night and day. Since then OSX has definitely become far less reliable. The phrase "it just works" was definitely true back then, but it has stopped being the case - I think that started with the update that broke a bunch of people's Wi-Fi (something to do with AirDrop, I think). I also understand that Windows has mostly sorted itself out, so that's true too. I have a background in tech, and at the time I knew Windows was fiddly because I was sick of having to fix this thing or that thing, but it wasn't until I actually switched that I really understood the difference. It was like having an angry neighbour who would come and interrupt what I was doing every few days, waste my time, make me angry, and then go away, leaving me to calm down and remember what I was meant to be doing... and then all of a sudden they moved away! At first you don't notice it directly, but then you're like "oh wait... hang on!" and things are suddenly much nicer 🙂 For the few things I've wanted to do since switching to Mac that weren't natively supported, I can just bring up a terminal and have the full power of a Unix implementation, which is spectacular. Having DOS sitting underneath Windows is a poor substitute if you want to do something the OS doesn't provide functionality for. It likely depends on what you want to do of course, but the combination of Apple's design simplicity (and forced workflows) with Unix underneath seems like a better experience than Windows with DOS underneath it.

-

Funny.. when I read "Can't see why a rental house would be interested" I interpret that as saying that 'not a single rental house would be interested'. I don't hear 'some would be interested but not enough to make it commercially viable'. It's like saying 'I can't see why a person would be interested in marrying Jack' but meaning 'only a few dozen people would be interested in marrying Jack'. Poor Jack - he'll be single forever unless dozens of people fall madly in love with him!

-

Given the track record of Kodak's business decisions throughout the transition from film to digital, I wouldn't rule anything out in terms of what they might be thinking / hoping for. I would, however, be confident in saying that making it primarily for rental houses would likely be a commercial mistake 🙂

-

Just for interest, I went back to the original posts. I've added my own emphasis. Clark said this: You replied with this: and I replied with this: It is a strange thing how discussions can often veer significantly from what was being discussed originally, and no-one bothers to go back a page or two and see what was actually said.

-

So, we agree that there might be one unit in a rental house somewhere? That was literally my only point... somehow you've managed to change my argument and then argue against me 🙂

-

This thread inspired a different one: I think that what I described in that thread is one of the underlying factors in this discussion.

-

I think one of the main sources of confusion and disagreement is that creativity and technology used to be aligned, but are now diverging, and it's confusing a lot of people.

What I am talking about

Take resolution as an example:

First, film and lenses were low resolution, and everyone wanted them to be higher.

Then, digital was low resolution, and everyone wanted it to be higher.

At some point, somewhere between 2K and 6K, the resolution of sensors and lenses exceeded that of the human visual system.

This meant that the people who wanted to create for the human eye and the people who loved tech were aligned up until that threshold was reached. Now the perceptual people are saying "enough already - we've reached the goal - put your development efforts into something that matters" and the tech people are saying "more is more - I thought you agreed with us - why would you choose this inferior thing instead of the latest and best thing???"

It's the same with frame rates, as we have seen in a very civil and productive discussion in another thread.... 😂😂😂

Dynamic range is approaching this point, with some saying there is enough, and the discussion starting to move from "everyone needs more" to "sometimes I need more"; it will eventually be "we're done - except for specific and rare situations".

Bitrates and codec quality are getting into this territory, on the capture side at least, and streaming bitrates will get there in years to come as internet bandwidth inevitably increases exponentially.

There are many other examples...

Some predictions

I suspect the discussion will further segment into the "virtual realism" industry and the "creative storytelling" industry.

The "creative storytelling" industry will continue in the directions that cinema and TV have been developing in for the last century. This will be 2D image capture and playback, different lenses used, heavy editing with 2-10s per shot, sound design in post, potentially heavy VFX, and a focus on creating an emotional journey for the viewer.

The "virtual realism" industry will develop from its current infancy. It will be for immersing yourself in situations that you wish to experience but don't have access to, or wish to experience multiple times. This will likely be things like music concerts, cultural experiences like festivals, events like watching the Pope speak at the Vatican or attending an F1 or NASCAR race or a space shuttle launch, personal recordings like your child's school play or dance recital, scenes from nature like watching the sun rise in Death Valley, and (of course) the adult entertainment industry. This will be 3D VR, minimal editing with very long immersive shots (maybe even just a single shot for a concert etc.), sound captured almost exclusively on location or very naturalistic sound design in post, and a focus on creating an experience that matches reality in as many ways as possible. This technology will likely also extend into capturing more senses, like smells, air movement, temperature, etc.

The sibling of the "virtual realism" industry will be the "virtual worlds" industry, which will be the same as above, but with everything computer generated. This will be things like gaming, interactive experiences, etc. It will rely on mostly the same viewing technology, but augmented with things like force-feedback / touch controls, and of course a massively powerful computer generating the virtual world.

I also predict the virtual realism folks will step into discussions that the creative storytelling folks are having, and the worlds will collide, miscommunication will dominate, egos will be bruised, etc. This already happens when the documentary folks start talking to narrative folks, and things like camera size and low-light performance are hotly debated by people with vastly different requirements and priorities.

-

Here's a video that explains the basics of lens choice: Perhaps the single biggest takeaway from this video is how the cinematographer speaks - he talks about how he wants the audience to feel, not what is 'realistic'. In fact, he introduces the video by saying "Hello. I'm Tom Single and I've been a cinematographer for the past 40 years. Today I'm going to be focusing on how film-makers achieve the desired mood as it relates to lens choices". Think about that... "the desired mood". Realism isn't the goal, and it's not even relevant to the context. It's completely beside the point for the industry he's in. You can take almost any aspect of film-making, and when you find very experienced people talking about it, it will always be discussed in terms of the mood and perceptual associations you want to create.

-

Nice work! I like the colour grading and overall image processing, the texture is nice too. The long shutter on the movement is cool, and getting the right level of shake to represent the experience of riding a motorcycle on modern streets was a nice touch. 🙂

-

I was suggesting that there might be one. I thought you were saying there wouldn't be any. I wouldn't imagine they would be common, but I did think there would be sufficient demand in the market for the most film-centric rental house in the middle of Hollywood to have at least a single unit.

-

I would imagine the niche would be larger than you might think. I think the revival of 35mm still film is a reasonable parallel - it is much easier to shoot digital and emulate film in post with one of the many excellent plugins available, but people like shooting film because it's somehow "authentic". Noam Kroll shoots a lot on film, as I'm sure you're aware; he has shot a number of short films on it, and shot this ad on Super 8mm: https://noamkroll.com/shooting-super-8mm-red-gemini-for-banana-republic-in-joshua-tree/

-

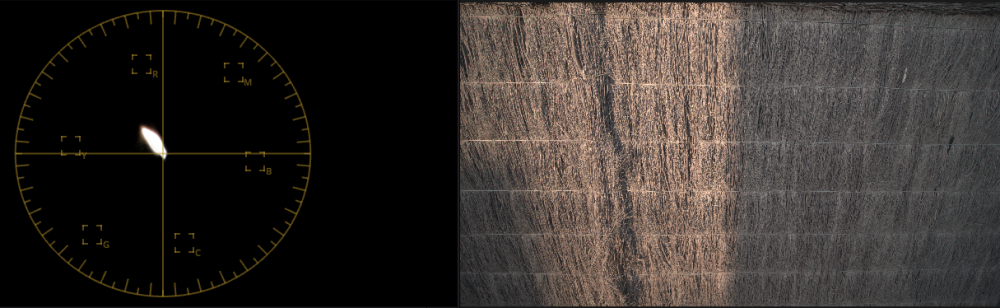

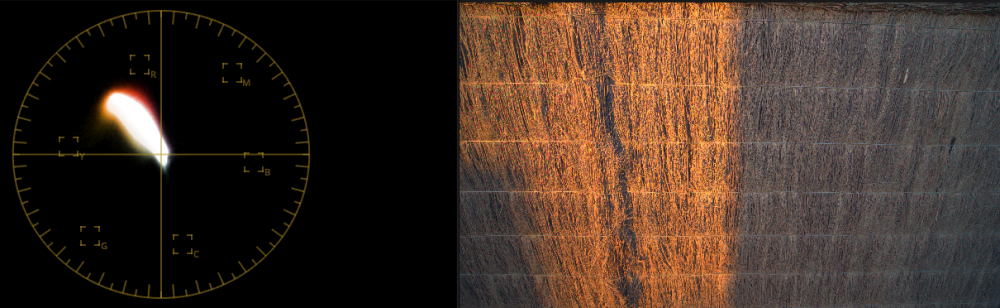

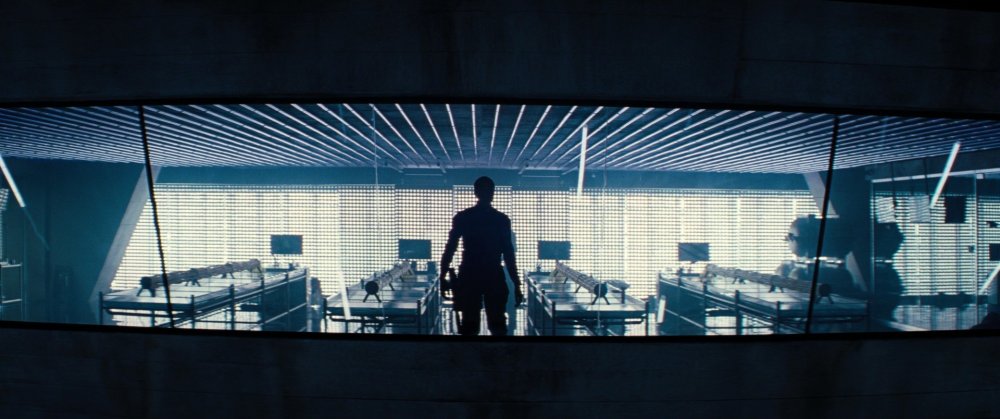

Actually, the orange and teal look is copied from reality (but sometimes dramatically overdone). Any time the sun is shining and the sky is blue, objects lit directly by the sun will appear one colour, and objects in the shade will be lit solely by reflected light, a significant amount of which will have come from the blue sky. So shadows are bluer than things lit by the sun, and in comparison, things lit by the sun are more orange (the opposite of the colour of the sky) than the shadows. This is a subtle effect, but it is observable. I did the test myself. Here's a RAW photo of my fence at sunset: If we radically juice up the saturation, then we get this: and if we shift the white balance cooler, then we get this: So, although reality looks nothing like this strong a colour grade, the orange/teal look is part of reality, not a fictional thing that's made up.

Also, a great many movie colour grades don't have the orange and teal look. Here are a bunch of movie stills from blockbusters (you have to click and expand the image to view it large enough). Many of them are almost one hue, with almost zero colour separation:

But, once again, since you missed the point I was trying to make... with all the equipment and talent these movies have at their disposal, why on earth would they look like this if they were trying to look realistic? You've got it all wrong - it's the other way around. People who make movies want to make things look a certain way, and shooting 24p is one of the (dozens / hundreds of) ways they accomplish this. Genuinely, I'm not sure what point you're trying to make. If it was simply that movies were in 24p but were trying to be as real as possible in every other way, then yes, you could argue that it was a legacy choice, but there is practically no aspect of movie-making that is trying to imitate reality.

I think you're exactly right. If these movies were 'realistic' then they would look like small reality shows. The evolution of film-making started with recording theatre productions. There were no cuts; it was like you were sitting in the crowd watching a play. They didn't think they could edit, because real life doesn't suddenly jump to a new location. When they worked out that cutting was fine and the human mind didn't get disoriented by it, they still thought the mind wouldn't understand jumps in time, so they had continuity editing: if someone entered the room, you'd see the whole sequence of them opening the door, walking through it, closing it behind them, walking across the room, and only then starting to speak to the person inside. Turns out we're completely fine with cutting most of that out - one theory is that we accept time jumps because we experience them in dreams. The history of film-making is a journey from one-shot films that made you feel like you were at a theatre production, gradually evolving into Bayhem, Memento, Interstellar, etc. If anyone wanted realism, then they've been walking in the wrong direction for an entire century now. Either the entire history of cinema was made by people who are completely incompetent, or they're aiming at something different than you are.

-

Everyone is complaining about their EVF and LCD screens, but she's not!

-

The whole idea of movie stars when I was growing up was that they were "larger than life". I think once you start looking, you'll find that practically nothing about cinema is even remotely realistic / real-looking. Look at the visual design / colour grading for a start.... I mean, these projects all had the budget, had highly skilled people, and had every opportunity to make things look lifelike, but none of them look remotely like reality. Even the camera angles, compositions and focal lengths - none of these make me feel like I'm looking at reality or that "I am there". Bottom line: studios want to make money, creative people want to make "art", and neither goal is served by things looking like reality. I'm in reality every moment of every day, so why would I want movies to look the same way? It's called "escapism", not "teleport me to somewhere else that also looks like real-life... ism".

-

He does. I applaud Markus for the work he does and the passion he brings, trying to beat back the horde of YouTube-Bros who promote camera worship, and the followers who turn this into the idea of camera specs above all else. But there's a progression that occurs:

At first, people see great work and the cool tools and assume that the tools make the great work - TOOLS ARE EVERYTHING

Then, people get some good tools and the work doesn't magically get better. They are disillusioned - TOOLS DON'T MATTER

Then they develop their skills, hone their craft, and gradually understand that both matter, and that the picture is a nuanced one - TOOLS DON'T MATTER (BUT STILL DO)

This is the same for specs - they are everything, then they are nothing, then they matter a bit but aren't everything. By the time you get to the third phase, you start to see a few things:

Some things matter a LOT, but only in some situations, and don't matter at all in others.

Some things matter a bit, in most situations.

Some things matter a lot to some people, but less to others, depending on their taste.

Film-making is an enormously subtle art. Try replicating a particular look from a specific film/show/scene and you'll find that getting the major things right will get you part of the way, but to close the gap you will need to work on dozens of things, hundreds maybe.

The purpose of any finished work is to communicate something to the audience. For this, the aesthetic always matters. Even if the content is purely to communicate information, if you shoot a college-looking bro delivering the lines while sitting on a couch drinking a brew, filmed on a phone by someone lying on the floor, well, it's not going to seem like reliable or trustworthy information, unless it's about how many beers were had at the party last night (and even then...). The same exact words delivered by someone in a suit sitting at a desk, shot with a longer lens on a tripod with nice lighting, will usually elicit a very different response (sometimes one of trust, and sometimes one of mistrust, but different all the same). A person wearing glasses and a lab coat standing in front of science-ish stuff in a lab is different again. Humans are emotional animals, and we feel first and think second. There isn't any form of video content that isn't affected by the aesthetic choices made in producing it. Some might be so small that they don't seem relevant, but they'll still be there in the mix.

-

Even if budget were no object, sadly it's the GX85 for me; it's a very compromised option in almost every way, but it's the right balance of trade-offs. In a way I'm lucky that my best camera isn't ridiculously expensive, but it also means there is nothing I can do to work towards a better setup.