Posts: 7,834


Everything posted by kye

-

CineD's lab test of the iPhone 15 Pro is out... https://www.cined.com/iphone-15-pro-lab-test-rolling-shutter-dynamic-range-and-exposure-latitude/

Highlights:

* Rolling shutter is very low (4.7-5ms for the rear cameras and 9.3ms for the front-facing camera).
* DR varies across ISOs due to heavy NR and processing (testing was done in 4K ProRes HQ Apple Log), and they had to adapt their testing methodology for a range of reasons.
* DR measurements are in the 11-14 stop range depending on how you measure them, and some of those methods assume no NR, but this has tonnes of it, so....
* In terms of exposure latitude, it has 5-6 stops depending on ISO. In general latitude tracks DR, so comparing with cameras that have 12 stops of DR and 8 stops of latitude suggests the iPhone has roughly 9-10 stops of DR.
* In the end, it's a smartphone with a tiny little sensor.

The article is a good read and there's lots of nuance there.
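The latitude-to-DR comparison is simple arithmetic: take the gap between DR and latitude on a reference camera (12 - 8 = 4 stops) and add it to the iPhone's measured latitude. A minimal Python sketch, assuming that gap carries over unchanged between cameras (a rough rule of thumb, not CineD's methodology); the function name and default values are hypothetical:

```python
def estimate_dr_from_latitude(latitude_stops, ref_dr=12.0, ref_latitude=8.0):
    """Estimate dynamic range (stops) from exposure latitude (stops).

    Assumes the DR-minus-latitude gap of a reference camera
    (12 stops DR, 8 stops latitude by default) also applies to the
    camera being estimated. A heuristic, not a measurement.
    """
    gap = ref_dr - ref_latitude  # stops of DR that don't show up as latitude
    return latitude_stops + gap


for latitude in (5, 6):
    print(f"{latitude} stops latitude -> ~{estimate_dr_from_latitude(latitude):g} stops DR")
```

With 5-6 stops of latitude this gives the ~9-10 stop figure; a reference camera with a different gap would shift the estimate accordingly.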

-

But I hold you personally accountable for the entire content of the internet! Well, except for when @BTM_Pix makes funny jokes or posts ironic pictures of spam - that's all him.

-

Interesting video. First things first - could that guy be any cooler? I'm pretty sure there isn't a single element in that video that isn't an automatic 10/10 for hipster chic. Wow. Talk about people whose whole life is their own art project!

Secondly, his commentary is all over the place. I see the differences he's talking about in the footage, plus a great many more that he probably sees but didn't mention - there are hue shifts and gamma shifts and subtractive colour operations and all sorts of wonderful things that differ between the two cameras. However, he points to differences and says "see the difference with 10-bit?", whereas I think the differences are likely to be a mix of:

* FX6 sensor read-out bit-depth
* FX6 image processing
* Sony RAW-to-LOG profile conversion (gamma, gamut, and bit-depth)
* FX6 compression

I built a DCTL plugin for Resolve that reduces the bit-depth of the image, and to my surprise, you can reduce rec 709 footage to 6 bits (and some shots to 5 bits!) before there are visible changes. I'm not saying there aren't any differences between 16-bit and 10-bit, because there are (however subtle they might be), but the things he was pointing at were definitely NOT all bit-depth related, and I'd suggest that most of them were actually processing/compression related.

If you want to understand the differences caused by one parameter, you can't change dozens of them at once and then declare that all the differences are due to the one variable you want to talk about. You could take his whole video, replace the phrase "10-bit" with "compression", and it would make just as much sense with exactly the same examples. So yeah, it's a great video showing an FX6 LOG vs Komodo X RAW comparison, but it isn't an isolated 10-bit vs 16-bit comparison.
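The DCTL itself isn't shown in the post, but the core of any bit-depth reducer is uniform quantization: scale a normalised 0-1 value to 2^bits - 1 steps, round, and scale back. A minimal Python sketch of that idea (not the actual plugin, and in Python rather than DCTL; the function name is hypothetical), working on a list of normalised code values:

```python
def reduce_bit_depth(values, bits):
    """Quantize normalised code values (0.0-1.0) to the given bit depth.

    Each value is clipped to 0-1, snapped to the nearest of 2**bits
    evenly spaced levels, and returned as a float in 0-1 again.
    """
    max_code = (1 << bits) - 1  # e.g. 63 for 6-bit
    out = []
    for v in values:
        v = min(max(v, 0.0), 1.0)
        out.append(round(v * max_code) / max_code)
    return out


# A smooth 1000-step ramp collapses to 64 distinct levels at 6 bits.
ramp = [i / 999 for i in range(1000)]
six_bit = reduce_bit_depth(ramp, 6)
print(len(set(six_bit)))  # 64
```

Applied per channel to real footage, the same operation shows how many levels a given shot can lose before banding becomes visible.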

-

In Cullen Kelly's most recent livestream he mentioned that he's working on a guide for Resolve around the "why don't my exports look the same on YouTube as they do in Resolve" question. He said it's a recurring problem because it's actually about 20 problems, and you need to make sure every step is correct. I suspect most of these issues apply to any NLE, not just Resolve. It might actually be more difficult with other NLEs, because serious Resolve users will buy Blackmagic hardware to get a clean video-out signal that bypasses all the OS colour profiles etc, whereas I don't know how available/popular such a setup is with other NLEs, so Resolve users can likely sidestep some of the issues that affect other packages.

-

Would he have any say in that? My guess would be a change of management / ownership. Does having a YT channel with a backlog of videos require any work at the back-end? If it was generating spam or unhelpful customer enquiries or something, maybe they just pulled it to save on the management effort? Or maybe it was a PR decision and the content was just dated? Things move fast in consumer electronics after all...

-

Thanks for the promotion to colourist @markr041! It seems like we need to get a handle on the relative strengths and weaknesses across the options. Certainly, lower DR in a camera used to record outdoors in uncontrolled conditions, with a wide-angle lens likely to have the sun in shot, is a long way from the ideal situation... and just for your information, I'm currently revising my setup to add a second camera alongside my GX85 because I want something with more DR for exactly these reasons. Realistically it's going to depend on what you're shooting and how that aligns with the relative strengths/weaknesses of the different models. So far we have what... iPhone 15, GoPro 12, Insta360 Ace (Pro), DJI Osmo Pocket 3, and DJI Osmo Action. Are there others in this fixed-lens / super-compact / small-sensor market segment?

-

It sounds like a very "business" decision to me. That's the intellectual property of <holding company name>, so <no-one else can have it / no-one can have it for free / we don't want to risk being sued during the transfer process / etc>. If this was a company permanently closing a storefront / restroom / whatever during a business reshuffle then no-one would bat an eyelid, but when there's any element of common good, people notice. Unfortunately, the idea of a sharing economy is basically incompatible with a zero-sum-game business mindset, which is where the confusion / reactions start. There's a fascinating relationship between the social contract and the legal frameworks that govern how businesses deal with the public, and people often react very differently from how business people expect them to.

-

I accidentally skipped winter one year, with a couple of overseas trips to the northern hemisphere happening to fall over the coldest part of the year. I didn't realise it until the following winter, when I was like "Gee, it's getting cold/wet now... it hasn't been like this since.... hang on a second!!" If I won lotto, I'd do that every year 🙂

-

Indeed! Good luck with this, though I'm not optimistic.

-

200Mbps should be fine. The only time more bitrate would help is when there are huge amounts of random movement, so scenes with water / snow falling / rain / trees blowing in the wind / etc. Other things to try are doing a factory reset on the camera, and it might be a good time to update the firmware if you're not on the latest version. Also, check the firmware for each lens you use - lenses have firmware too!

-

Perhaps you were thinking of @Mako Sports?

-

Obviously the decision between these would be heavily dependent on the situation, but I'd choose the smallest one for the millions of reasons I've already shared. When I look at the choices of S5IIX, Sony, GX85, Nikon, and Fuji, I see the choice as being around:

* Size and weight - for both your own ergonomics and how stealthy you wish to be
* Dynamic Range - some of these setups have higher DR than others
* Stabilisation - some of these setups will have Dual IS, but I'm assuming some won't

Obviously there are loads of other things going on too, but these are the ones that matter to me. The ones that don't matter to me are:

* DoF - almost all these lenses are capable of the levels of background defocus used in cinema, which is enough for me
* Codec / resolution - the GX85's 100Mbps 4K IPB h264 codec edits fine on my Intel MBP and is sufficient on a 1080p timeline (if it's good enough for Hollywood it's good enough for me), so I don't need extra resolution or ALL-I to edit with
* Colour science - with colour management, any of these cameras can be coloured just as easily as any other, and [production design] > [colour grading ability] > [camera colour science]

-

This is a great point - if you don't need the portability then a Mac Mini would be spectacular value. They're not powerful enough for serious colourists, but I think those earlier in their careers use them, and I've heard that large post-houses often use them for background / batch tasks like preparing footage, rendering projects etc. The Mac Studios with the Ultra processors are apparently stunning performers, but more expensive, obviously.

-

In this context IDT and CST are the same thing. I think IDT is an acronym from ACES (Input Device Transform), which is used to transform from the input device's colour space to ACES, whereas a CST is a Colour Space Transform, which can go from any space to any other space, and you might use them in various places in the node graph for various purposes. I've seen colourists put a CST as their first node, transforming from camera to working colour space, but label it IDT, and label the output one ODT, so it's more of a terminology thing at this point. I've heard colourists say you can tell that people who use IDT and ODT learned colour management in a certain time period or on certain equipment, so it sort-of dates you. The other reason you might use the IDT / ODT acronyms is that Resolve only displays very short node labels (if they're too long it chops the end off), so IDT and ODT are short and useful node names.
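To make "from any space to any other space" a bit more concrete: for scene-linear data, the gamut part of a CST is a 3x3 matrix multiply. A full CST in Resolve also decodes and re-encodes transfer functions and may apply tone/gamut mapping, which this sketch skips. A minimal Python sketch, assuming linear Rec.709/sRGB primaries with a D65 white going to CIE XYZ; the matrix is the standard published one, while the function name is hypothetical:

```python
# Standard linear Rec.709 / sRGB (D65) -> CIE XYZ matrix.
REC709_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]


def apply_matrix(matrix, rgb):
    """Apply a 3x3 colour matrix to one linear RGB triplet."""
    return [sum(m * c for m, c in zip(row, rgb)) for row in matrix]


# Rec.709 white should land on the D65 white point with Y = 1.
white_xyz = apply_matrix(REC709_TO_XYZ, [1.0, 1.0, 1.0])
print([round(v, 4) for v in white_xyz])  # [0.9505, 1.0, 1.0888]
```

Going on from XYZ to another gamut (a camera space or a working space) would use that space's inverse matrix, which is one common way a transform can connect two arbitrary spaces.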

-

I don't have V-LOG on the GH5 - I just use the HLG profile and a CST with Rec.2100 HLG as the input, which works fine 🙂
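For reference, the transfer-function half of that CST is the HLG curve defined in ITU-R BT.2100. A minimal Python sketch of the HLG OETF and its inverse (the inverse being the step that takes the signal back to scene-linear light), assuming the camera's HLG profile follows BT.2100 exactly, which a given camera's implementation may not; the function names are hypothetical:

```python
import math

# HLG constants from ITU-R BT.2100 (b and c are derived from a).
A = 0.17883277
B = 1.0 - 4.0 * A                 # 0.28466892
C = 0.5 - A * math.log(4.0 * A)   # ~0.55991073


def hlg_oetf(e):
    """Scene-linear light e (0-1) -> HLG signal value (0-1)."""
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)  # square-root segment for the darker part
    return A * math.log(12.0 * e - B) + C  # log segment for highlights


def hlg_inverse_oetf(signal):
    """HLG signal value (0-1) -> scene-linear light (0-1)."""
    if signal <= 0.5:
        return signal * signal / 3.0
    return (math.exp((signal - C) / A) + B) / 12.0


print(round(hlg_inverse_oetf(1.0), 4))  # 1.0
```

The two segments meet at a signal value of 0.5 (scene-linear 1/12), which is why the inverse switches branches there.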

-

I've been paying mild attention to the relative performance since the M1 came out, and it's really difficult to get a sense of the economics vs performance for a couple of reasons:

* Unless you have infinite money, getting a faster CPU will mean not being able to upgrade the RAM, which is shared between the CPU and GPU on Apple silicon, and depending on your circumstances the RAM might be more of a bottleneck than the processing
* The price of older Apple silicon products hasn't really dropped significantly, so although the M1 and M2 chips are great performers you're still going to pay decently for them

I've seen threads of colourists talking about upgrades and what to get, and there are lots of discussions about which trade-offs should be made and which shouldn't. Professional colourists are perhaps at the cutting edge of this stuff because they have to be able to grade any footage at full delivery resolution and in real-time with the client sitting there, so there's no possibility of using proxies or performance settings etc.

-

It's an interesting question, but I think a clue is evident in the question itself. If we rephrase it as "Why don't they support 24/30p when it's a trivial technical change?" then it becomes obvious it's not a technical decision, and in a capitalist consumerist marketplace, if something isn't technical then it's probably economic.....

Also, if you just record in 23.976p instead of 24p, put the clips onto a 24p timeline, and configure your NLE to re-time by selecting the nearest frame, then it will be frame-perfect for any clips under ~20s long.

If being frame/subframe perfect worries you, then I'd suggest you never consider the fact that almost all computer displays run at 60Hz or some other refresh rate that isn't a multiple of 24, so when your video is viewed by almost all viewers it'll be going through something like a 3:2 pull-down, so most frames will be off by a significant percentage and motion will be sputtering all over the place.

I've been thinking about this lately, and there are some very interesting things going on. For example, if you record 30p, put it on a 24p timeline, and then display that 24p timeline on a 60Hz display, almost every frame from the original 30p will be time-perfect with zero time-shift, but there is a repeating pattern of the odd repeated frame, so the feel will still be that of 30p rather than 24p. If you put 24p on a 24p timeline and view that on a 60Hz display, almost all the frames will be off by some significant percentage, but it will still feel like 24p. I've come to really dislike the feel of 30p - it feels like 60p but only about half as 'slippery', so this stuff matters to me, but might not be visible to others.
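Both timing points can be checked with a little arithmetic. 23.976p is exactly 24000/1001 fps, so a nearest-frame retime onto a 24p timeline maps timeline frame n to source frame round(n × 1000/1001), which stays equal to n until the accumulated drift passes half a frame. Separately, 24p content on a 60Hz display gets its frames held for 3, 2, 3, 2... refreshes. A minimal Python sketch, assuming an NLE that retimes by picking the source frame nearest to each timeline frame's timestamp (real NLEs may round differently); the function names are hypothetical:

```python
from fractions import Fraction
import math

SOURCE_FPS = Fraction(24000, 1001)  # "23.976p"
TIMELINE_FPS = Fraction(24)


def first_retime_glitch(source_fps=SOURCE_FPS, timeline_fps=TIMELINE_FPS):
    """Return the first timeline frame whose nearest source frame != its index.

    Timeline frame n sits at n / timeline_fps seconds; the nearest source
    frame is round(n * source_fps / timeline_fps).
    """
    n = 0
    while round(n * source_fps / timeline_fps) == n:
        n += 1
    return n


def refresh_cadence(content_fps, refresh_hz, frames):
    """How many display refreshes each of the first `frames` content frames gets.

    Refresh r shows content frame floor(r * content_fps / refresh_hz).
    """
    counts = [0] * frames
    r = 0
    while True:
        frame = math.floor(Fraction(r * content_fps, refresh_hz))
        if frame >= frames:
            return counts
        counts[frame] += 1
        r += 1


glitch = first_retime_glitch()
print(glitch, float(glitch / TIMELINE_FPS))  # 501 20.875
print(refresh_cadence(24, 60, 6))            # [3, 2, 3, 2, 3, 2]
```

Under these assumptions the first repeated source frame appears at timeline frame 501, about 20.9s in, which lines up with the ~20s figure, and the 3,2 cadence is the pull-down pattern that shifts most 24p frames away from their ideal display times.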

-

I'd imagine it is partly to do with the analog circuitry and ADCs, but don't forget that just because modern ICs are very capable doesn't mean the manufacturer will sell you a product for a song. Also, digital processing is another stage where the high-end products may well have extra capabilities.

-

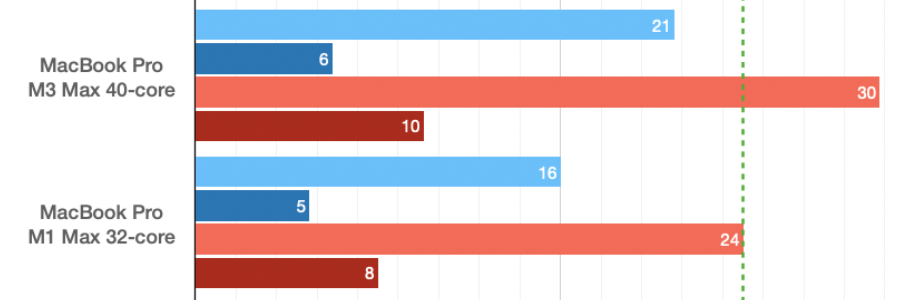

On the colourist forums there's a sample test project, and users submit their specs and FPS on the various tests. The image above shows the M1 and M3 results - the numbers are FPS, and each colour is the same test on both chips. The jump up from the Intel Macs is enormous - mine gets about 4FPS on the light blue test and about 3FPS on the lighter-orange test - but once you're on Apple silicon there seems to be only incremental improvement. Those tests are pretty brutal, by the way - the light blue test is a UHD ProRes file with 18 blur nodes, the dark blue is 66 blur nodes, and the orange ones are many nodes of temporal noise reduction! The differences between the Pro, Max, and Ultra chipsets are much more significant, though. I found that the Resolve results correlated pretty well with the Metal tests in Geekbench: https://browser.geekbench.com/metal-benchmarks

-

Preposterous! It would never work!!

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

I was talking about the location of the hot-shoe, which is where you'd mount the mic on this hypothetical small vlogging camera. There's no point having a flip-up screen for vlogging if the mic will obscure it. That's why cameras with flip-up screens like the G7X either don't have a hot-shoe and require external accessories, or they have a hot-shoe and a flip-out screen rather than a tilt-up one. ...and before you start mixing up vlogging with a pocket camera and rigging out a cinema camera: by the time you have to put a cage on it, you might as well use a bigger camera that already has a mic port, interchangeable lenses, 4K120p, 15 stops of DR, and all the other crap that the independent-Sony-marketing-affiliate camera reviewers all use.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

It probably wouldn't be too hard to adjust the screen tilt mechanism to make it flip up and become a selfie screen too, which would make it infinitely more attractive to that market segment. Of course, the clash between an on-camera mic and a tilt-up selfie screen is a difficult one to reconcile, as many/most vloggers view both as requirements. They could take notes from other manufacturers and sell a separate mic that uses the hot-shoe (and therefore camera power) and also doesn't get in the way of the flip-up screen - that would be awesome! I did a bit of googling on that Sony HX99 and it looks like an interesting little camera. Once you've gotten familiar with the ZV-1 it would be interesting to see a comparison between them. Of course, we can anticipate that low-light will be a weaker area for it.

-

BTS: This looks good to me, but I think it could have been shot with almost anything..

-

Absolutely.. the thing is, this has probably been the case for many years now. It certainly has been the case that at least some of the affordable cinema cameras would be indistinguishable to audiences, the OG BMPCC / BMMCC / 5D + ML RAW for example. To me the milestone of having at least one affordable cinema camera be good enough is a much more significant event than "every" cinema camera being of that standard - who gives a crap how long it takes for the worst models to catch up?

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

My condolences about your mother. We're here for you - especially if it involves fighting over irrelevant technical details!

I've said it previously, but I actually don't have a huge list of wants for an updated GX-line camera, and the GX85 is now my daily driver, as it were. I've heard people ask for a range of improvements, but they all seem quite modest actually: things like PDAF, 10-bit, LOG, full-sensor readout, etc. These may well only require a sensor upgrade and a processor upgrade, perhaps to existing chipsets that aren't even the latest generation, which may not require that much additional power / cooling / space. At the moment the GX85 already has:

* IBIS / Dual-IS
* Tilt screen / touch screen
* EVF
* Half-decent codec
* etc etc

Plus, it's right at the limit of being too small from an ergonomic perspective. The grip design on it is really quite effective and I enjoy using it, but I probably wouldn't want it to be any smaller. A slightly larger grip would actually be an ergonomic advantage, wouldn't make the camera much bigger in practice because it would still be smaller than most lenses, and would allow for a larger battery. With proper colour management it's actually a very malleable image too. I did an exposure test with it some time ago, exposing under and over and bringing the shots back to normal in post, and while the DR wasn't great, the image was very workable.

The endless pursuit of megapixels has proven that a feature can be designed and marketed and people will go nuts over it, despite it being of no practical use to most consumers and requiring an upgrade of all the associated equipment in turn. I think this shows that even if you did make a perfect camera for the people who shoot, the people who like progress for its own sake will always think that the perfect camera is the next one.

Please no!!!!!