Everything posted by kye

-

I used to edit projects on the train to/from work using a base-model Intel Macbook Air and an external SSD, with proxies rendered to 720p Prores Proxy format. This was in Resolve 12.5, so a long time ago. They edited like butter. Rendering them was a pain, and swapping between the proxies and the full-resolution files was complicated and took a bit of work to get sorted out, but it made editing possible on very modest equipment. Now with Apple Silicon, you can edit almost anything without needing proxies.

-

Some initial testing using the default Apple Camera app.

I did some bitrate stress tests and got bitrates between 550-700Mbps, so it looks like the default camera app is just using Prores HQ. The bitrates for the stress test and for static scenes were all within this range.

Latitude tests using the native Apple camera app, the 1x camera and 4K Prores Log mode. To test, I started recording, then clicked and held in the middle of the image, then dragged all the way down to the bottom to progressively under-expose. Then in post I found the frames that aligned nicely to -1, -2, and -3 stops of exposure.

Reference image - this is how the app decided to expose and WB.

These are the fully corrected images (-1 stops, -2 stops, and -3 stops):

These are the images without any corrections so you can see the relative exposures:

and these are the images that have had exposure corrected but not WB corrected:

My impressions were that these graded beautifully in post. I've graded a variety of codecs (everything from 8-bit rec709 footage to "HLG" footage to GH7 V-Log to downloaded files from RED / ARRI / Sony) and these felt completely neutral and responded just how you'd expect, without any colour shifts or strange tints or anything else.

I've seen enough tests from iPhone 15 and 16 to know that Apple are doing all kinds of crazy HDR shenanigans, but realistically this footage seems like it's really workable. If I can pull images back from -3 stops to be basically identical, then whatever colour grading I'll have to do on a semi-reasonably exposed image will be just fine.
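For anyone wanting to see what "pulling back from -3 stops" means numerically, here is a minimal sketch. It assumes the values are scene-linear (i.e. the log footage has already been converted to linear light); it does not model the Apple Log curve itself, and the function names are made up for illustration.

```python
def stops_to_gain(stops: float) -> float:
    """Each stop is a doubling (or halving) of linear light."""
    return 2.0 ** stops


def correct_exposure(linear_value: float, stops_under: float) -> float:
    """Scale a scene-linear value back up by the number of stops it was under-exposed."""
    return linear_value * stops_to_gain(stops_under)


# Hypothetical example: 18% grey shot 3 stops under lands at 0.18 / 8 = 0.0225.
under_exposed_grey = 0.18 / stops_to_gain(3)
recovered_grey = correct_exposure(under_exposed_grey, 3)
print(under_exposed_grey, recovered_grey)
```

In linear light the correction is a plain multiplication, so any differences between the recovered frames and the reference come from noise, clipping and the camera's processing rather than from the maths.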

-

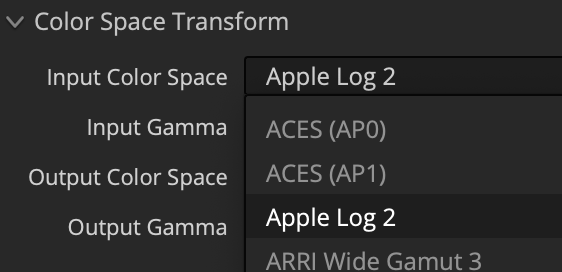

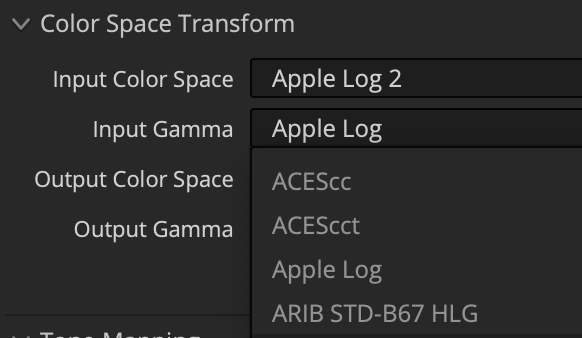

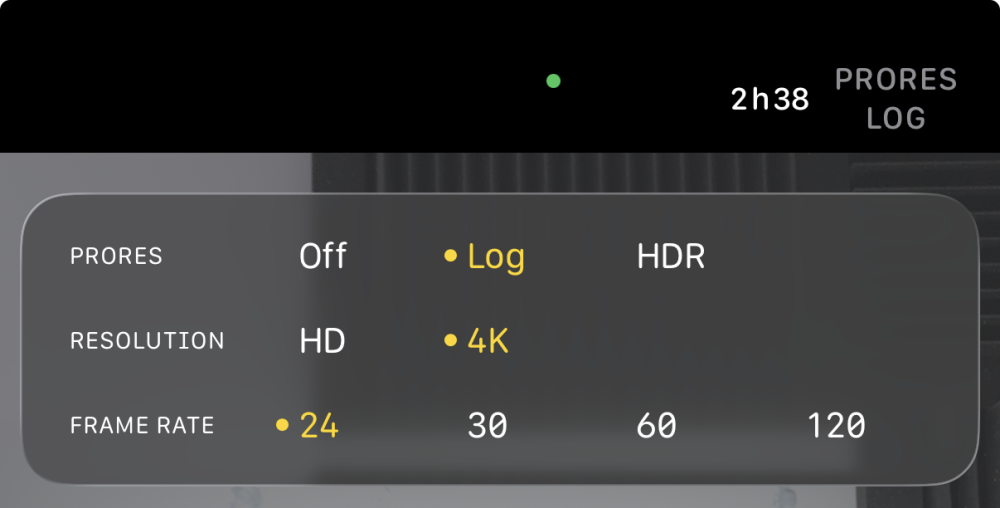

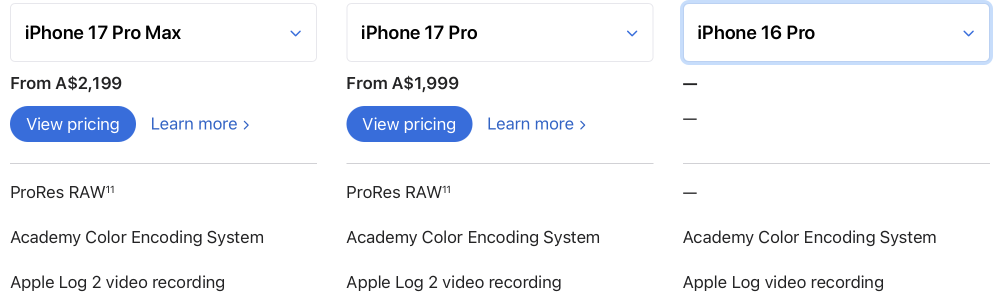

Well, that was fun. New phone has new colour space, which requires updating Resolve, which wouldn't install and required me to update macOS. Recording and then viewing the first clip took a lot longer than anticipated.

Here's some initial observations as I find my bearings.

Resolve 20.2 only has Apple Log 2 in the Colour Space, and only has Apple Log in the Gamma. If we assume that BM and Apple both know what they're doing then that means that Apple Log 2 gets a new colour space but keeps the same gamma curve, and iPhone 15 and 16 users get an upgrade to Apple Log 2.

In the default camera app you only get Log and HDR (whatever that is) in Prores mode. Not entirely sure what Prores flavour it is, but I'd guess HQ, because the fine print in the settings to enable it says 1 minute of 4K 30p is 6GB, which is 800Mbps (and would be 640Mbps for 24p). That is slightly lower than the 700 typically stated, but is definitely higher than the 471Mbps typically stated for 4K 24p Prores 422.

If you select Prores and Log, the image displayed is the log image.. no display conversion for you!

BM camera app gives tonnes more options. I'll have to investigate that further, but last time I looked it seemed to only offer manual shooting, rather than the auto-everything that I need when shooting fast.
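The bitrate arithmetic above can be checked with a short sketch. It assumes decimal units (1 GB = 8000 megabits) and that bitrate scales linearly with frame rate, which is only an approximation; the function names are illustrative.

```python
def mbps_from_gb_per_minute(gb_per_minute: float) -> float:
    """Convert a storage rate in decimal GB per minute to megabits per second."""
    megabits_per_minute = gb_per_minute * 8 * 1000  # 1 GB = 8000 megabits
    return megabits_per_minute / 60


def scale_for_frame_rate(mbps: float, from_fps: float, to_fps: float) -> float:
    """Assume the data rate is proportional to the number of frames per second."""
    return mbps * to_fps / from_fps


rate_30p = mbps_from_gb_per_minute(6.0)             # the "6GB per minute" fine print
rate_24p = scale_for_frame_rate(rate_30p, 30, 24)   # same per-frame size at 24p
print(rate_30p, rate_24p)
```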

-

Interesting and thanks for sharing. I hadn't heard the RAW was external only, I guess every new camera has its 'gotchas' in terms of combinations of features that do/don't work together. Mine arrived yesterday (Friday), which was a nice surprise as when I preordered it they predicted it wouldn't be delivered until Monday. I got all the notifications etc, so it didn't just rock up with no warning though 🙂 Odd that the sensor stabilisation isn't supported in RAW. Is it IBIS or is it EIS? If it's IBIS then I have no idea why it wouldn't be supported?

-

100%.. definitely what's happening. I heard it was a flop. One reason I didn't mind having a smaller phone is that I don't really do a lot of stuff on it, as I prefer using my laptop. If I used my phone as my main device I'd be wanting the largest thing I could practically carry around. I'll be curious to see how it goes. On my last trip I bought a Pop Socket, which I found to work really well actually. This is what it looks like if you're not familiar, and means you can hold it really securely, and it folds almost flat. I put it towards the bottom and on one side so I could open it, pull the phone out of my pocket, open the camera app from the Home Screen, take some video, lock the phone and put it back in my pocket again, all with one hand. The flaw was that because the socket wasn't in the middle of the phone, the weight of the phone would rotate it when walking. I got another one for the 17 and I'll put it at the centre of mass of the phone, so when I walk with it there's no rotation induced from unbalanced motion. I don't know what that means for how usable it will be one-handed. I guess sacrifices have to be made to go from h265 HDR to Prores with Apple Log.

-

I also really like the Mini size and form factor, and TBH it frustrates me quite significantly that they don't put the good cameras in the smaller models. It's like forcing you to buy a truck just to get power-steering. I also agree that the square selfie-camera is a cool addition. I'll be curious to see how wide it is, my recollection of previous versions was that they were a bit long of a focal length for what I wanted to shoot (me in a cool location) so I'd normally just use the wide-angle camera and turn it around and shoot blind.. hardly ideal. The holy grail for these cameras is to look the same as a nice camera with a stopped down aperture. I'd suggest that those iPhone 15 Apple Log vs Alexa videos showed that it was getting pretty close, although now with RAW it will be interesting to see how close it really is. When the first Android RAW samples came out there was something about the footage that still looked like a phone but at the time I couldn't work out what it was. There will be other things that might give the game away, but these will probably be how the camera is used rather than some innate property of the image itself. I'll be putting mine through its paces once I get back from my trip and upgrade to Resolve 20.2 to get the RAW support. I might have to do some side-by-side comparisons too.

-

Just be sure that the apps you will use will all still fit on the internal drive. Apps are getting more and more "featured" and are more and more bloated with things, or they do things like cache data and store it locally for enhanced performance. You might also benefit from some wiggle room, depending on how you're going to use it and how long you'll keep it. My iPhone 12 Mini from 2020 is still going strong and if I didn't use the cameras so much I wouldn't feel the need to upgrade, so you might end up keeping it for quite some time. Yeah, I've seen tests of this configuration and they looked pretty good. I suspect I'll end up just using the default camera app for speed of use, and I don't think it allows this combination (Log and h265), so I'll have to see what it allows me to do. Ideally I'd go for 4K and Prores 422, which is around 500Mbps, so large but still manageable. I think there are a few very specific situations where you'd want to get the dock and use a phone rather than just use a proper camera with a (much) larger sensor, but not that many. I am in chats with a lot of professional cinematographers who shoot a variety of client projects, and the consensus seems to be that when the client wants something to look like it's been shot on a phone, it's far far easier to just shoot it on a phone rather than try and get that specific look in post. Of course, while shooting this "look" on a professional set you'd still want all the functionality like remote director's monitors and timecode sync for audio etc etc, so it's a very niche product, but if you're doing that then it can save an incredible amount of hassle. Besides, now you can get your phone, add the external box, get a proper battery setup, and add a HUGE monitor.. no more being forced to shoot video on your iPad!!

-

Nice. I'm getting the Pro one to replace my 12 Mini, so it should be quite the upgrade. When I'm on trips I use the big camera for shooting the surroundings and environment and my phone for quickly shooting the people I know as we're out and about doing things. Ironically that means that it's my most important camera and the other stuff is my secondary setup. I'll be curious to see what the lower-bitrate files are like, as shooting at full res gives very little recording time.

-

Definitely agree that lots of operations are better done in colour spaces other than the ones supported by Resolve natively. Are you using OKLab for your Lab conversion? I plan on integrating that into my tool once I get back to developing it. I'd keep all the secondary adjustments like vignetting etc as power-windows in Resolve, as getting a single vignette slider that looks good across many/all scenarios probably isn't possible. I haven't played with spatial adjustments in DCTLs yet, so I'm not sure what the performance hit is compared to OpenFX tools. I'll probably investigate this at some point though, as there are a few operations I'd do that might benefit from being integrated into a single DCTL. I played with the colour slice tool and I think it is actually very disappointing, as I found it broke images incredibly quickly while also being too broad for lots of adjustments I'd like to make. I've had much more success in doing more targeted adjustments in Lab that didn't go anywhere near breaking the image. I find Lab is a far cleaner space to work in for lots of operations, as the things you might want to do that are colour-slice-esque are often far simpler and far more universal. There are tonnes of things you can do in Lab that target certain ranges but are applied globally, so won't break the image, like how doing (most) adjustments with the Channel Mixer can't break the image.
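As a rough illustration of a "global" Lab operation, here is a minimal sketch of the forward OKLab conversion (using the matrices published by Björn Ottosson) followed by a hue rotation. It is Python rather than DCTL, it assumes linear sRGB input (footage in a Resolve working space such as DWG would need its own conversion first), and `rotate_hue` is a hypothetical helper, not anything from an existing tool.

```python
import math


def _cbrt(x: float) -> float:
    """Cube root that also handles negative inputs."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


def linear_srgb_to_oklab(r: float, g: float, b: float):
    """Convert linear sRGB to OKLab (L, a, b) using Ottosson's published matrices."""
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b
    l_, m_, s_ = _cbrt(l), _cbrt(m), _cbrt(s)
    lab_l = 0.2104542553 * l_ + 0.7936177850 * m_ - 0.0040720468 * s_
    lab_a = 1.9779984951 * l_ - 2.4285922050 * m_ + 0.4505937099 * s_
    lab_b = 0.0259040371 * l_ + 0.7827717662 * m_ - 0.8086757660 * s_
    return lab_l, lab_a, lab_b


def rotate_hue(lab, degrees: float):
    """Rotate the (a, b) plane; lightness and chroma are left untouched."""
    lab_l, lab_a, lab_b = lab
    t = math.radians(degrees)
    return (lab_l,
            lab_a * math.cos(t) - lab_b * math.sin(t),
            lab_a * math.sin(t) + lab_b * math.cos(t))


skin_like = linear_srgb_to_oklab(0.8, 0.4, 0.2)   # arbitrary warm test colour
shifted = rotate_hue(skin_like, 10.0)
print(skin_like, shifted)
```

Because the rotation is a smooth function applied to every pixel the same way, neighbouring colours stay neighbours, which is the sense in which this kind of adjustment can't break the image.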

-

I've been working on a similar one, but in L*a*b colour space and offering a lot more advanced tools to quickly do things I do all the time. One advantage of doing things in a DCTL rather than using the GUI controls is that when grading in Resolve while travelling etc, where you just have a small monitor and no control surfaces etc, you can make the viewer larger (IIRC using Shift-F) and it essentially gives you the viewer and the DCTL control panel on the right-hand-side of the screen, so it's a really efficient layout for grading using only the keyboard/mouse. @D Verco if you're looking for ideas on how to expand the tool I'd suggest thinking about it for use with power-windows as well as over the whole image. For example, my standard node graph has about 6 nodes with power-windows already defined that are ready to just enable if I want them. I have ones for a vignette, gradients for sky and left and right, and four large soft power windows for people where I will typically do things like brighten / add contrast / sharpen, and do basic skin operations like hue rotations / hue compressions / etc. Most of the operations I'd do with those windows are covered by your tool, but not all of them, and if a tool can be used for a range of tasks other than just basic image processing then all the better. If you're taking a leaf from how Lightroom works, one of the most powerful features I used to use all the time (and wedding photogs would absolutely swear by) was the preset brushes. I had brushes for skin smoothing, skin brightening, under-eye, redness, etc, and of course they all used the standard Lightroom controls, but in specific combinations they really worked well. Something to think about. I'm all for people being able to charge money for their efforts, but in today's climate, the more value you can provide the easier it will be to get people to part with their (often hard-earned) money.

-

I've been musing how to proceed next, but figured out I have two challenges:
- work out what lens characteristics I can emulate in post vs those that can't be emulated easily / practically
- work out what lens characteristics I actually care about

The reason the first one is important is that there's no point in testing how much vignetting there is between various lenses when I can simply apply a power-window or plugin in post and just dial in what I want. This will then leave the lens testing to compare the things I can't do in post, like shallow DOF etc. I figure there's no point choosing a lens with strengths I can emulate in post over a lens with strengths I can't. In my setup the real question is why wouldn't I just use the Voigtlander and Sirui combination, because it is relatively sharp anamorphic with reasonably shallow DOF, and then just tastefully degrade it in post.

So, naturally, I asked ChatGPT which things were which..

====

Characteristics You Can Emulate in Post (2D effects). These are primarily image-level artifacts that don’t depend on actual 3D geometry of the scene:
- Vignetting (darkening at frame edges) – trivial to emulate.
- Chromatic aberration (color fringing at edges, longitudinal CA is harder) – lateral CA is easy to add/remove, longitudinal CA (color fringing in front/behind focus) is less convincing.
- Distortion (barrel, pincushion, mustache) – geometric warping filters can mimic this accurately.
- Flare & ghosting – lens flares, veiling glare, rainbow ghosts can be faked, though authentic multi-bounce flare with complex aperture geometry is tricky.
- Bloom / halation – glow around highlights is very doable with diffusion filters or glow nodes.
- Contrast “roll-off” from flare veiling – can be mimicked with tone curves and haze overlays.
- Field curvature (apparent focus falloff toward edges) – can be approximated with selective blur masks.
- Edge softness – can be faked by blurring vignette.
- Focus breathing (slight FoV change with focus) – can be keyframed with a digital crop/scale.
- Bokeh shape overlays – you can fake oval bokeh, cat’s-eye bokeh, onion rings to some extent with blur kernels, though it looks “pasted on” if pushed.

Characteristics That Are Hard or Impossible to Emulate in Post. These are fundamentally tied to how the lens bends and gathers light in 3D:
- Depth of field & true bokeh rendering – You can blur in post, but real optical bokeh depends on entrance pupil geometry, field position, occlusion, and interaction with 3D scene depth. You can approximate with depth maps, but never get the organic overlap of layers of out-of-focus light.
- True lens breathing with parallax – cropping simulates magnification change, but real breathing also subtly shifts perspective/parallax in the image.
- Perspective rendering (due to focal length + entrance pupil position) – can’t be changed in post without full 3D scene reconstruction.
- Aberrations that depend on 3D ray paths – e.g., coma (stretched points off-axis), astigmatism (different focus for tangential/sagittal rays). You can fake streaky stars, but not with the depth-dependent accuracy of real glass.
- Micro-contrast / “lens pop” – the way fine details render due to MTF, stray light control, and glass polishing isn’t really capturable in 2D.
- Polarization effects – coatings reduce or enhance reflections differently based on polarization of incoming light; impossible to recreate after capture.
- True specular highlight interaction with aperture blades – starbursts, iris shape, and diffraction spikes are fundamentally optical.
- Subtle 3D flare interactions – when a bright light causes ghosts that float relative to the scene geometry, which post plugins often fail to mimic convincingly.
- Sensor–lens interaction effects – e.g., color shifts or vignetting from oblique ray angles on digital sensors, microlens interference.

====

It's not a bad list, although some things are a bit mixed up. The things in the above that stand out to me for further investigation are:
- Longitudinal CA: I wonder how much of the Tokina + wide-angle adapter look was this, it seems to have subtle CA through everything
- Experiment with oval inserts to get a taller bokeh shape, maybe between the taking lens and Sirui adapter

...and just set up some tests in post for barrel distortion, edge softness, resolution (blur and halation). The rest of the stuff above I either know about or don't care about.
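To show why vignetting sits in the "trivial to emulate" column, here is a minimal sketch of a radial falloff computed per pixel. The `strength`, `inner` and `outer` parameters are made-up controls for illustration; this is not how a Resolve power-window or any particular plugin is implemented.

```python
import math


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Smooth 0..1 ramp between edge0 and edge1."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def vignette_gain(x: float, y: float, width: int, height: int,
                  strength: float = 0.4, inner: float = 0.3,
                  outer: float = 1.0) -> float:
    """Gain to multiply a pixel by: 1.0 in the centre, (1 - strength) in the corners."""
    nx = 2.0 * x / (width - 1) - 1.0           # -1 at left edge, +1 at right edge
    ny = 2.0 * y / (height - 1) - 1.0          # -1 at top edge, +1 at bottom edge
    radius = math.hypot(nx, ny) / math.sqrt(2.0)  # 0 at centre, 1 at the corners
    return 1.0 - strength * smoothstep(inner, outer, radius)


centre = vignette_gain(959.5, 539.5, 1920, 1080)
corner = vignette_gain(0, 0, 1920, 1080)
print(centre, corner)
```

The whole effect depends only on pixel position, not on scene depth, which is what separates it from the items in the second list.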

-

I don't really know much about what their highlight recovery actually does, but if you're talking about super-whites (levels above 100) then they might get decoded and might be available in the nodes? My GX85 records super-whites and in my standard node tree for it I just pull down the Gain slightly and they just get pulled down into the working range. If you're talking about the smart behaviour of using some colour channels to recreate a clipped colour channel then I think reducing the saturation of the highlights might do broadly the same thing. There are also other tricks you can use like combining masks with the channel mixer to effectively copy one (or both) other channels to the one that's clipped. That will preserve the saturation of the recovered area, useful for recovering things like tail-lights or flames, whereas reducing the saturation obviously won't.

-

and also confirmed here (linked to timestamp):

-

Also, the new iPhones all use a new "Apple Log 2" profile, so that will require Resolve to be updated as well to support the new colour space:

-

They mention it at 1:06:21 - below is linked to timestamp: I must admit that if BM camera supports it then it's a step towards it being supported in Resolve. It's not currently listed on the BM website, but that makes sense as (IIRC) no iPhone can record it yet: I'll be ordering a new phone and my GH7 already records Prores RAW internally, so I'll be in a strange place where both my main cameras support internal Prores RAW but my NLE doesn't! 😆 😆 😆

-

Sad to hear. Living and working with someone that long is truly remarkable, even if it didn't end up lasting. Gotta be careful about the wedding ring thing though, I've noticed couples that both simultaneously stopped wearing them and it's turned out to be them preparing to renew their vows and getting their existing rings polished / embellished / etc before the celebration! It was even a surprise so their close friends didn't even know it was happening!

-

With great interest and care! As an enthusiast with complex requirements myself I sometimes come upon a new set of requirements and try to find something that will suit my situation but it's hard to find something that meets all the specifics I have. This is typically when I would post as people who know more than me might know of a particular thing that meets all my needs. This is why it frustrates me when people just give random thoughts and riff on my carefully set-out requirements. TBH, now I just ask AI.

-

Yes, it has an emotional component doesn't it - that's a good way of putting it. There are lots of parallels between cinema and dreaming or memories, so in some ways the more realistic the image is the less aligned it is with all the other things about it that are dreamlike or like a memory (e.g. we can teleport, jump time, speed up and slow things down, we can see something happening without being there, we can fly, etc). Considering that memories are formed more readily at points of heightened emotion, I think there's a link between a slightly surreal image and something feeling emotional. Unfortunately the front element rotates with focus on this lens. I had it in my head that this means I can't use it with the anamorphic adapter, but just realised that's not true as the taking lens should stay at infinity... I'll have to try that next! I'm not sure about hard-edged bokeh.. maybe I'll come around but not sure. Our attention is directed by a number of things, one of which is sharpness, and so focusing the lens is the act of deliberately directing the viewer's attention by adjusting the focal plane to be on the subject of the image. When the out-of-focus areas have hard edges, this takes the viewer's attention and pulls it towards things that have not been chosen as important in that image, so it sort of undermines the creative direction of the image in a way that you can't really counter.

-

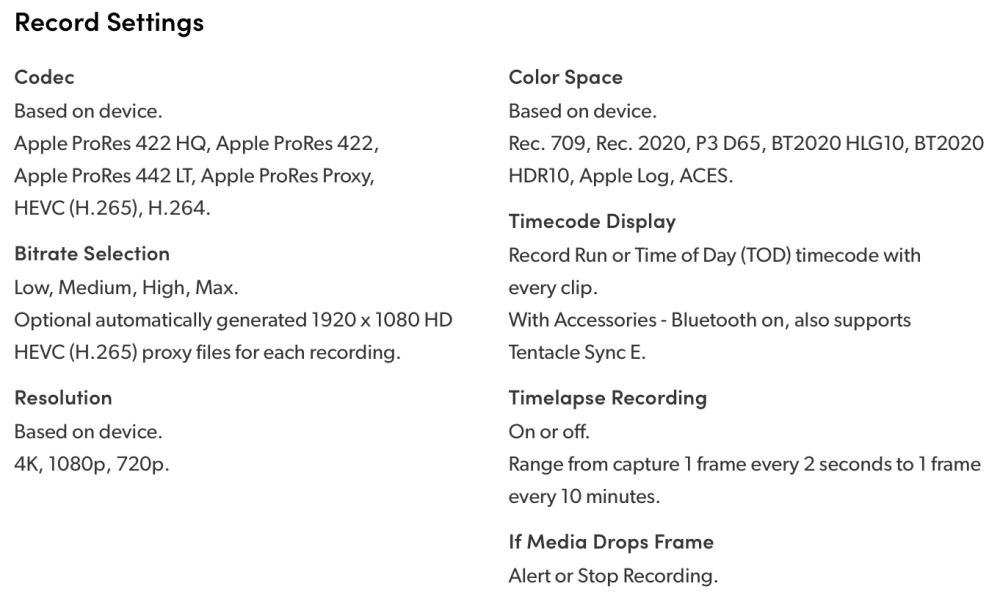

Today I tested the Voigtlander 42.5mm and Helios 58mm F2.0 lenses with the Sirui 1.25x adapter.

GH7 >> Voigtlander 42.5mm >> Sirui 1.25x adapter..

Wide open at F0.95:

...and more stopped down (I didn't have enough vND for this much light):

GH7 >> M42-M43 Speedbooster >> Helios 58mm F2.0 >> Sirui 1.25x adapter

There's a certain look to the Helios, but it's hard to separate it from the changing lighting conditions, and I wonder how much of the differences could be simulated in post too.. reducing contrast by applying halation / bloom, softening the edges, etc. In pure mechanical terms the Voigts go much better with the Sirui, and the extra speed is really handy, so that will probably be my preference over the Helios.

-

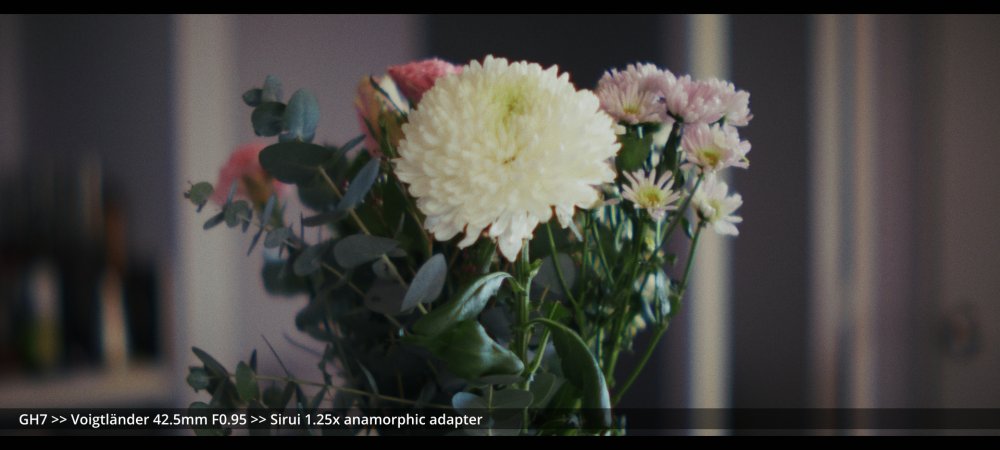

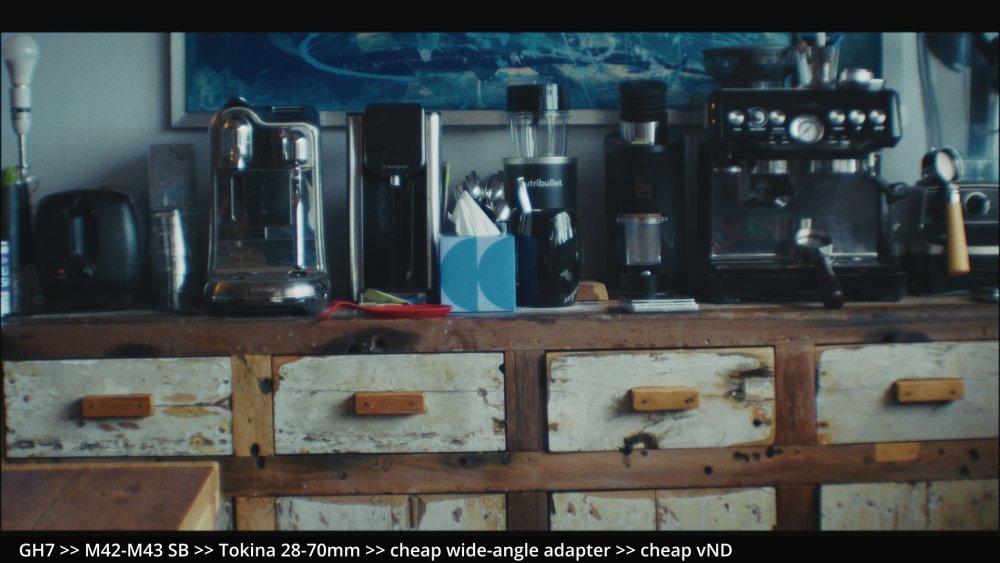

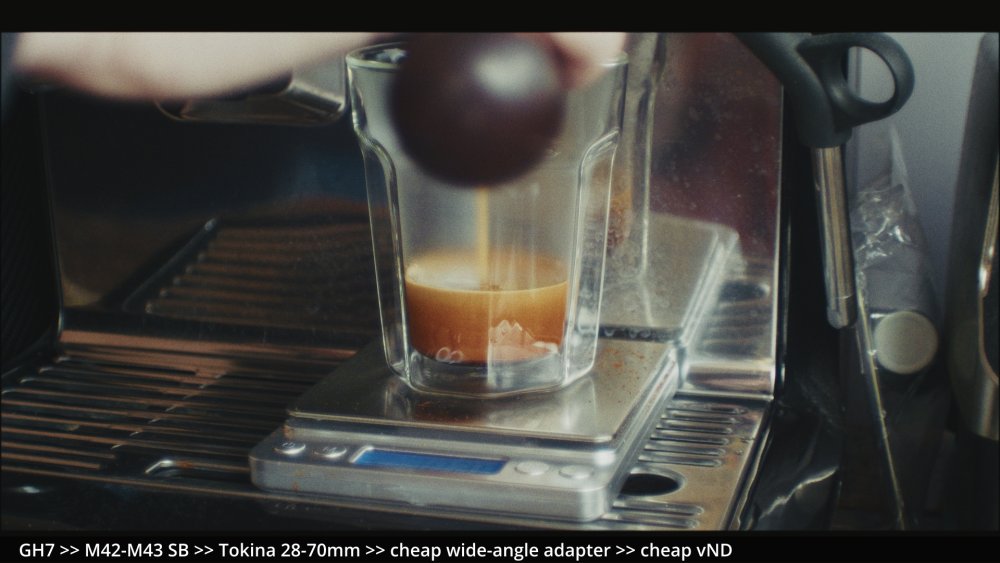

Thanks. If you like contrasty light, my next batch of images should please you! What is it about them you like? Is it how imperfect / degraded they are? They certainly have an incredible amount of feel, that's for sure. This zoom is the Tokina RMC 28-70mm F3.5-4.5 and I understand these were held in reasonably high regard back in the day, so it's potentially not a disaster, although I'm not sure that it's the sharpest / cleanest vintage zoom in the world. Looking back at the initial set of images I posted in my first post, this seems to have gotten the closest with the softness of the OOF areas and the CA / colour fringing etc.

-

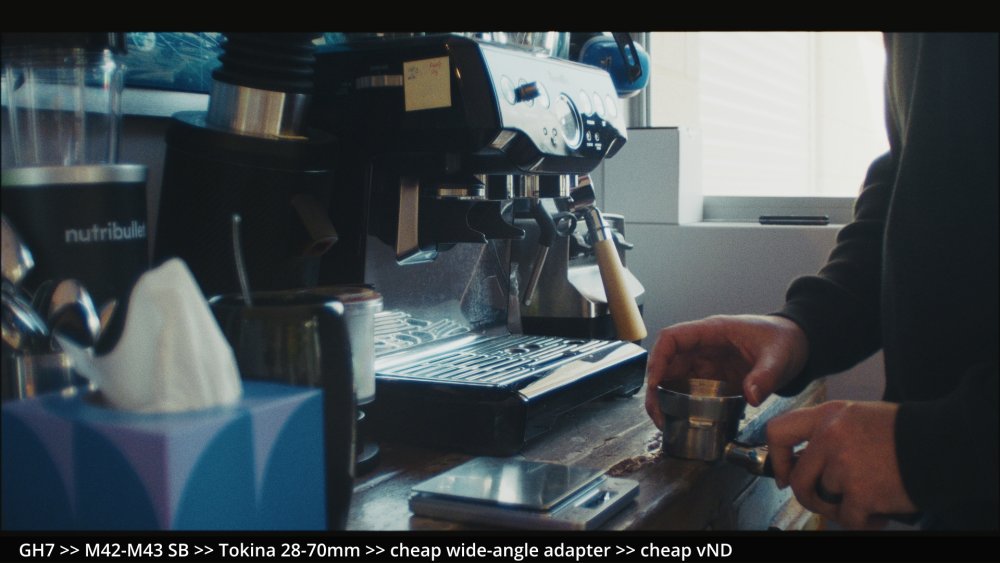

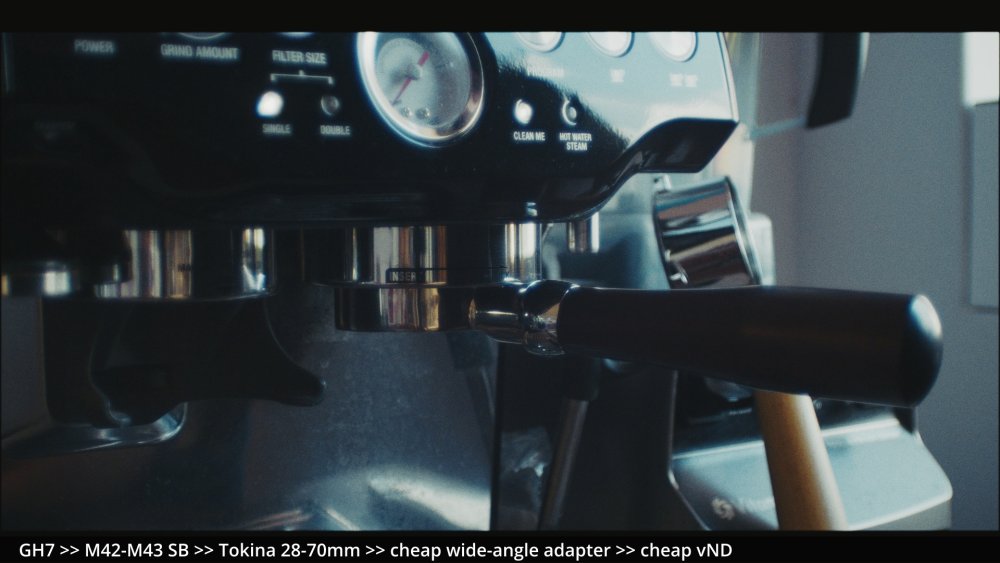

Shot a quick test with the Tokina RMC 28-70mm F3.5-4.5 zoom wide open with the wide-angle adapter. Combined with Resolve Film Look Creator it's super super analog, and maybe a bit too analog for what I would find uses for. Still, interesting reference.

GH7 shooting C4K Prores 422 >> M42-M43 SB >> Tokina 28-70mm >> cheap wide-angle adapter >> cheap vND

Resolve with 1080p timeline: CST to DWG >> exposure / WB >> slight sharpening >> FLC >> export

Personally I am not really a fan of the hard-edged bubble-bokeh, and the flaring with strong light-sources in frame is too much for most of what I shoot (mostly exterior locations and uncontrolled lighting), but it's great to know that looks with this level of texture are possible. I'm keen to compare it to the same setup without the wide-angle adapter. That would be less degraded but maybe in the right kind of way.

-

Surprisingly, it seems that Minolta didn't make so many slower lenses? This list here might not be complete, but it barely lists any slower ones: https://www.rokkorfiles.com/Lens Reviews.html and the page on the lens history doesn't include many extras either: https://www.rokkorfiles.com/Lens History.html Is Flickr still a thing? Maybe some searching on there might reveal some other options, with bonus sample images too.