Everything posted by kye

-

I used to shoot 90% of the time on a 35mm equivalent prime, but have since realised that one part of the "cinematic look" is using longer focal lengths. I'm switching to zooms for this purpose. Also, you might be interested to know that loads of the old 16mm zoom lenses were around 35mm at their widest end. When I first started looking at them I couldn't understand, especially considering that we're drowning in 16-35mm FF lenses at this point, but I realised that most productions don't shoot with anything that wide. If you look at those "list of movies shot on one prime lens" posts, you'll notice that basically none are shot on anything wider than 35mm, but that's 35mm on S35, so more like 50mm equivalent. Of course, I'm not saying anyone is wrong to shoot on anything wider than a 35mm equivalent, and of course I have been talking about narrative content, not event coverage, but I find it's useful to keep this in mind when thinking about what tools create what looks.
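To put rough numbers on the "35mm on S35 is more like 50mm equivalent" point, here is a small sketch. The S35 sensor width used (24.9mm) is an assumption, since "Super 35" varies a little between cameras, and the function names are just for illustration.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0
# Assumption: "Super 35" is not one fixed size; roughly 23-25mm wide depending on the camera.
S35_WIDTH_MM = 24.9


def crop_factor(sensor_width_mm):
    """Ratio of full-frame width to the given sensor width."""
    return FULL_FRAME_WIDTH_MM / sensor_width_mm


def full_frame_equivalent(focal_length_mm, sensor_width_mm):
    """Focal length that gives the same horizontal framing on full frame."""
    return focal_length_mm * crop_factor(sensor_width_mm)


def horizontal_fov_deg(focal_length_mm, sensor_width_mm):
    """Horizontal field of view for a lens focused at infinity (simple pinhole model)."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


equiv = full_frame_equivalent(35.0, S35_WIDTH_MM)
print(f"35mm on S35 frames like about {equiv:.0f}mm on full frame")
```

With these assumed widths a 35mm lens on S35 lands right around 50mm in full-frame terms, which is why a 16-35mm full-frame zoom is far wider than what most of those one-prime productions used.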

-

Is it a fee per unit manufactured? Per product model? For the manufacturer?

-

Of course it could be, but there's a question about whether they'll do it or not. This isn't the "Pro" version of the camera - it aligns with the P6K rather than the P6K Pro which added NDs and other cool stuff. I'd imagine a Pro version to come out, maybe in a year, that adds internal NDs and other pro features. The other rationale might be that this is a step in the 'ecosystem war' they seem to have started. Conspicuously, Resolve does not support ProRes RAW - which says to me that they're essentially holding Resolve hostage and saying 'if you want to grade in RAW on consumer cameras then use our RAW recorders or cameras'. They're not about to drop support for REDRAW or anything in the professional circles, but in the "you can shoot RAW with our camera you just need to assimilate into the Borg collective first" levels, they're fighting for control. I don't think this is the first example of them having a conspicuous lack of support, but I can't recall the specifics off the top of my head right now. Incidentally, the more I learn about what it's like to grade in non-colour-managed environments, the more I realise that Resolve is the killer app for colour grading. While other apps do seem to offer colour management, I think that by the time someone wraps their head around the topic sufficiently to get the most from it, they'll be familiar with a bunch of advanced techniques (like subtractive colour models etc) and will want to colour grade in Resolve rather than in the other tools. And once you're colour grading in Resolve, you can just edit and mix in it too, which eliminates the round-tripping, and now if you switch to BM cameras or recorders then you can eliminate the converter for your ProRes RAW setup, and bam, now you're fully in the BM ecosystem. Ironically, Instagram (which has sub-1080p resolution) has taught everyone that colour grading is king, and that might have positioned Resolve as the middle of the post-production workflow. 
I mean, if colour wasn't important then the internet wouldn't be full of people selling matrixes of numbers (LUTs).

-

Yeah, that's sort-of the same as not using colour management, as (unless you use the HDR palette to grade with) you're not getting any of the advantages of colour management. Your workflow is basically the same as applying a LUT and then trying to grade in 709, which is essentially ignoring 95% of the power of the tools at your disposal.

Without teaching you how to do colour management, as there are much better sources for that than me typing, what you want your pipeline to look like is this:

1. A transformation from the camera's colour space / gamma into a working colour space / gamma
2. Do all your adjustments in the working colour space / gamma
3. Transform from the working colour space / gamma into a display colour space / gamma (e.g. 709)

What you have told Resolve to do is to transform the camera's colour space / gamma into rec709, and any adjustments you're making to that using any of the tools will happen in rec709. Some of the tools are designed for rec709 but some are designed for log, and most of them won't really work that well in 709. Only the "old timers" who learned to grade in 709 and won't change still do it this way; everyone else has switched to grading in a log space - some still grade in Arri LogC because that's how they learned, but everyone else grades in ACES or Davinci Intermediate, as these spaces were designed for this.

I transform everything into Davinci Intermediate / Davinci Wide Gamut and grade in that as my timeline / working space (these mean the same thing). I can pull in Rec709 footage, LOG footage, RAW footage, etc, and grade them all on the same timeline right next to each other - for example iPhone, GX85 (709), GH5, BMPCC, etc. I can raise and lower exposure, change WB, and do all the cool stuff to all the different clips and they all respond the same and just do what you want. If you've ever graded RAW footage and experienced how you can just push and pull the footage however you want and it just does it, then it's like that, but with all footage from all cameras. Before I adopted this method, colour grading just felt like fighting with the footage; now everything grades like butter.
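To make the difference concrete, here's a toy sketch of the two orderings. None of this is Resolve's actual maths: the log curve is a made-up one (`toy_log_encode` / `toy_log_decode` are hypothetical), the working space is plain scene-linear for simplicity rather than Davinci Intermediate, and the display transform is just a clip plus a 2.4 gamma. It only shows why adjusting before the display transform behaves better than adjusting after it.

```python
import math

MID_GREY = 0.18
STOPS_BELOW_GREY = 8.0   # assumption: toy curve covers 8 stops below mid grey...
STOPS_TOTAL = 16.0       # ...and 16 stops in total


def toy_log_encode(linear):
    """Hypothetical camera log curve: scene-linear light -> 0..1 code value."""
    return (math.log2(linear / MID_GREY) + STOPS_BELOW_GREY) / STOPS_TOTAL


def toy_log_decode(code):
    """Step 1: camera encoding -> working space (scene-linear here)."""
    return MID_GREY * 2.0 ** (code * STOPS_TOTAL - STOPS_BELOW_GREY)


def adjust_exposure(linear, stops):
    """Step 2: an adjustment made in the working space."""
    return linear * 2.0 ** stops


def display_encode(linear):
    """Step 3: working space -> display (clip to 0..1, then gamma 2.4)."""
    clipped = min(max(linear, 0.0), 1.0)
    return clipped ** (1.0 / 2.4)


def managed(code, stops):
    """Camera -> working space -> adjust -> display."""
    return display_encode(adjust_exposure(toy_log_decode(code), stops))


def display_first(code, stops):
    """Camera -> display, then the same exposure change applied to the display signal."""
    return display_encode(toy_log_decode(code)) * 2.0 ** (stops / 2.4)


# Two highlights, both brighter than display white, pulled down 3 stops.
bright = toy_log_encode(2.0)
brighter = toy_log_encode(4.0)
print("managed:      ", managed(bright, -3), managed(brighter, -3))
print("display first:", display_first(bright, -3), display_first(brighter, -3))
```

In the managed order the two highlights come back as different values when you pull exposure down. In the display-first order they were both clipped to white before the adjustment, so they end up identical and the detail is gone.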

-

The order of each pair is the same, cam A then cam B. Were your observations consistent? I wouldn't be surprised if I didn't perfectly match the softness, considering the difference in lenses and resolutions. When I was done I noticed that in some shots the greens were more blue and others were more yellow, but I also noticed that it wasn't consistent which way around it was. I went back and checked, and it wasn't consistent, so it was just my grading. For my purposes, I concluded that it was irrelevant, even if I was using them both on the same shoot. If someone watches your video and their comment was "the hues of the trees didn't match" then that's a comment about your ability to tell an engaging story, not on your colour grading!!

-

Are you using colour management of some kind? ACES / RCM? Once you've learned that, almost all footage is the same. I can't believe that people still grade and don't do this - I was never able to get anything to look good until I worked this out. Sadly, it took me far longer to realise this than it should have! I think this is why there are 50,000 YouTube videos from amateurs on "How I grade S-Log 2" and "How to grade J-Log 9" and why every second video from professional colourists (the few that share content online for free) is about colour management. It used to drive me crazy hearing the same thing over and over again until I realised that it's the key that unlocks everything, and that getting really good results is incredibly simple once you've figured it out (getting great results becomes super complex again because colour grading is an infinitely deep rabbit hole, but that's well beyond these discussions). The other variable is what the individual shots were. It's not uncommon for me to go shoot something, with one camera and one lens, and then some shots just drop into place and look great and other ones are fiddly and I can never get them how I want. This is a hidden trap for downloading footage other people have shot.

-

Translation: the people who graded the Sony footage aren't as good at colouring as the people who coloured the Canon and RED footage. Seriously - there's the Dynamic Range, Bit Depth, Low light, and Frame-rates, but the entire rest of the equation is the skill of the people in pre, prod, or post. Ungraded and poorly lit ARRI Alexa footage looks like home videos until the colourist gets involved.

-

My advice is to take this as a learning opportunity and tighten up your business practices. I've heard this story over and over again in every industry you can think of, where someone acts in good faith and then gets ripped off, and it happens time and time again until they learn to push back and say no, and start enforcing the terms of their contract (and having a contract) and not starting work without up-front payments etc. As soon as you do that, people will test you and push you, then once you hold your ground the leeches realise you're not a soft target anymore and either they start treating you with respect or you never hear from them again. The most memorable example was a person who recorded an entire album in their recording studio for a band, only charging them $500 for a week's work because they liked the band and wanted to help them out and they had no money etc. The band refused to pay, claiming poverty, etc etc. Then one day the band turns up in a limousine, with a $2000 per day PR / Management executive in a suit, and still wanted the master recording for free..... Speaking of how life can be difficult for colourists, I was watching an interview with a colourist the other day and he said that in the 80s/90s he was colour grading rap videos and the rappers used to rock up to his colour grading suite for the session with cocaine and Uzi machine guns! He told them to put the cocaine away and to put the Uzis back in the limo!!

-

Just another normal thread of humans discussing cameras on the forums, nothing to see here...

-

I am reviewing my lineup and decided to compare the OG BMPCC to the GH5, and ended up filming shot-by-shot duplicates and matching them in post. The results were surprising. I noticed that lots of shots had differences because the vNDs on the two setups were in different orientations and polarised the scene differently, but unless you were doing a direct comparison then that wouldn't be noticeable. GH5 was with 14-42mm F3.5-5.6 kit lens, shooting wide-open in 150Mbps UHD / HLG. P2K was with 12-35mm F2.8, shooting wide-open RAW. 1080p timeline as always. I graded each P2K shot and then graded the GH5 to match. I also shot a few of my wife as well, which matched nicely, including skin tones, but I'm not allowed to share those! The P2K has a desirable level of sharpness (being a native 1080p sensor) that is very similar to film, and I didn't need to blur the GH5 footage to match, which I suspect is due to the kit lens not being so sharp wide-open. Obviously the P2K has significantly more dynamic range, but it seems that when that is not required, the GH5 can do a pretty good imitation of it. Tools required to match shots included: contrast, saturation, colour boost, WB, exposure. Plus, I had to rotate the skintones slightly magenta, the greens slightly blue, raise the shadows slightly and tint them, and darken the saturated areas significantly. There were other tiny changes that I noticed that I didn't bother with. This isn't "basic" colour grading but matching GH5 to the legendary P2K isn't a basic goal either.

-

Yeah, looks like BM is doing their normal thing of rapidly updating things with new features and bug fixes (..and also perhaps creating new bugs from their rapid development pace? that's how Resolve is sometimes 🙂 )

-

Ambient temperature was 30C/86F which isn't a real stress-test but is better than the stupid people that test these things practically in the freezer, either to game the results because they're dishonest or because they're too stupid to realise that there are warm countries on earth too.

-

It would be useful if you wrote why you were sharing each video. I'm assuming you're watching more videos than you decide to share. That way, if you post a video that talks about things I know about I can skip it, but if you post one that mentions something new that I don't know about then I can watch. TBH I'm just looking at you post video after video and thinking "I can just search in YT and see random videos too".

-

@Jedi Master How good are your colour grading abilities? You might be better off buying the camera that's easiest to colour grade, or has the nicest out-of-the-box colour science rather than the camera with the most potential if you've got a team of dedicated pros in post-production. Sadly, I'm getting nicer colour out of my iPhone 12 than many are getting from their mega-dollar Sony cameras, simply because I have the colour grading skills of an enthusiastic amateur and they have the colour grading skills of a toddler. You may not be aware of this (many aren't) but the footage from high-end cameras often looks pretty boring when just applying the manufacturer's LUT and before the professional colourists have done their work. I provided a few examples here:

-

+1 for going with one of the Blackmagic cameras. They're straightforward to use, record incredible images in RAW, lenses are available and practical for use, and you can concentrate on the creative aspects of the image. Seriously - the best cameras look crap when not used creatively, and the best DoPs can make the most of whatever they have access to. Optimising the equipment at the expense of the creative aspects is a ridiculous tradeoff. The point of the whole thing is making good finished videos - start there and work backwards. Don't start at the camera and work forwards...

-

The thumbnails have to grab you and make you watch, so will say whatever does that. I've seen it both ways - hype up an incremental improvement, and on things that already have a lot of hype it can be the old 'don't buy until you've watched this' gag... In between those two there's the 'hidden feature' that will change the world, etc etc.. when all of those don't fit, there's always ye olde faithful... product + shocked-face 🙂

-

If you'd read the article then you'd know that he triggered the camera manually and took around 720,000 exposures to eventually get the perfect shot, and that the subject moves so fast that even with a 10 fps burst sometimes the bird isn't even in any of the frames.

Good examples. I often shoot out of the window of moving vehicles while on holiday and typically shoot in high FPS video in order to be able to frame the scenery whizzing past. If you're trying to capture any scene where there are things in the foreground/mid/background then they're all moving at different speeds across the frame and so the closer the objects are the more slanted they are. Having a low RS matters more if you're going really fast. Interestingly, I shot a bunch of clips on my phone in vertical orientation to use for a slightly fancier edit (by showing multiples at a time, highlighting the differences of scenery throughout the trip) and there is zero RS because the RS was in the same orientation as the movement. That's not something I expected, but it's definitely a welcome surprise!
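A rough back-of-envelope for the "closer objects are more slanted" effect, assuming a simple pinhole camera pointed sideways out of the vehicle. All the numbers (speed, focal length in pixels, sensor readout time) are made-up examples, not measurements from any particular camera.

```python
def pixel_speed(vehicle_speed_m_s, distance_m, focal_length_px):
    """How fast an object at the given distance slides across the frame, in px/s.

    Pinhole model, camera looking perpendicular to the direction of travel.
    """
    return focal_length_px * vehicle_speed_m_s / distance_m


def skew_px(vehicle_speed_m_s, distance_m, focal_length_px, readout_time_s):
    """Sideways offset between the top and bottom of the frame caused by rolling shutter."""
    return pixel_speed(vehicle_speed_m_s, distance_m, focal_length_px) * readout_time_s


SPEED = 25.0        # m/s, about 90 km/h (example value)
FOCAL_PX = 1500.0   # focal length expressed in pixels (example value)
READOUT = 0.020     # 20 ms sensor readout (example value)

for distance in (5.0, 50.0, 500.0):
    lean = skew_px(SPEED, distance, FOCAL_PX, READOUT)
    print(f"object at {distance:>5.0f} m leans by about {lean:.1f} px")
```

The lean scales directly with vehicle speed and readout time and inversely with distance, so a fence right next to the road leans far more than hills on the horizon. When the sensor readout runs along the direction of movement, the same offset shows up as a slight stretch or squeeze instead of a slant, which fits the vertical phone clips looking clean.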

-

I can imagine wildlife photographers would get a lot of use from it considering that they take a long time to shoot (e.g. waiting in animal blinds etc), they have a long time in post to select the right shots, they aren't under super tight deadlines to get images to a publisher, and the difference between a good image and a great one can simply be a matter of perfect timing and if you get it right there are huge rewards. Obviously this is very different from the workflows of sports photographers etc. This photo and the story behind this shot comes to mind: https://www.wired.com/2016/01/alan-mcfadyen-kingfisher-dive/ I would imagine that there might be a lot of subjects where having an insane fps on the burst mode would enable you to line things up perfectly in a shot and really elevate it in a significant way. Street photographers would also benefit, with the decisive moment and all that, but they probably don't need burst modes this crazy fast. However, having said all that, it's a pretty niche application for an entire camera, so I can't see that being worth designing a camera around.

-

I've heard people say that film has rolling-shutter-like behaviour from the rotating shutter and isn't a true global shutter, but it's just that the RS was very fast and so wasn't noticeable in almost all situations. https://cinematography.com/index.php?/forums/topic/53119-why-no-rolling-shutter-on-film-cameras/&do=findComment&comment=357346

-

No digital would mean that everything was analog... Of course, since we're playing completely made up and arbitrary alternative universes, you could end up with super high resolution analog where analog tapes had ever-increasing numbers of lines and the analog circuitry became lower and lower noise and so DR went up, etc. But a digital scanner is a device with an array of photosensitive electronics that could output a digital representation of the light that was detected... but so is a camera. If you can come up with some sort of fictional law of physics that prevents one technology but not the other then good luck - I can't think of one. The only thing I could think of would be speed - that maybe the sensors are too insensitive and film can only be scanned in very slowly with long exposures required for each frame or something, but even then, tech advances so quickly that we'd simply innovate past this point and get to where digital cameras were practical again, so this limitation doesn't fulfil the rules of this fictional scenario.

-

I was talking about if digital didn't exist, not just if digital cameras didn't exist.... It's pretty difficult to dream up a scenario where digital cameras didn't exist, but for completely inexplicable reasons we still had digital film scanners, all digital image manipulation software, and then digital projectors. I mean, how on earth could you scan film but not be able to scan the world directly?

-

On everyone... Think about it - no more CGI / VFX! The industry would have to pivot to strange and alien concepts like, story, and acting, and craft!!! Oh, the humanity!

-

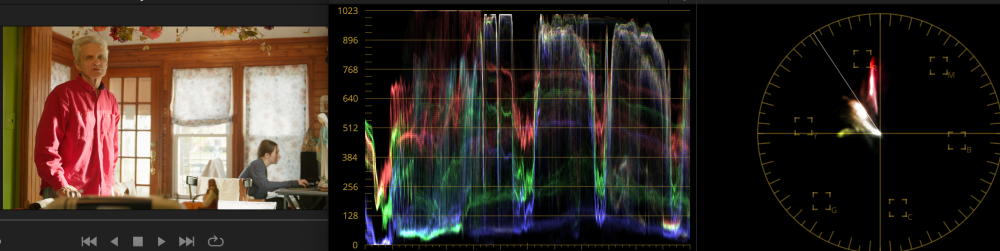

By the time you put a grade over it, those appear to be fine. This is with a 2383 PFE LUT and I adjusted the WB to be cooler for a bit of separation and reduced the saturation a little:

-

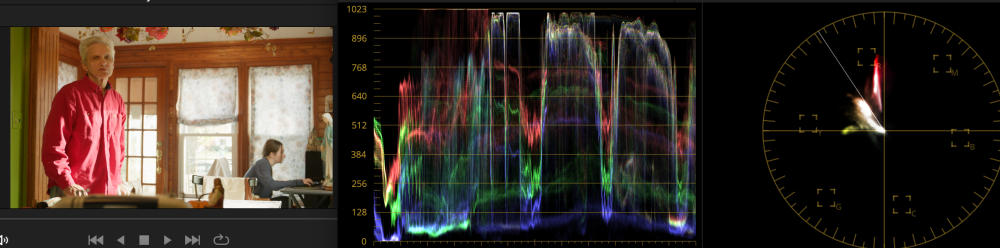

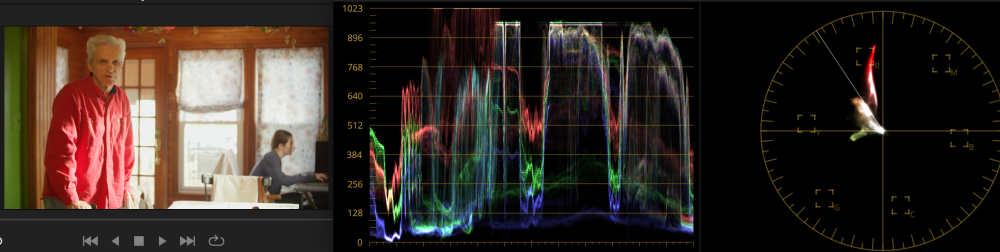

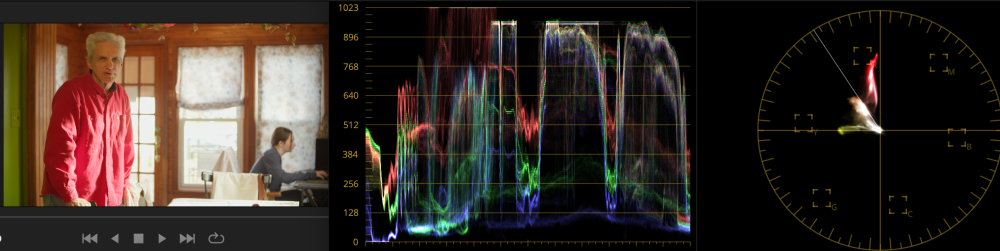

@TomTheDP Here's a basic match. As I don't own the LUT packs, I have just used a CST sandwich - first node converts to Davinci, second does adjustments, third converts to 709/2.4. The Alexa has no adjustments at all, Sony has a Hue rotation for skin and Tint adjustment pushing green for neutrals. These were literally done just by looking at the vectorscope. Added Hue curves for the green wall and the jacket. That would be good enough for most work I'd imagine. Skin tones. Always skin tones. It's where the viewer will be looking, and we're sensitive to very slight differences.
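For anyone wondering what a hue rotation actually does on the vectorscope, here's a minimal sketch. It treats a colour as a point on the Cb/Cr plane and rotates it around the centre. This is a simplification: it rotates every colour by the same amount, whereas the Hue curves in Resolve only affect a selected range of hues, and which way counts as "towards magenta" depends on the scope's axis convention (assumed here, not taken from Resolve).

```python
import math


def rotate_hue(cb, cr, degrees):
    """Rotate a chroma point around the centre of the vectorscope."""
    angle = math.radians(degrees)
    new_cb = cb * math.cos(angle) - cr * math.sin(angle)
    new_cr = cb * math.sin(angle) + cr * math.cos(angle)
    return new_cb, new_cr


def saturation(cb, cr):
    """Distance from the centre of the scope."""
    return math.hypot(cb, cr)


def hue_deg(cb, cr):
    """Angle around the scope, in degrees from the +Cb axis."""
    return math.degrees(math.atan2(cr, cb)) % 360.0


# Example chroma value (made up), nudged by 5 degrees.
cb, cr = -0.05, 0.10
new_cb, new_cr = rotate_hue(cb, cr, 5.0)
print(f"hue {hue_deg(cb, cr):.1f} -> {hue_deg(new_cb, new_cr):.1f} degrees")
print(f"saturation {saturation(cb, cr):.4f} -> {saturation(new_cb, new_cr):.4f}")
```

The point is that a hue rotation moves the blob around the scope without changing how far it sits from the centre, which is why it's a clean way to line skin up between two cameras before touching saturation.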

-

Yes! I definitely agree with you - there must be optimisations that can be made. Please Panasonic!!