
Everything posted by kye

-

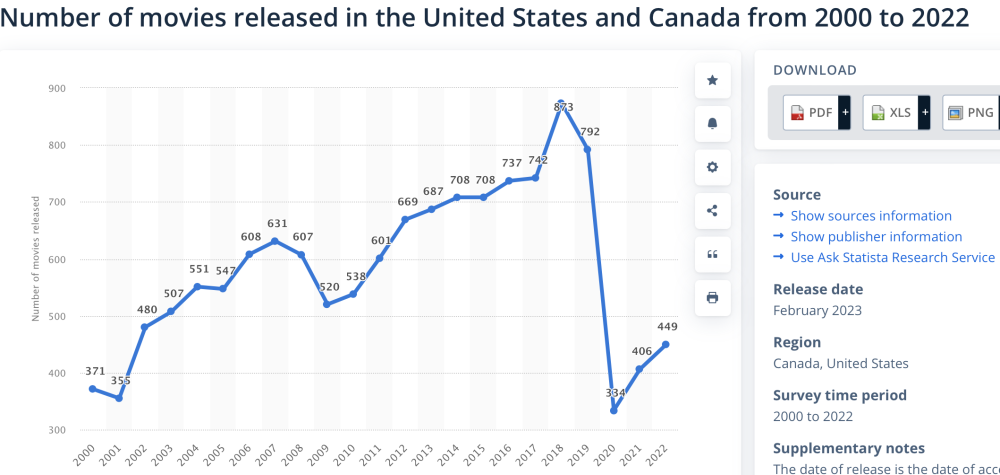

It was marketing. If it had been shot on an FX6 there would have been zero press, but look at the social media frenzy this film is enjoying, including this thread. There are normally multiple feature films released per day, but how much press are you reading on photography / videography websites about any of the others? https://www.statista.com/statistics/187122/movie-releases-in-north-america-since-2001/

-

Did you grade the FX30 to match the Alexa, or the other way around? I see differences, but they're subtle and not significant.

IIRC it was Steve Yedlin who talked about the idea of the "cut back" test for camera matching. I.e., if you had a shot from camera A, then a different shot, then CUT BACK to camera B, would you notice that A and B were different? I think this is a good test because in real film-making you don't normally cut directly between two cameras showing the same scene from the same angle, so there is some distance between them, and an A/B test that cuts directly between the same scene and angle is too stringent. Yes, in theory you would cut between two angles of the same scene, but in a lot of film-making you'd be changing the lighting setup between filming those angles, so a perfect match isn't relevant in those situations either.

-

I'd challenge your concept of worth. All cameras are tools to do a job, and maybe that job is to create footage, or to provide nostalgia, or to demonstrate taste or status, but they're all outcomes. I'd suggest that the Red Raven would excel at most of these. If you lust after the name then it would do that, if you want to demonstrate taste or status then it does that, and if you want to create footage with it, holy hell does it do that.....

I'm not really an expert on the Red ecosystem or what all the cool kids are doing, but it looks pretty good to me. To a certain extent Red seems a bit like Apple, in that you're only cool if you have the latest model, and because no-one wants yesterday's model, they're all undervalued. For USD 2K you could make some incredible wedding, corporate, beauty or branding videos, and if you knew what you were doing you could make some incredible money doing that with an image that good.

-

I know of at least one group of professional colourists who are profiling some of these cameras themselves because the available information isn't sufficient to build proper transforms. You might think all the information is there, but it's not. I don't know enough about colour spaces to say why the published information isn't sufficient, but if it were, these transforms would have been built already and professional colourists wouldn't have to grade these files manually or build their own transforms for them.

-

The A7CR sensor is ~9500 pixels wide, so at 3x it's only looking at an area ~3170 pixels wide, which is almost 4K but not quite. The elephant in the room is that, cropped up to 3x, the 40mm only becomes a 40mm - 120mm, instead of the wider range on the others. Of course, the Sony 24mm F2.8 lens is also very small and the same math would apply to zooming with it. The level of detail visible is due to the processing of the data from the photosites, not the number of them.

I do really like the idea of having a high-resolution sensor and cropping digitally to emulate a longer focal length, as I have repeatedly mentioned when talking about the 2x digital zoom on the Panny cameras, but ultimately I want all the flexibility in focal lengths that I can get. My dream setup now is the GX85 and 14-140mm lens. Despite being only a little larger, this setup is still 4K at 10x and isn't missing the 24-40mm zoom range either. Your FF setups are approaching SD at these zoom levels....

I have also found that when cropping into a lens you magnify all the aberrations of that lens. I'm comparing quite optically compromised variable-aperture zooms with your (likely) highly tuned and very sharp primes, but when the image is blown up to 3x size I'm not sure which would win; zooms are compromised, but not necessarily that compromised.

I also realise these comparisons don't take into account the low-light performance of the camera, the AF, DoF capabilities, and lots of other advantages of full-frame, but believe me when I tell you that users of smaller-sensor cameras are all very very aware of these compromises; size is simply such a priority that it takes precedence.

One of the things that I find hugely ironic is that if I had $10K to spend on a camera, the GX85 might still be the best choice. If I had $100,000? Still the GX85. If I had $1,000,000? Maybe I'd call up Panasonic and ask them to CUSTOM MAKE me a camera! Seriously, when size is your primary limitation, your options really are very limited.
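The crop arithmetic in this post (and the A7Cii figure in a later post) is just sensor width divided by the crop factor. Here is a minimal Python sketch of it. It assumes a centred crop, that the quoted sensor widths are about right (~9500 px for the A7CR, ~7000 px for the A7Cii), and that 3840 px UHD is the cut-off for calling something "4K". The helper names are hypothetical.

```python
UHD_WIDTH = 3840  # UHD "4K" delivery width in pixels (assumed cut-off)


def cropped_width(sensor_width_px: float, digital_zoom: float) -> float:
    """Horizontal pixels left after a centred digital crop of `digital_zoom`x."""
    if digital_zoom < 1:
        raise ValueError("digital_zoom must be >= 1")
    return sensor_width_px / digital_zoom


def max_uhd_zoom(sensor_width_px: float, optical_zoom: float = 1.0) -> float:
    """Total zoom reachable before the crop drops below UHD width.

    Assumes the optical zoom range keeps the full sensor width, and that no
    digital crop (factor 1) is applied if the sensor is already narrower than UHD.
    """
    digital_headroom = max(1.0, sensor_width_px / UHD_WIDTH)
    return optical_zoom * digital_headroom


for name, width in [("A7CR", 9500), ("A7Cii", 7000)]:
    for zoom in (3, 10):
        w = cropped_width(width, zoom)
        verdict = "UHD or better" if w >= UHD_WIDTH else "below UHD"
        print(f"{name} at {zoom}x: ~{w:.0f} px wide ({verdict})")
    print(f"{name} stays UHD up to ~{max_uhd_zoom(width):.2f}x digital zoom")
```

An optical zoom such as the 14-140mm keeps the full sensor width across its range, which is why `max_uhd_zoom` multiplies the optical factor by the digital headroom instead of dividing pixels. The sketch ignores video readout modes. Many cameras crop or line-skip for 4K anyway, so real figures can be worse.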

-

If only $9.99 got you the image from a $3,500 mirrorless camera! I chose the $0 BM camera app 🙂

-

Finally, a camera where 8K won't be a spectacular and nonsensical application of superfluous technology simply for marketing purposes.

-

I previously reviewed the options on the A7iii and concluded that you could zoom up to about 2.5x (1.5x from the APS-C crop and the rest with Clear Image Zoom) before the image really started degrading. I would guess that with the higher-MP sensor that's more like 3-4x, which isn't bad. The issue with that comparison is that the FF/40mm prime combo is a similar size to the other cameras, which have zoom lenses, so they're doing 3x optically, are therefore still 4K at 3x, and can be cropped further in post. The A7Cii sensor is ~7000 pixels wide, so at 3x it's only looking at an area ~2300 pixels wide, which obviously puts it at a disadvantage for digital zooming. Sure, having 3x digital zoom in camera is great, but it's hard to compete with optical zooms.

It really depends on what you're used to. There was a forum member on here years ago who was an ex-ENG camera operator, so he was used to handling a full-sized shoulder rig in emergency situations and was also used to pissing people off while shooting (which is an integral part of ENG work). He and I used to disagree on what "small" and "large" meant, because to him a 1DXii was a "small" camera and to me it's absolutely enormous!

I was going to mention this before but forgot: now that my eyes are ageing, I need reading glasses to see the LCD when shooting, which is a major PITA, considering that if I'm out shooting I'm likely wearing sunglasses and swapping glasses just isn't practical. But I discovered that the EVF on the GX85 has a diopter adjustment, and what a great feature that is! I'm under no illusions though. It means raising the camera to my face, which puts lots of people on high alert in public, and it also means taking my sunglasses off, both of which are serious downsides. Sadly, some googling yesterday on how to carry reading glasses without looking like an octogenarian revealed there are no good options, so in a pinch the EVF is good for more than just bright situations.
I'd never thought of it before, but it's another plus for using it. I definitely agree that the poor resolution and implementation of them is often unfortunate. I've criticised the screen on the GH5 before, because I think the focus peaking calculations are done at the screen resolution, not on the actual image being captured by the camera, which in some situations leads the peaking to outright lie to you, showing the background to be in focus when it isn't and the subject to be out of focus when it isn't.
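The GH5 peaking complaint above is the author's hypothesis, and the actual algorithm isn't public. This Python sketch is a toy illustration only. It assumes peaking is a threshold on the step between neighbouring pixels and that the preview is a 2x2 box-averaged downscale. Under those assumptions it reproduces both failure modes described. Fine, sharp stripes average out to flat grey on the preview, so an in-focus subject gets no peaking. A gentle ramp gets steeper per preview pixel and crosses the threshold, so a soft background gets peaking. The function names and threshold are made up for the example.

```python
def box_downscale(img, factor):
    """Average each factor x factor block (stand-in for preview scaling)."""
    rows, cols = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(cols)
        ]
        for y in range(rows)
    ]


def peaking_count(img, threshold=0.5):
    """Count pixels whose step to the right-hand neighbour exceeds threshold."""
    return sum(
        abs(row[x + 1] - row[x]) > threshold
        for row in img
        for x in range(len(row) - 1)
    )


# Sharp, fine detail: 1-pixel black/white stripes (an in-focus subject).
stripes = [[x % 2 for x in range(8)] for _ in range(8)]
# Soft detail: a gentle ramp of 0.3 per pixel (an out-of-focus background).
ramp = [[0.3 * x for x in range(8)] for _ in range(8)]

for name, img in [("stripes", stripes), ("ramp", ramp)]:
    full = peaking_count(img)
    preview = peaking_count(box_downscale(img, 2))
    print(f"{name}: {full} peaking pixels at full res, {preview} on the preview")
```

With these inputs the stripes give 56 peaking pixels at full resolution and 0 on the preview. The ramp gives 0 at full resolution and 12 on the preview. A real camera's filters and thresholds will differ, so treat this as a plausibility check of the idea rather than a model of the GH5.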

-

I would be tempted to say the best bang-for-buck would be the OG BMPCC or BMMCC... BUT, used prices on them are going up because people have worked out that more pixels isn't progress and are buying them again. So yeah, maybe not the best value for money.

-

Favourite camera I own... GX85. Favourite cameras I don't own... Alexa 35, GX85 mk 2, BMPCC OG Signature Edition 2023. (Yes, I realise that most of these cameras don't exist, but what's the difference between a camera I've never used and one that doesn't exist? How can you have a favourite if you haven't used it? It's like having a favourite food you haven't tasted... Any hypothetical is a hypothetical!)

-

I always say, if you don't know what the solution to a problem is, then you don't understand it sufficiently. In this case, you seem to understand your two identified options relatively well, so the final piece is understanding your own needs. How do you shoot? Where do you shoot? What do you shoot? What lenses will you need? What other equipment will you need? What does the whole workflow look like? All cameras at this level look great, so this shouldn't be your focus. You should be focusing on the camera that is easiest to use where and how you shoot, so that the entire ecosystem you're buying into supports you in getting better shots.

-

Two things are needed: the gamma (transfer function) and the colour space. In casual discussion these are normally referred to as if they were one thing, but they are completely independent and one cannot be inferred from the other. DJI should publish the spec for their profiles too. Hopefully the release of Apple Log and its subsequent inclusion in the ACES and RCM ecosystems will inspire the other manufacturers to start publishing their profiles as well. Unfortunately, I don't think this is likely for all manufacturers, as some of their standards are not pretty and the documentation would point out those flaws quite explicitly.
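This minimal Python decode sketch shows why the gamma and the colour space are separate pieces of information. Step 1 undoes the transfer function. That curve is a made-up pure-log curve with hypothetical `slope`/`offset` parameters and is not any manufacturer's real curve. Step 2 applies a 3x3 primaries matrix, here the standard Rec.709/sRGB (D65) linear-RGB-to-XYZ matrix. Either step can be swapped without touching the other, which is why a usable published spec has to state both.

```python
# Step 1: hypothetical pure-log transfer function (NOT a real camera curve).
def log_to_linear(code, slope=0.08, offset=0.6):
    """Invert code = slope * log2(linear) + offset."""
    return 2 ** ((code - offset) / slope)


# Step 2: colour space (primaries + white point) as a 3x3 matrix.
# Rec.709 / sRGB primaries, D65 white: linear RGB -> CIE XYZ.
REC709_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def decode(code_rgb, transfer, matrix):
    """Full decode: linearise each channel, then convert primaries."""
    linear = [transfer(c) for c in code_rgb]
    return mat_vec(matrix, linear)


grey = [0.6, 0.6, 0.6]
print(decode(grey, log_to_linear, REC709_TO_XYZ))
# Same code values and same colour space, but a different (still hypothetical)
# curve: the XYZ result roughly doubles, so the curve can't be guessed from
# the primaries or vice versa.
print(decode(grey, lambda c: log_to_linear(c, slope=0.1, offset=0.5), REC709_TO_XYZ))
```

A real camera spec would replace both pieces: the manufacturer's published log formula in step 1 and its gamut's matrix in step 2. Colour-managed tools such as ACES or RCM need exactly those two inputs.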

-

A published technical specification that would allow it to be integrated into ACES, RCM, etc.

-

Because you have to pay to shoot anything other than UHD 24p.

-

If you're talking video then "massive ability to crop" wouldn't be how I'd describe it. You're also correct that you can swap the lens for anything you'd like, but the unabridged quote is more like "anything you'd like, as long as it's enormous in comparison". Here's the FX3 with 24-70mm F4, the A7C with the 28-60mm F3.5-5.6 and the LX100:

-

Apparently there was a BTS video that Apple made, but has since taken down, that showed a bunch of stuff about how it was shot, including that they edited it in Premiere Pro and graded in Resolve. Here's an analysis video, linked to the relevant section: I don't know what PP looks like; is that it? It would be strange if they used PP instead of FCPX...

-

What do you feel is the advantage of the LX over something like the GX range? Is it the size? It definitely is much smaller with a roughly equivalent lens (even though I suspect the LX100 lens would extend once turned on)!

-

Of course, there is a third option beyond a smartphone and a normal camera. That option would be to leverage Apple's existing smartphone tech but compromise on the thickness of the device, and include multiple cameras with various focal lengths, like the Light L16 did. (The L16 tried to stitch the camera images together, which didn't work well and isn't what I'd recommend. But it did show that you can fit 16 smartphone-sized camera modules into something very compact, with potentially spectacular dust- and water-proofing.)

As we saw from the Venice video I posted yesterday, the main camera is broadly a good option but the other cameras tend to suffer significantly from a lack of light. If the device were thicker, they could use the same size of sensor for all the cameras and combine it with faster lenses. There are F1.4 smartphones out there, but we all know that lenses can be built much faster than that. Imagine a device that's sized like a large smartphone but with the thickness of a modern camera body without the lens, and has an array of cameras including 16mm FOV, 24mm FOV, 50mm FOV, 100mm FOV, and 250mm FOV, all capable of 8K60, and all at F0.8. Shooting all resolutions and flavours of ProRes in Apple Log, of course.

If Apple made a dedicated camera, it would have to be completely superior to their flagship phones, and would also have to appeal to the masses who want improved imaging and are willing to pay for it, BUT it would have to fit the Apple philosophy of being elegant and simple to use. I don't think an FX3-ish or Alexa-ish device with Apple written on the front would do that.

-

A proper LOG implementation would be even better. That way it could be graded properly using colour management. Now that Apple has shown what's possible with Apple Log, there's no denying that it can be done.

-

Ah, that makes sense. Considering the tens of thousands of complaints that we have about all cameras ever released, it makes sense not to put the camera anywhere near the image!

-

Not so good for filming in uncontrolled lighting then. Unfortunately, that's basically ALL that the iPhone gets used for!

-

Moire can be caused at many points in the process. You are talking about moire caused by the camera not downscaling the image from the entire sensor, which is what is happening in the A6600's 1080p example. This happens. What also happens, and what I have been trying to explain, is that moire can arise from the interaction between the native sensor resolution and fine detail in the real world: the pattern of light that the sensor records already has moire in it, and it doesn't matter what happens after that, because no amount of good processing can fix it. The cure is some kind of optical mechanism that spreads the light between the photosites before it's sampled. This could be an OLPF, clever design of the micro-lenses, a combination of these, or other things as well. This is why people keep talking about AI: it's the only way that moire could be fixed after it has been captured.
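Here is a one-dimensional Python sketch of moire being baked in at capture. Point-sampling a stripe pattern finer than the photosite pitch gives exactly the same numbers as a coarse pattern. The fine pattern here is 0.9 cycles per photosite, above the 0.5 Nyquist limit, and the coarse one is 0.1 cycles. Nothing downstream can tell the two apart. Averaging the light over a window before sampling knocks the false pattern down by roughly 10x. That averaging is only a crude stand-in for optical low-pass filtering, since a real OLPF splits light birefringently rather than box-averaging it. The frequencies and window width are arbitrary illustration values.

```python
import math


def point_sample(freq, n_samples):
    """Sample cos(2*pi*freq*x) at integer photosite positions x."""
    return [math.cos(2 * math.pi * freq * n) for n in range(n_samples)]


def box_sample(freq, n_samples, width):
    """Average cos(2*pi*freq*x) over a window of `width` centred on each site.

    Closed form of that average: cos(2*pi*f*n) * sin(pi*f*w) / (pi*f*w).
    """
    k = math.pi * freq * width
    gain = math.sin(k) / k
    return [gain * math.cos(2 * math.pi * freq * n) for n in range(n_samples)]


N = 32
fine = point_sample(0.9, N)    # real detail: 0.9 cycles per photosite
coarse = point_sample(0.1, N)  # a genuinely coarse pattern
max_diff = max(abs(a - b) for a, b in zip(fine, coarse))
print(f"fine vs coarse samples differ by at most {max_diff:.1e}")

filtered = box_sample(0.9, N, width=2.0)
print(f"alias amplitude: {max(map(abs, fine)):.2f} unfiltered, "
      f"{max(map(abs, filtered)):.2f} pre-filtered")
```

The same 2-photosite window keeps about 94% of a genuine 0.1-cycle pattern (`box_sample(0.1, ...)`). That selectivity is why the filtering has to happen optically, before sampling. After sampling, the two signals are identical numbers.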

-

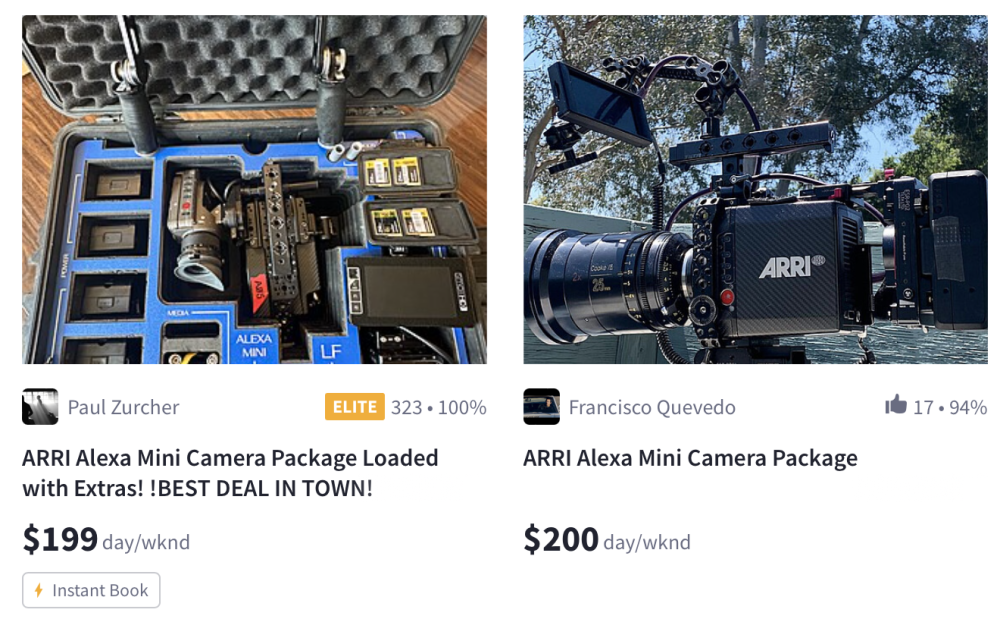

Like every production with a real budget, ever. Alexa Mini camera packages start at around $200 a day... on any set whatsoever that's less significant than the catering budget or the fuel budget, and absolutely dwarfed by even the vehicle rental budget. It's why there's precious little great-looking footage from all these mirrorless cameras: if you were going to go to all the trouble of making a real film, why would you skimp on the camera package? It makes no sense. My sister and I shot a short film in the late 90s when she was in film school; it was self-funded for $2000. We borrowed everything and our largest cost was catering (feed people well and they'll be happy). It was a 5-minute final film, we had 20 people on set, and I estimated that in total it had thousands of person-hours put into it, including pre and post. Even if everyone got paid minimum wage, the camera rental cost would be inconsequential.

-

In those modes the sensor's resolution is higher and the chart doesn't have fine enough lines to create moire. It does indicate that the Sony isn't using the full sensor resolution in its 1080p mode though. This isn't a surprise: the GH5 uses full readouts and high-quality image scaling internally, whereas inferior cameras don't.

-

Addendum to the above. I think the shots that suffer the most are the ones from the non-main cameras. I know that on my iPhone 12 Mini the main camera has the noise performance of the GH5 with an F2.8 lens in identical conditions, but the wide camera on the iPhone has the same noise as the GH5 in the same conditions at F8... that's 3 stops worse performance.
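The "3 stops" figure follows from the light through a lens scaling with 1/N² for f-number N. The difference in stops is therefore 2·log2(N_slow / N_fast). A minimal Python check (the helper name is hypothetical):

```python
import math


def stops_between(f_fast: float, f_slow: float) -> float:
    """Exposure difference in stops between two f-numbers.

    Light gathered scales with 1 / N**2, so each doubling of N**2 is one stop.
    """
    if f_fast <= 0 or f_slow <= 0:
        raise ValueError("f-numbers must be positive")
    return 2 * math.log2(f_slow / f_fast)


print(f"F2.8 -> F8: {stops_between(2.8, 8):.2f} stops")              # marked values
print(f"F2.83 -> F8: {stops_between(2 * math.sqrt(2), 8):.2f} stops")  # exact full stops
```

Marked f-numbers are rounded (F2.8 is really √8 ≈ 2.83), so the marked values give about 3.03 stops and the exact full-stop values give 3.00. The iPhone-versus-GH5 noise match itself is the author's observation and isn't something this computes.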