
Everything posted by kye

-

That was my impression from what he said. I'd imagine that Filmic Pro will provide an update that uses the full capability of the new ProRes Log mode, but I don't think it will provide any advantages over the BM app. TBH, if I owned Filmic Pro, I'd be sitting in a closed room with the smartest people in the company, scribbling furiously on a whiteboard and trying to work out how to stay relevant. My prediction: we'll see a torrent of YouTube videos sponsored by Filmic Pro as a last-ditch effort to keep revenue up (a huge advertising campaign is a standard sign that a company is in trouble).

I think you make a good point. The way I think about it is this. Your smartphone contains one (or more) small-sensor (just under 1"), fixed-lens (probably prime-lens) digital cameras, which record internally/externally to h264/h265/ProRes/RAW files. If you want to shoot deep-DoF videos with the available FOV in good light, and the codec is sufficient for you, then it's a solid choice. If you want to shoot with a different lens or a larger sensor, in very low-light conditions, or you need better image quality than it can provide, then it's not a good choice. This is the same for any camera. Name a camera and there are things it's not good for. The ARRI Alexa 65 is a terrible choice for skydiving, a smartphone is terrible for shallow-DoF projects, the RED V-Raptor XL is not suited to home or cat videos, an IMAX 70mm camera would be miserable for on-location night-time safari shoots, etc.

-

Ah, I just realised I misread your comment in my reply above as "the quality that the manufacturers keep in the drawer (through limiting their potential with too heavy-handed image processing and compression)". You are right, of course, especially considering that the main reason people keep cameras in a drawer is their limited technical specifications, when realistically people have just gotten used to the latest technologies. Most cameras we keep on shelves or in drawers are better than 16mm film, and 16mm was used to shoot all but the highest-budget TV shows, as well as a number of serious feature films, like Black Swan (2010), Clerks (1994), El Mariachi (1992), The Hurt Locker (2008), Moonrise Kingdom (2012), The Wrestler (2008), etc. I think the biggest problem is that people don't know how to colour grade, or don't know what is possible. I mean, anyone with a Blackmagic camera that shoots RAW has enough image quality to make a feature. Hell, if the movie Tangerine could be a success when shot on the iPhone 5S, then no-one has any excuse for not being able to write a movie that is within the creative limitations of their equipment. Even a shitty webcam could be used to shoot a found-footage horror movie set in the days of analog camcorders!

-

The Hawk "emulation" was simply two blur operations, each at a partial opacity. In Resolve:

- Node 1: Blur -> Blur tool at 0.53 with Key of 0.6
- Node 2: Blur -> Blur tool at 1.0 with Key of 0.35

The first one (0.53) is the small-radius blur that knocks the sharpening off the edges, and if you were using it on its own you might even want to push it closer to 90% opacity. The second one is a huge blur (1.0) that provides the halation over the whole image. I use the Resolve Blur tool because it's slightly faster than the Gaussian Blur OFX plugin on my laptop, but the OFX plugin allows much finer adjustments, so it might be easier to play with. You can also adjust the size of the blur and the opacity in the same panel, so it might be easier to get the look you want with it.

What are you grading? I'd be curious to see any examples if you're able to share 🙂
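To make the two-node recipe concrete, here is a minimal 1D sketch in Python of what "a blur at partial opacity" does to a hard edge. It assumes the Key value behaves as the node's opacity and models the Resolve Blur tool as a plain Gaussian. The pixel sigmas (1.0 and 20.0) are hypothetical stand-ins, since the Resolve slider values 0.53 and 1.0 don't map directly to a known pixel radius, and the function names are illustrative, not anything from Resolve.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1D Gaussian kernel covering +/- 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, sigma):
    """Gaussian blur of a 1D signal; samples past the ends repeat the edge value."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    return [
        sum(w * signal[min(max(i + k - radius, 0), n - 1)] for k, w in enumerate(kernel))
        for i in range(n)
    ]


def blur_node(signal, sigma, opacity):
    """One node: the blurred result mixed over the input at the given opacity (Key)."""
    blurred = blur(signal, sigma)
    return [(1 - opacity) * s + opacity * b for s, b in zip(signal, blurred)]


def hawk_style(signal, small_sigma=1.0, large_sigma=20.0):
    # Node 1: small blur at 60% to knock the sharpening off the edges
    step1 = blur_node(signal, small_sigma, 0.6)
    # Node 2: very large blur at 35% for an overall halation-like glow
    return blur_node(step1, large_sigma, 0.35)


# A hard black-to-white edge, like a crisply sharpened edge in the frame
edge = [0.0] * 50 + [1.0] * 50
soft = hawk_style(edge)
print([round(v, 3) for v in soft[44:56]])
```

The small blur softens the step itself, while the large low-opacity blur lifts the dark side and dims the bright side of the edge, which is where the glow comes from.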

-

Yeah, if you can bypass whatever the camera is doing and get the RAW straight off the sensor then it should be a good image. Sony know how to make sensors, and the FX3 shouldn't overheat...

From the Atomos page on the FX3: https://www.atomos.com/compatible-cameras/sony-fx3

I never hear people in the pro forums talking about Canon, only ARRI / RED / Venice. Gotta shoot fast and get that IMAX image!

Absolutely. It's one of the reasons I am so frustrated, especially as they've now "unlocked" this quality by bolting on an external recorder instead of just giving us better internal codecs. I mean, for goodness' sake, just give us an internal downscale to 2.8K ProRes HQ with a LOG curve and no other processing! Even the tiny smartphone sensors look great in RAW. Scale that up to MFT or S35 and imagine the quality we'd be getting from every camera!

My favourite WanderingDP video explains everything...

-

I'm curious to hear how it holds up on an IMAX screen too - keep us informed.

The link that @ntblowz shared has lots of info: "the filmmakers use the Atomos Ninja V+ as an onboard ProRes Raw recorder" and "75mm Kowa 2x anamorphic lens with a prototype of the Atlas Mercury 42mm as a backup for the small spaces where the 75mm was too tight".

TBH, the choice of the FX3 could have been as simple (and uninformed) as this: they were aware of ARRI, RED, and Sony (through the Venice), looked through those cine lineups to find the smallest cinema camera, and never evaluated Panasonic or Fuji because they simply weren't aware of them. Sometimes these industry heavyweights can be just as dogmatic about their favourite brand, and just as naive / hoodwinked by rumours / misunderstandings / marketing, as the worst camera fanboys/fangirls online.

-

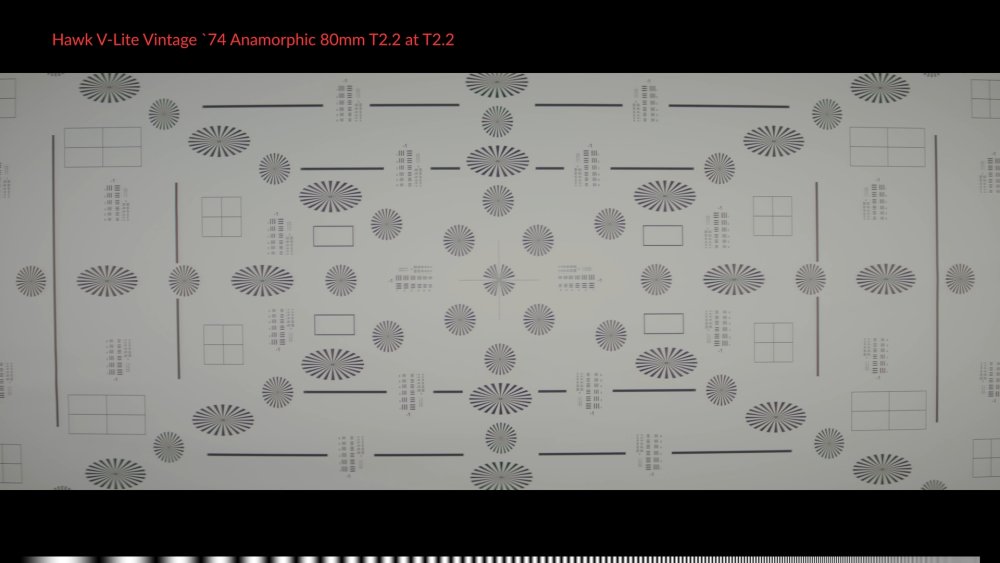

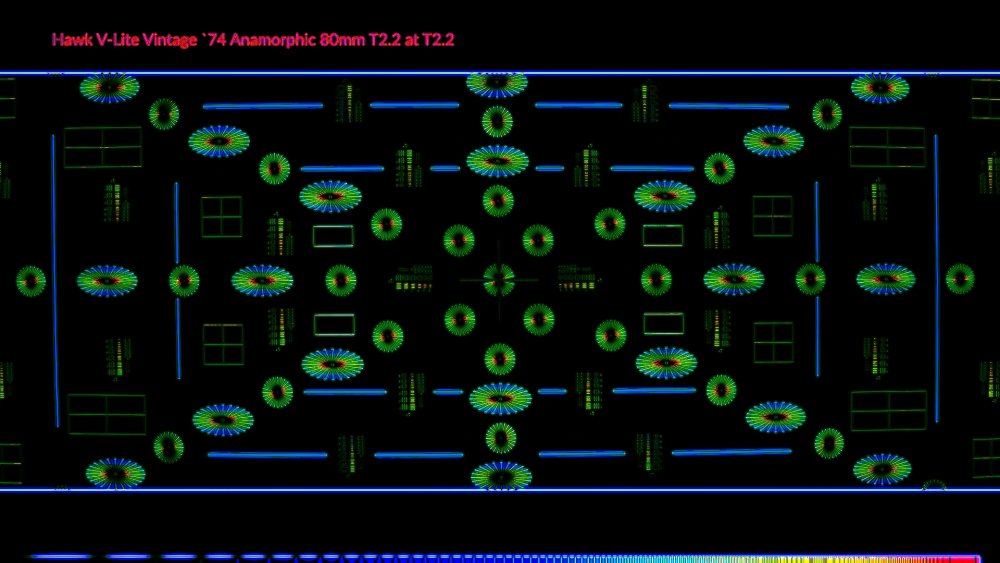

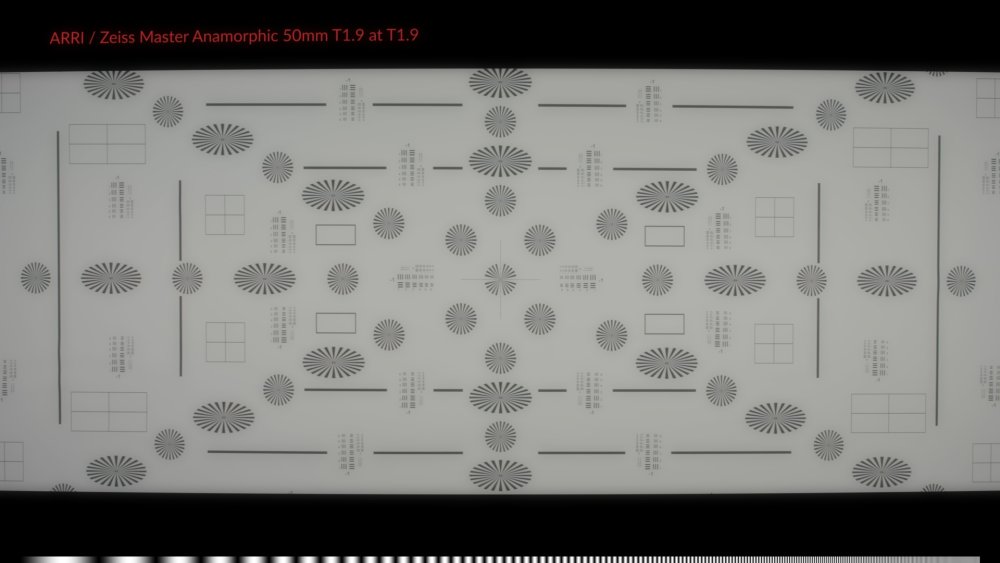

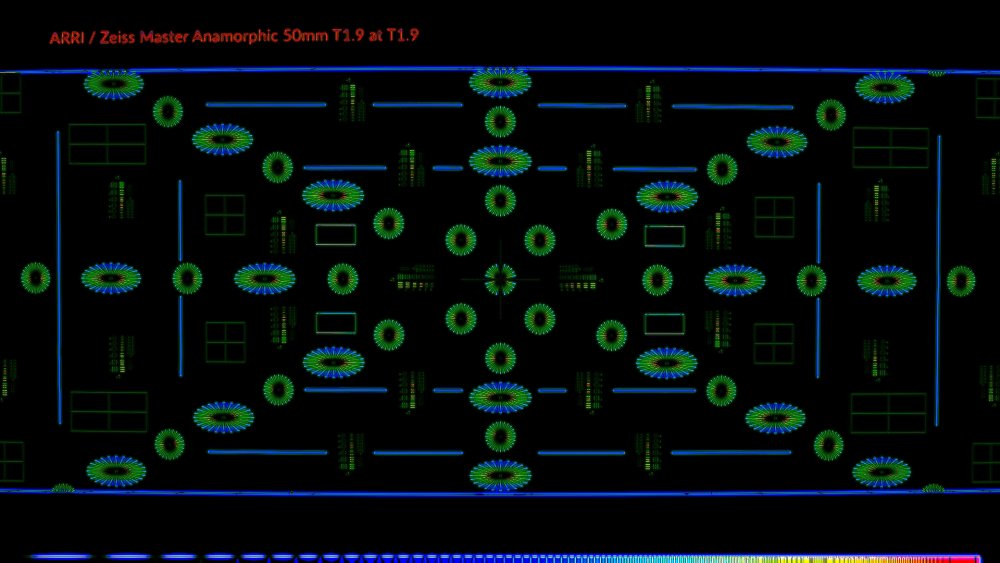

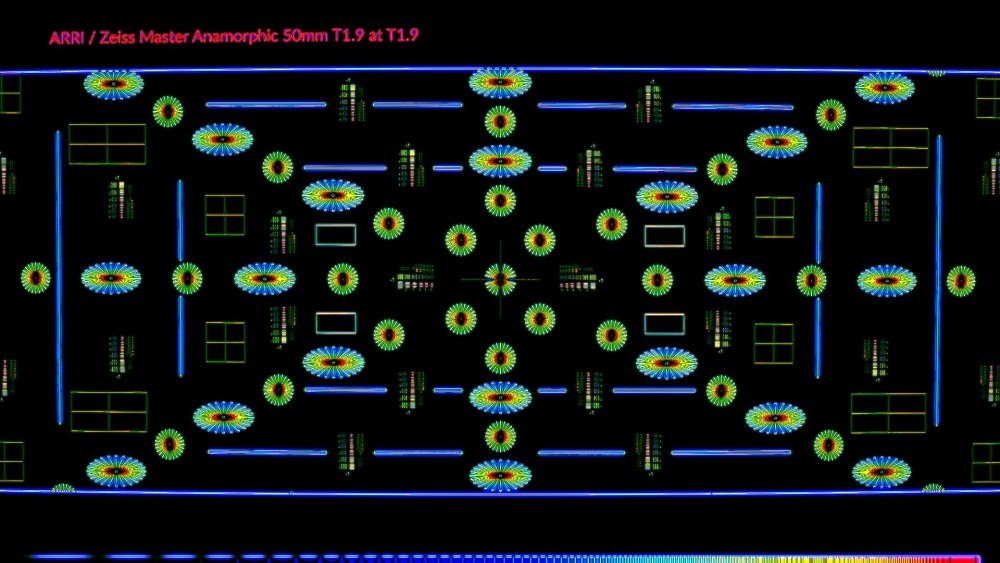

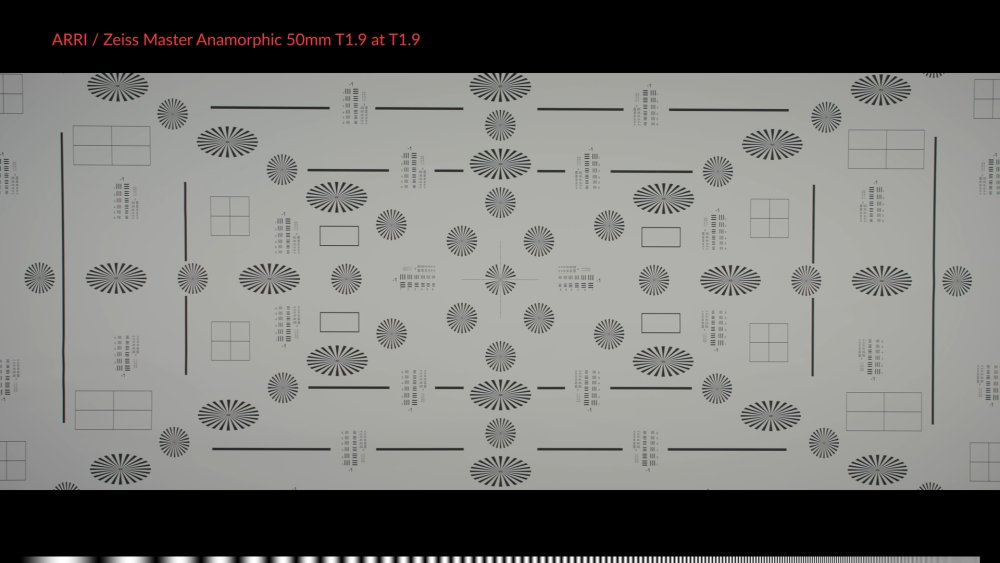

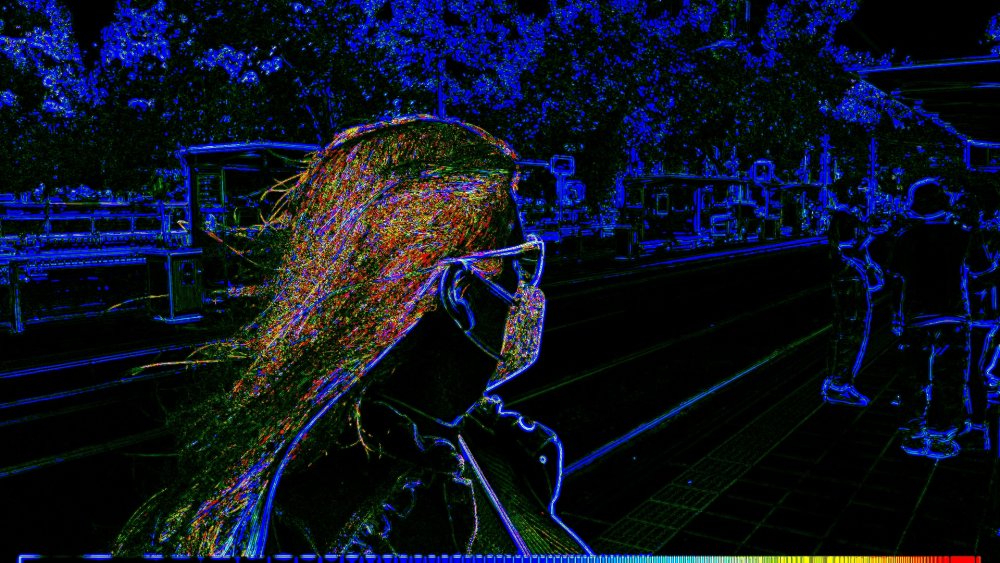

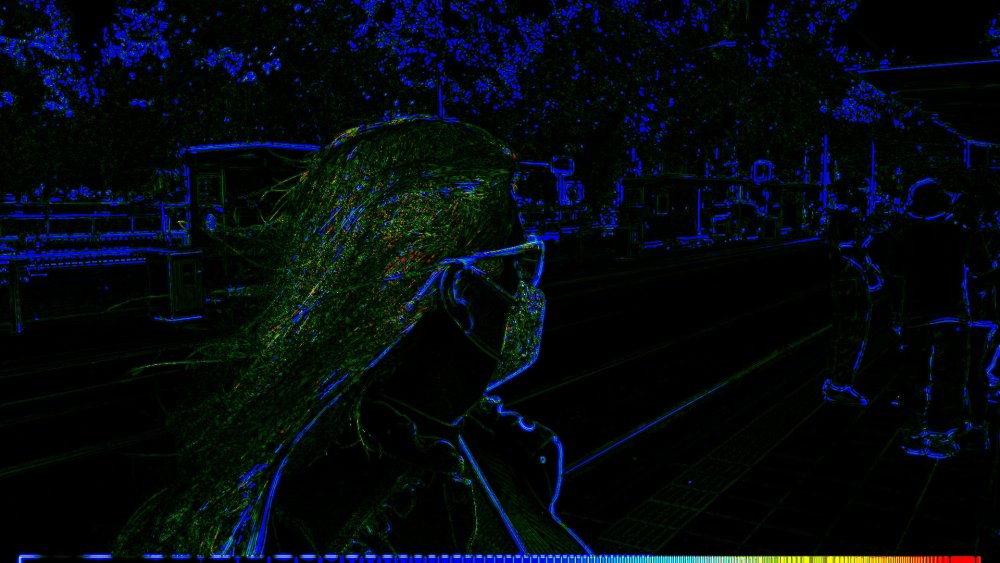

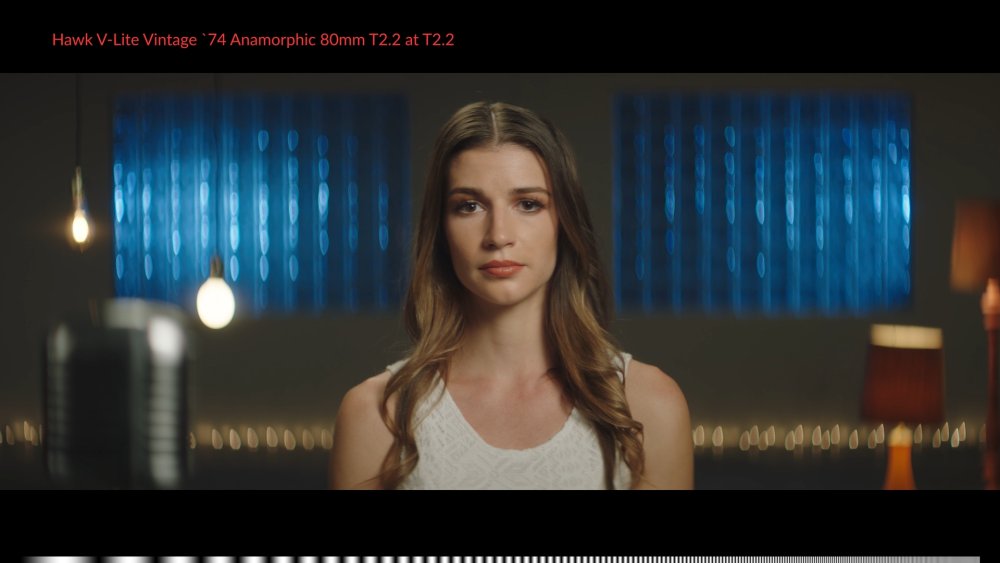

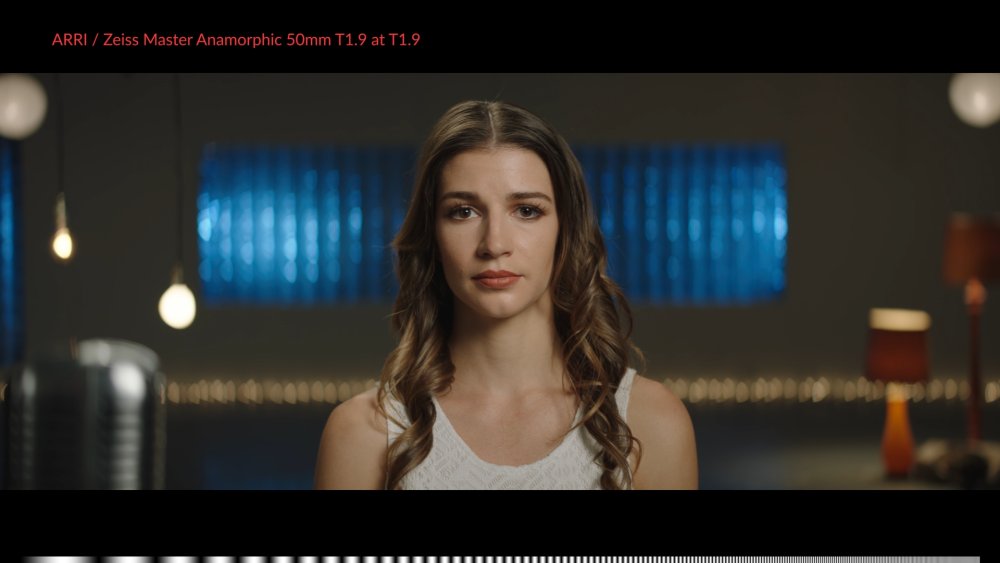

I've developed a more sophisticated "false sharpness" powergrade, but it was super tricky to get it to be sensitive enough to tell the difference between soft and sharp lenses (when no sharpening has been applied). Here are some test shots of a resolution test pattern through two lenses - the Master Anamorphics which are gloriously sharp, and the Hawk Vintage '74 lenses which are modern versions of a vintage anamorphic. ARRI ungraded with the false colour power-grade: Note that I've added a sine-wave along the very bottom that gets smaller towards the right, and acts as a scale to show what the false sharpness grade does. Here's the Hawk: and the Zeiss one with a couple of blur nodes to try and match the Hawk: Here's the same three again but without the false sharpness powergrade. Zeiss ungraded: Hawk ungraded: Zeiss graded to match the Hawk (I also added some lens distortion too): Interestingly, I had to add two different sized blurs at different opacities - a single one was either wrong with the fine detail or wrong on the larger details. The combination of two blurs was better, but still not great. I was wondering if a single blur would replicate the right shape for how various optical systems attenuate detail, and it seems that it doesn't. This is why I was sort of wanting a more sophisticated analysis tool, but I haven't found one yet, and TBH this is probably a whole world unto itself, and also, it's probably too detailed to matter if I'm just trying to cure the chronic digitalis of the iPhone and other digital cameras. ....and just for fun, here's the same iPhone shot from previously with the power-grade: If I apply the same blurs that I used to match the Zeiss to the Hawk, I get these: It's far too "dreamy" a look for my own work, but the Hawk lenses are pretty soft and diffused:
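A rough way to see why one blur can't match both fine and coarse detail is to do numerically what the sine-wave scale does visually: push sine waves of different periods through a chain of blur nodes and measure how much contrast survives. This is a sketch under assumptions (Gaussian blurs, opacity as a straight mix, 1D only), and the blur sizes and opacities below are hypothetical, not the values from my grade.

```python
import math


def blur(signal, sigma):
    """Gaussian blur of a 1D signal with edge samples repeated past the ends."""
    radius = max(1, math.ceil(3 * sigma))
    kernel = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(kernel)
    n = len(signal)
    return [
        sum(w * signal[min(max(i + k - radius, 0), n - 1)] for k, w in enumerate(kernel)) / total
        for i in range(n)
    ]


def apply_nodes(signal, nodes):
    """Apply a chain of (sigma, opacity) blur nodes, each mixed over its input."""
    out = signal
    for sigma, opacity in nodes:
        blurred = blur(out, sigma)
        out = [(1 - opacity) * s + opacity * b for s, b in zip(out, blurred)]
    return out


def surviving_contrast(period, nodes, length=400):
    """Fraction of a sine wave's peak-to-peak contrast left after the node chain."""
    wave = [0.5 + 0.5 * math.sin(2 * math.pi * x / period) for x in range(length)]
    out = apply_nodes(wave, nodes)
    lo, hi = length // 4, 3 * length // 4  # measure away from the clamped edges
    before = max(wave[lo:hi]) - min(wave[lo:hi])
    after = max(out[lo:hi]) - min(out[lo:hi])
    return after / before


single = [(2.0, 0.8)]                # hypothetical: one medium blur
double = [(1.0, 0.6), (20.0, 0.35)]  # hypothetical: small blur + huge blur
for period in (3, 6, 12, 24, 48, 96):
    print(period,
          round(surviving_contrast(period, single), 3),
          round(surviving_contrast(period, double), 3))
```

With these numbers, the single blur leaves coarse detail almost untouched and strongly attenuates fine detail, whereas the small-plus-huge combination takes some contrast out across the whole range, so the two curves differ in shape rather than just in strength.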

-

LOL, I've watched dozens of hours of "how to edit a wedding video" tutorials. They're very similar to my work in many ways: the footage is likely to be patchy, with random technical and practical issues to solve, and the target vibe is the same - happy, fond memories. BUT... I've never shot a wedding video, so I haven't taken the oath to keep all your secrets!!

-

I'm not sure what you're seeing, but there seem to be two things required.

The first is to get Resolve to not do anything to the footage automatically. IIRC you can do this by going to the clips in the Media tab and right-clicking on them; there's an option that is something like Bypass Colour Management. That should tell Resolve not to do anything automatically based on metadata in the clip.

The second is the conversion, which should just be a CST from the source space to the destination one. IIRC the video suggested it was rec2020/rec2100 HLG, so you should be able to do a CST from that to whatever LOG format you want to work in.

Keep in mind that you might want to do the CST at the start, to a common working colour space for all your media and cameras, so that any grades or presets you create will work the same on all footage from any camera. I use DI/DWG for this purpose. Then if you have a LUT that wants a specific colour space, you just do a CST from DI/DWG to that log space, put the LUT after it, and you should be good.

For example, the iPhone shots above had the following pipeline:

1. Convert to DI/DWG (I manually adjust the clips to 709 with a few adjustments and then use a CST from 709/2.4 to DI/DWG)
2. All my default nodes etc. are in DI/DWG
3. CST from DI/DWG to LogC/709
4. Resolve Film Look LUTs (mostly the Kodak 2383 one)
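For the HLG case, the first half of any CST out of rec2100 HLG is undoing the HLG transfer curve. As a sketch of that step only, here are the BT.2100 HLG OETF and its inverse in Python. It deliberately leaves out the gamut conversion from Rec.2020 primaries and any OOTF/display handling, which Resolve's CST takes care of for you, and it assumes the footage really is HLG, as suggested in the video.

```python
import math

# BT.2100 HLG constants
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # 0.55991073


def hlg_oetf(e):
    """Normalised scene-linear light [0, 1] -> HLG signal [0, 1]."""
    if e <= 1 / 12:
        return math.sqrt(3 * e)
    return A * math.log(12 * e - B) + C


def hlg_inverse_oetf(v):
    """HLG signal [0, 1] -> normalised scene-linear light [0, 1]."""
    if v <= 0.5:
        return v * v / 3
    return (math.exp((v - C) / A) + B) / 12


# Linearise a few HLG code values (the step before converting gamut and re-encoding to log)
for v in (0.1, 0.5, 0.75, 1.0):
    print(v, round(hlg_inverse_oetf(v), 5))
```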

-

The format of this video is a pretty common one, I think. My understanding of this style is this:

1. Go out and do something, and film what you can
2. Review the footage and "find the story"
3. Write and record a "piece to camera" (PTC) shot (https://en.wikipedia.org/wiki/Piece_to_camera)
4. Edit the PTC into a coherent story, focusing on the audio
5. Put the shots you recorded in (1) over the top of the PTC to hide your cuts
6. If there are still gaps in the edit, or it still doesn't work, record another PTC in front of the editing desk that explains or clarifies, and put that into the edit

I see these videos often, including the snippets of the person at the editing desk. Sometimes they have recorded a PTC so many times that the whole video is just a patchwork of clips from different times and locations, and you're not even sure how it was shot anymore. Casey Neistat used to film his videos so that each sentence, or even every few words, was recorded in a different location, so during the course of a sentence or two he'd have left his office, gone shopping, and returned home.

Here's a video I saw recently that has this find-it-in-the-edit format:

The above is an example of a video that was very challenging to make, which is why it required such a chaotic process, but it shows that if you are skilled enough in the edit you can pull almost anything together. Also, go subscribe to her channel - she's usually much more collected than in the above video! 🙂

Wedding videos often follow a similar pattern in the edit:

1. Find one or two nice things that got recorded (this is normally a speech from the reception, and perhaps the bride and groom reading out loud the letters they wrote to each other)
2. Edit these into a coherent audio edit (you literally just ignore the visuals and edit for audio only)
3. Put a montage of great shots from the day over the top, showing just enough footage from the audio so you know who is speaking
4. Put music in the background and in any gaps
5. Done!

When you say most other people film vlog-style with a phone and you want to take it up a notch, I'd suggest you try to do that just by filming with your camera on a tripod, but otherwise try to copy their format at first. Innovation is an iterative process, and the way they shoot and edit their videos likely has a number of hidden reasons behind it. Start by replicating their process (with a real camera on a tripod), and after you've made a few and gotten a feel for it, see how it went and what you can improve. The priority is the content and actually uploading, right? So focus on getting the videos out and then improve them once you get going. It's always tempting to think you can look in from the sidelines and improve things, but until you've actually done something you don't really understand it. Real innovation comes from having a deep understanding of the process and solving problems, or approaching it in a different way.

-

I've seen this recommended online elsewhere. Personally, I just shot a colour chart with the phone and made a curve to straighten out the greyscale patches, plus a bit of hue vs hue and hue vs sat to put the patches where they should be on the vectorscope. I tried using a CST and didn't like the results as much as my own version. After I did my conversion, my other test images all straightened out nicely and the footage was actually pretty straightforward to grade.
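The greyscale part of the chart fix above can be sketched as a piecewise-linear curve that maps each measured grey patch onto where it should sit. This is a minimal Python sketch: the patch values are hypothetical, it covers only the greyscale curve (not the hue vs hue or hue vs sat adjustments), and it assumes the measured values are all distinct.

```python
from bisect import bisect_right


def make_curve(measured, target):
    """Build a 1D correction curve from chart grey patches.

    measured: code values the camera recorded for each grey patch
    target:   values those patches should have
    Returns a function that maps any value through the piecewise-linear curve,
    holding flat beyond the first and last points.
    """
    points = sorted(zip(measured, target))
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]

    def curve(v):
        if v <= xs[0]:
            return ys[0]
        if v >= xs[-1]:
            return ys[-1]
        i = bisect_right(xs, v) - 1
        t = (v - xs[i]) / (xs[i + 1] - xs[i])
        return ys[i] + t * (ys[i + 1] - ys[i])

    return curve


# Hypothetical readings from the grey patches, anchored at black and white
measured = [0.0, 0.08, 0.21, 0.42, 0.66, 1.0]
target = [0.0, 0.05, 0.18, 0.40, 0.70, 1.0]
curve = make_curve(measured, target)
print([round(curve(v), 3) for v in (0.1, 0.3, 0.5, 0.8)])
```

A real grade would use a smooth curve rather than straight segments, but the idea is the same: every grey patch lands on its target and everything in between is interpolated.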

-

Another one to match the above with a slightly better matching scene (still not hard light though - the pollution in India is no joke!). Ref: iPhone grade: iPhone (ungraded): Ok, I'll shut up now.

-

Another one, this time a shot from one of the Jason Bourne films (I think the second one), which was shot on Kodak Vision 3 and printed on Kodak 2383. Reference image: iPhone grade: iPhone (ungraded): I'm not so happy with that one, but the subject matter was quite different, with the reference shot being in full sun and the iPhone image being overcast and containing a lot of different hues. The road in the reference image is asphalt and is slightly blue, whereas the "road" in the iPhone shot is actually tram tracks and concrete, not asphalt. Still, there was something in the green/magenta/yellow hues that I couldn't quite nail. Oh well. That's HDR iPhone images for you - you have no creative control over them. If only Apple had given me a slider for saturation, sharpening, and other controls, those would have matched the look of S35mm film and Cooke lenses perfectly 😉

-

A bit more playing around with what is possible from my HDR iPhone 12 images... Reference image from the movie Ava (2020): iPhone grade: iPhone (ungraded): It's not perfect, but without the two next to each other it's not terrible. I couldn't find what camera Ava was shot on, but I did find that it was shot with Panavision anamorphics. No doubt that is a contributing factor to why my iPhone shot doesn't match the exact look of the movie lol.

-

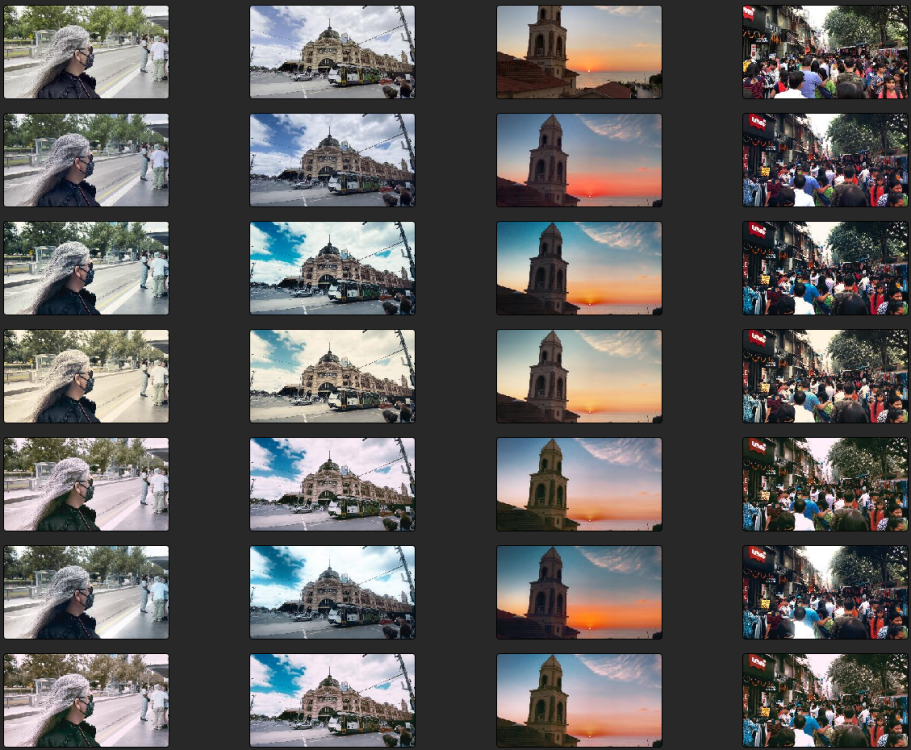

Here is a set of four shots from my iPhone 12 Mini that I have graded very quickly in a few different ways to give a sense of what is possible, and what "film looks" could be created. The first row has no grading applied, the second has my standard default iPhone input transform, and the rest are more creative grades, just pushing it around to create various looks. All these shots were taken on full-auto with the default iPhone app. Every shot in the same row has the same grade, including exposure, WB and everything, despite being shot on three different continents. All of these use only effects that are available in Resolve - no third-party plugins or LUTs or other YT influencer bullshit. You tell me - do I look like I am currently experiencing a complete lack of creative control over my images?

-

Remember before when I said that colour grading knowledge is lacking? This is a far deeper subject than I think you're aware of, and the comments from the guy in the video are so oversimplified that they're closer to being factually incorrect than they are to just being misleading. I've been studying the "video look" because I hate it and want to eliminate it as much as possible in my own work, and you're right that over processing images will contribute to this, however that's not what the guy was saying. He said that shooting HDR gives you no "creative control over the image", which is patently false because you can get it in post. When grading any footage, regardless of the camera, there are a number of standard operations you would apply to it, and you apply all the same treatments to LOG and HDR images. I have a testing timeline where I am developing my own colour grading tools and techniques, and it contains everything from HDR iPhone footage to 709-style GX85 footage, to HLG GH5 footage to LOG XC10 footage to RAW and Prores footage from the OG BMPCC and BMMCC, to ARRI and RED RAW 8K footage. I apply a custom transform to each of them to convert them into my working colour space (which is Davinci's LOG space) and then I apply a single default node tree to all clips, regardless of which camera they came from, and then I grade all the clips in one sitting. I have done this process dozens of times. The HDR profile from the iPhone is approximated pretty well by a rec2020 conversion. In that sense, it's in a colour space just like any other footage. Do I grade the iPhone footage "differently" to the other footage? Yes, and no. 
I still apply the same adjustments to each clip, adjusting things like:

- White balance
- Exposure and contrast
- Saturation
- Black levels and white levels
- Specific adjustments to things like skin tones, colour of foliage and grass, etc.
- Power windows to provide emphasis to the subject, usually lightening and adding contrast
- Removing troublesome areas in the frame, like anything that stands out and is distracting
- Texture adjustments like sharpening / softening / frequency separation
- Adding grain

That stuff all sits underneath an overall look, which will be based on a CST or a LUT, as well as up to a dozen or so specific adjustments which are too complex to explain in this post. Does the iPhone footage "feel" different when being graded? Sure, but the XC10, GH5 and OG BMPCC all feel more different from each other than the iPhone does. TBH it feels more in the middle than the other cameras, similar to the GH5 and GX85. Is it harder to grade because I'm having to overcome all the baked-in stuff? It's probably not as difficult to grade as the XC10 footage, and that's shot in C-Log, which is a proper professional LOG profile. The RAW stuff is easiest to grade. What you might not be aware of is that all the different forms of RAW also feel different. Different scenes feel different too, even from the same camera. Can you make iPhone footage look as nice as ARRI footage? No. Definitely not. But you won't be able to make the Apple LOG footage look like that either. Can you have "creative control over the image"? Absolutely. You have no idea how much control you can have over the image. Apple LOG does give you more "creative control over the image", but compared to the creative control you gain by even learning the basics of colour grading, the difference is minimal. The only people who have no "creative control over the image" are people who have no colour grading ability.
The ironic thing is that by applying a LUT designed by someone else, you have less effective creative control than you had before you applied it. Going back to the "video" look that over-processing the image gives, I have become very sensitive to it and see it online in almost all free content. It is present even in videos shot on high-end cinema cameras. The only places it is almost completely absent are high-end productions on streaming services and in the cinema.
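For the working colour space mentioned above (DaVinci's LOG space, i.e. DaVinci Intermediate), a sketch of its log encoding and decoding is below. The constants are as I recall them from Blackmagic's DaVinci Wide Gamut Intermediate documentation, so please verify them against that document before relying on them, and the gamut (DWG primaries) side of the transform is not included. The point it illustrates is that once every camera has been converted into the same encoding, 18% grey lands at the same code value (roughly 0.336) no matter which camera it came from, which is what lets one default node tree behave consistently across all clips.

```python
import math

# DaVinci Intermediate constants (assumed from Blackmagic's DaVinci Wide Gamut
# Intermediate documentation; double-check against the current document)
DI_A = 0.0075
DI_B = 7.0
DI_C = 0.07329248
DI_M = 10.44426855
DI_LIN_CUT = 0.00262409
DI_LOG_CUT = 0.02740668


def di_encode(x):
    """Scene-linear value -> DaVinci Intermediate log code value."""
    if x <= DI_LIN_CUT:
        return x * DI_M  # linear toe near black
    return (math.log2(x + DI_A) + DI_B) * DI_C


def di_decode(y):
    """DaVinci Intermediate log code value -> scene-linear value."""
    if y <= DI_LOG_CUT:
        return y / DI_M
    return 2 ** (y / DI_C - DI_B) - DI_A


# Mid grey from any camera, once linearised, ends up at the same code value
print(round(di_encode(0.18), 3))
```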

-

Ah, now I understand... He's a LUT peddler! This is an AD!! He doesn't want you to settle for the baked-in look from Apple that gives you no "creative control over the image" - he wants you to buy his LUT and the fact that it gives you no "creative control over the image" doesn't matter - he has money in his pocket so it's ok! Designing LUTs is hard and lots of skills are required - if he can't grade HDR footage then he falls well short of any standard.

-

What an amazing statement... at around 46s he says "by shooting in normal HDR video mode I am sacrificing all the creative control I have over the image". HA! Does Resolve disable the Colour tab when you use these files? Does FCPX or PP disable their colour tools? Does a hitman from Apple appear behind you in your editing suite and put a gun to your head when you pull up an HDR shot in your colour editing tools? What a muppet!!

-

Great stuff, this is exactly what I meant, and it's the kind of learning you'll get from actually trying things. Sadly, many people online will use endless excuses to avoid actually trying things. One thing you might struggle with is how much effort you put into the video-making side of things. It's well known that if you're going to video yourself doing something then it takes twice as long, or more(!), and I regularly hear people saying "Sorry, I didn't film the assembly process because I had a deadline, but here are some finished shots", so it's definitely a compromise. I'd suggest you crank up the ISO and try to make and edit a test video, as this will show you what kind of light levels you need. You might find you need an F2 lens at ISO 3200, or you might find you need an F1.4 lens at ISO 25,000. You might also find that a certain level of noise in the footage is OK. All of that will require testing, and will obviously inform your equipment choices if you end up having to buy something. Also, you might try to make a video without buying a new camera or lens and see how that works. Maybe you just omit the shots where you're in full darkness? Maybe you can film shots of yourself setting things up with a small light on, and then the parts of the video that happen in darkness are just a slideshow of your photos with a voice-over? It's worth trying different formats. What do other people making the same type of video show in their videos? Ben Horne is a large format stills photographer who makes great YT videos (and spectacular images) and vlogs his trips, which often involve him filming bits of the vlog in darkness. From memory the shots are often: him getting stuff ready from the boot of his car (which is lit by a small light), him walking to the location, filmed hand-held and lit with a head-lamp, etc.
He's been making those trip vlog videos for many years now so it might be worth watching a few to see how he does it: https://www.youtube.com/@BenHorne/videos I think it's really just a matter of trying things and learning and adapting. The trick is to arrive at a workable setup without having to have gone down too many dead-ends that required huge equipment purchases first. I've done that - to get to where I am now I have probably spent 10k on things I tried but no longer use or need.
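The F2/ISO 3200 versus F1.4/ISO 25,000 comparison above is easier to reason about in stops. Here is a small Python helper for that arithmetic, assuming the shutter speed stays the same and treating f-numbers and ISO as ideal (real lenses have T-stop losses). The function name is just for illustration.

```python
import math


def extra_stops(f_from, iso_from, f_to, iso_to):
    """Stops of extra exposure gained by moving from one setup to another.

    Assumes the same shutter speed/angle for both setups. Each extra stop means
    the scene can be half as bright for the same exposure.
    """
    aperture_stops = 2 * math.log2(f_from / f_to)  # light gathered ~ 1 / N^2
    iso_stops = math.log2(iso_to / iso_from)
    return aperture_stops + iso_stops


stops = extra_stops(2.0, 3200, 1.4, 25000)
print(f"{stops:.2f} stops, i.e. about {2 ** stops:.0f}x less light needed")
```

For the two setups above it comes out at about 4 stops, so the second setup copes with a scene roughly 16 times darker, which is a big difference in what you'd need to buy.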

-

I have, and you're right that it's less sharpened than the 16:9 modes. I'm still trying to work out what is what, but my current thinking is:

- Analog has decreasing contrast on fine detail
- Unprocessed RAW digital has approximately no (or very little) lowering of contrast on fine detail
- Processed digital has rising contrast on fine detail

By this measure, even if there was no sharpening on it (there is, it's just less), it would still look digital; it would just look less digital than the over-sharpened stuff. I've been paying attention to people's hair while watching TV and movies over the last few weeks, especially where there is edge lighting, and most of the material shot with high-end equipment has significantly less contrast on fine detail than the test videos published by the camera and lens manufacturers. I suspect this is deliberate, which would mean that even shooting digital requires some correction in order not to look digital. The ARRI videos demonstrating their lens lineup look quite digital to me, and not in a good way - this might be why.
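The three categories above can be sketched as contrast-versus-detail curves (a simplified MTF). This Python sketch assumes analog softness behaves like a Gaussian blur and models in-camera processing as a basic unsharp mask; real camera sharpening is proprietary and more complicated, and the sigma and amount values are hypothetical.

```python
import math


def gaussian_response(f, sigma):
    """Contrast kept at spatial frequency f (cycles/pixel) by a Gaussian blur of width sigma (pixels)."""
    return math.exp(-2 * (math.pi * sigma * f) ** 2)


def analog_like(f, sigma=1.0):
    # Decreasing contrast on fine detail (modelled as Gaussian softness)
    return gaussian_response(f, sigma)


def raw_like(f):
    # Approximately flat: no extra loss or gain on fine detail
    return 1.0


def processed_like(f, amount=0.8, sigma=1.0):
    # Unsharp mask: out = in + amount * (in - blur(in)), so the gain rises with f
    return 1.0 + amount * (1.0 - gaussian_response(f, sigma))


for f in (0.02, 0.05, 0.1, 0.2, 0.4):  # 0.5 cycles/pixel is the Nyquist limit
    print(f"{f:4.2f}  analog {analog_like(f):.2f}  raw {raw_like(f):.2f}  "
          f"processed {processed_like(f):.2f}")
```

Coarse detail (low f) is treated almost identically by all three; the difference is entirely in the fine detail, which is why it shows up in things like edge-lit hair.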

-

As is the entire YT channel of WanderingDP, a real-life working pro breaking down commercials. He breaks down the composition and lighting, but also the shooting logistics, like when you'd schedule different shots at different times of day, and gives advice on how to be more efficient on set etc. Plus he's hugely sarcastic and his videos are often hilarious... https://www.youtube.com/@wanderingdp/videos

-

Would it be better if I called it "unsharpen" ? 🙂

-

This is why I have swapped to the GX85 from the GH5 - the image quality from the GX85 isn't as good as the GH5 but the footage is better because the people in the frame are less aware of the camera. What is the workflow? That video shows the guy grading in Lightroom and then later on in Resolve, which seems rather odd. Can Resolve open the DNGs? People thought the earth was flat, and even now despite mountains of evidence some people still do. Popularity is a pretty poor way to judge what is true. The answer might just be a simple blur. Spoiler alert: I'm even wondering if over-sharpening in-camera might be a positive thing. More on this as my thoughts develop!

-

Looking at those stills close up, they seem to have a nice noise structure which is actually quite filmic / organic. You can definitely tell it's RAW and not sharpened in-camera. I had the same experience with my Canon 700D when I installed Magic Lantern. The compressed modes were awful because the sensor noise (of which there was a lot) looked terrible when compressed, but in RAW it had a rather pleasing aesthetic.

-

NASA!! Wow! Great stuff!

In terms of documenting what you do, could you perhaps give us some more detail about what you're hoping to do (if you have something specific in mind)? From a technical point of view, the thing that immediately comes to mind is low-light performance - how much will you be shooting after sunset with lights, and how much would you shoot in the dark?

From a process point of view, I'd suggest the following:

1. If you know what you want the work to look like, then doing some analysis would help. Make a list of the types of shots and setups you'd need, then work out what equipment you'd need for each
2. Just try shooting videos. Don't expect to post the first one, or even the first few - they're just test shoots designed to help you figure out what equipment is missing, what shots work, what shots don't work, how to edit, what to say, etc. Essentially, just keep trying to make a video and making mistakes until you manage to get things sorted enough to actually finish the video
3. Post it. It will probably be rather clumsy, but if you go back to the first videos that anyone posts online, they always are. We learn by doing, so just keep making them and keep experimenting and learning and posting 🙂

-

Obviously. No-one was saying that though.