-

Posts

7,835 -


Everything posted by kye

-

Lots of cameras have a wifi function that will display a live preview of the image. I've used the GoPro one, the Panasonic one, Sony X3000 one, Canon one, etc. Do you have an older spare camera? It might have one. Things like Ring are all-in-one solutions that work via the cloud, but the Ring one requires a paid plan, and the quality is pretty low because it's going via the internet and the motion events are being stored on their servers etc. Here's the legacy Panasonic app - it works with a million of their older camera models, some of which are probably going for almost nothing on eBay: https://av.jpn.support.panasonic.com/support/global/cs/soft/image_app/index.html

-

You should be able to use any camera that has a video out and a power in connection. The front door camera that Casey Neistat used in his studio was just a GoPro that was powered via USB and connected to a TV. Surely you'd have an old camera around that has these two connections?

-

I played with the Ptools hack but could never get it working on my GF3. Unfortunately lots of the information online seems to have been lost and the things I did find took a good deal of searching, reading entire threads and following all links etc. The GF3 would be spectacular with a bit-rate bump - 17Mbps wasn't really enough for 1080p.

For anyone reading that hasn't seen them, here's a few from the mighty GF3:

and here's a random comparison of JPG vs Video with a few lenses / filters:

Looking back on the above, I remember the difference in detail being a lot more between the JPGs and the video - maybe the 1080p YT compression really softened it.

I've done a lot more videos with it, both tests as well as real edits, and my conclusions were that:

- If you use it without a stabilised lens or with any lens that isn't razor sharp then it's only good for a Super-8 style final product due to the soft rendering and micro-jitters
- If you use it in mixed lighting or with low-CRI lighting then you're going to have a very bad time colour correcting in post
- If you use it in any sort of low-light situation then it might not even get up to Super-8 level of video AND you're going to have a bad time in post
- DAMN IT'S SMALL!!!!

When paired with the Olympus 15mm F8 body cap lens it is almost as pocketable as a smartphone, and if used outside when the sun is up, it'll do a great job of Super-8 style videos. It is usable with the 12-35/2.8 lens, but if you're going to have a setup that large, you may as well take the GX85 or P2K.

Here's a shot of some of my collection - the GF3 is the second smallest, only larger than the mighty (pink) J20 that was what I entered into the camera challenge. It was great looking back over these GF3 videos - fun times!

-

You're right about you needing the 300/2.8 to get some background defocus for those shots, but it really depends on the subject distances involved. Your shots are of a sports ground that is absolutely enormous when compared with a lot of other very common sports grounds, like basketball, various forms of football, lacrosse, etc. My sports photography was on Australian Football fields, which seem to be significantly larger than almost any other sports field, so I struggled with lens reach and had issues manually focusing etc. This isn't always the case - if you're shooting a basketball game then it's a whole other ballgame....(sorry - couldn't resist!)

-

A lack of glass is definitely a problem. It would be a move that relied on there being more over time, that's for sure. Re-thinking about it now, it would make more sense for them to make a camera with interchangeable mounts, and make ones for EF, PL, and whatever else they could make work. As you said, it's unlikely to be a FF pocket, it would be a FF Ursa and I would think it would be their equivalent of the Alexa 65. In cinema circles, S35 is regarded as standard and FF is regarded as "larger than standard" which is why ARRI called it the Mini LF even though the sensor was basically FF.

I don't know how much a rumour like this would be true either. If you spend any time on the BM forums, the number of rabid camera bros becomes obvious and every move that BM makes is answered with hundreds of comments asking for everything under the sun as the next upgrade. I've seen people ask for the pocket cameras to get IBIS via a firmware upgrade! So yeah, is there a rumour that BM will go FF with L-mount? Probably. Is there a rumour that BM will go FF with 17 stops of DR and IBIS and DPAF and a built in AI drone? Probably!

My understanding is that they want to create and control the full pipeline - to have a complete ecosystem. If you run a YT channel then you can easily do this, just buy a P4K and use Resolve. I don't know enough about running a TV studio but from the outside it looks like you could set one up using all BM equipment. In all the BTS stuff I've seen for YT streamers it's normally the BM ATEM switchers they use to manage sharing their screen, one or more cameras, external sound, monitoring, and any graphics. They have studio only cameras, etc. They even have a film scanner! From this perspective, the more people using BRAW the better.

Something you may not be aware of, Resolve supports almost all professional and broadcast codecs except Prores RAW. So BM and Apple have extended the FCPX vs Resolve competition into having dedicated input formats.
This is another sign it's about ecosystems. BM continue to support entry-level cameras like the P4K but are gradually extending up into the high-end cinema line with the UMP12K (and the just-released UMP12K OLPF). The P4K is for making sure that people start with BM in film-school and the UMP12K OLPF is for making sure that your BM user-base doesn't jump ship when they get a big budget.

-

I guess if I look at it from the perspective of BM then perhaps it makes slightly more sense. BM has made cameras with S16 and MFT sensors and they used the MFT mount, and they made cameras with S35 sensors and used the EF mount. Assuming they then wanted to make a FF camera, what mount would they choose?

- EF mount: They have used it before, and their users already have lenses that use it, but the crop factor would change between the S35 and FF sensors, and the EF mount has pretty much been abandoned by Canon, so maybe BM want something that's still in active support
- RF mount: Canon have been quite restrictive with third-party use of the mount, so maybe Canon is blocking BM from licensing it, or maybe it's prohibitively expensive, or maybe the flange distance is too little for things like internal NDs
- PL mount: Seems like a logical choice with lots of existing lenses and support from other manufacturers, but maybe it's a step too far for their existing customer base, or maybe they want AF support (does PL support AF?)
- Nikon mounts: Not a lot of cine lenses for Nikon I wouldn't have thought, focus direction is the other way to EF lenses, which might be troublesome to their existing customers
- Fujifilm X-mount: No AF lenses available that cover FF and only 5 third-party lenses that do (on B&H)
- MFT mount: Wouldn't cover FF sensor
- Sony: A logical choice, but like Canon RF, Sony might not want to help BM compete with their cine-cameras so might be charging a lot for the license or might be refusing outright

From this perspective I think L-mount makes more sense, and sort-of aligns with their previous use of MFT and EF mounts, which were both "semi-open" systems with lots of existing glass from original and third-parties.

-

Internal BRAW would be a big draw-card for L-mount folks. I don't know how large the market would be for Panasonic L-mount users to get a BM camera though. While such a move would of course get them internal BRAW, it would also sacrifice lots of things such as IBIS, AF, etc. It's easy to see that BM and Panasonic cameras are often similar in price, sensor size, resolution, etc, but the differences aren't just in specs, they really represent a fundamentally different approach to shooting - Panasonic make hybrid MILCs and BM make cinema cameras. To me, the GH5 and BMPCC4K are just as different from each other as the GX85 and OG BMPCC are from each other, which is to say they're a night-and-day difference in what I can shoot, how fast I can shoot, etc etc. The answer to that would be to make the new BM FF camera a FF PDAF IBIS sensor, but that's not going to happen. BM might implement PDAF but there's no way that they would put IBIS in there - they're too focused on the cinema market for such a thing. It does make sense for them to realise a FF L-mount camera though. But it'll be their flagship and will cost you!

-

Or smaller sensors, deeper DoF capabilities, and worse low-light performance, but greatly reduced size and weight.

-

There is a huge difference between the RAW and video from my GF3, I would guess other models are likely the same. I don't take stills anymore, except timelapses and for that I shoot jpg for ease of editing in post. Before I went to video, I shot a few trips with the GF3 in RAW and was really happy with the images.

-

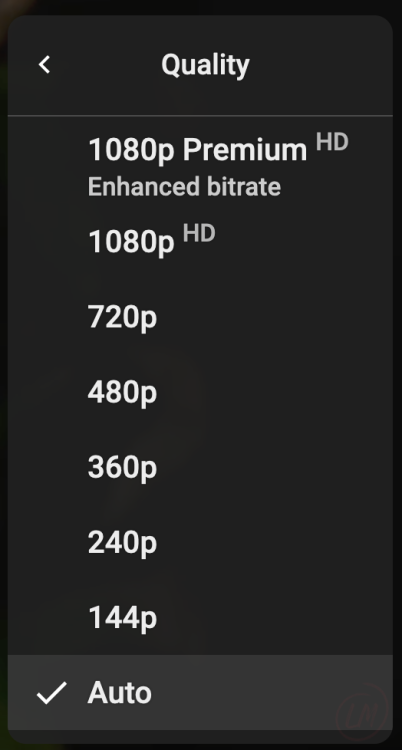

It appears to only be on videos uploaded at 1080p, not those with 1440 or 4K etc. I could also be in a test group potentially.. Wow, the option has now disappeared for me! It was on a bunch of videos I checked, but I remember specifically that it was on this one. Have a look for yourself: Maybe my feedback got me kicked out of the test group lol.

-

For our personal lives, we really only need food, clothing and shelter. We got by just fine even before we had language, so I'm pretty sure that there is no minimum number of megapixels in that equation... But, assuming that you're asking if you can print things and enjoy them, then I'd say there's no lower limit except what limits you would have to your own enjoyment. After all, mosaics have been a form of art for thousands of years and no-one has started complaining that they don't look photorealistic, and people aren't complaining about paintings not being photorealistic either.

It's controversial, within online photography communities anyway, but here are Ken Rockwell's thoughts on the matter: https://www.kenrockwell.com/tech/mpmyth.htm

A few highlights include the idea that the larger you print something, the further you tend to stand from it:

"Today, even the cheapest cameras have at least 5 or 6 MP, which enough for any size print. How? Simple: when you print three-feet (1m) wide, you stand further back. Print a billboard, and you stand 100 feet back. 6MP is plenty."

and also practical experience:

"Even when megapixels mattered, there was little visible difference between cameras with seemingly different ratings. For instance, a 3 MP camera pretty much looks the same as a 6 MP camera, even when blown up to 12 x 18" (30x50cm)! I know because I've done this. Have you? NY Times tech writer David Pogue did this here and here and saw the same thing — nothing! Joe Holmes' limited-edition 13 x 19" prints of his American Museum of Natural History series sell at Manhattan's Jen Bekman Gallery for $650 each. They're made on a 6MP D70."

I would argue that what is visible will depend on the printing technology you use. A canvas print will hide any blurriness or detail that is in the image, but a metal print will accentuate it.
I have visited a house with a couple of large metal prints (maybe 3' x 5') of very high resolution shots of a rainforest with deep DoF and dappled light filtering down to the ferns and rocks by a stream. The resolution was palpable, so much so that both myself and the other non-photography people were all impressed by the print. The discussion included 'oohs and aahs' around how much it cost, how difficult it was to hang, how they had to get little custom lights to shine on it like in an art gallery, how impressive it was, etc. What was missing, however, was any discussion or appreciation of the image. All discussion was of the technology. The sharpness was distracting.

-

12MP is 4K in 4:3... with a good enough codec, that's 1.5K more than most people need!! The OG Alexa was 2.5K and most cameras these days still can't create an image as good....
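For anyone who wants to check the arithmetic behind "12MP is 4K in 4:3", here's a minimal sketch. The function name is made up for illustration, and it assumes square pixels and a nominal pixel count of exactly 12,000,000 (real sensors vary a little either side).

```python
import math


def dimensions_for_megapixels(megapixels, aspect_w, aspect_h):
    """Return (width, height) in pixels for a given pixel count and aspect ratio.

    Assumes square pixels and a nominal count of megapixels * 1,000,000.
    """
    total_pixels = megapixels * 1_000_000
    # width * height = total and width / height = aspect_w / aspect_h,
    # so height = sqrt(total * aspect_h / aspect_w).
    height = math.sqrt(total_pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    return round(width), round(height)


# A nominal 12MP sensor in 4:3
print(dimensions_for_megapixels(12, 4, 3))
```

So a 12MP 4:3 frame is about 4000 pixels wide, i.e. "4K" across, against the roughly 2.5K of the OG Alexa mentioned above.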

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

Also worth pointing out (and you may already know this) but the IS Boost mode does a spectacular job of shots with gradual movement. I've used it to get buttery smooth pans before. The feeling is kind of like moving through treacle, you have to pull the camera's image where you want it to go but it's super smooth.

-

I can understand that it's an emotional thing, but I feel I have moved past attachment to any particular format or technology, and have become focused on the experience and the outputs. MFT is great, I have 5 MFT cameras and some really nice lenses, but it's a means to an end. I recently discovered I prefer the size of the GX85 to the GH5, and happily took the loss in image quality for the benefits in the shooting experience. If someone created a camera that was slightly smaller than the GX85 and had equal or better image quality and features, I'd consider switching, not giving the slightest consideration if the sensor was 1/2.5" or Medium Format. The S5 is a great camera, take lots of great images and enjoy it! Enjoy the images you captured with the MFT gear too. Cameras matter, but the better you become at using them, the less they restrict the quality of the work.

-

In terms of assessing the maximum size for printing, that's another whole debate, but a way to test it is to pull the image up on a 4K display, zoom in until you start seeing the video compression artefacts, back the zoom off a bit, and then measure how large the whole image would be at that magnification - that's roughly your maximum print size. Remember also that the larger you print an image the further back you will tend to view it from. A friend of mine won a certificate and got one of those canvas prints about 2' x 2' across. She sent in an early iPhone photo that was a close-up of one of her kids' faces (it was a lovely photo) and the printing place said it wasn't "high enough quality" (read: not enough megapixels) and she had to insist they print it. She hung it high up on their photo wall and due to how the furniture was arranged you could see it throughout their open-plan living/dining/kitchen area, but you wouldn't have looked at it from closer than about 5' away, and even then you'd be looking up at it significantly. It looked great. Had it been taken with a high megapixel camera would it have been sharper? Sure. Would she have ever been able to afford a high megapixel camera? No. Would you ever take that kind of photo with a "real" camera? Unlikely.
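If you'd rather calculate than hold a ruler up to the monitor, the zoom test above can be roughed out like this. It's only a sketch under assumptions: the function names are made up, the 27-inch 4K monitor and 200% zoom are example numbers, and "zoom" here means display pixels per image pixel at the point just before artefacts become visible.

```python
import math


def display_ppi(h_pixels, v_pixels, diagonal_inches):
    """Pixels per inch of a display, from its resolution and diagonal size."""
    return math.hypot(h_pixels, v_pixels) / diagonal_inches


def max_print_width_inches(image_width_px, zoom, ppi):
    """Width the whole image would occupy if shown at this zoom on this display.

    zoom is display pixels per image pixel (1.0 = 100%, 2.0 = 200%).
    """
    return image_width_px * zoom / ppi


# Example numbers only: a 3840-pixel-wide frame, viewed on a 27" 4K monitor,
# that still looks fine at 200% zoom.
ppi = display_ppi(3840, 2160, 27)
print(round(max_print_width_inches(3840, 2.0, ppi), 1), "inches wide")
```

This only answers the question for the viewing distance you were sitting at when you judged the zoom; stand further back from the print and you can go larger.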

-

It should be pointed out that it is not mandatory to have a short shutter speed for photos. Let's review two examples. The first is this guy, who is obviously playing at an extreme level here, with huge energy and drive: It is an incredible photo, there's no doubt. But does it convey the sense that he's pushing himself to the limit? The more I look at it the more it looks like it could be a still life, maybe he was on wires and it's a setup. In a sense, it implies motion but doesn't actually express any. Contrast that feeling to this image: There is no denying this. Not only is it a great photo, and not only does it show that world-class people are pushing themselves to the limit (the three guys on the right definitely are!) but it shows the results of that effort. Now scroll back up to the first image - what is the energy level of the first image now? I would suggest that the obsession with short shutter speeds is part of the same obsession with getting "sharp" images, which is driving lenses to be clinical, sensors to be enormous, megapixels to be endless, computers to be behemoths, and images to be soul-less. To be a bit practical, there are likely limits to how much blur you want in a scene, and it's relative to the amount of motion involved, which often varies quite significantly, even from moment to moment in a lot of sports. Some more examples of varying amounts of blur.. This one is a great image, but if it was important who the defender was then it's too blurry. If not, then maybe not.. Sometimes it's not important to get anything completely sharp.. images without feeling are of limited value, and I find that motion is full of feeling. Here's one that is full of emotion (especially if you knew the subjects): Also worth mentioning is that you don't always have to have the subject still and the background blurred, it can be the other way around, but it changes the subject of the image somewhat. 
And with video you're taking lots and lots of photos so the creativity is endless... Remember - don't let the technical aspects blind you to the point of capturing things in the first place.

-

I did a "Send Feedback", which thanks to the ability to attach a screenshot was pretty straightforward in showing the issue. Interestingly, there's no ticket number or anything - you pitch your feedback into the void and that's it apparently. Normally being a paying customer buys you some kind of human support, but I guess not with YT? Or maybe not for me as I'm in the family account but I'm not the account that paid for it.

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

Yeah, that guy isn't the most thorough reviewer, but of course he's probably a better barometer of what the YT Camera Bros think of it than someone like Gerald.

-

It seems that it's only being tested on web, and that it still needs more work... I click on it, it says I need Premium membership (which is odd considering I'm logged in and the YT logo says "Premium"), then when I click on the "Get Premium" button it takes me to a page that says the offer isn't available and I need to go into settings to manage my subscriptions and purchases. I'm part of a YT Premium Family Sharing account, so maybe that's the issue.

-

Just saw a new option appear in YT.... Some quick googling revealed that it's only for YouTube Premium (paid) members, which I am. Anyone else seen this? Do we know what is going on under the hood?

-

Panasonic S5 II (What does Panasonic have up their sleeve?)

kye replied to newfoundmass's topic in Cameras

The few YT camera hipsters I've seen review it had positive things to say... TL;DR:

- Stabilisation - "gone are the days of needing a gimbal"
- Colours and DR - "Super easy to handle" "easy to colour grade and get a nice aesthetic" (buy my LUT pack)
- AF - "GH5 was terrible" "S5 is so reliable" "it sticks onto him the whole time" "the AF is unbelievable"
- SSD recording - "prores is easy to work with" "speed up your workflow"

"This is one of the sexiest looking cameras out there" "I would highly recommend checking it out".

-

Help me on an eBay hunt for 4K under $200 - Is it possible?

kye replied to Andrew Reid's topic in Cameras

Are you perhaps thinking of the thread where we posted our submissions to the EOSHD $200 camera challenge? or the thread with the results?

Submission thread:

Voting / results / discussion thread:

I love that my butchered GoPro is the thumbnail of that thread! Not sure if everyone else would see the same shot, but I'm pretty sure that the camera gods would be happy knowing it died trying to be converted to a D-mount camera.

-

I agree, zooming definitely increases the visibility of the noise in the image. One thing I think worth remembering is that cinema cameras have traditionally been quite noisy and noise reduction used to be a standard first processing step in all colour grading workflows. It is only with the huge advancements in low-noise sensors, which allowed the incredible low-light performance that high-end hybrid cameras have today, that this noise has been reduced and people have gotten used to seeing clean images come straight from the camera. I just watched this video recently, and it is a rare example of high-quality ungraded footage zoomed right into. He doesn't mention what camera the footage is from, but Cullen is a real colourist (not just someone who pretends to be one on YT), so the footage is likely from a reputable source. It's also worth mentioning that the streaming compression that YT and others utilise has so little bitrate that it effectively works as a heavy NR filter. This really has to be seen to be believed, and I've done tests myself where I add grain to finished footage in different amounts, uploaded the footage to YT, then compared the YT output to the original files - the results are almost extreme. So this also plays a role in determining what noise levels are acceptable.

-

Interesting comparison. For anyone not aware, it's worth noting that since the iPhone 12, they quietly upgraded to doing auto-HDR and the h265 files are also 10-bit, as well as the prores files being 10-bit of course. It is interesting how little they promoted this - I have an iPhone 12 mini and didn't even know about it for the first year of owning it! This is what really unlocked the iPhone for me - I have used iPhones since the 8 for video but prior to the HDR / 10-bit update it really wasn't a competitor to anything other than cheap camcorders, as it lacked the DR to record without over-contrast or clipped highlights and the bit-depth to really be able to manipulate it sufficiently in post to remove the Apple colour science and curve.

-

I was quite excited when Apple introduced prores support, thinking that it would mean that images would be more organic and less digital-looking, but wow was I wrong - they were more processed than ever. I think the truth is hiding partly behind that processing, where the RAW image from them would struggle in comparison. I shot clips at night on my iPhone as well as other cameras, and I noticed that the wide angle camera on my iPhone was seriously bad after sunset - even for my own home video standards. I did a low-light test and worked out that the iPhone 12 mini normal camera had about the same amount of noise as the GX85 at F2.8, but the iPhone wide camera was equivalent to the GX85 at F8! and that was AFTER the iPhone processing, which would be way heavier than the GX85 which had NR set to -5. Apple says that the wide camera is F2.4, so basically the GX85 has about a 3-stop noise advantage. There are lots of situations where F8 isn't enough exposure. I'm not sure where we're at in terms of low light performance, but this article says that this Canon camera at ISO 4 million can detect single photons, so there is a definite limit to how large each photosite can be. Of course, more MP = less light in each one. In terms of my travels, I still prefer the GX85 to the iPhone when I can - being a dedicated camera it has lots of advantages over a smartphone.
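The "about 3 stops" figure above is just f-number arithmetic, and it can be sanity-checked with a couple of lines. This is a sketch, with a made-up function name, and it only accounts for aperture; it says nothing about sensor size, processing or NR.

```python
import math


def stops_between(f_wide, f_narrow):
    """Exposure difference in stops between two f-numbers.

    Light gathered scales with 1 / N^2, so each stop is a factor of sqrt(2)
    in f-number: stops = 2 * log2(f_narrow / f_wide).
    """
    return 2 * math.log2(f_narrow / f_wide)


# GX85 at F2.8 vs GX85 at F8 (the two noise-matched settings from the test)
print(round(stops_between(2.8, 8), 2))

# The iPhone wide camera's quoted F2.4 vs the GX85 at F8 it matched for noise
print(round(stops_between(2.4, 8), 2))
```

The first result comes out slightly over 3 rather than exactly 3 because marked f-numbers like 2.8 are rounded values.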