-

Posts

8,032 -


Everything posted by kye

-

If you want to visit the past, I'll buy a G7 and re-sell it to you for USD$999!! 🙂

-

I recommend the movie "The Congress" from 2013.

-

Looks good - muted in a very appropriate way. How did you handle the post workflow, especially colour grading? Fashion is perhaps one of the genres that require the most "accurate" colour so that product colours reflect the actual products.

-

Interestingly enough, they've used Zcam on previous MI films... https://ymcinema.com/2020/10/09/z-cam-e1-crash-cam-spotted-in-mission-impossible-7/

Agreed. My impression was that there are three types of productions:

* High budget feature films / flagship TV series. These have the budget to use high resolution RAW capture, high-end cameras and fancy lenses, significant budget for professional colour grading, and to promote the film they get lots of media attention and interviews etc. The process is overseen by professional folks who know how to extract every ounce of quality.
* Low-medium budget feature films / most TV shows. These don't have the budget for extravagances and shoot with only the level of equipment that is necessary for professional results, using lower resolutions and Prores, using solid but less remarkable cameras and lenses, get minimum colour grading budget, and get far less media attention (and basically no media attention for technical matters). The process is overseen by professional folks who know how to do the basics so that the result is solid but is delivered within budget.
* Amateur features / short films / cat videos. Devote more person-hours to their short film than major Hollywood feature films but spend that time obsessing over camera specifications and lens technical sharpness tests, scouring over the latest $100M feature film post-workflow and trying to implement every tool and technique, insisting on only the best. Most of the time their lack of basic understanding means the result is worse than even very low budget professional productions.

A quick search revealed that IMDB says that Game of Thrones was shot on Prores and mastered in 2K: https://www.imdb.com/title/tt0944947/technical/

More searching reveals that the first three seasons were shot in 1080p, then 3.2K from Season 4 onwards: https://thedigitalbits.com/item/game-of-thrones-complete-series-4k-uhd

I wasn't able to find any original source for the above, but it appears to be the consensus. The fact it was shot in 10-bit 4:4:4 suggests it was compressed, as RAW isn't 10-bit. Prores 4444 XQ spec is 396Mbps so this is roughly equivalent. Season 1 used the HDCAM SR format: https://en.wikipedia.org/wiki/HDCAM

So yeah, Prores is good enough.

-

The discussion seems to be about reproducing colours that are lifelike, or accurate, or real. I posted an interview where a world renowned expert discusses the subject. I thought that it would be of interest, considering that colour grading is one of the weakest areas of knowledge online. There is no "point", only information.

-

I haven't reviewed the current batch of cameras so not really. Realistically, unless you have the cameras yourself, you need to find a source online where someone has done latitude tests. CineD.com does good ones, so that's a good place to start. For example, here are a few cameras underexposed and pushed back: But when reviewing these you also have to compare how far over each camera can go, as different cameras put middle grey in different places. You'll also note that the Sigma FP uses different notation ("ETTR" vs "stops under") because they didn't know where to put middle grey and so compared to a different scale. Like many things in cameras, it can't be reduced to a single number, so you have to do the analysis and comparison yourself, because half the benefit of the information is the understanding that you get in figuring out how to compare them.

-

Peter Doyle (colourist on Harry Potter, Lord of the Rings, etc) speaks about how closely we can reproduce the colours in the real world. Spoiler: no. (linked to the relevant timestamp)

-

I should also add to the above that if you're shooting with any modern camera with 12 stops or more of DR in a high-DR environment and the shadow noise and highlight clipping are both visible, then stop grading your images so they look like log footage and add some contrast FFS 🙂

-

I have also swapped from reviewing DR figures to looking at latitude testing, and even did some latitude tests of my own cameras as they were not performed by CineD. Sure, you might have one or two more stops in outright DR, but if those extra stops are bright purple or leafy green then they don't count as much as ones where the colours are neutral and the only issue is noise.

This is especially relevant when shooting in high DR environments I think, as one of two things can happen:

* You're shooting with proper exposures and the right ratios, in which case the extra stops of DR will only be included deep into the highlight and shadow shoulder/knee rolloffs, so are providing a relatively minor improvement to the image
* You're not shooting with the right exposure and ratios and you need the extra DR in order to bring up relevant details from the shadows or pull down the highlights to bring out relevant details from there - which in either case is heavily dependent on how good those areas look

When you start evaluating cameras on that basis, the rankings get shuffled significantly with some "high DR" cameras taking a pretty awful fall from grace, whereas others with more modest DR numbers climb up that ranking because all those stops are neutral and very usable.

-

Even if you live in a timezone that doesn't exist!

-

Not really, and it's sort-of complicated. The short version is I choose the mode with the bit-rate and codec I want, then look at what resolution options the camera gives for those settings. The long version is that it's more complicated, and you should really do tests to see what works best for you as it might vary between situations, for example if it involves more or less movement in the frame, more or less noise in the image, etc.

At a high-level, there are a few advantages to shooting at the delivery resolution:

* The downsampling happens in-camera, rather than in your NLE, so it should be faster in post
* Rolling shutter might be less
* Bit depth on the sensor read-out might be higher

But, there are also some advantages to shooting a higher resolution and downsampling in post:

* Downsampling in post is likely to be higher quality than in-camera, because in-camera it has to be done real-time and things like battery life and heat dissipation are considerations
* Any non-native resolutions might involve line-skipping, pixel binning and other non-optimal downsampling, which this may avoid
* Compression artefacts tend to scale with the resolution, so downsampling to timeline resolution in post lessens how visible these are
* You can punch-in in post more if the need arises, which also occurs when stabilising
* For a given bitrate the image quality is normally higher at a higher resolution, so you're starting from a better position

For my setup, I shoot the GH5 in the 200Mbps ALL-I mode because it's ALL-I and therefore is nicer in post. I decided this over the 150Mbps 4K mode because that is IPB, making it much more intensive to edit with, and also over the 400Mbps 4K ALL-I mode because that would require me to buy a UHS-II card which was hugely expensive. However, I shoot the GX85 in the 4K 100Mbps IPB mode rather than the 1080p 20Mbps IPB mode. The 4K mode is IPB, but having 100Mbps is more important than the editing performance. I'd rather have a 100Mbps ALL-I mode, but if we're wishing for things then we'd be changing topics, so I choose the best from what I have.

In terms of the GH6 and Prores, they're all 10-bit ALL-I modes (as they should be!) so the performance in post is mostly a moot point compared to IPB codecs, so it's probably more a case of making the trade-off between disk space and whether you need the extra resolution for anything in particular.

I think with projects that are more straightforward, shooting at 200Mbps 1080p is perfectly sufficient. Remember that many low-budget feature films were shot with 1080p Prores HQ around 170-180Mbps and they were screened in theatres on screens larger than the walls of most private home-theatres, so the image quality should be sufficient for anything we could be doing.

If you're shooting in uncontrolled situations where post is difficult, or if you're unsure what the footage will be used for in future, or if you're doing VFX, essentially if there are any special circumstances around the project, then having more resolution and MUCH higher bitrates might be worthwhile.

There's an argument to be made for shooting slightly higher than the delivery resolution, at maybe 2.5K for a 1080p master, so that's something to think about as a sort-of middle ground, if that is available in the GH6 - I'm not that aware of what modes it offers and I know the GH5 has some intermediate sizes, like 3.3K 4:3 in the anamorphic modes.
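To put rough numbers on the disk space side of that trade-off, here is a minimal Python sketch that converts the bitrates mentioned above into storage per hour of footage. It assumes the quoted figures are video-only megabits per second and ignores audio and container overhead, so real files will come out a little larger. The function name and the mode labels are just illustrative.

```python
def gigabytes_per_hour(mbps):
    """Convert a video bitrate in megabits per second to decimal gigabytes per hour.

    Assumes a constant bitrate and ignores audio and container overhead.
    """
    seconds_per_hour = 3600
    megabytes = mbps * seconds_per_hour / 8  # 8 bits per byte
    return megabytes / 1000                  # 1 GB = 1000 MB (decimal)


# Bitrates taken from the modes discussed in the post.
modes = [
    ("GX85 4K IPB", 100),
    ("GH5 4K IPB", 150),
    ("GH5 1080p ALL-I", 200),
    ("GH5 4K ALL-I", 400),
]

for label, mbps in modes:
    print(f"{label}: {mbps} Mbps is about {gigabytes_per_hour(mbps):.1f} GB per hour")
```

The relationship is linear, so doubling the bitrate doubles the card and archive space needed for the same running time.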

-

Hopefully that will get better over time. Overall I view it as a good thing - if you're willing to go to fully-manual lenses then the budget Chinese manufacturers have drastically reduced the price of lenses over even the last 5 years, and they're now doing the same to anamorphics.

-

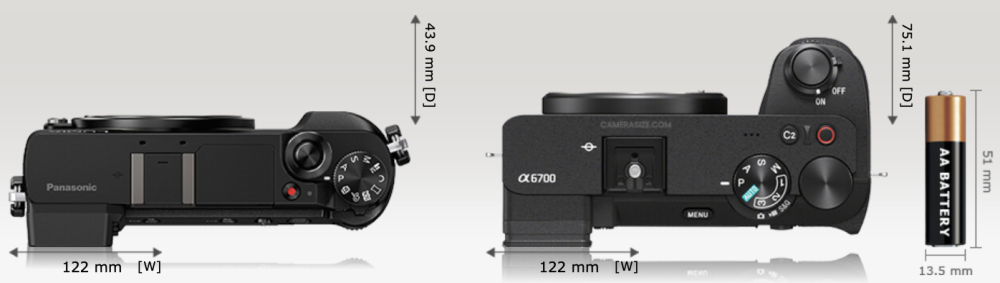

Yes, according to Camerasize, it is a similar size to the GX85: Obviously it's thicker and has a larger grip, but this will be dwarfed by the size of the lenses. Here are both with their kit zooms, which are probably amongst the smallest likely to be used if size was a key consideration: While I'm no fan of Sony cameras, it is good to see that there is still an interest in making small cameras - camera size matters and the general approach from the industry is that only people who can have huge cameras deserve image quality, which is simply not true.

-

This page is my reference for bitrates and other technical details: https://blog.frame.io/2017/02/13/compare-50-intermediate-codecs/ It doesn't show all the resolutions, but Prores is a constant bitrate-per-pixel (so UHD is 4x 1080p and DCI4K is 4x 2K) so you can always figure it out for custom resolutions. That said, for UHD, the bitrates are: HQ is 707Mbps, 422 is 471Mbps and LT is 328Mbps. If their 5.7K is the same aspect ratio as UHD then it would be 2.2x the number of pixels and bitrate. I guess 400Mbps isn't that far off if that's for 5.7K. I know that 1080p HQ is about 175Mbps, and the attraction for me was that UHD Prores Proxy was 145Mbps, so you get the benefit of the higher res without the ridiculous data rates. Still, 400Mbps isn't that much more than 175Mbps.
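The "constant bitrate-per-pixel" scaling can be sketched in a few lines of Python. This is only a rough estimate under stated assumptions: it uses the UHD figures quoted above as the reference, assumes the same frame rate as those figures, and assumes a hypothetical 16:9 5.7K frame of 5760x3240 (the actual camera resolution may differ).

```python
# UHD (3840x2160) reference bitrates in Mbps, as quoted in the post.
UHD_PIXELS = 3840 * 2160
UHD_MBPS = {"HQ": 707, "422": 471, "LT": 328, "Proxy": 145}


def scaled_mbps(flavour, width, height):
    """Estimate the bitrate at a custom resolution by scaling the UHD figure
    by pixel count (assumes constant bitrate per pixel and the same frame rate)."""
    return UHD_MBPS[flavour] * (width * height) / UHD_PIXELS


# 1080p has a quarter of the pixels of UHD.
print(f"1080p HQ: {scaled_mbps('HQ', 1920, 1080):.0f} Mbps")

# Hypothetical 16:9 5.7K frame (5760x3240 is an assumption, not a spec).
for flavour in ("HQ", "422", "LT"):
    print(f"5.7K {flavour}: {scaled_mbps(flavour, 5760, 3240):.0f} Mbps")
```

With these assumptions the 5.7K frame has 2.25x the pixels of UHD, which is where the "2.2x" figure comes from, and the 1080p HQ result lands close to the 175Mbps mentioned above.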

-

I've found a couple more videos where it's enabled, but I can't see much difference. I wonder if it would be more visible if the material had grain or lots of fast movement.

-

It's back! I skipped through ARRI's YT channel to look for 1080p videos that had it, and I found a couple of interesting things:

* ARRI upload videos in all sorts of resolutions, 4K, 1080p, 1440p, even 720p, even recently
* The 1080p uploads only got the Enhanced Bitrate option very recently - the 1080p ones uploaded more than a couple of months ago didn't have the option

This video has it:

Here's a comparison. This is the 1080p video displayed full-screen on my UHD display and then a screen-grab taken.

Standard 1080p: Enhanced Bitrate 1080p:

Standard 1080p: Enhanced Bitrate 1080p:

Oddly, it's not available on this 1080p upload, which is more recent than the above.

@kaylee Do you have it enabled yet?

-

I think you might be low-balling your estimate...

-

I see metalworkers releasing things like Loctite by just applying heat. They typically do it with a blowtorch, which obviously I wouldn't recommend, but it might be a simple case of applying a soldering iron for a bit perhaps? It depends on how much superglue you used. "A few dabs" isn't a very scientific measurement!!

-

Just a hint? I'd suggest that there's more than just a hint of je ne sais quoi from the Komodo!! Good practical questions. If the battery life wouldn't last then a V-mount could be added, potentially via a cable with the battery kept elsewhere, like in a pocket, but it would potentially add to the weight of the rig, so should be taken into consideration. One trick that I got from a fellow forum member for stability and holding a camera for long periods was to buy a belt and put a tape-measure pocket on it, and then put the camera on a monopod and put the foot of the monopod into the tape-measure pocket. This puts most of the weight of the rig onto the belt, and provides a third point of contact, although your hips aren't completely stable if you're walking around. It's quite a minimal setup, can easily be held with only one hand (potentially even quickly changing batteries like this), and the rig can easily be quickly taken out of the belt pocket and used as a normal monopod or packed away etc. If @backtoit put the camera on a gimbal and then extended the handle of the gimbal with a monopod into the belt then that might help stabilise things sufficiently. They might still get the vertical bobbing up and down motion when walking, but it might not be that visible.

-

Artificial voices generated from text. The future of video narration?

kye replied to Happy Daze's topic in Cameras

Also, paranoia seems to be setting in..... https://petapixel.com/2023/07/11/real-photo-disqualified-from-photography-contest-for-being-ai/ The judges looked at the metadata (it was taken with an iPhone) but couldn't work out if it was real or not, so disqualified the image because they weren't sure.

-

Artificial voices generated from text. The future of video narration?

kye replied to Happy Daze's topic in Cameras

This one is incredible. I'm not sure how available it is for users though - I saw this video posted by the developer who is sharing their work on the liftgammagain forums just this morning.

-

Not a user, but having RAW 5.7K60 and C4K120 and 4.4K anamorphic seems a lot like it's now in cinema camera territory. Things like the ability of RED cameras to have RAW at high frame rates were what I thought separated them from the usual prosumer cameras that mere mortals like me could afford. Maybe I'm just behind the times, but if you were shooting something serious like high-end music videos / high-end docs / low-budget features and had the ability for 5.7K up to 60p (for those emotional/surreal moments) and also C4K 120p for any special effects shots (like if shooting an emotional sports doco) then it makes it a serious camera for those tasks.

-

Wow - that looks really good! I shoot with my phone regularly so I see a reasonable amount of iPhone footage and I agree that the iPhone doesn't look that good. To my eyes it's clearly RAW. Despite the low bitrates that YT uses to compress things, if you give it something shot on RAW then the quality of the YT stream goes up significantly - things like the falling snow and fine detail give this away. Here's an example of a very high-quality RAW capture, but this is from 2015 and only uploaded in 1080p: It has the same look to me.

Definitely agree with this. I would go further and suggest that the lesser phones (any phone shooting a compressed codec) are modern Super 8 emulations. I say this because:

* they get used for home videos like 8mm used to be and are the perfect tool for shooting for fun and not thinking about the quality of the work
* by the time you process the footage so the compression isn't visible, they're not as sharp as RAW 4K
* they're likely using SS to expose
* there's enough hand-held motion shown in the frames that it's a different look to a S16 film camera

I have to resist the temptation to turn random iPhone shots in my projects into 8mm film emulation 🙂

-

If you take time-lapses using RAW stills then they look pretty good. No-one is expecting it to look like an Alexa, but it looks way better than the prores files that the latest iPhone creates.

-

Absolutely! I must admit that my excitement to hear that Apple introduced prores was topped only by my disappointment when I saw that they were the first people in history to implement prores to record an image that had been dragged through all 7 levels of hell first, rather than it just being a neutral high-quality compressed version of the RAW image.