
Posts: 7,835

Everything posted by kye

-

How do they assess whether it's a MILC or a fixed-lens camera? I wonder if a small enough camera with a "fixed"-looking lens would work.

-

Yes, being able to anticipate the moment and compensate for it by hitting the button early would be highly dependent on how predictable the perfect moment is, how long the delay is, and how good you are at compensating for the delay. It seems like you have particularly good timing and are operating in less predictable scenarios, so the performance on offer isn't sufficient to meet your needs. Pity. Ultimately though, you have to go with what gets you the most keepers. After all, cameras are tools, not toys, right? 🙂

-

I've found that adjusting diopters can be really tricky, because if you set them wrong your eye just adjusts to compensate, so your eyes don't really tell you when the diopter is off - you just end up with eye strain after a while and don't really know why.

-

Getting used to there being a delay in something is absolutely a thing. I've had the experience several times where I went from something that had a delay to something that had less delay and the sensation that you get is that the thing happens before you hit the button! Obviously it wasn't beforehand, but I was so used to the delay being there that my brain had eliminated it. Some people may be better at adapting to small delays than others of course.

-

What is the bottleneck on most cameras? Is it the sensor, or some chip further down the line that receives the data? If it's the latter then it could get a bump from a faster processor for whatever function that is, but if it's the former then it's really about the sensor, and I suspect there's no free lunch in that regard. If you want a faster readout then you tend to only get one with lower resolutions or lower bit depths. In that sense, manufacturers have been upgrading the data rates, but instead of giving us the same resolution as the last generation with better rolling shutter (RS), they're giving us more pixels and the same or worse RS. Just another reason why this resolution fetish is screwing the majority of us for the sake of the few people who actually benefit.
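As a back-of-envelope illustration of the "no free lunch" point: if a sensor's output bandwidth is fixed, the time to read one frame scales with width × height × bit depth, so more pixels or more bits means a slower readout and more rolling shutter. The 4 Gbit/s figure below is purely hypothetical (not any real sensor's spec), and the model ignores line blanking and other overheads, so only the relative numbers mean anything.

```python
def readout_ms(width: int, height: int, bits: int, gbit_per_s: float) -> float:
    """Approximate time (ms) to read one full frame at a fixed data rate.

    Ignores line blanking, ADC overheads, etc.; a rough model only.
    """
    bits_per_frame = width * height * bits
    return bits_per_frame / (gbit_per_s * 1e9) * 1000.0


# Hypothetical readout bandwidth, not taken from any real sensor datasheet.
BANDWIDTH_GBIT_S = 4.0

for label, w, h, bits in [
    ("6000x4000, 14-bit", 6000, 4000, 14),
    ("6000x4000, 12-bit", 6000, 4000, 12),
    ("3840x2160 window, 12-bit", 3840, 2160, 12),
]:
    print(f"{label}: {readout_ms(w, h, bits, BANDWIDTH_GBIT_S):.1f} ms")
```

Under this model, dropping bit depth or reading a smaller window shortens the readout proportionally, which is the trade-off manufacturers spend on extra pixels instead.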

-

I'm also downloading, but it'll take some time (both to download and to check them out!). Thanks, this should be really interesting. Do you have some thoughts after shooting it? I presume you've had a bit of a look at how they compare?

-

No, but I think it might have been either on the DSLR Video Shooter YT channel or on the Anamorphic on a Budget channel. I vaguely remember that it might have been in a monitor roundup style video where they compare various monitors, but I can't recall exactly. Hope that helps, although googling things like "custom desqueeze monitor" etc might generate some results?

-

Or "mil-vuc". Or "mil-vac". Or "M-ilv-K". In terms of the label, it doesn't help at all. Every camera from an Alexa LF to the OG BMPCC to a Sony VG900 Handycam is a MILVC.

-

That makes more sense. I'm assuming the de-squeeze functions of the monitors aren't orientation specific - otherwise just tilting the monitor would fix it. I'm not aware of any, but I'd suggest that @Tito Ferradans might be the best person to ask, if he's around and notices I've tagged him 🙂 I know there are monitors around where you can enter the squeeze factor yourself rather than choosing pre-defined values, but I don't know if they would go <1.

-

I'm confused. Anamorphic lenses squeeze the image and the monitor de-squeezes it so that round things stay round and your actors don't sue you. If you're shooting with an anamorphic lens then wouldn't you still need to have a monitor that desqueezes it? Or do you want to have people looking like bean poles / trolls?
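The desqueeze itself is simple arithmetic: the monitor stretches the width by the lens's squeeze factor, so the displayed aspect ratio is the recorded aspect ratio multiplied by that factor. A minimal sketch with illustrative numbers (a 1.33x, i.e. 4/3, lens on a 16:9 recording); the factor below 1 is hypothetical and shows what a monitor would need to do to stretch the image vertically instead.

```python
from fractions import Fraction


def desqueezed_aspect(width: int, height: int, squeeze: Fraction) -> Fraction:
    """Aspect ratio after stretching the recorded width by the squeeze factor."""
    return Fraction(width, height) * squeeze


# 4/3 (1.33x) anamorphic on a 16:9 recording -> 64/27, roughly 2.37:1.
print(float(desqueezed_aspect(1920, 1080, Fraction(4, 3))))

# A factor below 1 narrows the width, which gives the same shape as
# stretching the height by 1/factor.
print(float(desqueezed_aspect(1920, 1080, Fraction(3, 4))))
```

Using `Fraction` keeps the ratios exact, so 16:9 × 4/3 comes out as exactly 64/27 rather than a rounded float.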

-

This is how I operate my GH5 - using MF primes and getting extra reach from the 2x digital zoom, which, when recording 1080p on the GH5, is still oversampled from 2.5K. The only issue with such a setup is whether your lenses are sharp enough! Hardly anyone seems to shoot this way, as it's basically never talked about, which means that this feature set isn't that common - even the GH6 doesn't have it. It's a big deal for me because I've literally designed my lens set around this feature. I agree that it should be compared to video/cinema cameras, not hybrids, although these days there's a spectrum on the video side of cameras between completely manual cinema cameras and completely automatic video cameras, with many cameras sitting somewhere in between, so a lot of cameras have video features that are hybrids of cinema and video cameras. Most people aren't really aware of this spectrum; they just seem to look at the video functions of cameras as a random soup of potential features with no overall structure or philosophy, which TBH couldn't be further from the truth.
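A quick way to sanity-check the oversampling claim: a 2x digital zoom crops to half the sensor width, so the question is whether that crop still has more pixels than the 1920-pixel-wide 1080p output. The sensor width of 5184 px used below is an assumption for the GH5's full width (substitute your own camera's figure), and the sketch assumes the zoom mode actually reads the full-width sensor area.

```python
def crop_oversampling(sensor_width_px: int, zoom: float, output_width_px: int) -> float:
    """Ratio of the cropped sensor width to the output width (>1 means oversampled)."""
    crop_width = sensor_width_px / zoom
    return crop_width / output_width_px


# Assumed full sensor width; not a spec quoted in the post.
SENSOR_WIDTH = 5184

ratio = crop_oversampling(SENSOR_WIDTH, zoom=2.0, output_width_px=1920)
print(f"2x crop is {SENSOR_WIDTH / 2:.0f} px wide, {ratio:.2f}x the 1080p output width")
```

With those assumptions the crop is about 2.6K wide, so 1080p is still downscaled from more pixels than it needs, which is the point being made above.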

-

There were sign replacement VFX shots? Didn't notice them at all.

-

Have you tried adjusting the EVF diopter? It sounds like yours isn't set properly. My understanding is that you set it so your eye doesn't have to change focus between looking at the subject directly and looking at the subject through the viewfinder. These adjustments have a lot of range - my dad wears pretty strong glasses (which obviously aren't compatible with putting your eye right up to the viewfinder) and he's always been able to adjust them to compensate for his vision, so they can correct at least as much as a pair of relatively strong prescription glasses.

-

Yes, the FP is a fascinating data point - it has a spectacular image despite not being "better" on many/most of the specs that we tend to pay attention to. Food for thought.

-

+1. I think that how the camera makes you feel when operating it is very under-rated, and even more so if you're also directing. I've shot with cameras that just make you feel like you're fighting them the whole time - the exact opposite of the headspace you want to be in when doing something creative. I find the GH5 particularly pleasing to use, partly because it's reliable and comfortable in the hand, but also because I know the images it captures are almost always really nice to edit / colour in post, which I attribute to the IBIS, the high-bitrate 10-bit log, and my favourite lenses, which are fast primes.

-

Fun thread! I'm happy to dig up a few stills. Did you want nice easy ones, or some more challenging ones? 🙂

-

Yeah, I thought that a 12-bit RAW sensor readout (and the corresponding 10-bit log) was pretty much standard on almost all cameras that have a log profile, and that some cameras had a 14-bit readout but it wasn't that common. Of course, that's just another feather in the cap for the 5D with Magic Lantern - 14-bit RAW straight to the card. Still a standout spec and a standout image, 14 years later.
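For a sense of scale between those bit depths: each extra bit doubles the number of code values per channel, so 14-bit has four times as many levels as 12-bit and sixteen times as many as 10-bit. A trivial sketch:

```python
def levels(bits: int) -> int:
    """Number of distinct code values per channel at a given bit depth."""
    return 2 ** bits


for bits in (8, 10, 12, 14):
    print(f"{bits}-bit: {levels(bits)} levels per channel")
```

This says nothing about how a log curve distributes those levels across the tonal range; it's only the raw count.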

-

The latest batteries for the OG BMPCC get WAY more than that - I got 49 minutes of RAW recording from one 1200mAh battery, and 33 minutes of ProRes HQ from a different battery (which wasn't fully charged).

I unpacked the brand new Wasabi 1200mAh batteries and put them on to charge, so the test was done with a brand new battery straight out of the charger. I was recording in RAW and the card filled up after 23m with the battery at 54%, so I formatted the card and hit record again, and when it got to 5% battery it had recorded another 26m, which makes 49m for the life of a single 1200mAh battery. That's probably a best-case scenario, but it's pretty darn good if you ask me.

The second brand new battery, recording ProRes HQ, died around the 20% battery mark and recorded a 33:22 file. I'm not sure it charged properly, as the charger kept turning off and it only registered 81% in the camera when I put it in, so maybe it wasn't fully charged, but that's also not bad.

This is approaching the battery life of the modern gigapixel monsters that dominate the market now. BMPCC battery life used to be bad but is much more 'normal' now: not great, but not unheard of either.
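The arithmetic behind those runtimes, as a small sketch. Two assumptions are labelled here: the RAW battery is taken to start at 100% (it came straight off the charger), and the "minutes per %" figures assume the battery gauge drains linearly, which real gauges often don't (the ProRes battery died while still reading about 20%), so treat them only as a rough comparison.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    minutes: float
    start_pct: float  # battery % shown when recording started
    end_pct: float    # battery % shown when recording stopped


def total_minutes(segments: list[Segment]) -> float:
    return sum(s.minutes for s in segments)


def minutes_per_percent(segments: list[Segment]) -> float:
    """Recording minutes per 1% of indicated battery (assumes a linear gauge)."""
    used = sum(s.start_pct - s.end_pct for s in segments)
    return total_minutes(segments) / used


# RAW: card filled after 23 min at 54%, then another 26 min down to 5%.
# Starting at 100% is an assumption (fresh off the charger).
raw = [Segment(23, 100, 54), Segment(26, 54, 5)]
# ProRes HQ: showed 81% when inserted, died around 20% after 33:22.
prores = [Segment(33 + 22 / 60, 81, 20)]

print(f"RAW: {total_minutes(raw):.0f} min, {minutes_per_percent(raw):.2f} min per %")
print(f"ProRes HQ: {total_minutes(prores):.1f} min, {minutes_per_percent(prores):.2f} min per %")
```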

-

OG BMPCC - costs less to buy outright than renting a C70..... in 2022!

-

Or on startup it asks "Would you like to get the best image quality?" and yes takes you to 8K, and probably also enables that stupid motion smoothing thing that makes everything look like Days of Our Lives.

-

Wow, that's a pretty impressive set of specs, especially the low power consumption, which minimises heat and battery drain. It's welcome too that the frame rate keeps scaling up as the resolution drops. With Canon/Sony/BM/etc. being more aggressive with their ecosystems, hopefully one of the smaller manufacturers that don't make their own sensors will ditch Sony sensors and build something on this one. Then again, the FP sensor is made by Sony (I believe) and it looks fantastic, so maybe it comes down to the sensor model itself or the implementation?

-

Damn! That's the wrong way to make a day more exciting!

-

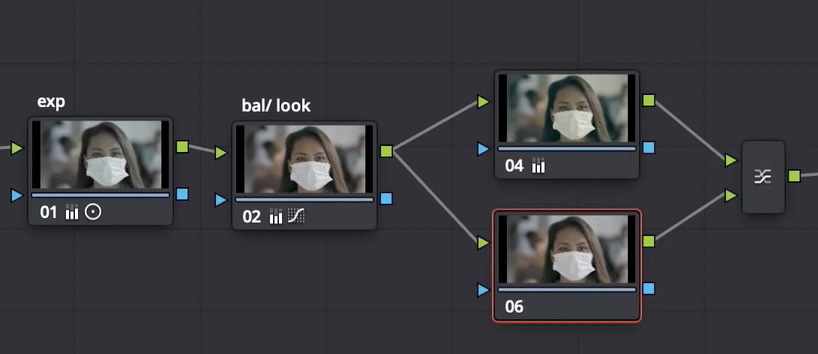

I think colourists refer to the initial adjustments as "balancing" the image (although it's not a universally used term), and they'll then apply a creative colour grade over the whole scene / film. I don't know how technical you like to get with grading, and it's a very deep and technical topic, but I'll try to give you some examples.

It also depends on what software you're grading in, because Resolve uses nodes, which means that some things either get done differently than in a layer-based package, or can be done in ways that a layer-based package can't do at all.

I had a video in mind that showed it clearly but couldn't find it, but this one describes a similar approach - I've linked to the relevant timestamp:

This is part of the node graph from that grade:

Node 4 takes the balanced image from node 2 and does a serious grade on it that screws up the skin tones, but node 6 takes the same balanced image from node 2, has a key on it to select only the skin tones, and then he grades in node 6 to push the skin tones so they look OK sitting on top of the grade from node 4. I.e., node 6 is at 100% opacity over the top of node 4.

An alternate approach, and the one I was describing, would be to have node 4 do the same grade and keep node 6 as a key of the skin tones, but instead of node 6 having a grade on it and sitting at 100% opacity, I'd keep it with no grade at all and, starting from 0% opacity, just bring the opacity up until the skin tones stop looking wrong. This technique works well if the grade in node 4 is absolutely full-on, because in that case, with node 6 at 100% opacity, you'd have to do a lot of grading on node 6 to get the skin tones to sit nicely over the top.

A completely different approach is to grade the whole image with a serious grade, then in a node after that try to get a key of the skin tones and adjust them how you want them. This is a way to do it when you don't have parallel nodes, but it makes pulling the key much more difficult. One way to make this easier, if the software allows it, is to pull a key of the skin tones from the balanced node, before the image has been really messed with, but use that key for the skin-tones node after the serious grade. This is another awesome feature of Resolve - the blue inputs and outputs on the nodes are for the key, so you can pull a key on any node and then connect that Key Out to the Key In on another node. (Side note: you can also connect between the key ins/outs and the image ins/outs, which allows you to process the key using the normal controls of a node. I haven't seen anyone else do this, but it works and I've used it on real projects to refine keys etc.)

There are other approaches as well, and it's worth setting up a test project, trying to think of all the different approaches and tools you could use, and just trying each of them to see which ones you like and which ones you find easier to use. Just for fun, here's another video that uses parallel nodes and all kinds of fun stuff to create a strong look:
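To make the opacity approach and the borrowed-key idea more concrete, here is a simplified per-pixel model. It is not Resolve's internal maths, just a sketch assuming a plain linear blend: `graded` stands in for node 4's output, `balanced` for the untouched image from node 2, `key` for the skin-tone matte (0 to 1), and `opacity` for node 6's opacity. The key is pulled from the balanced pixel, where skin is still easy to isolate, and then used on top of the heavy grade. The hue range in `skin_key` is a hypothetical placeholder, and a real keyer would produce a soft matte rather than this hard 0/1 one.

```python
import colorsys

RGB = tuple[float, float, float]  # channel values in 0..1


def skin_key(balanced: RGB, hue_lo: float = 0.0, hue_hi: float = 0.14) -> float:
    """Crude hard key: 1.0 if the pixel's hue falls in an assumed skin range."""
    h, s, _v = colorsys.rgb_to_hsv(*balanced)
    return 1.0 if hue_lo <= h <= hue_hi and s > 0.1 else 0.0


def mix(graded: RGB, balanced: RGB, key: float, opacity: float) -> RGB:
    """Blend the ungraded (balanced) pixel back over the graded one.

    The weight is key * opacity: at 0% opacity you get the full grade,
    and at 100% the keyed area returns to the balanced image.
    """
    w = key * opacity
    return tuple(g * (1 - w) + b * w for g, b in zip(graded, balanced))


balanced_px = (0.80, 0.60, 0.50)  # a warm, skin-like pixel (made-up values)
graded_px = (0.55, 0.65, 0.70)    # the same pixel after a heavy teal grade (made-up)

k = skin_key(balanced_px)  # key pulled from the balanced image, not the graded one
for opacity in (0.0, 0.3, 0.6):
    print(opacity, mix(graded_px, balanced_px, k, opacity))
```

Note that running `skin_key` on the graded pixel instead returns 0.0, because the grade has pushed its hue out of the skin range; that is the "pulling the key is much more difficult" problem in miniature.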

-

Good idea, I'm near the beach so that's definitely possible. I've found that if you zoom in to 200-400% in post then it's easy to see through the YT compression to the original compression, especially if there's lots of movement in the shot. Also, most content is delivered via some form of streaming (but not all), so if it's not visible on 4K YT then that's a useful conclusion in itself.

-

More grading fun with cheap Lumix FZ47 CCD sensor bridge camera

kye replied to dreamplayhouse's topic in Cameras

It's not the stock that counts, it's how you use it.... 😉