Everything posted by kye

-

I also find the brittleness of the image (britality? is that a word? it feels appropriate!) to be disappointing. The RAW video from the Android phones doesn't look brittle at all, and I don't know of anything that would necessitate that look from a smaller sensor. I'd be curious to see some test shots of the iPhone 13 h264 vs h265 vs Prores for myself rather than through a YT video, but I completely agree that it's probably not the codec itself but rather the processing that happens beforehand. One thing that @mercer mentioned was that it could be the quality of the compression that is done in the device. We've likely all seen that various cameras create compressed files that are of identical resolution/bitrate/bit-depth but vary drastically in quality. Anyone who is unaware can purchase a cheap 1080p camera from eBay and witness an image quality that is almost a crime against videography, despite still having the same specs. The C100 was notable for the opposite - it had (IIRC) ~25Mbps 1080p that was better than the 4K of a lot of competing cameras. I'm still planning to experiment further. I didn't notice any quality differences from the iPhone 12 default camera app on the test I posted above, but I have also shot clips with the default app that looked rubbish, so I don't think I've really stress-tested the various codecs. Right now I'm torn between a few different projects, but I plan to put more effort into this one.

-

I can't blame you for wanting to downsize from a Z6 with Ninja Star, but I question if the FP will do what you hope it will. Firstly, the FP has limitations on what resolutions/frame-rates it can record internally, with an external SSD required for the rest (violating your size / rigging criteria). Secondly, the FP screen doesn't articulate so depending on how you shoot you might require an external monitor. There is an EVF but it's an add-on. Also, there are very few lenses in the L-mount system that have OIS, and the FP has no IBIS, which means that if you go down the route of not having either then you would most likely require a larger rig to get steady enough shots. The FP is a bit of a specialist tool in this sense - the image is spectacular and codecs potentially glorious but it's not an all-in-one shooter that will fit around you and your needs like many other cameras are. It's a bit of a diva.

-

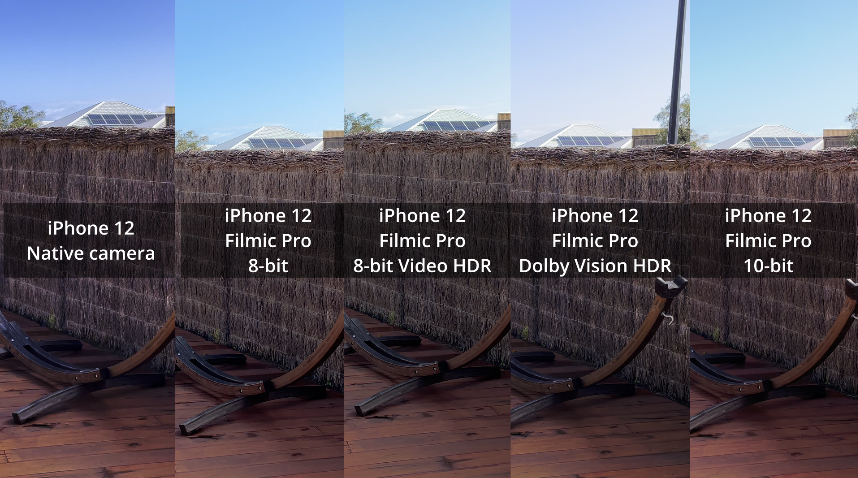

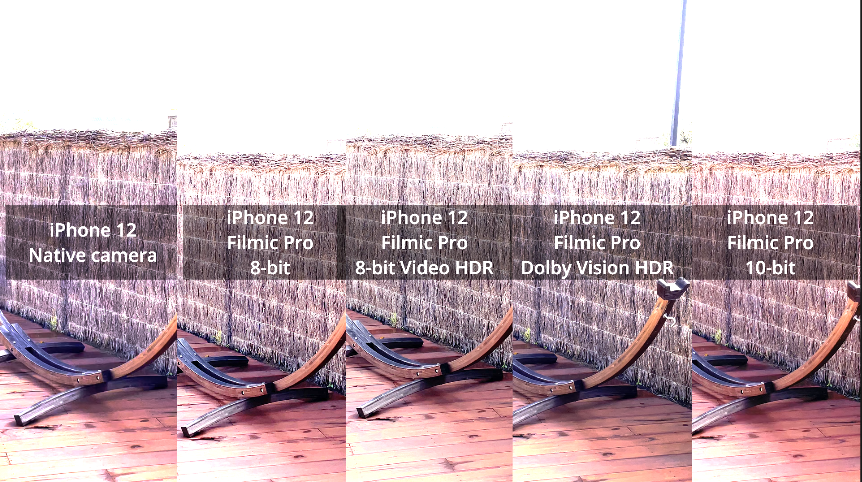

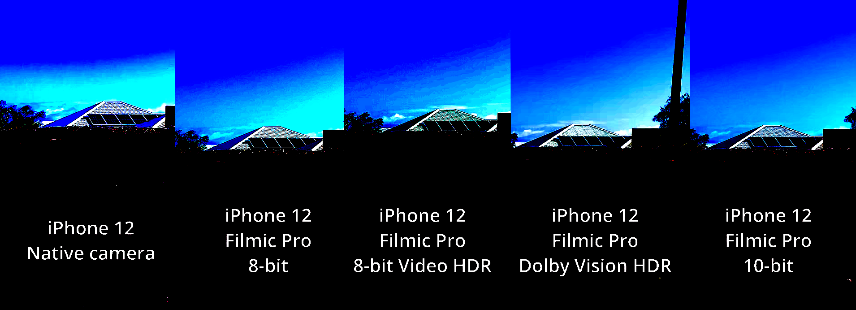

When I got my iPhone 12 mini I didn't really look at the camera specs, but I've since discovered it can do 10-bit video and have been examining its performance. What I've found is that there seems to be a potential for high-DR video that isn't realised. I've only really researched the iPhone 12, but I think that what I've found applies to all modern smartphones. Here's what I've found so far. This video does a test between various modes on the iPhone 12 camera: That video showed the 10-bit to have more subtle colours but lacked some of the tests I was interested in such as dynamic range, so I did my own. My phone actually has five options rather than the four, and I shot a very unscientific test in the backyard but of a high DR scene. I should have done it using manual settings but I didn't and I was comparing the default app to Filmic Pro too and the default app doesn't have any controls so the test was always going to be a compromise. Here's some stills from my test.. I did some matching in post to compensate for levels but didn't correct WB. Same image with the levels pushed right up to reveal the noise floor (the levels adjustment was applied to all clips identically): Adjusted down to look at clipping levels: and with a ridiculous curve to try and break the image and see any banding that is occurring in the sky: One thing I noticed was that according to Resolve, the file from the default camera app was a 10-bit file. The iPhone marketing indicates that the app will automagically switch to whatever mode is best, so I assume that it either uses 10-bit all the time or saw I was pointing it at a high-DR scene and switched to it. I haven't followed that up, but it's worth noting. It's also worth noting that the bitrate from the default app might not be the same as the Filmic Pro one as I had set Filmic Pro to its maximum setting. 
What I took away from this (and playing with the phone in very high-DR situations where there was huge clipping) was that the 10-bit has the same, or very similar, DR to the Dolby Vision and "Video HDR" modes, and that the 8-bit mode has less DR than the 10-bit (I assume the 8-bit just rejects the two most-significant bits the same way that JPG images clip earlier compared to RAW images on digital cameras). Incidentally, I couldn't find anywhere what this "Video HDR" mode actually did - it's not mentioned online anywhere I could find. So what's the problem? Well, where is the high dynamic range? I mean, where is the multiple-exposures high-DR? Imagine this. A smartphone can pull images off the sensor at least 120 or 240 times per second. Why not just bracket two of those frames and merge them together? Motion is a problem, but if you're talking about camera shake then you have OIS to smooth that out and we're talking 1/120s or 1/240s delay - that's minuscule, and if you're talking about subject movement then that's well under the delay of any temporal noise reduction mechanism, which operates at as much as 1/24s delay (10 times the potential delay) and exists in many high-end cameras. Why do I care? Think about this. A smartphone has something like, what, 10 stops of DR (I'm being pessimistic here). If you imagine that we ignore the bottom two due to noise, and we decide to overlap a stop for a smooth transition between exposures, that would still give us video with 17 stops of DR. SEVENTEEN! Hot damn would that be amazing!! Now, don't get me wrong, 17 stops is a huge issue in colour grading - rec709 only has 5 stops or something so trying to compress all that into an SDR output would be very difficult, but imagine if all they did was to emulate the exposure curve of film by having some stops in the middle that are relatively linear and then roll off the highlights and shadows.
Even if they only exposed the darker frame one or two stops darker (and thus created a 1-2 stop shadow rolloff) that would mean they could have an extended highlight rolloff like film. If the app gave you control over this contrast then you could dial in a higher-contrast look or a lower-contrast look with more stops in the linear range. Dial the contrast right down and you'd have a flat image that could be graded nicely. The people who shoot with their phones are more likely rather than less to be shooting outdoors in high-DR situations. Is this part of a greater trend to not space out your DR bracketing? From what it looks like, there's no multi-exposure HDR going on at all, or if there is then it's not bracketing the two images 7 stops apart. I've noticed that most dual-native ISO cameras only have their native ISOs a few stops apart, 3 maybe 4. These are 12-stop cameras that do this - WHY? The Sigma FP is a notable exception, with its native ISOs being 5 stops apart. Smartphones have a different set of strengths and weaknesses than normal cameras; this is a potential strength and serious potential advantage - why isn't it being utilised?
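For anyone who wants to check the stop-counting, here's a rough sketch of the arithmetic, assuming two 10-stop exposures bracketed 7 stops apart with the bottom two stops of each treated as noisy. The function name and parameters are made up for illustration, and it only holds while the offset is no larger than the clean range of a single exposure.

```python
def bracketed_dr(single_dr, offset, noisy_stops):
    """Return (total_span, clean_span, overlap) in stops for two bracketed exposures.

    single_dr   : DR of one exposure, in stops
    offset      : how far apart the two exposures are, in stops
    noisy_stops : bottom stops of an exposure considered too noisy to use
    """
    total_span = single_dr + offset                # deepest shadow to highest highlight
    clean_per_exposure = single_dr - noisy_stops   # usable stops in each frame
    overlap = clean_per_exposure - offset          # clean stops covered by both frames
    clean_span = total_span - noisy_stops          # only the very bottom stays noisy
    return total_span, clean_span, overlap


total, clean, overlap = bracketed_dr(single_dr=10, offset=7, noisy_stops=2)
print(total, clean, overlap)  # 17 15 1
```

On this reading the 17 stops is the full span of the merged image with a one-stop clean overlap for the transition, and 15 of those stops are clean if you also discard the noisy bottom two of the brighter exposure.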

-

Got rid of the pinned topics, contribute your ideas next

kye replied to Andrew Reid's topic in Cameras

Hi Andrew - some thoughts for your consideration.. A few observations. I'm registered with a number of the other forums and chat sites (Facebook, Discord, etc) and they're either dead or are full of one-line posts where almost no real discussion can take place. Having a forum means that nuance and complex topics can be discussed - this seems to be one of the most active camera forums around so I'd suggest keeping it as a forum. It's the only place that has kept my attention about cameras. I don't think that cameras or video are in decline - quite the opposite, however it's changing. Looking at your recent pinned threads was very illuminating - there are more and more cameras and as they get better over time there is less and less reason to upgrade. This means that there will be less and less people who all own the same camera. I think it used to make sense to organise all discussions by camera model but that seems to be less and less useful. I think there are two types of topics now: 1) what should I buy / should I buy camera X? (purchase advice), and, 2) wow - video is hard - now I have my camera WTF do I do with it? (accessories advice / technique / etc) In the (few) years I've been around my impression is that the brand you have built for yourself and the blog is 'call it how you see it' and in alignment with this the forums are a bit more like that too (as compared with other PR-centric or PC-centric approaches). The site is also a rare home to discussions that are more reminiscent of cinematography rather than technology (for example, discussions of c-mount lenses, classic cameras like early Pan models and BM models, etc). Yes, there are more than a few people who can't see past the specifications, but many who prefer the image of a 2K Alexa with vintage primes over an 8K camera with a low-distortion zoom. Some suggestions. I suggest a strategy of playing to your strengths and harnessing your interests. 
What I mean by this is: Embrace the 'call it how you see it' approach, and just write more content. You have written about cameras, the industry, lenses, and various other topics, and I think this is still useful and desirable content. You've lamented not getting review models because you didn't kowtow to brands' PR departments, but I don't think this is a barrier - when the NDA expires and we see a dozen 'reviews' of a product why not watch them and then share your thoughts? With a bit of careful attention to the footage posted and reading between the lines on who says what, quite a lot can be deduced and I'm sure that your audience would be keen to hear your thoughts instead of having to watch all the videos / articles about it. I'd also encourage you to share your thoughts not just on the products but also what they mean too. If your impression is that something is yet another soul-less spec chasing camera with mediocre colour science in a system with no practical lenses then that's something people would like to hear. If the opposite is true, they'd like to hear that too. You seem to love smartphones and they're definitely eating the market from the bottom-up, so embrace that. I suggest you include those on the forums too. The cameras in them are almost overlapping with the sensor sizes and dynamic range of good S16 cameras, so why not just treat them like any other camera and discuss them here too. While most of us won't have the latest android whatever-it-is model of phone, most of us don't have the R5C or Komodo either, and yet they're still popular subjects to discuss, so I don't think that phones are really any different. Talk about the industry. Talk about technique. Talk about accessories. Talk about camera-related lens-related cinema-related broadcast-related streaming-related editing-related audio-related post-production-related everything, whatever interests you.
The audience for this stuff must be absolutely enormous when there are millions and millions of people who live-stream or run a YT channel or simply want to get more from the camera in their phone. If your audience is everyone that's interested in recording their own video, then your audience has probably multiplied in size by a million since you started this site. In terms of the forum, I suggest this: Collapse all the sub-forums into the main forum (we all know that sub-forums are where threads go to die) and just let the forum flow - it will keep it looking busy but not unmanageable and will focus all the attention on it. The only sub-forum should be the one for hot-topics like the politics thread you recently created. This will allow you to direct any discussions that get a little heated into that sub-forum and will 'cool off' those topics, which is potentially required, so that will work in your favour. Create a new forum post for every new blog post. I don't look at the site's main page but whenever you post a thread about an article I see it and normally go read it so I can understand people's replies in context. I'm not sure what your stats say about how much traffic the forums drive to the blog posts? Fundamentally, more people are doing video than ever before, but honesty and experience (without a vested interest) is rarer than ever, so there is more demand than ever before for your insights. I suggest playing to your strengths and just upping your output. Hope that's useful 🙂

-

That makes sense, considering that if a certain value gets applied in Linear, then the conversion to Log would reduce that difference the brighter the Luma value is. Funnily enough, my post to you took about 20 minutes to write, as I would write something, have a think about it, google it, read a forum thread / blog post / etc, think about it again, and then adjust or delete what I wrote. My post ended up stating that the grain was higher in the mids and highlights as that was one of the dominant sentiments from what I read. Thinking further about it, and thinking about motion picture film, wouldn't there be noise in both the shadows and highlights, as in a two-stage development process (original negative then positive for projection) one stock would put the noise in the highlights and the other would put it in the shadows (which is why there are rolloffs at both ends)?
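The first point is easy to check numerically. A minimal sketch, assuming a plain log2 curve as a stand-in for a real camera log formula (actual log curves differ in detail) and an arbitrary noise offset of 0.01 in linear light; `log_delta` is just an illustrative helper:

```python
import math

NOISE = 0.01  # the same fixed offset added in linear light at every level (assumed value)


def log_delta(luma, noise=NOISE):
    """Size of a fixed linear offset after a log2 encode, measured in stops."""
    return math.log2(luma + noise) - math.log2(luma)


# Shadows, mid grey and highlights as linear values
for luma in (0.02, 0.18, 0.90):
    print(f"linear {luma:.2f}: offset becomes {log_delta(luma):.3f} stops after log")
```

The same linear offset is more than half a stop in the deep shadows but only a few hundredths of a stop near the top, so noise added in Linear ends up looking strongest in the darker parts of the Log image.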

-

Good video, thanks for sharing. One thing that struck me was that I'd like to see a comparison between the DR Boost On vs Off but comparing them against their lowest ISOs. ie, [V-Log DR Boost OFF at ISO 250] vs [V-Log DR Boost ON at ISO 2000] and then [V-Log DR Boost OFF at ISO 400] vs [V-Log DR Boost ON at ISO 2500], etc. That would then kind of be a test of how much ISO range you get in each mode. It sort of looks like it has a dual-native-ISO and that the DR Boost feature is using both at once and therefore doesn't have as large a range of clean ISO values you can use.

-

IIRC there's something about film that makes it have more noise in the midtones and highlights? I know colourists often recommend applying noise in Linear prior to the conversion, which would impact the distribution across the Luma range. Keen to hear more about your impressions - personally I like grain as it makes an image more organic - all the modern cameras with the same Sony-sensor-in-a-box look all seem sterile to me.

-

This video confirms that it doesn't crop in Prores for 24fps and will downsample in-camera - I linked to the relevant time: The caveat is that this only applies at normal speeds (he says 24p) but that makes sense as I don't think it can read the whole sensor faster than 40p and might not be able to downsample above normal frame rates either. How much slow motion do you do? The C70 certainly has some very usable options, and Canon sure know how to make a cinema camera. The C70 comes from a long line of expertise, so it hasn't got any excuses beyond any dents from the cripple hammer that might exist. If I was to ever end up with a Komodo I'd be rigging it with the external batteries and lens and using the LCD as well. Did you see the Hasselblad Medium Format viewfinder attachment for the top LCD? That looks super useful and something I'd consider.

-

Yeah, in the OG BMPCC groups I'm in, a common issue is the HDMI port failing. It seems to be one of the major issues that people ask about - the other is broken screens.

-

It might be worth renting one to verify what the experience is like? One gotcha from the Komodo is the cropping for alternative resolutions and higher frame rates - not sure if that's an issue for you, and I can't recall if it also afflicts the C70? I don't think I ever got a straight answer on whether the Komodo could shoot Prores at lower resolutions by downscaling internally, which might be worth considering if you're not always shooting 6K. From what I've read the Achilles heel of the C70 is the screen, which if you were planning to use it with an external monitor would close the gap between the two in terms of rigging etc. Personally, for run-n-gun stuff I always keep the camera rigged up and ready to go if possible. I know lots of shooters will tear their rig down for flights etc, but will build out the rig prior to the shoot days and have a bag or case that can take it rigged up and they'll use that to transport it to the shoot location so there's no rigging occurring on set. I'm lucky with my smaller setups, but I'd be shifting that stuff away from shoot days if at all possible.

-

I agree with your impressions - I think the image from the Komodo is right up there with the top tier of cameras. What stood out to me from the good examples of the footage was the noise and colour science, it had that feel where you just look at the image and know it just feels 'right'. Rightly or wrongly RED also has the brand reputation and I'd imagine that a Komodo would get you onto higher-end sets than a C70?

-

Further to what BTM said about doing something custom, if you can't do that then it's worth spending a few hours looking through the camera cage manufacturers' websites (SmallRig, etc) and also scrolling through Google Images search results. I've found in the past that there are rigging attachments that you only find when you see a photo of a rig when flicking through image results. There is a real art to building out a rig that's functional but compact. It's soooo much easier to build a rig when you don't care what it looks like, if there are cables hanging out everywhere, or how big it is.

-

Definitely agree that RAW video from these things looks absolutely lovely. Are there any smartphones that shoot RAW and Prores? From my limited experience with cameras that shoot both codecs (P2K / BMMCC) and downloading footage in both formats from various RED, ARRI, etc cinema cameras, the Prores files were basically as nice to work with and grade as the RAW files. My theory was that Prores isn't better as a codec per-se but it's just that on cameras that shoot RAW the manufacturers know how to dial in the codec to look good. If it is in the ballpark of RAW image quality then the file-size differences can be a useful benefit. The fact that many movies screened in theatres were shot on 1080p Prores HQ was a pretty big call as far as I was concerned - if it's good enough to project that big then I figure it's better than most of us need!

-

I'll admit I haven't been following any threads on here that discuss the A74, but I've seen a number of videos on YT recently that seem to have some positive reflections on real-world use of the A74. Apart from the overheating challenges, this seems like it could be a good contender - FF, PDAF, potentially good Sony colour science, and heaps of DR for the money.

-

I'm not being deliberately problematic, just stating why the answers provided don't really suit me and how I shoot. That's fine - not all problems have solutions (if they do then I want the 100g Alexa Pocket for $100!), but you don't find out unless you ask - sometimes you ask the question and someone comes up with an answer you hadn't thought of or didn't know about, so it's worth a bit of a discussion. I'm perhaps putting more emphasis on this than what people might think is reasonable, but it's actually the tip of a large question for me. Currently I shoot with IBIS cameras (GH5, GX85) in auto-modes but am actively considering moving to a more cinema-camera style of shooting without IBIS or auto-modes when it's time for my next upgrade as that can get me a bump in image quality, so there's many thousands of dollars in camera bodies / lenses / accessory purchases that depend on things like me being able to get acceptably stable shots in windy situations. In this sense my problem isn't "how do I get good stabilisation with an OIS only lens", it's really "does OIS give me stable enough shots, in the situations I encounter, with how I shoot". Not so useful in conditions so windy I can't hold the camera steady with both hands! 🙂 🙂 I think you nailed it by talking about being self-conscious, which definitely applies to me. If I could change how I feel then I would do it immediately, but that's not how it works! Getting older is gradually making me give less shits, but I'm not there enough yet 🙂 In terms of running into Karens, I've found they're everywhere in terms of giving you dirty looks and giving you the non-verbal equivalent of the viral videos you see, which just makes it not worth it for me. That's why I like to do what I can to fly under the radar and not trigger it to begin with. I also shoot a lot in places you're not allowed to be pro in, like museums and galleries and all the other types of private spaces that hold all the interesting things.
You never know when you're going to run into a power-hungry security guard who is going to teach you a lesson - unfortunately this seems to be the majority of security guards (although it probably isn't the case it certainly seems like it).

-

I'm gradually pushing myself to see how basic/manual a camera I can get away with using as a proof of concept. That's why I'm shooting with my P2K and my GX85 in full-manual. If I find that these things are do-able then it will put cameras like the P4K, P6K Pro, Sigma FP, etc on the potential list, which have much nicer image quality and DR than the GH5 and (potentially) GH6. We haven't seen a lot from the GH6 but that just means that the jury is still out on it, rather than it being ruled out. Whenever I hear someone saying "I want.." as some sort of statement that implies they should get it just because they want it, I just reply with "I want a Lamborghini." and normally follow it with "what's your point?" or "but back here in reality" or "I love to dream" etc... The kids probably find it annoying as hell but it amuses me no end!

-

I want a new camera, despite not wanting to want a new camera. There are a great many things in life that I don't want to want, but want anyway. Or the opposite, I want to want some things, but I just don't.

-

I'm comparing UHD on a 1080p timeline, but they're rough figures anyway. In terms of not being able to see 1-2 stops of DR difference, if it's right at the edges of what is visible then yeah, you can totally see it. Have you ever even shot in uncontrolled conditions and tried to pull detail from both ends of the spectrum? It's really sounding like you haven't because I have, and it's really quite obvious.

-

Everyone wants a new camera all the time. Except when they're asleep, and when they're thinking about what they're shooting and what is in the final video.

-

No, I wasn't. This is from CineD. Source is: https://www.cined.com/content/uploads/2018/10/recent-results.jpg What's the article that shows the chart you showed? I don't recall having seen that before.

-

The better I get at grading the more I can see aspects in the OG BMPCC / BMMCC images that aren't there in modern sensors. I made that thread about every camera looking like a Sony some time ago and it's still the case - almost every camera looks like a Sony sensor in a box. The theory is that a perfect camera can emulate any other camera, but there are two caveats with this: 1) The perfect camera has to surpass the "target" camera in every way, and there are certain aspects of image quality in which the OG BMPCC / BMMCC cameras still surpass every modern camera except the Alexa and Red and perhaps the Venice and other random examples. 2) The colourist has to be up to the challenge. Steve Yedlin famously emulated film with an Alexa, but this was pretty controversial because most colourists are simply not capable of that level of image processing and he had to write his own custom software to do it, let alone taking a Sony image and making it look like an Alexa. To put it bluntly, even if Sony made a sensor good enough (and you could afford it), you're not good enough to grade it, and neither is almost anyone else, perhaps on the planet. In the camera sensor landscape there are essentially four players that I know about: ON Semiconductor who make the Alexa sensor, Fairchild who made the OG BMPCC and BMMCC sensors, Canon who make their own sensors, and Sony who make basically everything else. The development of the Alexa sensor and the Fairchild sensors was done at a time when the noise and colour reproduction of film were the most crucial aspects of image quality for their customers. The primary driving factors for Sony are resolution and dynamic range. You're right that smartphones will replace most cameras, but not because they will be better - it's obvious that they aren't - but because people will change their tastes to align with what smartphones can do. If all you see are "Sony" images then you'll be happy with them because you don't know any different.
Almost everyone on these forums has now adjusted to the look of Sony sensors, which is an increase in technical specification and a huge decrease in emotional performance. This emotional performance is why companies like Leica stay in business making cameras that are technically worse but still sell for many times the price.

-

You're thinking about this wrong. The P4K / P6K / P6K Pro have a very strong feature-set and this addition gives them PDAF. The only other ways to add good AF to a setup the same way is to either hire a focus puller, or to upgrade to a camera that has everything the BM cameras have but also has PDAF. Either of these options easily runs into the tens-of-thousands-of-dollars. The problem with cameras is that people don't compare the full package, only isolated features, but when you're buying a camera you can get one feature very cheap, but for each extra feature you want it multiplies the cost - by the time you have internal RAW and 13 stops of DR the addition of PDAF creates a pretty small and expensive list of potential candidates.

-

You're thinking about this wrong - when you shoot LOG you create highlight rolloff in post using the methods I have mentioned. For all practical purposes, all cameras are just large arrays of linear light measurement - they don't have any highlight rolloff at all. The "Look" of each camera is defined almost exclusively by the colour processing that happens after the image is captured (and a tiny bit by the sensor) and when you shoot log you're in control of the vast majority of that processing. I couldn't find reliable DR tests for the Z6, but the GH5 doesn't compare well to the X-T3... GH5 v1 has 10.8 stops, GH5 v2 has 11.5 and X-T3 has 13 stops. DR can make more of a difference than many people think when shooting in uncontrolled conditions and when it's not just art but needs to be informational as well. In shooting the GH5 v1 I am often forced to choose between clipping the whole sky and being able to identify the person sitting in the shade. A choice between "here's a photo of Susan and the sky is digital white" and "here's a nice photo of .... someone? who is that?" isn't a choice that I enjoy having to make. Of course, this choice is actually a factor of how usable the image is in latitude tests rather than just DR as a single number. Having a photo of Susan where she's bright purple is better than her not being recognisable, but still leaves a huge amount to be desired.
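To put those stop figures in perspective, each stop is a doubling of light, so the gap is bigger than the numbers look. A quick sketch using the DR figures quoted above (the helper name is just for illustration):

```python
def contrast_ratio(stops):
    """Each stop doubles the light, so N stops is a 2**N : 1 scene contrast ratio."""
    return 2.0 ** stops


# DR figures as quoted in the post above
cameras = {"GH5 v1": 10.8, "GH5 v2": 11.5, "X-T3": 13.0}

for name, stops in cameras.items():
    print(f"{name}: {stops} stops is roughly {contrast_ratio(stops):,.0f}:1")

gap = contrast_ratio(cameras["X-T3"]) / contrast_ratio(cameras["GH5 v1"])
print(f"X-T3 vs GH5 v1: about {gap:.1f}x the contrast range")
```

On those numbers the 2.2 stop difference means the X-T3 can hold a scene with well over four times the contrast ratio that the GH5 v1 can.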

-

The ultimate statement about DR is perhaps that people shooting with an Alexa or RED, which have the highest-DR capture around, still light their scenes and control their contrast ratios. Yes, they can push and pull the RAW / Prores files from those cameras in ways that we can only dream of, but they still take the time and effort to capture it right in-camera. There's no substitute for making your scene look how you want it, even if you have 15+ stops of DR and have 14-bit RAW.

-

There's no substitute for dynamic range. Ultimately you can emulate the nice rolloff (highlights, shadows, or both) of high-DR cameras by using curves but that will increase the contrast of the image. If there's already lots of contrast in the image then you can emulate the lower-contrast look by reducing contrast so the mid-tones are softer, but that will ruthlessly reveal your white and black clipping levels. Another trick is to raise the levels of your image, which means you have some extra space to lower contrast or rolloff the highlights nicely, but that will raise your noise and black levels, so the trick is to apply noise to mask this. The cost of this look is that you'll have elevated black levels and lots of noise in the image. It's not really for modern work but it can work for vintage / film looks though. High DR cameras are desirable because they give you the flexibility in post to choose whichever look you want, but lower-DR captures (like 709 profiles that don't include the full DR of the camera) simply can't do everything - you have to choose the trade-offs. Higher DR cameras are also a challenge in post because you're trying to pack all the DR into the lower-DR 709 profile to publish them, so in a way your lower-DR capture is just doing in prod what you would have to do somehow in post anyway, except that you don't get to fine-tune or apply curves like you can in post. I shoot higher DR shots with moderate DR cameras (GH5 HLG which is almost 11 stops and OG BMPCC and BMMCC which are 12.5 stops) and even these are difficult in the grade when you are capturing the full DR on these.
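As a rough illustration of the first trade-off, here's a minimal sketch of a soft-knee highlight rolloff applied to an already-clipped 0-1 signal. The curve shape and the 0.8 knee are assumptions picked for the example, not what any particular grading tool does.

```python
def soft_knee(x, knee=0.8):
    """Pass values below the knee unchanged; ease values above it toward 1.0."""
    if x <= knee:
        return x
    headroom = 1.0 - knee
    over = x - knee
    # Slope is 1.0 at the knee and flattens out the further above it you go
    return knee + headroom * over / (over + headroom)


def rolloff_curve(x, knee=0.8):
    """Soft knee on a 0-1 signal, rescaled so that 1.0 still maps to 1.0."""
    white = soft_knee(1.0, knee)
    return soft_knee(x, knee) / white


for x in (0.2, 0.4, 0.8, 0.9, 1.0):
    print(f"in {x:.2f} -> out {rolloff_curve(x):.3f}")
```

Because the rolled-off white has to be stretched back up to full level, everything below the knee gets multiplied by a factor greater than one, which is the extra contrast mentioned above. A camera that captured real detail above the old clipping point could feed values over 1.0 into `soft_knee` directly and skip that rescale.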