Everything posted by kye

-

The latest batteries for the OG BMPCC get WAY more than that - I got 49 minutes of RAW recording from one 1200mAh battery, and 33 minutes of ProRes HQ from a different battery (but it wasn't fully charged). I unpacked the brand new Wasabi 1200mAh batteries and put them on to charge, so the test was done taking a brand new battery straight out of the charger. I was recording in RAW and the card filled up after 23m, with the battery at 54%, so I formatted the card and hit record again, and when it got to 5% battery life it was at 26m, which makes 49m for the life of a single 1200mAh battery. That's probably a best-case scenario, but it's pretty darn good if you ask me. The second brand new battery, recording ProRes HQ, died around the 20% battery mark and recorded a 33:22 file. I'm not sure if it charged properly as the charger kept turning off, and it only registered 81% in the camera when I put it in, so maybe not fully charged, but that's also not bad. This is approaching the battery life of the modern gigapixel monsters that dominate the market now. The BMPCC battery life is something that used to be considered bad but is much more 'normal' now: not great, but not unheard of either.

-

OG BMPCC - costs less to buy outright than renting a C70..... in 2022!

-

Or on startup it asks "Would you like to get the best image quality?" and yes takes you to 8K, and probably also enables that stupid motion smoothing thing that makes everything look like Days Of Our Lives.

-

Wow, that's a pretty impressive set of specs, and especially the low power consumption which minimises heat and power draw. The fact that lower resolutions keep scaling up the frame rate is welcome too. With Canon/Sony/BM/etc being more aggressive with their ecosystems, hopefully one of the smaller manufacturers who don't make their own sensors will decide to ditch Sony sensors and build something on this one. Then again, the FP sensor is made by Sony (I believe) and it looks fantastic, so maybe it's the sensor model itself or the implementation?

-

Damn! That's the wrong way to make a day more exciting!

-

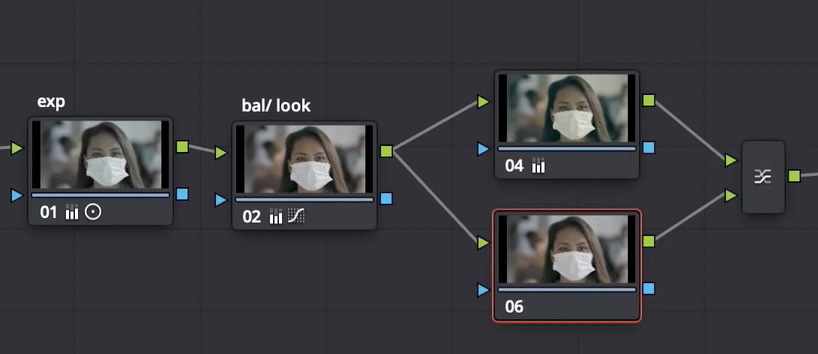

I think the colourists refer to the initial adjustments as "balancing" the image (although it's not a universally used term) and they'll then apply a creative colour grade over the whole scene / film. I don't know how technical you like to get with grading, and it's a very very deep and technical topic, but I'll try and give you some examples. It also depends on what software you're grading in, because Resolve uses Nodes and that means that either some things get done differently to a layer-based package, or it means you can do things that can't get done in a layers-based package. I had a video in mind that showed it clearly but couldn't find it, but this one describes a similar approach - I've linked to the relevant timestamp: This is part of the node graph from that grade: Node 4 takes the balanced image from node 2 and does a serious grade on the image that screws up the skin-tones, but node 6 takes the same balanced image from node 2 and has a key on it to only select the skintones and then he grades in node 6 to push the skintones so they look ok sitting on top of the grade from node 4. ie, node 6 is at 100% opacity over the top of node 4. An alternate approach, and the one I was describing, would be to have node 4 do the same grade, and keep node 6 as being a key of the skintones, but instead of node 6 having a grade on it and sit at 100% opacity, I'd keep it having no grade at all and starting from 0% opacity just bring the opacity up until the skintones stop looking wrong. This technique works well if the grade in node 4 is absolutely full-on, because if that is the case and node 6 was at 100% opacity then you'd have to do a lot of grading on node 6 to get the skintones to sit nicely over the top. A completely different approach is to grade the whole image with a serious grade, then in a node after that you'd try and get a key of the skintones and then adjust them how you want them. 
This is a way to do it when you don't have parallel nodes, but it makes pulling the key much more difficult. One way to make this easier, if the software allows it, is to pull a key of the skintones from the balanced node before the image has been really messed with but use that key for the skintones node after the serious grade. This is another awesome feature of Resolve - the blue inputs and outputs on the nodes are for the key, so you can pull a key on any node and then connect that Key Out to the Key In on another node. (Side note: you can also connect between the key ins/outs and the image ins/outs which allows you to process the key using the normal controls of a node. I haven't seen anyone else do this but it works and I've used it in real projects to refine keys etc). There are other approaches as well, and it's worth setting up a test project and trying to think of all the different approaches and different tools you could use, and just trying each of them to see which ones you like and which ones you find easier to use etc. Just for fun, here's another video that uses parallel nodes and all kinds of fun stuff to create a strong look:
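To make the "no grade, just raise the opacity" approach a bit more concrete, here is a minimal per-pixel sketch in Python. It is not how Resolve implements its mixer internally; the function name `blend_skin`, the 0-1 key value and the sample RGB triples are all assumptions made purely for illustration.

```python
def blend_skin(balanced, graded, key, opacity):
    """Mix the balanced pixel back over the heavily graded one.

    balanced, graded: (r, g, b) tuples in the 0-1 range.
    key: 0-1 matte strength for this pixel (1 = fully inside the skin key).
    opacity: 0-1 opacity of the ungraded skintone node.
    """
    weight = key * opacity
    return tuple(g * (1.0 - weight) + b * weight
                 for b, g in zip(balanced, graded))


# Hypothetical values: a balanced skintone and the same pixel after a strong look.
balanced_px = (0.80, 0.60, 0.50)
graded_px = (0.70, 0.62, 0.66)

# Start at 0% and bring the opacity up until the skin stops looking wrong.
for opacity in (0.0, 0.25, 0.5, 1.0):
    print(opacity, blend_skin(balanced_px, graded_px, key=1.0, opacity=opacity))
```

At 0% opacity the pixel is the full grade, at 100% it is the untouched balanced skintone, and pixels outside the key (key = 0) always keep the grade, which is why a partial opacity lets the skin sit inside the look rather than on top of it.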

-

Good idea, I'm near the beach so that's definitely possible. I've found that if you zoom in to 200-400% in post then it's easy to see through the YT compression to what is the original compression, especially if I get lots of movement in the shot. Also, most content is delivered via some form of streaming (but not all) so if it's not visible on 4K YT then that's a useful conclusion in itself.

-

More grading fun with cheap Lumix FZ47 CCD sensor bridge camera

kye replied to dreamplayhouse's topic in Cameras

It's not the stock that counts, it's how you use it.... 😉

-

I wouldn't be too focussed on getting skintones right on the line, there is quite a lot of variation in where skintones get placed. If you're curious I can dig up some previous tests, but I looked at a promo video from Canon and one from ARRI and the Canon had skintones to the right of the line (towards red) and ARRI had them to the left (towards yellow). Both videos were exemplary and the skintones in each looked fantastic, so they are definitely correct, despite the vector scope saying they're 'wrong'. If you're curious, I recommend doing some tests where you take a few images and rotate the hues as far towards yellow as you can go without making people look sick, and then as far as you can towards red without making them look sunburnt and then enable the vector scope and see where you put them. Maybe your preferences are really attuned and you really do prefer them very close to the line, but maybe you will find that there's a range of hues that you find acceptable. It's also worth mentioning that getting the skintones 'right' (whatever that means for you) is only really relevant in neutral grades. If you start grading a project with a more creative grade, like a film emulation, a split colour (like orange/teal or yellow/magenta etc), or just applying a single colour as a wash over the top, then your skintones can still look fine but they will be waaaay off in pure technical terms. The normal approach for colouring in this way is to apply a strong look to the footage and then blend a bit of the original (balanced) skintones back over the top with opacity, just to pull them back into the range where they look ok but also look like they fit into the world of your colour grade as well. Happy to find an example of that process if you're interested as well. I'd also be very interested in that test.
Please share 🙂 I'd suggest that matching exposures between them is a more relevant test rather than matching ND or Apertures, which is only a relevant test if you're planning on using the two cameras side-by-side. Then again, considering how malleable each of these are in post a small difference in exposure or WB shouldn't be an issue. This is a test that I think is worth doing as properly as you're able to (I am aware how much time these take to do) because I don't think that anyone else has done this comparison, and I think it's actually a very important comparison because I think the FP will actually get far closer than almost anything else around on the market at the moment. I really feel that the FP / FP-L cameras are radically under-appreciated and that if there was more awareness of how good the image really is then it would be far more widely appreciated. (and Sigma would be far more likely to make an FPii and that would be good for all of us!)

-

I don't think any manufacturer can win on these boards. Yeah, Canon gets a lot of criticism. A lot. But so does Sony. People say their older cameras had intolerable colour science, their lenses are too expensive, their image is soul-less, people who like them are shills etc. Good luck having a conversation about Panasonic without being drowned out by people talking about auto-focus like they have Tourette's Syndrome, and if you're talking about a MFT camera then why would you - the mount is dead don't you know? and didn't Panasonic used to have a cinema camera division......? Fuji is criticised for not having FF options, despite offering S35 and a range of Medium Format cameras that produce lovely images, and is currently laid bare with people doing extractions of the Cr channel and wondering WTF they are doing to the image to make them so awful..... in a dedicated thread. Sigma is loved for having one of the nicest sensors in many years, provides 4K and high-res models to choose from, but is criticised heavily because it is secretly the successor to the OG BMPCC with lovely images and almost nothing else going for it. Plus, you know, almost no-one bought one. Olympus? Aren't they bankrupt? Did you know they released a new camera recently? No? Neither did anyone else - there's no mention of it on here at all. The worst kind of criticism is being ignored. Pentax? The 80's called and wants its camera back! *cough* Samsung *cough* NX-1 *cough* better go have a covid test......

-

How many batteries did you have with you? Don't say 13!! I've had the same thought. I suspect that it's difficult to make a mechanism that is robust enough, small enough, and doesn't consume too much power. Perhaps more practically, maybe something could be made that would need to be manually installed (perhaps with the same complexity as an interchangeable lens mount). That would mean that on different projects the camera could be used in different ways, and not have to be two separate models (like GH5 and GH5s). I can't think of anyone that would want to just be able to turn it on and off instantly. Most people that want it to be able to be hard-mounted will also just use a gimbal to stabilise it. Those who want IBIS probably aren't hard-mounting it on the same day as shooting hand-held. Maybe I'm wrong though.

-

Very nice. Obviously it's ungraded (a colourist would do lots more work) but it really reminds me of the image from a high-end cinema camera. I don't know what it is about this image, and the other images from the FP, but they seem to have that subtle rendering of colours and dynamic range that high-end cameras have. It's obviously uncompressed, but these days that's less of a differentiating factor, especially with >4K RAW captures. I had a long discussion on the colourist forums about if the Alexa files have any tweaks built into their files, and someone posted a latitude test which showed that between clipping and the noise floor, normalising the luminance of each under/over image gave a perfect match for the properly exposed one. This shows that the RAW output is purely logarithmic. If it wasn't purely log (e.g. if it tinted the shadows or desaturated highlights etc) then you wouldn't get the same image when over/under exposing and normalising in post. What that said to me was that the magic of the Alexa is either in their LUT (and can therefore be accessed by anyone) or is a factor of the sensor being a very high quality capture of what was in the scene. I think it's probably both. I mention this because I think that the FP is also an unusually high-quality sensor that also delivers a very high-quality capture of what was in the scene. I think it was earlier in this thread that I posted some tests of the FP that showed it was able to capture a very wide gamut, IIRC similar to the Sony Venice. On paper the FP is really not the right camera for me, but the image is so compelling that I can't eliminate it from my short-list for my next camera, whenever I end up doing an upgrade. In terms of image quality, I really think that the FP could be the last camera you'd ever need to buy.
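The logic of that latitude test can be sketched in a few lines of Python. This is only a toy model, not ARRI's actual encoding: `pure_log` and `log_with_toe` are hypothetical curves, and the scene values are made up. The point is simply that with a pure log encoding an exposure change is a constant offset, so adding the offset back recovers the reference exactly, whereas any extra shaping (here a small toe) leaves a residual.

```python
import math


def pure_log(linear):
    """Hypothetical pure log encoding: one unit of code value per stop."""
    return math.log2(linear)


def log_with_toe(linear, toe=0.01):
    """Hypothetical log curve with a small toe that reshapes the shadows."""
    return math.log2(linear + toe)


def normalised_error(encode, linear, stops_under):
    """Under-expose by `stops_under`, add the offset back, compare to reference."""
    reference = encode(linear)
    underexposed = encode(linear * 2.0 ** -stops_under)
    return abs((underexposed + stops_under) - reference)


for scene_value in (0.02, 0.18, 0.9):
    print(scene_value,
          normalised_error(pure_log, scene_value, 2),
          normalised_error(log_with_toe, scene_value, 2))
```

With the pure log curve the error stays at floating-point noise for every scene value, while the toe version drifts most in the shadows, which is the kind of mismatch the test would have revealed.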

-

I wonder how much of that is simply editing. Reality TV normally focuses on dramatic situations, often deliberately making them more dramatic, and then edits out all the dross, once again often making them more dramatic than they really were. Good amateur content is good because it's well edited. In terms of good amateur narrative, writing is the best form of editing, and in terms of good amateur YT content, the best editing is working out what you want to say before hitting the record button. When people ask me how to edit the video clips they've recorded of family and friends, the first thing I tell them is "Film as much as you can, then just chop out the boring bits!". I normally follow that up by saying "and most of it will be boring so keep chopping things out until it's really exciting". I was once told that a good edit will feel a little too fast. That will feel about right for people watching. Thus, lots of cat footage? It's the same everywhere. Another example is that the DaVinci Resolve FB group got so clogged with newbies asking the same questions about cinematic film emulation LUTs etc that they created a private group for advanced users. Now I'm in it I can't see the questions you had to answer to get in but I remember they were rather pointed lol. In this case the group might be a little too advanced: there aren't many questions, but when there are, they are often so technical and obscure that no-one knows how to help. Resolve reminds me of Photoshop and how you can basically never learn everything about it. Of course, newbies aren't all bad. In the GH5 / Panasonic groups I'm in, there are people who are so new that they talk about all these irrelevant things like lighting and writing and editing! How little they know!

-

I'm the same although with ticket prices here I only go to the cinema for the "big" films. We stopped going during COVID and haven't really started back again, but before it hit I did plan to go more often. Certainly, every time I go I wonder why it took so long to come back - I think we forget between sessions. Partly returning to the topic of resolution, I wonder how much of the cinematic look is simply the lack of sharpness. For me, there's this 'larger than life' character that cinema has. I've heard people say that it's due to the size of the screen (which can't be emulated at home because when you match the viewing angle your eyes know how far away the screen is and so know it's only a TV instead of a huge wall). I've also heard it put that it is due to the close-up and the powerful emotion that good acting can express, and doesn't have the same visceral impact when the image isn't so close up. I think that both of the above are probably contributing factors, but I really think that sharpness has an impact too. Obviously if you're only delivering in 2K then that will limit the sharpness, and I have noticed that the level of sharpening from feature films is much much less than most YT videos. If you were shooting on film or with a 2K sensor (no downsampling) then that will also limit the sharpness too. But delivering in 4K or 8K or even higher doesn't automatically mean the image will be sharp, it just means the resolution doesn't put a limit on the amount of micro-contrast in the image. Maybe higher resolutions are simply a 'new toy' for most who are shooting with them and they can't resist turning up the sharpening to see the extra resolution that they're paying a lot of attention to. Perhaps professional colourists help to prevent feature films from concentrating too much on this, or perhaps people who are too obsessed with high-resolution simply don't progress to a point where they're being projected in cinemas? 
I think that to some extent, the aesthetic of cinema being 'larger than life' is kind of by definition due to the limited extent that it looks like real life, and that anything that makes image quality more realistic (beyond a certain optimum point of course) is going to make it look less cinematic. I think shooting and watching 60p video is less cinematic because it's more like reality in some way (it definitely doesn't look like reality, but it's closer than 24p). Computer games that are rendered in 100fps or more are more like real-life again, and definitely don't look cinematic. To me, the moments of human experience that aren't like real life are dreams or high-emotional situations where the world goes into slow motion. I wonder how much the limited sharpness, the 24p motion-blur, and the numerous other techniques involved are targeting that aesthetic of being recognisable as reality but not quite being real, and therefore allow us to perceive them differently - "suspension of disbelief". To me, some of the best films, games, stories, etc take place in worlds where the nature of reality is somehow altered, it doesn't have to be by a huge amount, but enough to take you to another place. Resolution and 8K capture don't prevent that, but they come with a cost: the equipment has to be much more advanced for other aspects not to suffer (RS, etc), and the film-maker has to have the creative vision to realise that sharpness and resolution of the image should be adjusted in service of the objective aesthetic of the project, and that the optimum amount isn't the maximum possible.

-

I'd be very curious to see the differences. Having the differences in the same cinema is likely to eliminate a fair few variables from the equation (such as projector calibration, any image processing, etc etc), as opposed to comparing two different cinema screens from two different providers in different locations. I don't think we have such differences here, although I must admit that I haven't really looked. One thing I do notice every time I go to the cinema though is how the images don't look 'sharp' and when I imagine the image being sharper it doesn't seem as nice somehow. Not as 'larger than life' perhaps.

-

It wasn't a miraculous firmware fix - it was deliberately crippling their product and getting called out for it and then them fixing it without apologising. I wouldn't call you dubious, I'd call you a realist. Now, I'd happily buy a Canon camera if my needs aligned with one and the price was reasonable, but before I parted with any money I'd have to do a borderline forensic analysis to make sure that the camera did everything I needed it to do, because history has shown that what you assume will be provided may not be.

-

Good points. Perhaps the first thing I should say is that I've never declared that MF is superior, just better for me, and better for some people, although probably a minority at this point. I'm trying to engage in a more nuanced discussion, rather than it just being one-sided. Nothing is all-good or all-bad, and everything depends on perspective. In terms of "getting the shot" the situation is more complicated than just AF. The primary one, for me, is shooting stable hand-held footage. No point slightly improving my focusing hit-rate if I'm going to lose more shots to shake than I gain by having better focus. I find that shooting MF with left-hand under the lens gripping the MF ring and right-hand on the camera's grip is a pretty good approach if the camera is lower than eye-level. If it's above eye level then I'll normally be lifting it high above my head and so I'll normally close the aperture a bit and pre-focus and then hold onto something near the end of the lens that doesn't move in order to keep my two hands as far apart as possible. I don't normally miss focus on these and the screen is normally too small for anything except composition so this setup probably doesn't matter either way. My favourite position is looking through the EVF with left-hand doing MF and right-hand on the grip (like above), and I'll be getting three points of contact. Stabilisation isn't normally a big issue in this setup as I wouldn't normally do this unless I was stationary, whereas the other setups are often when I'm walking or squatting or holding the camera out at arm's length etc. There's no good way I've seen to use touch-AF on the screen while also stabilising the camera with both hands, so that doesn't beat MF in terms of getting the most shots. It also doesn't work when using the EVF (pretty sure I'm pressing the screen with my face at this point too!).
I could potentially use the thumb of my right-hand on a joystick, although that would really lessen the grip from that hand as it would really limit the amount of pressure I could apply with my thumb so I wouldn't really be holding the camera much with that hand. Thinking about it now, MF loses me far fewer shots than stabilisation does. If it was the other way around then I could absolutely see that my cost/benefit assessment of AF would change substantially. The other thing to consider is that, at least in my eyes, imperfect MF still has a loose kind of human feel that suits the content that I shoot, but when AF misses focus or is in the process of acquiring focus the aesthetic just isn't desirable. I tend to miss focus or fail to track a subject when there are lots of things happening and lots of movement, so it suits the vibe. If I have a second or two to adjust aperture and focus then I don't miss those shots. When AF misses a shot it just seems really at odds with the aesthetic that the rest of the image is creating. Watching AF pulse, or rack focus either too fast or too slowly always seems so artificial, like a robot having a malfunction. Also, I'm not sure how many AF mechanisms are able to ease-in? They seem to rack at one speed and instantly stop on the subject, or pulse momentarily (which is worse). This is probably made worse when the focus speed is set too high, but I'd suggest it's still a factor. "Getting the shot" isn't just making sure it's exposed and focused properly and not too shaky, it's about getting the shot that has the most chance of making the final edit. Getting the technical things right is the bare minimum in this context and only gets a shot to survive the assembly stage of the edit, it's the subject matter and the aesthetic that determine if the shot makes it to the final edit, and if the shot is interesting then technical imperfections can be tolerated.
I can imagine that if you're shooting commercially, the equation between technical imperfection and aesthetic is very different, so would generate different decisions based on different trade-offs.

-

Well, we're back to the "what is visible" topic again. *sigh* I set up my office (and the display that I'm writing this on) according to the THX and SMPTE standards for cinemas by choosing a screen-size and viewing-distance ratio that falls right in the middle of their recommendations (the two were a bit different so I picked a point between them). My setup is a 32" UHD display at about 50" viewing distance. At this distance I cannot reliably differentiate between an 8K RAW video downscaled to UHD and downscaled to 1080p. At my last eye check I had better than perfect vision. For 8K to be visibly different to 4K, you'd have to have a viewing angle considerably wider than I have while sitting here, which would (for those billboard screens) make them either incredibly large, or quite close to street level. Judging from the angle and lack of wide-angle lens distortion from those videos, I'd suggest those signs are too far away from street level and not large enough to compensate, but maybe I mis-judged. It really is a factor of viewing angle. I don't doubt that they're effective though. HDR is hugely impressive and looks incredibly realistic in comparison to lower DR displays. I'd imagine that they'd have added more than a modicum of sharpening in post to those images, plus they're CGI which will be pixel perfect 4:4:4 to begin with (unlike any image generated by a physical lens and captured 1:1 from a sensor). In terms of your cinema comparison, I don't doubt that the images are different, although half of each cinema will be sitting closer than the recommended distance, and the distance that the 4K vs 1080p differences are tested at. If we assumed that the middle-row is where 4K stops being visibly better than 2K, then I would imagine that the first row is probably more than half that distance to the screen, so it might be past the point where 4K can keep up with 8K. I remember sitting in the first row of a crowded cinema (the last seats available unfortunately!)
and having to turn my head side-to-side during scenes with more than one person - the viewing angle on that must have been absolutely huge!
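For anyone who wants to check their own setup, the viewing-angle argument can be turned into a quick calculation. This is a rough Python sketch, assuming a flat 16:9 screen viewed from the centre; the function names are my own, and the "about 60 pixels per degree for 20/20 vision" figure used for comparison is only the commonly quoted rule of thumb (one arcminute per pixel), not a hard limit.

```python
import math


def screen_width(diagonal, aspect_w=16, aspect_h=9):
    """Width of a flat screen from its diagonal (same units as the diagonal)."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)


def horizontal_angle_deg(diagonal, distance, aspect_w=16, aspect_h=9):
    """Horizontal viewing angle in degrees, viewer centred on the screen."""
    half_width = screen_width(diagonal, aspect_w, aspect_h) / 2.0
    return math.degrees(2.0 * math.atan(half_width / distance))


def pixels_per_degree(horizontal_pixels, angle_deg):
    """Average pixel density across the horizontal field of view."""
    return horizontal_pixels / angle_deg


# The setup described above: 32" display at about 50" viewing distance.
angle = horizontal_angle_deg(diagonal=32, distance=50)
print("viewing angle:", round(angle, 1), "degrees")
for label, width_px in (("1080p", 1920), ("UHD", 3840), ("8K", 7680)):
    print(label, round(pixels_per_degree(width_px, angle), 1), "px/degree")
```

For that setup the angle works out to roughly 31 degrees, which puts 1080p at a little over 60 pixels per degree, so it is already near the rule-of-thumb acuity limit and the extra pixels of UHD or 8K have little room to show.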

-

When I wrote that I was only thinking of video, yes. Of course, unless you're shooting sports or wildlife then most AF is pretty good these days. The GH5 AF is quite unreliable for video, but for stills it's pretty good. In terms of conspiracies, there are none. The common theme of my posts is that manufacturers are shafting their consumers as much as they can to maximise their profits as much as they can - that's not a conspiracy, that's basic economics! I don't like it, but that doesn't make it a conspiracy. I think you've oversimplified this. I shoot in some of the least controlled situations around and switched to manual focus because the AF didn't choose the right thing to focus on, not because it couldn't focus on the thing it picked. The more chaotic a scene the more things there are to choose from and the less chance the AF will choose correctly. The way I see it, it's the middle of the spectrum that benefits most from good AF: if you have complete control of the scene then MF is probably fine; if you have a simple scene like an interview then AF is great because it will be reliable in choosing what to focus on and can track your subject as they move forwards and backwards, or a scene where you have a bridal couple standing clear of other objects and people and you're filming them from a gimbal, etc; if you have too much chaos then the AF will lose more shots by choosing the wrong thing than MF would miss by not hitting the target. People keep forgetting that focus is a creative element, one of the elements that is used to direct the viewer's attention and experience over the course of your final edit, not a purely technical aspect. The GH5 AF is spectacular at focusing, it's crap at choosing which thing to focus on. That's the broader challenge... People talk about Eye-AF, but notice that they test AF with only one person in frame?
There's a reason for that, and it's not that camera reviewers on YT don't have any friends 🙂 I'm none of those things, I'm into this thing that people don't seem to have heard of, it's called "getting the shot". I get more shots with MF because I know what I want to focus on. The Canon R3 mode where the AF point moves around by looking at your eye would probably be the only AF system that would meet my needs, and if that was available in a camera within my budget then I'd happily swap to that. I'd probably miss the ability to have live control on how fast the focus transition was, but it would still be a better outcome because I'd miss less shots. I get more shots with IBIS because I shoot in situations where even OIS can't compensate (OIS doesn't stabilise roll, for example), and those shots are unusable. If you've somehow concluded that I'm anti-AF then you've (once again) misinterpreted what I'm saying. I'm saying that AF isn't perfect, and that sometimes it gets in the way of the shot. As a member of the "get the shot" club, I'm against that. If you're in situations where AF will help you get the shot, then cool, I'm all for it. If manufacturers want to push AF as the only acceptable way to operate, that's fine with me up until they start limiting MY ability to get the shot, which does happen. Thus my posts talking about the downsides of it. Mistaking some mild criticism of a technical function for trying to "discredit the majority of users out there that value things like AF" is, quite frankly, preposterous. Well, if that's your argument, then you're agreeing with me. Camera YT, and all the camera groups that I can find online, idolise all the things that are associated with cinema. Colour science is best when it's like film (no-one is talking about getting that VHS colour science), lenses are best when the aperture is fastest (people aren't talking about which lenses go to F32 vs F22 in order to get that deep-DoF fuzzy camcorder look), etc etc. 
Besides, EOSHD seems to be a rare sanctuary of people who know things. Most other groups only seem to talk about shallow-DOF, LUTs, and how to get your camera and the Sigma 18-35 to balance on your gimbal, and never get beyond that.

-

That would be great. "The pixels are just awful, but it's ok because there's a bazillion of them!" was never an attractive concept. Of course, photographers have been shooting RAW for way longer than we have in video, and in video they give you more bitrate for higher resolutions, so it was never about the straight number of pixels anyway. We may get there eventually, but it's not going to be any time soon!

-

I'd also be particularly interested in an FPii if they added: a tilt or flip screen; IBIS (OIS doesn't stabilise rotation, which has ruined shots of mine on many occasions); and improved compressed codecs (the h264 in the FP was very disappointing compared to the RAW). I do wonder though if those additions (especially IBIS) would kind-of make it a different type of camera altogether. Hollywood really dislikes IBIS (as the sensor moves around even when it's off), so adding IBIS would kind of eliminate it from the world of cinema cameras. However, they didn't really market it as a cinema camera to begin with, despite it being a 4K FF uncompressed RAW camera, so I'm not sure who / what Sigma thinks this camera is for.

-

Considering the economics, I'd suggest that the shift towards AF and native lenses is (at least partially) due to manufacturers taking advantage of naive consumers by pushing these self-serving concepts in order to sell more lenses and lock users into their own ecosystems. I say 'naive' because huge numbers of internet users want AF and cinematic images, despite the fact that cinematic images are generated mostly by adapted manual lenses.

-

I never thought about that, but yes, that makes sense. When I was doing stills I would shoot exclusively RAW images as the JPG versions always clipped the highlights (which is madness, but there we are), so if the file sizes of those doubled/tripled/quadrupled then that would potentially be a big deal and most people wouldn't really want 48MP over 12MP / 16MP / 24MP. I mean, 12MP sounds pretty low res, but it's the same detail as 4K RAW video, which is plenty good enough for most purposes. Of course, the storage requirements of shooting RAW stills is laughable compared to that of video, but for stills-only shooters it might be a thing.

-

These little things really make me wonder what Canon are doing. I mean, if one Canon development team-lead spent one day at CES writing down all the things that people suggested then things like this would be added to every camera without any real challenges at all - if they already have a function that puts frame guides on the screen and a menu to choose between different ones, then adding more should be only a day or two worth of work for an engineer. This seems relatively plausible. A WFM requires that the image (or a low-res version of it) be processed and a graph generated from that analysis. My guess is that they might not have a spare chip available to generate the WFM, or (if they can do it in preview mode but not during recording) it might be being generated by a chip that is only busy during recording (eg, for NR or compression etc). False-colour, on the other hand, could simply just be a display LUT, which requires no additional processing requirements as the functionality to apply a display LUT and a recording LUT are already present.
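To illustrate why false-colour can be "just a display LUT", here is a minimal Python sketch. The band boundaries and colours are entirely hypothetical (they are not Canon's or anyone else's actual false-colour scheme), and it works on 8-bit luma values only; the point is that once the table is built, applying it is a plain per-pixel lookup with no analysis of the image at all, unlike a waveform.

```python
# Hypothetical exposure bands: (lowest luma, highest luma, display colour).
FALSE_COLOUR_BANDS = [
    (0, 5, (128, 0, 255)),        # near black: purple
    (100, 120, (0, 200, 0)),      # mid-tone band: green
    (130, 150, (255, 128, 160)),  # brighter mid-tone band: pink
    (250, 255, (255, 0, 0)),      # near clipping: red
]


def build_false_colour_lut(bands=FALSE_COLOUR_BANDS):
    """Return a 256-entry table mapping 8-bit luma to an RGB display colour."""
    # Anything outside a band is shown as plain grey at its own level.
    lut = [(v, v, v) for v in range(256)]
    for low, high, colour in bands:
        for v in range(low, high + 1):
            lut[v] = colour
    return lut


def apply_lut(luma_row, lut):
    """Per-pixel lookup: no statistics or graph generation needed."""
    return [lut[v] for v in luma_row]


lut = build_false_colour_lut()
print(apply_lut([0, 64, 110, 255], lut))
```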

-

Another way to look at it is that for the same sensor read-out (data rate), you can have either 8K with 26.8ms of RS (that's what the A74 gives in full-sensor readout mode) or 4K with 6.7ms of RS (roughly on par with the Alexa 35). I know which of those I'd prefer. Unfortunately, video is so complex that much of the camera-buying public (from parents to professional videographers) simply doesn't know any better and is therefore subject to the "more is better" marketing tactics. In cameras, and also in life, I've come to realise that every statement that is worthwhile begins with "well, it's actually more complicated than that, ...." but I've also come to realise that most people tune out when they hear those exact words. There is one thing that I am quite puzzled about, which is why they don't use the extra pixels to increase the DR of the camera. Especially considering that DR is one of the hyped marketing specs that gets used a lot. For example, if they took an 8K sensor, installed an OLPF that gave ~4K of resolution, and made it so that each colour (RGB) was made of a 2x2 grid of photosites of that colour, they could either average the values of each group of 4 photosites to lower the noise-floor (by about a stop, since averaging four samples of uncorrelated noise halves it), or they could make each of the photosites in the 2x2 grid have a different level of ND dye, in addition to the RGB dye, potentially giving that hue (RGB) at up to 4 different luminance levels. If they did the latter, spacing the ND dyes perhaps 3 stops apart (which is lots of overlap considering each photosite will have at least 10 stops of DR on its own), then the photosite with the most ND would have 9 stops of extra highlights before clipping, potentially giving 20 stops of DR when combined with its neighbours in that 2x2 grid. This wouldn't need to include two separate ADC circuits the way that ALEV/DGO/GH6 sensors work, it would only need a very simple binary processor to merge the 8K readout into a 4K signal with huge DR.
I mean, wouldn't Sony's marketing department love to have a camera with 4K and 20 stops of DR? That's more than ARRI and would make headlines everywhere. Plus, it can be done with existing tech and just a single extra chip in the camera. Of course, they'd charge $10K for it, but still.
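The arithmetic behind those figures is simple enough to sketch in Python. The function names are my own, and the model is deliberately naive: it assumes rolling shutter scales directly with the number of pixels read at a fixed data rate, that photosite noise is uncorrelated, and that the ND-dyed photosites simply extend the highlight range by their spacing.

```python
import math

PIXELS_8K = 7680 * 4320
PIXELS_4K = 3840 * 2160


def rolling_shutter_ms(reference_ms, reference_pixels, target_pixels):
    """Scale read-out time with pixel count at a fixed data rate."""
    return reference_ms * target_pixels / reference_pixels


def averaging_gain_stops(samples):
    """Noise-floor improvement from averaging uncorrelated samples."""
    return math.log2(math.sqrt(samples))


def stacked_dr_stops(base_stops, photosites, nd_spacing_stops):
    """DR of a group whose photosites carry ND dyes a fixed spacing apart."""
    return base_stops + (photosites - 1) * nd_spacing_stops


print("4K rolling shutter:", rolling_shutter_ms(26.8, PIXELS_8K, PIXELS_4K), "ms")
print("averaging 4 photosites:", averaging_gain_stops(4), "stop(s) less noise")
print("ND-dyed 2x2 group:", stacked_dr_stops(10, 4, 3), "stops of DR")
```

With the numbers from the post, a quarter of the pixels gives a quarter of the 26.8ms read-out time, and a 10-stop photosite with neighbours at 3, 6 and 9 stops of ND reaches 19 stops, so "at least 10 stops" per photosite is what gets the group to around 20. Under the uncorrelated-noise assumption, plain averaging of the four photosites is worth about one stop.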