
Everything posted by Llaasseerr

-

Thanks for the clip. By way of comparison, I rendered it within a basic color-managed setup. In this case I decided to ingest the PRR file in Play Pro and convert it to V-log/Vgamut based on the included metadata tags. This assumes the Ninja V image might just be V-log (unconfirmed), which would give monitoring consistency with the Ninja V. Since I took this into an ACES-managed imaging pipeline, it would not really have mattered if I had exported as Alexa LogC instead, since I would just use the appropriate input transform for that log/gamut encoding into the common ACES space.

First, I have the ACES default rendering for sRGB (good for web viewing). This is a film-print-like transform that is generated parametrically rather than being fixed like a LUT, so it can account for the output device. Basically, it's similar to an Arri LogC to Rec709 LUT, except it adapts on the fly to the output device. If the Ninja V is really showing a V-log image, then you could apply a "V-log to ACES to Rec709" LUT to get the same appearance while shooting.

Next, I pulled the exposure down by 2 stops so you can see how the output transform handles the rolloff when the transitions are more distinct. The exposure change is applied to the linear floating-point file, and the final look is just a view transform.

Now I disabled the output transform so you can see the linear file. This is probably what people see in FCPX with no transform applied when they first import their PRR footage, with all the highlight information clipped.

This is the same image with a -2 stop exposure change, so you can see that the highlight detail is in fact there; it just was not in the viewing range, and there was no rolloff to make it look the way we want.
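The exposure step above happens in scene-linear floating point, where a change of N stops is just a multiplication by 2^N, and viewing with no output transform amounts to clamping everything to the 0-1 display range. A minimal NumPy sketch of that idea; the sample values are illustrative, not read from the clip:

```python
import numpy as np

def exposure_shift(linear_rgb: np.ndarray, stops: float) -> np.ndarray:
    """Scale scene-linear values by 2**stops (e.g. -2 stops -> x0.25)."""
    return linear_rgb * (2.0 ** stops)

def naive_display(linear_rgb: np.ndarray) -> np.ndarray:
    """No view transform at all: just clamp to the 0-1 display range."""
    return np.clip(linear_rgb, 0.0, 1.0)

# Illustrative scene-linear samples: middle grey, a bright diffuse value, two highlights
samples = np.array([0.18, 0.9, 4.0, 9.35])

print(naive_display(samples))                      # both highlights pin at 1.0
print(naive_display(exposure_shift(samples, -2)))  # 4.0 comes back to 1.0, 9.35 still clips
```

With no rolloff, anything above 1.0 is simply flattened. Pulling down 2 stops brings a value of 4.0 back to 1.0, but a value like 9.35 still clips, which is why a view transform with a proper shoulder is needed rather than just an exposure shift.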

-

Yeah, I still won't fully understand this until I see it. Of course, I'm operating under the assumption that the naked image might be V-log, but that might be wrong. Originally I thought the Ninja was showing the "OFF" image from the camera, but now I'm not so sure. So if you don't have the V-log LUT switched on (I assume you mean V-log to Rec709), then the log image, if it is in fact V-log, should show all the highlight detail. But maybe the naked image going directly into the recorder is not being displayed as V-log at all and is just the "OFF" profile, meaning "flat-ish", and the display is still clipping the highlights somewhat. In that case the PQ preview is the only readily available solution.

It certainly makes sense that for some cameras Atomos just decided to add a default V-log tag to the metadata, although it's surprising for Z CAM, since it has its own log format. That sounds right, in that there should not be a decision forced on the user about one generalised way to interpret a raw file. The issue is that most people don't know how to handle the data, so they just start playing with the controls to bring the image into range. That said, the inspector does seem to offer a number of viable options for transforming the raw image to make it instantly usable for most people. The basic thing you have to do with raw data is apply a log transform and then a log-to-display transform.

Right, that's what I meant when I said exposure, but it may be more accurate to say ISO or gain. That's not inherent in the PRR file format. Really, we are just talking about choices by Atomos and Sigma as to how much gain to add or not add to an ISO-invariant image, and there seems to be some difference here between internal DNG and external PRR. Tbh, in this case neither is ideal. Yes, ISO 800 internally is just ISO 100 with +3 stops of gain, while the Ninja V is doing something closer to an ISO-invariant approach.

But if the underlying ISO is known, once you account for the weird "interpretations", and the image is exposed correctly, then at least at a glance the image is pretty much the same, with the caveat of factors like shadow noise levels and the highlight clipping point. Yes, the fp is baking in the gain rather than taking an EI approach, which is closer to what the Ninja is doing. Cameras that shoot in an exposure index (EI) mode, like a Sony cine camera, just record at the base ISO without baking in the extra gain, and the exposure difference is just metadata. So that does make more sense professionally. What is idiosyncratic is that shooting at the base ISO 100 internally and raising it by 3 stops afterwards is not as good as shooting at ISO 800 when accounting for noise floor and highlight clipping point, so there is some internal processing beyond a simple +3 stop gain increase. So I think I can understand why Atomos decided to start at ISO 800 instead. I can't remember my findings from 1600 and up, except that they have diminishing returns, with the exception of a slight noise floor advantage for 3200 in some situations because it's the higher base ISO.
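To illustrate the difference between baking gain into the image and an EI approach, here is an idealized sketch. It assumes normalized sensor values that clip at 1.0 and treats ISO purely as gain relative to base ISO 100; as noted above, the fp actually does more internal processing than a pure +3 stop gain, so this is a model, not a description of the camera. The function names are hypothetical.

```python
import math

BASE_ISO = 100  # base ISO for internal recording, as discussed above

def stops_of_gain(iso: float, base_iso: float = BASE_ISO) -> float:
    """Stops of gain relative to base ISO: log2(iso / base_iso)."""
    return math.log2(iso / base_iso)

def baked_gain(raw_value: float, iso: float, clip: float = 1.0) -> float:
    """Baked-in gain: multiply the normalized sensor value, then clip.
    Anything pushed past `clip` is lost for good."""
    return min(raw_value * 2 ** stops_of_gain(iso), clip)

def exposure_index(raw_value: float, iso: float) -> tuple[float, float]:
    """EI-style: keep the base-ISO value untouched and carry the gain as
    metadata, to be applied later in a floating-point pipeline."""
    return raw_value, stops_of_gain(iso)

print(stops_of_gain(800))        # 3.0
print(baked_gain(0.2, 800))      # 0.2 * 8 = 1.6, clipped to 1.0
print(exposure_index(0.2, 800))  # value untouched, gain kept as metadata
```

In the baked case the highlight at 0.2 is gone once it has been multiplied past the clip point; in the EI case the same gain can be applied later in float, where nothing is clipped until the view transform.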

-

I should say that even though DNG and PRR are essentially the same thing apart from compression and highlight recovery, what differs is how the raw image is displayed while shooting. Also, based on your tests, maybe there's some funny business with the ISO interpretation on the Ninja, but nothing that can't be accounted for. I'd say the only advantage of using PQ on the Ninja V is that it's a quick and easy way to bring the FP raw image into viewable range if you want to check highlights, or if you want to use the same PQ display in FCPX. But if my V-log theory turns out to be true, and Atomos made the executive decision to encode the raw as V-log for display, then a display LUT can be added on top of that on the Ninja. That way a more defined color pipeline can be set up, so the image appears consistent with the look design both when monitoring and later when brought into the NLE. That means there would be two levels of non-baked display transforms on the Ninja: raw to V-log, and then a user-applied V-log to display LUT.

-

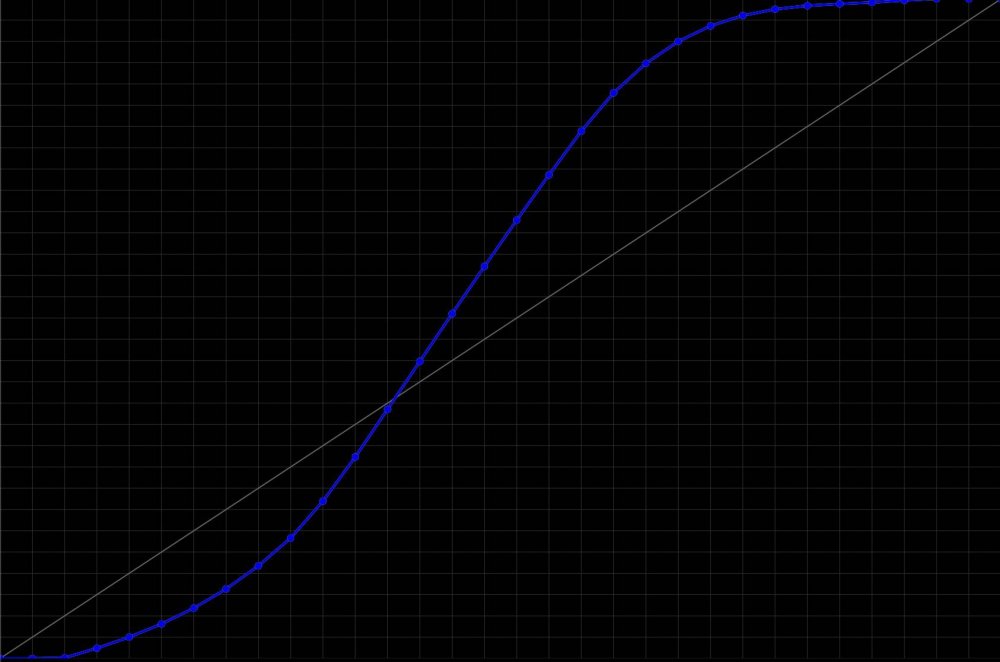

It's worth keeping in mind that ProRes Raw is essentially identical to DNG, but in this case the workflow is confusing because of the apparent unknowns around the Ninja V, and possibly FCPX, which is limited in how much control it gives you over the image. The only real variable between PRR and DNG is that you appear to be having exposure issues with PRR. If I get hold of the camera in the coming weeks I'll see if I can do a test myself. To be clear, every linear raw format and every log format is an HDR format. All PQ does is offer a Rec709-like display-referred image, except that the highlights are sent into the brighter part of a high-nit display so they pop more. So it's no different from a "log to Rec709" LUT on an SDR display, except that the highlight rolloff is less aggressive. If you look at the K1S1 Arri LogC to Rec709 1D s-curve, you can see how aggressively the entire linear raw range of the ALEV III sensor (encoded as LogC as an intermediate) is rolled off on the shoulder. If it were LogC to PQ, the rolloff would be less aggressive, with a steeper slope thanks to the additional headroom of the HDR display.
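For reference, the PQ curve (SMPTE ST 2084) maps absolute luminance in nits to a 0-1 signal. A minimal sketch of the PQ encoding (inverse EOTF) using the constants from the standard; it only shows where luminance levels land in the signal range, not how any particular LogC-to-PQ LUT shapes its rolloff:

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

def pq_encode(nits: float) -> float:
    """Absolute luminance in cd/m^2 (0-10000) -> PQ signal (0-1)."""
    y = max(nits, 0.0) / 10000.0
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2

for nits in (100, 1000, 10000):
    print(nits, round(pq_encode(nits), 3))
```

100 nits lands at roughly half of the PQ signal range (about 0.51), so everything brighter than SDR-level white has the rest of the signal range to roll off into, which is the extra headroom mentioned above.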

-

Yes, this is worth checking. I had previously mentioned video vs data levels and just needing to establish consistency. The easiest check is probably to interpret the clip in the NLE as video levels and see if it then matches the Ninja V.
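A quick way to reason about a video vs data levels mismatch is with 10-bit code values: legal (video) range puts black at 64 and white at 940, while full (data) range uses 0-1023. A small sketch; the function names are illustrative and not taken from any NLE:

```python
LEGAL_BLACK, LEGAL_WHITE, FULL_MAX = 64, 940, 1023  # 10-bit code values

def full_to_legal_10bit(code):
    """Data (full-range) code value -> video (legal-range) code value."""
    return LEGAL_BLACK + code * (LEGAL_WHITE - LEGAL_BLACK) / FULL_MAX

def legal_to_full_10bit(code):
    """Video (legal-range) code value -> data (full-range) code value."""
    return (code - LEGAL_BLACK) * FULL_MAX / (LEGAL_WHITE - LEGAL_BLACK)

# Legal-range footage shown as if it were data levels: black sits at 64, not 0,
# and white at 940, not 1023, so the picture looks lifted and washed out.
print(LEGAL_BLACK / FULL_MAX, LEGAL_WHITE / FULL_MAX)

# Full-range footage shown as if it were video levels: 0-63 falls below black
# and 941-1023 above white, so shadows crush and highlights clip.
print(legal_to_full_10bit(0), legal_to_full_10bit(FULL_MAX))
```

Either mistake shows up as a consistent contrast offset rather than a color shift, which is why it's worth ruling out before comparing the Ninja V and the NLE any further.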

-

OK, I think I know what you mean; you probably explained it before. So there's no difference in the displayed image between ISO 100 and 800 on the Atomos. For DNGs, ISO 800 just has increased signal (gain) relative to the base ISO 100. So yes, the clipping point is a lot lower on the ISO 100, 200 and 400 images. It does sound like Native mode is more predictable on the Ninja. I still need to look at your follow-up post with example images.

-

Right, there is no standard and having not tested it, I'm not sure how the Atomos false color responds with all these different input log images. I just don't see how it could be reliable. The built-in fp false color is at least designed just for that camera though.

-

I'm a bit confused because I thought you said the Ninja recording starts at 800. What about on a waveform instead of false colors? What is the max IRE value according to that? I assume you mean you're just applying the standard V-log to Rec709 LUT on the naked image on the Ninja. There is a chance that this doesn't work and you're actually stuck with some random flat image from the camera.

-

I was just checking out the new Fuji X-H2S and its new F-log2 curve, which has a lot more range than the original F-log. You can record ProRes raw open gate on the APS-C sensor, and I would guess the Atomos will apply the new F-log2 curve for monitoring. This is awesome and exactly what Sigma needs to do. Simple. Well, if we figure out that V-log matches between the Ninja and the NLE, then that will actually be effective enough.

-

Does the linear to V-log transform (without the log to 709 LUT) in FCPX match the default image on the Ninja V? That would be another way of checking this. Could the slight mismatch be a video vs data levels display issue?

-

Naively, I can say that film has less saturation in the highlights, so that sort of makes sense. To be honest, I don't think there's anything really magical about the LUT, but the classic K1S1 has been enduringly popular as a SOOC look. Maybe you could experiment with Lum vs Sat curves in Resolve?

-

It is really hard to say, but the display might be V-log, since 0% black is at 7.3 IRE and 90% white is at 61 IRE. The rest of the range above that is highlight information, probably up to 85-90 IRE in V-log depending on the sensor. Middle grey is at 42 IRE, which is convenient because this is pretty much what you would expect with a Rec709 LUT. So it's possible Atomos just made the decision to use V-log to display Sigma raw since there's no Sigma log, which would mean that the V-log metadata tag it adds is not superfluous. One way to check would be to add the standard V-log to Rec709 LUT on the Atomos and see if it matches V-log to Rec709 in FCPX, assuming that you first interpret the linear raw files as V-log as well. https://pro-av.panasonic.net/en/cinema_camera_varicam_eva/support/pdf/VARICAM_V-Log_V-Gamut.pdf
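The IRE figures above can be checked against the published V-Log curve. A sketch using the constants from the Panasonic V-Log/V-Gamut document linked above, assuming the waveform reads a 10-bit legal-range (64-940) IRE scale:

```python
import math

# Panasonic V-Log constants (from the V-Log/V-Gamut reference document)
CUT1 = 0.01
B, C, D = 0.00873, 0.241514, 0.598206

def vlog_encode(reflectance: float) -> float:
    """Scene-linear reflectance (0.18 = middle grey) -> V-Log signal (0-1, full range)."""
    if reflectance < CUT1:
        return 5.6 * reflectance + 0.125
    return C * math.log10(reflectance + B) + D

def to_ire(signal: float) -> float:
    """Full-range 0-1 signal -> IRE on a 10-bit legal-range (64-940) waveform."""
    code = signal * 1023
    return (code - 64) / (940 - 64) * 100

for label, r in (("0% black", 0.0), ("18% grey", 0.18), ("90% white", 0.90)):
    print(f"{label}: {to_ire(vlog_encode(r)):.1f} IRE")
```

This gives roughly 7.3, 42 and 61 IRE for black, middle grey and 90% white, which is what you'd want to see on the Ninja's waveform if its default view really is V-log.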

-

I would not use IRE unless I had tight control of the color imaging pipeline, and even then I would only use it in a pinch to check things on a log image, since it can only show a 0-1 display-referred space. It seems that for now we have established we have no idea what the Ninja V is doing as far as display goes, so to me IRE, and really false color too (on the Ninja), is useless until that is figured out. When viewing in a linear-space compositing tool (Nuke), which requires zero image manipulation to see the entire range of the image, it's very easy to check the max linear value of a pixel, and the DNGs with highlight reconstruction have a higher value than the PRR images by about 0.8 of a stop. In terms of linear floating-point values where middle grey is 0.18, I'm reading a max RGB clipping value of about 16.5 in the DNGs and about 9.35 in the ProRes Raw files.
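Converting those max values to stops relative to middle grey makes the comparison easier to read. A minimal sketch using the two readings quoted above:

```python
import math

MIDDLE_GREY = 0.18

def stops_above_grey(linear_value: float) -> float:
    """How many stops a scene-linear value sits above middle grey (0.18)."""
    return math.log2(linear_value / MIDDLE_GREY)

dng_max, prr_max = 16.5, 9.35  # max RGB values read in Nuke, as quoted above
print(f"DNG: {stops_above_grey(dng_max):.2f} stops above grey")
print(f"PRR: {stops_above_grey(prr_max):.2f} stops above grey")
print(f"difference: {math.log2(dng_max / prr_max):.2f} stops")
```

That works out to about 6.5 stops above grey for the DNGs and about 5.7 for PRR, a difference of roughly 0.82 stops.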

-

I had a look at the MLV App a few months ago and I was quite impressed. From memory, I think exporting as ProRes LogC worked out okay and was predictable. I'm personally not into winging it because I'm used to a color managed workflow. I've been able to get fairly predictable results with the DNGs from the fp, which is good enough for me. I know I could always fall back on a light meter, but I'm pretty sure some people got the new false colors working too so I'm interested to give that a try if I get the camera.

-

I personally think it's possible to use the v4 firmware false color feature on the camera, but I don't have it yet to verify that. I've just been playing around with test footage.

-

The trick is that with something like Resolve, which has more high-end color management, it can be dealt with. But with tools like Premiere and FCPX, it's more likely that custom LUTs need to be generated in other tools and then chained together. I think the post process is actually fine; the problem is more the lack of monitoring that shows you the same image you'll start from in post, and we are still a little unclear on how to expose using the camera tools. A light meter would probably be best for now. But I'm sure there's an obvious way to expose for the midtones with the new false color feature, even if the highlights remain unseen. With the Ninja V it's a little trickier to say for now. Apparently, according to Atomos, there's no log monitoring option, but OleB has come up with a workaround of using PQ to view the highlights, which is handy to know. It would be good if the Atomos display were actually V-log/Vgamut, which is what they are embedding in the file metadata, but I think that is not the case. I think it's more similar to the OFF mode on the camera itself. It'll be interesting to check this if I get a chance.

-

I looked up the raw-to-log conversion options available in the FCPX Info Inspector, and I can see that you can transform to Panasonic V-log/Vgamut, which is coincidentally the same default log curve the Ninja V adds to the FP file metadata. This is similar to Arri LogC, but as a next step I created a LUT that transforms from V-log/Vgamut to LogC/AWG (attached) so that it's more accurate. It is just a slight shift. Then you can apply the FCPX built-in Arri to Rec709 LUT, download the LUT from Arri, or use any LUT that expects LogC/AWG as input, which is many of them. So it's convenient and helps you quickly wrangle the raw footage with predictable results. This is kind of a workaround for the lack of a managed color workflow in FCPX. You want to break the linear-to-log and log-to-display transforms apart into separate operations, rather than applying them in the same operation on import in the Info Inspector, so that you can grade in between. https://www.dropbox.com/s/43qwzizvbid79c1/vlog_vgamut_to_logC_AWG.cube?dl=0
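For anyone wanting to build a similar LUT themselves, here is a rough sketch of how a V-log/V-Gamut to LogC/AWG 3D LUT could be generated. This is not the attached LUT. It assumes LogC3 at EI 800, ARRI Wide Gamut 3 primaries, a plain 3x3 gamut conversion through XYZ with no chromatic adaptation (both spaces use a D65 white), a 33-point .cube, and hard clipping of out-of-range results; all function names are hypothetical.

```python
import math
import numpy as np

# CIE xy primaries, as published for V-Gamut and ARRI Wide Gamut 3
V_GAMUT = {"r": (0.730, 0.280), "g": (0.165, 0.840), "b": (0.100, -0.030)}
ARRI_WG = {"r": (0.6840, 0.3130), "g": (0.2210, 0.8480), "b": (0.0861, -0.1020)}
D65 = (0.3127, 0.3290)  # both gamuts use a D65 white point

def xy_to_xyz(x, y):
    """Chromaticity -> XYZ with Y normalized to 1."""
    return np.array([x / y, 1.0, (1.0 - x - y) / y])

def rgb_to_xyz_matrix(primaries, white):
    """Normalized primary matrix: scale the primaries so RGB (1,1,1) lands on the white point."""
    p = np.column_stack([xy_to_xyz(*primaries[c]) for c in "rgb"])
    scale = np.linalg.solve(p, xy_to_xyz(*white))
    return p * scale  # broadcasting multiplies column j by scale[j]

VG_TO_AWG = np.linalg.inv(rgb_to_xyz_matrix(ARRI_WG, D65)) @ rgb_to_xyz_matrix(V_GAMUT, D65)

def vlog_decode(v):
    """V-Log signal -> scene-linear (Panasonic V-Log constants)."""
    if v < 0.181:
        return (v - 0.125) / 5.6
    return 10.0 ** ((v - 0.598206) / 0.241514) - 0.00873

def logc3_encode(x):
    """Scene-linear -> ARRI LogC3 signal at EI 800."""
    if x > 0.010591:
        return 0.247190 * math.log10(5.555556 * x + 0.052272) + 0.385537
    return 5.367655 * x + 0.092809

def vlog_vgamut_to_logc_awg(rgb):
    linear = np.array([vlog_decode(c) for c in rgb])  # undo the V-Log curve
    awg = VG_TO_AWG @ linear                          # V-Gamut -> ARRI Wide Gamut 3
    return [logc3_encode(c) for c in awg]             # apply the LogC3 curve

def write_cube(path, size=33):
    """Write a 3D .cube LUT; entries are ordered with red changing fastest."""
    steps = np.linspace(0.0, 1.0, size)
    with open(path, "w") as f:
        f.write(f"LUT_3D_SIZE {size}\n")
        for b in steps:
            for g in steps:
                for r in steps:
                    out = np.clip(vlog_vgamut_to_logc_awg((r, g, b)), 0.0, 1.0)
                    f.write(f"{out[0]:.6f} {out[1]:.6f} {out[2]:.6f}\n")
```

Calling `write_cube("my_vlog_to_logc.cube")` writes the table. Because both gamuts share a D65 white, neutrals stay neutral through the matrix, so V-log middle grey (about 0.423) should come out near 0.391, which is LogC3 middle grey at EI 800.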

-

You don't need to convince yourself, because it's true. I already posted a wedge test in an ACES project where I did the same thing with the posted Sigma FP footage. I equalized the input images just by doing exposure shifts in linear space, and the result is the same image with different clipping points and levels of sensor noise. That is why shifting the range towards highlight capture will give Arri-like, or really film-like, rolloff, obviously at the expense of more noise. And it's important to know that it's not the Arri Rec709 LUT in itself that is the mojo. That LUT expects LogC/AWG input. Using ACES gives the same kind of result with the basic display transform. Either can serve as a starting point, and then other kinds of look modification can be added. And obviously people like Steve Yedlin are doing accurate film print emulation instead, with their own managed workflow that is not specifically ACES, but it's the same idea. It depends on the show and the people involved.

-

That LUT is a default example of how well the rolloff can be utilised. Re: ML Raw, yes it's really the same workflow as with any raw footage. At some point, the raw footage needs to be transformed to log to apply a film print style LUT. In ACES that transform is built into the reference rendering transform/output device transform. If doing it manually, it's the process of transforming to, for example, LogC then to Rec709. Those are the two basic, most common examples.

-

Here is the same thing, but with your ISO 6400 image, and then the ISO 6400 image at -2 stops. The point of these is not just to show another look: this is a "good enough", technically accurate pipeline for most people based on a scene-referred image, and I did not need to do any special modifications.

-

Here's the image rendered through the default ACES look transform. In this case, I first exported the PRR files as Arri LogC/AWG, then used the Alexa input device transform to ACES. This is from the ISO 3200 image you provided. It's quite a graceful transition for blown-out highlights. If we pull the exposure on this image down by 2 stops, it's not too bad because the LUT is doing a good job interpreting the raw data. But you start to see the sensor clipping, and the rolloff becomes more abrupt towards the core, which lacks detail. This is where the Alexa wins out, as it allows the LUT to keep the rolloff going. It's the LUT that provides the rolloff; the sensor data is just linear, but the Alexa captures a lot more of it at the top end by default. If you underexpose with the Sigma, you will obviously keep that rolloff going too, and it will not look like a flat white blob with magenta edges at the transition.

-

With raw there's no such thing as SOOC. I think that what you're seeing as SOOC is just some random interpretation by FCP. But as long as it works for you that's the main thing. You should get a good predictable starting point just by transcoding raw to Arri LogC and applying the standard Arri log to Rec709 LUT, which has a visually similar highlight rolloff to the default ACES look transform but it's a bit less contrasty. Both are based roughly on the s-curve of a film print. Why make life hard? Everyone talks about Alexa highlight rolloff, but all they are really looking at most of the time is the rolloff of the 709 LUT from the raw sensor data since the sensor itself has no highlight rolloff in the captured image. My opinion from limited testing is that working internally with DNGs seems pretty straightforward.

-

This is all super frustrating, and clearly it goes back to Sigma not thinking through a more predictable workflow and then Atomos just running with whatever they could get out of Sigma. I do think that if I personally get hold of the camera, with some extra attention I'll find a predictable workflow despite that. But it's definitely not easy communicating back and forth via a chat forum. The value proposition of the Ninja V is clearly compressed raw. It has a bit less highlight dynamic range because it doesn't have the highlight recovery of DNGs, but that is mostly fake information at best. Sometimes it works well, other times not. I do think there's a way to incorporate the Ninja V with more predictable exposure and monitoring, but again, I would need to sit down and test it myself. Obviously, there's no straightforward solution for people who don't have some industry knowledge about color imaging pipelines and don't know their linear raw vs log vs Rec709, etc. I would also recommend looking into transcoding the PRR footage to PR4444 in Assimilate Play Pro. Assimilate seems to have the most mature PRR workflow for ingest, and I managed to get a free license with my Ninja V. Then you can, for example, export full-quality Arri log footage from the linear raw footage and take it back into FCPX. So what is the value proposition of this camera? It is very affordable for what it does, and it's quite unique at the moment. It seems like a camera that's best to experiment with, because it may drive you mad if you try to apply a predictable workflow. It is a lot more affordable than a Sony FX3. I was initially turned off by the slow rolling shutter and the fact that the raw output is downsampled from the full sensor. I still don't get how that is even possible for a raw file. But it seems the camera still has a lot to offer. So I feel like that is it for now! Hopefully I'll get one in the next few weeks and can do my own tests.

-

Just a heads up that PQ pushes 100 IRE white in Rec709 down to 15 IRE, which is about 2.74 stops darker. So I don't know if that explains your exposure mismatch which you compensated for by opening the aperture by +3 stops. I'm totally guessing at this point, having not used it. Maybe just try exposing with a light meter for ISO 800, ignore false colors and the Ninja V completely for now, then import into FCPX and do something simple like transform the raw image to Arri LogC/Arri wide gamut, then apply the default LogC to Rec709 LUT. If that looks right, then you can figure out how to get the exposure monitoring looking correct on the Ninja. The aim here is not to match anything, but just to check your exposure worked as expected like you're exposing film that you can't monitor at the time of shooting. Middle grey should fall at about 41 IRE with the Arri Rec709 LUT, so then you'll know your light meter exposure worked.

-

When you say "matching", I assume you mean visually matching between the Ninja and FCPX. Overall it doesn't matter if they match or not, but it's useful right now while you're diagnosing the larger problem. Maybe just email Atomos support for clarification as to how to use false colors with the Sigma FP raw capture. Also explain that you're monitoring in PQ which I think they don't recommend. To me, the way you're describing "native" working is the way ISO changes are meant to work, so I don't get the issue. If it was more of an exposure index approach where it's recording a native ISO with no baked-in ISO change then that would be different.