herein2020

Everything posted by herein2020

-

Does an APS-C Crop on a FF Sensor Increase Background Compression?

herein2020 replied to herein2020's topic in Cameras

And therein lies all of the confusion over compression. I really think people's definition of compression is twofold: 1) the background seems farther away, and 2) the background is more out of focus. If you define compression as having to meet both criteria, then yes, it does not exist, because the background is not really farther away; it is just perception distortion. But if you define compression as a shallower depth of field when comparing a 50mm lens to an 85mm lens at the same aperture and distance from the subject, then you could say compression is not a myth.

In my particular case I was focusing on the DOF and trying to figure out if the DOF would change when using the APS-C crop mode. The answer seems to be that no, it will not change; the only thing that will change is the FOV, so the result is not an accurate representation of a true 85mm lens. The ironic part is that the perception distortion would be equivalent to the longer lens, because the subject would appear closer to the camera, which would probably create the same perception distortion as a real 85mm. Personally I don't care about the perception distortion aspect and would rather have the shallower DOF. There is a great YouTube video on this but I cannot find it now; the one thing even that video did not go into, though, was a situation like this where the only thing that changes is the crop factor of the sensor.

I get what you are saying, but in this particular case I am still not convinced. I don't think the adapter changes the crop factor, but I do think the adapter communicates with the camera's firmware, and in the firmware I think it would be pretty simple to adjust the crop factor based on the type of lens and adapter connected. The S5 definitely knows when the adapter is connected because it disables continuous AF; it also knows when a crop sensor lens is attached to the adapter because it disables FF mode.

I do think you are probably right, though, that it just sticks to a crop factor of 1.5x, because my EF-S 10-22mm lens is unusable on the S5, yet all of my other EF-S lenses are fine. With the EF-S 10-22mm the lens barrel is clearly visible and the image is unusable; then again, this could simply be because the lens is older and does not properly communicate with the adapter. I really think the only way to know for sure would be to set up a static composition and compare a native L-Mount lens with APS-C mode enabled to an EF-S lens of identical focal length attached to the adapter, to see if there is a difference in the FOV.

The only reason I think this matters is that, for those of us using the adapter, it would be nice to know ahead of time which EF-S lenses work with the S5 without having to find out through trial and error. The problem of course is that Panasonic does not care about Canon EF-S lenses and Canon does not care about Panasonic camera bodies, so neither vendor has any incentive to produce a compatibility list of APS-C lenses.

-

Does an APS-C Crop on a FF Sensor Increase Background Compression?

herein2020 replied to herein2020's topic in Cameras

I am aware that the lenses do not have a crop; my crop reference is strictly to the crop factor of the S5 when the Sigma L mount to EF mount adapter is added AND the S5 is then placed into APS-C crop mode. The part I don't know is whether the Sigma adapter or the S5 firmware tells it to set the crop factor of the SENSOR to 1.6x because it detects a Canon EF-S lens, or whether it sets the SENSOR crop factor to 1.5x because it is a Panasonic.

-

Does an APS-C Crop on a FF Sensor Increase Background Compression?

herein2020 replied to herein2020's topic in Cameras

I was hoping to get the DOF as well, but like everyone is saying, it's just a crop, not an actual lens element change; can't have everything I guess. I have both native and EF mount lenses, but setting up a scene and actually testing it sounds too much like work to me, especially since I can't remove the EF adapter without first removing my tripod plate. 🙂

-

Does an APS-C Crop on a FF Sensor Increase Background Compression?

herein2020 replied to herein2020's topic in Cameras

That is a really cool tool. Oh, and my math was a bit off: 50mm with a 1.6x crop is 80mm. The tool confused me again though; it shows the background is more out of focus, whereas I think with the S5 the background bokeh stays the same with the crop.

@MrSMW - are you sure? I thought the image circle of EF-S crop sensor lenses needed a 1.6x crop to not see the lens barrel. I need to test with and without the adapter. So much to test... but I guess none of it really matters when you are out shooting.

-

Does an APS-C Crop on a FF Sensor Increase Background Compression?

herein2020 replied to herein2020's topic in Cameras

Now that is pretty cool. I kind of figured that, but I don't have an 85mm to test with and didn't want to compare a zoom to a prime.

-

Does an APS-C Crop on a FF Sensor Increase Background Compression?

herein2020 replied to herein2020's topic in Cameras

I know actual compression doesn't exist, but I guess my question is: will an 85mm lens produce an identical image on a FF sensor as a 50mm with a 1.6x APS-C crop on the same FF sensor if all else remains equal (i.e. distance to subject)? Even though it is just throwing away pixels to achieve the crop, it is still bringing the subject closer to the camera without sacrificing resolution.

@Mark Romero 2 Canon's crop is 1.6x and I'm using Canon lenses with the Sigma adapter, so I am assuming that when it enters APS-C mode with the adapter and a Canon lens attached it will crop by 1.6x, especially since Canon EF-S lenses, which require that 1.6x crop rather than 1.5x, work on the S5.

-

I have been thinking about this for a while now. I have been shooting with the Panasonic S5, and sometimes I use the APS-C crop mode to better compose the shot without having to change my position. So for example, if I am shooting with a 50mm and want a tighter crop, instead of coming closer I will just switch to APS-C mode. In APS-C mode, since it's a Canon lens, I get a 1.6x crop to give me a FOV of 85mm. Using this technique it's almost like I have two primes on the camera at the same time. The part I keep thinking about, though, is whether the background compression also increases to an 85mm equivalent or if it's just an 85mm FOV.
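The question above can be put into numbers with a rough thin-lens sketch. The 36mm sensor width, the f/1.8 aperture, the 3m subject distance, and the function names are all assumptions chosen for illustration, not measurements from the S5. It compares the angle of view and the size of the blur disc of a very distant background point, expressed as a fraction of the frame width, for a 50mm lens with and without a 1.6x crop and for a true 85mm lens. Note that 50mm at 1.6x works out to an 80mm equivalent, close to but not exactly 85mm.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # nominal full-frame sensor width (assumption)


def horizontal_fov_deg(focal_mm, crop_factor=1.0):
    """Horizontal angle of view when only the central 1/crop_factor of the sensor is used."""
    width_mm = FULL_FRAME_WIDTH_MM / crop_factor
    return math.degrees(2 * math.atan(width_mm / (2 * focal_mm)))


def background_blur_fraction(focal_mm, f_number, subject_mm, crop_factor=1.0):
    """Blur disc of a very distant background point as a fraction of frame width.

    Thin-lens approximation: blur on sensor = aperture diameter * magnification.
    """
    aperture_mm = focal_mm / f_number
    magnification = focal_mm / (subject_mm - focal_mm)
    blur_mm = aperture_mm * magnification
    return blur_mm / (FULL_FRAME_WIDTH_MM / crop_factor)


# Hypothetical shooting conditions, for illustration only.
SUBJECT_MM = 3000.0
F_NUMBER = 1.8

setups = {
    "50mm full frame": (50.0, 1.0),
    "50mm with 1.6x crop": (50.0, 1.6),
    "85mm full frame": (85.0, 1.0),
}

for name, (focal, crop) in setups.items():
    fov = horizontal_fov_deg(focal, crop)
    blur = background_blur_fraction(focal, F_NUMBER, SUBJECT_MM, crop)
    print(f"{name}: FOV {fov:.1f} deg, background blur {blur:.2%} of frame width")
```

Under these assumptions the crop leaves the blur disc on the sensor unchanged but makes it 1.6x larger relative to the cropped frame, which may be why a comparison tool reports a softer background. A true 85mm at the same f-number still blurs the background more, because its aperture diameter is physically larger.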

-

Ok, now I am truly impressed... you can finally change the timeline resolution after adding clips to the timeline. I know every other NLE could do this years ago, but it was one of my pet peeves with Resolve and they finally fixed it. Also, for some reason it stopped crashing for me; I am now editing entire projects with version 17 Beta and it is back to being rock solid. I haven't had time yet to watch their presentation to see all of the new features, but the ones I've found so far are great.

-

I stopped using any IS for my real estate GH5 gimbal work. I just turn it all off, shoot 60FPS, and stabilize in post if needed. At least for the MFT sensor size I found I could get much better results this way. I also spent many hours tweaking my Ronin S settings and perfecting my ninja walk.

What I have learned after many years of shooting real estate videos is that you literally should slow every movement to nearly a crawl, especially pans and walks. If you don't start out super slow with every movement you will regret something about the footage later. By moving super slow you will not need IS at all as long as your gimbal settings are optimal. I then use speed ramps and 60FPS to control the actual speed of the footage. You can always speed up footage; you can't always remove instability or slow down the footage enough to make it smooth. But if you start super slow and focus on stability you have much more flexibility in post. For really large properties I've seen people on YouTube use things like skateboards to try to remove the up/down bouncing, but if you perfect your ninja walk and go super slow or use speed ramps, Resolve can even remove the Z axis movement.

The other mistake people make when they speed ramp real estate footage is that they only go to 200% or 500%. That just magnifies any instability that is already there. If you are going to speed ramp, do it right: jump to 2000% or higher, then add the motion blur FX, and suddenly your footage is buttery smooth; then drop the ramp down to 50%, since you shot 60FPS, and it looks great. Obviously don't overuse this technique, and it's better for larger houses or commercial properties; for smaller ones I use a mixture of a monopod for pans, tilts, and detail shots, and only a little gimbal work and jump cuts to go from room to room.

But back to the S5: I haven't shot any real estate with it and really don't know what lens I would use if I were to shoot one tomorrow. I feel like the 16-35 EF Canon lens that I have would be too front heavy for the gimbal. It would be great if Panasonic released a 14mm F1.8 prime lens for landscape/real estate use.
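The arithmetic behind the 50% and 2000% figures can be sketched as follows. The 30 FPS timeline is an assumption (the post only states the 60FPS shooting rate), and the function names are hypothetical. The idea is that footage can be slowed only until each timeline frame shows exactly one source frame; any slower and the NLE has to repeat or interpolate frames.

```python
def slowest_clean_speed_percent(shot_fps, timeline_fps):
    """Slowest playback speed at which every timeline frame still maps to a unique source frame."""
    return 100.0 * timeline_fps / shot_fps


def source_frames_per_timeline_frame(shot_fps, timeline_fps, speed_percent):
    """How many source frames elapse during one timeline frame at a given clip speed."""
    return (shot_fps / timeline_fps) * (speed_percent / 100.0)


# Assumed setup: 60 FPS footage on a 30 FPS timeline.
print(slowest_clean_speed_percent(60, 30))             # floor of the ramp
print(source_frames_per_timeline_frame(60, 30, 2000))  # fast part of the ramp
print(source_frames_per_timeline_frame(60, 30, 200))   # a milder ramp, for comparison
```

With these assumed numbers the ramp can bottom out at 50% without duplicating frames. At 2000% each timeline frame spans dozens of source frames, which is consistent with the observation that small wobbles get skipped over and then hidden by added motion blur.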

-

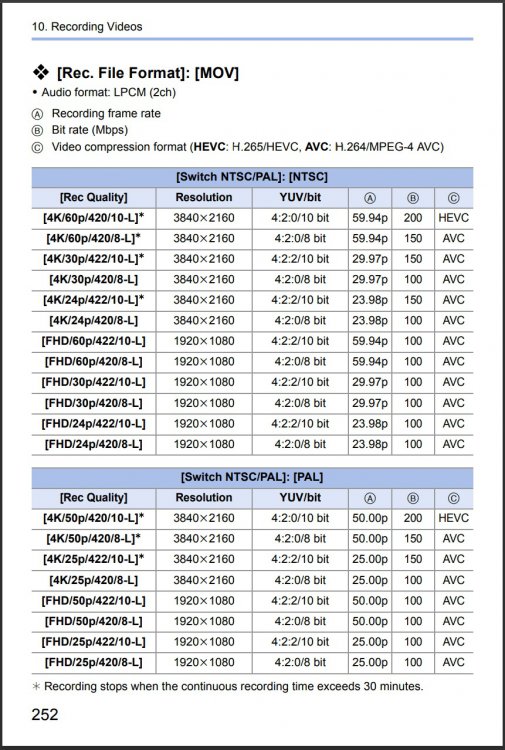

Actual overheating concerns aside, keep in mind that the record limit is only for the 10 bit modes. I shot for years with the GH5 and only ever used 8 bit, so before buying the S5 I decided if I needed to shoot for longer than 30 min I would just use the 8 bit modes. As long as you WB and expose properly 8 bit holds up quite well. I have never had a client tell me that I didn't use enough bits for their project. My use cases are a bit different though since I rarely shoot weddings. Most of my work is short takes and not in direct sunlight.

-

I think they will get back to stability very quickly. This is the first major version that I have tested in beta. The other betas were just small incremental changes. This one seems to have really revamped a lot of stuff. They also are already looking at my bug reports and asking for more input so I'm sure they'll figure it out. The good thing is it is actually crashing; the harder problems to figure out are when it stutters or something locks up but it doesn't actually crash.

-

Did anyone else realize that with the S5 you cannot shoot 4K60FPS 10 bit in H.264? All this time I thought my 4K60FPS clips stored in the MOV container were H.264, but while testing the new DaVinci Resolve they all showed up as H.265, and sure enough it only shoots 4K60FPS 10 bit using H.265. What is really cool, though, is that DaVinci Resolve and Windows 10 can still use HW acceleration, because the H.265 codec can be HW accelerated as long as it is 10 bit 4:2:0. So either this was a genius move on Panasonic's part so that people could still edit the footage, or a camera HW limitation that happened to align with current HW acceleration capabilities. The R6, on the other hand, shot 10 bit 4:2:2, which cannot be HW accelerated and needed proxies for everything.
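One way to check what a clip really contains, rather than guessing from the container, is to ask ffprobe for the video stream's codec and pixel format and decode the result. The sketch below is only an illustration: the helper name `describe_video_stream` and the sample JSON are made up, and it handles only the common planar YUV pixel format names. The JSON would come from a command such as `ffprobe -v error -select_streams v:0 -show_entries stream=codec_name,pix_fmt -of json clip.mov`.

```python
import json
import re


def describe_video_stream(ffprobe_json):
    """Extract codec, chroma subsampling, and bit depth from ffprobe JSON output.

    Only planar YUV pixel formats such as yuv420p or yuv422p10le are handled.
    """
    stream = json.loads(ffprobe_json)["streams"][0]
    match = re.fullmatch(r"yuvj?(4\d\d)p(\d+)?(?:le|be)?", stream["pix_fmt"])
    if match is None:
        raise ValueError(f"unrecognized pixel format: {stream['pix_fmt']}")
    subsampling_digits, depth = match.groups()
    return {
        "codec": stream["codec_name"],
        "chroma": ":".join(subsampling_digits),  # "420" becomes "4:2:0"
        "bit_depth": int(depth) if depth else 8,  # no suffix means 8 bit
    }


# Made-up sample resembling what a 10 bit 4:2:0 H.265 clip would report.
sample = '{"streams": [{"codec_name": "hevc", "pix_fmt": "yuv420p10le"}]}'
print(describe_video_stream(sample))
```

Whether a given combination is actually hardware accelerated depends on the GPU, driver, and NLE version, so the output is best treated as input to that decision rather than an answer in itself.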

-

I'll admit that I still like speed ramping. I do think it is bordering on overdone, but for me it's very useful to keep the viewer interested when filming real estate videos. Instead of jump cutting to the other side of the room or wasting precious seconds showing the walk there or down a long hallway, speed ramping is a great way to get there in what I consider a visually pleasing way. I also use it quite a bit with drone work. Long orbits or long dolly shots get pretty boring, so I use it to quickly start with the big picture then jump in closer without jump cutting. When balanced properly and not overused, I think it's another useful tool in your storytelling belt just like anything else.

I think everyone is speed ramping these days because it is something new that couldn't be done before. You need a lot of elements for a visibly smooth speed ramp (good gimbal, proper technique, a camera that will shoot at least 50/60FPS, and decent editing skills when putting it together in the NLE), so when you pull it off it tends to separate you from the ones that do not have all of those elements. If this video were anything but a camera promo type video I'd definitely think they used speed ramping too much, but I just enjoyed it for what it was, and my personal opinion is that it is well done.

-

I don't like Nicest either, but yes, it gets the closest out of the ones I tried. Below is a quick LUT comparison. For me Nicest always seems to turn out too dark, like I underexposed (maybe I am underexposing?). But GC always seems right the minute I apply the LUT. Only in the last image (Ground Control + Noam Kroll) did I slightly adjust the highlights and shadows.

That does sound pretty cool. I'll see what Noam does with his LUT; if it's not as good as the GHAlex one I may buy that one instead.

-

I have a Core i9 14 core CPU, an RTX2080Ti video card with the latest Studio drivers, 64GB of memory, an NVME RAID array for my OS drive, an NVME RAID array for my projects drive, and a dedicated SSD RAID array for my cache drive. I did discover that the clips from the S5 that I thought were H264 were actually H265, so I imported some H264 clips instead and it hasn't crashed yet. I also turned off HW acceleration, closed DR, opened DR, turned HW acceleration back on, and restarted DR one more time. It's no longer crashing with either H265 or H264 clips, but I wouldn't trust it for a real project. The interface seems more responsive, Fusion clips cache faster, the Inspector tab is more useful, and the color page looks nicer, but many of the annoying behaviors remain as well.

-

Well, Resolve 17 made a liar out of me... completely unusable for me so far. The interface looks much improved but it crashes nonstop on my machine. I dragged in two clips and it crashed so hard that I had to reboot my whole computer. It did send a crash report to Blackmagic Design, so at least that's something. I tried again, just adding two clips, doing a quick color grade, then trimming a clip, and it crashed hard; the footage is out of the S5, so H264 4K60FPS 4:2:0 LongGOP. I tried one more time with some R6 footage just to see if H.265 was any better, and it played slightly smoother than the previous version but would still need proxies. A really cool new feature is that you get to preview your Fusion effects; the lack of that was so annoying to me in the previous version. So I guess Resolve 17 is great as long as I don't add any footage to the timeline. Hopefully whatever is happening on my machine will get fixed soon.

-

All that I can tell you is that I tried the Panasonic conversion LUTs and they looked worse to me than the GC LUT. It is easy to try and see if it will work for you.

-

That was the case as recently as a few years ago, but there are plenty now. Still not as many as PP, but I have found everything that I need for all the types of projects that I work on. Just look for Fusion titles, lower thirds, and transitions. My problem with Resolve is how bad Fusion sucks; I always seem to be waiting for something Fusion related to cache, and that's why my only wish is for them to improve it. The concept of Fusion is awesome, and I now love node based editing, so much better than layers, but it's so unstable that I hate using it for any real work.

-

It has been rock solid for me and all I seem to use are the beta versions. The only source of instability that I have ever had was in Fusion. Even the betas are far more stable than Premiere Pro was for me. Stability in my opinion is its best 'feature'.

I had many infuriating performance issues with Resolve for a long time. I also started with the free version then paid for the Studio version, and one very simple thing fixed everything for me: I had to create a new user account on my system in Windows 10. I think something from the free version got left behind in my user profile and kept HW acceleration from working properly. If you are using Windows 10 I would try creating a new user account and testing it there.

You can, you just need to use proxy files. Once you generate optimized media in Resolve it's like editing any other footage. The problem, though, is that generating optimized media takes forever since there is no HW acceleration.

-

I use Noam Kroll's Master Pack III LUTs; they are great for finishing a project (very pricey though). He also contacted me asking for some sample VLOG footage out of the S5 because he is going to make a VLOG to Rec709 LUT, and I have no doubt that it will be great. I will start using his new VLOG LUT as soon as it is done. For now, though, I'm using Ground Control's free Panasonic VLOG to Rec709 LUT, which works better for me than Panasonic's LUTs. What I have found is that with GC's LUT I need to do the least amount of work to get the footage to look right from scene to scene:

Ground Control Free VLOG to Rec709 LUT
https://groundcontrolcolor.com/products/free-sony-v-log-to-rec-709-lut

Noam Kroll Master Pack III
https://cinecolor.io/products/master-pack-iii

-

There are a lot of annoying quirks that I have just gotten used to, especially when it comes to multi-cam. It definitely does not sync multiple cameras using audio as well as Premiere did; when you have a multi-cam clip you lose your color grade if you flatten the clip; you also can't reset your crop factor on multi-cam clips without flattening the clip first; etc. Plenty of little quirks, but Fusion is my biggest problem. It might as well not even be a tab in the NLE since it is so unstable, and even if I manage to work my way through a Fusion comp, good luck getting it to play smoothly in the edit page until it is cached, which could take forever. After Effects was way smoother and more stable when it came to playback.

-

Amazing video and cinematography, not one thing seems off to me, I guess sometimes people just see what they want to see. They did a lot of speed ramping which isn't for everyone, but this wasn't supposed to be a corporate video; it really showcased the slow motion capabilities of the S5. What I found really interesting is they even used 1080P 120FPS for some of the shots which most people are saying is trash due to the bitrate; clearly pretty much anything is useable if you work within its limitations, have a good story, and know your camera. The entire video was flawless IMO and really showcased what this camera can do; they also managed to upscale the 1080P back to 4K without it being obvious which parts were which. Really well done. This video makes me look at 1080P out of this camera in a whole different way and I may try it one day if I want 120FPS.

-

I have an external monitor for my C200 in addition to its Canon monitor, but unless it is a pretty well paying shoot I don't bother pulling it out. I guess I've gotten used to just dealing with tiny screens; the hassle of setting up the monitor, dealing with the cables, figuring out how to power it, etc. just isn't worth it to me. Also, to me it's a PITA to not see all of the settings on one of the screens, or to have only the on board monitor be a touch screen, or, with the GH5, to lose audio playback through the headphones when you connect something to the HDMI port.

Still have to pay for it, so yeah, make sure if you get one second hand that it has the upgrade.

The lack of a tilt/flip screen is a big one for me. I do a lot of gimbal work and low shots, and that tilt/flip screen is a life saver. The lack of a tilt/flip screen was one of the main reasons that I never considered the S1 or S1R when they came out; that, and they didn't do 4K60FPS at the time.

-

I used to think modular was the way to go since the rig could get so small, but after seeing how much was needed to make it usable I now know hybrid meets my needs better. Also, I saw you included the C70 in your modular list... in my opinion it is too complete to be considered modular. With the C70 you can just add a lens and batteries, then go shoot.

-

I don't use external recorders or monitors, I am already carrying around too much gear as it is, and even if I did, I wouldn't use one with the S5 because of the mini HDMI port. I do a lot of gimbal work, hybrid shoots, etc., I can't add a monitor to my kit. I'll make the S5 work, but focusing will never be easy.