In 99% of cases there seems to be little benefit to 10bit, other than files that are more difficult to edit and larger in size.

10bit is one of those specs that is easy to get excited about on paper. It’s a bit like the amount of memory in your PC or the speed of the CPU.

The higher the number, the better!

What actually makes the difference when it comes to image quality? Well, it’s a bit complicated. RAW is the ultimate codec, and the ultimate pain in the ass. LOG is great, no question: lots of dynamic range, small file sizes, and easy to edit and apply a LUT to. And for great LOG you need 10bit, right?

Well, it seems that on the A7S III the difference is, much of the time, impossible to even see.

Not that this wasn’t already proven by the Canon 1D C and its infamous MJPEG codec. The 8bit Canon LOG mode on that camera was incredibly nice. Fast forward nearly 10 years and there’s far less difference between 10bit 4:2:2 and 8bit 4:2:0 on the Sony A7S III than you might expect.

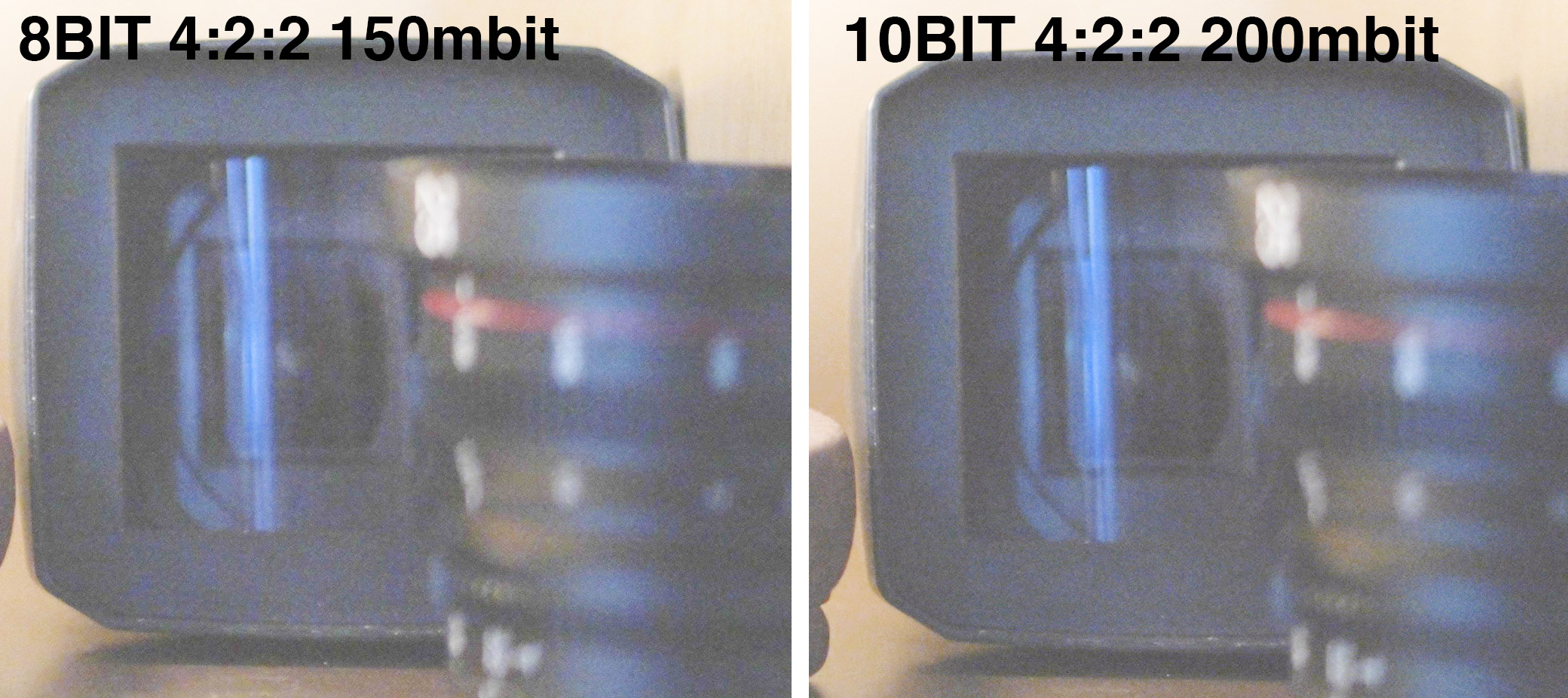

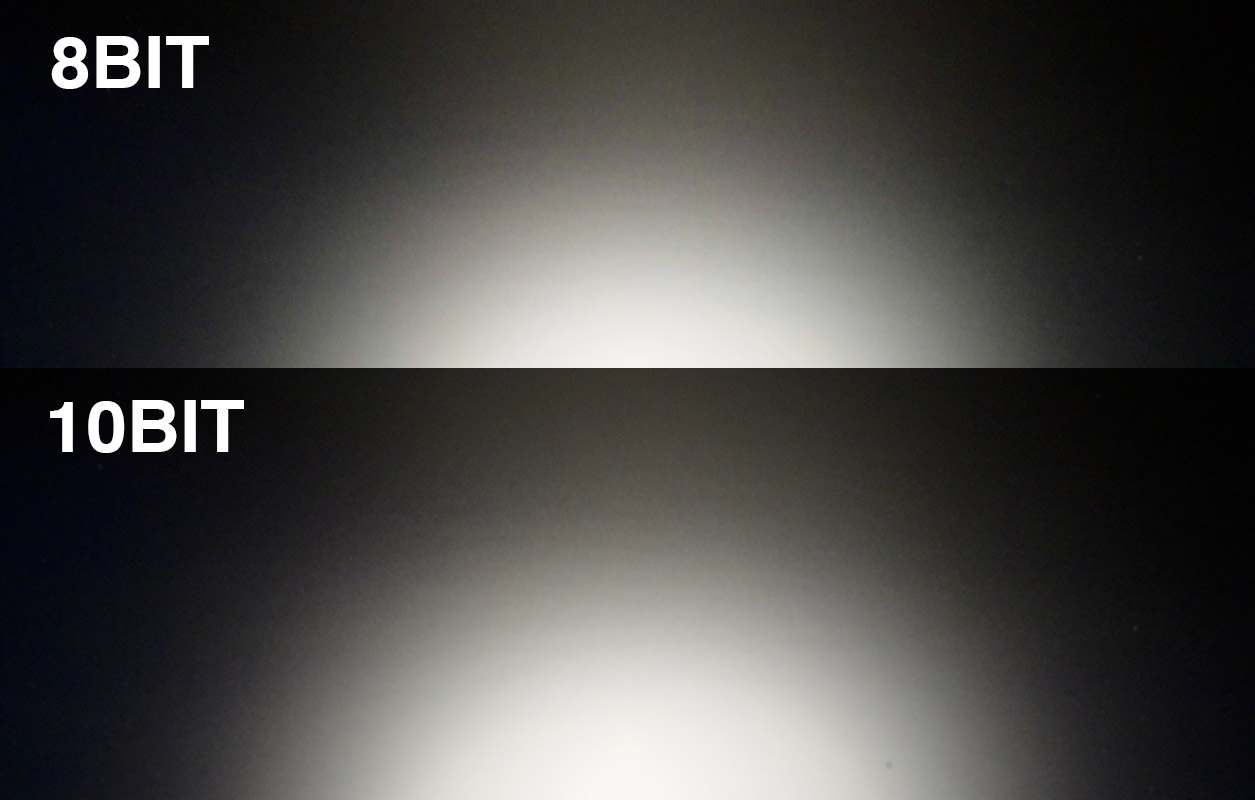

In this shot I exposed for the bright bulb filament in S-LOG 3 and wanted to see the quality of information in the shadows, i.e. the usable dynamic range: specifically detail, noise, compression artefacts and banding.

So here is a 1:1 pixel crop of what I found on the A7S III (4K/60p mode):

Even when shooting in the flattest S-LOG 3 profile for maximum dynamic range and then really pushing the image by 5 stops in post, 8bit essentially goes toe to toe with 10bit, with almost identical results. The outcome was so similar that I exported from my NLE twice just to check I hadn’t made a mistake and exported the same frame from the same clip.

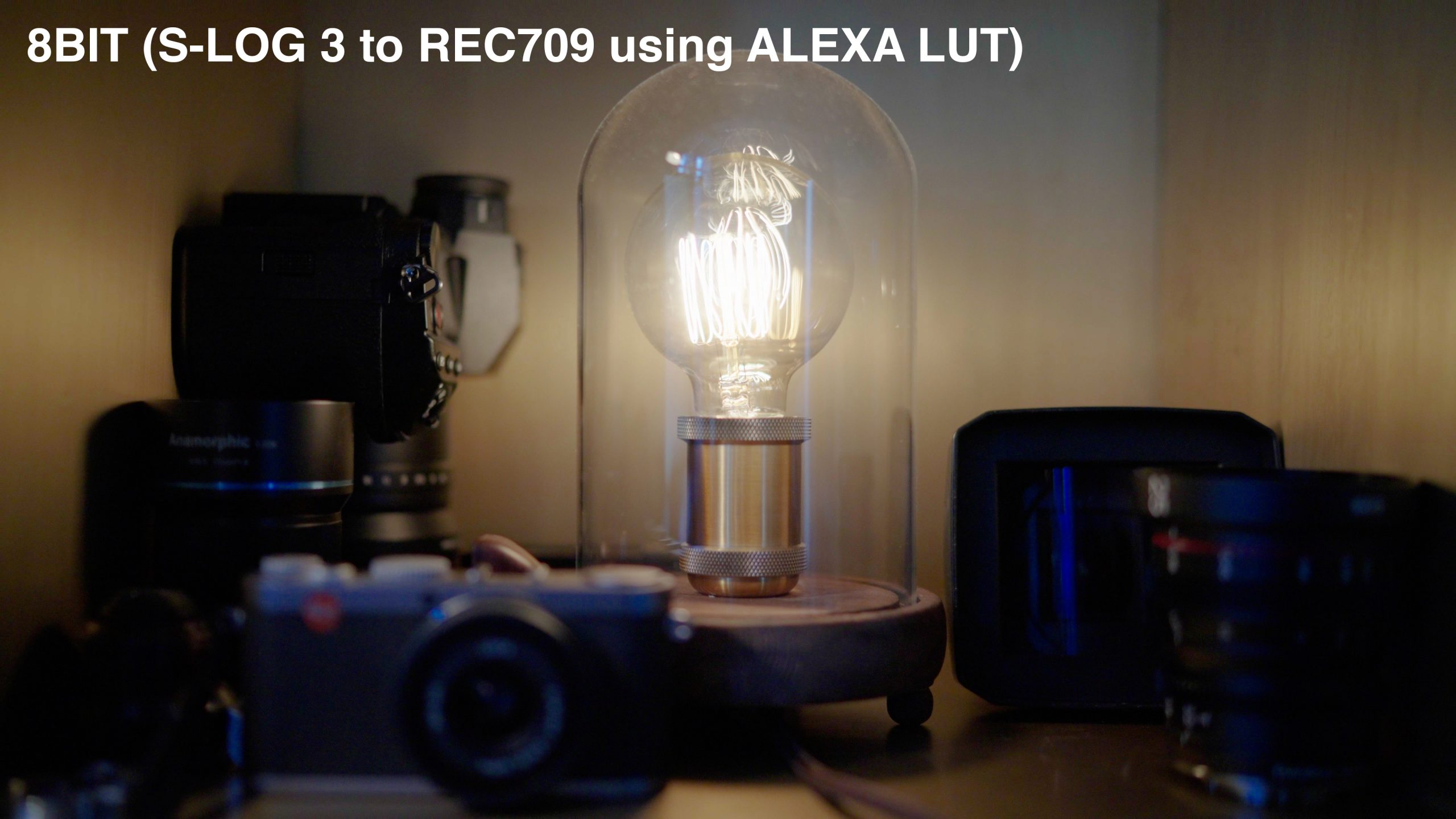

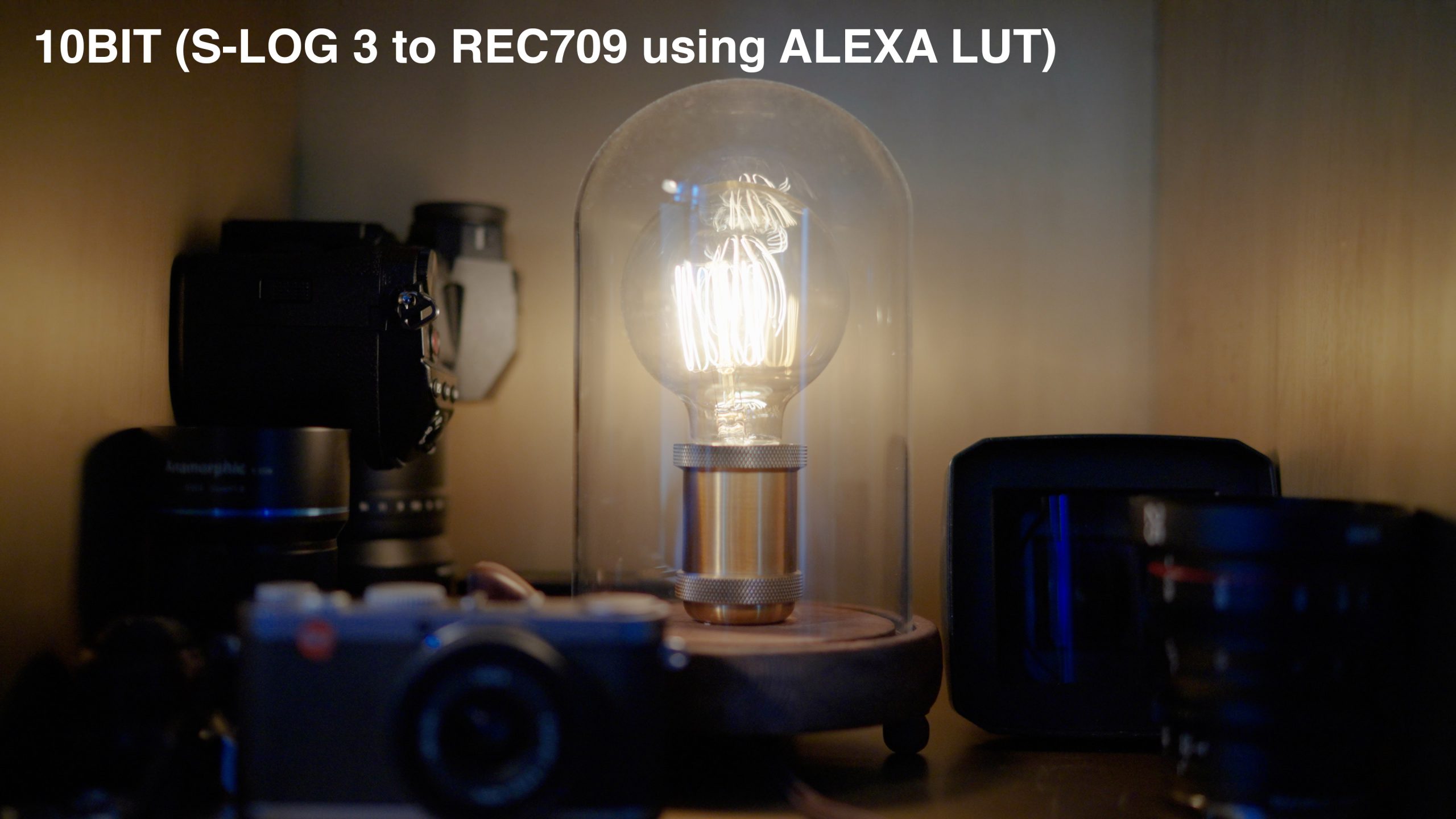
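Why should 10bit matter at all when pushing the shadows 5 stops? A rough sketch: the Python below encodes scene light with Sony’s published S-Log3 formula and counts how many code values each stop below middle grey gets in an idealised 8bit and 10bit file. It assumes the file is simply the S-Log3 signal rounded to 8 or 10 bits; in-camera processing, noise and H.264 compression are all ignored, so treat it as an illustration rather than a model of the A7S III.

```python
import math


def slog3_encode(x: float) -> float:
    """Sony's published S-Log3 curve: scene-linear reflectance (0.18 = middle
    grey) -> code value normalised to the 10-bit range (code / 1023)."""
    if x >= 0.01125:
        return (420.0 + math.log10((x + 0.01) / (0.18 + 0.01)) * 261.5) / 1023.0
    # Short linear segment near black
    return (x * (171.2102946929 - 95.0) / 0.01125 + 95.0) / 1023.0


def codes_in_stop(bits: int, stops_below_grey: int) -> float:
    """Code values spanning one stop, from grey / 2**(n+1) up to grey / 2**n.
    Assumption: the file is just the S-Log3 signal quantised to `bits` bits."""
    max_code = 2 ** bits - 1
    upper = slog3_encode(0.18 / 2 ** stops_below_grey)
    lower = slog3_encode(0.18 / 2 ** (stops_below_grey + 1))
    return (upper - lower) * max_code


if __name__ == "__main__":
    for n in range(6):
        print(f"{n} stops below grey: 8bit {codes_in_stop(8, n):5.1f} codes, "
              f"10bit {codes_in_stop(10, n):5.1f} codes")
```

On this idealised model, at 5 stops below middle grey one stop spans only about five 8bit code values against roughly 19 in 10bit. That the real clips still look almost identical suggests noise and compression, rather than the raw count of code values, may be the limiting factor.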

For the next test, using the same 8bit and 10bit S-LOG 3 clips from my Sony A7S III, I converted to Rec709 using Adobe’s ARRI ALEXA LOGC to REC709 LUT. It is a good match for many of the flatter LOG profiles on mirrorless cameras and gives a pleasing image.

I can see slightly more compression artefacts in the blue and red areas when pixel peeping the 4K frame very close up, but it’s a minor difference and probably down to the lower bitrate of the 8bit codec (150Mbit/s vs 200Mbit/s). Again, this was 4K/60p in H.264, 10bit and 8bit…

Here’s the full 4K frame (3840 x 2160) for reference…
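The bitrate gap is easy to put into numbers. This sketch works out the average bits per frame for the two 4K/60p modes discussed here (8bit 4:2:0 at 150Mbit/s, 10bit 4:2:2 at 200Mbit/s) and compares each with the size of the same frame uncompressed. It assumes exactly 60 frames per second and an even spread of bits across frames, which long-GOP H.264 does not actually do, so these are averages only.

```python
WIDTH, HEIGHT = 3840, 2160
FPS = 60  # assumption: treat "60p" as exactly 60 frames per second

# Average samples per pixel: one luma sample plus the shared chroma samples
SAMPLES_PER_PIXEL = {"4:2:0": 1.5, "4:2:2": 2.0, "4:4:4": 3.0}


def uncompressed_bits_per_frame(bit_depth: int, subsampling: str) -> float:
    return WIDTH * HEIGHT * SAMPLES_PER_PIXEL[subsampling] * bit_depth


def coded_bits_per_frame(mbit_per_s: float) -> float:
    # Average only: long-GOP H.264 spends bits unevenly between frames
    return mbit_per_s * 1_000_000 / FPS


def compression_ratio(bit_depth: int, subsampling: str, mbit_per_s: float) -> float:
    return uncompressed_bits_per_frame(bit_depth, subsampling) / coded_bits_per_frame(mbit_per_s)


if __name__ == "__main__":
    for label, depth, sub, rate in [("8bit", 8, "4:2:0", 150), ("10bit", 10, "4:2:2", 200)]:
        print(f"{label} {sub} @ {rate}Mbit/s: "
              f"{coded_bits_per_frame(rate) / 8e6:.2f} MB per frame, "
              f"~{compression_ratio(depth, sub, rate):.0f}:1 compression")
```

On these averages each 8bit frame gets about 2.5Mbit against roughly 3.3Mbit for 10bit, a quarter less. Relative to its uncompressed size, though, the 10bit stream is squeezed harder: roughly 50:1 against 40:1.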

I’ve often avoided S-LOG 3 in 8bit on Sony cameras because of banding in plain areas of the shot, like a blank wall or blue sky. On the older A7S II this was very visible.

Has this changed with the new A7S III?

It is hard to get a blue sky in the UK at the moment, so you will have to make do with a wall!

Or better, this reflection of a light on a powered-off LCD, which creates a very smooth, gradual gradient from light to dark.

The results are practically identical, perhaps because the amount of noise creates a dithering effect that breaks up any bands.
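The dithering idea can be illustrated with a toy one-dimensional gradient. The sketch quantises a smooth ramp to 8bit and 10bit and measures the widest run of identical neighbouring values, a crude stand-in for how wide a visible band is; it then repeats the 8bit case with about one code value of Gaussian noise added before rounding. The gradient range, the noise level and the `longest_flat_run` metric are hypothetical choices for illustration, not measurements from the A7S III.

```python
import random


def quantize(value: float, bits: int) -> int:
    """Round a normalised value in [0, 1] to the nearest integer code."""
    max_code = 2 ** bits - 1
    return min(max_code, max(0, round(value * max_code)))


def longest_flat_run(codes: list[int]) -> int:
    """Widest run of identical neighbouring codes: a crude proxy for band width."""
    best = run = 1
    for prev, cur in zip(codes, codes[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


def render_gradient(bits: int, noise_in_codes: float = 0.0, width: int = 2000,
                    start: float = 0.30, stop: float = 0.34, seed: int = 1) -> list[int]:
    """Quantise a smooth gradient (think plain wall or sky), optionally adding
    Gaussian noise of `noise_in_codes` code values before rounding."""
    rng = random.Random(seed)
    step = 1.0 / (2 ** bits - 1)
    codes = []
    for i in range(width):
        ideal = start + (stop - start) * i / (width - 1)
        codes.append(quantize(ideal + rng.gauss(0.0, noise_in_codes * step), bits))
    return codes


if __name__ == "__main__":
    print("8bit clean :", longest_flat_run(render_gradient(8)))
    print("10bit clean:", longest_flat_run(render_gradient(10)))
    print("8bit noisy :", longest_flat_run(render_gradient(8, noise_in_codes=1.0)))
```

With noise added, the long flat bands break up into short runs. The noise adds no real precision to any single pixel; it swaps hard band edges for fine grain that the eye averages out, which may be what is masking banding in the noisier S-LOG 3 footage.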

With the A7S III you can shoot S-LOG 3 down to ISO 640, or even ISO 160 if you don’t mind a few compromises. Lower ISO means less noise, and so less of that dithering effect.

So I tried this again in both 8bit and 10bit to try to provoke banding, and miraculously the clouds cleared…

Sure enough, here I could JUST about eke out a difference. Notice the discolouration (posterisation) in the sky when S-LOG 3 is graded with strong contrast.

However, it isn’t really night and day, is it?
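Posterisation under a strong grade comes down to how many distinct levels survive the stretch. In this sketch a smooth sky gradient covering a narrow tonal range is recorded at 8 or 10 bits, then a hypothetical contrast grade stretches that range to fill a full 8bit output. Noise and compression are deliberately left out, and the tonal range and the `grade` function are made up for illustration.

```python
def grade(value: float, lo: float = 0.40, hi: float = 0.50) -> float:
    """Hypothetical strong contrast grade: stretch [lo, hi] to [0, 1] and clip."""
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def graded_levels(bits: int, samples: int = 2000) -> list[int]:
    """Distinct 8bit display levels left in a graded sky gradient that was
    recorded at `bits` bits per channel (no noise, no compression)."""
    max_code = 2 ** bits - 1
    levels = set()
    for i in range(samples):
        scene = 0.40 + 0.10 * i / (samples - 1)        # smooth gradient in the scene
        recorded = round(scene * max_code) / max_code   # quantised by the codec
        levels.add(round(grade(recorded) * 255))        # 8bit output after the grade
    return sorted(levels)


def widest_gap(levels: list[int]) -> int:
    """Largest jump between adjacent output levels: a visible step if large."""
    return max(b - a for a, b in zip(levels, levels[1:]))


if __name__ == "__main__":
    for bits in (8, 10):
        lv = graded_levels(bits)
        print(f"{bits}bit source: {len(lv)} output levels, widest step {widest_gap(lv)}")
```

Here the 8bit source ends up with roughly a quarter as many output levels as the 10bit one, with steps around 10 display codes wide instead of 2 to 3: the kind of step that shows up as posterisation. Real noise, as noted above, can dither some of this away, which may be why the difference was so hard to eke out in practice.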

So no extra dynamic range.

No finer noise grain.

Not really any less banding in most situations where silky smooth gradients are part of the shot.

A bit less posterisation.

Why is everyone NUTS for 10bit then?

So what about RAW? To compare like for like, we must use the same sensor and process uncompressed RAW sensor data from the same camera. Unfortunately this isn’t possible in video mode on the A7S III: there is no internal uncompressed RAW codec, and ProRes RAW is not quite true RAW anyway. We can still compare, though, by shooting 4K photos in Sony’s ARW format and importing them into Adobe Camera RAW. These offer the most data the A7S III sensor can give, so it is interesting to compare their quality with the 10bit 4K video clips…

The result is far better. RAW and LOG are completely different formats, and here you’re looking at the debayered RAW, so the image is much punchier; but even setting that aside, everything about it is superior to the 10bit. Pushed 5 stops, it reveals plenty of information in the shadows, more dynamic range and a filmic noise grain that is really quite pleasing.
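For a rough sense of how much more data an ARW still carries, the sketch compares one raw frame with the average size of one 10bit 4K/60p video frame at 200Mbit/s. The raw figures are assumptions, not taken from the text: a 4240 x 2832 (12.1MP) still stored as 14-bit uncompressed raw. The comparison also ignores that the raw is linear, unprocessed and stored without lossy compression while the video frame is log-encoded and lossily compressed, which likely matters as much as the byte count.

```python
# Assumptions (not measured): a 4240 x 2832 still stored as 14-bit
# uncompressed raw, versus the average 4K/60p 10bit frame at 200Mbit/s.
RAW_WIDTH, RAW_HEIGHT, RAW_BITS = 4240, 2832, 14
VIDEO_MBIT_PER_S, VIDEO_FPS = 200, 60


def raw_bits_per_still() -> int:
    # One sample per photosite: the Bayer mosaic is stored before debayering
    return RAW_WIDTH * RAW_HEIGHT * RAW_BITS


def video_bits_per_frame() -> float:
    # Average only; long-GOP H.264 does not spend bits evenly per frame
    return VIDEO_MBIT_PER_S * 1_000_000 / VIDEO_FPS


if __name__ == "__main__":
    ratio = raw_bits_per_still() / video_bits_per_frame()
    print(f"raw still: {raw_bits_per_still() / 8e6:.1f} MB, "
          f"video frame: {video_bits_per_frame() / 8e6:.2f} MB, ratio ~{ratio:.0f}x")
```

Under these assumptions a single raw still is around 21MB, roughly 50 times the average 10bit video frame.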

Exposed for the highlights:

Exposed in post for the shadows (RAW pushed 5 stops to the right):

1:1 crop of the dynamic range in the RAW file:

Compared to the less impressive 10bit S-LOG in 4K video mode:

So the conclusion is pretty clear on this one.

I’d like internal RAW codecs, and we need them now. 10bit is a bit of a fudge!

You may as well shoot with a good 8bit codec in Canon LOG really.

The Canon 1D C really does prove that this can work brilliantly. The result you get from it is silky smooth and fantastically malleable. What a fantastic sensor that was as well.

On the Sony A7S III the 8bit codec is pretty good too, but it lacks something that is hard to put your finger on. It doesn’t have the cleanness that the Canon 1D C had in 8bit 4:2:2 C-LOG all those years back in 2012. That was nearly 10 years ago, and we’re talking MJPEG!!

RAW is certainly a worthwhile leap up from 8bit, but it seems to be just as large a step up from 10bit.

And yet there’s still no answer to RED’s compressed, easy-to-edit RAW codec in our mirrorless cameras, and because of patents one may still be a long time away.

I think this shows why it is important not to get too hyped about 10bit.

On balance, I’d prefer better editing performance (ProRes 10bit or a good 8bit format) and for H.265/H.264 to remain streaming codecs for the web rather than capture codecs.